On-Line Detection and Segmentation of Sports Motions Using a Wearable Sensor †

Abstract

1. Introduction

2. Materials and Methods

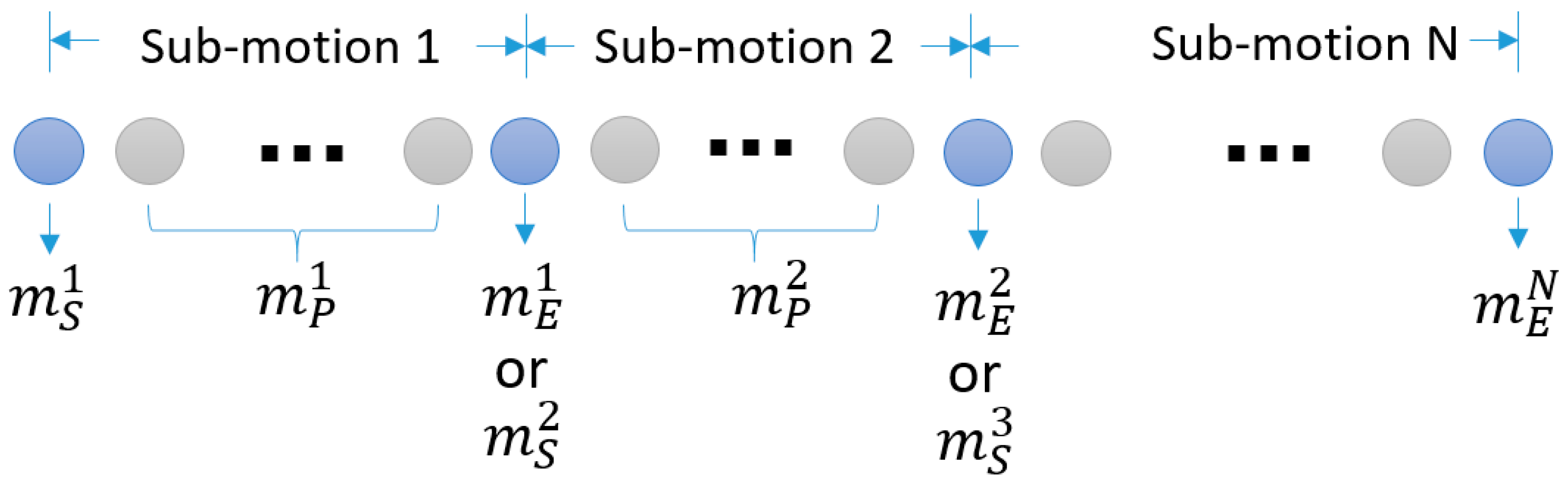

2.1. Motion Model

2.2. On-Line Detection and Segmentation

2.3. Implementation

2.3.1. Hardware for Motion Data Acquisition

2.3.2. Datasets

2.3.3. Sequence Classifier

- Acceleration along the Z-axis (opposite to the direction of gravity)

- Magnitude of acceleration

- Magnitude of angular velocity

- Magnitude of the first derivative of acceleration

- Magnitude of the first derivative of angular velocity

- Magnitude of the second derivative of acceleration

- Magnitude of the second derivative of angular velocity

- Angular difference between adjacent acceleration vectors

- Angular difference between adjacent angular velocity vectors

- Angular difference between adjacent vectors of the first derivative of acceleration

- Angular difference between adjacent vectors of the first derivative of angular velocity
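The eleven per-frame features listed above can be computed from raw accelerometer and gyroscope samples roughly as follows. This is a minimal sketch, not the authors' code. It assumes 3-axis samples taken at a fixed interval `dt`, estimates the first and second derivatives by backward finite differences, measures the angular difference between adjacent vectors with the arc-cosine of their normalized dot product, and (as an arbitrary choice) returns 0 when either vector is zero. All helper names are hypothetical.

```python
import math
from typing import List, Sequence

Vec = Sequence[float]


def norm(v: Vec) -> float:
    return math.sqrt(sum(c * c for c in v))


def diff1(x: Sequence[Vec], t: int, dt: float) -> List[float]:
    """Backward first difference (x[t] - x[t-1]) / dt (assumed derivative estimate)."""
    return [(a - b) / dt for a, b in zip(x[t], x[t - 1])]


def diff2(x: Sequence[Vec], t: int, dt: float) -> List[float]:
    """Backward second difference (x[t] - 2 x[t-1] + x[t-2]) / dt^2."""
    return [(a - 2.0 * b + c) / dt ** 2 for a, b, c in zip(x[t], x[t - 1], x[t - 2])]


def angle_between(u: Vec, v: Vec) -> float:
    """Angle in radians between u and v; 0.0 if either is a zero vector (assumption)."""
    nu, nv = norm(u), norm(v)
    if nu == 0.0 or nv == 0.0:
        return 0.0
    cos_theta = sum(a * b for a, b in zip(u, v)) / (nu * nv)
    return math.acos(max(-1.0, min(1.0, cos_theta)))  # clamp against rounding error


def frame_features(acc: Sequence[Vec], gyr: Sequence[Vec], t: int, dt: float) -> List[float]:
    """Eleven features for frame t (requires t >= 2), in the order of the list above.

    acc: accelerometer samples, Z axis pointing up (opposite to gravity)
    gyr: gyroscope samples (angular velocity)
    """
    if t < 2:
        raise ValueError("t must be >= 2 so that second differences exist")
    da, dw = diff1(acc, t, dt), diff1(gyr, t, dt)
    da_prev, dw_prev = diff1(acc, t - 1, dt), diff1(gyr, t - 1, dt)
    return [
        acc[t][2],                          # acceleration along Z
        norm(acc[t]),                       # |a|
        norm(gyr[t]),                       # |w|
        norm(da),                           # |da/dt|
        norm(dw),                           # |dw/dt|
        norm(diff2(acc, t, dt)),            # |d2a/dt2|
        norm(diff2(gyr, t, dt)),            # |d2w/dt2|
        angle_between(acc[t - 1], acc[t]),  # adjacent acceleration vectors
        angle_between(gyr[t - 1], gyr[t]),  # adjacent angular velocity vectors
        angle_between(da_prev, da),         # adjacent first derivatives of acceleration
        angle_between(dw_prev, dw),         # adjacent first derivatives of angular velocity
    ]
```

Because every feature is computed from the current frame and the two frames before it, the sketch can run on a live sensor stream without look-ahead.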

2.3.4. Training

3. Results

3.1. Evaluation

3.2. Sports Motion Analysis System

4. Discussion and Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| State | Description | Label |
|---|---|---|
| | Landing of a kicking leg | |
| | Last step of a kicking leg before impact | |
| or | Toe-off of a kicking leg | |
| | Backswing of a kicking leg | |
| or | Maximum hip extension | |
| | Acceleration of a kicking leg | |
| or | Ball impact | |
| | Follow-through | |
| or | Toe speed inflection | |
| | Landing of a kicking leg | |
| | End of kicking | |
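In the kicking model above, most key events (toe-off, maximum hip extension, ball impact, toe speed inflection) sit between two consecutive phases, so segmenting a kick amounts to finding the frames at which the predicted phase changes. The sketch below assumes the sequence classifier emits one integer state index per frame; that integer encoding and the function name are hypothetical, since the original state symbols are not recoverable here.

```python
from typing import List, Sequence, Tuple


def transitions(labels: Sequence[int]) -> List[Tuple[int, int, int]]:
    """Return (frame, previous_state, new_state) for every frame where the label changes.

    Each returned frame is a candidate key-event frame, e.g. the boundary
    between the backswing and acceleration phases of the kicking model.
    """
    result: List[Tuple[int, int, int]] = []
    for frame in range(1, len(labels)):
        if labels[frame] != labels[frame - 1]:
            result.append((frame, labels[frame - 1], labels[frame]))
    return result


# Hypothetical per-frame output: 0 = last step, 1 = backswing, 2 = acceleration, 3 = follow-through
predicted = [0, 0, 0, 1, 1, 1, 1, 2, 2, 3, 3, 3]
print(transitions(predicted))  # [(3, 0, 1), (7, 1, 2), (9, 2, 3)]
```

Under this encoding, frame 7 would be the estimate for maximum hip extension and frame 9 for ball impact.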

| State | Description | Label |
|---|---|---|
| | Ready | |
| | Bringing the two hands behind the head | |
| or | Two hands behind the head | |
| | Arms forward | |
| or | Maximum arm stretch | |
| | Follow-through | |
| | End of throwing | |
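The throwing model above also suggests a simple on-line detection rule: report a throw once the per-frame labels have passed through Ready, bringing the hands behind the head, arms forward, and follow-through in that order, and then left the final phase. The following is a hedged sketch of such a tracker, not the method of the paper. The class name, the integer state encoding, the background label -1, and the rule of abandoning a motion on any out-of-order label are all assumptions.

```python
from typing import List, Optional, Tuple


class OrderedStateDetector:
    """Hypothetical on-line detector: reports a motion once per-frame labels
    visit states 0, 1, ..., n_states - 1 in order and then leave the last state."""

    def __init__(self, n_states: int):
        self.n_states = n_states
        self._reset()

    def _reset(self) -> None:
        self.current = -1            # state we are in; -1 means no motion in progress
        self.starts: List[int] = []  # frame at which each state was entered

    def push(self, frame: int, label: int) -> Optional[Tuple[List[int], int]]:
        """Feed one frame label; return (state entry frames, end frame) when a motion completes."""
        if self.current >= 0 and label == self.current:
            return None                    # still in the same state
        if self.current >= 0 and label == self.current + 1:
            self.current += 1              # expected next state
            self.starts.append(frame)
            return None
        result = None
        if self.current == self.n_states - 1:
            result = (self.starts, frame)  # left the final state: motion complete
        self._reset()                      # out-of-order label or completion
        if label == 0:                     # a new motion may start immediately
            self.current = 0
            self.starts = [frame]
        return result


# Hypothetical encoding: 0 Ready, 1 hands moving behind the head, 2 arms forward,
# 3 follow-through, -1 no throwing motion
detector = OrderedStateDetector(n_states=4)
stream = [-1, 0, 0, 1, 1, 2, 3, 3, -1, -1]
for frame, label in enumerate(stream):
    hit = detector.push(frame, label)
    if hit is not None:
        print(hit)  # ([1, 3, 5, 6], 8)
```

With this encoding, the entry frames of states 2 and 3 and the returned end frame would serve as estimates of "Two hands behind the head", "Maximum arm stretch", and "End of throwing". Discarding a partial motion on any unexpected label keeps the tracker simple but makes it sensitive to single-frame misclassifications.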

| State | Avg. Segmentation Error (frames) |
|---|---|
| Landing of a kicking leg | 8.17 |
| Toe-off of a kicking leg | 2.82 |
| Maximum hip extension | 2.092 |
| Ball impact | 0.723 |
| Toe speed inflection | 2.855 |
| End of kicking | 5.342 |

| State | Avg. Segmentation Error (frames) |
|---|---|
| Ready | 4.032 |
| Two hands behind the head | 1.564 |
| Maximum arm stretch | 1.419 |
| End of throwing | 24.11 |
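The tables above report average segmentation errors in frames. The exact averaging rule is not stated here, so the sketch below assumes that the error for each key event is the mean absolute difference between the predicted and the annotated frame index over all test trials, with trials in which the event was not detected excluded. The function names and the example frame indices are illustrative only and are not taken from the paper's data.

```python
from typing import Dict, List, Optional


def mean_abs_frame_error(pred: List[Optional[int]], truth: List[int]) -> float:
    """Mean |predicted - annotated| frame index, skipping trials where pred is None (missed)."""
    if len(pred) != len(truth):
        raise ValueError("pred and truth must have one entry per trial")
    diffs = [abs(p - t) for p, t in zip(pred, truth) if p is not None]
    if not diffs:
        raise ValueError("no detected trials to average")
    return sum(diffs) / len(diffs)


def per_event_errors(pred: Dict[str, List[Optional[int]]],
                     truth: Dict[str, List[int]]) -> Dict[str, float]:
    """Average segmentation error (in frames) for each key event name."""
    return {event: mean_abs_frame_error(pred[event], truth[event]) for event in truth}


# Illustrative frame indices for three trials (not the paper's data)
truth = {"Ball impact": [120, 98, 143], "End of kicking": [160, 131, 190]}
pred = {"Ball impact": [121, 98, 141], "End of kicking": [166, None, 185]}
print(per_event_errors(pred, truth))  # {'Ball impact': 1.0, 'End of kicking': 5.5}
```

Whether missed detections are skipped or penalized changes the averages, so this choice should be matched to the evaluation protocol actually used.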

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Kim, W.; Kim, M. On-Line Detection and Segmentation of Sports Motions Using a Wearable Sensor. Sensors 2018, 18, 913. https://doi.org/10.3390/s18030913