Time-Sharing-Based Synchronization and Performance Evaluation of Color-Independent Visual-MIMO Communication

Abstract

1. Introduction

2. Related Theory

2.1. Generalized Color Modulation

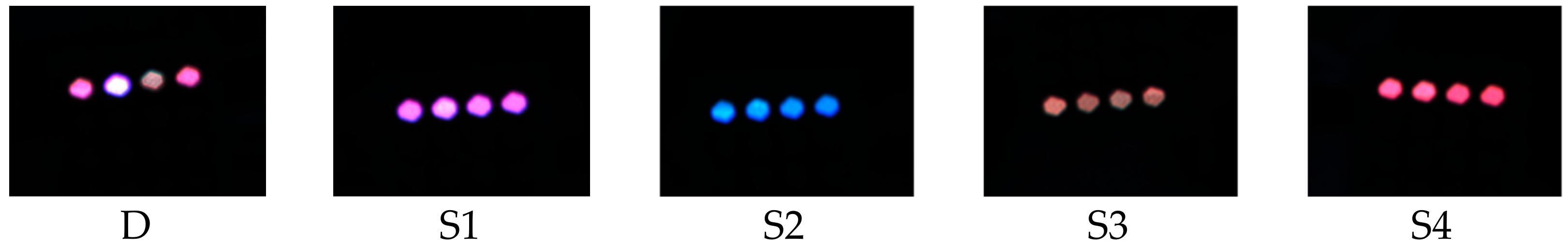

2.2. Color-Independent Visual-MIMO

3. The Proposed Algorithm

3.1. The Synchronization Issue

3.2. The Transmitter

3.3. The Receiver

- cnt: number of accumulated frames

- SD: standard deviation

- Th_SD: the threshold of the standard deviation

- S_previous: the symbols of the previous frame

- S_current: the symbols of the current frame

- ASSP (accumulated symbols same probability): the probability that all LEDs in the accumulated frames will have the same symbol

- Th_sync: the threshold probability that can be used as a decision measure of a synchronous frame
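The quantities listed above can be tied together in a short sketch. The text here only defines them, so everything below is an assumption made for illustration and not the authors' receiver algorithm. It assumes that the standard deviation is taken over pixel samples from one LED region, that the frame count grows while every LED repeats its symbol from the previous frame, and that ASSP can be approximated by the chance of such a repeat under independent, uniformly distributed symbols. All function names, the symbol count, and the threshold values are hypothetical.

```python
from statistics import pstdev


def frame_is_stable(samples, th_sd):
    """Assumed use of SD: samples from one LED region vary by at most th_sd."""
    return pstdev(samples) <= th_sd


def update_count(s_previous, s_current, cnt):
    """Increment cnt while every LED repeats its previous symbol, else reset to 0."""
    return cnt + 1 if list(s_previous) == list(s_current) else 0


def chance_same_probability(num_leds, num_symbols, cnt):
    """Assumed stand-in for ASSP: probability that independent, uniformly
    drawn symbols repeat on all LEDs for cnt consecutive frames."""
    return (1.0 / num_symbols) ** (num_leds * cnt)


def is_sync_frame(num_leds, num_symbols, cnt, th_sync):
    """Declare a synchronous frame once a chance repeat is unlikely enough."""
    if cnt == 0:
        return False
    return chance_same_probability(num_leds, num_symbols, cnt) <= th_sync


if __name__ == "__main__":
    # Hypothetical example: 4 LEDs, 4 possible symbols, one repeated frame.
    cnt = update_count([1, 2, 3, 0], [1, 2, 3, 0], 0)
    print(cnt, is_sync_frame(4, 4, cnt, th_sync=0.01))
```

Under these assumptions, a larger frame count drives the chance probability down quickly, so a lower sync threshold trades detection delay for fewer false synchronous-frame decisions.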

4. Experimental Results

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Name | Information |
|---|---|
| LED array | RGB LED WS2812B (4 × 4, 150 Hz) |
| Embedded | Arduino Uno (ATmega328) |
| Camera | Flea3 FL3-U3-13S2C (30 FPS) |
| System | Windows 7 (64-bit) |
| Software | Visual Studio 2013 (OpenCV) |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Kwon, T.-H.; Kim, J.-E.; Kim, K.-D. Time-Sharing-Based Synchronization and Performance Evaluation of Color-Independent Visual-MIMO Communication. Sensors 2018, 18, 1553. https://doi.org/10.3390/s18051553
