Multi-Touch Tabletop System Using Infrared Image Recognition for User Position Identification

Abstract

1. Introduction

2. Related Research

2.1. User Collaboration Support by Tabletop and Its Application

2.2. Tabletop Sensing Methods

2.3. Multi-Touch Gestures

2.4. User Position Identification

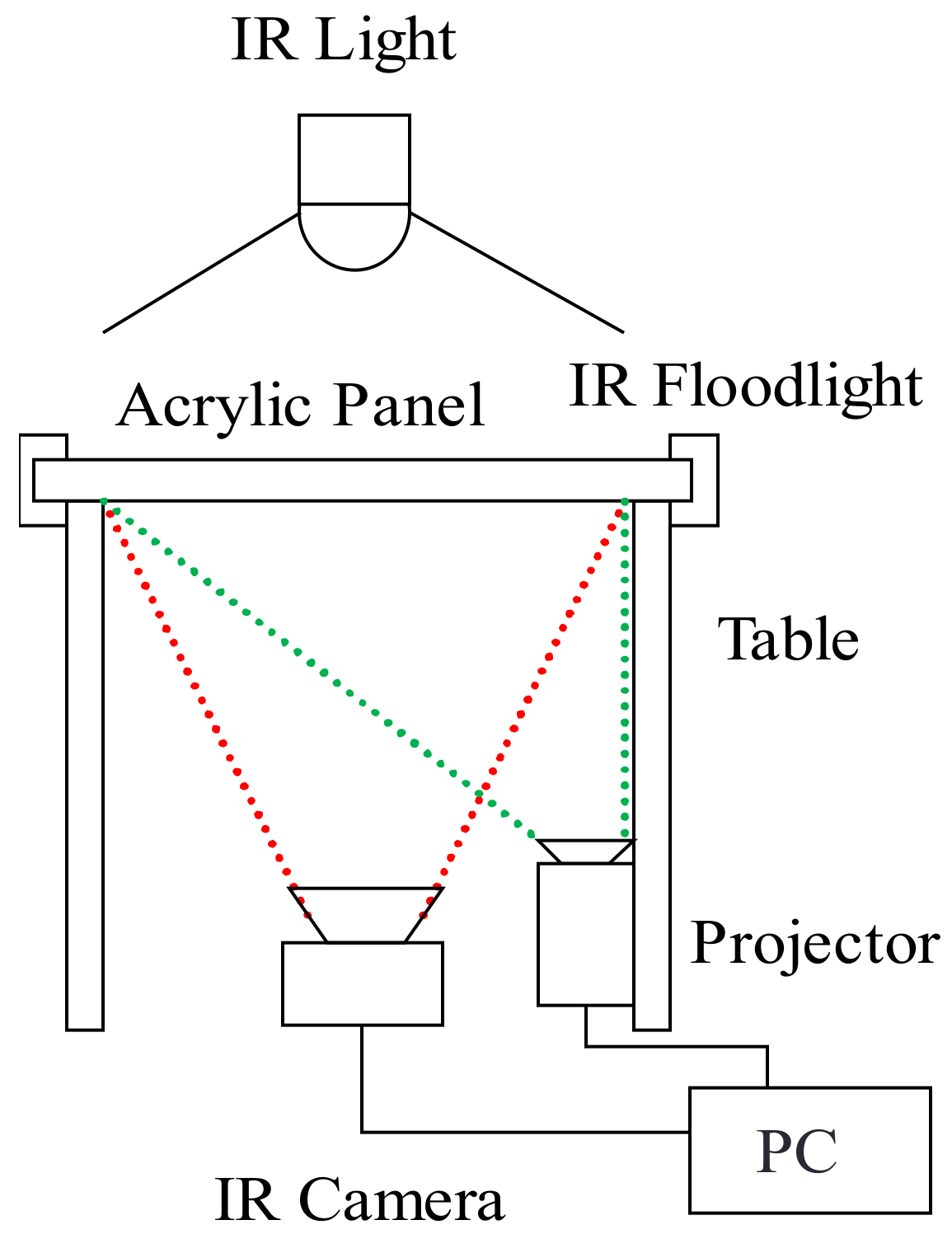

3. System Configuration

3.1. System Overview

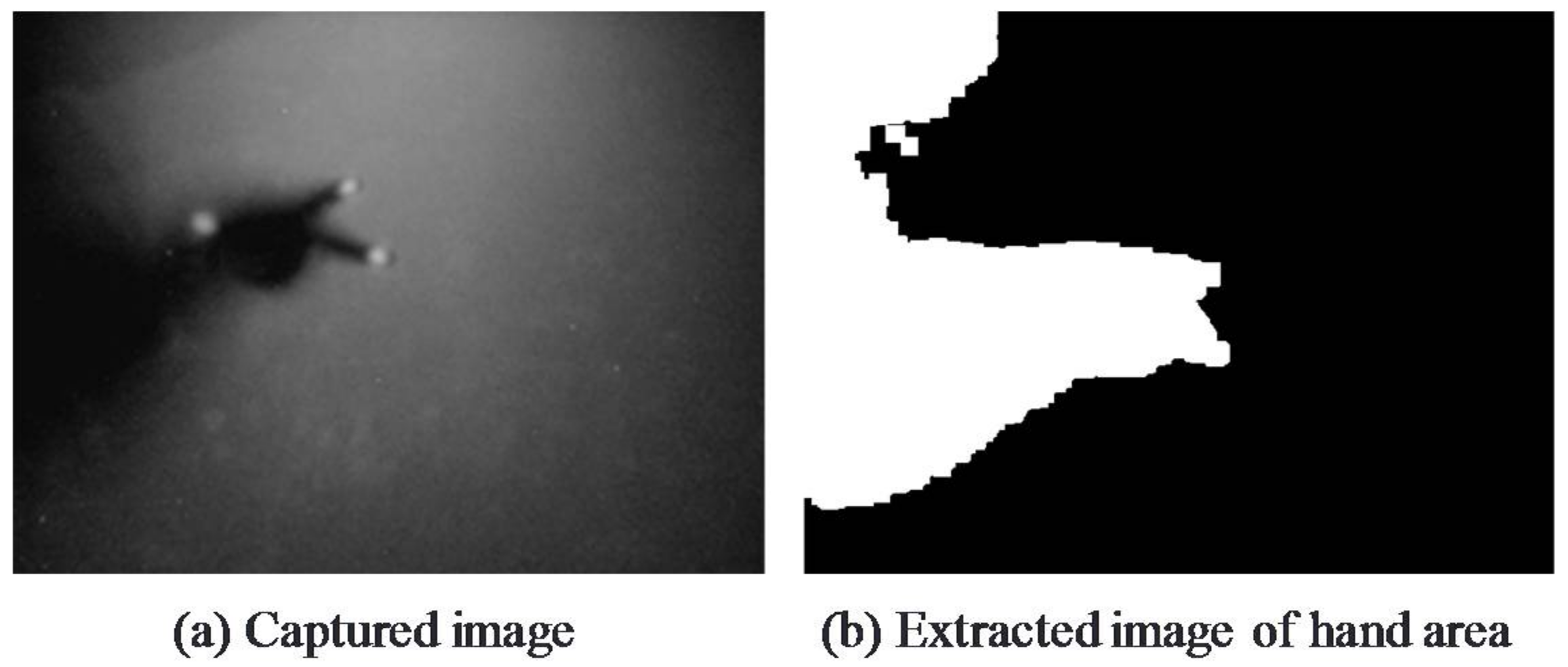

3.2. Overview of User-Position Estimation Technique

3.3. User-Position Estimation Model

3.3.1. Inclusive Relation between Touch Points and Hand Area

3.3.2. Model for Estimating User Position

3.4. User-Position Estimation Technique

- Scan the label L[Edge_d] of each edge d and count the number of pixels, Pixel_d, that have the same label as the hand label L[Hand].

- Find the value of d that maximizes Pixel_d, conclude that Hand extends from direction d, and infer that the user manipulating TP is located in direction d.
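The two steps above can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes the labeled infrared image is available as a 2D list of integer labels, that the candidate directions d are the four image edges, and that ties are broken by dictionary order. The function name `estimate_user_direction` and the edge names are hypothetical.

```python
from typing import Dict, List, Optional


def estimate_user_direction(labels: List[List[int]], hand_label: int) -> Optional[str]:
    """Return the edge d whose pixels most often carry the hand label.

    `labels` is a labeled image (one integer label per pixel). The edge
    names below are assumptions made for this sketch.
    """
    if not labels or not labels[0]:
        return None

    # Collect the label values L[Edge_d] along each of the four edges.
    edges: Dict[str, List[int]] = {
        "top": labels[0],
        "bottom": labels[-1],
        "left": [row[0] for row in labels],
        "right": [row[-1] for row in labels],
    }

    # Pixel_d: number of edge pixels that share the hand label L[Hand].
    pixel_counts = {d: sum(1 for v in px if v == hand_label) for d, px in edges.items()}

    # Pick the d that maximizes Pixel_d; report None if the hand touches no edge.
    best = max(pixel_counts, key=pixel_counts.get)
    return best if pixel_counts[best] > 0 else None


# A small labeled image in which region 2 (the hand) enters from the bottom edge.
example = [
    [0, 0, 0, 0, 0, 0],
    [0, 0, 2, 0, 0, 0],
    [0, 0, 2, 2, 0, 0],
    [0, 2, 2, 2, 0, 0],
    [0, 2, 2, 2, 2, 0],
]
direction = estimate_user_direction(example, hand_label=2)
```

Returning `None` when no edge pixel carries the hand label is a design choice of this sketch; it lets the caller distinguish an undetermined position from a confident estimate.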

3.5. Object Touch Gestures

- Move object: With one finger touching the object, this gesture moves the object by moving the fingertip. The system detects the finger movement and moves the object by the same distance and in the same direction as the finger.

- Zoom object in/out: With two fingers touching the object, this gesture zooms the object in or out by widening or narrowing the space between the fingertips. The system detects the movement of the two fingers, enlarging the object if the distance between them increases and shrinking it if the distance decreases.

- Rotate object: With two fingers touching the object, this gesture rotates the object by a finger-twisting action. The system calculates the angle of rotation from the inclination of the line between the two fingers and rotates the object accordingly.

- Change direction of object: With three fingers touching the object, this gesture changes the direction of the object to face the user. An example of changing the direction of an object by this gesture is shown in Figure 8.

- Copy object: On judging that two different users are each generating a touch point on a single object, the system duplicates that object. Specifically, if User B performs a single touch on an object while User A is performing a single touch on that object, the object is copied and placed at the position of User B’s touch point. An example of the copy gesture is shown in Figure 9.
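The geometry behind the move, zoom, and rotate gestures can be sketched as below. This is an illustrative sketch under assumptions, not the system's actual code: touch points are taken to be (x, y) tuples in screen coordinates, and the function names `move_delta`, `zoom_factor`, and `rotation_angle` are hypothetical.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def move_delta(before: Point, after: Point) -> Point:
    """Translation to apply to the object for a one-finger move."""
    return (after[0] - before[0], after[1] - before[1])


def zoom_factor(a0: Point, b0: Point, a1: Point, b1: Point) -> float:
    """Scale factor: ratio of the new finger spacing to the old one."""
    old_dist = math.dist(a0, b0)
    new_dist = math.dist(a1, b1)
    if old_dist == 0:
        raise ValueError("initial touch points coincide")
    return new_dist / old_dist


def rotation_angle(a0: Point, b0: Point, a1: Point, b1: Point) -> float:
    """Change in inclination of the line through the two fingers, in radians."""
    before = math.atan2(b0[1] - a0[1], b0[0] - a0[0])
    after = math.atan2(b1[1] - a1[1], b1[0] - a1[0])
    delta = after - before
    # Wrap the difference into the range (-pi, pi].
    return math.atan2(math.sin(delta), math.cos(delta))


# Two fingers start 1 unit apart on the x-axis; one finger then moves away
# (zoom), or swings a quarter turn around the other (rotate).
scale = zoom_factor((0, 0), (1, 0), (0, 0), (2, 0))
angle = rotation_angle((0, 0), (1, 0), (0, 0), (0, 1))
```

A scale factor above 1 corresponds to enlarging the object and a factor below 1 to shrinking it. Wrapping the angle keeps a small twist across the ±180° boundary from being read as a near-full rotation.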

4. System Implementation

4.1. Tabletop

4.2. Photo-Object Manipulation Application

5. Evaluation Experiments

5.1. Experimental Setup

5.2. Recognition Accuracy Experiment for Change-Direction Gesture

5.3. Recognition Accuracy Experiment for Copy Gesture

5.4. Results of System-Usability Evaluation and Discussion

6. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest

Appendix A

- I think that I would like to use this system frequently.

- I found the system unnecessarily complex.

- I thought the system was easy to use.

- I think that I would need the support of a technical person to be able to use this system.

- I found the various functions in this system were well integrated.

- I thought there was too much inconsistency in this system.

- I would imagine that most people would learn to use this system very quickly.

- I found the system very cumbersome to use.

- I felt very confident using the system.

- I needed to learn a lot of things before I could get going with this system.
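The ten statements above are the standard System Usability Scale (SUS) items. As a brief sketch of how responses to them are conventionally scored (this follows the standard SUS scoring rule; it is not taken from the text of this article), assume each item is answered on a 1 to 5 scale from "strongly disagree" to "strongly agree". The function name `sus_score` is hypothetical.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Compute a SUS score (0 to 100) from ten responses on a 1-5 scale.

    Items are given in questionnaire order. Odd-numbered items are
    positively worded, even-numbered items negatively worded.
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly ten responses")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("each response must be between 1 and 5")

    total = 0
    for item_number, r in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += r - 1      # positive item: higher agreement is better
        else:
            total += 5 - r      # negative item: lower agreement is better
    return total * 2.5


neutral = sus_score([3] * 10)
best = sus_score([5, 1, 5, 1, 5, 1, 5, 1, 5, 1])
```

Each item contributes between 0 and 4 points, so the sum ranges from 0 to 40 and the factor of 2.5 maps it onto a 0 to 100 scale.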


| Operation | No. of Users | No. of Touches | Description |
|---|---|---|---|
| Move | 1 | 1 | Move object |
| Zoom in/out | 1 | 2 | Change object size |
| Rotate | 1 | 2 | Rotate object |
| Change direction | 1 | 3 | Change object’s direction to face user |
| Copy | 2 | 2 | Copy object |
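The table can be read as a lookup from (number of users, number of touches) to candidate operations. The sketch below illustrates that reading under assumptions: each touch point on an object is assumed to be already tagged with the estimated direction of the user who made it, and the name `classify_gesture` and the operation strings are hypothetical. Zoom and rotate share the same key, so both are returned as candidates and would have to be separated by the finger motion itself.

```python
from typing import Dict, List, Tuple

# (number of distinct users, number of touches) -> candidate operations
GESTURE_TABLE: Dict[Tuple[int, int], Tuple[str, ...]] = {
    (1, 1): ("move",),
    (1, 2): ("zoom", "rotate"),
    (1, 3): ("change_direction",),
    (2, 2): ("copy",),
}


def classify_gesture(touch_users: List[str]) -> Tuple[str, ...]:
    """Return candidate operations for the touches on one object.

    `touch_users` holds, for each touch point on the object, the
    estimated user direction (for example "bottom" or "left").
    An empty tuple means no operation in the table matches.
    """
    n_touches = len(touch_users)
    n_users = len(set(touch_users))
    return GESTURE_TABLE.get((n_users, n_touches), ())


one_user_two_fingers = classify_gesture(["bottom", "bottom"])
two_users_one_finger_each = classify_gesture(["bottom", "left"])
```

Keeping the mapping in a dictionary mirrors the table directly, so adding or changing an operation only requires editing one entry.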

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Suto, S.; Watanabe, T.; Shibusawa, S.; Kamada, M. Multi-Touch Tabletop System Using Infrared Image Recognition for User Position Identification. Sensors 2018, 18, 1559. https://doi.org/10.3390/s18051559
