This paper improves D-S theory by combining the accompanying (co-existing) rules of evidence with the processing of conflicts of evidence. In the implementation of D-S theory, an evidence framework is generated for each individual route segment, and each segment has associated evidence from the sensors. The following subsections first introduce the division of the route and then the resulting improvements to D-S theory.

3.3.2.1. The Evidence Framework Based on Sensor Changes in Target Routes

Before establishing the frame of discernment, the user walks the complete target business card route several times and collects the smartphone sensor data along it using any sensor data acquisition application. For each sensor, this study divides the route into segments according to the changes in its data. This yields a route segment set for each kind of sensor, namely, , , , , , and , which are used to establish the evidence framework based on the divided route segments. Each route segment must have at least one sensor change feature. The combination of change features within a route segment builds up the evidence framework for that segment.

The first kind of sensor is the magnetometer, an instrument that measures the direction and strength of the magnetic field at a particular location. At a fixed point, the geomagnetic field can be decomposed into two horizontal components and a component perpendicular to the ground. The vector sum of the two horizontal components points to magnetic north, so the geomagnetism in the current environment can be identified using only the x- and y-component data of the magnetometer. In some locations the magnetometer readings are stable, but they change when the surrounding environment changes. Specifically, the readings may exhibit a sudden increase or decrease, and the magnitude of the change can reach 20 μT or more. Both the changes and the lack of change can therefore be used as evidence for discernment. For example, Figure 2 illustrates two turns and the associated significant changes in the magnetometer data. Consequently, this route can be divided into five segments based on the change features exhibited by the magnetometer, which indicate turns along the route. Based on this division, this study generates the route segment sets

and

according to the x- and y-component data of magnetometer individually. The route segment set

in

Figure 2a, and

in

Figure 2b.
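The stable/changing division of one magnetometer component can be sketched as follows. This is a minimal illustration rather than the implementation used in this study: the function name `segment_by_change`, the window length, and the use of the windowed range as the change measure are assumptions. Only the 20 μT jump size and the status codes (1 = stable, 2 = obvious change) come from the text.

```python
from typing import List, Tuple


def segment_by_change(values: List[float], window: int = 5,
                      jump: float = 20.0) -> List[Tuple[int, int, int]]:
    """Split a series into (start, end, status) runs.

    status 1 = stable, status 2 = obvious change. A sample is marked as
    changing when the range of the readings inside the window centred on
    it reaches `jump` (in microtesla). The window size is an assumption.
    """
    n = len(values)
    half = window // 2
    status = []
    for k in range(n):
        chunk = values[max(0, k - half):min(n, k + half + 1)]
        status.append(2 if max(chunk) - min(chunk) >= jump else 1)

    # Merge consecutive samples with the same status into one segment.
    segments = []
    start = 0
    for k in range(1, n + 1):
        if k == n or status[k] != status[start]:
            segments.append((start, k - 1, status[start]))
            start = k
    return segments


# Synthetic x-component: stable near 30 uT, then a jump to 55 uT.
readings = [30.0] * 10 + [55.0] * 10
print(segment_by_change(readings))
```

The indices returned are sample indices; mapping them to positions along the route would require the walking distance or timestamps recorded with each sample.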

The second kind of sensor is the gyroscope, which detects the angular velocity of a moving object and therefore allows turns to be recognized. During a turn, the absolute value of the angular velocity measured by the gyroscope increases significantly, whereas during smooth walking the z-axis value of the gyroscope is nearly zero. The positions of left and right turns correspond to the peaks and troughs of the gyroscope data, respectively, as shown in Figure 3. Any simple moving-window algorithm can detect these peaks and troughs. The detected peaks and troughs can be used to divide the target route into segments for constructing the evidence framework, such as the route segment set

in

Figure 3.
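A simple moving-window detector of the kind mentioned above might look like the following sketch. The function name `find_turns`, the window length, and the minimum angular rate `min_rate` are illustrative assumptions; the mapping of peaks to left turns and troughs to right turns follows the text.

```python
from typing import List, Tuple


def find_turns(gyro_z: List[float], window: int = 5,
               min_rate: float = 1.0) -> List[Tuple[int, str]]:
    """Return (sample index, turn label) for peaks and troughs.

    A sample is a peak if it is the maximum of the window centred on it
    and at least `min_rate`; a trough is the mirrored case. A flat-topped
    peak would be reported once per equal sample, so real data may need
    an extra de-duplication step.
    """
    half = window // 2
    n = len(gyro_z)
    turns = []
    for k, v in enumerate(gyro_z):
        chunk = gyro_z[max(0, k - half):min(n, k + half + 1)]
        if v >= min_rate and v == max(chunk):
            turns.append((k, "left"))    # peak -> left turn
        elif v <= -min_rate and v == min(chunk):
            turns.append((k, "right"))   # trough -> right turn
    return turns


gyro = [0, 0, 0.5, 1.5, 2.0, 1.2, 0.2, 0, 0, -0.4, -1.6, -2.2, -1.0, 0, 0]
print(find_turns(gyro))  # [(4, 'left'), (11, 'right')]
```

The detected indices act as segment points: the route is cut at each turn, and the stretches between turns are the straight-walking segments.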

The third kind of sensor is the light meter. Figure 4 illustrates an example of light intensity data in variable and stable environments, which can be distinguished by the first difference of the light intensity, and indicates the segments divided by light intensity. Five thresholds

were trialed as the first difference in light intensities. The experiment showed that a threshold of

resulted in segmentation that was close to reality. Each segment had a unique light characteristic, either of fluctuation or stability. The route segment set

in

Figure 4.
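The first-difference segmentation of the light data can be sketched as below. The function name `light_segments` and the threshold used in the usage example are placeholders; the threshold must be chosen experimentally for the data at hand, as described above.

```python
from typing import List, Tuple


def light_segments(lux: List[float],
                   threshold: float) -> List[Tuple[int, int, str]]:
    """Split light readings into stable and fluctuating runs.

    Each step between consecutive samples is labelled by comparing the
    absolute first difference with `threshold`; equal neighbouring
    labels are merged. A run (start, end, label) covers samples
    start..end inclusive.
    """
    if len(lux) < 2:
        return []
    labels = ["fluctuating" if abs(b - a) > threshold else "stable"
              for a, b in zip(lux, lux[1:])]
    runs = []
    start = 0
    for k in range(1, len(labels) + 1):
        if k == len(labels) or labels[k] != labels[start]:
            runs.append((start, k, labels[start]))
            start = k
    return runs


lux = [100, 102, 101, 103, 400, 90, 380, 120, 118, 119]
print(light_segments(lux, threshold=50))
# [(0, 3, 'stable'), (3, 7, 'fluctuating'), (7, 9, 'stable')]
```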

The remaining sensors are Wi-Fi and GPS, and the processes associated with them are very similar. A Wi-Fi signal covers a certain range, and the mobile phone can receive the signal anywhere within that range. Therefore, the Wi-Fi Media Access Control (MAC) addresses observed along the target route can be used to divide it into segments. Each segment has a limited set of Wi-Fi MAC addresses, and neighboring segments have different sets. For example, the route segment set

in

Figure 5. Similarly, the route can be divided into segments according to the change of the latitude and longitude of GPS data along the segments. The route segment set

in

Figure 6.
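The Wi-Fi division can be illustrated by cutting the route wherever the set of visible MAC addresses changes. This is a simplified sketch: the function name `wifi_segments` and the shortened MAC strings are hypothetical, and real scans would probably need smoothing because access points appear and disappear between consecutive scans.

```python
from typing import FrozenSet, Iterable, List, Tuple


def wifi_segments(scans: List[Iterable[str]]) -> List[Tuple[int, int, FrozenSet[str]]]:
    """Group consecutive scans that see the same set of MAC addresses.

    Returns (first scan index, last scan index, MAC set) for each run.
    """
    runs: List[Tuple[int, int, FrozenSet[str]]] = []
    for k, macs in enumerate(scans):
        current = frozenset(macs)
        if runs and runs[-1][2] == current:
            # Same MAC set as the previous scan: extend the open run.
            runs[-1] = (runs[-1][0], k, current)
        else:
            runs.append((k, k, current))
    return runs


scans = [{"aa"}, {"aa"}, {"aa", "bb"}, {"aa", "bb"}, {"bb"}]
for run in wifi_segments(scans):
    print(run)
```

The same run-merging idea applies to GPS if each fix is first mapped to a discrete cell of latitude and longitude.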

This study divides the target route into many small segments by intersecting the route segment sets of all sensors. The resulting set of segments is viewed as the frame of discernment,

. A schematic diagram of the derived framework is shown in

Figure 7. Each tiny route segment has a characteristic combination of sensors, namely,

. In this implementation,

is represented as:

where

i is the serial number of a route segment in the target route, and

is the discernment within route segment

i. The status of magnetometer data along the x- and y-axis

and

is such that 1 indicates that the data are stable and 2 indicates an obvious change in the data.

is the average of the x-component of the magnetometer data when the status is 1.

are the maximum, minimum, and slope of magnetometer data in the x-orientation.

have similar meanings in the y-orientation.

is the status of walking reflected by the gyroscope data,

, which indicates turning left, walking straight ahead, and turning right, respectively.

and

indicate the number of Wi-Fi MAC addresses and Wi-Fi RSSI respectively along the route segment and

and

are the corresponding lists.

represent the maximum, minimum, and average light values in this route segment.

are the longitude and latitude of the start and end points of the route segment.

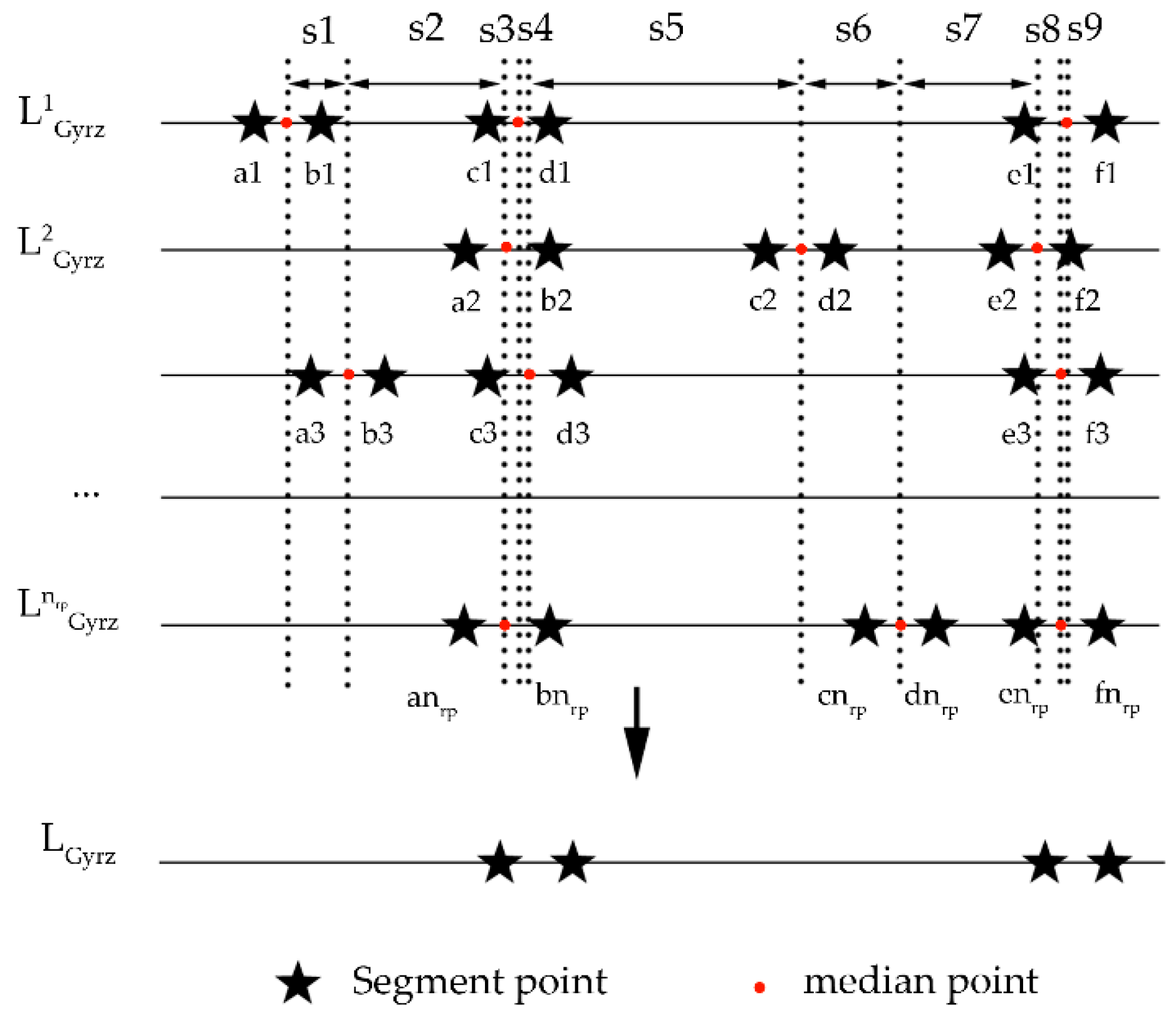
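The intersection of the per-sensor segment sets can be sketched by merging the segment boundaries of all sensors. In this illustration each sensor's segmentation is represented by its breakpoints measured as distance along the route; this representation and the name `intersect_partitions` are assumptions made for the example.

```python
from typing import Iterable, List, Tuple


def intersect_partitions(route_length: float,
                         *breakpoints_per_sensor: Iterable[float]
                         ) -> List[Tuple[float, float]]:
    """Return the small segments obtained by overlaying all partitions.

    Every breakpoint of every sensor becomes a boundary of the combined
    partition, so each resulting segment lies inside exactly one segment
    of each sensor.
    """
    cuts = {0.0, float(route_length)}
    for breakpoints in breakpoints_per_sensor:
        cuts.update(float(b) for b in breakpoints if 0 < b < route_length)
    ordered = sorted(cuts)
    return list(zip(ordered, ordered[1:]))


# Hypothetical breakpoints (metres) from three sensors on a 100 m route.
print(intersect_partitions(100, [30, 60], [45], [60, 80]))
# [(0.0, 30.0), (30.0, 45.0), (45.0, 60.0), (60.0, 80.0), (80.0, 100.0)]
```

Each resulting segment would then be annotated with the sensor statuses and statistics described above to form one element of the frame of discernment.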

The evidence framework described above is determined from a single data source. To a certain extent, such a framework can contain incorrect segments caused by external interference. For example, when the collector passes a temporarily parked vehicle along the target road, the magnetometer readings fluctuate and generate redundant segment points. Likewise, when the data collector changes direction to avoid a pedestrian, the gyroscope readings reach a peak or trough, which also affects the correctness of the framework. To eliminate such interference, this paper optimizes the framework using multiple repeated data sources. In this paper, the data of each sensor were collected

times. They were divided as

according to the above method. The segmentation common to these frameworks was then taken as the optimized framework of the sensors.

Figure 8 illustrates all frameworks of the gyroscope.

is the framework segmentation result of gyroscope in the collection of the

times, and

is the distance between two adjacent median points of a given route segment. Since the accuracy of the GPS is about 15 m, two points less than 15 m apart are considered to be the same position. Hence, this paper uses 15 m as the threshold and removes segment points whose median points are far from the other median points. Moreover, the section between two deleted segment points is assigned the same characteristic as the preceding section. For example, the characteristic of the deleted section

is a straight walk, which is identical to that of the sections

. After finding this common segmentation, this paper obtains a framework suitable for the possible pedestrian navigation situations.
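One possible reading of the common-segmentation step is sketched below. It is an interpretation, not the exact procedure of this study: a segment point from the first collection is kept only if every other collection contains a segment point less than 15 m away, and the kept point is replaced by the median of its matches. The function name `common_segment_points` and the use of distance along the route are assumptions.

```python
from statistics import median
from typing import List


def common_segment_points(runs: List[List[float]],
                          tol: float = 15.0) -> List[float]:
    """Keep the segment points that recur in every repeated collection.

    runs[k] holds the segment-point positions (metres along the route)
    found in collection k. Points without a partner within `tol` metres
    in some collection are treated as interference and dropped.
    """
    if not runs:
        return []
    kept = []
    for p in runs[0]:
        matched = [p]
        for other in runs[1:]:
            near = [x for x in other if abs(x - p) < tol]
            if not near:
                break  # no partner in this collection: drop the point
            matched.append(min(near, key=lambda x: abs(x - p)))
        else:
            kept.append(median(matched))
    return kept


# The point at 120 m appears in one collection only (for example, a detour).
runs = [[50, 120, 200], [55, 205], [48, 198]]
print(common_segment_points(runs))  # [50, 200]
```

After a point is dropped, the two sections it separated merge, which matches the rule above that the deleted section inherits the characteristic of the preceding section.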

3.3.2.2. Basic Belief Assignment

Co-existing relationships of evidence are common among the smartphone sensors used to locate pedestrians, as a result of similarities in activity behaviors and constraints in the navigation environment. The best evidence is usually provided by sensors whose co-existing relationships reflect the uniqueness of the actual scene in space and time. Therefore, the basic rule for selecting co-existing relationships between sensors is twofold: the sensor data feature can be readily detected by simple filtering algorithms, such as moving windows, and the combination of sensors in each route segment, or the sensor features, differs clearly from those of the neighboring route segments. For smartphone sensors in pedestrian navigation environments, the data from the magnetometer, Wi-Fi, and GPS are independent environmental information, and the data obtained in each route segment can serve as evidence of a unique environment. The gyroscope mainly detects changes (turns) during the journey, so it can be added to the evidence framework when the real-time gyroscope data exhibit changing features. For example, Figure 9 shows the co-existing relationship of sensor signals in a target route. Areas #1 and #2 are two subset locations of the framework, and the sensor data characteristics of the two subsets are listed in Table 1. In area #1, the x- and y-components of the magnetometer data show a sudden increase and a sudden decrease, respectively; the gyroscope shows features consistent with a right turn; and the light data are in keeping with features observed outdoors. There is therefore a co-existing relationship between the signals of the x- and y-components of the magnetometer, the gyroscope z-component, and the light level, and the values of these four sensors in area #1 can be viewed as an evidence combination for defining the basic belief probability distribution. In area #2, by contrast, the gyroscope exhibits stable behavior and so has no co-existing relationship of evidence with the other sensors. The combination of evidence and the probability distribution therefore include only the data of sensors such as the magnetometer x- and y-components, light, and Wi-Fi.

After selecting sensors as a co-existing sensor combination for each route segment, our proposed approach calculates the similarity of the real-time data of co-existing sensors with a predefined evidence framework, according to the following Equations (6)–(15):

where

Here, is the weight relative to the weight of the Wi-Fi MAC address when matching the sensors with the predefined evidence framework. and are the RSSI measurement values of Wi-Fi access point i in the evidence framework and in the real-time data set, respectively.

The light sensor is more complex than the other sensors because the intensity of light varies greatly with time and weather. The most accurate match result is obtained when the light condition of

is similar to the

. Hence, this paper introduces a light similarity method which considers the continuity of data. In this method, the intensities of light under different light conditions are processed according to

Section 3.3.2.1. The light segment result can be obtained as

,

denotes the number of light conditions, such as day, night, sunny day, cloudy day, etc.

is the

section when

. Then, we calculate the

of real-time data with

according to Equation (13) in

times. The number of successful match results in different light conditions is represented as

. If

and

, we regard the

as the light condition most similar to the real-time data. Finally, the route segment whose light condition is

is selected.

Table 2 gives an example of the selection of a light segment.

represents the

real-time light data.

represents the match result of segment data under different light conditions.

where

,

,

,

and

represent the similarity of the magnetometer, gyroscope, Wi-Fi, light, and latitude and longitude.

,

,

,

,

are the real-time data of sensors that need to be checked for matching the location of pedestrians with the route segment of the evidence framework. Therefore, for real-time data of sensors, this approach will generate a similarity matrix as follows:

where

is the number of discernments in the frame of evidence theory.

To improve the error tolerance of the matching process, this study finds the five most similar discernments in the frame of evidence using Equations (6)–(15) and ranks them with levels of 1–5. Table 3 lists the values of 1–5 and their corresponding evaluation characteristics. Equation (17) gives an example of a similarity matrix with 6 discernments (left part) and its probability matrix (right part).

This study uses Equation (18) to calculate the probabilities of the five most similar discernments. The probability is used to build up a basic belief assignment of each sensor for real-time sensor data.

where

denotes the probability value of the focal element whose similarity level is

. The right part of Equation (17) shows the probability matrix corresponding to the similarity matrix of the left part of Equation (17).
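The ranking step can be illustrated for one sensor (one row of the similarity matrix) as follows. This sketch covers only the selection of the five most similar discernments; it assumes that a larger similarity value means a better match and that level 1 denotes the most similar discernment, and it does not reproduce the probability assignment of Equation (18). The name `top_levels` is hypothetical.

```python
from typing import Dict, List


def top_levels(similarities: List[float], k: int = 5) -> Dict[int, int]:
    """Map the indices of the k most similar discernments to levels 1..k.

    similarities[i] is the similarity between the real-time data of one
    sensor and route segment i. Ties keep their original order.
    """
    order = sorted(range(len(similarities)),
                   key=lambda i: similarities[i], reverse=True)
    return {segment: level
            for level, segment in enumerate(order[:k], start=1)}


# One sensor, six discernments (values are made up for illustration).
row = [0.2, 0.9, 0.5, 0.7, 0.1, 0.6]
print(top_levels(row))  # {1: 1, 3: 2, 5: 3, 2: 4, 0: 5}
```

Discernments outside the top five (segment 4 in this example) receive no level and therefore no focal-element probability for that sensor.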

By combining the co-existing rules of evidence, this study proposes an improved evidence combination formula, as follows.

where

is a normalization constant,

is the BPA of evidence

, and

is the subset of evidence framework.

means that only the evidence

with characteristic changes is used in the basic belief assignment and evidence combination.
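For reference, the classical Dempster rule of combination, on which the improved formula builds, can be sketched as follows. This is the standard rule, not the improved formula of this study; in the improved version only the evidence of sensors with characteristic changes would be passed to the combination. The segment labels `s1` to `s3` and the mass values are made up for illustration.

```python
from itertools import product
from typing import Dict, FrozenSet

Mass = Dict[FrozenSet[str], float]


def dempster_combine(m1: Mass, m2: Mass) -> Mass:
    """Combine two basic probability assignments with Dempster's rule.

    Products of masses whose focal elements intersect are accumulated on
    the intersection; products with an empty intersection form the
    conflict, which is removed by normalisation.
    """
    combined: Mass = {}
    conflict = 0.0
    for (a, wa), (b, wb) in product(m1.items(), m2.items()):
        inter = a & b
        if inter:
            combined[inter] = combined.get(inter, 0.0) + wa * wb
        else:
            conflict += wa * wb
    if 1.0 - conflict < 1e-12:
        raise ValueError("total conflict: Dempster's rule is undefined")
    return {s: v / (1.0 - conflict) for s, v in combined.items()}


theta = frozenset({"s1", "s2", "s3"})  # whole frame of discernment
m_mag = {frozenset({"s1"}): 0.6, frozenset({"s2"}): 0.3, theta: 0.1}
m_wifi = {frozenset({"s1"}): 0.5, frozenset({"s3"}): 0.4, theta: 0.1}
print(dempster_combine(m_mag, m_wifi))
```

In this example the conflict is 0.51, and after normalisation segment `s1` receives a mass of 0.41/0.49.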

3.3.2.3. Conflict of Evidence Processing

In the process of evidence combination, two factors affect the accuracy of the sensor data: data latency and external interference. Conflicts of evidence may therefore arise when matching the sensor data with the evidence, and they must be dealt with during evidence combination. Several approaches have been proposed to manage conflicts in D-S evidence theory, such as averaging [65], combining conflicting evidence [67], and weighting evidence (evidence pretreatment) [68,69]. Assigning a small probability value to the frame of discernment is a practical operation when determining the basic belief assignment. Therefore, this study combines the Yager combination rule [66] with weighted evidence to handle the conflict of evidence.
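The Yager combination rule differs from Dempster's rule in how the conflict is treated: instead of being normalised away, the conflicting mass is assigned to the whole frame of discernment. A minimal sketch of the standard Yager rule follows; the segment labels and mass values are illustrative assumptions.

```python
from typing import Dict, FrozenSet

Mass = Dict[FrozenSet[str], float]


def yager_combine(m1: Mass, m2: Mass, frame: FrozenSet[str]) -> Mass:
    """Combine two basic probability assignments with Yager's rule.

    Intersecting products are accumulated as in Dempster's rule, but the
    conflicting mass is added to the whole frame (treated as ignorance)
    and no normalisation is applied.
    """
    combined: Mass = {}
    conflict = 0.0
    for a, wa in m1.items():
        for b, wb in m2.items():
            inter = a & b
            if inter:
                combined[inter] = combined.get(inter, 0.0) + wa * wb
            else:
                conflict += wa * wb
    combined[frame] = combined.get(frame, 0.0) + conflict
    return combined


theta = frozenset({"s1", "s2", "s3"})
m_mag = {frozenset({"s1"}): 0.6, frozenset({"s2"}): 0.3, theta: 0.1}
m_wifi = {frozenset({"s1"}): 0.5, frozenset({"s3"}): 0.4, theta: 0.1}
print(yager_combine(m_mag, m_wifi, theta))
```

With these inputs the conflict of 0.51 moves to the frame, which then holds 0.52, while `s1` keeps its unnormalised mass of 0.41.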

Weighting evidence is crucial to improving the accuracy of the evidence combination results in the case of different evidence association rules. The weights are usually assigned subjectively, or can be calculated objectively when historical data sets are available [70]. In this study, the sensor data sets of the target route are collected in advance to build the frame of discernment. Hence, they can be preprocessed with objective calculations to obtain the weights of the evidence before the evidence is combined. The process of determining each sensor's weight is described as follows:

Figure 10 illustrates an example of calculating the matching errors for assigning weights of sensor

i. Let

indicate the number of sensors,

represent the number of repeated collections of the historical data set along the target route,

is the frame of discernment of the target route, and

u is the number of labelled points. The matching error can be estimated by comparing the location of the labelled points with the corresponding matched route segments.

indicates the matched route segment of sensor

i at times

for the corresponding labelled point

. This study assigns the weight of sensor

i according to the basic rule that the smaller the matching error of a sensor, the higher its weight. Therefore, the weight of sensor

i is defined as follows:

where

is the location of labelled point

in

, and

represent the start and end locations of

. For example, in

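Figure 10, the calculated distances between the matched points and labelled point 1 are $D_{1,1}, D_{1,2}, \ldots, D_{1,q-1}, D_{1,q}$. Here, the distances are averaged over the three labelled points and the $q$ collections as

$$\frac{\left(D_{1,1} + D_{1,2} + \cdots + D_{1,q-1} + D_{1,q}\right) + \left(D_{2,1} + D_{2,2} + \cdots + D_{2,q-1} + D_{2,q}\right) + \left(D_{3,1} + D_{3,2} + \cdots + D_{3,q-1} + D_{3,q}\right)}{3q}$$

in the case of sensor i.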
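The averaging of the matching distances and the rule that a smaller matching error gives a higher weight can be sketched as follows. The averaging follows the example above; the conversion from errors to weights by normalised inverse error is only one possible choice and is an assumption of this sketch, not the weight definition of this study. The function names and the numerical values are hypothetical.

```python
from typing import Dict, List


def mean_matching_error(distances: List[List[float]]) -> float:
    """Average the distances over all labelled points and collections.

    distances[j][k] is the distance between labelled point j and the
    segment matched by the sensor in collection k.
    """
    total = sum(sum(row) for row in distances)
    count = sum(len(row) for row in distances)
    return total / count


def inverse_error_weights(errors: Dict[str, float],
                          eps: float = 1e-9) -> Dict[str, float]:
    """Assumed weighting: weight proportional to 1 / error, summing to 1."""
    inverse = {name: 1.0 / (err + eps) for name, err in errors.items()}
    norm = sum(inverse.values())
    return {name: value / norm for name, value in inverse.items()}


# Three labelled points, two collections (distances in metres, made up).
print(mean_matching_error([[2, 4], [6, 8], [1, 3]]))  # 4.0
print(inverse_error_weights({"magnetometer": 2.0, "wifi": 4.0}))
```

Any monotonically decreasing mapping from error to weight would satisfy the stated rule; the inverse is used here only because it is simple to normalise.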

Then, this study improves the theoretical formula for evidence by employing two strategies. The first is to assign a small probability to the whole frame

, which makes the inconsistency of evidence negligible [67]. The other strategy is to add the weights of the sensors belonging to the evidence, which reflect the accompanying influence of the sensors on the estimated results. Therefore, the improved evidence combination formula can be further defined as

where

denotes the weight of evidence

.
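The effect of weighting evidence while reserving some mass for the whole frame can be illustrated with the classical Shafer discounting operation. This is a standard technique shown for intuition only and is not claimed to be identical to the improved formula of this study: each focal mass is scaled by the weight of its sensor, and the remainder is moved to the frame of discernment. The name `discount` and the numerical values are illustrative.

```python
from typing import Dict, FrozenSet

Mass = Dict[FrozenSet[str], float]


def discount(m: Mass, weight: float, frame: FrozenSet[str]) -> Mass:
    """Shafer discounting of a basic probability assignment.

    Masses of proper subsets are multiplied by `weight` (0..1); the mass
    removed in this way is transferred to the whole frame, so that less
    reliable sensors contribute more ignorance and less conflict.
    """
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must lie in [0, 1]")
    out = {s: weight * v for s, v in m.items() if s != frame}
    out[frame] = 1.0 - weight + weight * m.get(frame, 0.0)
    return out


theta = frozenset({"s1", "s2", "s3"})
m_light = {frozenset({"s1"}): 0.7, frozenset({"s2"}): 0.2, theta: 0.1}
print(discount(m_light, 0.8, theta))
```

With a weight of 0.8, the mass of `s1` drops from 0.7 to 0.56 and the frame rises from 0.1 to 0.28; the discounted assignments of all sensors could then be combined with a combination rule.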