Detecting Malicious False Frame Injection Attacks on Surveillance Systems at the Edge Using Electrical Network Frequency Signals

Abstract

1. Introduction

- The feasibility of frame duplication attacks at the edge has been investigated, and an attack that adapts to its environment and is triggered automatically has been implemented and tested;

- The authenticity of ENF signals is validated using signal traces collected at multiple locations within the same power grid;

- A robust method is proposed to extract ENF fluctuations from audio recordings and to compare them with the reference ENF signal captured from the power mains using the cross-correlation coefficient;

- The relationship between the strength of the acoustic mains hum and the signal-to-noise ratio (SNR) of the ENF signal is verified;

- The effectiveness and correctness of the proposed detection scheme are validated through an experimental study using real-world ENF signal traces.
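The extraction-and-comparison step in the third contribution can be illustrated with a minimal sketch. It assumes a 60 Hz nominal mains frequency (as in North American grids), tracks the strongest spectral peak within ±0.2 Hz of it in overlapping 2 s Hann-windowed frames (a common STFT-style peak-tracking approach, not necessarily the paper's exact procedure), refines each peak by parabolic interpolation, and scores the match against a reference ENF trace with the Pearson correlation coefficient. The function names, frame sizes, noise level and the synthetic "recording" are hypothetical; the Goertzel recursion stands in for a full FFT so that only the standard library is needed.

```python
import math
import random


def goertzel_power(x, freq, fs):
    """Return |sum_n x[n] exp(-j 2 pi freq n / fs)|^2 via the Goertzel recursion."""
    coeff = 2.0 * math.cos(2.0 * math.pi * freq / fs)
    s1 = s2 = 0.0
    for sample in x:
        s1, s2 = sample + coeff * s1 - s2, s1
    return s1 * s1 + s2 * s2 - coeff * s1 * s2


def extract_enf(audio, fs, nominal=60.0, half_band=0.2, step=0.005,
                frame_sec=2.0, hop_sec=1.0):
    """Hypothetical ENF tracker: one peak-frequency estimate per frame,
    searched on a fine grid within +/-half_band Hz of the nominal frequency."""
    n, hop = int(frame_sec * fs), int(hop_sec * fs)
    win = [0.5 - 0.5 * math.cos(2.0 * math.pi * i / n) for i in range(n)]  # Hann
    grid = [nominal - half_band + k * step
            for k in range(int(round(2 * half_band / step)) + 1)]
    enf = []
    for start in range(0, len(audio) - n + 1, hop):
        frame = [a * w for a, w in zip(audio[start:start + n], win)]
        p = [goertzel_power(frame, f, fs) for f in grid]
        k = max(range(len(p)), key=p.__getitem__)
        f_hat = grid[k]
        if 0 < k < len(p) - 1:
            # Parabolic interpolation of the peak between neighbouring grid points
            denom = p[k - 1] - 2.0 * p[k] + p[k + 1]
            if denom != 0.0:
                f_hat += 0.5 * (p[k - 1] - p[k + 1]) / denom * step
        enf.append(f_hat)
    return enf


def pearson(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)


# Synthetic example (all values hypothetical): a 30 s "recording" sampled at
# 400 Hz containing a mains hum whose frequency follows true_enf(t), plus noise.
def true_enf(t):
    return 60.0 + 0.02 * math.sin(2.0 * math.pi * t / 10.0)


fs, dur = 400, 30
rng = random.Random(0)
phase, audio = 0.0, []
for i in range(fs * dur):
    phase += 2.0 * math.pi * true_enf(i / fs) / fs  # integrate instantaneous frequency
    audio.append(math.sin(phase) + 0.1 * rng.gauss(0.0, 1.0))

enf = extract_enf(audio, fs)
ref = [true_enf(1.0 + i) for i in range(len(enf))]  # frame centers: 1 s, 2 s, ...
corr = pearson(enf, ref)
print(f"frames={len(enf)} corr={corr:.3f}")
```

With an authentic recording the extracted trace follows the reference closely, whereas a mismatched reference (for example the time-reversed trace) yields a negative coefficient. A detector along these lines would compare the extracted ENF with a concurrently recorded grid reference over sliding windows and flag segments whose coefficient drops below a threshold; the threshold is a design choice not specified here.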
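The fourth contribution concerns how the strength of the acoustic mains hum affects the SNR of the captured ENF signal. The sketch below uses an illustrative SNR definition, the mean DFT bin power within ±0.5 Hz of 60 Hz relative to the mean bin power in the adjacent band out to ±2 Hz, which is an assumption rather than the paper's definition. It evaluates that measure on a synthetic 10 s recording in which the noise is held fixed and only the hum amplitude changes; the helper names and all numeric values are hypothetical.

```python
import math
import random


def goertzel_power(x, freq, fs):
    """Return |sum_n x[n] exp(-j 2 pi freq n / fs)|^2 via the Goertzel recursion."""
    coeff = 2.0 * math.cos(2.0 * math.pi * freq / fs)
    s1 = s2 = 0.0
    for sample in x:
        s1, s2 = sample + coeff * s1 - s2, s1
    return s1 * s1 + s2 * s2 - coeff * s1 * s2


def hum_snr_db(x, fs, nominal=60.0, sig_half=0.5, noise_half=2.0):
    """Illustrative SNR (hypothetical definition): mean bin power within
    +/-sig_half Hz of the nominal mains frequency divided by the mean bin
    power in the surrounding band out to +/-noise_half Hz, in dB."""
    n = len(x)
    # Periodic Hann window: an on-bin tone leaks only into its two neighbours
    xw = [v * (0.5 - 0.5 * math.cos(2.0 * math.pi * i / n)) for i, v in enumerate(x)]
    k0 = round(nominal * n / fs)   # DFT bin of the nominal frequency
    ks = int(sig_half * n / fs)    # half-width of the signal band, in bins
    kn = int(noise_half * n / fs)  # half-width of the outer band, in bins
    sig, noise = [], []
    for k in range(k0 - kn, k0 + kn + 1):
        p = goertzel_power(xw, k * fs / n, fs)
        (sig if abs(k - k0) <= ks else noise).append(p)
    return 10.0 * math.log10((sum(sig) / len(sig)) / (sum(noise) / len(noise)))


# Same recording noise, increasing hum amplitude (all values hypothetical)
fs, dur = 400, 10
rng = random.Random(1)
noise = [0.5 * rng.gauss(0.0, 1.0) for _ in range(fs * dur)]
snrs = []
for amp in (0.02, 0.2, 2.0):
    x = [amp * math.sin(2.0 * math.pi * 60.0 * i / fs) + v for i, v in enumerate(noise)]
    snrs.append(hum_snr_db(x, fs))
print([round(s, 1) for s in snrs])
```

Because the noise realization is identical across runs, the only change is the hum amplitude, so the measured SNR rises with it; once the hum dominates the signal band, a tenfold amplitude increase adds roughly 20 dB.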

2. Background Knowledge and Related Work

2.1. Attacks on a Surveillance System

2.2. Electrical Network Frequency Signals

2.3. ENF Signal Applications

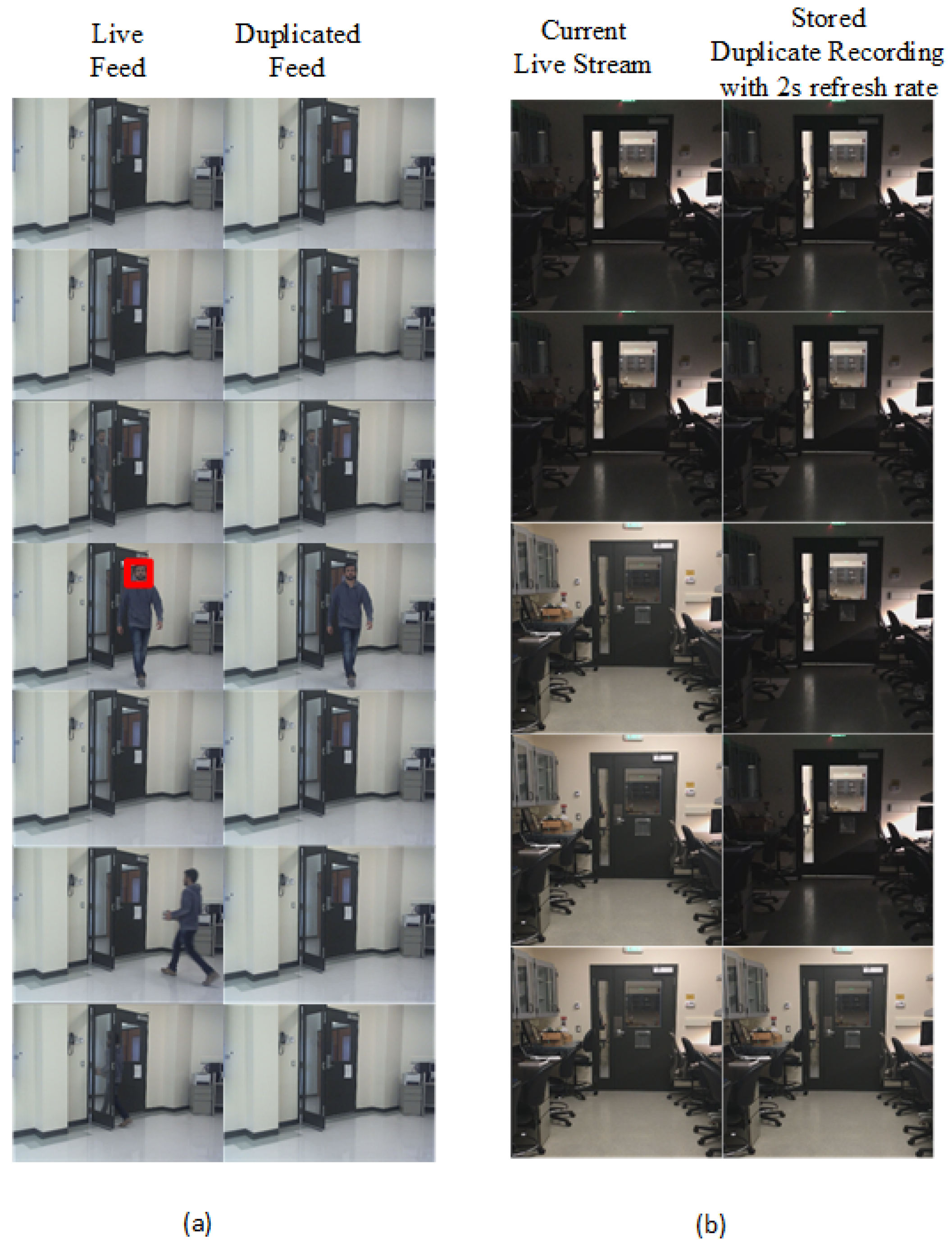

3. Real-Time Frame Duplication Attack Implementation

3.1. Overview

3.2. Attack Algorithm Functionality

4. Detecting Malicious Frame Injection Attacks Using ENF Signals

4.1. Applied Model

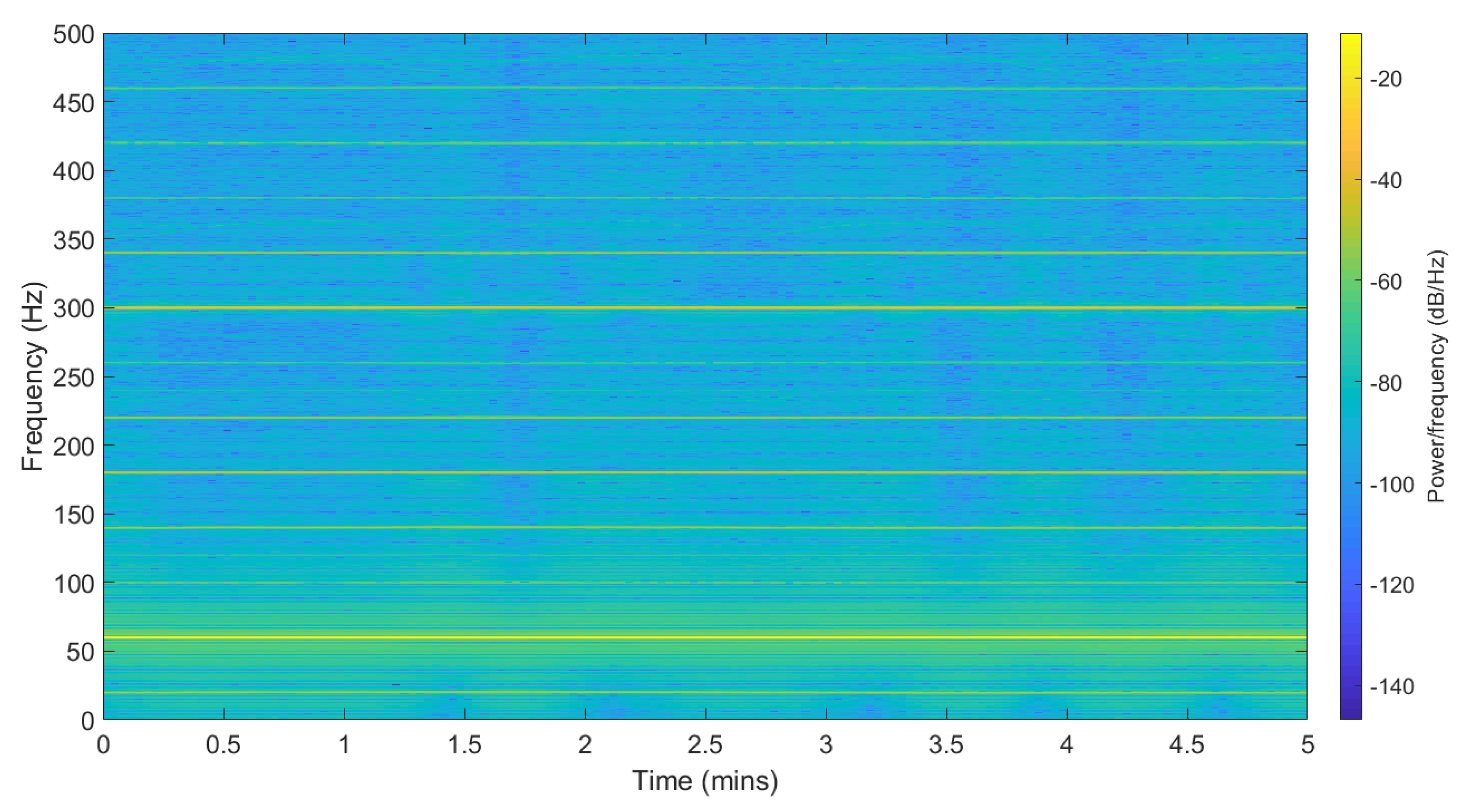

4.2. Robust Extraction of ENF Signals

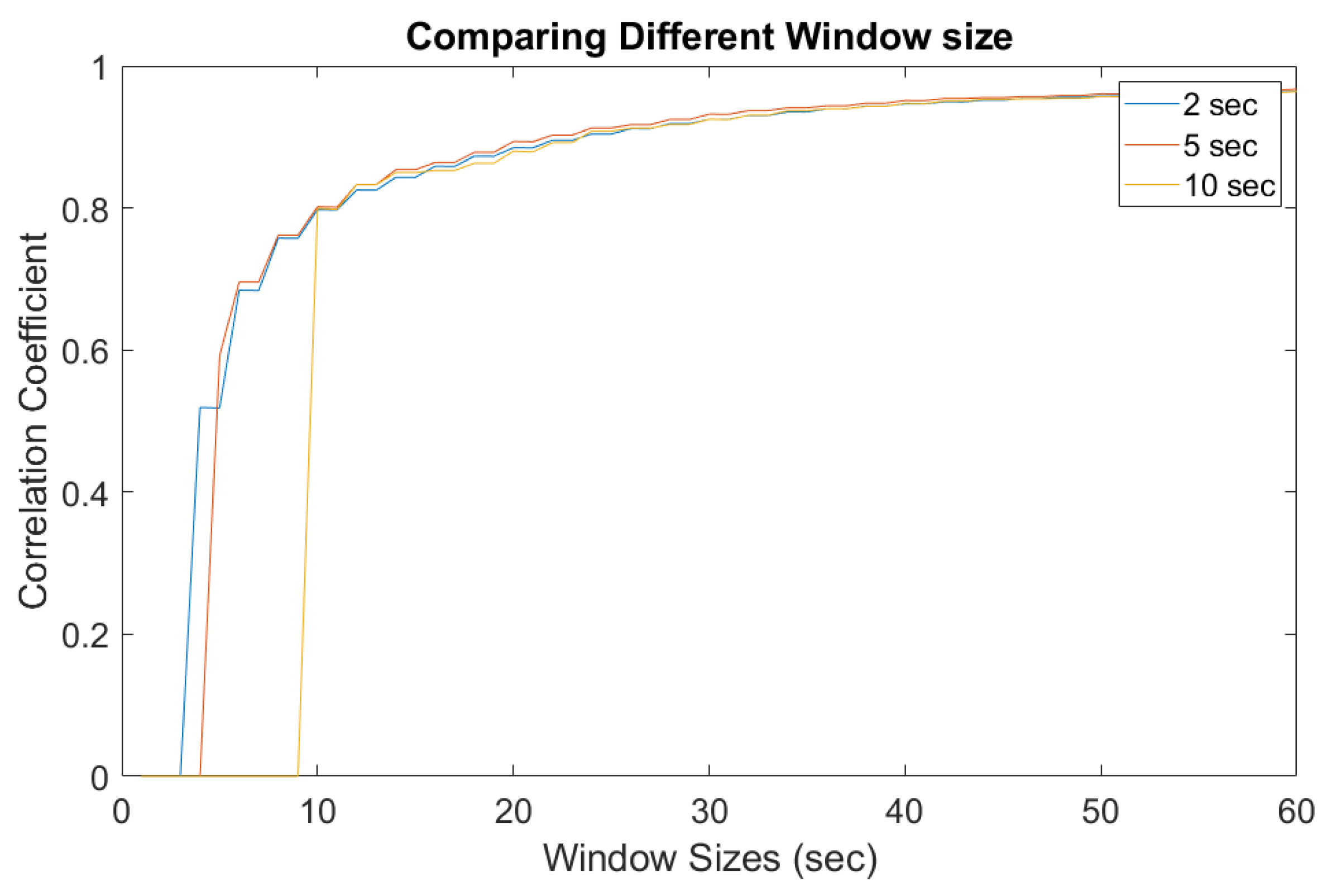

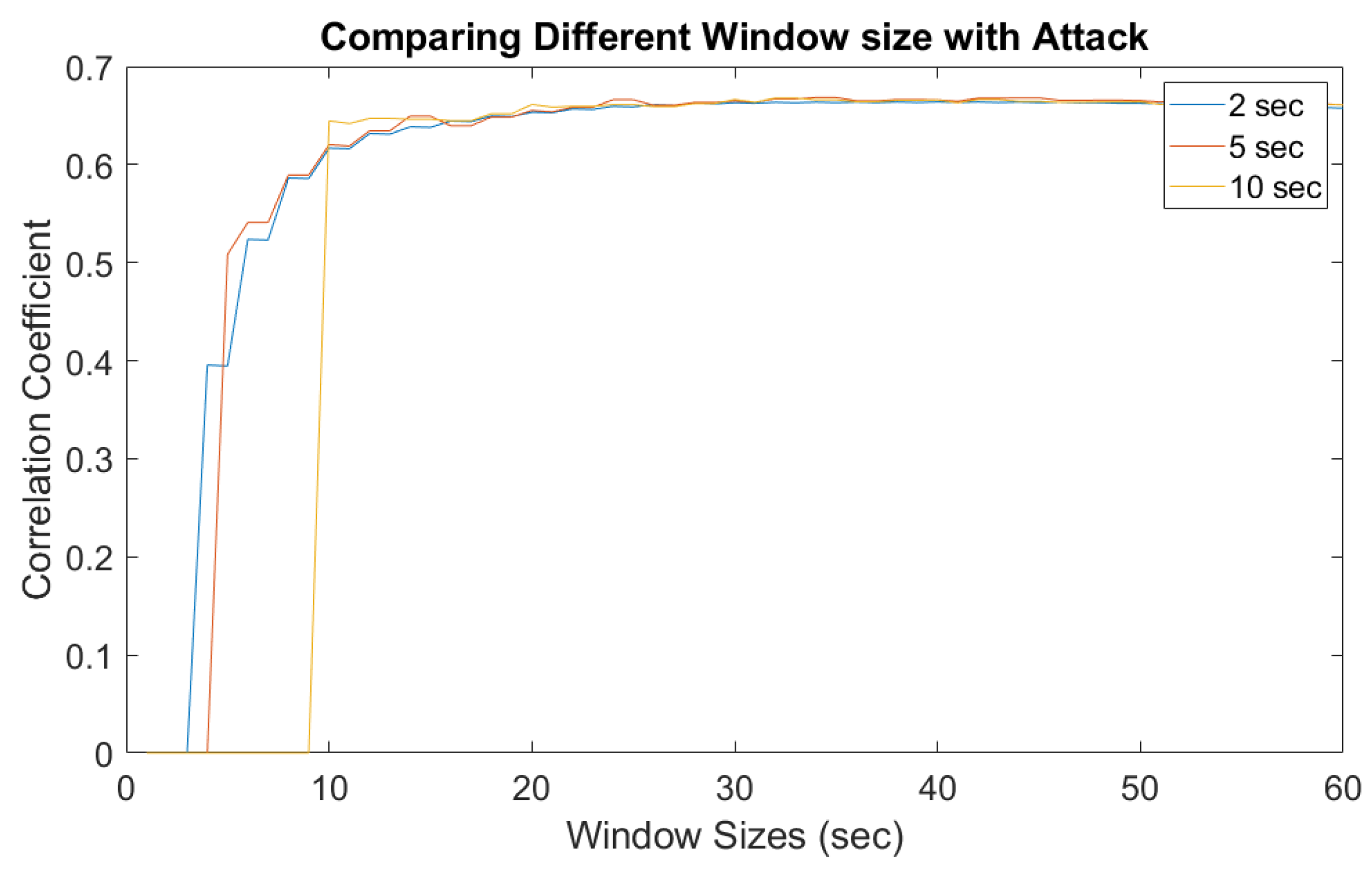

4.3. Correlation Coefficient for Extracted ENF Signals

5. Experimental Results

5.1. Testbed Setup

5.2. Implementation and Results

5.3. A Case Study on Foscam Camera

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ENF | Electrical Network Frequency |
| FFI | False Frame Injection |
| STFT | Short-Time Fourier Transform |
| FFT | Fast Fourier Transform |
| NFFT | Number of FFT bins |
| SNR | Signal-to-Noise Ratio |
| POV | Point of View |
| QR | Quick Response (code) |
| AC | Alternating Current |
| CCD | Charge-Coupled Device |
| CMOS | Complementary Metal-Oxide-Semiconductor |
| FPS | Frames Per Second |
| HOG | Histogram of Oriented Gradients |
| PSD | Power Spectral Density |


© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Nagothu, D.; Chen, Y.; Blasch, E.; Aved, A.; Zhu, S. Detecting Malicious False Frame Injection Attacks on Surveillance Systems at the Edge Using Electrical Network Frequency Signals. Sensors 2019, 19, 2424. https://doi.org/10.3390/s19112424