A Deep Learning-Based Automatic Mosquito Sensing and Control System for Urban Mosquito Habitats

Abstract

1. Introduction

2. Materials and Methods

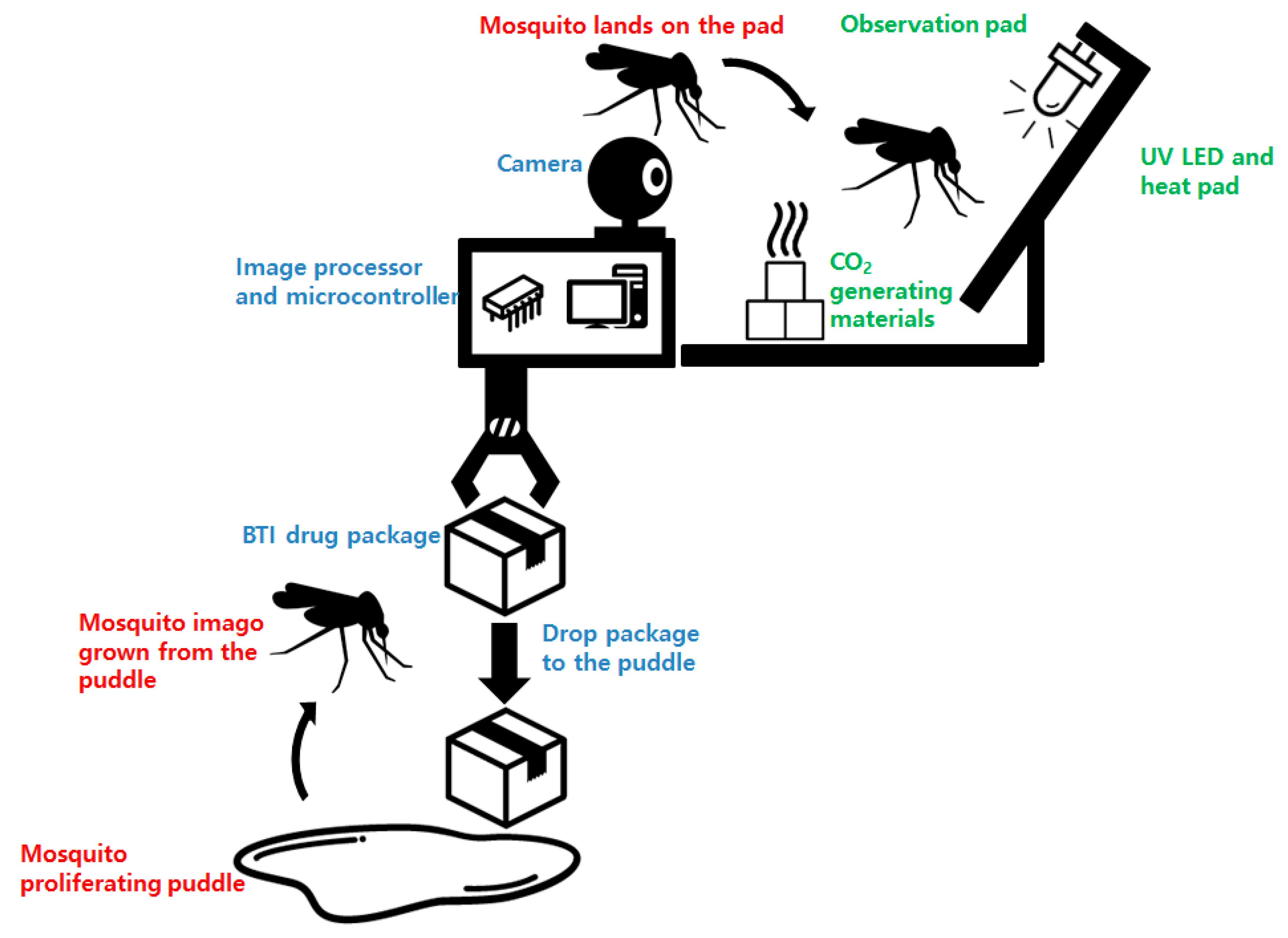

2.1. Platform Overview

2.2. Mosquito Luring Experiments

2.3. Image Preparation and Deep Learning Architecture

3. Results and Discussion

3.1. Mosquito Luring Experiment

3.2. Deep Learning-Based Mosquito Detection

3.3. Examination of the Mosquito Counting Pipeline

4. Conclusions and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Thomas, T.; De, T.D.; Sharma, P.; Lata, S.; Saraswat, P.; Pandey, K.C.; Dixit, R. Hemocytome: Deep sequencing analysis of mosquito blood cells in Indian malarial vector Anopheles stephensi. Gene 2016, 585, 177–190.

- Pastula, D.M.; Smith, D.E.; Beckham, J.D.; Tyler, K.L. Four emerging arboviral diseases in North America: Jamestown Canyon, Powassan, chikungunya, and Zika virus diseases. J. Neurovirol. 2016, 22, 257–260.

- Biswas, A.K.; Siddique, N.A.; Tian, B.B.; Wong, E.; Caillouët, K.A.; Motai, Y. Design of a Fiber-Optic Sensing Mosquito Trap. IEEE Sens. J. 2013, 13, 4423–4431.

- Seo, M.J.; Gil, Y.J.; Kim, T.H.; Kim, H.J.; Youn, Y.N.; Yu, Y.M. Control Effects against Mosquitoes Larva of Bacillus thuringiensis subsp. israelensis CAB199 isolate according to Different Formulations. Korean J. Appl. Entomol. 2010, 49, 151–158.

- Kim, Y.K.; Lee, J.B.; Kim, E.J.; Chung, A.H.; Oh, Y.H.; Yoo, Y.A.; Park, I.S.; Mok, E.K.; Kwon, J. Mosquito Prevalence and Spatial and Ecological Characteristics of Larval Occurrence in Eunpyeong Newtown, Seoul Korea. Kor. J. Nat. Conserv. 2015, 9, 59–68.

- Burke, R.; Barrera, R.; Lewis, M.; Kluchinsky, T.; Claborn, D. Septic tanks as larval habitats for the mosquitoes Aedes aegypti and Culex quinquefasciatus in Playa-Playita, Puerto Rico. Med. Vet. Entomol. 2010, 24, 117–123.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Proceedings of the 25th International Conference on Neural Information Processing Systems (NIPS’12), Lake Tahoe, NV, USA, 3–6 December 2012; Pereira, F., Burges, C.J.C., Bottou, L., Weinberger, K.Q., Eds.; Curran Associates Inc.: Red Hook, NY, USA, December 2012; pp. 1097–1105.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. Available online: https://arxiv.org/abs/1409.1556 (accessed on 23 August 2017).

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Kim, H.; Koo, J.; Kim, D.; Jung, S.; Shin, J.U.; Lee, S.; Myung, H. Image-Based Monitoring of Jellyfish using Deep Learning Architecture. IEEE Sens. J. 2016, 16, 2215–2216.

- Motta, D.; Santos, A.Á.B.; Winkler, I.; Machado, B.A.S.; Pereira, D.A.D.I.; Cavalcanti, A.M.; Fonseca, E.O.L.; Kirchner, F.; Badaró, R. Application of convolutional neural networks for classification of adult mosquitoes in the field. PLoS ONE 2019, 14, e0210829.

- Fuchida, M.; Pathmakumar, T.; Mohan, R.; Tan, N.; Nakamura, A. Vision-based perception and classification of mosquitoes using support vector machine. Appl. Sci. 2017, 7, 51.

- Zhong, Y.; Gao, J.; Lei, Q.; Zhou, Y. A vision-based counting and recognition system for flying insects in intelligent agriculture. Sensors 2018, 18, 1489.

- Hapairai, L.K.; Plichart, C.; Naseri, T.; Silva, U.; Tesimale, L.; Pemita, P.; Bossin, H.C.; Burkot, T.R.; Ritchie, S.A.; Graves, P.M.; et al. Evaluation of traps and lures for mosquito vectors and xenomonitoring of Wuchereria bancrofti infection in a high prevalence Samoan Village. Parasit. Vectors 2015, 287, 1–9.

- Moore, S.J.; Du, Z.W.; Zhou, H.N.; Wang, X.Z.; Li, H.B.; Xiao, Y.J.; Hill, N. The efficacy of different mosquito trapping methods in a forest-fringe village, Yunnan Province, Southern China. Southeast Asian J. Trop. Med. Public Health 2001, 32, 282–289.

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet Large Scale Visual Recognition Challenge. Available online: https://arxiv.org/abs/1409.0575 (accessed on 23 August 2017).

- Shelhamer, E.; Long, J.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. Available online: https://arxiv.org/abs/1605.06211 (accessed on 23 August 2017).

- Jia, Y.; Shelhamer, E.; Donahue, J.; Karayev, S.; Long, J.; Girshick, R.; Guadarrama, S.; Darrell, T. Caffe: Convolutional Architecture for Fast Feature Embedding. Available online: https://arxiv.org/abs/1408.5093 (accessed on 23 August 2017).

- Kim, K.; Myung, H. Autoencoder-Combined Generative Adversarial Networks for Synthetic Image Data Generation and Detection of Jellyfish Swarm. IEEE Access 2018, 6, 54207–54214.

- Redmon, J.; Divvala, S.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. Available online: https://arxiv.org/abs/1506.02640 (accessed on 23 August 2017).

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. Available online: https://arxiv.org/abs/1612.08242 (accessed on 23 August 2017).

- Hoiem, D.; Chodpathumwan, Y.; Dai, Q. Diagnosing Error in Object Detectors. In Proceedings of the 12th European Conference on Computer Vision - Volume Part III (ECCV’12), Florence, Italy, 7–13 October 2012; Fitzgibbon, A., Lazebnik, S., Perona, P., Sato, Y., Schmid, C., Eds.; Springer: Berlin/Heidelberg, Germany, 2012; pp. 340–353.

| Name | Type | Input Size | Output Size | Kernel Size | Stride | # of Filters |
|---|---|---|---|---|---|---|
| data | data | 3 × 500 × 500 | 3 × 500 × 500 | | | |
| conv1 | convolution | 3 × 500 × 500 | 64 × 500 × 500 | 3 | 2 | |
| pool1 | max pooling | 64 × 500 × 500 | 64 × 250 × 250 | 2 | 2 | 1 |
| conv2 | convolution | 128 × 250 × 250 | 128 × 250 × 250 | 3 | 2 | |
| pool2 | max pooling | 128 × 250 × 250 | 128 × 125 × 125 | 2 | 2 | 1 |
| conv3 | convolution | 256 × 125 × 125 | 256 × 125 × 125 | 3 | 3 | |
| pool3 | max pooling | 256 × 125 × 125 | 256 × 63 × 63 | 2 | 2 | 1 |
| conv4 | convolution | 512 × 63 × 63 | 512 × 63 × 63 | 3 | 3 | |
| pool4 | max pooling | 512 × 63 × 63 | 512 × 32 × 32 | 2 | 2 | 1 |
| conv5 | convolution | 512 × 32 × 32 | 512 × 32 × 32 | 3 | 3 | |
| pool5 | max pooling | 512 × 32 × 32 | 512 × 16 × 16 | 2 | 2 | 1 |
| fc6 | convolution | 512 × 16 × 16 | 4096 × 10 × 10 | 7 | 1 | |
| drop6 | dropout (rate 0.5) | 4096 × 10 × 10 | 4096 × 10 × 10 | | | |
| fc7 | convolution | 4096 × 10 × 10 | 4096 × 10 × 10 | 1 | 1 | |
| drop7 | dropout (rate 0.5) | 4096 × 10 × 10 | 4096 × 10 × 10 | | | |
| score | convolution | 4096 × 10 × 10 | 21 × 10 × 10 | 1 | 1 | |
| score2 | deconvolution | 21 × 10 × 10 | 21 × 22 × 22 | 4 | 2 | 1 |
| score-pool4 | convolution | 512 × 32 × 32 | 21 × 32 × 32 | 1 | 1 | |
| score-pool4c | crop | 21 × 32 × 32 | 21 × 22 × 22 | | | |
| score-fuse | eltwise | 21 × 22 × 22 | 21 × 22 × 22 | | | |
| bigscore | deconvolution | 21 × 22 × 22 | 21 × 368 × 368 | 32 | 16 | 1 |
| upscore | crop | 21 × 368 × 368 | 21 × 500 × 500 | | | |
| output | softmax | 21 × 500 × 500 | 21 × 500 × 500 | | | |
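
The spatial sizes listed for the pooling, fc6, and deconvolution rows of the table above can be checked with the standard output-size formulas. The following is a minimal sketch, not the authors' code: it assumes pooling rounds up (as Caffe-style pooling layers do), and that fc6 and the two deconvolution layers use no padding. The helper names `conv_out`, `pool_out`, and `deconv_out` are hypothetical.

```python
import math


def conv_out(size, kernel, stride=1, pad=0):
    """Output width of a convolution (floor rounding)."""
    return (size + 2 * pad - kernel) // stride + 1


def pool_out(size, kernel, stride):
    """Output width of a pooling layer, assuming ceil rounding."""
    return math.ceil((size - kernel) / stride) + 1


def deconv_out(size, kernel, stride):
    """Output width of a deconvolution, assuming no padding."""
    return (size - 1) * stride + kernel


# Five 2x2 max-pooling layers with stride 2, starting from a 500-pixel input.
size = 500
pool_sizes = []
for _ in range(5):
    size = pool_out(size, kernel=2, stride=2)
    pool_sizes.append(size)

fc6 = conv_out(pool_sizes[-1], kernel=7)          # fc6: 7x7 kernel, stride 1
score2 = deconv_out(fc6, kernel=4, stride=2)      # score2 row
bigscore = deconv_out(score2, kernel=32, stride=16)  # bigscore row

print(pool_sizes, fc6, score2, bigscore)
```

Under these assumptions the sketch reproduces the 250, 125, 63, 32, 16 pooling sizes, the 10 × 10 fc6 output, and the 22 × 22 and 368 × 368 deconvolution outputs from the table. The crop layers are not modelled here.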

| Name | Type | Input Size | Output Size | Kernel Size | Stride | # of Filters |
|---|---|---|---|---|---|---|
| data | data | 3 × 227 × 227 | 3 × 227 × 227 | | | |
| conv1 | convolution | 3 × 227 × 227 | 96 × 55 × 55 | 11 | 4 | 1 |
| norm1 | LRN | 96 × 55 × 55 | 96 × 55 × 55 | | | |
| pool1 | max pooling | 96 × 55 × 55 | 96 × 27 × 27 | 3 | 2 | 1 |
| conv2 | convolution | 96 × 27 × 27 | 256 × 27 × 27 | 5 | 1 | |
| norm2 | LRN | 256 × 27 × 27 | 256 × 27 × 27 | | | |
| pool2 | max pooling | 256 × 27 × 27 | 256 × 13 × 13 | 3 | 2 | 1 |
| conv3 | convolution | 256 × 13 × 13 | 384 × 13 × 13 | 3 | 1 | |
| conv4 | convolution | 384 × 13 × 13 | 384 × 13 × 13 | 3 | 1 | |
| conv5 | convolution | 384 × 13 × 13 | 256 × 13 × 13 | 3 | 1 | |
| pool5 | max pooling | 256 × 13 × 13 | 256 × 6 × 6 | 3 | 2 | 1 |
| fc6 | InnerProduct | 256 × 6 × 6 | 4096 × 1 × 1 | 1 | | |
| drop6 | dropout (rate 0.5) | 4096 × 1 × 1 | 4096 × 1 × 1 | | | |
| fc7 | InnerProduct | 4096 × 1 × 1 | 4096 × 1 × 1 | 1 | | |
| drop7 | dropout (rate 0.5) | 4096 × 1 × 1 | 4096 × 1 × 1 | | | |
| fc8 | InnerProduct | 4096 × 1 × 1 | 1000 × 1 × 1 | 1 | | |
| loss | SoftmaxWithLoss | 1000 × 1 × 1 | 1000 × 1 × 1 | | | |
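
The feature-map sizes in the table above follow from the usual relation output = floor((input + 2 × pad − kernel) / stride) + 1. The sketch below walks a 227-pixel input through the convolution and pooling rows. Kernel sizes and strides are taken from the table; the padding values (2 for conv2, 1 for conv3 to conv5, 0 elsewhere) are not listed there and are assumed here because they are the values that keep the listed sizes consistent. The helper name `out_size` is hypothetical.

```python
def out_size(size, kernel, stride=1, pad=0):
    """Output width of a convolution or pooling layer (floor rounding)."""
    return (size + 2 * pad - kernel) // stride + 1


# (name, kernel, stride, assumed padding)
layers = [
    ("conv1", 11, 4, 0),
    ("pool1", 3, 2, 0),
    ("conv2", 5, 1, 2),
    ("pool2", 3, 2, 0),
    ("conv3", 3, 1, 1),
    ("conv4", 3, 1, 1),
    ("conv5", 3, 1, 1),
    ("pool5", 3, 2, 0),
]

size = 227
sizes = {}
for name, kernel, stride, pad in layers:
    size = out_size(size, kernel, stride, pad)
    sizes[name] = size

# Number of values entering fc6: 256 channels of pool5 output.
fc6_inputs = 256 * sizes["pool5"] ** 2

print(sizes, fc6_inputs)
```

With these assumed paddings the sketch gives 55, 27, 27, 13, 13, 13, 13, and 6 for the eight layers, matching the table, and a flattened fc6 input of 9216 values.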

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kim, K.; Hyun, J.; Kim, H.; Lim, H.; Myung, H. A Deep Learning-Based Automatic Mosquito Sensing and Control System for Urban Mosquito Habitats. Sensors 2019, 19, 2785. https://doi.org/10.3390/s19122785
