Can We Rely on Mobile Devices and Other Gadgets to Assess the Postural Balance of Healthy Individuals? A Systematic Review

Abstract

1. Introduction

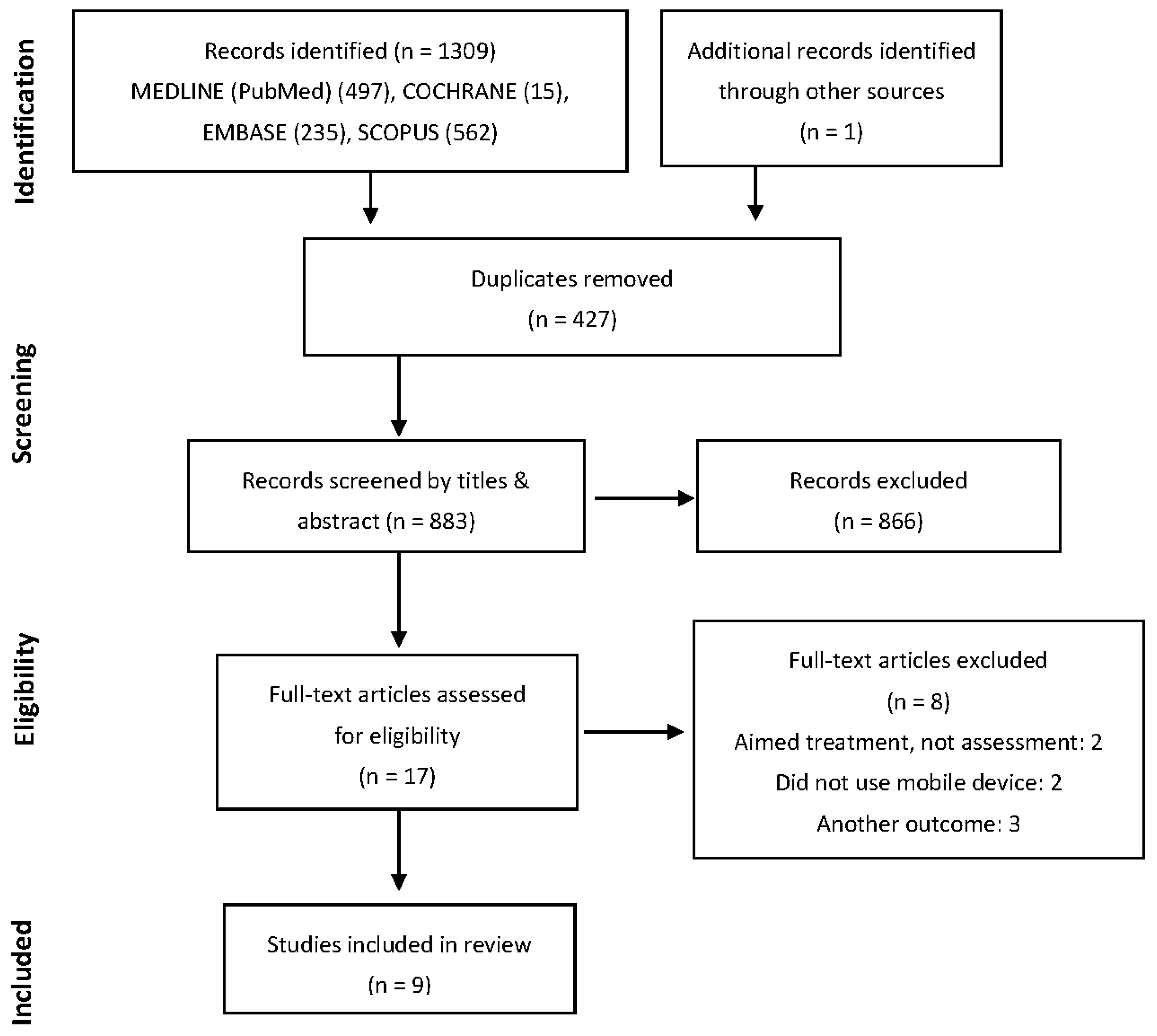

2. Materials and Methods

2.1. Eligibility and Inclusion Criteria

2.2. Search Strategy

2.3. Data Extraction, Risk of Bias and Quality Assessment

3. Results

3.1. Sample Characteristics

3.2. Overview of Studies Objectives

3.3. Balance Assessment Protocols

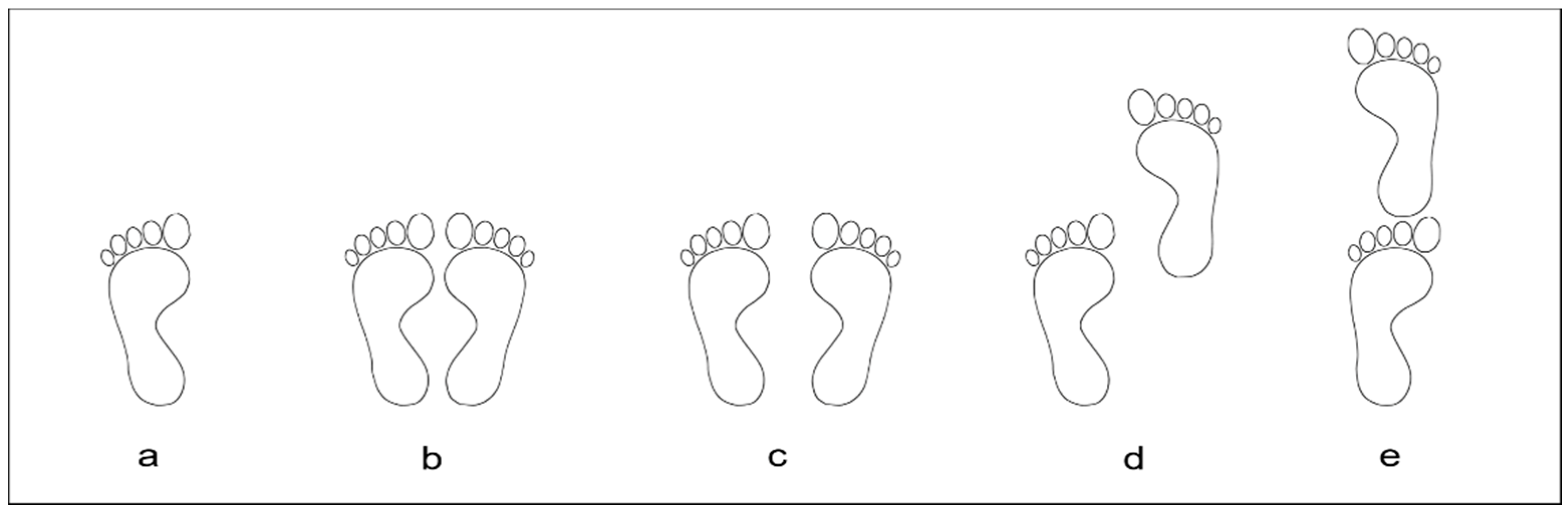

3.3.1. Feet and Arms Position

3.3.2. Number of Acquisitions, Sessions and Total Time of Acquisition

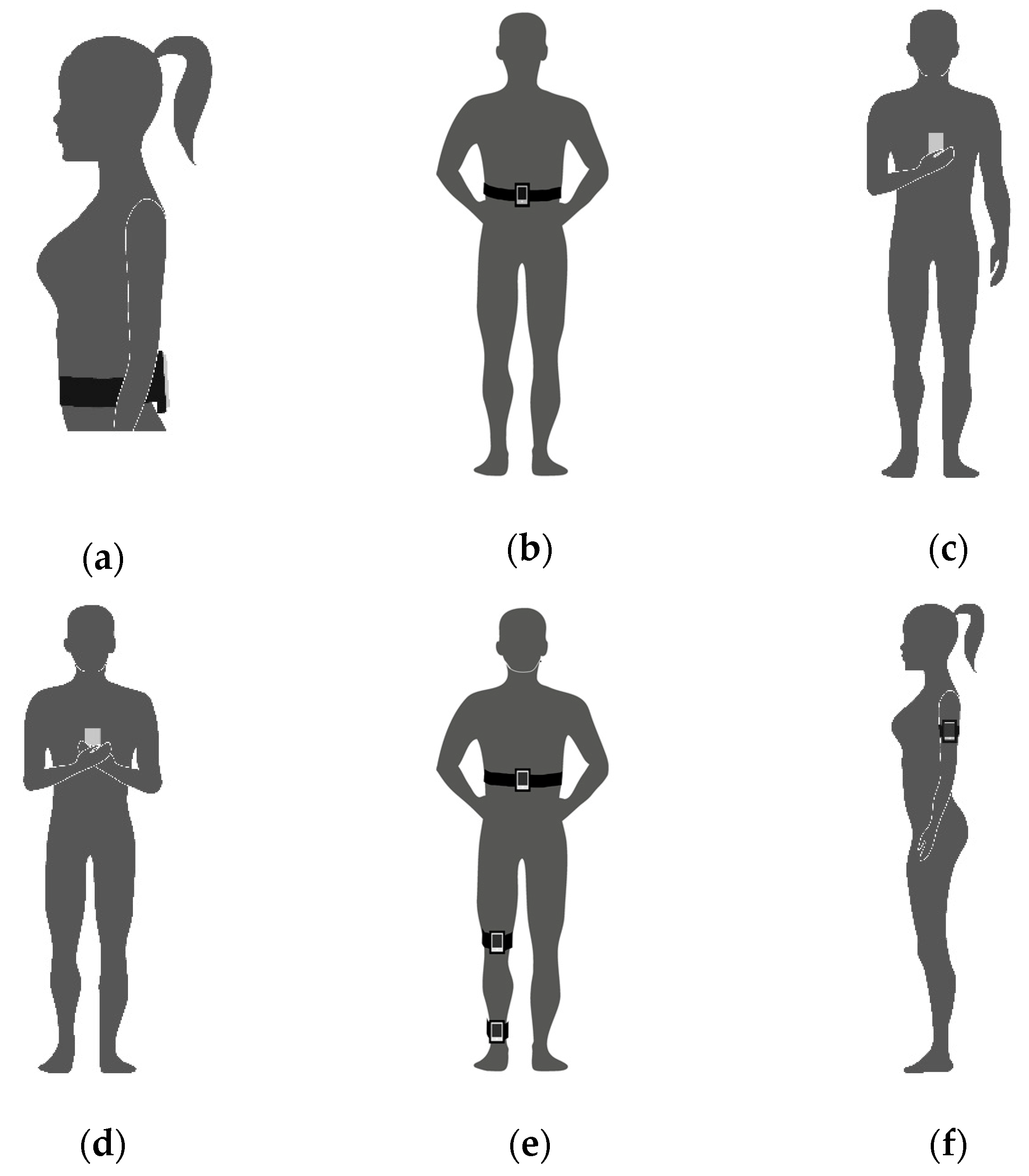

3.3.3. Measurement Device and Position

3.3.4. Devices Synchronization

3.3.5. Measurements and Signal Processing Parameters

3.4. Methodological Quality Assessment

3.4.1. Quality Assessment Tool for Observational Cohort and Cross-Sectional Studies NIH-NHLBI

3.4.2. A 10-Point Checklist for Balance Assessment Protocols

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Appendix A. Search Strategy (Databases)

| #1 |

| “Accelerometry”[Mesh] |

| “Accelerometer” |

| “Gyroscope” |

| “Bodywear sensors” |

| “Wearable sensors” |

| “Wear sensor” |

| “Inertial sensors” |

| “IMU” |

| “inertial measurement unit” |

| #2 |

| “App Mobile”[Mesh] |

| “Apps, Mobile” |

| “Application, Mobile” |

| “Applications, Mobile” |

| “Mobile Application” |

| “Mobile Apps” |

| “Portable Electronic Apps” |

| “App, Portable Electronic” |

| “Apps, Portable Electronic” |

| “Electronic App, Portable” |

| “Electronic Apps, Portable” |

| “Portable Electronic App” |

| “Portable Electronic Applications” |

| “Application, Portable Electronic” |

| “Applications, Portable Electronic” |

| “Electronic Application, Portable” |

| “Electronic Applications, Portable” |

| “Portable Electronic Application” |

| “Portable Software Apps” |

| “App, Portable Software” |

| “Apps, Portable Software” |

| “Portable Software App” |

| “Software App, Portable” |

| “Software Apps, Portable” |

| “Portable Software Applications” |

| “Application, Portable Software” |

| “Applications, Portable Software” |

| “Portable Software Application” |

| “Software Application, Portable” |

| “Software Applications, Portable” |

| “mobile device” |

| “mobile smartphone” |

| “smartphone application” |

| “smartphone app” |

| #3 |

| “Postural Balance”[MESH] |

| “Balance, Postural” |

| “Musculoskeletal Equilibrium” |

| “Equilibrium, Musculoskeletal” |

| “Postural Equilibrium” |

| “Equilibrium, Postural” |

| “Musculoskeletal Equilibrium” |

| “Equilibrium, Musculoskeletal” |

| “Postural Equilibrium” |

| “Equilibrium, Postural” |

| “Balance, Postural” |

| “sway” |

| “postural control” |

| “body sway” |

| #1 AND #2 AND #3 |
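
The final row combines the three concept blocks with AND. A minimal sketch of how such a query string could be assembled is given below. It assumes that the terms inside each block are joined with OR, which the table does not state explicitly, and it uses shortened term lists and hypothetical helper names (`or_block`, `build_query`) purely for illustration.

```python
def or_block(terms):
    """Join the synonyms of one concept with OR (assumed) inside parentheses."""
    return "(" + " OR ".join(terms) + ")"


def build_query(*blocks):
    """Combine the concept blocks with AND, as in '#1 AND #2 AND #3'."""
    return " AND ".join(or_block(block) for block in blocks)


# Shortened, illustrative subsets of the three blocks listed above.
sensors = ['"Accelerometry"[Mesh]', '"Accelerometer"', '"Gyroscope"']
mobile = ['"Mobile Apps"', '"smartphone app"']
balance = ['"Postural Balance"[MESH]', '"sway"']

query = build_query(sensors, mobile, balance)
print(query)
```

Keeping each block as a plain list makes it easy to add or drop synonyms per database without touching the combining logic.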

Appendix B. Quality Assessment Tool for Observational Cohort and Cross-Sectional Studies (NIH–NHLBI)

| Study (quality assessment item) | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | Total | % |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Alberts et al., 2015 [26] | 2 | 2 | 2 | 2 | 0 | 0 | 0 | 2 | 2 | 2 | 2 | - | - | 0 | 16 | 67% |

| Alberts et al., 2015 [27] | 2 | 2 | 2 | 2 | 2 | 0 | 0 | 2 | 0 | 0 | 2 | - | - | 2 | 16 | 67% |

| Kosse et al., 2015 [14] | 2 | 2 | 2 | 2 | 2 | 0 | 0 | 2 | 0 | 2 | 2 | - | - | 0 | 16 | 67% |

| Hsieh et al., 2019 [30] | 2 | 2 | 2 | 2 | 2 | 0 | 0 | 2 | 2 | 0 | 2 | - | - | 0 | 16 | 67% |

| Ozinga et al., 2014 [15] | 2 | 2 | 2 | 2 | 2 | 0 | 0 | 2 | 2 | 0 | 2 | - | - | 2 | 18 | 75% |

| Patterson et al., 2014 [17] | 2 | 2 | 2 | 2 | 2 | 0 | 0 | 2 | 0 | 0 | 1 | - | - | 0 | 13 | 54% |

| Patterson et al., 2014 [28] | 2 | 2 | 2 | 2 | 2 | 0 | 0 | 0 | 0 | 0 | 2 | - | - | 0 | 12 | 50% |

| Shah et al., 2016 [18] | 2 | 2 | 2 | 1 | 2 | 0 | 0 | 2 | 2 | 0 | 2 | - | - | 2 | 17 | 71% |

| Yvon et al., 2015 [29] | 2 | 1 | 2 | 2 | 0 | 0 | 0 | 2 | 0 | 0 | 1 | - | - | 0 | 10 | 42% |
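
The Total and % columns can be reproduced with a short calculation. The sketch below assumes that each item is scored from 0 to 2, that items marked "-" are not applicable and are excluded, and that the percentage is the total divided by the maximum attainable score. This scoring rule is inferred from the table values rather than stated in it, and the function name `quality_score` is hypothetical.

```python
def quality_score(item_scores):
    """Return (total, percent) for one study.

    item_scores: list of item scores (0, 1 or 2); None marks an item
    shown as "-" in the table, which is assumed to be not applicable.
    """
    applicable = [s for s in item_scores if s is not None]
    total = sum(applicable)
    max_total = 2 * len(applicable)  # assumed maximum of 2 points per item
    return total, round(100 * total / max_total)


# Row for Alberts et al., 2015 [26]; items 12 and 13 are marked "-".
alberts_26 = [2, 2, 2, 2, 0, 0, 0, 2, 2, 2, 2, None, None, 0]
total, percent = quality_score(alberts_26)
print(total, percent)
```

With twelve applicable items the maximum is 24 points, so a total of 16 corresponds to 67%.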

Appendix C. 10-Point Checklist for Balance Assessment Protocols

| Author | Sample Information | Tasks Description | Feet Condition | Feet and Arms Position | Visual Reference Eyes | Visual Reference Target | Cropped Time | Sampling Rates | Data/Signal Processing Method | Synch Method | Total Score |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Alberts et al., 2015 [26] | Y | Y | Y | Y | Y | Y | N | Y | Y | Y | 9 |

| Alberts et al., 2015 [27] | N | Y | Y | Y | Y | Y_(NA) | N | Y | Y | Y | 8 |

| Kosse et al., 2015 [14] | N | Y | N | N | Y | N | N | Y | Y | Y | 5 |

| Hsieh et al., 2019 [30] | N | Y | Y | N | Y | N | N | Y | Y | N | 5 |

| Ozinga et al., 2014 [15] | N | Y | Y | Y | Y | Y | N | Y | Y | Y | 8 |

| Patterson et al., 2014 [17] | Y | Y | Y | Y | Y | Y_(NA) | N | N | Y | Y_(NA) | 8 |

| Patterson et al., 2014 [28] | Y | Y | N | Y | Y | N | N | N | Y | N | 5 |

| Shah et al., 2016 [18] | Y | Y | Y | Y | Y | Y | N | Y | Y | N | 8 |

| Yvon et al., 2015 [29] | N | Y | N | Y | Y | N | N | N | N | Y_(NA) | 4 |

Appendix D. Overview (Description of Parameters and Measurements as Stated in the Study and General Comments)

| Author | Overview | Parameters and Measurements | General Comments on the Strengths and Limitations |

|---|---|---|---|

| Alberts et al., 2015 [26] | Used force plate measurements from the NeuroCom® device during the sensory organization test (SOT) to determine whether an iPad2 resolves center of gravity (COG) movements well enough to quantify postural stability accurately in healthy young people. | Center of pressure (COP) sway in the anterior-posterior (AP) and medial-lateral (ML) directions. Three-dimensional (3D) device rotation rates and linear acceleration. The COG AP angle was used for all outcomes. | Only sample curves are shown for the COG AP sway in conditions 1, 4, 5 and 6. No numerical data are given comparing the AP sway measured by the NeuroCom with the AP sway calculated from the iPad sensor. Nevertheless, the overall performance of the iPad in predicting the NeuroCom Equilibrium Score appears excellent. The 100 Hz sampling rate is more than sufficient to capture low-frequency body sway; a smaller mobile device, rather than the large iPad2, might have produced even better results. |

| Alberts et al., 2015 [27] | Assessed the accuracy of the iPad by comparing its postural stability metrics with those of a 3D motion capture system, and proposed a method for quantifying the Balance Error Scoring System (BESS) using center of mass (COM) acceleration data. | 3D position and linear and angular accelerations of the COM in the AP and ML directions. 3D linear acceleration and rotation rate: (1) peak-to-peak, (2) normalized path length, (3) root mean square (RMS) of the COM displacements, (4) 95% ellipsoid volume of sway. Spectral analysis of ML, AP, and trunk (TR) acceleration. | No numerical data were compared between the motion capture results and the values calculated from the iPad sensors. Only correlations were calculated and no raw data were presented, which leaves readers unsure of the consistency of the measurements. This applies to Table 1, where no raw data for normalized path length (NPL), peak-to-peak (P2P), and RMS are presented, only comparisons with low to medium rho values. The low correlation coefficients (rho of 0.55 or less, Table 2) between the iBESS volume and the error score are even less convincing, but they may also reflect the low reliability of the subjective error scoring. The iPad sensors are probably much better at detecting balance deficits than the more subjective BESS. |

| Kosse et al., 2015 [14] | Compared iPod data with data from a stand-alone accelerometer unit to establish the validity and reliability of gait and posture assessment with an iPod. | AP and ML trunk acceleration and a resultant vector: (1) RMS acceleration of body sway in AP and ML, (2) sway area, (3) total power median of the signal from the frequency spectrum. | A good direct comparison of an iPod with a “gold standard” DynaPort triaxial accelerometer. To compare waveform similarity, cross-correlations were determined after time normalization (100 Hz). The values were around 0.9 for all experimental conditions in the AP and ML directions, suggesting a high-quality acceleration signal and software evaluation. Time-lag values were almost identical between the two transducers. Validity and test–retest reliability intraclass correlation (ICC) values were also excellent for RMS signals in both the AP and ML directions. Only for the median power frequency (MPF) were lower ICCs found for test–retest reliability, possibly because of the different sampling frequencies, which required time normalization procedures. An excellent and comprehensive analysis, including a measurement section for a pure comparison of transducer technology as well as an application to participants in three age groups. |

| Hsieh et al., 2019 [30] | Static balance tests were conducted while participants stood on a force plate and held a smartphone. COP data from the force plate and acceleration data from the smartphone were compared. Validity between the measures was assessed and correlation coefficients were extracted to determine whether a smartphone's embedded accelerometer can measure static postural stability and distinguish older adults at high risk of falling. | The COP parameters included in the analysis were (1) the 95% confidence ellipse and (2) velocity in the anteroposterior (AP) and mediolateral (ML) directions. From the smartphone, (1) maximum acceleration in the ML, vertical, and AP directions and (2) root mean square (RMS) in the ML, vertical, and AP axes were exported and processed. | A promising approach was used to distinguish subjects at risk of falling by associating acceleration data and COP parameters with the “physiological profile assessment”, an evaluation of fall risk based on the assessment of multiple domains. Strong, significant correlations between measures were found during challenging balance conditions (ρ = 0.42–0.81, p < 0.01–0.05). These correlations were, to some extent, expected, although it seems quite difficult to differentiate between the vertical, AP, and ML components of the force plate and the accelerometer. Especially during challenging balance tasks, there will be considerable movement of the upper extremities relative to the body, creating extra accelerations at the phone. A more trustworthy comparison of acceleration data from the phone with the force plate seems possible only if the phone had been fastened at or close to the subjects' CoG. |

| Ozinga et al., 2014 [15] | Simultaneous kinematic measurements from a 3D motion analysis system during balance conditions were used to compare COM movements and investigate whether an iPad provides sufficient accuracy and quality to quantify postural stability in older adults. | Angular velocities and linear accelerations were processed to allow direct comparison with the position of the whole-body COM: (1) peak-to-peak displacement amplitude, (2) normalized path length, (3) RMS displacement of the COM, (4) 95% ellipsoid volume of sway. Spectral analysis of the magnitude of the ML, AP, and trunk acceleration was used. | Fairly high correlations were present between the cinematographic and the iPad-derived data, suggesting that the iPad would be a good alternative to cinematographic posture analysis. Procedures and methods were well chosen. However, the number of subjects was fairly low. |

| Patterson et al., 2014 [17] | Compared the scores of a mobile technology application on an iPod during balance tasks with those of a commonly used subjective balance assessment, the Balance Error Scoring System (BESS). | Balance scores from 3D acceleration measurements. | An inverse relationship of r = -0.77 (p < 0.01) was found between the BESS score and the SWAY results. Thus, the iPod acceleration signals proved to be a fairly good predictor of stability. An elevated BESS score reflects a high number of balance errors, whereas the SWAY Balance score assigns a higher value to more stable performance. The fact that subjects had to press the iPod against the sternum with their hands weakens the test procedure: it restricts their freedom to balance the body in the five exercises and introduces mechanical artifacts because the hands are at the sensor during the balancing task. Because no direct comparison between two sensors was made, only an indirect estimate of the quality of the iPod Touch transducer is possible. Most likely, the main reason for the limited r = -0.77 is not the quality of the iPod sensor but rather poor BESS rating quality. It is also worth noting that the BESS scoring was done by only two people. However, since BESS is well accepted, this paper shows that sensors integrated into mobile devices are well suited for evaluating postural stability. |

| Patterson et al., 2014 [28] | A Biodex© Balance System, which gives an AP stability index from a force platform, was used to evaluate the validity of a balance mobile application that uses the 3D accelerometers of an iPod while subjects performed a single trial of the Athlete Single Leg Test protocol. | Degree of tilt about each axis: (1) ML stability index, (2) AP stability index, and (3) overall stability index. The displacement in degrees from level was termed the “balance score” from the AP stability index (APSI). | The AP stability index (APSI) score on the balance platform (1.41) was similar to the smartphone SWAY score (1.38), with no statistically significant difference. However, the correlation (ICC) between the scores was low, only r = 0.632 (p < 0.01). As in Patterson et al., 2014 [17], the same weakness was found: the subjects had to hold the iPod Touch with both hands at the sternum, and only sway in the AP direction was measured. Contrary to what the authors indicate, an ICC of only r = 0.632 appears very low considering that the same measure was taken by two systems at the same time for a single-leg stance. |

| Shah et al., 2016 [18] | A mobile application was developed to provide a method of objectively measuring standing balance using the phone’s accelerometer. Eight independent therapists ranked the exercises of a balance protocol by degree of difficulty based on their clinical experience. The concordance between the results was obtained to determine whether the mobile phone can quantify standing balance and distinguish between exercises of varying difficulty. | 3D accelerometer data were obtained from three mobile phones and the mean acceleration was calculated. After a correction for static bias, the magnitude of the resultant vector (R) was calculated for each measurement. The metric “mean R”, the average magnitude of all resultant vectors, was then used as an index of balance. | Even though Shah et al., 2016 did not make a direct comparison between two sensor systems, accelerometer readings were calculated for each exercise at the ankle, knee, and torso. The high differentiation between the stability exercises, with lower values for the ankle, knee, and torso, indicates that the acceleration results from the mobile phones are strongly related to the subjective ratings of the eight experienced clinicians. The results indicate that one sensor location is sufficient because all sensors follow the same trend; the knee and torso locations could both be used. From a practical point of view, the torso or hip location would be the easiest to mount and use. |

| Yvon et al., 2015 [29] | An iPhone application was used to quantify sway while performing the Romberg and the tandem Romberg tests in a soundproof room and then in a normal room. | The output (‘K’ value) represents the area of an ellipse of two standard deviations in the anteroposterior and lateral planes about a mean point. | The article explores an unusual protocol that attempts to evaluate the contribution of auditory sensory input to balance through a combination of postures in different room sound conditions. No raw data were presented or clearly specified, and data processing procedures were not reported. Differences in postural sway measurements between room conditions were found with a dedicated application. |
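
Several of the parameters named in this appendix (RMS, peak-to-peak, normalized path length, 95% confidence ellipse, and the “mean R” index) are simple to compute from sampled signals. The sketch below is an illustration under stated assumptions, not the processing used by any of the reviewed studies: the RMS is taken about the signal mean, the path length is normalized by trial duration, the ellipse is the two-dimensional 95% confidence ellipse rather than a 3D ellipsoid volume, and the static bias is approximated by the per-axis mean. All function names are hypothetical.

```python
import math


def rms(x):
    """Root mean square of the signal about its mean (assumed definition)."""
    m = sum(x) / len(x)
    return math.sqrt(sum((v - m) ** 2 for v in x) / len(x))


def peak_to_peak(x):
    """Range of the signal: maximum minus minimum."""
    return max(x) - min(x)


def normalized_path_length(ap, ml, fs):
    """Sway path length divided by trial duration (assumed normalization)."""
    path = sum(math.hypot(ap[i + 1] - ap[i], ml[i + 1] - ml[i])
               for i in range(len(ap) - 1))
    duration = (len(ap) - 1) / fs  # seconds between first and last sample
    return path / duration


def ellipse_area_95(ap, ml):
    """Area of the 2D 95% confidence ellipse from the sample covariance."""
    n = len(ap)
    m_ap, m_ml = sum(ap) / n, sum(ml) / n
    var_ap = sum((a - m_ap) ** 2 for a in ap) / (n - 1)
    var_ml = sum((m - m_ml) ** 2 for m in ml) / (n - 1)
    cov = sum((a - m_ap) * (m - m_ml) for a, m in zip(ap, ml)) / (n - 1)
    det = max(var_ap * var_ml - cov ** 2, 0.0)  # guard against rounding
    chi2_95 = -2.0 * math.log(0.05)  # 95% quantile of chi-square, 2 dof
    return math.pi * chi2_95 * math.sqrt(det)


def mean_resultant(ax, ay, az):
    """Average magnitude of the bias-corrected 3D acceleration vector.

    The static bias is assumed to be the per-axis mean of the trial.
    """
    n = len(ax)
    bx, by, bz = sum(ax) / n, sum(ay) / n, sum(az) / n
    return sum(math.sqrt((x - bx) ** 2 + (y - by) ** 2 + (z - bz) ** 2)
               for x, y, z in zip(ax, ay, az)) / n


# Tiny synthetic trial sampled at 1 Hz, for illustration only.
ap = [0.0, 1.0, 0.0, -1.0]
ml = [0.0, 0.0, 0.0, 0.0]
print(rms(ap), peak_to_peak(ap), normalized_path_length(ap, ml, fs=1.0))
```

Because studies differ in how they define and normalize these metrics, values computed this way are only comparable across devices when both signals pass through the same functions.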

References

- World Health Organization. Falls. The Problem & Key Facts Sheets Reviewed. 2018. Available online: http://www.who.int/mediacentre/factsheets/fs344/en/ (accessed on 7 April 2018).

- Lee, J.; Geller, A.I.; Strasser, D.C. Analytical Review: Focus on Fall Screening Assessments. PM R 2013, 5, 609–621.

- Van der Kooij, H.; van Asseldonk, E.; van der Helm, F.C.T. Comparison of different methods to identify and quantify balance control. J. Neurosci. Methods 2005, 145, 175–203.

- Fabre, J.M.; Ellis, R.; Kosma, M.; Wood, R.H. Falls risk factors and a compendium of falls risk screening instruments. J. Geriatr. Phys. Ther. 2010, 33, 184–197.

- Baratto, L.; Morasso, P.G.; Re, C.; Spada, G. A new look at the posturographic analysis in the clinical context: Sway-density versus other parameterization techniques. Mot. Control 2002, 6, 246–270.

- Clark, S.; Riley, M.A. Multisensory information for postural control: Sway-referencing gain shapes center of pressure variability and temporal dynamics. Exp. Brain Res. 2007, 176, 299–310.

- Wong, S.J.; Robertson, G.A.; Connor, K.L.; Brady, R.R.; Wood, A.M. Smartphone apps for orthopedic sports medicine—A smart move? BMC Sports Sci. Med. Rehabil. 2015, 7, 23. Available online: http://bmcsportsscimedrehabil.biomedcentral.com/articles/10.1186/s13102-015-0017-6 (accessed on 21 April 2018).

- Del Rosario, M.; Redmond, S.; Lovell, N. Tracking the Evolution of Smartphone Sensing for Monitoring Human Movement. Sensors 2015, 15, 18901–18933.

- Dobkin, B.H.; Dorsch, A. The Promise of mHealth: Daily Activity Monitoring and Outcome Assessments by Wearable Sensors. Neurorehabil. Neural Repair 2011, 25, 788–798.

- Ruhe, A.; Fejer, R.; Walker, B. The test-retest reliability of center of pressure measures in bipedal static task conditions—A systematic review of the literature. Gait Posture 2010, 32, 436–445.

- Habib, M.; Mohktar, M.; Kamaruzzaman, S.; Lim, K.; Pin, T.; Ibrahim, F. Smartphone-Based Solutions for Fall Detection and Prevention: Challenges and Open Issues. Sensors 2014, 14, 7181–7208.

- Whitney, S.L.; Roche, J.L.; Marchetti, G.F.; Lin, C.-C.; Steed, D.P.; Furman, G.R.; Musolino, M.C.; Redfern, M.S. A comparison of accelerometry and center of pressure measures during computerized dynamic posturography: A measure of balance. Gait Posture 2011, 33, 594–599.

- Chung, C.C.; Soangra, R.; Lockhart, T.E. Recurrence Quantitative Analysis of Postural Sway using Force Plate and Smartphone. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2014, 58, 1271–1275.

- Kosse, N.M.; Caljouw, S.; Vervoort, D.; Vuillerme, N.; Lamoth, C.J.C. Validity and Reliability of Gait and Postural Control Analysis Using the Tri-axial Accelerometer of the iPod Touch. Ann. Biomed. Eng. 2015, 43, 1935–1946.

- Ozinga, S.J.; Alberts, J.L. Quantification of postural stability in older adults using mobile technology. Exp. Brain Res. 2014, 232, 3861–3872.

- Ozinga, S.J. Quantification of Postural Stability in Parkinson’s Disease Patients Using Mobile Technology; Cleveland State University: Cleveland, OH, USA, 2015; Available online: http://rave.ohiolink.edu/etdc/view?acc_num=csu1450261576 (accessed on 21 April 2018).

- Patterson, J.A.; Amick, R.Z.; Pandya, P.D.; Hakansson, N.; Jorgensen, M.J. Comparison of a Mobile Technology Application with the Balance Error Scoring System. Int. J. Athl. Ther. Train. 2014, 19, 4–7.

- Shah, N.; Aleong, R.; So, I. Novel Use of a Smartphone to Measure Standing Balance. JMIR Rehabil. Assist. Technol. 2016, 3, e4.

- Mayagoitia, R.E.; Lötters, J.C.; Veltink, P.H.; Hermens, H. Standing balance evaluation using a triaxial accelerometer. Gait Posture 2002, 16, 55–59.

- Neville, C.; Ludlow, C.; Rieger, B. Measuring postural stability with an inertial sensor: Validity and sensitivity. Med. Devices Evid. Res. 2015, 8, 447.

- Van Tang, P.; Tan, T.D.; Trinh, C.D. Characterizing Stochastic Errors of MEMS-Based Inertial Sensors. VNU J. Sci. Math. Phys. 2016, 32, 34–42.

- Poushter, J.; Caldwell, B.; Hanyu, C. Social Media Use Continues to Rise in Developing Countries But Plateaus Across Developed Ones. Available online: https://assets.pewresearch.org/wp-content/uploads/sites/2/2018/06/15135408/Pew-Research-Center_Global-Tech-Social-Media-Use_2018.06.19.pdf (accessed on 21 April 2018).

- Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G.; The PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. PLoS Med. 2009, 6, e1000097.

- Chandler, J.; Higgins, J.P.; Deeks, J.J.; Davenport, C.; Clarke, M.J. Cochrane Handbook for Systematic Reviews of Interventions, 50. Available online: https://community.cochrane.org/book_pdf/764 (accessed on 3 February 2018).

- National Heart, Lung, and Blood Institute (NHLBI) of the United States National Institute of Health (NIH). Quality Assessment Tool for Observational Cohort and Cross-Sectional Studies. Available online: https://www.nhlbi.nih.gov/health-pro/guidelines/in-develop/cardiovascular-risk-reduction/tools/cohort (accessed on 7 April 2018).

- Alberts, J.L.; Hirsch, J.R.; Koop, M.M.; Schindler, D.D.; Kana, D.E.; Linder, S.M.; Campbell, S.; Thota, A.K. Using Accelerometer and Gyroscopic Measures to Quantify Postural Stability. J. Athl. Train. 2015, 50, 578–588.

- Alberts, J.L.; Thota, A.; Hirsch, J.; Ozinga, S.; Dey, T.; Schindler, D.D.; Koop, M.M.; Burke, D.; Linder, S.M. Quantification of the Balance Error Scoring System with Mobile Technology. Med. Sci. Sports Exerc. 2015, 47, 2233.

- Patterson, J.A.; Amick, R.Z.; Thummar, T.; Rogers, M.E. Validation of measures from the smartphone sway balance application: A pilot study. Int. J. Sports Phys. Ther. 2014, 9, 135.

- Yvon, C.; Najuko-Mafemera, A.; Kanegaonkar, R. The D+R Balance application: A novel method of assessing postural sway. J. Laryngol. Otol. 2015, 129, 773–778.

- Hsieh, K.L.; Roach, K.L.; Wajda, D.A.; Sosnoff, J.J. Smartphone technology can measure postural stability and discriminate fall risk in older adults. Gait Posture 2019, 67, 160–165.

- Lord, S.R.; Menz, H.B.; Tiedemann, A. A Physiological Profile Approach to Falls Risk Assessment and Prevention. Phys. Ther. 2003, 83, 237–252.

- Scoppa, F.; Capra, R.; Gallamini, M.; Shiffer, R. Clinical stabilometry standardization. Gait Posture 2013, 37, 290–292.

- Evans, O.M.; Goldie, P.A. Force platform measures for evaluating postural control: Reliability and validity. Arch. Phys. Med. Rehabil. 1989, 70, 510–517.

- Kirby, R.L.; Price, N.A.; MacLeod, D.A. The influence of foot position on standing balance. J. Biomech. 1987, 20, 423–427.

- Winter, D.A. Human balance and posture control during standing and walking. Gait Posture 1995, 3, 193–214.

- Zatsiorsky, V.M.; Duarte, M. Instant equilibrium point and its migration in standing tasks: Rambling and trembling components of the stabilogram. Mot. Control 1999, 3, 28–38.

- Morasso, P.G.; Spada, G.; Capra, R. Computing the COM from the COP in postural sway movements. Hum. Mov. Sci. 1999, 18, 759–767.

- Zatsiorsky, V.M.; Duarte, M. Rambling and trembling in quiet standing. Mot. Control 2000, 4, 185–200.

- Lin, D.; Seol, H.; Nussbaum, M.A.; Madigan, M.L. Reliability of COP-based postural sway measures and age-related differences. Gait Posture 2008, 28, 337–342.

- Deshmukh, P.M.; Russell, C.M.; Lucarino, L.E.; Robinovitch, S.N. Enhancing clinical measures of postural stability with wearable sensors. In Proceedings of the 2012 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, San Diego, CA, USA, 28 August–1 September 2012; pp. 4521–4524.

- Roeing, K.L.; Hsieh, K.L.; Sosnoff, J.J. A systematic review of balance and fall risk assessments with mobile phone technology. Arch. Gerontol. Geriatr. 2017, 73, 222–226.

| Author | Sample Gender | Age Years | Height (cm) Body Mass (kg) |

|---|---|---|---|

| Alberts et al., 2015 [26] | n = 49 22 male | 19.5 ± 3.1 | 167.7 ± 13.2 68.5 ± 17.5 |

| Alberts et al., 2015 [27] | n = 32 14 male | 20.9 ± NR | NR |

| Kosse et al., 2015 [14] | n = 60 28 male | 26 ± 3.9 (young) 45 ± 6.7 (middle) 65 ± 5.5 (older) | NR |

| Hsieh et al., 2019 [30] | n = 30 12 male | 64.8 ± 4.5 (Low RF) 72.3 ± 6.6 (High RF) | NR |

| Ozinga et al., 2014 [15] | n = 12 5 male | 68.3 ± 6.9 | NR |

| Patterson et al., 2014 [17] | n = 21 7 male | 23 ± 3.34 | 171.66 ± 10.2 82.76 ± 25.69 |

| Patterson et al., 2014 [28] | n = 30 13 male | 26.1 ± 8.5 | 170.1 ± 7.9 72.3 ± 15.5 |

| Shah et al., 2016 [18] | n = 48 21 male | 22 ± 2.5 | 175 ± 9.7 72.57 ± 1.29 |

| Yvon et al., 2015 [29] | n = 50 13 male | NR | NR |

| Author | Assessed Tasks | Feet Condition | Feet Position | Hands/Arms Position | Visual Input | Visual Reference |

|---|---|---|---|---|---|---|

| Alberts et al., 2015 [26] | Six conditions NeuroCom® SOT | According to SOT | According to SOT | According to SOT | EO/EC | According to SOT |

| Alberts et al., 2015 [27] | Six conditions BESS | Wearing socks | According to BESS | Resting on the iliac crests | EC | NA |

| Kosse et al., 2015 [14] | Two conditions 1- Quiet standing 2- a Dual-task (letter fluency test) | NR | Parallel Semi-tandem | NR | EO/EC | NR |

| Hsieh et al., 2019 [30] | 1- Quiet standing 2- a Dual-task (subtracting numbers) | Wearing socks | (NC) Semi-tandem Tandem Single leg | Dominant hand holding phone medially against the chest | EO/EC | NR |

| Ozinga et al., 2014 [15] | Six conditions adapted from BESS | Barefoot | According to BESS | Resting on the iliac crests | EO/EC | 3m target |

| Patterson et al., 2014 [17] | Six conditions BESS (adapted) Five conditions Sway Test | Shoed | According to BESS | Holding Mobile at Sternum mid-point | EC | NA |

| Patterson et al., 2014 [28] | Single condition Athlete’s Single Leg Test | NR | Non-dominant foot stance | Holding Mobile at Sternum mid-point | EO | NR |

| Shah et al., 2016 [18] | Eight conditions | Barefoot | Apart Together Tandem | On the hips | EO/EC | 4.37 m target |

| Yvon et al., 2015 [29] | Romberg and tandem Romberg tests in sixteen conditions | NR | Apart Together Tandem | Arms at sides | EO/EC | NR |

| Author | Number of Trials | Total Time (Time Cropped) Seconds | Device I Device II (Sampling Rate) | Device Position | App Used for Acquisition | Synchronization |

|---|---|---|---|---|---|---|

| Alberts et al., 2015 [26] | 3 | 20 s (NR) | iPad2 (100 Hz) NeuroCom® (100 Hz) | Sacrum | Sensor Data by Wavefront Labs | LabVIEW data collection program. |

| Alberts et al., 2015 [27] | 1 | 20 s (NR) | iPad (SNR) (100 Hz) Eagle 3D Motion analysis System (100 Hz) | Sacrum | Cleveland Clinic Concussion | Arduino Pro Mini 3.3 V and an LED light |

| Kosse et al., 2015 [14] | 1 | 60 s (NR) | iPod Touch (88–92 Hz) Accelerometer DynaPort® hybrid unit (100 Hz) | L3 vertebra | iMoveDetection | Cross-correlation analysis |

| Hsieh et al., 2019 [30] | 2 | 30 s (NR) | Samsung Galaxy S6 (200 Hz) Force plate (Bertec Inc, Columbus, OH) (1000 Hz) | Sternum | NR | NR |

| Ozinga et al., 2014 [15] | 2 | 60 s (NR) | iPad 3 (100 Hz) Eagle 3D Motion analysis System (100 Hz) | Second sacral vertebra | Cleveland Clinic Balance Assessment | Arduino Pro Mini 3.3 V and an LED light |

| Patterson et al., 2014 [17] | 1 | 10 s STS 20 s BESS (NA) | iPod Touch (NR) NA | Sternum midpoint | SWAY Balance Mobile | NA |

| Patterson et al., 2014 [28] | 1 | 10 s (NR) | iPod Touch (NR) Biodex© Balance System (NR) | Sternum midpoint | SWAY Balance Mobile | NR |

| Shah et al., 2016 [18] | 1 | (NR) | LG Optimus One (14–15 Hz) NA | Malleolus Patella Umbilicus | myAnkle | NR |

| Yvon et al., 2015 [29] | 1 | 30 s (NR) | iPhone (SNR) NA | Participant’s left upper arm | D + R Balance | NR |
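
When two devices sample at different rates, as with the iPod Touch (88–92 Hz) and the DynaPort® unit (100 Hz) above, their signals have to be brought onto a common time base before a cross-correlation can align them. The sketch below shows one way this could be done: linear interpolation onto a uniform grid followed by a search for the lag with the highest Pearson correlation. The interpolation method, the lag search, and all function names are assumptions for illustration; the table does not specify how the reviewed study implemented these steps.

```python
import math


def resample_linear(t, x, fs_out):
    """Linearly interpolate samples (t, x) onto a uniform grid at fs_out Hz.

    t must be strictly increasing and contain at least two timestamps.
    """
    n_out = int((t[-1] - t[0]) * fs_out) + 1
    out, j = [], 0
    for i in range(n_out):
        ti = t[0] + i / fs_out
        while j < len(t) - 2 and t[j + 1] < ti:
            j += 1
        w = (ti - t[j]) / (t[j + 1] - t[j])
        w = min(max(w, 0.0), 1.0)  # clamp against rounding at the edges
        out.append(x[j] + w * (x[j + 1] - x[j]))
    return out


def pearson(a, b):
    """Pearson correlation of two equal-length sequences (0.0 if constant)."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    sab = sum((p - ma) * (q - mb) for p, q in zip(a, b))
    saa = sum((p - ma) ** 2 for p in a)
    sbb = sum((q - mb) ** 2 for q in b)
    return sab / math.sqrt(saa * sbb) if saa > 0 and sbb > 0 else 0.0


def best_lag(a, b, max_lag):
    """Return (lag, r) maximizing the correlation between a[i] and b[i + lag].

    A positive lag means that b is delayed relative to a.
    """
    best = (0, -2.0)
    for lag in range(-max_lag, max_lag + 1):
        xa, xb = (a, b[lag:]) if lag >= 0 else (a[-lag:], b)
        n = min(len(xa), len(xb))
        r = pearson(xa[:n], xb[:n])
        if r > best[1]:
            best = (lag, r)
    return best


# Synthetic check: b is a copy of a delayed by three samples.
a = [math.sin(0.3 * i) for i in range(200)]
b = [0.0] * 3 + a[:-3]
lag, r = best_lag(a, b, max_lag=10)
print(lag, round(r, 3))
```

Once the lag is known, one signal can be shifted by that many samples so that per-sample comparisons between the devices refer to the same instant.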

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Pinho, A.S.; Salazar, A.P.; Hennig, E.M.; Spessato, B.C.; Domingo, A.; Pagnussat, A.S. Can We Rely on Mobile Devices and Other Gadgets to Assess the Postural Balance of Healthy Individuals? A Systematic Review. Sensors 2019, 19, 2972. https://doi.org/10.3390/s19132972
