UAV Flight and Landing Guidance System for Emergency Situations †

Abstract

1. Introduction

- The UAV should be able to continue its mission when GPS is unavailable or the network is disconnected;

- The system that guides the UAV’s flight should overcome the multi-path fading and interference that may occur in urban areas;

- When landing, the UAV must be able to touch down safely while avoiding obstacles.

2. Preliminaries

2.1. Related Work

2.1.1. Laser Guidance Systems

2.1.2. Obstacle Avoidance Studies

2.1.3. Autonomous UAV Landing Systems

2.2. Background

2.2.1. Particle Filter Theory

2.2.2. Optical Flow Method

3. Methods

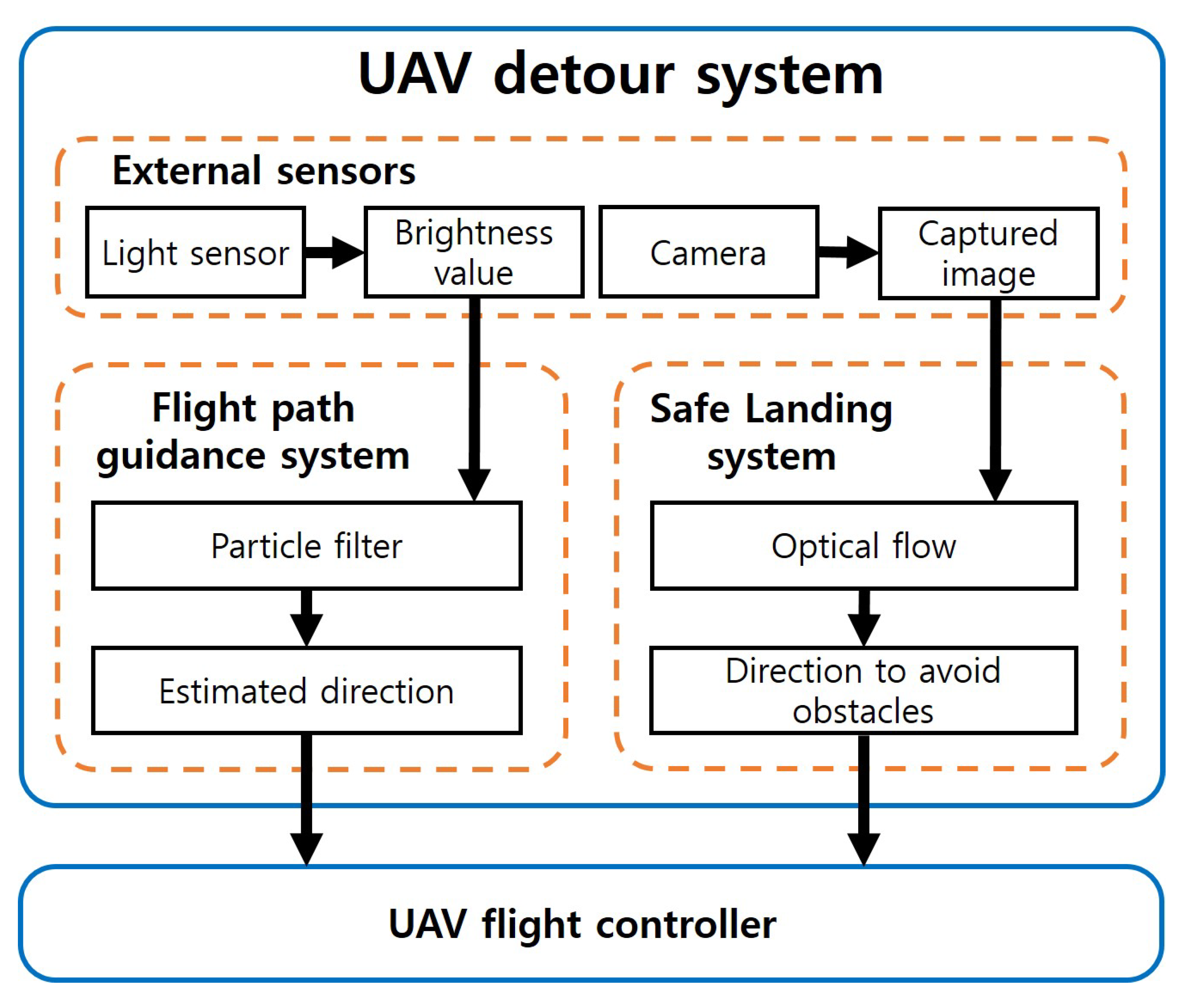

3.1. System Overview

3.2. Flight Guidance System for UAV

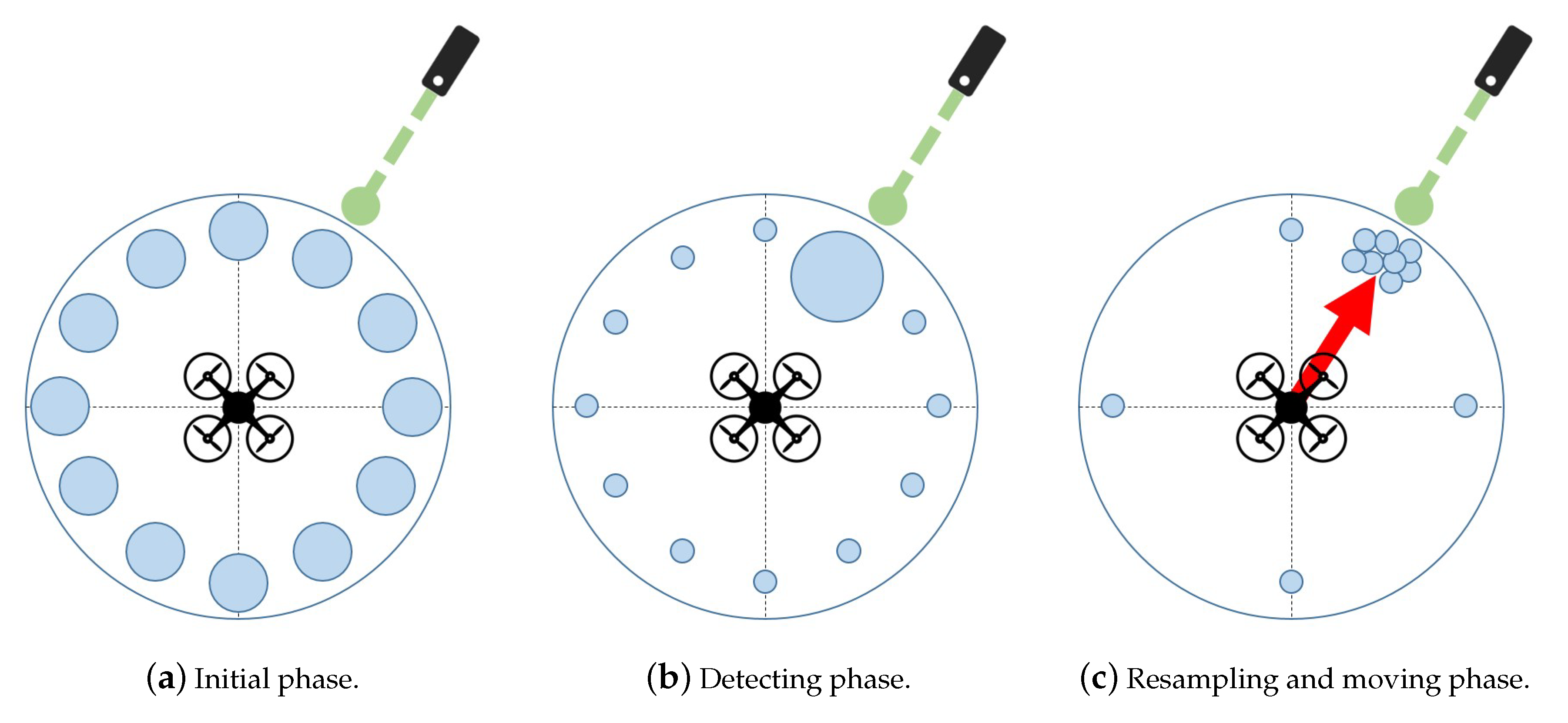

3.2.1. Particle Filter Based Flight Guidance

3.2.2. Resampling Method of Particle Filter

3.2.3. Delay Reduction Analysis through Sample Dispersion Modeling

3.2.4. Optimal Number of Particles through Modeling

3.3. Safe Landing System for UAV

3.3.1. Optical Flow Based Obstacle Avoidance

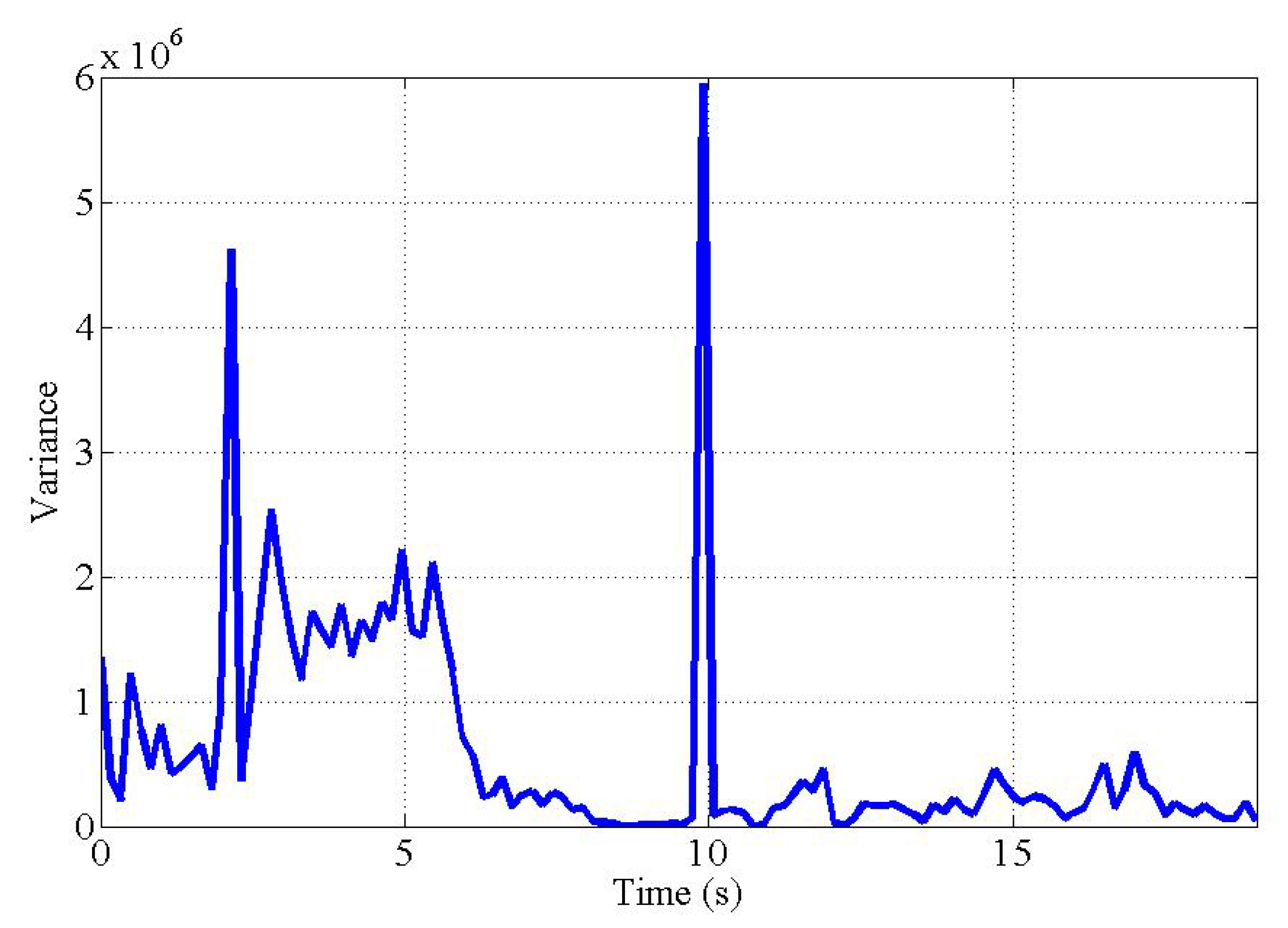

3.3.2. Optical Flow Modeling

4. System Implementation

4.1. Flight Guidance System

4.1.1. Laser Detector

4.1.2. Guiding Direction Estimator

Algorithm 1. Guiding direction estimator.

Initialization

4.2. Safe Landing System

4.2.1. Optical Flow Magnitude Map Generator

4.2.2. Obstacle Analyzer

Algorithm 2. Obstacle analyzer.

5. Experiments and Demonstrations

5.1. Experimental Setup

5.2. Flight Guidance System Demonstration

5.3. Safe Landing System Demonstration

5.4. UAV Detour System Demonstration

6. Future Work

7. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Number of Particles | 100 | 500 | 2000 | 10,000 |
|---|---|---|---|---|
| RMSE (rad) | 0.047 | 0.011 | 0.004 | 0.002 |
| RMSE (degree) | 2.712 | 0.609 | 0.204 | 0.129 |

| Condition | 15 m, Without Laser (lx) | 15 m, Laser Projected (lx) | 30 m, Without Laser (lx) | 30 m, Laser Projected (lx) |
|---|---|---|---|---|
| Sunny | 10,820.45 | 30,306.2 | 11,781.6 | 20,264.17 |
| Cloudy | 7031.69 | 31,509.4 | 7250.12 | 15,792 |
| Night | 2.31 | 32,870.2 | 2.82 | 14,827.4 |
| Indoor (fluorescent light) | 202.59 | 41,238.2 | 133.4 | 24,834 |
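One way to read the illuminance measurements above is as a contrast ratio between the laser-projected and the ambient reading. A low ratio suggests the laser spot is harder to separate from the background. The Python sketch below computes that ratio for each condition and distance. The ratio as a metric and the names `contrast_ratio` and `hardest_case` are assumptions made for illustration and are not taken from the paper. Only the lux values are copied from the table.

```python
# Illuminance in lux copied from the table:
# condition -> distance in metres -> (without laser, laser projected).
ILLUMINANCE_LX = {
    "Sunny": {15: (10820.45, 30306.2), 30: (11781.6, 20264.17)},
    "Cloudy": {15: (7031.69, 31509.4), 30: (7250.12, 15792.0)},
    "Night": {15: (2.31, 32870.2), 30: (2.82, 14827.4)},
    "Indoor": {15: (202.59, 41238.2), 30: (133.4, 24834.0)},
}


def contrast_ratio(condition, distance_m):
    """Ratio of laser-projected to ambient illuminance (hypothetical metric)."""
    ambient, projected = ILLUMINANCE_LX[condition][distance_m]
    return projected / ambient


def hardest_case():
    """Return (ratio, condition, distance) for the lowest contrast ratio."""
    return min(
        (contrast_ratio(condition, distance), condition, distance)
        for condition, by_distance in ILLUMINANCE_LX.items()
        for distance in by_distance
    )


if __name__ == "__main__":
    ratio, condition, distance = hardest_case()
    print(f"Lowest contrast: {condition} at {distance} m, ratio {ratio:.2f}")
```

Under this assumed metric, the sunny 30 m measurement has the lowest ratio (about 1.7), while the night measurements have by far the highest, which matches the intuition that strong ambient light is the difficult case for laser detection.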

| Number of Particles | 100 | 500 | 2000 | 10,000 |
|---|---|---|---|---|
| Average time lag (ms) | 27.2 | 91.7 | 397.1 | 1642.4 |
| RMSE (rad) | 0.0568 | 0.0239 | 0.0118 | 0.0047 |
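The trade-off between estimation error and time lag in the table above can be made concrete with a small selection routine. The Python sketch below picks the measured particle count with the lowest RMSE whose average time lag still fits a latency budget. The budget value and the function name `pick_particle_count` are hypothetical and are not taken from the paper. Only the measurements are copied from the table.

```python
# Measurements copied from the table:
# particle count -> (average time lag in ms, RMSE in rad).
MEASUREMENTS = {
    100: (27.2, 0.0568),
    500: (91.7, 0.0239),
    2000: (397.1, 0.0118),
    10000: (1642.4, 0.0047),
}


def pick_particle_count(budget_ms):
    """Return the particle count with the lowest RMSE whose lag fits the budget.

    Hypothetical helper: returns None when no measured configuration fits.
    """
    feasible = [
        (rmse, count)
        for count, (lag_ms, rmse) in MEASUREMENTS.items()
        if lag_ms <= budget_ms
    ]
    if not feasible:
        return None
    # Tuples compare by RMSE first, so min() yields the most accurate option.
    return min(feasible)[1]


if __name__ == "__main__":
    # 100 ms is an assumed control-loop budget, used only for illustration.
    print(pick_particle_count(100.0))
```

With the assumed 100 ms budget the routine selects 500 particles. The measured lag works out to roughly 0.16 to 0.27 ms per particle across the four configurations, so the cost grows approximately linearly with the particle count while the RMSE improves more slowly.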

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lee, J.Y.; Chung, A.Y.; Shim, H.; Joe, C.; Park, S.; Kim, H. UAV Flight and Landing Guidance System for Emergency Situations †. Sensors 2019, 19, 4468. https://doi.org/10.3390/s19204468