eHomeSeniors Dataset: An Infrared Thermal Sensor Dataset for Automatic Fall Detection Research

Abstract

1. Introduction

- Fall datasets are still few, as we will see in Section 2.

- Due to the ethical problems mentioned above, the datasets do not include falls of older adults but falls of healthy young volunteers, who fall differently from older adults. The most noticeable difference is that young people fall with greater acceleration than the elderly [9]. Other kinesthetic differences are described in more detail in Section 3.2. Because of this, the performance of many algorithms could decrease drastically when they are moved from a laboratory environment to a real one (i.e., the home of an older adult).

- Many fall datasets are based on acceleration data, which has been shown to be insufficient on its own as a predictor of falls. In fact, it has been shown that FDSs based on acceleration amplitude produce a large number of false alerts unless post-fall posture identification is also considered [10].

- Although datasets based on video recordings often use low-resolution images (e.g., depth images with 320 × 240 resolution from Kinect sensors), these resolutions still allow for the identification of certain characteristics of people (e.g., height, texture, and gender) and, therefore, present privacy problems.

- Finally, there is no standardized format for presenting fall data. This makes it difficult to use different datasets for application development.
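The point above about acceleration-amplitude detectors can be illustrated with a minimal sketch. It is not the method of [10] or of any cited system: the threshold values, the function names, and the use of a torso-tilt angle as a stand-in for post-fall posture identification are all assumptions made for illustration only.

```python
import math

# Hypothetical values chosen for illustration; they do not come from the source.
IMPACT_THRESHOLD_G = 2.5  # acceleration magnitude (in g) treated as an impact
LYING_TILT_DEG = 60.0     # torso tilt (degrees from vertical) treated as "lying"


def magnitude(sample):
    """Euclidean norm of one tri-axial acceleration sample (ax, ay, az) in g."""
    ax, ay, az = sample
    return math.sqrt(ax * ax + ay * ay + az * az)


def amplitude_only_alert(samples):
    """Raise an alert as soon as any sample reaches the impact threshold."""
    return any(magnitude(s) >= IMPACT_THRESHOLD_G for s in samples)


def alert_with_posture(samples, post_event_tilt_deg):
    """Raise an alert only if an impact is followed by a lying posture."""
    return amplitude_only_alert(samples) and post_event_tilt_deg >= LYING_TILT_DEG


# Same impact-like signal, two different outcomes after the event.
signal = [(0.0, 0.0, 1.0), (0.5, 0.2, 2.8), (0.0, 0.0, 1.0)]
sat_down_hard = alert_with_posture(signal, post_event_tilt_deg=10.0)
fell_and_lying = alert_with_posture(signal, post_event_tilt_deg=85.0)
```

In this sketch the amplitude-only rule fires for both events, while the posture-aware rule fires only when the person ends up lying down, which is the kind of false alert the posture check is meant to suppress.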

2. Related Work

3. Materials and Methods

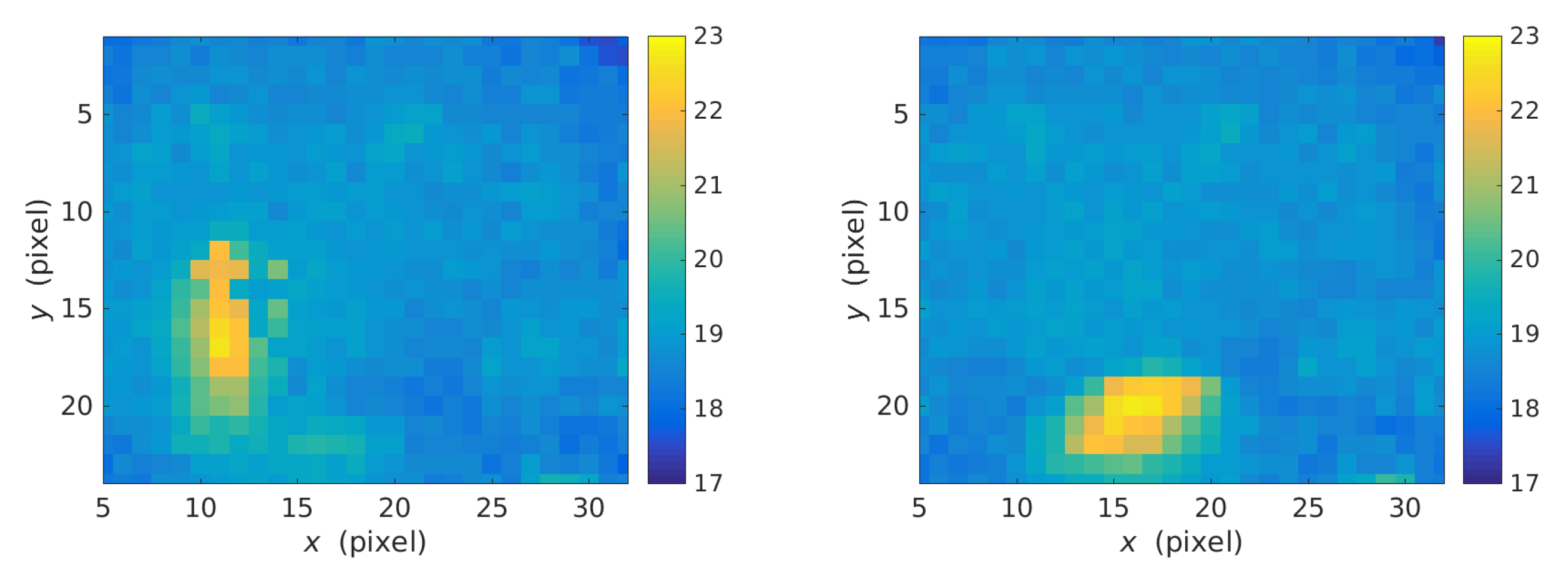

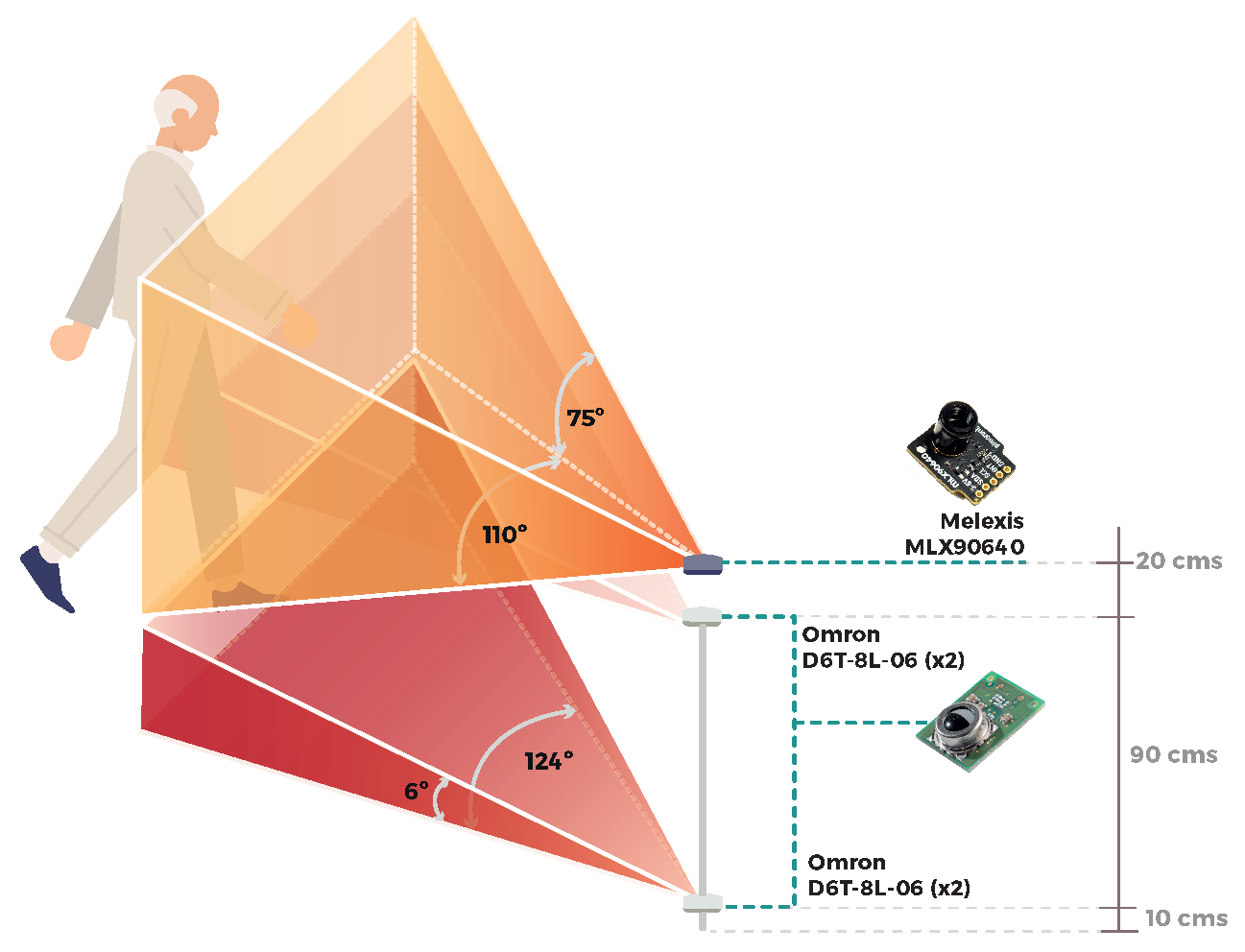

3.1. Data Collection Systems

3.2. Methodology Description

- Backward (from walking backward)

- Forward while walking caused by a trip

- Caused by fainting (slow lateral)

- Backward when trying to sit down (empty chair)

- From bed

- Backward (legs straight)

- Forward (legs straight)

- Forward (knee flexion)

- Backward (from standing; knee flexion, slow)

- Forward (from standing; knee flexion; slow)

- Lateral (from standing; legs straight)

- Lateral (from standing; knee flexion, slow)

- Caused by fainting or falling asleep (slow backward)

- Caused by fainting or falling asleep (slow forward)

- From chair, caused by fainting or falling asleep

3.3. eHomeSeniors Dataset Description

4. Experimental Results: Estimation of the Fall Duration

5. Discussion

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| ADL | Activities of Daily Living |
| FDS | Fall Detection System |
| IMU | Inertial Measurement Unit |
| fps | Frames per second |

References

1. Sanderson, W.C.; Scherbov, S.; Gerland, P. Probabilistic population aging. PLoS ONE 2017, 12, e0179171.

2. World Health Organization. WHO Global Report on Falls Prevention in Older Age; WHO Press: Geneva, Switzerland, 2007.

3. Fleming, J.; Brayne, C. Inability to get up after falling, subsequent time on floor, and summoning help: Prospective cohort study in people over 90. BMJ 2008, 337.

4. Melton, L.J., 3rd; Christen, D.; Riggs, B.L.; Achenbach, S.J.; Müller, R.; van Lenthe, G.H.; Amin, S.; Atkinson, E.J.; Khosla, S. Assessing forearm fracture risk in postmenopausal women. Osteoporos. Int. 2010, 21, 1161–1169.

5. Tinetti, M.E.; Liu, W.L.; Claus, E.B. Predictors and Prognosis of Inability to Get Up After Falls Among Elderly Persons. JAMA 1993, 269, 65–70.

6. Ambrose, A.F.; Paul, G.; Hausdorff, J.M. Risk factors for falls among older adults: A review of the literature. Maturitas 2013, 75, 51–61.

7. Forbes, G.; Massie, S.; Craw, S. Fall prediction using behavioural modelling from sensor data in smart homes. Artif. Intell. Rev. 2019.

8. Vadivelu, S.; Ganesan, S.; Murthy, O.V.R.; Dhall, A. Thermal Imaging Based Elderly Fall Detection. In Proceedings of the Computer Vision—ACCV 2016 Workshops—ACCV 2016 International Workshops, Taipei, Taiwan, 20–24 November 2016; Chen, C., Lu, J., Ma, K., Eds.; Revised Selected Papers, Part III; Lecture Notes in Computer Science. Springer: Berlin/Heidelberg, Germany, 2017; Volume 10118, pp. 541–553.

9. Sucerquia, A.; López, J.D.; Vargas-Bonilla, J.F. SisFall: A Fall and Movement Dataset. Sensors 2017, 17, 198.

10. Wang, F.T.; Chan, H.L.; Hsu, M.H.; Lin, C.K.; Chao, P.K.; Chang, Y.J. Threshold-based fall detection using a hybrid of tri-axial accelerometer and gyroscope. Physiol. Meas. 2018, 39, 105002.

11. Taramasco, C.; Rodenas, T.; Martinez, F.; Fuentes, P.; Munoz, R.; Olivares, R.; De Albuquerque, V.H.C.; Demongeot, J. A Novel Monitoring System for Fall Detection in Older People. IEEE Access 2018, 6, 43563–43574.

12. Taniguchi, Y.; Nakajima, H.; Tsuchiya, N.; Tanaka, J.; Aita, F.; Hata, Y. A falling detection system with plural thermal array sensors. In Proceedings of the 2014 Joint 7th International Conference on Soft Computing and Intelligent Systems (SCIS) and 15th International Symposium on Advanced Intelligent Systems (ISIS), Kitakyushu, Japan, 3–6 December 2014; pp. 673–678.

13. Adolf, J.; Macas, M.; Lhotska, L.; Dolezal, J. Deep neural network based body posture recognitions and fall detection from low resolution infrared array sensor. In Proceedings of the 2018 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Madrid, Spain, 3–6 December 2018; pp. 2394–2399.

14. Schwickert, L.; Becker, C.; Lindemann, U.; Maréchal, C.; Bourke, A.; Chiari, L.; Helbostad, J.L.; Zijlstra, W.; Aminian, K.; Todd, C.; et al. Fall detection with body-worn sensors. Z. Für Gerontol. Geriatr. 2013, 46, 706–719.

15. Zhang, Z.; Conly, C.; Athitsos, V. A survey on vision-based fall detection. In Proceedings of the 8th ACM International Conference on PErvasive Technologies Related to Assistive Environments, PETRA 2015, Corfu, Greece, 1–3 July 2015; Makedon, F., Ed.; ACM: New York, NY, USA, 2015; pp. 46:1–46:7.

16. Casilari, E.; Santoyo-Ramón, J.A.; Cano-García, J.M. Analysis of Public Datasets for Wearable Fall Detection Systems. Sensors 2017, 17, 1513.

17. Dhiman, C.; Kumar-Vishwakarma, D. A review of state-of-the-art techniques for abnormal human activity recognition. Eng. Appl. Artif. Intell. 2019, 77, 21–45.

18. Mardini, M.T.; Iraqi, Y.; Agoulmine, N. A Survey of Healthcare Monitoring Systems for Chronically Ill Patients and Elderly. J. Med. Syst. 2019, 43, 50:1–50:21.

19. Fan, Y.; Levine, M.D.; Wen, G.; Qiu, S. A deep neural network for real-time detection of falling humans in naturally occurring scenes. Neurocomputing 2017, 260, 43–58.

20. Martínez-Villaseñor, L.; Ponce, H.; Brieva, J.; Moya-Albor, E.; Núñez-Martínez, J.; Peñafort-Asturiano, C. UP-Fall Detection Dataset: A Multimodal Approach. Sensors 2019, 19, 1988.

21. Tran, T.; Le, T.; Pham, D.; Hoang, V.; Khong, V.; Tran, Q.; Nguyen, T.; Pham, C. A multi-modal multi-view dataset for human fall analysis and preliminary investigation on modality. In Proceedings of the 24th International Conference on Pattern Recognition, ICPR 2018, Beijing, China, 20–24 August 2018; pp. 1947–1952.

22. Peñafort-Asturiano, C.J.; Santiago, N.; Núñez-Martínez, J.P.; Ponce, H.; Martínez-Villaseñor, L. Challenges in Data Acquisition Systems: Lessons Learned from Fall Detection to Nanosensors. In Proceedings of the 2018 Nanotechnology for Instrumentation and Measurement (NANOfIM), Mexico City, Mexico, 7–8 November 2018; pp. 1–8.

23. Martínez-Villaseñor, M.; Ponce, H.; Espinosa-Loera, R.A. Multimodal Database for Human Activity Recognition and Fall Detection. In Proceedings of the 12th International Conference on Ubiquitous Computing and Ambient Intelligence, UCAmI 2018, Punta Cana, Dominican Republic, 4–7 December 2018; Bravo, J., Baños, O., Eds.; MDPI: Basel, Switzerland, 2018; Volume 2, p. 1237.

24. Tran, T.; Le, T.; Hoang, V.; Vu, H. Continuous detection of human fall using multimodal features from Kinect sensors in scalable environment. Comput. Methods Progr. Biomed. 2017, 146, 151–165.

25. Baldewijns, G.; Debard, G.; Mertes, G.; Vanrumste, B.; Croonenborghs, T. Bridging the gap between real-life data and simulated data by providing a highly realistic fall dataset for evaluating camera-based fall detection algorithms. Health Technol. Lett. 2016, 3, 6–11.

26. Chua, J.; Chang, Y.C.; Lim, W.K. A simple vision-based fall detection technique for indoor video surveillance. Signal Image Video Process 2015, 9, 623–633.

27. Zhang, Z.; Conly, C.; Athitsos, V. Evaluating Depth-Based Computer Vision Methods for Fall Detection under Occlusions. In Proceedings of the Advances in Visual Computing, 10th International Symposium, ISVC 2014, Las Vegas, NV, USA, 8–10 December 2014; Bebis, G., Boyle, R., Parvin, B., Koracin, D., McMahan, R., Jerald, J., Zhang, H., Drucker, S.M., Kambhamettu, C., Choubassi, M.E., et al., Eds.; Proceedings, Part II; Lecture Notes in Computer Science. Springer: Berlin/Heidelberg, Germany, 2014; Volume 8888, pp. 196–207.

28. Ma, X.; Wang, H.; Xue, B.; Zhou, M.; Ji, B.; Li, Y. Depth-Based Human Fall Detection via Shape Features and Improved Extreme Learning Machine. IEEE J. Biomed. Health Inform. 2014, 18, 1915–1922.

29. Gasparrini, S.; Cippitelli, E.; Spinsante, S.; Gambi, E. A Depth-Based Fall Detection System Using a Kinect® Sensor. Sensors 2014, 14, 2756–2775.

30. Kwolek, B.; Kepski, M. Human fall detection on embedded platform using depth maps and wireless accelerometer. Comput. Methods Progr. Biomed. 2014, 117, 489–501.

31. Charfi, I.; Mitéran, J.; Dubois, J.; Atri, M.; Tourki, R. Optimized spatio-temporal descriptors for real-time fall detection: Comparison of support vector machine and Adaboost-based classification. J. Electron. Imaging 2013, 22, 041106.

32. Charfi, I.; Mitéran, J.; Dubois, J.; Atri, M.; Tourki, R. Definition and Performance Evaluation of a Robust SVM Based Fall Detection Solution. In Proceedings of the Eighth International Conference on Signal Image Technology and Internet Based Systems, SITIS 2012, Sorrento, Naples, Italy, 25–29 November 2012; Yétongnon, K., Chbeir, R., Dipanda, A., Gallo, L., Eds.; IEEE: Piscataway, NJ, USA, 2012; pp. 218–224.

33. Zhang, Z.; Liu, W.; Metsis, V.; Athitsos, V. A viewpoint-independent statistical method for fall detection. In Proceedings of the 21st International Conference on Pattern Recognition, ICPR 2012, Tsukuba, Japan, 11–15 November 2012; pp. 3626–3630.

34. Auvinet, E.; Reveret, L.; St-Arnaud, A.; Rousseau, J.; Meunier, J. Fall detection using multiple cameras. In Proceedings of the 2008 30th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Vancouver, BC, Canada, 20–24 August 2008; pp. 2554–2557.

35. Auvinet, E.; Multon, F.; Saint-Arnaud, A.; Rousseau, J.; Meunier, J. Fall Detection with Multiple Cameras: An Occlusion-Resistant Method Based on 3-D Silhouette Vertical Distribution. IEEE Trans. Inf. Technol. Biomed. 2011, 15, 290–300.

36. Bulotta, S.; Mahmoud, H.; Masulli, F.; Palummeri, E.; Rovetta, S. Fall Detection Using an Ensemble of Learning Machines. In Neural Nets and Surroundings. Smart Innovation, Systems and Technologies; Apolloni, B., Bassis, S.E.A., Morabito, F., Eds.; Springer: Berlin/Heidelberg, Germany, 2013; Volume 19.

37. Pannurat, N.; Thiemjarus, S.; Nantajeewarawat, E. Automatic Fall Monitoring: A Review. Sensors 2014, 14, 12900–12936.

38. Medrano, C.; Igual, R.; Plaza, I.; Castro, M. Detecting Falls as Novelties in Acceleration Patterns Acquired with Smartphones. PLoS ONE 2014, 9, e94811.

39. Liu, K.; Hsieh, C.; Hsu, S.J.; Chan, C. Impact of Sampling Rate on Wearable-Based Fall Detection Systems Based on Machine Learning Models. IEEE Sens. J. 2018, 18, 9882–9890.

40. Mauldin, T.R.; Canby, M.E.; Metsis, V.; Ngu, A.H.H.; Rivera, C.C. SmartFall: A Smartwatch-Based Fall Detection System Using Deep Learning. Sensors 2018, 18, 3363.

41. Sherrington, C.; Tiedemann, A. Physiotherapy in the prevention of falls in older people. J. Physiother. 2015, 61, 54–60.

42. Edelberg, H.K. Falls and function. How to prevent falls and injuries in patients with impaired mobility. Geriatrics 2001, 56, 41–45.

43. Palvanen, M.; Kannus, P.; Parkkari, J.; Pitkäjärvi, T.; Pasanen, M.; Vuori, I.; Järvinen, M. The injury mechanisms of osteoporotic upper extremity fractures among older adults: A controlled study of 287 consecutive patients and their 108 controls. Osteoporos. Int. 2000, 11, 822–831.

44. Sterling, D.A.; O’Connor, J.A.; Bonadies, J. Geriatric falls: Injury severity is high and disproportionate to mechanism. J. Trauma Inj. Infect. Crit. Care 2001, 50, 116–119.

45. Parkkari, J.; Kannus, P.; Palvanen, M.; Natri, A.; Vainio, J.; Aho, H.; Järvinen, M. Majority of Hip Fractures Occur as a Result of a Fall and Impact on the Greater Trochanter of the Femur: A Prospective Controlled Hip Fracture Study with 206 Consecutive Patients. Calcif. Tissue Int. 1999, 65, 183–187.

46. Pierleoni, P.; Belli, A.; Maurizi, L.; Palma, L.; Pernini, L.; Paniccia, M.; Valenti, S. A Wearable Fall Detector for Elderly People Based on AHRS and Barometric Sensor. IEEE Sens. J. 2016, 16, 6733–6744.

| Devices | Examples | Type of Data | Positive | Negative |
|---|---|---|---|---|
| Wearable devices | smartwatch, smartphone (compass, accelerometer, magnetometer, and gyroscope), inertial measurement unit (IMU), and EEG | acceleration, orientation data, rotation data, angular velocity, magnetic signal, and brain electrical activity | privacy-friendly, rich data, and highly accurate performance | invasive; depends on both the user’s memory and ability to use them all the time |
| Ambient-based sensors | camera, Kinect sensor, infrared thermal sensor, and pressure sensor (on the floor) | low-resolution video, low-resolution image (RGB, depth, or skeleton data), and ambient light | noninvasive, user independence, and long battery life | intrusive (depending on resolution and data quality); only suitable for closed spaces; noise from other objects, people, or pets |

| Year | Dataset Name | Ref. | Falls (#) | Fall types (#) | Participants (#) | Female (#) | Male (#) | Age | Data Collection Systems |
|---|---|---|---|---|---|---|---|---|---|
| 2019 | UP-Fall | [20] | 255 | 5 | 17 | 8 | 9 | 18–24 | 6 infrared sensors, 2 cameras (18 fps), 5 IMUs with accelerometer, gyroscope, ambient light, 1 EEG |
| 2018 | CMDFALL | [21] | 400 | 8 | 50 | 20 | 30 | 21–40 | 7 overlapped Kinect sensors and 2 WAX3 wireless accelerometers |
| 2018 | FALL-UP | [22] | 255 | 5 | 17 | ? | ? | ? | 6 infrared sensors; 2 cameras; 1 EEG; 5 wearable inertial sensors on left ankle, right wrist, neck, waist, and right pocket with accelerometer, angular velocity, and luminosity |
| 2018 | UP-Fall | [23] | 60 | 5 | 4 | 2 | 2 | 22–58 | 4 ambient infrared presence/absence sensors, 1 RaspberryPI3, 4 IMUs with accelerometer, ambient light, angular velocity, 1 EEG |
| 2017 | Dataset-D | [24] | 95 | 2 | 4 | ? | ? | 30–40 | 4 Kinect sensors (RGB, depth, skeleton data; 20 fps, 640 × 480) |
| 2017 | MICAFALL-1 | [24] | 40 | 2 | 20 | ? | ? | 25–35 | idem |
| 2017 | Thermal Simulated Fall | [8] | 35 | ? | ? | ? | ? | ? | 9 FLIR ONE thermal cameras (640 × 480) mounted to Android phone |
| 2016 | KUL Simulated Fall | [25] | 55 | ? | 10 | ? | ? | ? | 5 web-cameras (12 fps, 640 × 480) |
| 2015 | – | [26] | 21 | 4 | ? | ? | ? | ? | IP camera (Dlink DCS-920) through Wi-Fi connection (MJPEG, 320 × 240) |
| 2015 | EDF | [15] | 320 | ? | 10 | ? | ? | ? | 2 Kinect sensors (depth maps, 320 × 240, 30 fps) |
| 2014 | OCCU | [27] | 60 | 1 | 5 | ? | ? | ? | idem |
| 2014 | SDU Fall | [28] | 30 | 1 | 10 | 2–8 | 2–8 | young | 1 Kinect sensor |
| 2014 | TST | [29] | 132 | 4 | 11 | ? | ? | 22–39 | 1 Kinect sensor (depth maps); 2 IMUs on waist and right wrist with accelerometer |
| 2014 | UR Fall | [30] | 30 | 2 | 5 | 0 | 5 | >26 | 2 Kinect sensors (depth maps); 1 IMU on waist (near the pelvis) with accelerometer |
| 2013 | Le2i fall | [31] | 143 | 3 | 11 | ? | ? | ? | 1 video camera in 4 different locations (25 fps, 320 × 240) |
| 2012 | Le2i fall | [32] | 192 | 3 | 11 | ? | ? | ? | idem |
| 2012 | vlm1 | [33] | 26 | ? | 6 | ? | ? | ? | 2 Kinect sensors (RGB, depth; 10 fps, 320 × 240) |
| 2008 | Multi camera fall | [34,35] | 22 | 8 | 1 | 0 | 1 | adult | 8 video cameras |
| average | | | 121 | 4 | 12 | | | | |
| median | | | 60 | 4 | 10 | | | | |
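The average and median rows of the dataset comparison table can be recomputed from the per-dataset values. The sketch below assumes that entries marked "?" are excluded and that results are rounded to the nearest integer; the source does not state how these rows were computed, so both choices are assumptions, as are the variable and function names.

```python
import math
from statistics import mean, median

# Values transcribed from the table, in row order (UP-Fall ... Multi camera fall).
# None stands for an entry reported as "?" in the table.
falls = [255, 400, 255, 60, 95, 40, 35, 55, 21, 320, 60, 30, 132, 30, 143, 192, 26, 22]
fall_types = [5, 8, 5, 5, 2, 2, None, None, 4, None, 1, 1, 4, 2, 3, 3, None, 8]
participants = [17, 50, 17, 4, 4, 20, None, 10, None, 10, 5, 10, 11, 5, 11, 11, 6, 1]


def round_half_up(x):
    """Round to the nearest integer, with .5 rounded up (assumed convention)."""
    return math.floor(x + 0.5)


def summarize(values):
    """Return (rounded mean, rounded median) over the known entries only."""
    known = [v for v in values if v is not None]
    return round_half_up(mean(known)), round_half_up(median(known))


falls_summary = summarize(falls)
types_summary = summarize(fall_types)
participants_summary = summarize(participants)
```

Under these assumptions the three summaries agree with the average and median rows reported in the table.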

| Fall by | ID | Description | [34] | [32] | [29] | [30] | [26] | [9] | [24] | [23] | [22] | [21] | [20] | # |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| general | F1 | Fall (from standing) | ✗ | ✗ | ✗ | ✓ | ✗ | ✗ | ✓ | ✗ | ✗ | ✗ | ✗ | 2 |
| general | F2 | Backward (from standing) | ✗ | ✗ | ✗ | ✓ | ✓ | ✗ | ✗ | ✓ | ✓ | ✗ | ✓ | 5 |
| general | F3 | Forward (from standing) | ✗ | ✓ | ✗ | ✓ | ✓ | ✗ | ✗ | ✗ | ✓ | ✗ | ✗ | 4 |
| general | F4 | Lateral (from standing) | ✗ | ✗ | ✗ | ✓ | ✓ | ✗ | ✗ | ✓ | ✓ | ✗ | ✓ | 5 |
| general | F5 | Backward (from walking) | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ | 1 |
| general | F6 | Forward (from walking) | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ | 1 |
| general | F7 | Leftward (from walking) | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ | 1 |
| general | F8 | Rightward (from walking) | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ | 1 |
| cause | F9 | Forward while walking caused by a slip | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | 1 |
| cause | F10 | Backward while walking caused by a slip | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | 1 |
| cause | F11 | Lateral while walking caused by a slip | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | 1 |
| cause | F12 | Forward while walking caused by a trip | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | 1 |
| cause | F13 | Forward while jogging caused by a trip | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | 1 |
| cause | F14 | Caused by fainting/syncope/loss of balance | ✗ | ✓ | ✗ | ✗ | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | 2 |
| cause | F15 | Vertical fall while walking, by fainting | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | 1 |
| cause | F16 | Forward while sitting, caused by fainting | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | 1 |
| cause | F17 | Backward while sitting, caused by fainting | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | 1 |
| cause | F18 | Lateral while sitting, caused by fainting | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | 1 |
| cause | F19 | Fall while walking caused by fainting (use of hands on a table to dampen fall) | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | 1 |
| cause | F20 | Forward when trying to get up | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | 1 |
| cause | F21 | Lateral when trying to get up | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | 1 |
| cause | F22 | Forward when trying to sit down | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | 1 |
| cause | F23 | Backward when trying to sit down | ✓ | ✓ | ✗ | ✗ | ✗ | ✓ | ✗ | ✓ | ✗ | ✗ | ✓ | 5 |
| cause | F24 | Lateral when trying to sit down | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | 1 |
| cause | F25 | Leftward when trying to sit down | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ | 1 |
| cause | F26 | Rightward when trying to sit down | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ | 1 |
| location | F27 | On bed (then leftward) | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ | 1 |
| location | F28 | On bed (then rightward) | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ | 1 |
| location | F29 | From chair | ✗ | ✗ | ✗ | ✓ | ✗ | ✗ | ✓ | ✗ | ✗ | ✗ | ✗ | 2 |
| impact | F30 | Fall (impact on hands and elbows) | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ | ✗ | ✗ | 1 |
| impact | F31 | Forward (impact on hands and elbows) | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | 1 |
| impact | F32 | Forward (impact on knee) | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ | ✗ | ✓ | 2 |
| termination | F33 | Backward (end up sitting) | ✗ | ✗ | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | 1 |
| termination | F34 | Backward (end up lying) | ✗ | ✗ | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | 1 |
| termination | F35 | Forward (end up lying) | ✗ | ✗ | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | 1 |
| termination | F36 | Lateral (end up lying) | ✗ | ✗ | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | 1 |
| termination | F37 | Forward on knees (stay down) | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ | ✗ | 1 |
| articulation | F38 | Fall (legs straight) | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | 1 |
| articulation | F39 | Fall Backward (legs straight) | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | 1 |
| articulation | F40 | Fall Forward (legs straight) | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | 1 |
| articulation | F41 | Fall Leftward (legs straight) | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | 1 |
| articulation | F42 | Fall Rightward (legs straight) | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | 1 |
| articulation | F43 | Fall (knee flexion) | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ | ✗ | 2 |
| articulation | F44 | Fall Rightward (knee flexion) | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | 1 |
| # fall types | | | 8 | 3 | 4 | 5 | 4 | 15 | 2 | 5 | 5 | 8 | 5 | |

| Group | # | Gender | Age (years) | Weight (kg) | Height (m) |
|---|---|---|---|---|---|
| group 1 | 1 | F | 37 | 59 | 1.64 |
| group 1 | 2 | F | 34 | 51 | 1.62 |
| group 1 | 3 | M | 35 | 62 | 1.80 |
| group 2 | 4 | F | 27 | 49 | 1.52 |
| group 2 | 5 | M | 28 | 89 | 1.73 |
| group 2 | 6 | M | 29 | 66 | 1.65 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Riquelme, F.; Espinoza, C.; Rodenas, T.; Minonzio, J.-G.; Taramasco, C. eHomeSeniors Dataset: An Infrared Thermal Sensor Dataset for Automatic Fall Detection Research. Sensors 2019, 19, 4565. https://doi.org/10.3390/s19204565