Lightweight Convolutional Neural Network and Its Application in Rolling Bearing Fault Diagnosis under Variable Working Conditions

Abstract

1. Introduction

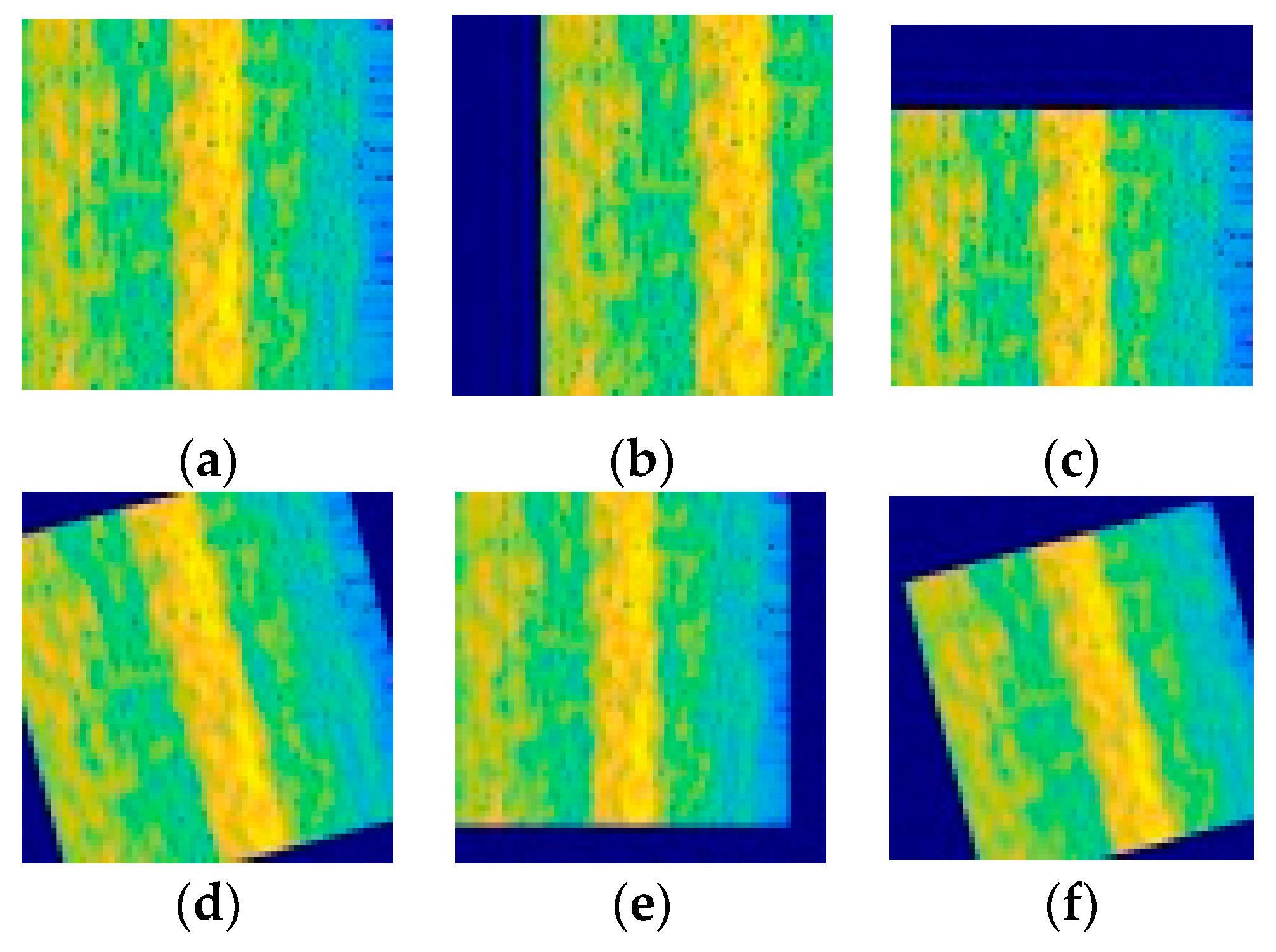

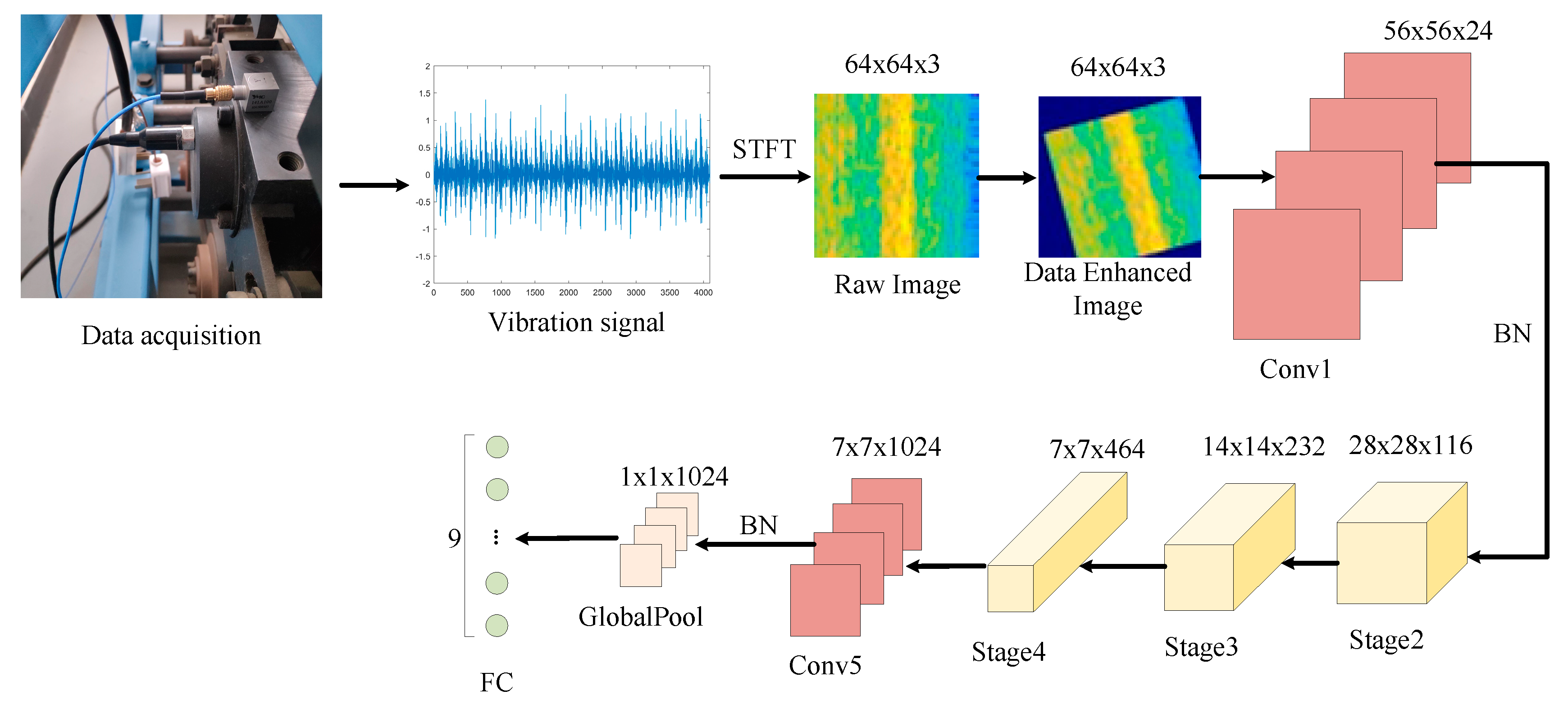

- Data augmentation based on graphics principles [31] is applied to the training set, which enlarges the dataset and enhances the generalization ability of the model.

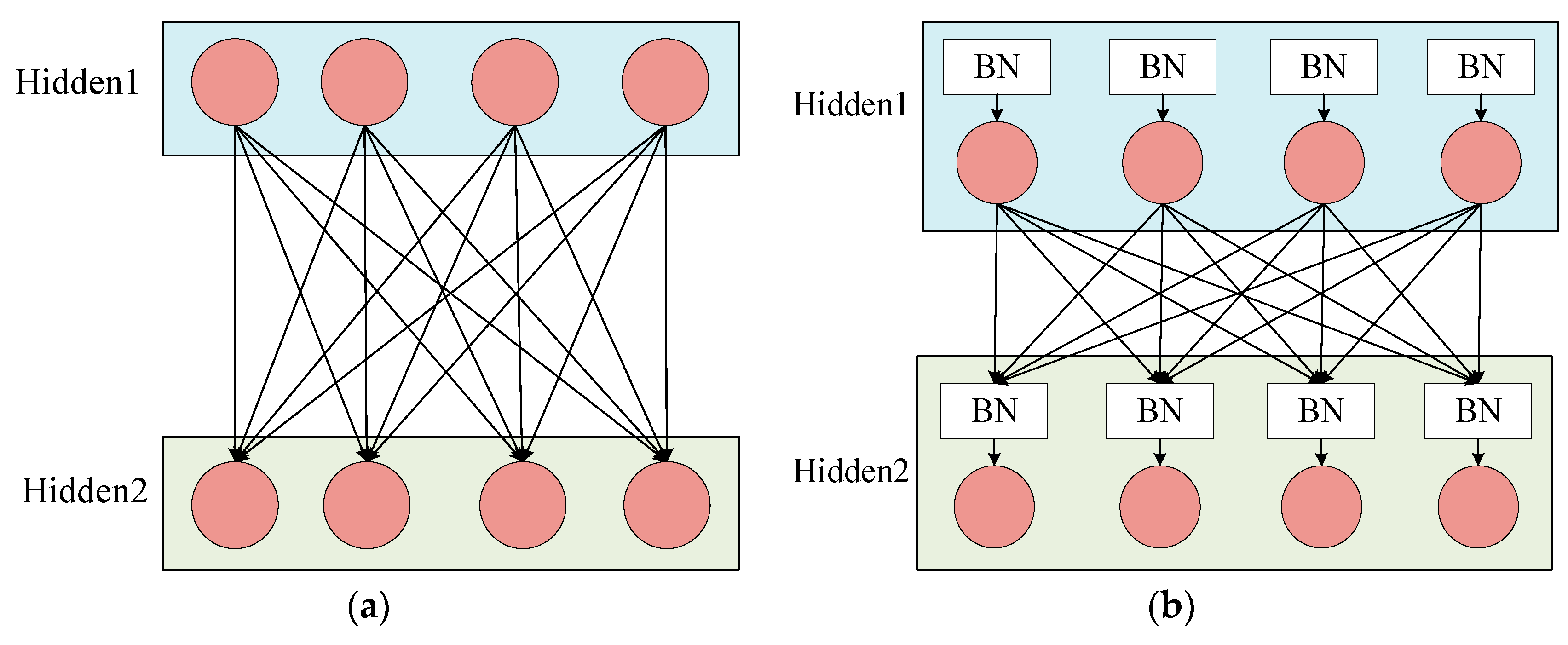

- Batch Normalization (BN) layers are added at the input and output positions of the model to help prevent overfitting [32].

- L2 regularization is added to the fully connected layer of the model to implement weight decay, which reduces overfitting to some extent.
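The weight-decay effect of L2 regularization named in the last contribution can be illustrated with a minimal sketch in plain Python. The penalty coefficient `lam`, the learning rate `lr`, the example weights, and the function names are illustrative assumptions, not values or code from the paper.

```python
def l2_penalty(weights, lam):
    """L2 penalty term added to the loss: lam * sum of squared weights."""
    return lam * sum(w * w for w in weights)


def sgd_step_with_l2(weights, grads, lr, lam):
    """One gradient step on (data loss + lam * ||w||^2).

    The penalty contributes 2 * lam * w to each gradient component, so every
    weight is shrunk toward zero in addition to the data-driven update.
    """
    return [w - lr * (g + 2.0 * lam * w) for w, g in zip(weights, grads)]


# Hypothetical fully connected layer weights (assumed values).
weights = [0.5, -1.0, 2.0]
# A zero data gradient isolates the decay effect of the penalty.
grads = [0.0, 0.0, 0.0]

penalty = l2_penalty(weights, lam=0.01)
decayed = sgd_step_with_l2(weights, grads, lr=0.1, lam=0.01)
print(penalty, decayed)
```

With a zero data gradient, each weight is simply multiplied by `1 - 2 * lr * lam`, which is why the penalty is often described as weight decay.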

2. Theoretical Background

2.1. Batch Normalization

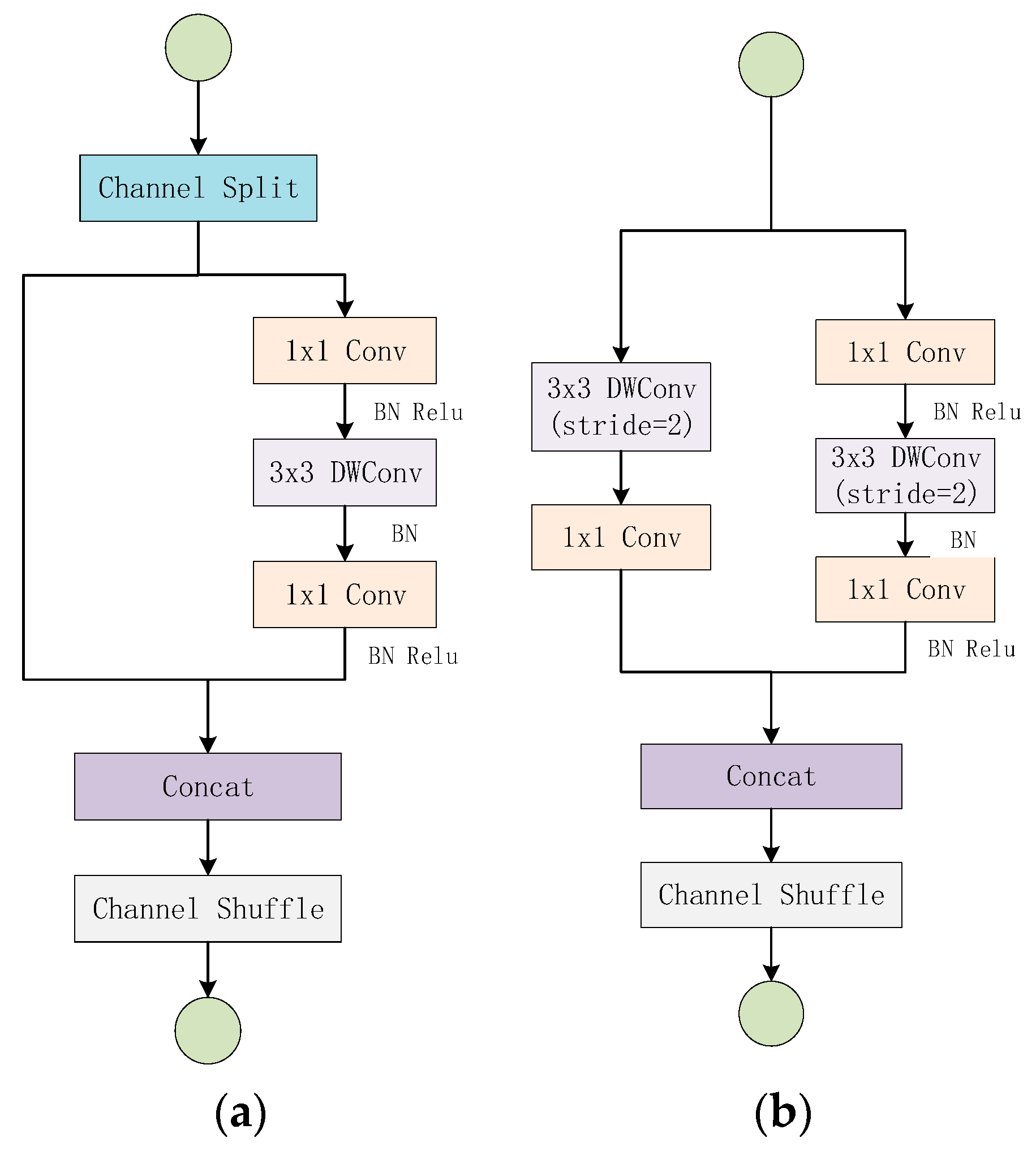
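As a rough illustration of the batch normalization transform this subsection covers, the sketch below follows the standard formulation of [32]: each feature is normalized with the mini-batch mean and variance, then scaled and shifted by learnable parameters. The names `gamma`, `beta`, and `eps` and the sample activations are assumptions made for this example.

```python
import math


def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature across a mini-batch, then scale and shift."""
    n = len(batch)
    mean = sum(batch) / n
    # Biased (population) variance, as used during training.
    var = sum((x - mean) ** 2 for x in batch) / n
    return [gamma * (x - mean) / math.sqrt(var + eps) + beta for x in batch]


# Hypothetical activations of a single feature over a mini-batch of four.
activations = [1.0, 2.0, 3.0, 4.0]
normalized = batch_norm(activations)

out_mean = sum(normalized) / len(normalized)
out_var = sum((x - out_mean) ** 2 for x in normalized) / len(normalized)
print(out_mean, out_var)
```

The output has approximately zero mean and unit variance; at inference time, running averages of the mean and variance would normally replace the mini-batch statistics.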

2.2. ShuffleNet V2
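As background, the ShuffleNet family [26,30] relies on a channel shuffle operation to mix information between channel groups. The sketch below shows that operation on a flat list of channel indices; the function name and the two-group example are assumptions for illustration, and it is not taken from the authors' implementation.

```python
def channel_shuffle(channels, groups):
    """Interleave channels drawn from `groups` equal-sized groups.

    Equivalent to reshaping the channel axis to (groups, n), transposing
    to (n, groups), and flattening again.
    """
    if len(channels) % groups != 0:
        raise ValueError("channel count must be divisible by groups")
    per_group = len(channels) // groups
    return [
        channels[g * per_group + i]
        for i in range(per_group)
        for g in range(groups)
    ]


# Six channels in two groups: [0, 1, 2] and [3, 4, 5].
shuffled = channel_shuffle([0, 1, 2, 3, 4, 5], groups=2)
print(shuffled)  # [0, 3, 1, 4, 2, 5]
```

After the shuffle, neighbouring channels come from different groups, so a following grouped or split operation sees features from both branches.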

3. Details of the Proposed Method

3.1. Data Preprocessing

3.2. Improvement of ShuffleNet V2

3.3. The Use of Optimizer

| Algorithm 1 RMSProp Algorithm |

| 1: Initialize accumulation variables |

| 2: While stopping criterion not met do |

| 3: Sample a minibatch of examples from the training set with corresponding targets |

| 4: Compute gradient: |

| 5: Accumulate squared gradient: |

| 6: Compute parameter update: |

| 7: Apply update: |

| 8: end while |
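The steps of Algorithm 1 can be sketched in plain Python as follows. The update rules use the commonly cited RMSProp form (an exponentially decaying average of squared gradients); the hyperparameter names and values (`lr`, `rho`, `delta`), the fixed step count, and the toy quadratic objective are assumptions for this example and are not taken from the paper.

```python
import math


def rmsprop(grad_fn, theta, lr=0.01, rho=0.9, delta=1e-6, steps=1000):
    """Minimize a function with RMSProp, given a function returning its gradient."""
    r = [0.0] * len(theta)  # 1: initialize accumulation variables
    for _ in range(steps):  # 2: a fixed step count stands in for the stopping criterion
        # 3-4: compute the gradient (this toy problem has no minibatch to sample)
        g = grad_fn(theta)
        # 5: accumulate the squared gradient with decay rate rho
        r = [rho * ri + (1.0 - rho) * gi * gi for ri, gi in zip(r, g)]
        # 6: compute the parameter update, scaled per coordinate
        update = [-lr * gi / math.sqrt(delta + ri) for ri, gi in zip(r, g)]
        # 7: apply the update
        theta = [ti + ui for ti, ui in zip(theta, update)]
    return theta


def quadratic_grad(theta):
    """Gradient of the toy objective f(theta) = sum((theta_i - 3)^2)."""
    return [2.0 * (t - 3.0) for t in theta]


result = rmsprop(quadratic_grad, [0.0])
print(result)
```

Because the step is divided by the root of the running squared-gradient average, the effective step size stays close to `lr` regardless of the gradient scale, so the iterate settles in a small neighbourhood of the minimum rather than converging exactly.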

4. Experimental Verification and Analysis

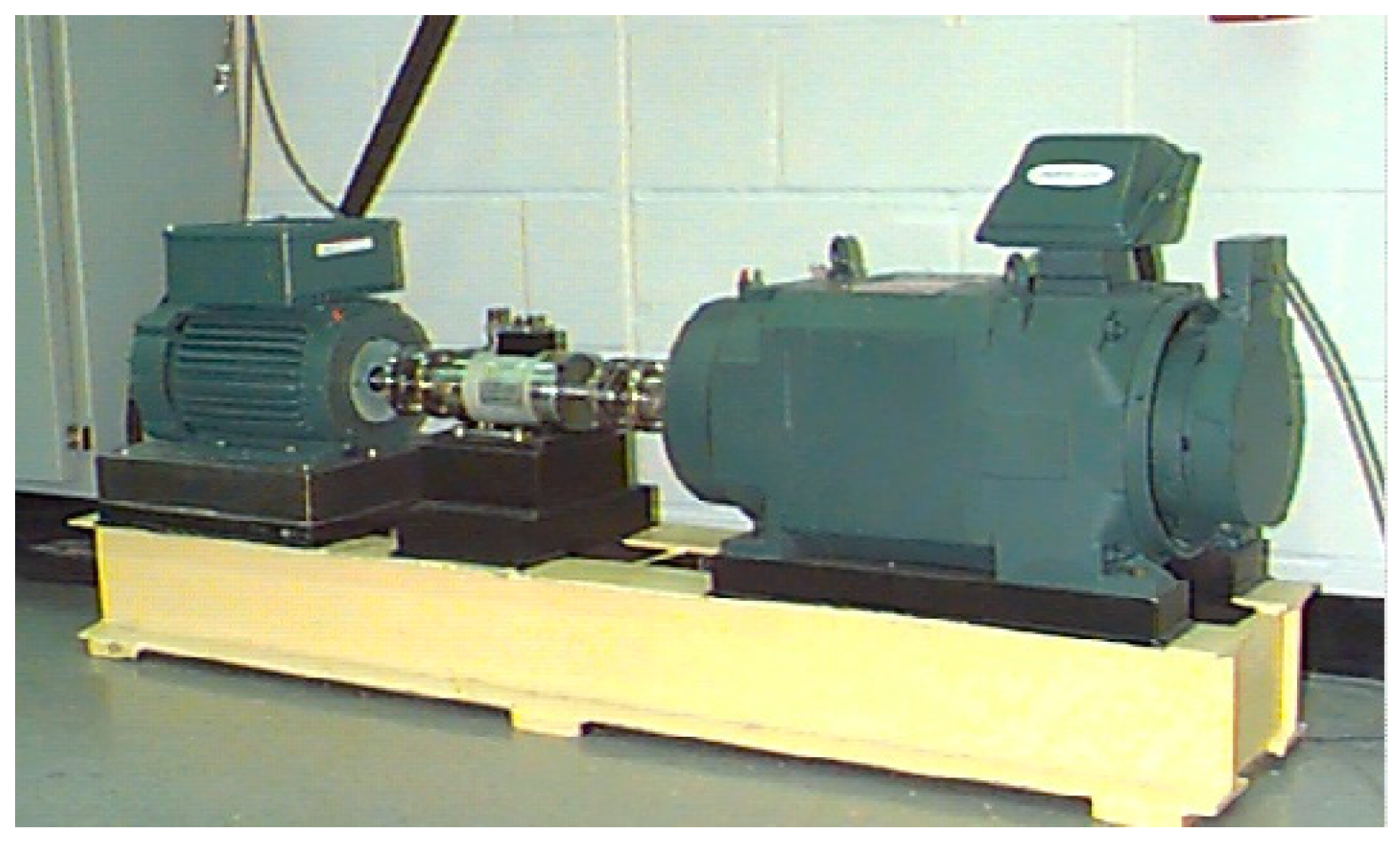

4.1. Case 1: Generalization on Different Loads in the Case Western Reserve University Dataset

4.1.1. Data Description

4.1.2. Introduction to Contrast Experiments

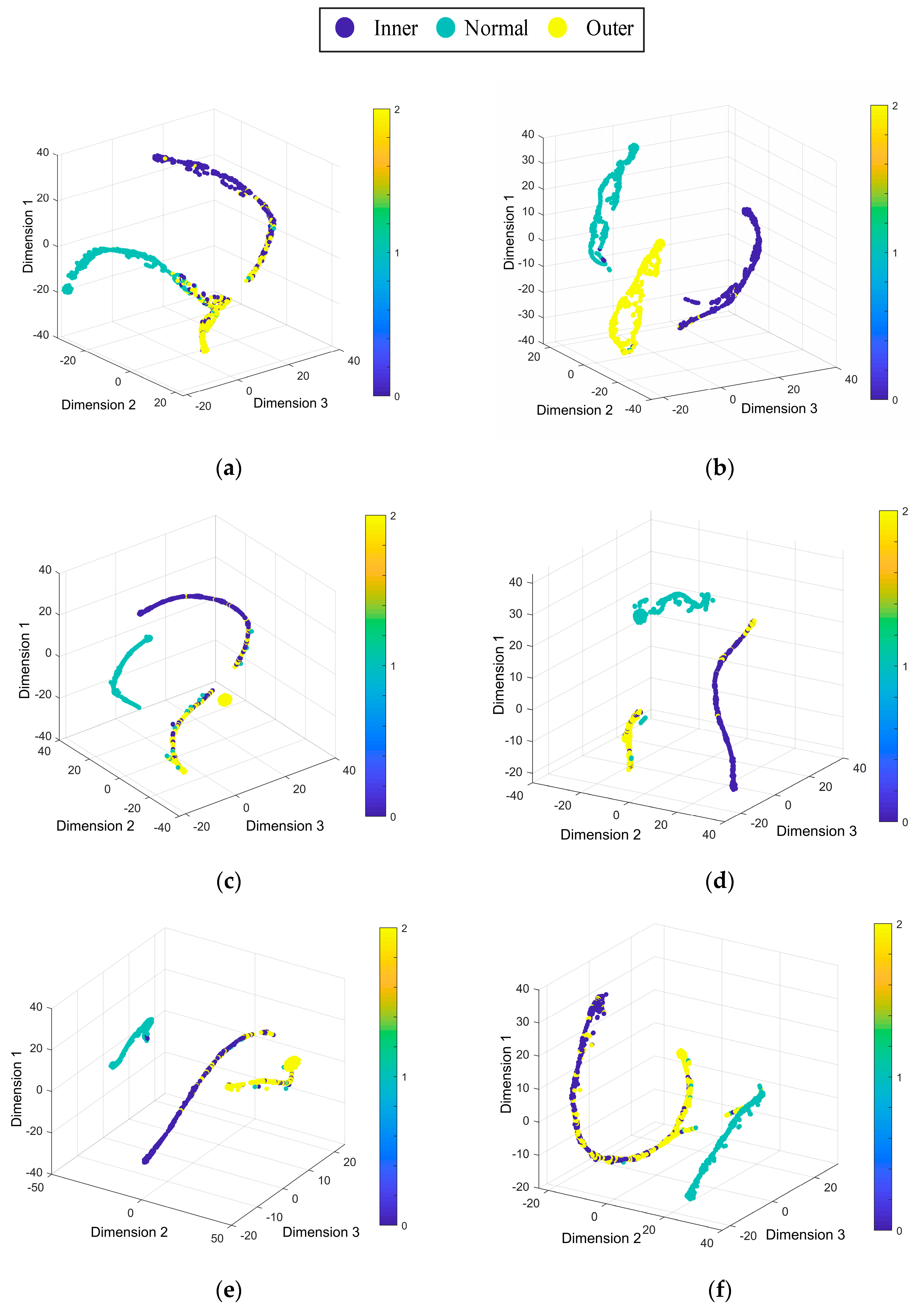

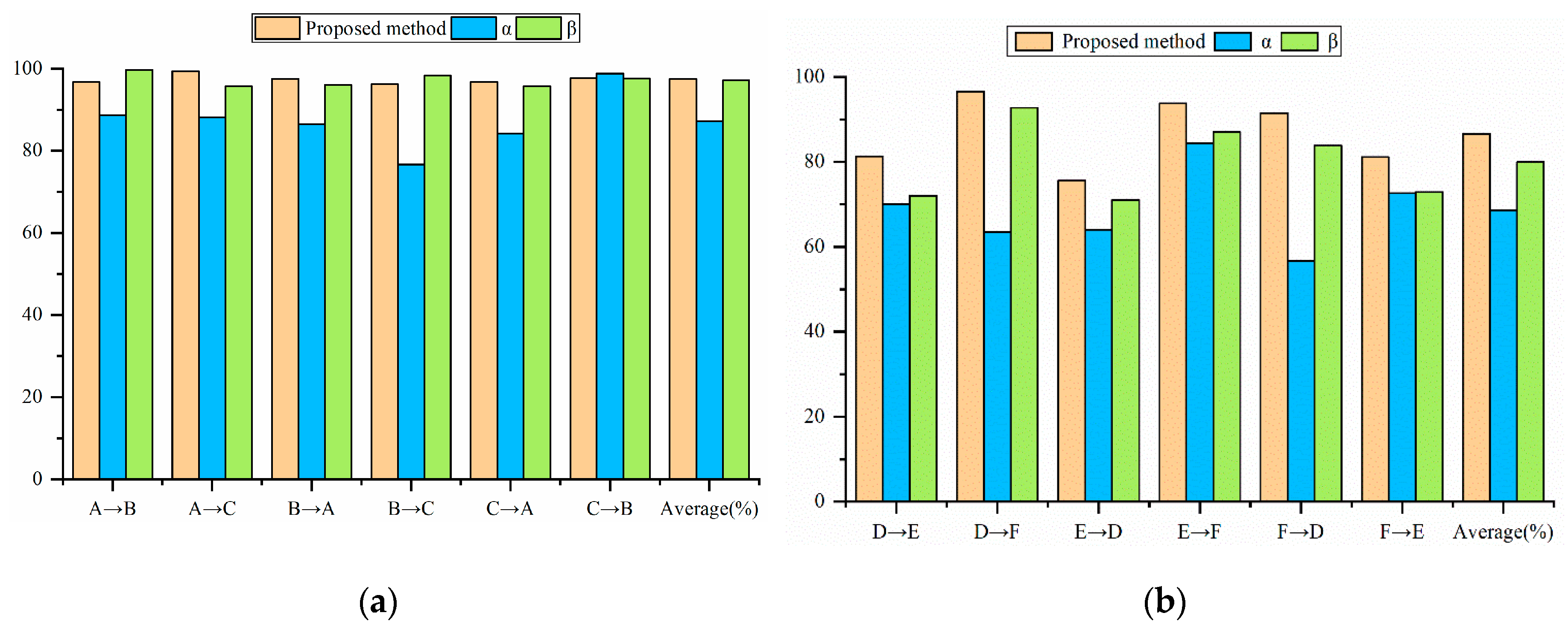

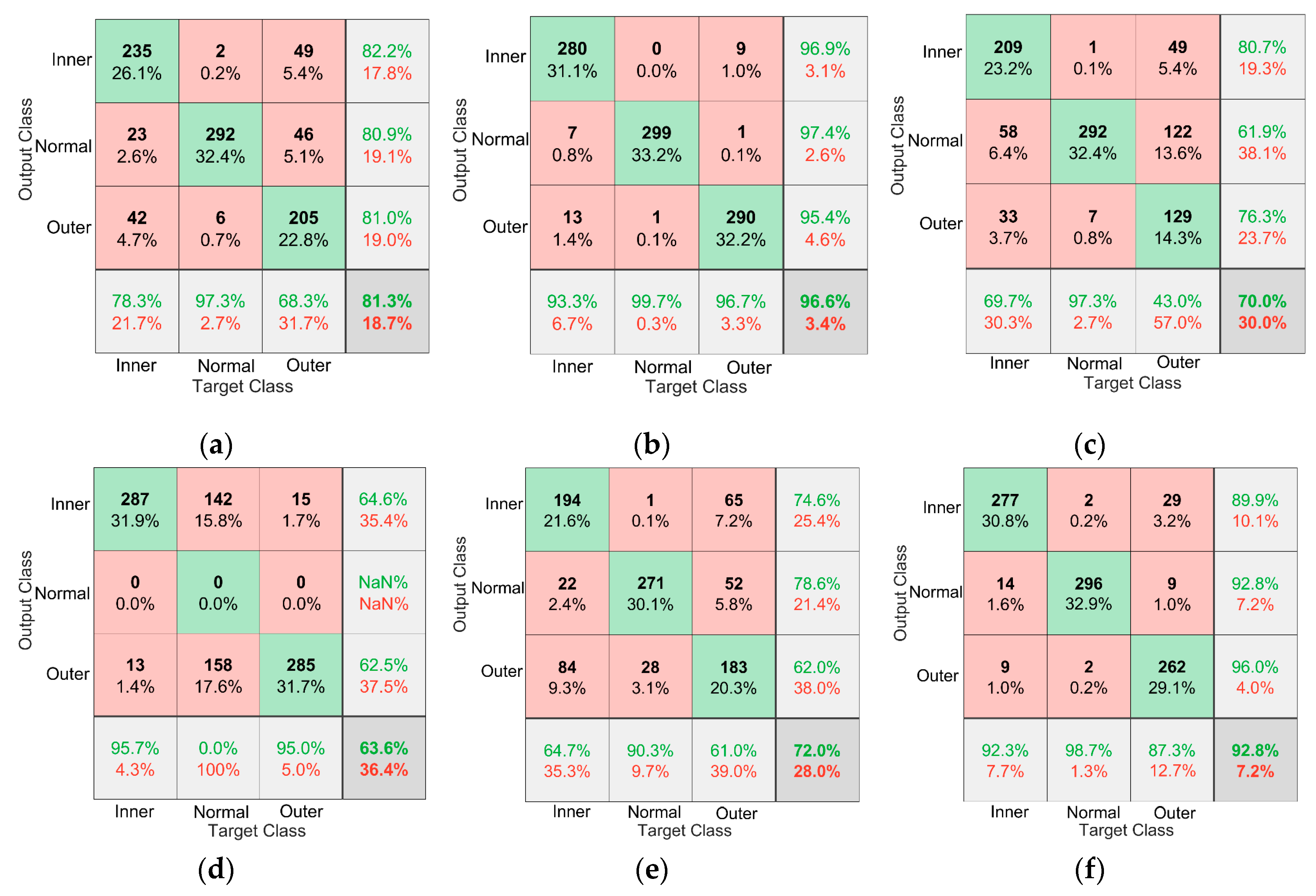

4.1.3. Diagnostic Results and Analysis

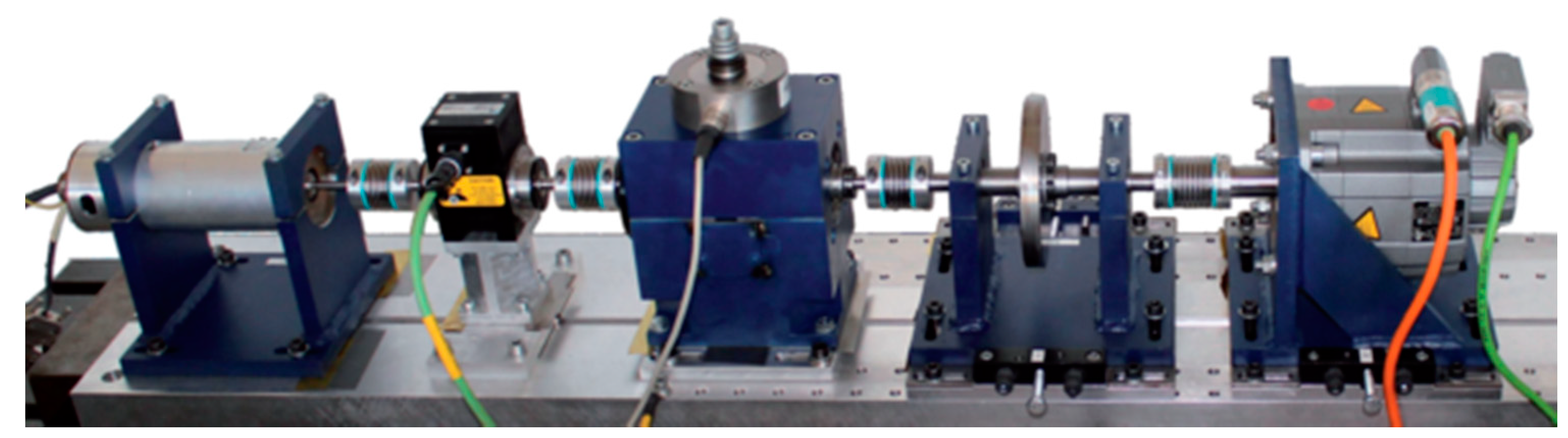

4.2. Case 2: Generalization on Different Loads in the Paderborn University Dataset

4.2.1. Data Description

4.2.2. Diagnostic Results and Analysis

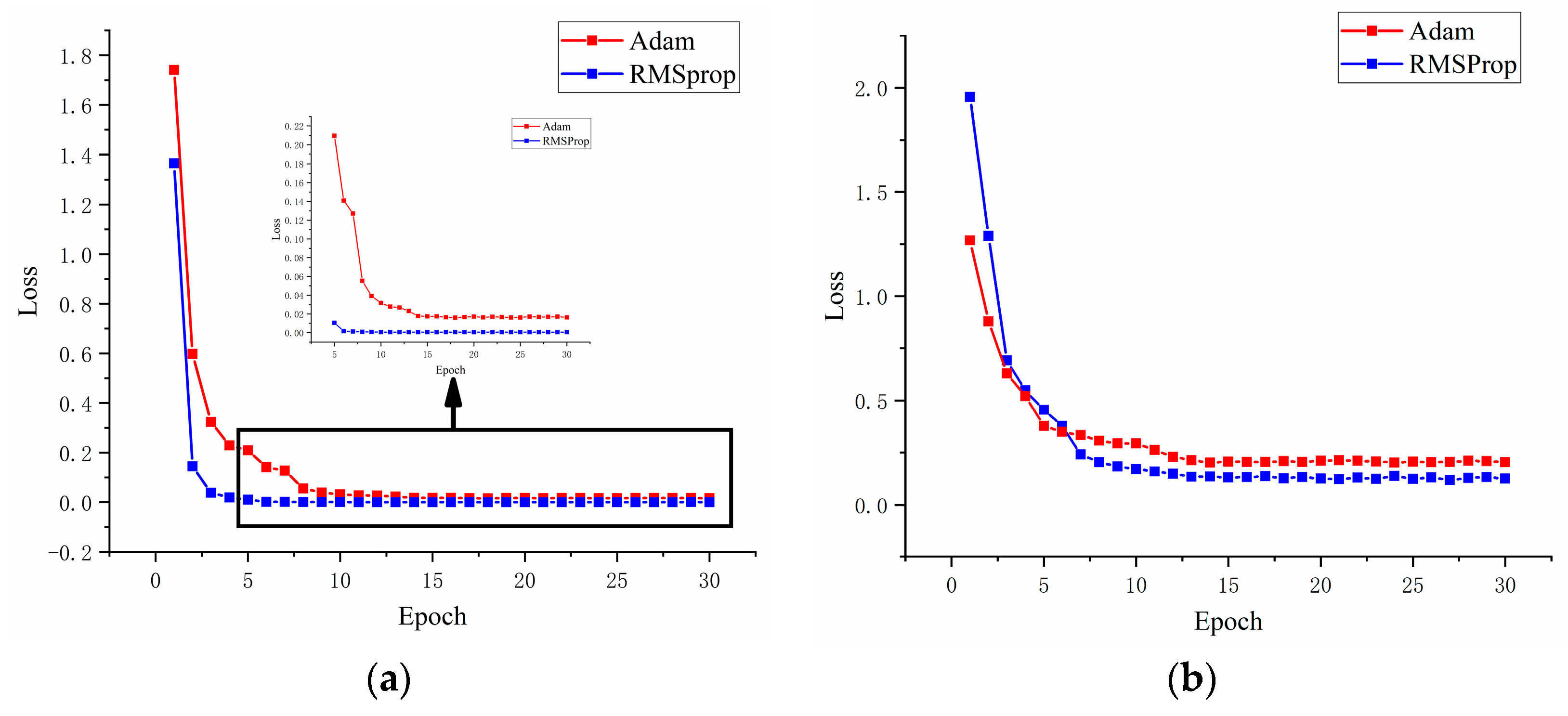

4.3. Analysis of Cost Function Optimization

4.4. Analysis of the Proposed Method

5. Conclusions

- (1) The method proposed in this study not only improves the accuracy of the model but also greatly reduces its size, reflecting the lightweight design of the model.

- (2) Traditional machine learning models can still achieve performance similar to that of deep learning when appropriate features are extracted. In a different environment, however, feature selection must be repeated; otherwise, the diagnostic accuracy decreases significantly.

- (3) Through data augmentation of the network input images and the addition of BN layers and L2 regularization to the network, the diagnostic accuracy of ShuffleNet V2 for bearings under different conditions is effectively improved, and the model shows strong generalization ability.

Author Contributions

Funding

Conflicts of Interest

References

- Zhang, D.; Entezami, M.; Stewart, E.; Roberts, C.; Yu, D. Adaptive fault feature extraction from wayside acoustic signals from train bearings. J. Sound Vib. 2018, 425, 221–238.

- Poddar, S.; Tandon, N. Detection of particle contamination in journal bearing using emission and vibration monitoring techniques. Tribol. Int. 2019, 134, 154–164.

- Zhou, X.; Zhang, H.; Hao, X.; Liao, X.; Han, Q. Investigation on thermal behavior and temperature distribution of bearing inner and outer rings. Tribol. Int. 2019, 130, 289–298.

- Cui, L.; Huang, J.; Zhang, F. Quantitative and localization diagnosis of a defective ball bearing based on vertical-horizontal synchronization signal analysis. IEEE Trans. Ind. Electron. 2017, 64, 8695–8705.

- Wang, Z.; Zhou, J.; Wang, J.; Du, W.; Wang, J.; Han, X.; He, G. A Novel Fault Diagnosis Method of Gearbox Based on Maximum Kurtosis Spectral Entropy Deconvolution. IEEE Access 2019, 7, 29520–29532.

- Yang, J.; Bai, Y.; Wang, J.; Zhao, Y. Tri-axial vibration information fusion model and its application to gear fault diagnosis in variable working conditions. Meas. Sci. Technol. 2019, 30.

- Zhao, R.; Yan, R.; Chen, Z.; Mao, K.; Wang, P.; Gao, R. Deep learning and its applications to machine health monitoring. Mech. Syst. Signal Process. 2019, 115, 213–237.

- Zhang, C.; Liu, Y.; Wan, F.; Chen, B.; Liu, J. Isolation and Identification of Compound Faults in Rotating Machinery via Adaptive Deep Filtering Technique. IEEE Access 2019, 7, 139118–139130.

- Ding, J.; Zhou, J.; Yin, Y. Fault detection and diagnosis of a wheelset-bearing system using a multi-Q-factor and multi-level tunable Q-factor wavelet transform. Measurement 2019, 143, 112–124.

- Huang, Y.; Lin, J.; Liu, Z.; Wu, W. A modified scale-space guiding variational mode decomposition for high-speed railway bearing fault diagnosis. J. Sound Vib. 2019, 444, 216–234.

- Li, X.; Yang, Y.; Pan, H.; Cheng, J.; Cheng, J. A novel deep stacking least squares support vector machine for rolling bearing fault diagnosis. Comput. Ind. 2019, 110, 36–47.

- Qiao, H.; Wang, T.; Wang, P.; Zhang, L.; Xu, M. An Adaptive Weighted Multiscale Convolutional Neural Network for Rotating Machinery Fault Diagnosis under Variable Operating Conditions. IEEE Access 2019, 7, 118954–118964.

- Liu, R.; Yang, B.; Zio, E.; Chen, X. Artificial intelligence for fault diagnosis of rotating machinery: A review. Mech. Syst. Signal Process. 2018, 108, 33–47.

- Zhuang, Z.; Lv, H.; Xu, J.; Huang, Z.; Qin, W. A Deep Learning Method for Bearing Fault Diagnosis through Stacked Residual Dilated Convolutions. Appl. Sci. 2019, 9, 1823.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the 26th Annual Conference on Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.

- Yang, B.; Lei, Y.; Jia, F.; Xing, S. An intelligent fault diagnosis approach based on transfer learning from laboratory bearings to locomotive bearings. Mech. Syst. Signal Process. 2019, 122, 692–706.

- Gong, W.; Chen, H.; Zhang, Z.; Zhang, M.; Wang, R.; Guan, C.; Wang, Q. A Novel Deep Learning Method for Intelligent Fault Diagnosis of Rotating Machinery Based on Improved CNN-SVM and Multichannel Data Fusion. Sensors 2019, 19, 1693.

- Lu, C.; Wang, Z.; Zhou, B. Intelligent fault diagnosis of rolling bearing using hierarchical convolutional network based health state classification. Adv. Eng. Inform. 2017, 32, 139–151.

- Li, X.; Zhang, W.; Ding, Q. A robust intelligent fault diagnosis method for rolling element bearings based on deep distance metric learning. Neurocomputing 2018, 310, 77–95.

- Zhang, R.; Tao, H.; Wu, L.; Guan, Y. Transfer Learning with Neural Networks for Bearing Fault Diagnosis in Changing Working Conditions. IEEE Access 2017, 5, 14347–14357.

- Guo, S.; Yang, T.; Gao, W.; Zhang, C.; Zhang, Y. An Intelligent Fault Diagnosis Method for Bearings with Variable Rotating Speed Based on Pythagorean Spatial Pyramid Pooling CNN. Sensors 2018, 18, 3857.

- Zhang, W.; Li, C.; Peng, G.; Chen, Y.; Zhang, Z. A deep convolutional neural network with new training methods for bearing fault diagnosis under noisy environment and different working load. Mech. Syst. Signal Process. 2018, 100, 439–453.

- Wang, N.; Wang, Z.; Jia, L.; Qin, Y.; Chen, X.; Zuo, Y. Adaptive Multiclass Mahalanobis Taguchi System for Bearing Fault Diagnosis under Variable Conditions. Sensors 2019, 19, 26.

- Zhu, Z.; Peng, G.; Chen, Y.; Gao, H. A convolutional neural network based on a capsule network with strong generalization for bearing fault diagnosis. Neurocomputing 2019, 323, 62–75.

- Zhang, X.; Zhang, Q.; Chen, M.; Sun, Y.; Qin, X.; Li, H. A two-stage feature selection and intelligent fault diagnosis method for rotating machinery using hybrid filter and wrapper method. Neurocomputing 2018, 275, 2426–2439.

- Ma, N.; Zhang, X.; Zheng, H.-T.; Sun, J. ShuffleNet V2: Practical Guidelines for Efficient CNN Architecture Design. arXiv 2018, arXiv:1807.11164.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

- Iandola, F.; Han, S.; Moskewicz, M.; Ashraf, K.; Dally, W.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5 MB model size. arXiv 2016, arXiv:1602.07360.

- Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. arXiv 2016, arXiv:1610.02357.

- Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. arXiv 2017, arXiv:1707.01083.

- Montserrat, D.M.; Qian, L.; Allebach, J.; Delp, E. Training object detection and recognition CNN models using data augmentation. Electron. Imaging 2017, 2017, 27–36.

- Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. arXiv 2015, arXiv:1502.03167.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. arXiv 2015, arXiv:1512.03385.

- Wang, J.; Li, S.; An, Z.; Jiang, X.; Qian, W.; Ji, S. Batch-normalized deep neural networks for achieving fast intelligent fault diagnosis of machines. Neurocomputing 2019, 329, 53–65.

- Santurkar, S.; Tsipras, D.; Ilyas, A.; Madry, A. How Does Batch Normalization Help Optimization? arXiv 2019, arXiv:1805.11604v5.

- Shorten, C.; Khoshgoftaar, T. A survey on Image Data Augmentation for Deep Learning. J. Big Data 2019, 6, 60.

- Smith, W.A.; Randall, R.B. Rolling element bearing diagnosis using the Case Western Reserve University data: A benchmark study. Mech. Syst. Signal Process. 2015, 64, 100–131.

- Maaten, L.V.d.; Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

- Chen, Y.; Peng, G.; Xie, C.; Zhang, W.; Li, C.; Liu, S. ACDIN: Bridging the gap between artificial and real bearing damages for bearing fault diagnosis. Neurocomputing 2018, 294, 61–71.

- Kingma, D.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2017, arXiv:1412.6980v9.

- Ruder, S. An overview of gradient descent optimization algorithms. arXiv 2017, arXiv:1609.04747v2.

| Layer | Output Size | Kernel Size | Stride | Repeat | Output Channels |
|---|---|---|---|---|---|
| Image | 224 × 224 | | | | 3 |
| Conv1 | 112 × 112 | 3 × 3 | 2 | 1 | 24 |
| MaxPool | 56 × 56 | 3 × 3 | 2 | | |
| Stage2 | 28 × 28 | | 2 | 1 | 116 |
| | 28 × 28 | | 1 | 3 | |
| Stage3 | 14 × 14 | | 2 | 1 | 232 |
| | 14 × 14 | | 1 | 7 | |
| Stage4 | 7 × 7 | | 2 | 1 | 464 |
| | 7 × 7 | | 1 | 3 | |
| Conv5 | 7 × 7 | 1 × 1 | 1 | 1 | 1024 |
| GlobalPool | 1 × 1 | 7 × 7 | | | |
| FC | | | | | 1000 |

| Layer | Output Size | Kernel Size | Stride | Repeat | Output Channels |
|---|---|---|---|---|---|
| Image | 64 × 64 | | | | 3 |
| Conv1 | 56 × 56 | 9 × 9 | 1 | | 24 |
| BN | 56 × 56 | | | | |
| Stage2 | 28 × 28 | | 2 | 1 | 116 |
| | 28 × 28 | | 1 | 3 | |
| Stage3 | 14 × 14 | | 2 | 1 | 232 |
| | 14 × 14 | | 1 | 7 | |
| Stage4 | 7 × 7 | | 2 | 1 | 464 |
| | 7 × 7 | | 1 | 3 | |
| Conv5 | 7 × 7 | 1 × 1 | 1 | 1 | 1024 |
| BN | 7 × 7 | | | | |
| GlobalPool | 1 × 1 | 7 × 7 | | | |
| FC | | | | | 9 |
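The spatial sizes listed for the modified network can be checked with the usual convolution output-size formula, floor((in + 2 × padding − kernel) / stride) + 1. The sketch below is only a worked check: zero padding for Conv1 and a 3 × 3 kernel with padding 1 for the stride-2 stages are assumptions chosen to be consistent with the listed sizes, not details stated in the table.

```python
def conv_output_size(in_size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution or pooling layer along one axis."""
    return (in_size + 2 * padding - kernel) // stride + 1


# Conv1: 64 x 64 input, 9 x 9 kernel, stride 1, no padding (assumed).
conv1 = conv_output_size(64, kernel=9, stride=1)  # 56

# Stride-2 downsampling at the start of each stage
# (3 x 3 kernel with padding 1 is an assumption).
stage2 = conv_output_size(conv1, kernel=3, stride=2, padding=1)   # 28
stage3 = conv_output_size(stage2, kernel=3, stride=2, padding=1)  # 14
stage4 = conv_output_size(stage3, kernel=3, stride=2, padding=1)  # 7

print(conv1, stage2, stage3, stage4)  # 56 28 14 7
```

Under these assumptions, the 9 × 9 first convolution reduces the 64 × 64 input to 56 × 56, the same map size that the original network reaches after Conv1 and MaxPool on a 224 × 224 input, so the later stages keep their original output sizes.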

| Motor Speed (rpm) | Motor Load (HP) | Fault Diameter (mils) | Name of Setting |
|---|---|---|---|
| 1772 | 1 | 7, 14, 21 | A |
| 1750 | 2 | 7, 14, 21 | B |
| 1730 | 3 | 7, 14, 21 | C |

| Model | Training Time (s) |
|---|---|
| Proposed method | 805.6 |
| MobileNet | 978.4 |
| Vgg16 | 810.8 |
| ResNet | 1401.1 |
| ICN | 1856.0 |

| Model | | | | | | | Model Size (MB) | Average (%) |
|---|---|---|---|---|---|---|---|---|
| Proposed method | 96.80 | 99.40 | 97.55 | 96.30 | 96.30 | 97.80 | 16 | 97.42 |
| MobileNet | 90.00 | 98.40 | 95.00 | 96.30 | 89.80 | 96.80 | 12.5 | 94.38 |
| Vgg16 | 99.50 | 85.30 | 88.80 | 93.60 | 74.30 | 73.70 | 58.5 | 84.20 |
| ResNet | 95.44 | 94.33 | 92.33 | 91.88 | 68 | 93.99 | 20.3 | 89.33 |
| ICN | 98.23 | 97.17 | 99.80 | 94.71 | 94.93 | 98.10 | 56.9 | 97.15 |
| kNN | 83.27 | 87.33 | 78.57 | 83.17 | 97.80 | 91.97 | 875 | 87.02 |
| SVM | 71.93 | 72.90 | 76.33 | 75.30 | 98.03 | 94.77 | 145 | 81.55 |

| Rotating Speed (rpm) | Running State | Load Torque (Nm) | Radial Force (N) | Name of Setting |
|---|---|---|---|---|
| 1500 | healthy, inner fault, outer fault | 0.1 | 1000 | D |
| 1500 | healthy, inner fault, outer fault | 0.7 | 400 | E |
| 1500 | healthy, inner fault, outer fault | 0.7 | 1000 | F |

| Model | | | | | | | Model Size (MB) | Average (%) |
|---|---|---|---|---|---|---|---|---|
| Proposed method | 81.30 | 96.55 | 75.55 | 93.77 | 91.44 | 81.11 | 16 | 86.62 |
| MobileNet | 66.70 | 78.77 | 72.66 | 86.44 | 70.22 | 76.40 | 12.5 | 75.19 |
| Vgg16 | 62.20 | 89.77 | 87.11 | 91.00 | 84.88 | 83.66 | 58.5 | 83.10 |
| ResNet | 82.55 | 95.22 | 92.22 | 79.55 | 81.88 | 79.33 | 20.3 | 85.13 |
| ICN | 80.67 | 96.97 | 70.23 | 70.67 | 94.27 | 79.50 | 53.3 | 82.05 |
| kNN | 72.13 | 93.27 | 60.47 | 58.60 | 90.67 | 74.13 | 594 | 74.88 |
| SVM | 81.20 | 92.97 | 72.13 | 77.23 | 93.00 | 72.77 | 281 | 81.55 |

| Model | Training Time (s) |
|---|---|
| Proposed method | 557.1 |
| MobileNet | 678.9 |
| Vgg16 | 681.9 |
| ResNet | 910.0 |
| ICN | 569.6 |

| Model | | | | | | | Average (%) |
|---|---|---|---|---|---|---|---|
| Proposed method | 96.80 | 99.40 | 97.55 | 96.30 | 96.30 | 97.80 | 97.42 |
| | 88.70 | 88.11 | 86.5 | 76.66 | 84.11 | 98.77 | 87.14 |
| | 99.70 | 95.70 | 96.00 | 98.30 | 95.70 | 97.66 | 97.16 |

| Model | | | | | | | Average (%) |
|---|---|---|---|---|---|---|---|
| Proposed method | 81.30 | 96.55 | 75.55 | 93.77 | 91.44 | 81.11 | 86.62 |
| | 70.00 | 63.55 | 64.00 | 84.33 | 56.70 | 72.60 | 68.53 |
| | 72.00 | 92.77 | 71.00 | 87.00 | 83.77 | 72.88 | 79.90 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Liu, H.; Yao, D.; Yang, J.; Li, X. Lightweight Convolutional Neural Network and Its Application in Rolling Bearing Fault Diagnosis under Variable Working Conditions. Sensors 2019, 19, 4827. https://doi.org/10.3390/s19224827
