Deep Learning on Multi Sensor Data for Counter UAV Applications—A Systematic Review

Abstract

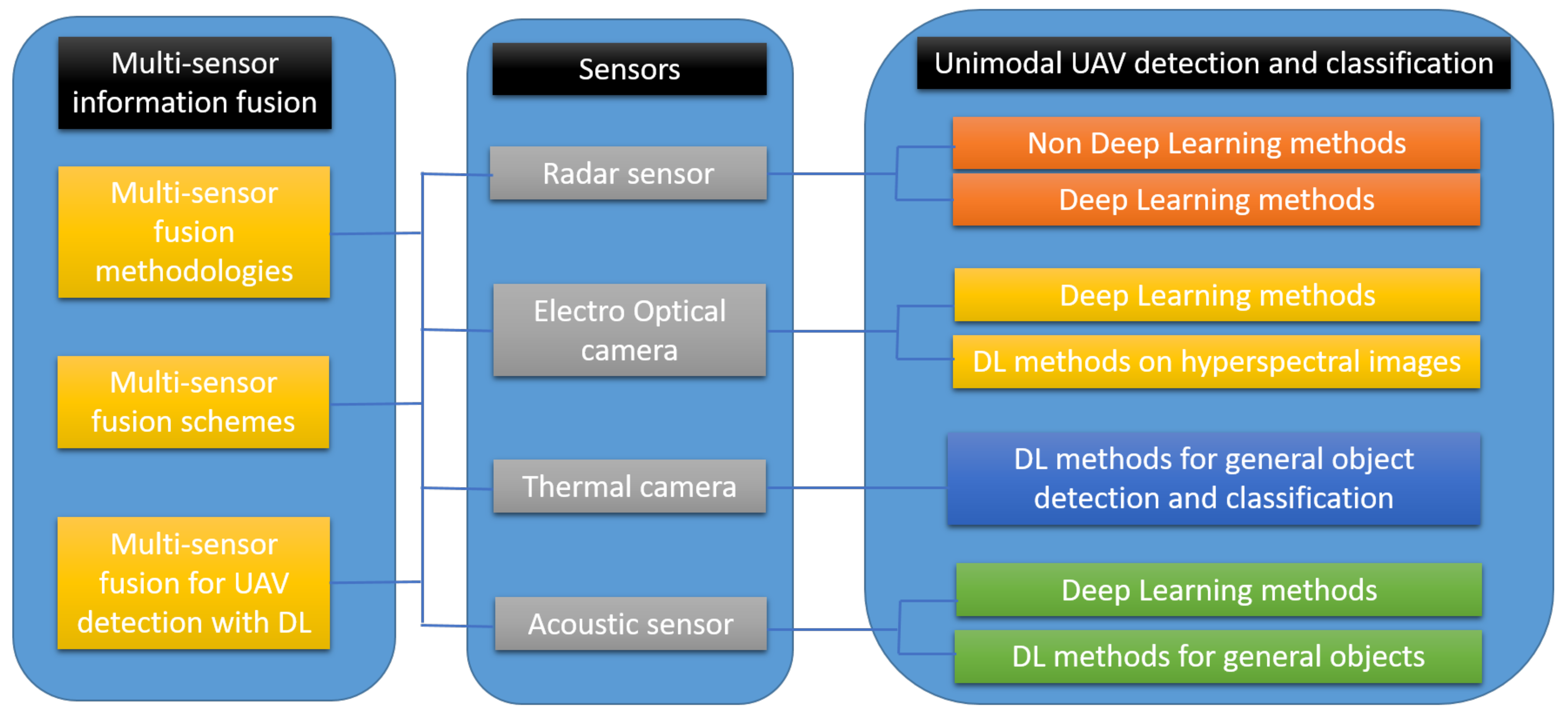

1. Introduction

2. Radar Sensor

2.1. Traditional UAV Detection and Classification Methods for Radar Sensors

2.1.1. Micro-Doppler-Based Methods

2.1.2. Surveillance Radars and Motion Based Methods

2.2. Deep Learning Based UAV Detection and Classification Methods for Radar Sensors

3. Optical Sensor

Hyperspectral Image Sensors

4. Thermal Sensor

4.1. Deep Learning Based Methods Using Thermal Imagery

4.1.1. Detection

4.1.2. Classification

4.1.3. Feature Extraction

4.1.4. Domain Adaptation

5. Acoustic Sensor

6. Multi-Sensor Fusion

6.1. Multi-Sensor Fusion Methodologies

6.2. Multi-Sensor Data Fusion Schemes

6.3. Multi-Sensor UAV Detection

6.4. UAV Detection Using Multi-Sensor Artificial Intelligence Enabled Methods

7. Discussion and Recommendations

7.1. Impact of Reported Studies

7.1.1. Radar Sensor

7.1.2. Optical Sensor

7.1.3. Thermal Sensor

7.1.4. Acoustic Sensor

7.1.5. Multi-Sensor Information Fusion

7.2. C-UAV System Recommendation

8. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Group, T. World Civil Unmanned Aerial Systems Market Profile and Forecast. 2017. Available online: http://tealgroup.com/images/TGCTOC/WCUAS2017TOC_EO.pdf (accessed on 24 April 2019).

- Research, G.V. Commercial UAV Market Analysis By Product (Fixed Wing, Rotary Blade, Nano, Hybrid), By Application (Agriculture, Energy, Government, Media and Entertainment) In addition, Segment Forecasts to 2022. 2016. Available online: https://www.grandviewresearch.com/industry-analysis/commercial-uav-market (accessed on 24 April 2019).

- Guardian, T. Gatwick Drone Disruption Cost Airport Just £1.4 m. 2018. Available online: https://www.theguardian.com/uk-news/2019/jun/18/gatwick-drone-disruption-cost-airport-just-14m (accessed on 6 May 2019).

- Anti-Drone. Anti-Drone System Overview and Technology Comparison. 2016. Available online: https://anti-drone.eu/blog/anti-drone-publications/anti-drone-system-overview-and-technology-comparison.html (accessed on 6 May 2019).

- Liggins II, M.; Hall, D.; Llinas, J. Handbook of Multisensor Data Fusion: Theory and Practice; CRC Press: Boca Raton, FL, USA, 2017. [Google Scholar]

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436. [Google Scholar] [CrossRef]

- Namatēvs, I. Deep convolutional neural networks: Structure, feature extraction and training. Inf. Technol. Manag. Sci. 2017, 20, 40–47. [Google Scholar] [CrossRef]

- Zhao, Z.Q.; Zheng, P.; Xu, S.T.; Wu, X. Object detection with deep learning: A review. arXiv 2019, arXiv:1807.05511. [Google Scholar] [CrossRef] [PubMed]

- Fiaz, M.; Mahmood, A.; Jung, S.K. Tracking Noisy Targets: A Review of Recent Object Tracking Approaches. arXiv 2018, arXiv:1802.03098. [Google Scholar]

- Zhu, X.X.; Tuia, D.; Mou, L.; Xia, G.S.; Zhang, L.; Xu, F.; Fraundorfer, F. Deep learning in remote sensing: A comprehensive review and list of resources. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–36. [Google Scholar] [CrossRef]

- Google Scholar Search. Available online: https://scholar.google.gr/schhp?hl=en (accessed on 15 June 2019).

- Khaleghi, B.; Khamis, A.; Karray, F.O.; Razavi, S.N. Multisensor data fusion: A review of the state-of-the-art. Inf. Fusion 2013, 14, 28–44. [Google Scholar] [CrossRef]

- Hu, D.; Wang, C.; Nie, F.; Li, X. Dense Multimodal Fusion for Hierarchically Joint Representation. In Proceedings of the ICASSP 2019–2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 3941–3945. [Google Scholar]

- Liu, K.; Li, Y.; Xu, N.; Natarajan, P. Learn to Combine Modalities in Multimodal Deep Learning. arXiv 2018, arXiv:1805.11730. [Google Scholar]

- Knott, E.F.; Schaeffer, J.F.; Tulley, M.T. Radar Cross Section; SciTech Publishing: New York, NY, USA, 2004. [Google Scholar]

- Molchanov, P.; Harmanny, R.I.; de Wit, J.J.; Egiazarian, K.; Astola, J. Classification of small UAVs and birds by micro-Doppler signatures. Int. J. Microw. Wirel. Technol. 2014, 6, 435–444. [Google Scholar] [CrossRef] [Green Version]

- Tait, P. Introduction to Radar Target Recognition; IET: London, UK, 2005; Volume 18. [Google Scholar]

- Jokanovic, B.; Amin, M.; Ahmad, F. Radar fall motion detection using deep learning. In Proceedings of the 2016 IEEE Radar Conference (RadarConf), Philadelphia, PA, USA, 1–6 May 2016; pp. 1–6. [Google Scholar]

- De Wit, J.M.; Harmanny, R.; Premel-Cabic, G. Micro-Doppler analysis of small UAVs. In Proceedings of the 2012 9th European Radar Conference, Amsterdam, The Netherlands, 31 October–2 November 2012; pp. 210–213. [Google Scholar]

- Harmanny, R.; De Wit, J.; Cabic, G.P. Radar micro-Doppler feature extraction using the spectrogram and the cepstrogram. In Proceedings of the 2014 11th European Radar Conference, Cincinnati, OH, USA, 11–13 October 2014; pp. 165–168. [Google Scholar]

- De Wit, J.; Harmanny, R.; Molchanov, P. Radar micro-Doppler feature extraction using the singular value decomposition. In Proceedings of the 2014 International Radar Conference, Lille, France, 13–17 October 2014; pp. 1–6. [Google Scholar]

- Fuhrmann, L.; Biallawons, O.; Klare, J.; Panhuber, R.; Klenke, R.; Ender, J. Micro-Doppler analysis and classification of UAVs at Ka band. In Proceedings of the 2017 18th International Radar Symposium (IRS), Prague, Czech Republic, 28–30 June 2017; pp. 1–9. [Google Scholar]

- Ren, J.; Jiang, X. Regularized 2D complex-log spectral analysis and subspace reliability analysis of micro-Doppler signature for UAV detection. Pattern Recognit. 2017, 69, 225–237. [Google Scholar] [CrossRef]

- Oh, B.S.; Guo, X.; Wan, F.; Toh, K.A.; Lin, Z. Micro-Doppler mini-UAV classification using empirical-mode decomposition features. IEEE Geosci. Remote Sens. Lett. 2017, 15, 227–231. [Google Scholar] [CrossRef]

- Ma, X.; Oh, B.S.; Sun, L.; Toh, K.A.; Lin, Z. EMD-Based Entropy Features for micro-Doppler Mini-UAV Classification. In Proceedings of the 2018 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018; pp. 1295–1300. [Google Scholar]

- Sun, Y.; Fu, H.; Abeywickrama, S.; Jayasinghe, L.; Yuen, C.; Chen, J. Drone classification and localization using micro-doppler signature with low-frequency signal. In Proceedings of the 2018 IEEE International Conference on Communication Systems (ICCS), Chengdu, China, 19–21 December 2018; pp. 413–417. [Google Scholar]

- Fioranelli, F.; Ritchie, M.; Griffiths, H.; Borrion, H. Classification of loaded/unloaded micro-drones using multistatic radar. Electron. Lett. 2015, 51, 1813–1815. [Google Scholar] [CrossRef]

- Hoffmann, F.; Ritchie, M.; Fioranelli, F.; Charlish, A.; Griffiths, H. Micro-Doppler based detection and tracking of UAVs with multistatic radar. In Proceedings of the 2016 IEEE Radar Conference (RadarConf), Philadelphia, PA, USA, 1–6 May 2016; pp. 1–6. [Google Scholar]

- Zhang, P.; Yang, L.; Chen, G.; Li, G. Classification of drones based on micro-Doppler signatures with dual-band radar sensors. In Proceedings of the 2017 Progress in Electromagnetics Research Symposium-Fall (PIERS-FALL), Singapore, 20 July 2017; pp. 638–643. [Google Scholar]

- Chen, W.; Liu, J.; Li, J. Classification of UAV and bird target in low-altitude airspace with surveillance radar data. Aeronaut. J. 2019, 123, 191–211. [Google Scholar] [CrossRef]

- Messina, M.; Pinelli, G. Classification of Drones with a Surveillance Radar Signal. In Proceedings of the 12th International Conference on Computer Vision Systems (ICVS), Thessaloniki, Greece, 23–25 September 2019. [Google Scholar]

- Torvik, B.; Olsen, K.E.; Griffiths, H. Classification of birds and UAVs based on radar polarimetry. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1305–1309. [Google Scholar] [CrossRef]

- Kim, B.K.; Kang, H.S.; Park, S.O. Drone classification using convolutional neural networks with merged Doppler images. IEEE Geosci. Remote Sens. Lett. 2016, 14, 38–42. [Google Scholar] [CrossRef]

- Mendis, G.J.; Randeny, T.; Wei, J.; Madanayake, A. Deep learning based doppler radar for micro UAS detection and classification. In Proceedings of the MILCOM 2016-2016 IEEE Military Communications Conference, Baltimore, MD, USA, 1–3 November 2016; pp. 924–929. [Google Scholar]

- Wang, L.; Tang, J.; Liao, Q. A Study on Radar Target Detection Based on Deep Neural Networks. IEEE Sens. Lett. 2019. [Google Scholar] [CrossRef]

- Stamatios Samaras, V.M.; Anastasios Dimou, D.Z.; Daras, P. UAV classification with deep learning using surveillance radar data. In Proceedings of the 12th International Conference on Computer Vision Systems (ICVS), Thessaloniki, Greece, 23–25 September 2019. [Google Scholar]

- Regev, N.; Yoffe, I.; Wulich, D. Classification of single and multi propelled miniature drones using multilayer perceptron artificial neural network. In Proceedings of the International Conference on Radar Systems (Radar 2017), Belfast, UK, 23–26 October 2017. [Google Scholar]

- Habermann, D.; Dranka, E.; Caceres, Y.; do Val, J.B. Drones and helicopters classification using point clouds features from radar. In Proceedings of the 2018 IEEE Radar Conference (RadarConf18), Oklahoma City, OK, USA, 23–27 April 2018; pp. 0246–0251. [Google Scholar]

- Mohajerin, N.; Histon, J.; Dizaji, R.; Waslander, S.L. Feature extraction and radar track classification for detecting UAVs in civillian airspace. In Proceedings of the 2014 IEEE Radar Conference, Cincinnati, OH, USA, 19–23 May 2014; pp. 0674–0679. [Google Scholar]

- Chen, V.C.; Li, F.; Ho, S.S.; Wechsler, H. Micro-Doppler effect in radar: Phenomenon, model, and simulation study. IEEE Trans. Aerosp. Electron. Syst. 2006, 42, 2–21. [Google Scholar] [CrossRef]

- Al Hadhrami, E.; Al Mufti, M.; Taha, B.; Werghi, N. Transfer learning with convolutional neural networks for moving target classification with micro-Doppler radar spectrograms. In Proceedings of the 2018 International Conference on Artificial Intelligence and Big Data (ICAIBD), Chengdu, China, 26–28 May 2018; pp. 148–154. [Google Scholar]

- Al Hadhrami, E.; Al Mufti, M.; Taha, B.; Werghi, N. Classification of ground moving radar targets using convolutional neural network. In Proceedings of the 2018 22nd International Microwave and Radar Conference (MIKON), Poznań, Poland, 15–17 May 2018; pp. 127–130. [Google Scholar]

- Al Hadhrami, E.; Al Mufti, M.; Taha, B.; Werghi, N. Ground moving radar targets classification based on spectrogram images using convolutional neural networks. In Proceedings of the 2018 19th International Radar Symposium (IRS), Bonn, Germany, 20–22 June 2018; pp. 1–9. [Google Scholar]

- Chen, X.; Guan, J.; Bao, Z.; He, Y. Detection and extraction of target with micromotion in spiky sea clutter via short-time fractional Fourier transform. IEEE Trans. Geosci. Remote Sens. 2013, 52, 1002–1018. [Google Scholar] [CrossRef]

- Tahmoush, D.; Silvious, J. Radar micro-Doppler for long range front-view gait recognition. In Proceedings of the 2009 IEEE 3rd International Conference on Biometrics: Theory, Applications, and Systems, Washington, DC, USA, 28–30 September 2009; pp. 1–6. [Google Scholar]

- Raj, R.; Chen, V.; Lipps, R. Analysis of radar human gait signatures. IET Signal Process. 2010, 4, 234–244. [Google Scholar] [CrossRef]

- Li, Y.; Peng, Z.; Pal, R.; Li, C. Potential Active Shooter Detection Based on Radar Micro-Doppler and Range–Doppler Analysis Using Artificial Neural Network. IEEE Sens. J. 2018, 19, 1052–1063. [Google Scholar] [CrossRef]

- Kim, Y.; Ling, H. Human activity classification based on micro-Doppler signatures using a support vector machine. IEEE Trans. Geosci. Remote Sens. 2009, 47, 1328–1337. [Google Scholar]

- Ritchie, M.; Fioranelli, F.; Griffiths, H.; Torvik, B. Micro-drone RCS analysis. In Proceedings of the 2015 IEEE Radar Conference, Arlington, VA, USA, 10–15 May 2015; pp. 452–456. [Google Scholar]

- Bogert, B.P. The quefrency alanysis of time series for echoes; Cepstrum, pseudo-autocovariance, cross-cepstrum and saphe cracking. Time Ser. Anal. 1963, 15, 209–243. [Google Scholar]

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297. [Google Scholar] [CrossRef]

- Rish, I. An empirical study of the naive Bayes classifier. In Proceedings of the IJCAI 2001 Workshop on Empirical Methods in Artificial Intelligence, Seattle, WA, USA, 4 August 2001; Volume 3, pp. 41–46. [Google Scholar]

- Huang, N.E.; Shen, Z.; Long, S.R.; Wu, M.C.; Shih, H.H.; Zheng, Q.; Yen, N.C.; Tung, C.C.; Liu, H.H. The empirical mode decomposition and the Hilbert spectrum for nonlinear and non-stationary time series analysis. Proc. R. Soc. Lond. Ser. A Math. Phys. Eng. Sci. 1998, 454, 903–995. [Google Scholar] [CrossRef]

- Hastie, T.; Tibshirani, R.; Friedman, J. The Elements of Statistical Learning: Data Mining, Inference, and Prediction; Springer Series in Statistics; Springer: Berlin/Heidelberg, Germany, 2009. [Google Scholar]

- Patel, J.S.; Fioranelli, F.; Anderson, D. Review of radar classification and RCS characterisation techniques for small UAVs ordrones. IET Radar Sonar Navig. 2018, 12, 911–919. [Google Scholar] [CrossRef]

- Ghadaki, H.; Dizaji, R. Target track classification for airport surveillance radar (ASR). In Proceedings of the 2006 IEEE Conference on Radar, Verona, NY, USA, 24–27 April 2006. [Google Scholar]

- Lundén, J.; Koivunen, V. Deep learning for HRRP-based target recognition in multistatic radar systems. In Proceedings of the 2016 IEEE Radar Conference (RadarConf), Philadelphia, PA, USA, 2–6 May 2016; pp. 1–6. [Google Scholar]

- Wan, J.; Chen, B.; Xu, B.; Liu, H.; Jin, L. Convolutional neural networks for radar HRRP target recognition and rejection. EURASIP J. Adv. Signal Process. 2019, 2019, 5. [Google Scholar] [CrossRef] [Green Version]

- Guo, C.; He, Y.; Wang, H.; Jian, T.; Sun, S. Radar HRRP Target Recognition Based on Deep One-Dimensional Residual-Inception Network. IEEE Access 2019, 7, 9191–9204. [Google Scholar] [CrossRef]

- El Housseini, A.; Toumi, A.; Khenchaf, A. Deep Learning for target recognition from SAR images. In Proceedings of the 2017 Seminar on Detection Systems Architectures and Technologies (DAT), Algiers, Algeria, 20–22 February 2017; pp. 1–5. [Google Scholar]

- Chen, S.; Wang, H. SAR target recognition based on deep learning. In Proceedings of the 2014 International Conference on Data Science and Advanced Analytics (DSAA), Shanghai, China, 30 October–2 November 2014; pp. 541–547. [Google Scholar]

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9. [Google Scholar]

- Hinton, G.E.; Osindero, S.; Teh, Y.W. A fast learning algorithm for deep belief nets. Neural Comput. 2006, 18, 1527–1554. [Google Scholar] [CrossRef]

- Freund, Y.; Schapire, R.E. Large margin classification using the perceptron algorithm. Mach. Learn. 1999, 37, 277–296. [Google Scholar] [CrossRef]

- Granström, K.; Schön, T.B.; Nieto, J.I.; Ramos, F.T. Learning to close loops from range data. Int. J. Robot. Res. 2011, 30, 1728–1754. [Google Scholar] [CrossRef]

- Dizaji, R.M.; Ghadaki, H. Classification System for Radar and Sonar Applications. U.S. Patent 7,567,203, 28 July 2009. [Google Scholar]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105. [Google Scholar] [CrossRef]

- Saqib, M.; Khan, S.D.; Sharma, N.; Blumenstein, M. A study on detecting drones using deep convolutional neural networks. In Proceedings of the 2017 14th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Lecce, Italy, 29 August–1 September 2017. [Google Scholar]

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556. [Google Scholar]

- Zeiler, M.D.; Fergus, R. Visualizing and understanding convolutional networks. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 818–833. [Google Scholar]

- Mrunalini Nalamati, A.K.; Muhammed Saqib, N.S.; Blumenstein, M. Drone Detection in Long-range Surveillance Videos. In Proceedings of the 2019 16th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Taiwan, China, 18–21 September 2019. [Google Scholar]

- Schumann, A.; Sommer, L.; Klatte, J.; Schuchert, T.; Beyerer, J. Deep cross-domain flying object classification for robust UAV detection. In Proceedings of the 2017 14th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Lecce, Italy, 29 August–1 September 2017; pp. 1–6. [Google Scholar]

- Craye, C.; Ardjoune, S. Spatio-temporal Semantic Segmentation for Drone Detection. In Proceedings of the 2019 16th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Taiwan, China, 18–21 September 2019. [Google Scholar]

- Vasileios Magoulianitis, D.A.; Anastasios Dimou, D.Z.; Daras, P. Does Deep Super-Resolution Enhance UAV Detection. In Proceedings of the 2019 16th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Taiwan, China, 18–21 September 2019. [Google Scholar]

- Opromolla, R.; Fasano, G.; Accardo, D. A Vision-Based Approach to UAV Detection and Tracking in Cooperative Applications. Sensors 2018, 18, 3391. [Google Scholar] [CrossRef]

- Aker, C.; Kalkan, S. Using deep networks for drone detection. In Proceedings of the 2017 14th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Lecce, Italy, 29 August–1 September 2017; pp. 1–6. [Google Scholar]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 779–788. [Google Scholar]

- Rozantsev, A.; Lepetit, V.; Fua, P. Detecting flying objects using a single moving camera. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 879–892. [Google Scholar] [CrossRef]

- Gökçe, F.; Üçoluk, G.; Şahin, E.; Kalkan, S. Vision-based detection and distance estimation of micro unmanned aerial vehicles. Sensors 2015, 15, 23805–23846. [Google Scholar] [CrossRef]

- Chang, C.I. Hyperspectral Imaging: Techniques for Spectral Detection and Classification; Springer Science & Business Media: New York, NY, USA, 2003; Volume 1. [Google Scholar]

- Wang, D.; Vinson, R.; Holmes, M.; Seibel, G.; Bechar, A.; Nof, S.; Tao, Y. Early Detection of Tomato Spotted Wilt Virus by Hyperspectral Imaging and Outlier Removal Auxiliary Classifier Generative Adversarial Nets (OR-AC-GAN). Sci. Rep. 2019, 9, 4377. [Google Scholar] [CrossRef]

- Lu, Y.; Perez, D.; Dao, M.; Kwan, C.; Li, J. Deep learning with synthetic hyperspectral images for improved soil detection in multispectral imagery. In Proceedings of the IEEE Ubiquitous Computing, Electronics & Mobile Communication Conference, New York, NY, USA, 20–22 October 2018; pp. 8–10. [Google Scholar]

- Liang, J.; Zhou, J.; Tong, L.; Bai, X.; Wang, B. Material based salient object detection from hyperspectral images. Pattern Recognit. 2018, 76, 476–490. [Google Scholar] [CrossRef] [Green Version]

- Al-Sarayreh, M.; Reis, M.M.; Yan, W.Q.; Klette, R. A Sequential CNN Approach for Foreign Object Detection in Hyperspectral Images. In Proceedings of the International Conference on Computer Analysis of Images and Patterns, Salerno, Italy, 3–5 September 2019; pp. 271–283. [Google Scholar]

- Freitas, S.; Silva, H.; Almeida, J.; Silva, E. Hyperspectral imaging for real-time unmanned aerial vehicle maritime target detection. J. Intell. Robot. Syst. 2018, 90, 551–570. [Google Scholar] [CrossRef]

- Pham, T.; Takalkar, M.; Xu, M.; Hoang, D.; Truong, H.; Dutkiewicz, E.; Perry, S. Airborne Object Detection Using Hyperspectral Imaging: Deep Learning Review. In Proceedings of the International Conference on Computational Science and Its Applications, Saint Petersburg, Russia, 1–4 July 2019; pp. 306–321. [Google Scholar]

- Manolakis, D.; Truslow, E.; Pieper, M.; Cooley, T.; Brueggeman, M. Detection algorithms in hyperspectral imaging systems: An overview of practical algorithms. IEEE Signal Process. Mag. 2013, 31, 24–33. [Google Scholar] [CrossRef]

- Zhang, L.; Wei, W.; Zhang, Y.; Shen, C.; van den Hengel, A.; Shi, Q. Cluster sparsity field: An internal hyperspectral imagery prior for reconstruction. Int. J. Comput. Vis. 2018, 126, 797–821. [Google Scholar] [CrossRef]

- Zhou, P.; Han, J.; Cheng, G.; Zhang, B. Learning compact and discriminative stacked autoencoder for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2019, 57, 4823–4833. [Google Scholar] [CrossRef]

- Paoletti, M.; Haut, J.; Plaza, J.; Plaza, A. A new deep convolutional neural network for fast hyperspectral image classification. ISPRS J. Photogramm. Remote Sens. 2018, 145, 120–147. [Google Scholar] [CrossRef]

- Anthony Thomas, V.L.; Antoine Cotinat, P.F.; Gilber, M. UAV localization using panoramic thermal cameras. In Proceedings of the 12th International Conference on Computer Vision Systems (ICVS), Thessaloniki, Greece, 23–25 September 2019. [Google Scholar]

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. Imagenet large scale visual recognition challenge. Int. J. Comput. Vis. 2015, 115, 211–252. [Google Scholar] [CrossRef]

- Liu, J.; Zhang, S.; Wang, S.; Metaxas, D.N. Multispectral deep neural networks for pedestrian detection. arXiv 2016, arXiv:1611.02644. [Google Scholar]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. arXiv 2015, arXiv:1506.01497. [Google Scholar] [CrossRef]

- Hwang, S.; Park, J.; Kim, N.; Choi, Y.; So Kweon, I. Multispectral pedestrian detection: Benchmark dataset and baseline. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1037–1045. [Google Scholar]

- Lin, M.; Chen, Q.; Yan, S. Network in network. arXiv 2013, arXiv:1312.4400. [Google Scholar]

- Konig, D.; Adam, M.; Jarvers, C.; Layher, G.; Neumann, H.; Teutsch, M. Fully convolutional region proposal networks for multispectral person detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 49–56. [Google Scholar]

- Bondi, E.; Fang, F.; Hamilton, M.; Kar, D.; Dmello, D.; Choi, J.; Hannaford, R.; Iyer, A.; Joppa, L.; Tambe, M.; et al. Spot poachers in action: Augmenting conservation drones with automatic detection in near real time. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018. [Google Scholar]

- Cao, Y.; Guan, D.; Huang, W.; Yang, J.; Cao, Y.; Qiao, Y. Pedestrian detection with unsupervised multispectral feature learning using deep neural networks. Inf. Fusion 2019, 46, 206–217. [Google Scholar] [CrossRef]

- Kwaśniewska, A.; Rumiński, J.; Rad, P. Deep features class activation map for thermal face detection and tracking. In Proceedings of the 2017 10th International Conference on Human System Interactions (HSI), Ulsan, Korea, 17–19 July 2017; pp. 41–47. [Google Scholar]

- Sharif Razavian, A.; Azizpour, H.; Sullivan, J.; Carlsson, S. CNN features off-the-shelf: An astounding baseline for recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Columbus, OH, USA, 24–27 June 2014; pp. 806–813. [Google Scholar]

- Yosinski, J.; Clune, J.; Bengio, Y.; Lipson, H. How transferable are features in deep neural networks? arXiv 2014, arXiv:1411.1792. [Google Scholar]

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 1 June–26 July 2016; pp. 2818–2826. [Google Scholar]

- John, V.; Mita, S.; Liu, Z.; Qi, B. Pedestrian detection in thermal images using adaptive fuzzy C-means clustering and convolutional neural networks. In Proceedings of the 2015 14th IAPR International Conference on Machine Vision Applications (MVA), Tokyo, Japan, 18–22 May 2015; pp. 246–249. [Google Scholar]

- Lee, E.J.; Shin, S.Y.; Ko, B.C.; Chang, C. Early sinkhole detection using a drone-based thermal camera and image processing. Infrared Phys. Technol. 2016, 78, 223–232. [Google Scholar] [CrossRef]

- Beleznai, C.; Steininger, D.; Croonen, G.; Broneder, E. Multi-Modal Human Detection from Aerial Views by Fast Shape-Aware Clustering and Classification. In Proceedings of the 2018 10th IAPR Workshop on Pattern Recognition in Remote Sensing (PRRS), Beijing, China, 19–20 August 2018; pp. 1–6. [Google Scholar]

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324. [Google Scholar] [CrossRef] [Green Version]

- Ulrich, M.; Hess, T.; Abdulatif, S.; Yang, B. Person recognition based on micro-Doppler and thermal infrared camera fusion for firefighting. In Proceedings of the 2018 21st International Conference on Information Fusion (FUSION), Cambridge, UK, 10–13 July 2018; pp. 919–926. [Google Scholar]

- Viola, P.; Jones, M. Robust real-time object detection. Int. J. Comput. Vis. 2001, 4, 4. [Google Scholar]

- Quero, J.; Burns, M.; Razzaq, M.; Nugent, C.; Espinilla, M. Detection of Falls from Non-Invasive Thermal Vision Sensors Using Convolutional Neural Networks. Proceedings 2018, 2, 1236. [Google Scholar] [CrossRef]

- Bastan, M.; Yap, K.H.; Chau, L.P. Remote detection of idling cars using infrared imaging and deep networks. arXiv 2018, arXiv:1804.10805. [Google Scholar] [CrossRef]

- Bastan, M.; Yap, K.H.; Chau, L.P. Idling car detection with ConvNets in infrared image sequences. In Proceedings of the 2018 IEEE International Symposium on Circuits and Systems (ISCAS), Florence, Italy, 27–30 May 2018; pp. 1–5. [Google Scholar]

- Everingham, M.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The pascal visual object classes (voc) challenge. Int. J. Comput. Vis. 2010, 88, 303–338. [Google Scholar] [CrossRef]

- Liu, Q.; Lu, X.; He, Z.; Zhang, C.; Chen, W.S. Deep convolutional neural networks for thermal infrared object tracking. Knowl.-Based Syst. 2017, 134, 189–198. [Google Scholar] [CrossRef]

- Felsberg, M.; Berg, A.; Hager, G.; Ahlberg, J.; Kristan, M.; Matas, J.; Leonardis, A.; Cehovin, L.; Fernandez, G.; Vojír, T.; et al. The thermal infrared visual object tracking VOT-TIR2015 challenge results. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Santiago, Chile, 7–13 December 2015; pp. 76–88. [Google Scholar]

- Chen, Z.; Wang, Y.; Liu, H. Unobtrusive Sensor-Based Occupancy Facing Direction Detection and Tracking Using Advanced Machine Learning Algorithms. IEEE Sens. J. 2018, 18, 6360–6368. [Google Scholar] [CrossRef]

- Gao, P.; Ma, Y.; Song, K.; Li, C.; Wang, F.; Xiao, L. Large margin structured convolution operator for thermal infrared object tracking. In Proceedings of the 2018 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018; pp. 2380–2385. [Google Scholar]

- Herrmann, C.; Ruf, M.; Beyerer, J. CNN-based thermal infrared person detection by domain adaptation. In Proceedings of the Autonomous Systems: Sensors, Vehicles, Security, and the Internet of Everything, Orlando, FL, USA, 16–18 April 2018; Volume 10643, p. 1064308. [Google Scholar]

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. Ssd: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37. [Google Scholar]

- Patel, S.N.; Robertson, T.; Kientz, J.A.; Reynolds, M.S.; Abowd, G.D. At the flick of a switch: Detecting and classifying unique electrical events on the residential power line (nominated for the best paper award). In Proceedings of the International Conference on Ubiquitous Computing, Innsbruck, Austria, 16–19 September 2007; pp. 271–288. [Google Scholar]

- Lee, H.; Pham, P.; Largman, Y.; Ng, A.Y. Unsupervised feature learning for audio classification using convolutional deep belief networks. In Proceedings of the 22nd International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 7–10 December 2009; pp. 1096–1104. [Google Scholar]

- Hinton, G.; Deng, L.; Yu, D.; Dahl, G.E.; Mohamed, A.r.; Jaitly, N.; Senior, A.; Vanhoucke, V.; Nguyen, P.; Sainath, T.N.; et al. Deep neural networks for acoustic modeling in speech recognition: The shared views of four research groups. IEEE Signal Process. Mag. 2012, 29, 82–97. [Google Scholar] [CrossRef]

- Kim, Y.; Lee, H.; Provost, E.M. Deep learning for robust feature generation in audiovisual emotion recognition. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Vancouver, BC, Canada, 26–31 May 2013; pp. 3687–3691. [Google Scholar]

- Deng, L.; Li, J.; Huang, J.T.; Yao, K.; Yu, D.; Seide, F.; Seltzer, M.; Zweig, G.; He, X.; Williams, J.; et al. Recent advances in deep learning for speech research at Microsoft. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Vancouver, BC, Canada, 26–31 May 2013; pp. 8604–8608. [Google Scholar]

- Tu, Y.H.; Du, J.; Wang, Q.; Bao, X.; Dai, L.R.; Lee, C.H. An information fusion framework with multi-channel feature concatenation and multi-perspective system combination for the deep-learning-based robust recognition of microphone array speech. Comput. Speech Lang. 2017, 46, 517–534. [Google Scholar] [CrossRef]

- Piczak, K.J. Environmental sound classification with convolutional neural networks. In Proceedings of the 2015 IEEE 25th International Workshop on Machine Learning for Signal Processing (MLSP), Boston, MA, USA, 17–20 September 2015; pp. 1–6. [Google Scholar]

- Cakir, E.; Heittola, T.; Huttunen, H.; Virtanen, T. Polyphonic sound event detection using multi label deep neural networks. In Proceedings of the 2015 International Joint Conference on Neural Networks (IJCNN), Killarney, Ireland, 12–16 July 2015; pp. 1–7. [Google Scholar]

- Lane, N.D.; Georgiev, P.; Qendro, L. DeepEar: Robust smartphone audio sensing in unconstrained acoustic environments using deep learning. In Proceedings of the 2015 ACM International Joint Conference on Pervasive and Ubiquitous Computing, Osaka, Japan, 7–11 Sepember 2015; pp. 283–294. [Google Scholar]

- Wilkinson, B.; Ellison, C.; Nykaza, E.T.; Boedihardjo, A.P.; Netchaev, A.; Wang, Z.; Bunkley, S.L.; Oates, T.; Blevins, M.G. Deep learning for unsupervised separation of environmental noise sources. J. Acoust. Soc. Am. 2017, 141, 3964.

- Barchiesi, D.; Giannoulis, D.; Stowell, D.; Plumbley, M.D. Acoustic scene classification: Classifying environments from the sounds they produce. IEEE Signal Process. Mag. 2015, 32, 16–34.

- Parascandolo, G.; Heittola, T.; Huttunen, H.; Virtanen, T. Convolutional Recurrent Neural Networks for Polyphonic Sound Event Detection. IEEE/ACM Trans. Audio Speech Lang. Process. 2017, 25, 1291–1303.

- Eghbal-Zadeh, H.; Lehner, B.; Dorfer, M.; Widmer, G. CP-JKU submissions for DCASE-2016: A hybrid approach using binaural i-vectors and deep convolutional neural networks. In Proceedings of the 2017 IEEE 25th European Signal Processing Conference (EUSIPCO), Kos, Greece, 28 August–2 September 2017; pp. 2749–2753.

- Salamon, J.; Bello, J.P. Deep convolutional neural networks and data augmentation for environmental sound classification. IEEE Signal Process. Lett. 2017, 24, 279–283.

- Liu, J.; Yu, X.; Wan, W.; Li, C. Multi-classification of audio signal based on modified SVM. In Proceedings of the IET International Communication Conference on Wireless Mobile and Computing (CCWMC 2009), Shanghai, China, 7–9 December 2009; pp. 331–334.

- Xu, Y.; Huang, Q.; Wang, W.; Foster, P.; Sigtia, S.; Jackson, P.J.B.; Plumbley, M.D. Unsupervised Feature Learning Based on Deep Models for Environmental Audio Tagging. IEEE/ACM Trans. Audio Speech Lang. Process. 2017, 25, 1230–1241.

- Li, J.; Dai, W.; Metze, F.; Qu, S.; Das, S. A comparison of Deep Learning methods for environmental sound detection. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 126–130.

- Chowdhury, A.S.K. Implementation and Performance Evaluation of Acoustic Denoising Algorithms for UAV. Master’s Thesis, University of Nevada, Las Vegas, NV, USA, 2016.

- Mezei, J.; Molnár, A. Drone sound detection by correlation. In Proceedings of the 2016 IEEE 11th International Symposium on Applied Computational Intelligence and Informatics (SACI), Timisoara, Romania, 12–14 May 2016; pp. 509–518.

- Bernardini, A.; Mangiatordi, F.; Pallotti, E.; Capodiferro, L. Drone detection by acoustic signature identification. Electron. Imaging 2017, 2017, 60–64.

- Park, S.; Shin, S.; Kim, Y.; Matson, E.T.; Lee, K.; Kolodzy, P.J.; Slater, J.C.; Scherreik, M.; Sam, M.; Gallagher, J.C.; et al. Combination of radar and audio sensors for identification of rotor-type unmanned aerial vehicles (UAVs). In Proceedings of the 2015 IEEE SENSORS, Busan, Korea, 1–4 November 2015; pp. 1–4.

- Liu, H.; Wei, Z.; Chen, Y.; Pan, J.; Lin, L.; Ren, Y. Drone detection based on an audio-assisted camera array. In Proceedings of the 2017 IEEE Third International Conference on Multimedia Big Data (BigMM), Laguna Hills, CA, USA, 19–21 April 2017; pp. 402–406.

- Kim, J.; Park, C.; Ahn, J.; Ko, Y.; Park, J.; Gallagher, J.C. Real-time UAV sound detection and analysis system. In Proceedings of the 2017 IEEE Sensors Applications Symposium (SAS), Glassboro, NJ, USA, 13–15 March 2017; pp. 1–5.

- Kim, J.; Kim, D. Neural Network based Real-time UAV Detection and Analysis by Sound. J. Adv. Inf. Technol. Converg. 2018, 8, 43–52.

- Salamon, J.; Jacoby, C.; Bello, J.P. A dataset and taxonomy for urban sound research. In Proceedings of the 22nd ACM International Conference on Multimedia. ACM, Mountain View, CA, USA, 18–19 June 2014; pp. 1041–1044.

- Jeon, S.; Shin, J.W.; Lee, Y.J.; Kim, W.H.; Kwon, Y.; Yang, H.Y. Empirical study of drone sound detection in real-life environment with deep neural networks. In Proceedings of the 2017 25th European Signal Processing Conference (EUSIPCO), Kos, Greece, 28 August–2 September 2017; pp. 1858–1862.

- Esteban, J.; Starr, A.; Willetts, R.; Hannah, P.; Bryanston-Cross, P. A review of data fusion models and architectures: Towards engineering guidelines. Neural Comput. Appl. 2005, 14, 273–281.

- Baltrušaitis, T.; Ahuja, C.; Morency, L.P. Multimodal machine learning: A survey and taxonomy. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 423–443.

- Ngiam, J.; Khosla, A.; Kim, M.; Nam, J.; Lee, H.; Ng, A.Y. Multimodal deep learning. In Proceedings of the 28th International Conference on Machine Learning (ICML-11), Bellevue, WA, USA, 28 June–2 July 2011; pp. 689–696.

- Sutskever, I.; Hinton, G.E.; Taylor, G.W. The recurrent temporal restricted Boltzmann machine. In Proceedings of the 21st International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 8–10 December 2009; pp. 1601–1608.

- Patterson, E.K.; Gurbuz, S.; Tufekci, Z.; Gowdy, J.N. CUAVE: A new audio-visual database for multimodal human-computer interface research. In Proceedings of the 2002 IEEE International Conference on Acoustics, Speech, and Signal Processing, Orlando, FL, USA, 13–17 May 2002; Volume 2.

- Matthews, I.; Cootes, T.F.; Bangham, J.A.; Cox, S.; Harvey, R. Extraction of visual features for lipreading. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 198–213.

- Krizhevsky, A.; Hinton, G. Learning Multiple Layers of Features from Tiny Images. Available online: https://www.cs.toronto.edu/~kriz/learning-features-2009-TR.pdf (accessed on 3 June 2019).

- Baldi, P.; Sadowski, P.; Whiteson, D. Searching for exotic particles in high-energy physics with deep learning. Nat. Commun. 2014, 5, 4308.

- Gender Classification. Available online: https://www.kaggle.com/hb20007/gender-classification (accessed on 3 June 2019).

- Dong, J.; Zhuang, D.; Huang, Y.; Fu, J. Advances in multi-sensor data fusion: Algorithms and applications. Sensors 2009, 9, 7771–7784.

- Patil, U.; Mudengudi, U. Image fusion using hierarchical PCA. In Proceedings of the 2011 International Conference on Image Information Processing, Shimla, India, 3–5 November 2011; pp. 1–6.

- Al-Wassai, F.A.; Kalyankar, N.; Al-Zuky, A.A. The IHS transformations based image fusion. arXiv 2011, arXiv:1107.4396.

- Snoek, C.G.; Worring, M.; Smeulders, A.W. Early versus late fusion in semantic video analysis. In Proceedings of the 13th Annual ACM International Conference on Multimedia, Singapore, 6–11 November 2005; pp. 399–402.

- NIST TREC Video Retrieval Evaluation. Available online: http://www-nlpir.nist.gov/projects/trecvid/ (accessed on 11 June 2019).

- Ye, G.; Liu, D.; Jhuo, I.H.; Chang, S.F. Robust late fusion with rank minimization. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3021–3028.

- Nilsback, M.E.; Zisserman, A. A visual vocabulary for flower classification. In Proceedings of the 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’06), New York, NY, USA, 17–22 June 2006; Volume 2, pp. 1447–1454.

- Bombini, L.; Cerri, P.; Medici, P.; Alessandretti, G. Radar-Vision Fusion for Vehicle Detection. Available online: http://www.ce.unipr.it/people/bertozzi/publications/cr/wit2006-crf-radar.pdf (accessed on 11 June 2019).

- Jovanoska, S.; Brötje, M.; Koch, W. Multisensor data fusion for UAV detection and tracking. In Proceedings of the 2018 19th International Radar Symposium (IRS), Bonn, Germany, 20–22 June 2018; pp. 1–10.

- Koch, W.; Koller, J.; Ulmke, M. Ground target tracking and road map extraction. ISPRS J. Photogramm. Remote Sens. 2006, 61, 197–208.

- Hengy, S.; Laurenzis, M.; Schertzer, S.; Hommes, A.; Kloeppel, F.; Shoykhetbrod, A.; Geibig, T.; Johannes, W.; Rassy, O.; Christnacher, F. Multimodal UAV detection: Study of various intrusion scenarios. In Proceedings of the Electro-Optical Remote Sensing XI International Society for Optics and Photonics, Warsaw, Poland, 11–14 September 2017; Volume 10434, p. 104340P.

- Laurenzis, M.; Hengy, S.; Hammer, M.; Hommes, A.; Johannes, W.; Giovanneschi, F.; Rassy, O.; Bacher, E.; Schertzer, S.; Poyet, J.M. An adaptive sensing approach for the detection of small UAV: First investigation of static sensor network and moving sensor platform. In Proceedings of the Signal Processing, Sensor/Information Fusion, and Target Recognition XXVII International Society for Optics and Photonics, Orlando, FL, USA, 16–19 April 2018; Volume 10646, p. 106460S.

- Shi, W.; Arabadjis, G.; Bishop, B.; Hill, P.; Plasse, R.; Yoder, J. Detecting, tracking, and identifying airborne threats with netted sensor fence. In Sensor Fusion-Foundation and Applications; IntechOpen: Rijeka, Croatia, 2011.

- Charvat, G.L.; Fenn, A.J.; Perry, B.T. The MIT IAP radar course: Build a small radar system capable of sensing range, Doppler, and synthetic aperture (SAR) imaging. In Proceedings of the 2012 IEEE Radar Conference, Atlanta, GA, USA, 7–11 May 2012; pp. 0138–0144.

- Diamantidou, E.; Lalas, A.; Votis, K.; Tzovaras, D. Multimodal Deep Learning Framework for Enhanced Accuracy of UAV Detection. In Proceedings of the 12th International Conference on Computer Vision Systems (ICVS), Thessaloniki, Greece, 23–25 September 2019.

- Endo, Y.; Toda, H.; Nishida, K.; Ikedo, J. Classifying spatial trajectories using representation learning. Int. J. Data Sci. Anal. 2016, 2, 107–117.

- Kumaran, S.K.; Dogra, D.P.; Roy, P.P.; Mitra, A. Video Trajectory Classification and Anomaly Detection Using Hybrid CNN-VAE. arXiv 2018, arXiv:1812.07203.

- Chen, Y.; Aggarwal, P.; Choi, J.; Jay, C.C. A deep learning approach to drone monitoring. In Proceedings of the 2017 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Kuala Lumpur, Malaysia, 12–15 December 2017; pp. 686–691.

- Bounding Box Detection of Drones. 2017. Available online: https://github.com/creiser/drone-detection (accessed on 15 October 2019).

- MultiDrone Public DataSet. 2018. Available online: https://multidrone.eu/multidrone-public-dataset/ (accessed on 15 October 2019).

- Coluccia, A.; Ghenescu, M.; Piatrik, T.; De Cubber, G.; Schumann, A.; Sommer, L.; Klatte, J.; Schuchert, T.; Beyerer, J.; Farhadi, M.; et al. Drone-vs-bird detection challenge at IEEE AVSS2017. In Proceedings of the 2017 14th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Lecce, Italy, 29 August–1 September 2017; pp. 1–6.

- 2nd International Workshop on Small-Drone Surveillance, Detection and Counteraction Techniques (WOSDETC) 2019. 2019. Available online: https://wosdetc2019.wordpress.com/challenge/ (accessed on 1 July 2019).

- Workshop on Vision-Enabled UAV and Counter-UAV Technologies for Surveillance and Security of Critical Infrastructures (UAV4S) 2019. 2019. Available online: https://icvs2019.org/content/workshop-vision-enabled-uav-and-counter-uav-technologies-surveillance-and-security-critical (accessed on 15 May 2019).

- Chhetri, A.; Hilmes, P.; Kristjansson, T.; Chu, W.; Mansour, M.; Li, X.; Zhang, X. Multichannel Audio Front-End for Far-Field Automatic Speech Recognition. In Proceedings of the 2018 26th European Signal Processing Conference (EUSIPCO), Rome, Italy, 3–7 September 2018; pp. 1527–1531.

| Task | Signal Processing | Classification | Reference |
|---|---|---|---|
| Feature extraction | MDS with spectrogram, handcrafted features | - | [19] |
| Feature extraction | MDS with spectrogram and cepstrogram, handcrafted features | - | [20] |
| UAV classification | MDS with spectrogram, eigenpairs extracted from MDS | Linear and non-linear SVM, NBC | [16] |
| UAV classification, feature extraction | MDS with spectrogram, cepstrogram and CVD, SVD on MDS | SVM | [21,22] |
| UAV classification, feature extraction | MDS with 2D regularized complex-log-Fourier transform | Subspace reliability analysis | [23] |
| UAV classification, feature extraction | MDS with EMD, features from EMD | SVM | [24] |
| UAV classification, feature extraction | MDS with EMD, entropy from EMD features | SVM | [25] |
| UAV classification, localization | MDS with EMD, PCA on MDS | Nearest neighbor, NBC, random forest, SVM | [26] |
| UAV classification | MDS with spectrogram, handcrafted features | NBC, DAC | [27] |
| UAV detection, tracking | MDS with spectrogram, CFAR for detection, Kalman filter for tracking | - | [28] |
| UAV classification, feature extraction | MDS with spectrogram, PCA on MDS | SVM | [29] |
| UAV trajectory classification | Features from moving direction, velocity, and position of the target | Probabilistic motion estimation model | [30] |
| UAV trajectory and type classification, feature extraction | Features from motion, velocity, and signature | SVM | [31] |
| UAV classification, feature extraction | Radar polarimetric features | Nearest neighbor | [32] |
| UAV classification | MDS with spectrogram and CVD | CNN | [33] |
| UAV classification | SCF reference banks | DBN | [34] |
| Target detection | Doppler processing | CNN | [35] |
| UAV classification | Direct learning on range profile matrix | CNN | [36] |
| UAV classification | Direct learning on IQ signal | MLP | [37] |
| UAV classification | Point cloud from radar signal | MLP | [38] |
| UAV trajectory classification, feature extraction | Features from motion, velocity, and RCS | MLP | [39] |
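For [28], the radar summary table lists CFAR detection followed by Kalman tracking, but it gives no detector settings. As a minimal sketch only, assuming a one-dimensional cell-averaging CFAR (CA-CFAR) applied to a square-law power profile with exponentially distributed noise, the thresholding step could look like the following. The function name `ca_cfar` and its defaults for `num_train`, `num_guard` and `pfa` are hypothetical and are not taken from [28].

```python
from typing import List


def ca_cfar(power: List[float], num_train: int = 8, num_guard: int = 2,
            pfa: float = 1e-3) -> List[int]:
    """Return indices of cells declared as detections by a 1-D cell-averaging CFAR.

    Hypothetical sketch: parameters are illustrative, not those of [28].
    power     -- square-law detected samples (e.g. one range or Doppler profile)
    num_train -- training cells on EACH side of the cell under test
    num_guard -- guard cells on EACH side, excluded from the noise estimate
    pfa       -- desired probability of false alarm
    """
    n_total = 2 * num_train  # total number of training cells
    # CA-CFAR scaling factor for exponentially distributed noise power
    alpha = n_total * (pfa ** (-1.0 / n_total) - 1.0)
    half = num_train + num_guard
    detections = []
    # Cells too close to the edges lack a full training window and are skipped
    for cut in range(half, len(power) - half):
        lead = power[cut - half:cut - num_guard]          # training cells before the CUT
        lag = power[cut + num_guard + 1:cut + half + 1]   # training cells after the CUT
        noise = (sum(lead) + sum(lag)) / n_total          # local noise-power estimate
        if power[cut] > alpha * noise:
            detections.append(cut)
    return detections


if __name__ == "__main__":
    profile = [1.0] * 64   # flat toy noise floor
    profile[30] = 50.0     # single strong toy target
    print(ca_cfar(profile))  # → [30]
```

Guard cells keep the target's own energy out of the noise estimate, while the scaling factor `alpha` fixes the false-alarm rate for the chosen number of training cells; a tracker (e.g., the Kalman filter mentioned for [28]) would then operate on the resulting detections.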

| Classification Task (No. of Classes) | Classification Method | Accuracy (%) | Reference |
|---|---|---|---|
| UAV type vs. birds (11) | Eigenpairs of MDS + non-linear SVM | 82 | [16] |
| UAV type vs. birds (11) | MDS with EMD + SVM | 89.54 | [24] |
| UAV type vs. birds (11) | MDS with EMD, entropy from EMD + SVM | 92.61 | [25] |
| UAV vs. birds (2) | SVD on MDS + SVM | 100 | [22] |
| UAV type (2) | SVD on MDS + SVM | 96.2 | [22] |
| UAV vs. birds (2) | 2D regularized complex log-Fourier transform + subspace reliability analysis | 96.73 | [23] |
| UAV type + localization (66) | PCA on MDS + random forest | 91.2 | [26] |
| Loaded vs. unloaded UAV (3) | MDS handcrafted features + DAC | 100 | [27] |
| UAV type (3) | PCA on MDS + SVM | 97.6 | [29] |
| UAV type vs. birds (4) | Radar polarimetric features + nearest neighbor | 99.2 | [32] |
| UAV vs. birds (2) | Range profile matrix + CNN | 95 | [36] |
| UAV type (6) | MDS and CVD images + CNN | 99.59 | [33] |
| UAV type vs. birds (3) | SCF reference banks + DBN | 90 | [34] |
| UAV type (2) | Learning on IQ signal + MLP | 100 | [37] |
| UAV type (3) | Point cloud features + MLP | 99.3 | [38] |
| UAV vs. birds (2) | Motion, velocity and RCS features + MLP | 99 | [39] |
| UAV type vs. birds (3) | Motion, velocity and signature features + SVM | 98 | [31] |
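The accuracy table includes SVD on MDS + SVM, which reaches 100% for UAV vs. birds in [22]. The exact feature definition of [22] is not reproduced here. The sketch below assumes that the MDS is a magnitude short-time Fourier transform spectrogram and that the feature vector is the leading singular values normalized to unit sum, and it runs on a synthetic toy signal rather than measured radar data. The function names, window length, hop size and `k` are hypothetical; the resulting vectors would be fed to an SVM, which is omitted.

```python
import numpy as np


def spectrogram(x: np.ndarray, win_len: int = 128, hop: int = 32) -> np.ndarray:
    """Magnitude STFT (Doppler bins x time frames), used here as a stand-in MDS."""
    window = np.hanning(win_len)
    n_frames = 1 + (len(x) - win_len) // hop
    # Each column is one windowed frame of the complex baseband signal
    frames = np.stack(
        [x[i * hop:i * hop + win_len] * window for i in range(n_frames)], axis=1
    )
    # fftshift places negative and positive Doppler on either side of zero
    return np.abs(np.fft.fftshift(np.fft.fft(frames, axis=0), axes=0))


def svd_features(mds: np.ndarray, k: int = 10) -> np.ndarray:
    """Leading k singular values of the MDS, normalized to unit sum (hypothetical choice)."""
    s = np.linalg.svd(mds, compute_uv=False)  # returned in descending order
    s = s[:k]
    return s / s.sum()


if __name__ == "__main__":
    fs = 4000.0                   # toy sampling rate in Hz
    t = np.arange(4000) / fs      # one second of samples
    body = np.exp(1j * 2 * np.pi * 200 * t)                      # bulk Doppler at 200 Hz
    blades = 0.3 * np.exp(1j * 40 * np.sin(2 * np.pi * 25 * t))  # toy rotor micro-Doppler
    features = svd_features(spectrogram(body + blades))
    print(features.round(3))
```

Normalizing the singular values makes the feature vector insensitive to overall signal power, so the classifier sees how energy is distributed across the MDS rather than its absolute level; this is one plausible design choice, not necessarily the one made in [22].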

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Samaras, S.; Diamantidou, E.; Ataloglou, D.; Sakellariou, N.; Vafeiadis, A.; Magoulianitis, V.; Lalas, A.; Dimou, A.; Zarpalas, D.; Votis, K.; Daras, P.; Tzovaras, D. Deep Learning on Multi Sensor Data for Counter UAV Applications—A Systematic Review. Sensors 2019, 19(22), 4837. https://doi.org/10.3390/s19224837