A Novel Radar HRRP Recognition Method with Accelerated T-Distributed Stochastic Neighbor Embedding and Density-Based Clustering

Abstract

1. Introduction

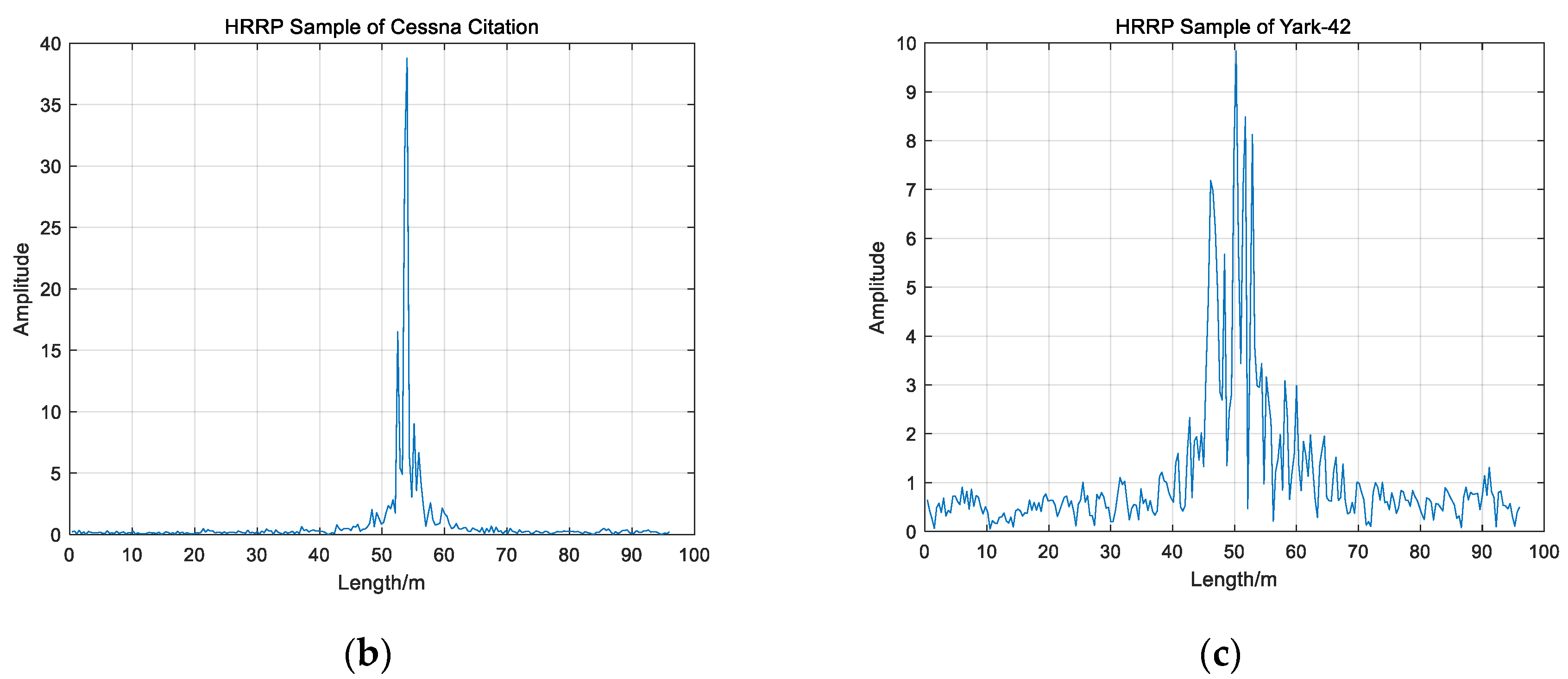

- Effective and fast dimensionality reduction. PCA greatly reduces the dimensionality of the data while preserving the HRRP information. The accelerated t-SNE then reduces the dimensionality further, and does so much faster than conventional t-SNE.

- Visualization of high-dimensional data. After PCA, the dimensionality of the data is still too high for the distribution of the data points to be shown in a 2D or 3D coordinate system. The t-SNE algorithm provides an effective way to present the data visually, which supports intuitive judgment.

- High clustering accuracy without training. Many current recognition algorithms must be trained before they can classify. In some cases, however, especially in the military field, samples of specific targets cannot be obtained. The high-accuracy HRRP clustering method in this paper obtains classification results without training.
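The two-stage reduction described in the first two points can be sketched as follows. This is a minimal illustration using scikit-learn rather than the authors' implementation; the function name `embed_hrrps`, the number of retained principal components, the perplexity, and the synthetic data are all assumptions made for the example.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.manifold import TSNE


def embed_hrrps(profiles, n_pca=50, perplexity=30.0, seed=0):
    """Reduce HRRP feature vectors to 2-D: PCA first, then Barnes-Hut t-SNE.

    Hypothetical helper; parameter defaults are illustrative assumptions.
    """
    profiles = np.asarray(profiles, dtype=float)
    # PCA (computed through an SVD inside scikit-learn) removes most redundancy.
    n_pca = min(n_pca, profiles.shape[0], profiles.shape[1])
    reduced = PCA(n_components=n_pca, random_state=seed).fit_transform(profiles)
    # method="barnes_hut" selects the tree-based approximation of t-SNE;
    # angle is its accuracy/speed trade-off parameter.
    tsne = TSNE(n_components=2, perplexity=perplexity, method="barnes_hut",
                angle=0.5, init="pca", random_state=seed)
    return tsne.fit_transform(reduced)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Synthetic stand-in for HRRP data: 3 groups of 60 profiles, 256 range cells.
    centers = rng.normal(size=(3, 256)) * 5.0
    data = np.vstack([c + rng.normal(size=(60, 256)) for c in centers])
    embedding = embed_hrrps(data, n_pca=20, perplexity=20.0)
    print(embedding.shape)
```

The resulting 2-D coordinates can be passed directly to a scatter plot for visual inspection or to a clustering step.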

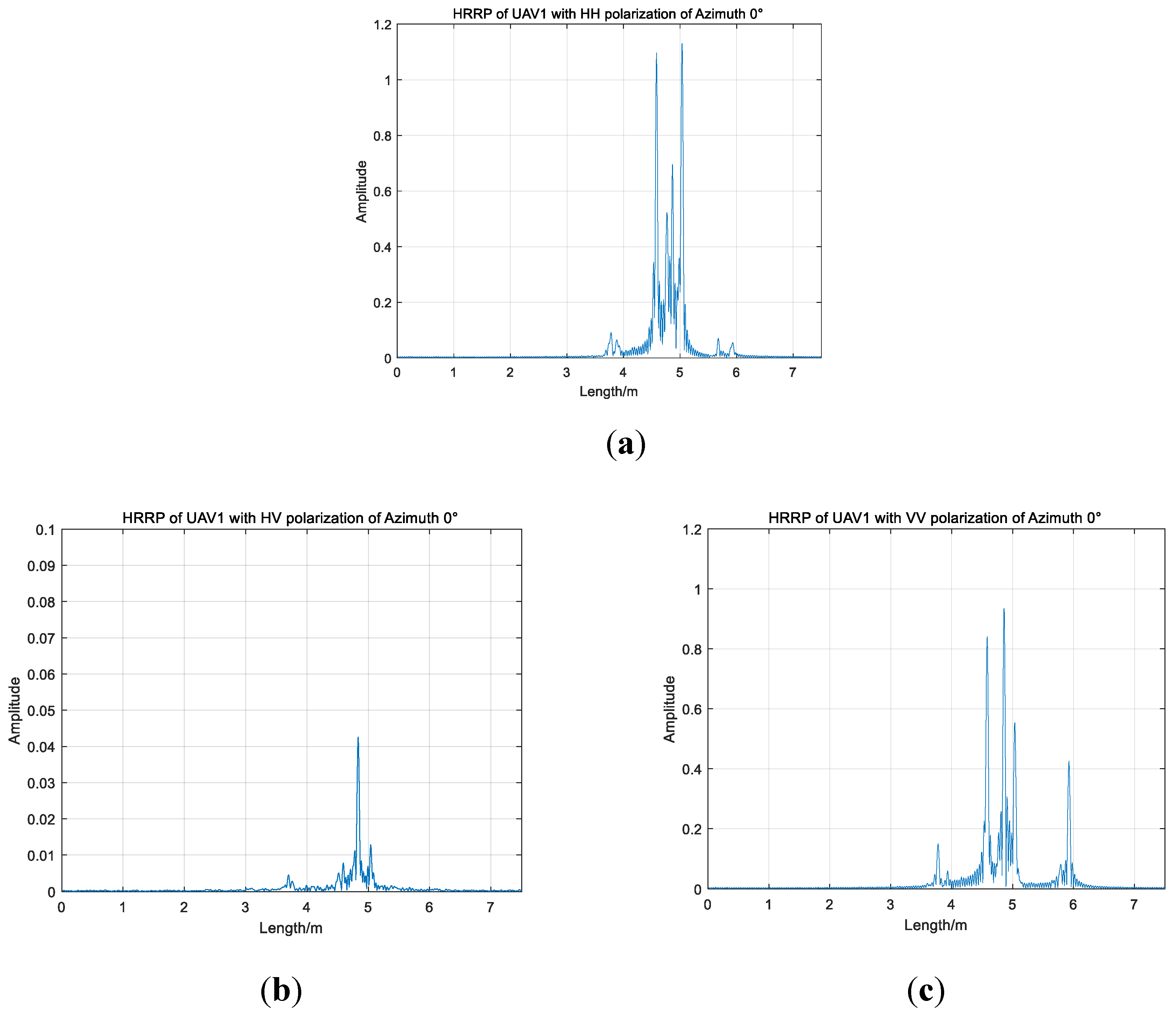

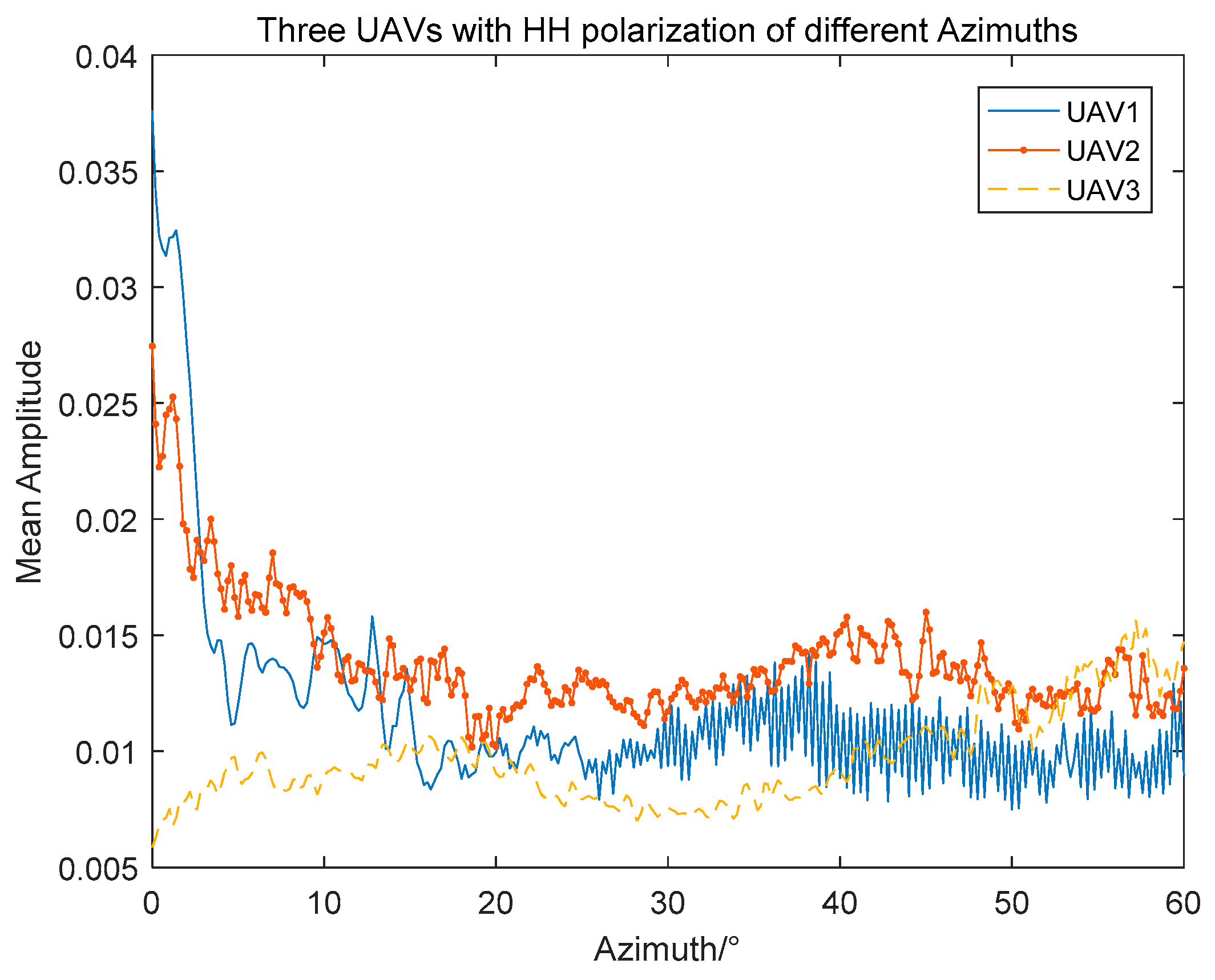

2. The Signal Model of HRRPs

3. Proposed Method

3.1. Principal Component Analysis Based on Singular Value Decomposition

3.2. Accelerated t-SNE with Barnes–Hut Approximation

3.3. A Novel Density-Based Clustering

| Algorithm 1. Simple version of the novel density-based algorithm. |
|---|
| 1: Input: Distance |
| 2: Initialization: Cutoff distance , where [] represents the rounding function and . Attribute of points . |
| 3: Results: Number of categories of the HRRPs and the type of each sample. |
| 4: Begin |
| 5: Step 1. The computation of and . |
| 6: Step 2. Calculation of distance and attribute . |
| 7: Step 3. Identification of clustering centers and classification of the other points. |
| 8: Step 4. The selection of average local density in the border region. |
| 9: Step 5. Classification of points with the label of cluster core or cluster halo. |
| 10: End |
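The five steps of Algorithm 1 resemble density-peak clustering (Rodriguez and Laio, Science 2014), so a rough NumPy sketch along those lines may help make them concrete. It is not the authors' code: the percentile rule for the cutoff distance, the fixed `n_clusters` argument, and the reading of Step 4 as the mean density of border points are assumptions, and the function name is hypothetical.

```python
import numpy as np


def density_peak_clustering(points, n_clusters, dc_percent=2.0):
    """Density-peak style clustering returning labels and a core/halo flag."""
    points = np.asarray(points, dtype=float)
    n = len(points)
    # Pairwise Euclidean distances d_ij.
    dist = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=-1)
    # Cutoff distance: a low percentile of all pairwise distances (assumed rule).
    dc = np.percentile(dist[np.triu_indices(n, k=1)], dc_percent)

    # Step 1: local density = number of other points closer than the cutoff.
    rho = ((dist < dc) & ~np.eye(n, dtype=bool)).sum(axis=1)

    # Step 2: delta = distance to the nearest point ranked denser.
    order = np.argsort(-rho, kind="stable")  # densest first
    delta = np.zeros(n)
    nearest_higher = np.full(n, -1)
    delta[order[0]] = dist[order[0]].max()
    for rank in range(1, n):
        i = order[rank]
        higher = order[:rank]
        j = higher[np.argmin(dist[i, higher])]
        delta[i], nearest_higher[i] = dist[i, j], j

    # Step 3: centers have the largest rho * delta; every other point takes
    # the label of its nearest denser neighbor.
    gamma = rho * delta
    gamma[order[0]] = np.inf  # the densest point is always a center
    centers = np.argsort(-gamma, kind="stable")[:n_clusters]
    labels = np.full(n, -1)
    labels[centers] = np.arange(n_clusters)
    for i in order:
        if labels[i] == -1:
            labels[i] = labels[nearest_higher[i]]

    # Step 4 (assumed reading): per cluster, average the density of points
    # lying within the cutoff of a point from another cluster.
    different = labels[:, None] != labels[None, :]
    in_border = ((dist < dc) & different).any(axis=1)
    border_rho = np.zeros(n_clusters)
    for c in range(n_clusters):
        mask = in_border & (labels == c)
        if mask.any():
            border_rho[c] = rho[mask].mean()

    # Step 5: points denser than their cluster's border density are core;
    # the rest are halo.
    is_core = rho > border_rho[labels]
    return labels, is_core


if __name__ == "__main__":
    rng = np.random.default_rng(42)
    blobs = np.vstack([rng.normal(loc=c, scale=1.0, size=(40, 2))
                       for c in [(0, 0), (30, 0), (0, 30)]])
    labels, is_core = density_peak_clustering(blobs, n_clusters=3, dc_percent=5.0)
    print(np.bincount(labels), int(is_core.sum()))
```

In this sketch the number of clusters is supplied by the caller, whereas Algorithm 1 lists it among the results; choosing it automatically would need a threshold on the product of density and distance, which the sketch leaves out.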

3.4. Overall Structure of Proposed Method

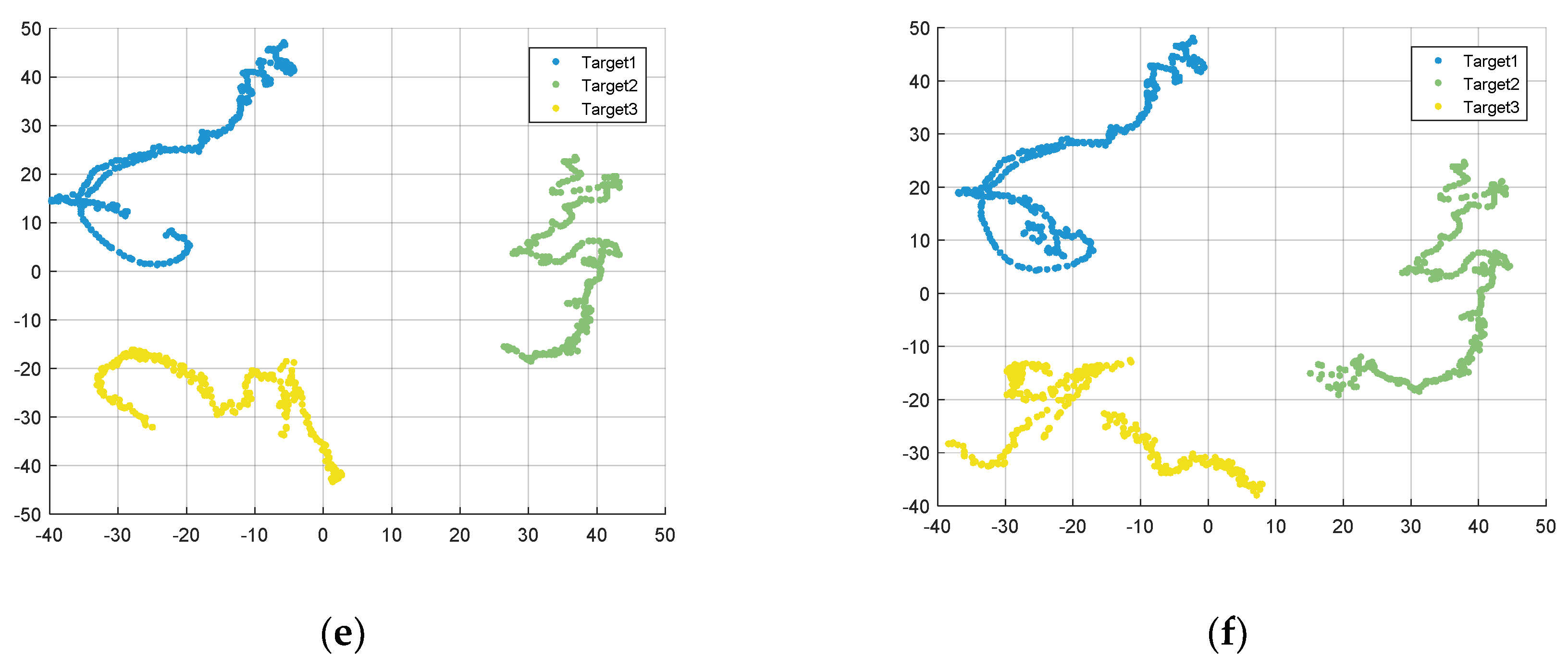

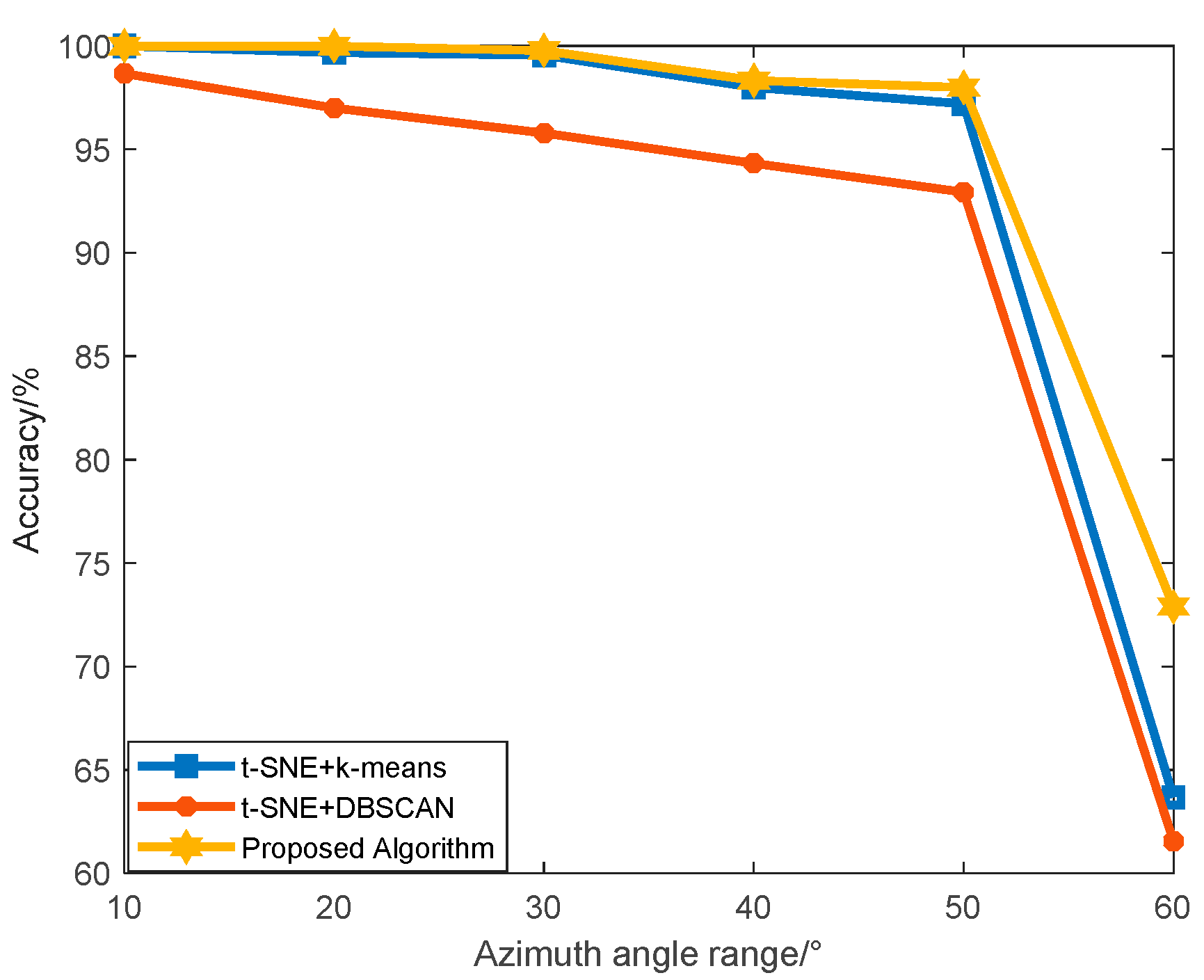

4. Experiment Results

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Algorithm | Total Time (s) | Average Time (s) |
|---|---|---|
| Conventional t-SNE | 1954.156 | 0.1617 |
| Accelerated t-SNE with Barnes–Hut | 150.771 | 0.0125 |
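As a quick check on the size of the gain, the speedup implied by the timing table can be worked out directly from its entries. The symbols $T$ (total time) and $\bar{t}$ (average time) are introduced here only for this calculation.

$$
\frac{T_{\text{conventional}}}{T_{\text{Barnes–Hut}}} = \frac{1954.156}{150.771} \approx 12.96,
\qquad
\frac{\bar{t}_{\text{conventional}}}{\bar{t}_{\text{Barnes–Hut}}} = \frac{0.1617}{0.0125} \approx 12.94
$$

Both ratios indicate that the accelerated t-SNE runs roughly 13 times faster than the conventional version on this data.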

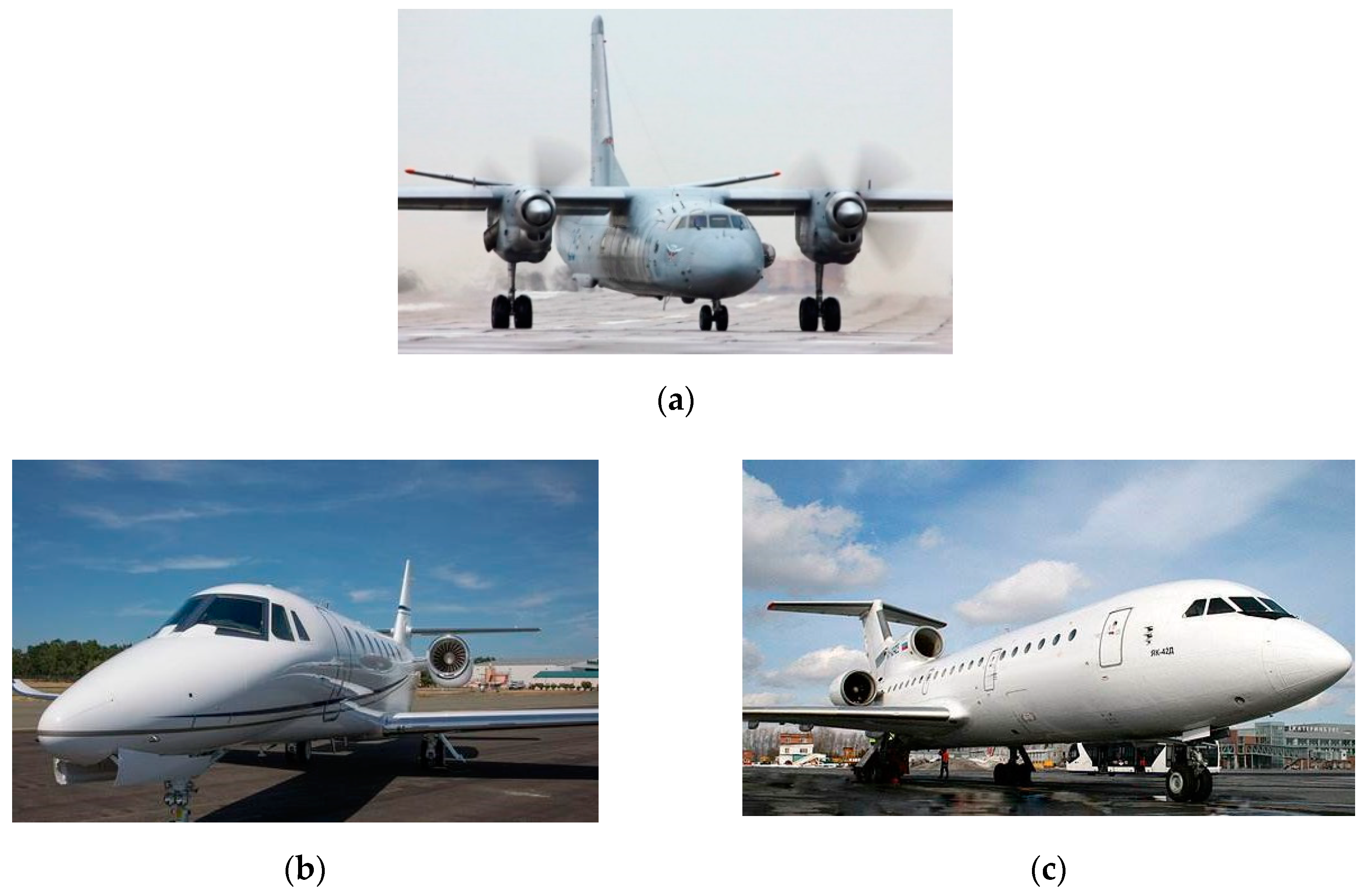

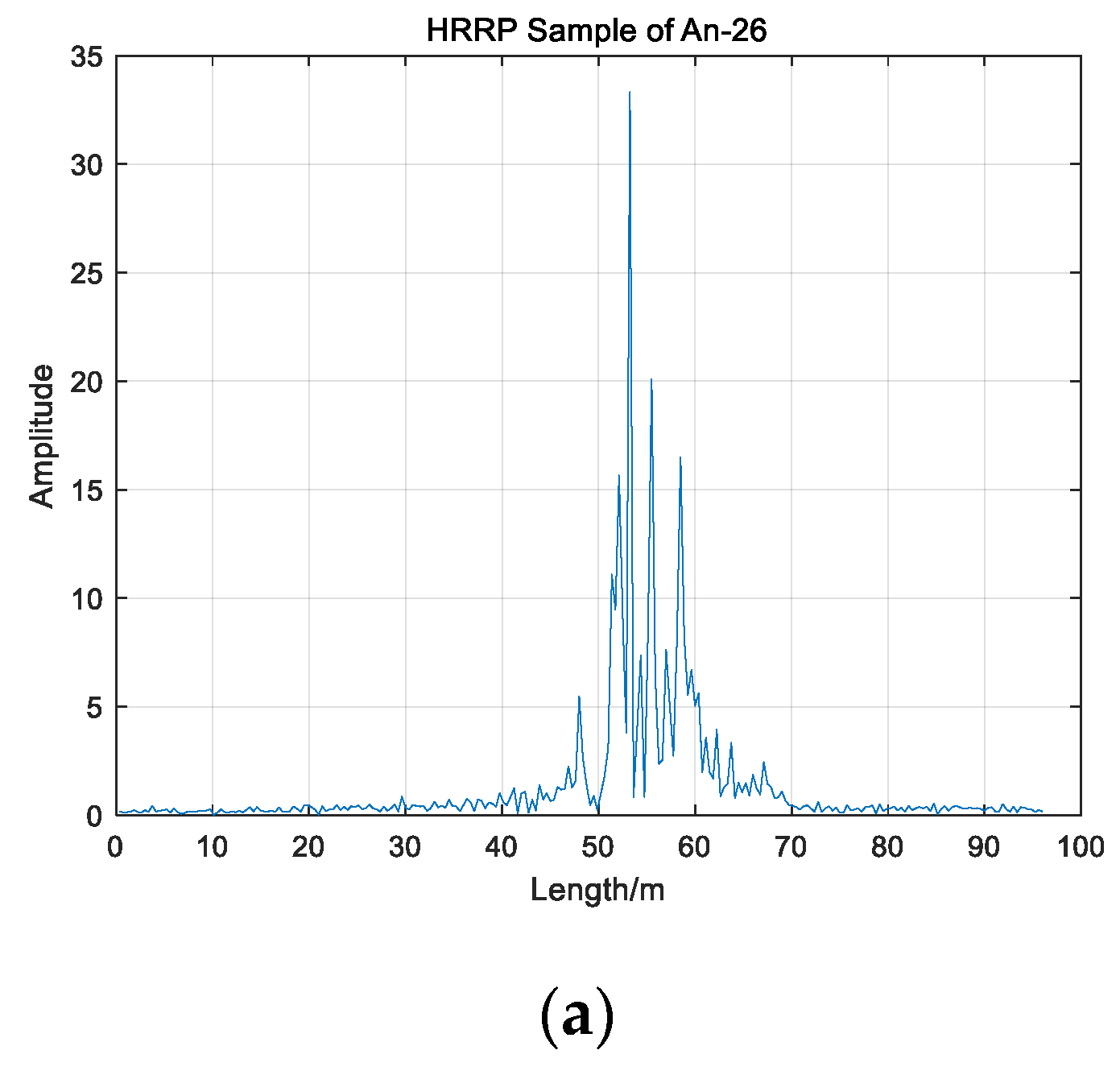

| Aircraft | Length/m | Width/m | Height/m |
|---|---|---|---|
| An-26 | 23.80 | 29.20 | 9.83 |
| Cessna Citation | 14.40 | 15.90 | 4.57 |
| Yak-42 | 36.38 | 34.88 | 9.83 |

| Algorithm | Accelerated t-SNE + k-Means | Accelerated t-SNE + DBSCAN | Proposed Algorithm |
|---|---|---|---|
| Accuracy | 92.17% | 92.00% | 94.23% |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wu, H.; Dai, D.; Wang, X. A Novel Radar HRRP Recognition Method with Accelerated T-Distributed Stochastic Neighbor Embedding and Density-Based Clustering. Sensors 2019, 19, 5112. https://doi.org/10.3390/s19235112
