Evaluation of Pattern Recognition Methods for Head Gesture-Based Interface of a Virtual Reality Helmet Equipped with a Single IMU Sensor

Abstract

1. Introduction

1.1. Head Motion Analysis and Classification

1.2. Effective Methods of Human Motion Analysis and Classification

1.3. Motivations of This Paper

2. Materials and Methods

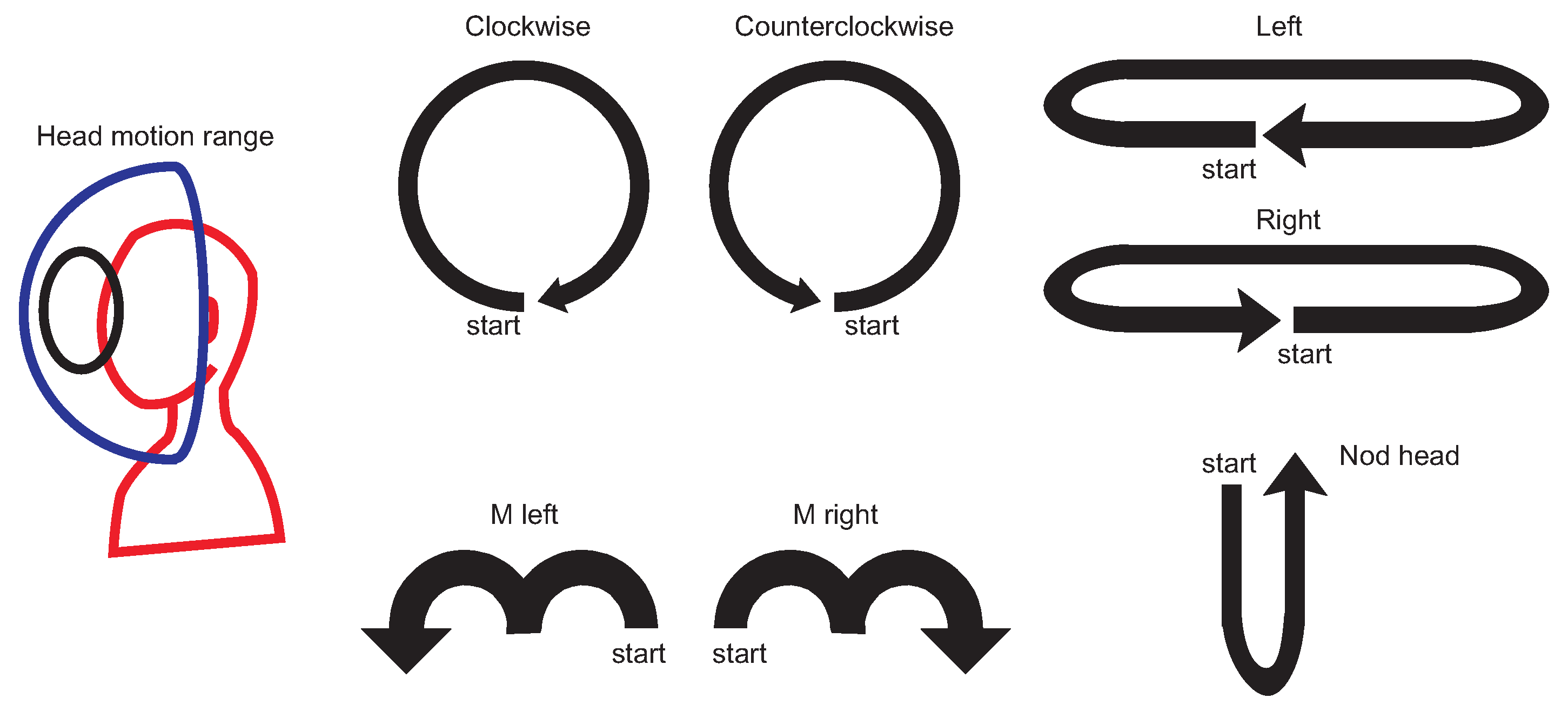

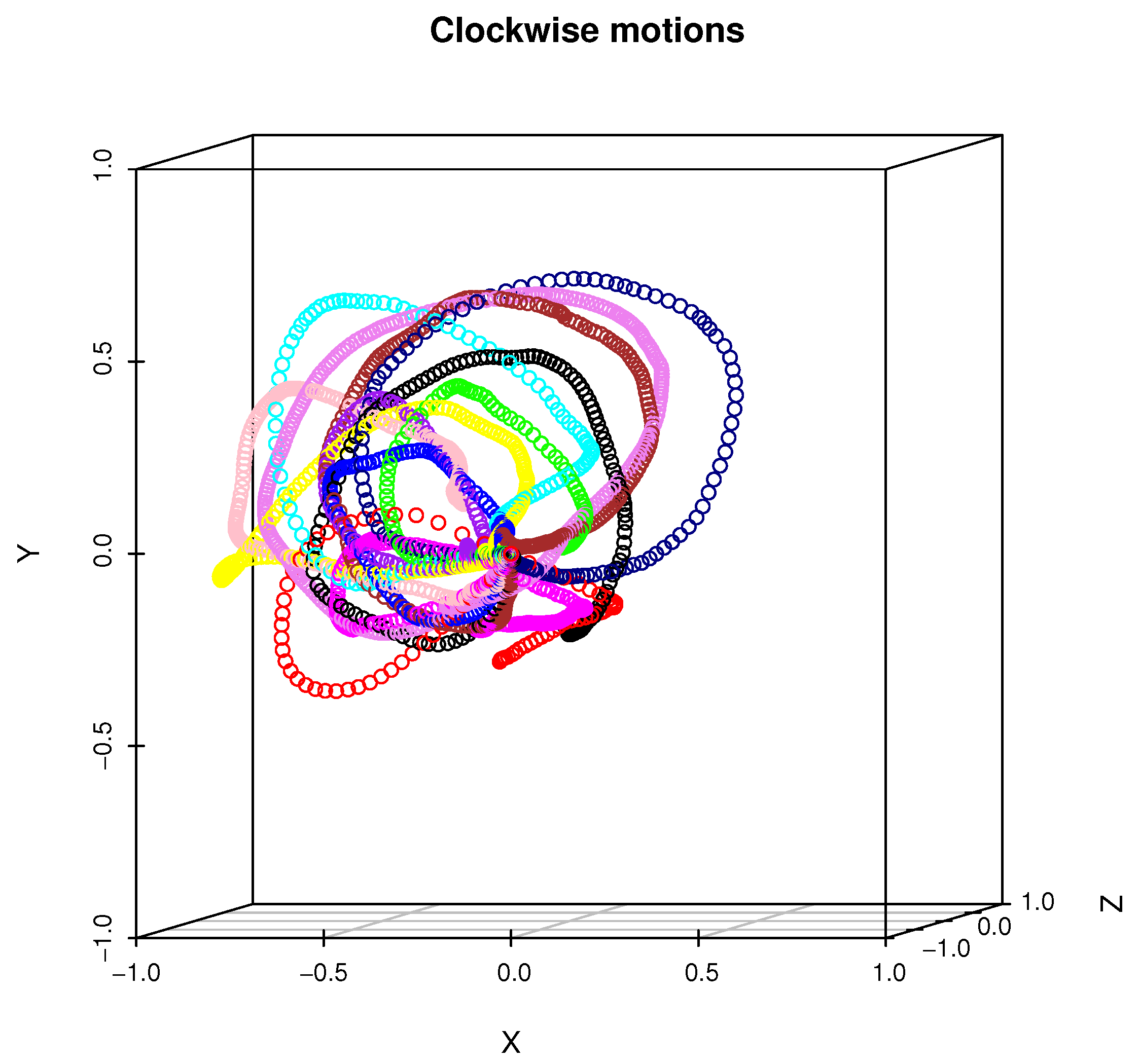

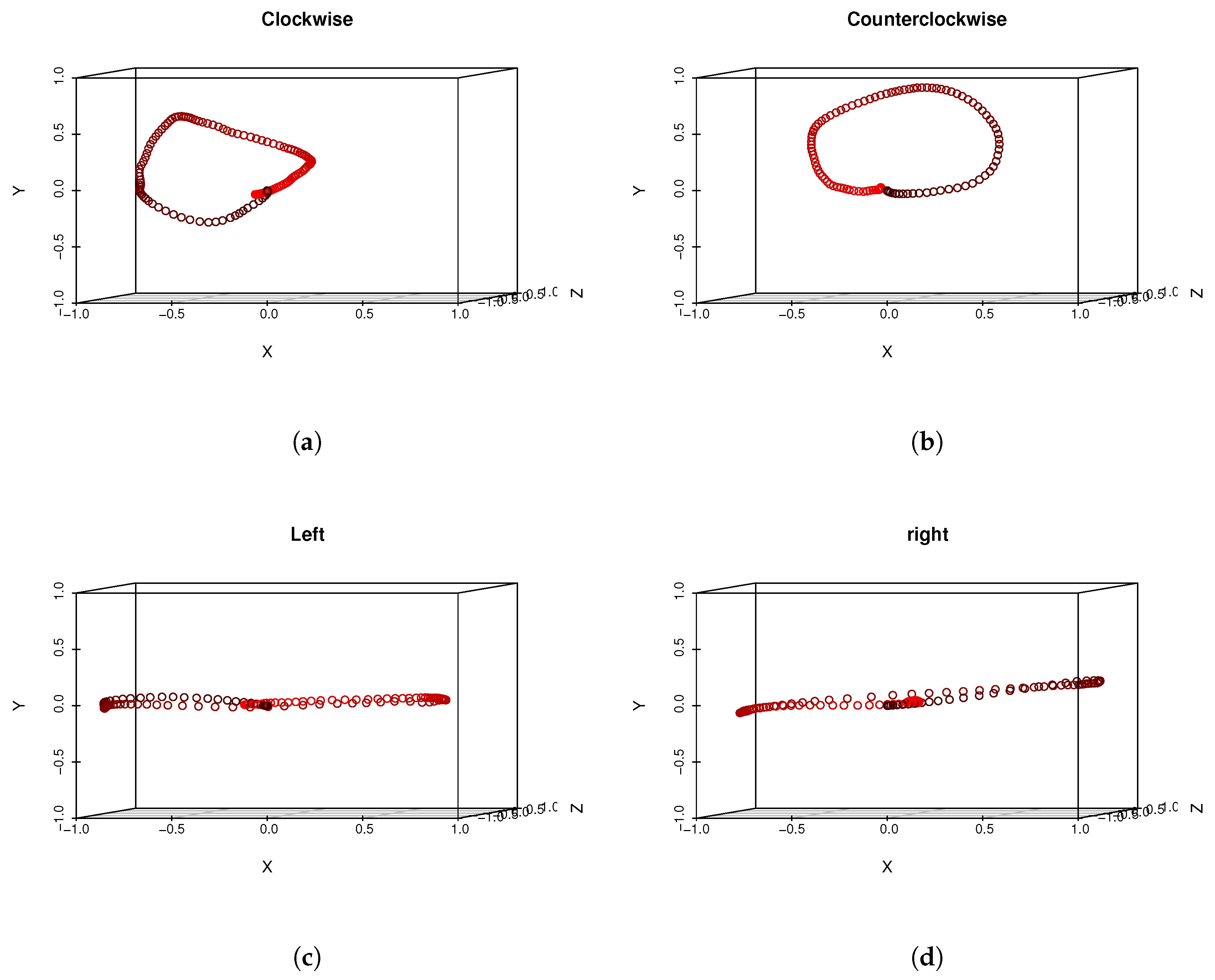

2.1. Evaluation Dataset

Algorithm 1: Head gestures segmentation from quaternion signal

2.2. Head Gestures Recognition with DTW Classifier
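As a rough illustration of the kind of classifier this section is named after, the sketch below pairs a textbook dynamic time warping (DTW) distance with a nearest-template decision. It is not the authors' implementation. The quaternion distance `1 - |<a, b>|`, the one-template-per-gesture layout, and the names `quat_distance`, `dtw_distance`, and `classify` are all assumptions made for the example.

```python
def quat_distance(a, b):
    """Assumed per-frame distance between unit quaternions: 1 - |dot(a, b)|.

    The absolute value makes q and -q (the same rotation) distance 0 apart.
    """
    dot = abs(sum(x * y for x, y in zip(a, b)))
    return 1.0 - min(dot, 1.0)


def dtw_distance(s, t, dist=quat_distance):
    """Classic O(len(s) * len(t)) DTW cost between two sequences."""
    inf = float("inf")
    n, m = len(s), len(t)
    # cost[i][j] = best alignment cost of s[:i] against t[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = dist(s[i - 1], t[j - 1])
            cost[i][j] = d + min(cost[i - 1][j],      # s advances alone
                                 cost[i][j - 1],      # t advances alone
                                 cost[i - 1][j - 1])  # both advance
    return cost[n][m]


def classify(sample, templates):
    """Return the label whose template has the smallest DTW cost to sample.

    templates: dict mapping a gesture label to one reference sequence
    (a hypothetical layout chosen for this sketch).
    """
    return min(templates, key=lambda label: dtw_distance(sample, templates[label]))


if __name__ == "__main__":
    q0, q1, q2 = (1, 0, 0, 0), (0, 1, 0, 0), (0, 0, 1, 0)
    templates = {"left": [q0, q1, q2], "right": [q2, q1, q0]}
    slow_left = [q0, q0, q1, q1, q2]  # same gesture, performed more slowly
    print(classify(slow_left, templates))
```

Because DTW may repeat frames of either sequence, a gesture performed at a different speed can still align with its template at zero cost, which is the property that makes it attractive for variable-length recordings.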

Algorithm 2: Markley’s quaternion averaging
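The listing for this algorithm is not reproduced here, so the following is only a minimal sketch of the commonly cited eigenvector formulation of quaternion averaging: accumulate the 4x4 matrix M = Σ qᵢqᵢᵀ over the unit quaternions and take the eigenvector with the largest eigenvalue. Using power iteration to find that eigenvector, the equal weighting of all samples, and the function name `average_quaternions` are assumptions of this example, not details taken from the paper.

```python
import math


def average_quaternions(quats, iterations=100):
    """Approximate the unit quaternion q that maximises sum_i (q_i . q)^2.

    quats: non-empty list of 4-component quaternions (any consistent
    component order). All samples are weighted equally (an assumption).
    """
    # Accumulate M = sum_i u_i u_i^T with u_i the normalised input.
    # q and -q describe the same rotation and yield the same outer product,
    # so no sign alignment of the inputs is needed.
    m = [[0.0] * 4 for _ in range(4)]
    for q in quats:
        norm = math.sqrt(sum(c * c for c in q))
        u = [c / norm for c in q]
        for r in range(4):
            for c in range(4):
                m[r][c] += u[r] * u[c]

    # Power iteration: M is symmetric positive semi-definite, so repeated
    # multiplication converges to the dominant eigenvector when the largest
    # eigenvalue is unique and the start vector is not orthogonal to it.
    v = list(quats[0])
    for _ in range(iterations):
        w = [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]
        norm = math.sqrt(sum(c * c for c in w))
        v = [c / norm for c in w]
    return v


if __name__ == "__main__":
    # Rotations of 0 and 90 degrees about one axis; the average should be
    # the 45 degree rotation about that axis.
    half = math.sqrt(0.5)
    print(average_quaternions([(1.0, 0.0, 0.0, 0.0), (half, 0.0, 0.0, half)]))
```

A plain component-wise mean would break down when some samples are stored as q and others as -q; the outer-product form avoids that because the sign cancels in qqᵀ.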

2.3. Bagged DTW Classifier

2.4. Head Gestures Recognition with PCA-Based Features

2.4.1. Features Generation (Training)

2.4.2. Classification

2.5. Two-Stage PCA-Based Method

2.6. Neural Network and Random Forest

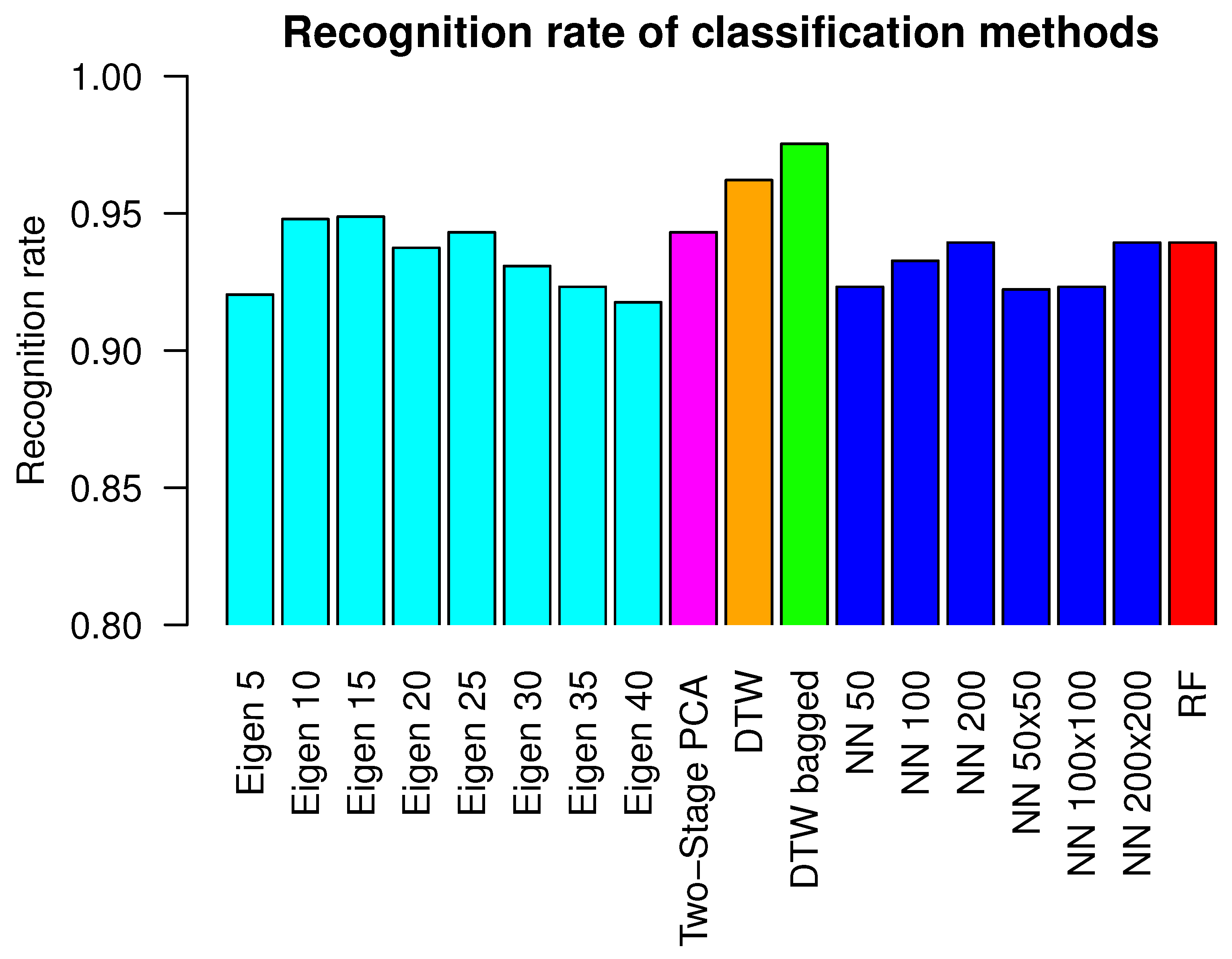

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| | Clockwise | Counterclockwise | Left | Nod Head | M Left | M Right | Right |
|---|---|---|---|---|---|---|---|
| 1 | 10 | 11 | 11 | 12 | 12 | 12 | 12 |
| 2 | 12 | 13 | 11 | 13 | 11 | 11 | 11 |
| 3 | 14 | 11 | 11 | 11 | 11 | 11 | 11 |
| 4 | 10 | 10 | 11 | 11 | 13 | 13 | 11 |
| 5 | 12 | 11 | 12 | 23 | 11 | 11 | 11 |
| 6 | 11 | 11 | 12 | 11 | 12 | 11 | 11 |
| 7 | 11 | 12 | 10 | 12 | 11 | 10 | 10 |
| 8 | 11 | 12 | 11 | 13 | 11 | 12 | 11 |
| 9 | 11 | 12 | 11 | 12 | 12 | 12 | 11 |
| 10 | 12 | 11 | 11 | 22 | 12 | 12 | 11 |
| 11 | 11 | 12 | 11 | 11 | 11 | 11 | 11 |
| 12 | 14 | 11 | 11 | 10 | 10 | 11 | 10 |
| SUM | 139 | 137 | 133 | 161 | 137 | 137 | 131 |
| MIN | 85 | 86 | 81 | 50 | 75 | 76 | 81 |
| MAX | 332 | 412 | 528 | 183 | 255 | 264 | 406 |

| Method | Clockwise | Counterclockwise | Left | Nod Head | M Left | M Right | Right | Error |
|---|---|---|---|---|---|---|---|---|
| Eigen 5 | 0.148 | 0.074 | 0.090 | 0.035 | 0.054 | 0.020 | 0.147 | 0.080 |
| Eigen 10 | 0.101 | 0.074 | 0.030 | 0.075 | 0.007 | 0.027 | 0.049 | 0.052 |
| Eigen 15 | 0.054 | 0.095 | 0.028 | 0.087 | 0.020 | 0.020 | 0.049 | 0.051 |
| Eigen 20 | 0.074 | 0.115 | 0.014 | 0.110 | 0.020 | 0.074 | 0.021 | 0.063 |
| Eigen 25 | 0.067 | 0.088 | 0.028 | 0.104 | 0.013 | 0.047 | 0.042 | 0.057 |
| Eigen 30 | 0.081 | 0.095 | 0.042 | 0.098 | 0.034 | 0.081 | 0.049 | 0.069 |
| Eigen 35 | 0.074 | 0.115 | 0.042 | 0.098 | 0.067 | 0.081 | 0.056 | 0.077 |
| Eigen 40 | 0.087 | 0.122 | 0.042 | 0.110 | 0.094 | 0.074 | 0.042 | 0.082 |
| Two-Stage PCA | 0.134 | 0.061 | 0.069 | 0.046 | 0.034 | 0.040 | 0.014 | 0.057 |
| DTW | 0.087 | 0.014 | 0.000 | 0.029 | 0.054 | 0.067 | 0.014 | 0.038 |
| DTW bagged | 0.020 | 0.007 | 0.000 | 0.040 | 0.027 | 0.060 | 0.014 | 0.025 |
| NN 50 | 0.087 | 0.115 | 0.035 | 0.150 | 0.013 | 0.013 | 0.112 | 0.077 |
| NN 100 | 0.094 | 0.088 | 0.028 | 0.127 | 0.027 | 0.013 | 0.084 | 0.067 |
| NN 200 | 0.087 | 0.115 | 0.035 | 0.069 | 0.020 | 0.007 | 0.091 | 0.061 |
| NN 50x50 | 0.081 | 0.122 | 0.028 | 0.087 | 0.020 | 0.020 | 0.063 | 0.061 |
| NN 100x100 | 0.114 | 0.095 | 0.028 | 0.150 | 0.020 | 0.007 | 0.112 | 0.077 |
| NN 200x200 | 0.107 | 0.101 | 0.035 | 0.168 | 0.027 | 0.007 | 0.084 | 0.078 |
| RF | 0.081 | 0.101 | 0.021 | 0.104 | 0.027 | 0.007 | 0.077 | 0.061 |

| | Clockwise | Counterclockwise | Left | Nod Head | M Left | M Right | Right |
|---|---|---|---|---|---|---|---|
| clockwise | 146 | 0 | 0 | 2 | 0 | 0 | 1 |
| counterclockwise | 0 | 147 | 1 | 0 | 0 | 0 | 0 |
| left | 0 | 0 | 144 | 0 | 0 | 0 | 0 |
| nod head | 0 | 7 | 0 | 166 | 0 | 0 | 0 |
| m left | 0 | 4 | 0 | 0 | 145 | 0 | 0 |
| m right | 5 | 0 | 0 | 4 | 0 | 140 | 0 |
| right | 0 | 0 | 2 | 0 | 0 | 0 | 141 |

| | Clockwise | Counterclockwise | Left | Nod Head | M Left | M Right | Right | Error |
|---|---|---|---|---|---|---|---|---|
| clockwise | 0.980 | 0.000 | 0.000 | 0.013 | 0.000 | 0.000 | 0.007 | 0.020 |
| counterclockwise | 0.000 | 0.993 | 0.007 | 0.000 | 0.000 | 0.000 | 0.000 | 0.007 |
| left | 0.000 | 0.000 | 1.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 |
| nod head | 0.000 | 0.040 | 0.000 | 0.960 | 0.000 | 0.000 | 0.000 | 0.040 |
| m left | 0.000 | 0.027 | 0.000 | 0.000 | 0.973 | 0.000 | 0.000 | 0.027 |
| m right | 0.034 | 0.000 | 0.000 | 0.026 | 0.000 | 0.940 | 0.000 | 0.060 |
| right | 0.000 | 0.000 | 0.014 | 0.000 | 0.000 | 0.000 | 0.986 | 0.014 |
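The proportions in the table above appear to be the counts of the preceding confusion matrix divided by their row totals, with the Error column equal to one minus the diagonal entry. That reading is an inference from the numbers, not something stated in the surviving text. The sketch below reproduces the arithmetic; the helper names `row_rates`, `per_class_error`, and `overall_error` are made up for the example.

```python
LABELS = ["clockwise", "counterclockwise", "left", "nod head",
          "m left", "m right", "right"]

# Counts copied from the confusion matrix table (one row per gesture label).
COUNTS = [
    [146, 0, 0, 2, 0, 0, 1],
    [0, 147, 1, 0, 0, 0, 0],
    [0, 0, 144, 0, 0, 0, 0],
    [0, 7, 0, 166, 0, 0, 0],
    [0, 4, 0, 0, 145, 0, 0],
    [5, 0, 0, 4, 0, 140, 0],
    [0, 0, 2, 0, 0, 0, 141],
]


def row_rates(counts):
    """Divide every cell by the total of its row."""
    return [[c / sum(row) for c in row] for row in counts]


def per_class_error(counts):
    """One minus the diagonal share of each row."""
    return [1.0 - row[i] / sum(row) for i, row in enumerate(counts)]


def overall_error(counts):
    """Off-diagonal count divided by the grand total."""
    total = sum(sum(row) for row in counts)
    correct = sum(row[i] for i, row in enumerate(counts))
    return (total - correct) / total


if __name__ == "__main__":
    for label, err in zip(LABELS, per_class_error(COUNTS)):
        print(f"{label:17s} {err:.3f}")
    print(f"{'overall':17s} {overall_error(COUNTS):.3f}")
```

Rounded to three decimals, the per-class errors computed this way agree with the Error column and with the "DTW bagged" row of the method comparison table, and the pooled error of 26/1055 rounds to that row's 0.025. One cell differs in the last digit: 4/149 rounds to 0.027, whereas the table prints 0.026 for "m right" classified as "nod head".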

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Hachaj, T.; Piekarczyk, M. Evaluation of Pattern Recognition Methods for Head Gesture-Based Interface of a Virtual Reality Helmet Equipped with a Single IMU Sensor. Sensors 2019, 19, 5408. https://doi.org/10.3390/s19245408
