Kinematic and Dynamic Vehicle Model-Assisted Global Positioning Method for Autonomous Vehicles with Low-Cost GPS/Camera/In-Vehicle Sensors

Abstract

1. Introduction

2. Related Work

2.1. Global Navigation Satellite System (GNSS) Localisation

2.2. Dead Reckoning (DR) Localisation

2.3. Map Matching Localisation

2.4. Mobile Radio-Based Localisation

2.5. Vision/LiDAR-Based Localisation

2.6. Multiple Sensor-Based Localisation

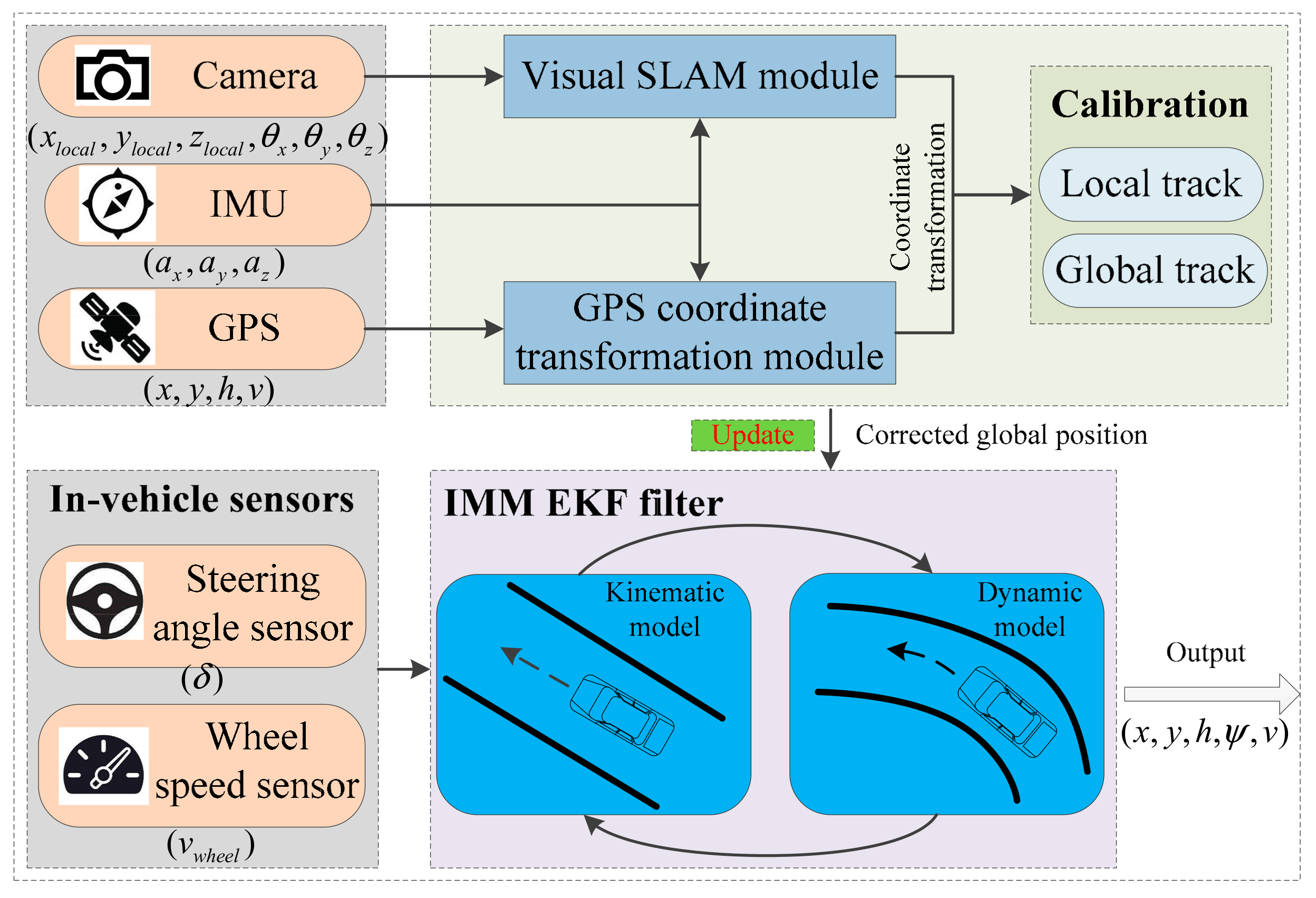

3. Proposed Fusion Positioning Strategy

3.1. Vehicle Modelling

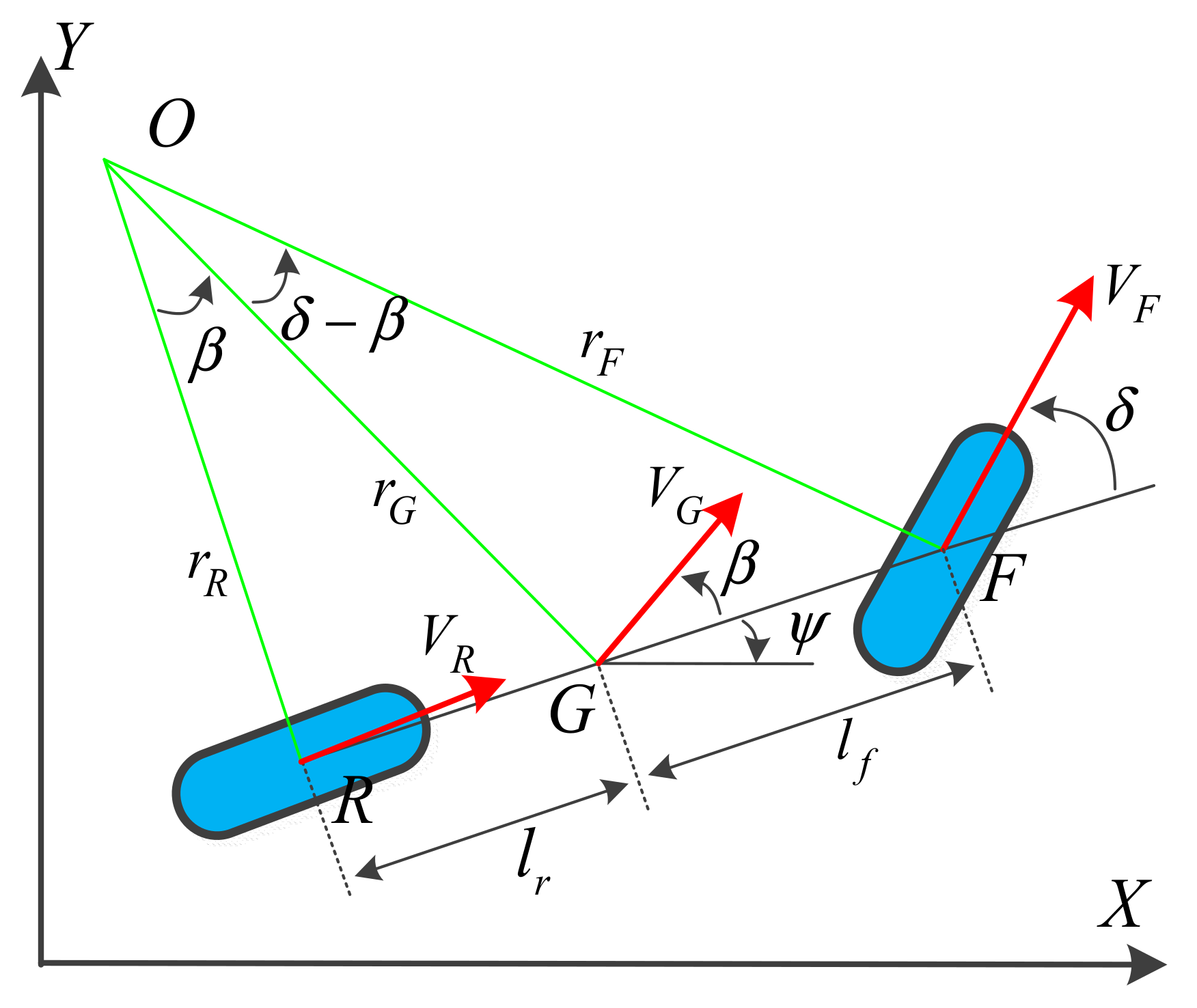

3.1.1. Kinematic Vehicle Model
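
A kinematic model propagates the vehicle pose from speed and steering alone, with no tyre forces. One widely used form is the kinematic single-track (bicycle) model. A minimal sketch of it follows, assuming front-wheel steering angle `delta` and speed `v` as inputs, the centre-of-gravity distances a = 1.04 m and b = 1.62 m from the vehicle parameter table, and forward-Euler integration. The names `Pose`, `slip_angle` and `kinematic_step` are hypothetical. Whether the authors use exactly this form is an assumption.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float    # east position of the centre of gravity [m]
    y: float    # north position of the centre of gravity [m]
    psi: float  # heading (yaw) angle [rad]


A_FRONT = 1.04  # distance from centre of gravity to front wheel, a [m]
B_REAR = 1.62   # distance from centre of gravity to rear wheel, b [m]


def slip_angle(delta: float) -> float:
    """Body slip angle at the centre of gravity for a front-steered kinematic bicycle."""
    return math.atan(B_REAR / (A_FRONT + B_REAR) * math.tan(delta))


def kinematic_step(pose: Pose, v: float, delta: float, dt: float) -> Pose:
    """Advance the pose by one forward-Euler step of length dt (assumed integrator)."""
    beta = slip_angle(delta)
    return Pose(
        x=pose.x + v * math.cos(pose.psi + beta) * dt,
        y=pose.y + v * math.sin(pose.psi + beta) * dt,
        psi=pose.psi + v / B_REAR * math.sin(beta) * dt,  # yaw rate = (v / b) sin(beta)
    )
```

The yaw-rate term (v/b)·sin β equals v·cos β·tan δ/(a + b). This is the familiar wheelbase form, written here in terms of the slip angle.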

3.1.2. Dynamic Vehicle Model

3.2. V-SLAM Algorithm

3.2.1. ISVD Algorithm

| Algorithm 1: Calculation of degree of blurring using image singular value decomposition (ISVD). |
|---|
| Input: RGB image |
| Output: Degree of blurring of the image |
| Initialisation: |
| 1: Convert colour to grayscale |
| 2: Calculate the singular values of the grayscale image by singular value decomposition |
| 3: For in |
| 4: If |
| 5: |
| 6: End |
| 7: End |
| 8: Return the degree of blurring |
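
The sketch below uses one common SVD-based blur measure in the spirit of Algorithm 1. It treats the degree of blurring as the share of the singular-value sum captured by the `k` largest singular values. A sharp image spreads its energy over many singular values, whereas a blurred image concentrates it in a few. The top-`k` cutoff stands in for the test in Steps 3 to 6 and is an assumption. So are the function name `blur_degree`, the default `k = 10` and the BT.601 grayscale weights. NumPy is assumed.

```python
import numpy as np


def blur_degree(image: np.ndarray, k: int = 10) -> float:
    """Share of the singular-value sum held by the top-k singular values (hypothetical k)."""
    if image.ndim == 3:
        # Step 1: colour to grayscale with ITU-R BT.601 luma weights (assumed conversion)
        gray = image[..., :3].astype(np.float64) @ np.array([0.299, 0.587, 0.114])
    else:
        gray = image.astype(np.float64)
    # Step 2: singular values, returned in descending order
    s = np.linalg.svd(gray, compute_uv=False)
    total = s.sum()
    if total == 0.0:
        return 1.0  # an all-black image carries no detail; treat it as fully blurred
    # Assumed stand-in for Steps 3-6: accumulate the k largest singular values
    return float(s[:k].sum() / total)
```

Values near 1 indicate a blurred frame, which could then be skipped before feature extraction. The threshold for "too blurred" would need tuning.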

3.2.2. SFFSD Algorithm

| Algorithm 2: Statistic filtering of feature displacement |
|---|
| Input: Candidate matched images |
| Output: Good feature matches |
| 1: Detect features in both images to obtain their descriptors and key points |
| 2: Match the descriptors to obtain the original matches, using a brute-force matcher with Hamming distance |
| 3: Calculate the key-point displacement of each original match in its x and y components |
| 4: Create a 2D histogram of the x and y displacements and take its highest bin as an approximation of the mode |
| 5: Use the samples within the radius around the mode to estimate the parameters of the Laplacian distribution in x and y |
| 6: Assuming a Laplacian distribution, determine the minimum and maximum boundary values that include a given proportion of inliers, ratio = 0.9 |
| 7: Find the matches that lie within the boundary from Step 6 |
| 8: Repeat Steps 6 and 7 for the other displacement component to find the matches |
| 9: Calculate the common elements of the two match sets |
| 10: For in |
| 11: If in |
| 12: Push back the corresponding element into |
| 13: End |
| 14: End |
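
Steps 3 to 9 of Algorithm 2 can be sketched with NumPy as follows. The sketch assumes the per-match displacements `dx` and `dy` have already been computed from the brute-force Hamming matches of Steps 1 and 2 (for example with OpenCV ORB features and `BFMatcher`, not shown). Location and scale are fitted with the maximum-likelihood estimators of a Laplacian: the median and the mean absolute deviation. The boundary follows from P(|X - mu| <= h) = 1 - exp(-h/b), so h = -b ln(1 - ratio). The bin count, the radius value and the function names are hypothetical choices.

```python
import numpy as np


def laplace_interval(d, centre, radius, ratio):
    """Fit a Laplacian to samples near `centre`; return the interval holding `ratio` of its mass."""
    near = d[np.abs(d - centre) <= radius]
    if near.size == 0:  # fall back to all samples if the radius is too tight
        near = d
    mu = np.median(near)              # maximum-likelihood location of a Laplacian
    b = np.mean(np.abs(near - mu))    # maximum-likelihood scale of a Laplacian
    h = -b * np.log(1.0 - ratio)      # half-width so that P(|X - mu| <= h) = ratio
    return mu - h, mu + h


def sffsd_filter(dx, dy, bins=50, radius=5.0, ratio=0.9):
    """Return indices of matches whose x and y displacements both fall inside the intervals."""
    dx = np.asarray(dx, dtype=float)
    dy = np.asarray(dy, dtype=float)
    # Step 4: the highest bin of the 2D displacement histogram approximates the mode
    hist, x_edges, y_edges = np.histogram2d(dx, dy, bins=bins)
    ix, iy = np.unravel_index(np.argmax(hist), hist.shape)
    mode_x = 0.5 * (x_edges[ix] + x_edges[ix + 1])
    mode_y = 0.5 * (y_edges[iy] + y_edges[iy + 1])
    # Steps 5-8: per-component Laplacian fit and boundary test
    lo_x, hi_x = laplace_interval(dx, mode_x, radius, ratio)
    lo_y, hi_y = laplace_interval(dy, mode_y, radius, ratio)
    in_x = (dx >= lo_x) & (dx <= hi_x)
    in_y = (dy >= lo_y) & (dy <= hi_y)
    # Step 9 onward: keep only matches accepted in both components
    return np.flatnonzero(in_x & in_y)
```

Fitting on the radius-truncated sample slightly underestimates the scale when the radius is small relative to the spread. A radius of several scale lengths keeps this effect negligible.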

3.3. V-SLAM Track and GPS Track Calibration

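| Algorithm 3: Improved weighted iterative closest point (ICP) |
|---|
| Input: V-SLAM track $P = \{p_1, \dots, p_n\}$, GPS track $Q = \{q_1, \dots, q_n\}$, weights on timestamps $w_1, \dots, w_n$ |
| Output: Rotation matrix $R$ and translation vector $t$ that minimise $\sum_{i=1}^{n} w_i \lVert (R p_i + t) - q_i \rVert^2$ |
| 1: Centroids $\bar{p} = \frac{\sum_{i=1}^{n} w_i p_i}{\sum_{i=1}^{n} w_i}$, $\bar{q} = \frac{\sum_{i=1}^{n} w_i q_i}{\sum_{i=1}^{n} w_i}$ |
| 2: Centred vectors $x_i = p_i - \bar{p}$, $y_i = q_i - \bar{q}$, $i = 1, \dots, n$ |
| 3: Covariance matrix $S = X W Y^{\top}$, where $X$ and $Y$ have $x_i$ and $y_i$ as columns, respectively, and $W = \operatorname{diag}(w_1, \dots, w_n)$ is a diagonal matrix with $w_i$ on the diagonal |
| 4: Singular value decomposition $S = U \Sigma V^{\top}$ |
| 5: $R = V \operatorname{diag}\left(1, \dots, 1, \det(V U^{\top})\right) U^{\top}$ and $t = \bar{q} - R \bar{p}$ |
| 6: V-SLAM track after calibration in global coordinates: $p_i' = R p_i + t$ |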
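
Steps 1 to 5 of Algorithm 3 follow the standard weighted SVD solution for the rigid transform between corresponding point sets. The V-SLAM and GPS tracks are paired by timestamp, so correspondences are known and a single SVD step suffices. A minimal NumPy sketch follows, assuming both tracks are given as n x d arrays of time-aligned positions. The function name `weighted_rigid_align` is hypothetical.

```python
import numpy as np


def weighted_rigid_align(P, Q, w):
    """Return R, t minimising sum_i w_i * ||R p_i + t - q_i||^2 for paired rows of P and Q."""
    P = np.asarray(P, dtype=float)  # V-SLAM positions, shape (n, d)
    Q = np.asarray(Q, dtype=float)  # GPS positions, shape (n, d)
    w = np.asarray(w, dtype=float)  # per-timestamp weights, shape (n,)
    # Step 1: weighted centroids
    p_bar = w @ P / w.sum()
    q_bar = w @ Q / w.sum()
    # Step 2: centred vectors stored as columns (d x n)
    X = (P - p_bar).T
    Y = (Q - q_bar).T
    # Step 3: weighted covariance S = X W Y^T
    S = X @ np.diag(w) @ Y.T
    # Step 4: SVD, S = U Sigma V^T
    U, _, Vt = np.linalg.svd(S)
    V = Vt.T
    # Step 5: rotation with reflection correction, then translation
    D = np.eye(P.shape[1])
    D[-1, -1] = np.linalg.det(V @ U.T)
    R = V @ D @ U.T
    t = q_bar - R @ p_bar
    return R, t
```

Step 6 then amounts to `P @ R.T + t`. The determinant entry in `D` keeps the result a proper rotation rather than a reflection. A zero weight removes a timestamp, such as a GPS outage, from the fit entirely.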

3.4. Interacting Multiple Model (IMM) Filter

3.4.1. Interaction

3.4.2. Extended Kalman Filter
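
A generic extended Kalman filter predict/update cycle is sketched below under stated assumptions. The process model `f` and measurement model `h` are passed in as functions. Their Jacobians are approximated by forward finite differences rather than derived analytically. The noise covariances `Qn` and `Rn` are hypothetical tuning inputs. With a vehicle model as `f` and a GPS position fix as the measurement, this is the kind of step each model branch of an IMM filter runs. The innovation and its covariance are returned because the IMM model-probability update needs them.

```python
import numpy as np


def numerical_jacobian(func, x, eps=1e-6):
    """Forward-difference Jacobian of func at x (assumed approximation, not analytic)."""
    fx = np.asarray(func(x), dtype=float)
    J = np.zeros((fx.size, x.size))
    for j in range(x.size):
        step = np.zeros_like(x)
        step[j] = eps
        J[:, j] = (np.asarray(func(x + step), dtype=float) - fx) / eps
    return J


def ekf_step(x, P, z, f, h, Qn, Rn):
    """One EKF cycle; returns the updated state, covariance, innovation and innovation covariance."""
    x = np.asarray(x, dtype=float)
    # Predict with the linearised process model
    F = numerical_jacobian(f, x)
    x_pred = np.asarray(f(x), dtype=float)
    P_pred = F @ P @ F.T + Qn
    # Update with the linearised measurement model
    H = numerical_jacobian(h, x_pred)
    nu = np.asarray(z, dtype=float) - np.asarray(h(x_pred), dtype=float)  # innovation
    S = H @ P_pred @ H.T + Rn                                              # innovation covariance
    K = P_pred @ H.T @ np.linalg.inv(S)                                    # Kalman gain
    x_new = x_pred + K @ nu
    P_new = (np.eye(x.size) - K @ H) @ P_pred
    return x_new, P_new, nu, S
```

For a linear model the numerical Jacobians reproduce the system matrices, so the cycle reduces to the ordinary Kalman filter.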

3.4.3. Model Probability Update

3.4.4. Estimation Fusion
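
The sketch below implements the textbook IMM steps named in Sections 3.4.1, 3.4.3 and 3.4.4: interaction (mixing), model probability update and estimation fusion. The per-model extended Kalman filter is treated as a black box that supplies each model's innovation and innovation covariance. The sketch assumes both vehicle models are expressed in one shared state vector and that the Markov transition matrix `Pi` is a tuning input. All names are hypothetical, and this is not the authors' implementation.

```python
import numpy as np


def imm_mix(mu, Pi, xs, Ps):
    """Interaction: mixing probabilities and mixed initial conditions for each model j.

    mu: (M,) model probabilities; Pi[i, j] = P(model j at k | model i at k-1);
    xs, Ps: per-model states (n,) and covariances (n, n) in a shared state space.
    """
    c_bar = Pi.T @ mu                          # predicted model probabilities c_j
    mix = (Pi * mu[:, None]) / c_bar[None, :]  # mix[i, j] = mu_{i|j}
    x0, P0 = [], []
    for j in range(len(mu)):
        xj = sum(mix[i, j] * xs[i] for i in range(len(mu)))
        Pj = sum(mix[i, j] * (Ps[i] + np.outer(xs[i] - xj, xs[i] - xj)) for i in range(len(mu)))
        x0.append(xj)
        P0.append(Pj)
    return c_bar, x0, P0


def gaussian_likelihood(nu, S):
    """Likelihood of the innovation nu under a zero-mean Gaussian with covariance S."""
    return float(np.exp(-0.5 * nu @ np.linalg.solve(S, nu)) / np.sqrt(np.linalg.det(2.0 * np.pi * S)))


def imm_update_and_fuse(c_bar, likelihoods, xs, Ps):
    """Model probability update followed by moment-matched estimation fusion."""
    mu = c_bar * np.asarray(likelihoods, dtype=float)
    mu = mu / mu.sum()
    x = sum(m * xj for m, xj in zip(mu, xs))
    P = sum(m * (Pj + np.outer(xj - x, xj - x)) for m, xj, Pj in zip(mu, xs, Ps))
    return mu, x, P
```

In one filter cycle, `imm_mix` runs first. Each model's EKF then runs from its mixed state. Finally `imm_update_and_fuse` runs on the filtered states with each model's innovation likelihood. The model whose innovations fit the data better gains probability and dominates the fused estimate.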

4. Experiment and Results

4.1. Simulation

4.2. Benchmark Dataset

4.3. Real Data

4.3.1. Bad GPS Conditions

4.3.2. Short-Distance Trajectory with Different Driving Behaviours

4.3.3. Long-Distance Trajectory in a Cluttered Environment

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Parameter | Symbol | Value | Unit |
|---|---|---|---|
| Vehicle mass | m | 1395 | kg |
| Yaw moment of inertia | Iz | 4192 | kg·m² |
| Distance from the centre of gravity G to the front wheel F | a | 1.04 | m |
| Distance from the centre of gravity G to the rear wheel R | b | 1.62 | m |
| Height of the centre of gravity | H | 0.54 | m |
| Wheel track | d | 1.52 | m |
| Rolling resistance coefficient | f | 0.02 | - |
| Rolling radius of the tyres | r | 0.335 | m |
| Frontal area | A | 1.8 | m² |
| Air resistance coefficient | CD | 0.343 | - |
| Air density | ρ | 1.206 | kg/m³ |
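
The sketch below illustrates how the longitudinal parameters in the table enter a dynamic model. It evaluates rolling resistance f·m·g and aerodynamic drag ½·ρ·CD·A·v² at a given speed. It then converts the total road load into the equivalent wheel torque through the rolling radius r. This is standard road-load physics chosen for illustration. Gravity g = 9.81 m/s², the flat-road assumption and the function names are assumptions, not definitions from the paper.

```python
from dataclasses import dataclass

G = 9.81  # gravitational acceleration [m/s^2] (assumed)


@dataclass(frozen=True)
class VehicleParams:
    m: float = 1395.0   # vehicle mass [kg]
    f: float = 0.02     # rolling resistance coefficient [-]
    r: float = 0.335    # rolling radius of the tyres [m]
    A: float = 1.8      # frontal area [m^2]
    CD: float = 0.343   # air resistance coefficient [-]
    rho: float = 1.206  # air density [kg/m^3]


def rolling_resistance(p: VehicleParams) -> float:
    """Rolling resistance force f*m*g on a flat road [N]."""
    return p.f * p.m * G


def aero_drag(p: VehicleParams, v: float) -> float:
    """Aerodynamic drag 0.5*rho*CD*A*v^2 at speed v [m/s], in still air [N]."""
    return 0.5 * p.rho * p.CD * p.A * v * v


def road_load_torque(p: VehicleParams, v: float) -> float:
    """Wheel torque [N·m] that balances rolling resistance and drag at constant speed v."""
    return (rolling_resistance(p) + aero_drag(p, v)) * p.r
```

At 20 m/s these parameters give about 274 N of rolling resistance and about 149 N of aerodynamic drag.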

| Scenario | No. | Duration (s) | Distance (m), OXTS RT3003/Proposed Method | RMSE (m) |
|---|---|---|---|---|
| Residential | Figure 12 | 593.24 | 3734.375/3727.332 | 0.645 |
| | Figure 13 | 584.79 | 5066.272/5051.748 | 1.265 |
| | Figure 14 | 67.08 | 392.705/391.232 | 0.959 |
| City | Figure 15 | 130.90 | 341.491/333.710 | 1.868 |
| | Figure 16 | 48.61 | 401.604/397.509 | 0.858 |
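
The Distance and RMSE columns can be computed from time-aligned trajectories. A minimal sketch follows. It assumes the estimated and reference (OXTS RT3003) positions are already synchronised epoch by epoch and expressed in the same local metric frame. The paper's exact synchronisation and error definition are not stated in the tables, and the function names are hypothetical.

```python
import numpy as np


def path_length(track):
    """Travelled distance: sum of Euclidean steps between consecutive positions (n x d)."""
    track = np.asarray(track, dtype=float)
    return float(np.linalg.norm(np.diff(track, axis=0), axis=1).sum())


def position_rmse(estimate, reference):
    """Root-mean-square of the per-epoch Euclidean position error."""
    err = np.asarray(estimate, dtype=float) - np.asarray(reference, dtype=float)
    return float(np.sqrt(np.mean(np.sum(err ** 2, axis=1))))
```

Under this definition, a 1 m error at every epoch gives an RMSE of 1 m regardless of the error direction.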

| No. | Duration (s) | Method | Distance (m) | RMSE (m) |
|---|---|---|---|---|
| Figure 17 | 264.796 | GPS | 1685.755 | 13.137 |
| | | GPS + IMU | 1582.860 | 4.880 |
| | | GPS + IMU + CAN | 1589.760 | - |
| | | Proposed method | 1570.616 | 1.564 |
| Figure 18 | 158.069 | GPS | 1066.827 | 73.160 |
| | | GPS + IMU | 1045.851 | 1.765 |
| | | GPS + IMU + CAN | 1044.588 | - |
| | | Proposed method | 1038.825 | 1.229 |

| No. | Duration (s) | Method | Distance (m) | RMSE (m) |
|---|---|---|---|---|
| Figure 19 | 76.105 | GPS | 218.940 | 7.105 |
| | | GPS + IMU | 216.019 | 4.657 |
| | | GPS + IMU + CAN | 218.946 | - |
| | | Proposed method | 213.382 | 2.341 |
| Figure 20 | 60.885 | GPS | 136.538 | 1.491 |
| | | GPS + IMU | 142.303 | 1.139 |
| | | GPS + IMU + CAN | 131.749 | - |
| | | Proposed method | 130.997 | 0.941 |

| No. | Duration (s) | Method | Distance (m) | RMSE (m) |
|---|---|---|---|---|
| Figure 21 | 2843.571 | GPS | 21,371.167 | 180.517 |
| | | GPS + IMU | 21,255.434 | 11.436 |
| | | GPS + IMU + CAN | 21,237.723 | - |
| | | Proposed method | 21,212.237 | 1.165 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Min, H.; Wu, X.; Cheng, C.; Zhao, X. Kinematic and Dynamic Vehicle Model-Assisted Global Positioning Method for Autonomous Vehicles with Low-Cost GPS/Camera/In-Vehicle Sensors. Sensors 2019, 19, 5430. https://doi.org/10.3390/s19245430
