Gaze and Eye Tracking: Techniques and Applications in ADAS

Abstract

:1. Introduction

1.1. Background and Motivation

1.2. Contribution and Organization

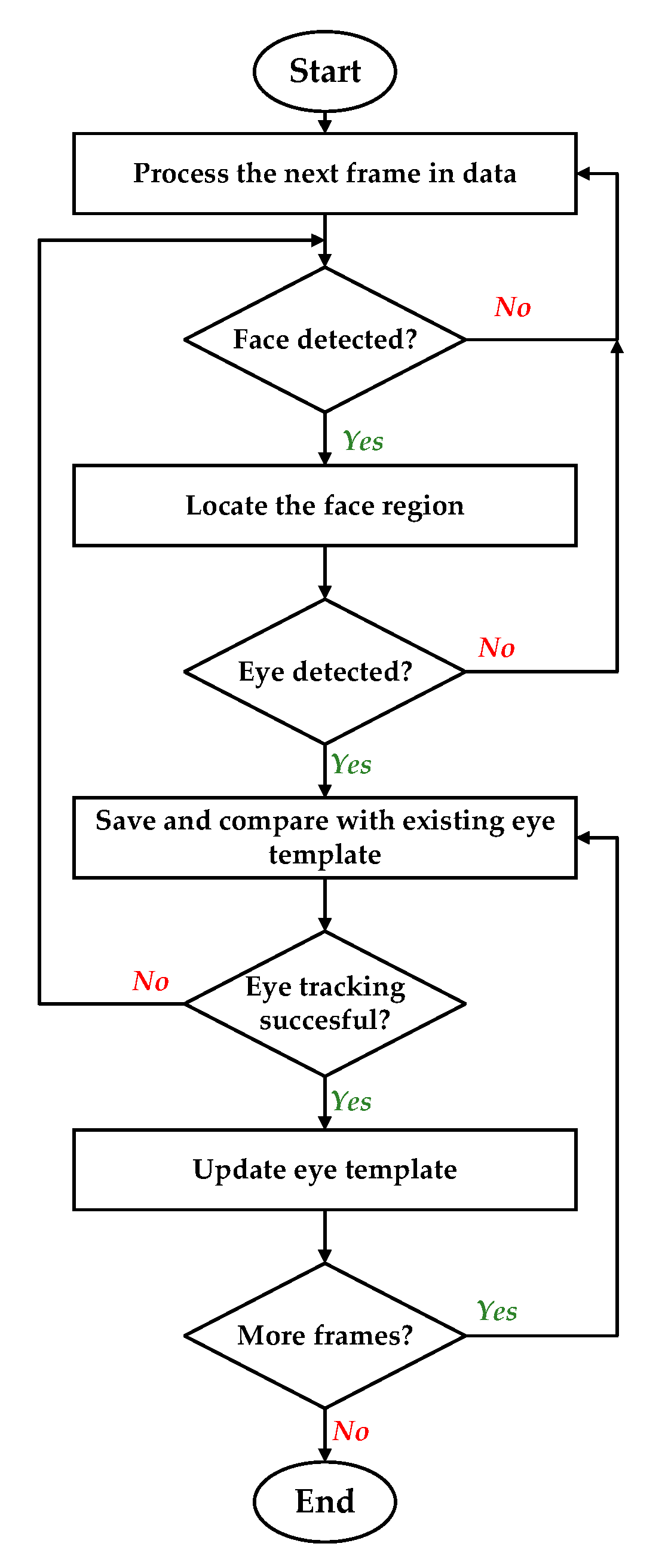

2. Eye Tracking

2.1. Introduction

2.2. Shape-Based Techniques

2.2.1. Elliptical Eye Models

2.2.2. Complex Shape Models

2.3. Feature-Based Techniques

2.3.1. Local Features

2.3.2. Filter Response

2.3.3. Detection of Iris and Pupil

2.4. Appearance-Based Techniques

2.5. Hybrid Models and Other Techniques

2.6. Discussion

3. Gaze Tracking

3.1. Introduction

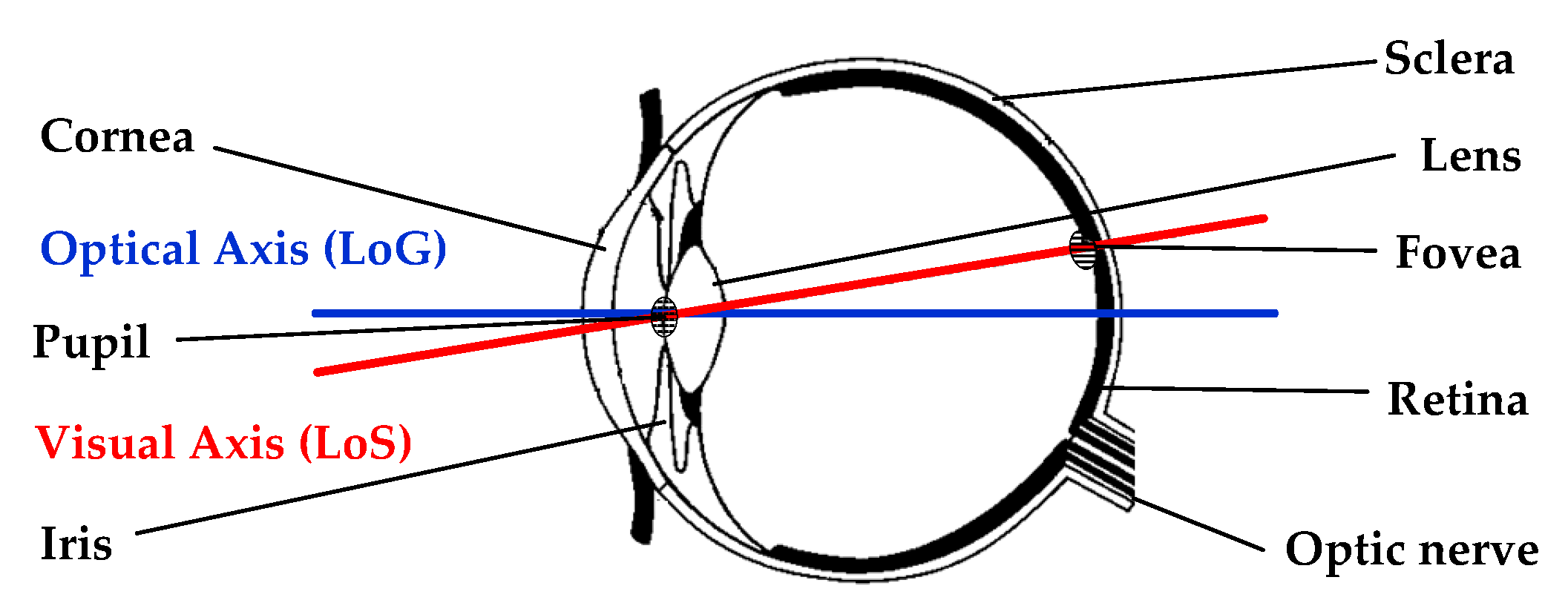

- Calibration of geometric configuration of the setup is necessary to determine the relative orientations and locations of various devices (e.g., light sources and cameras).

- Calibration associated with individuals is carried out to estimate corneal curvature—the angular offset between optical and visual axes.

- Calibration of eye-gaze mapping functions according to the applied method.

- Calibration of the camera is performed to incorporate the inherent parameters of the camera.

3.2. Feature-Based Techniques

3.2.1. Model-Based Techniques

3.2.2. Interpolation-Based Techniques

3.3. Other Techniques

3.3.1. Appearance-Based Techniques

3.3.2. Visible Light-Based Techniques

3.4. Discussion

4. Applications in ADAS

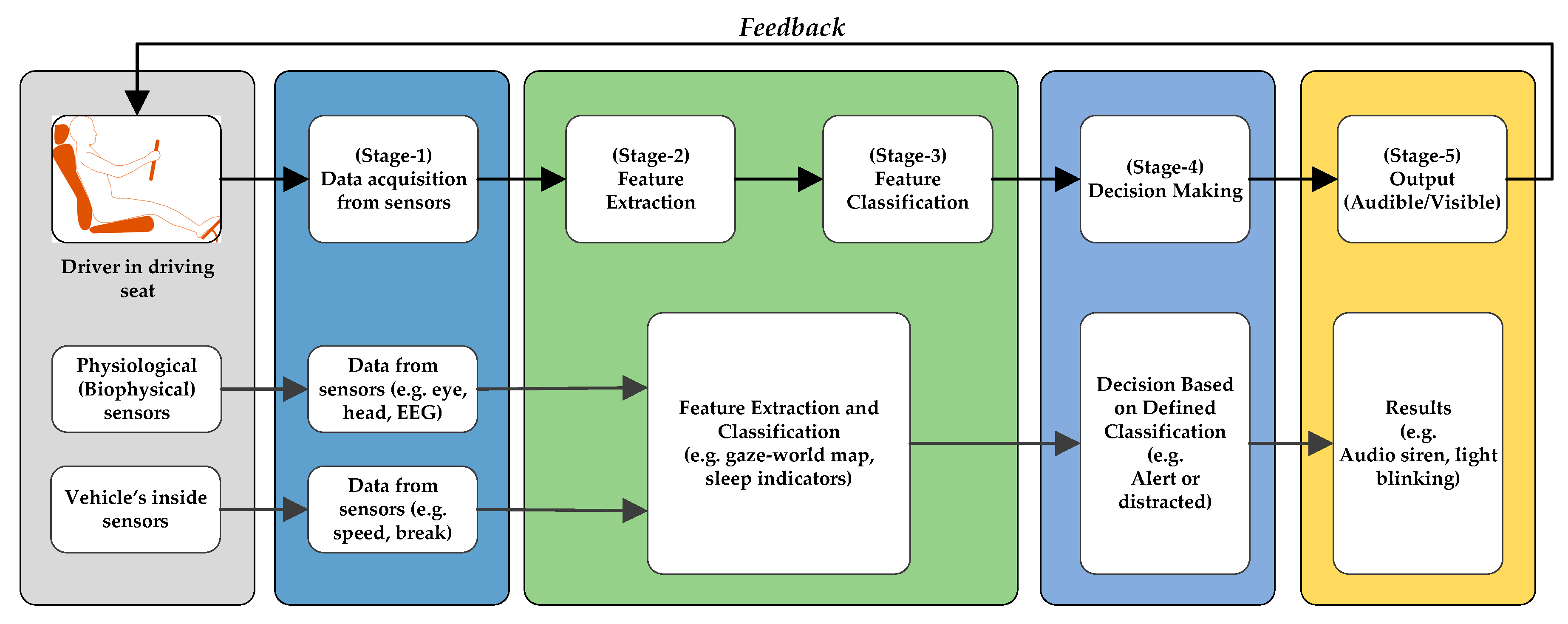

4.1. Introduction

4.2. Driving Process and Associated Challenges

- Visual distraction (taking the eyes off the road);

- Physical distraction (e.g., hands off the steering wheel);

- Cognitive distraction (e.g., mind off the duty of driving);

- Auditory distraction (e.g., taking ears off of the auditory signals and honks).

4.3. Visual Distraction and Driving Performance

4.3.1. Lateral Control

4.3.2. Reaction Time

4.3.3. Speed

4.4. Measurement Approaches

- PERCLOS: It is a measure of percentage of eye closure. It corresponds to the percentage of time during a one-minute period for which the eyes remain at least 70% or 80% closed.

- Percentage eyes >70% closed (PERCLOS70).

- Percentage eyes >80% closed (PERCLOS80).

- PERCLOS70 baselined.

- PERCLOS80 baselined.

- Blink Amplitude: Blink amplitude is the measure of electric voltage during a blink. Its typical value ranges from 100 to 400 μV.

- Amplitude/velocity ratio (APVC).

- APCV with regression.

- Energy of blinking (EC).

- EC baselined.

- Blink Duration: It is the total time from the start to the end of a blink. It is typically measured in the units of milliseconds. A challenge associated with blink behavior-based drowsiness detection techniques is the individually-dependent nature of the measure. For instance, some people blink more frequently in wakeful conditions or some persons’ eyes remain slightly open even in sleepy conditions. So, personal calibration is a prerequisite to apply these techniques.

- Blink Frequency: Blink frequency is the number of blinks per minute. An increased blink frequency is typically associated with the onset of sleep.

- Lid Reopening Delay: It is measure of the time from fully closed eyelids to the start of their reopening. Its value is in the range of few milliseconds for an awake person; it increases for a drowsy person; and is prolonged to several hundred milliseconds for a person undergoing a microsleep.

- Microsleep event 0.5 sec rate.

- Microsleep event 1.0 sec rate.

- Mean square eye closure.

- Mean eye closure.

- Average eye closure speed.

4.5. Data Processing Algorithms

- Difference between the maximum and minimum value of the data;

- Standard deviation of the data;

- Root mean square value of the data;

- Duration of the signal data;

- Maximum difference between any two consecutive values;

- Median of the data;

- Mean of the data;

- Maximum value of the data;

- Minimum value of the data;

- Amplitude of the difference between the first value and the last value;

- Difference between the max and min value of the differential of data.

4.6. Application in Modern Vehicles

5. Summary and Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Omer, Y.; Sapir, R.; Hatuka, Y.; Yovel, G. What Is a Face? Critical Features for Face Detection. Perception 2019, 48, 437–446. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Cho, S.W.; Baek, N.R.; Kim, M.C.; Koo, J.H.; Kim, J.H.; Park, K.R. Face Detection in Nighttime Images Using Visible-Light Camera Sensors with Two-Step Faster Region-Based Convolutional Neural Network. Sensors 2018, 18, 2995. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Bozomitu, R.G.; Păsărică, A.; Tărniceriu, D.; Rotariu, C. Development of an Eye Tracking-Based Human-Computer Interface for Real-Time Applications. Sensors 2019, 19, 3630. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Cornia, M.; Baraldi, L.; Serra, G.; Cucchiara, R. Predicting Human Eye Fixations via an LSTM-Based Saliency Attentive Model. IEEE Trans. Image Process. 2018, 27, 5142–5154. [Google Scholar]

- Huey, E.B. The Psychology and Pedagogy of Reading; The Macmillan Company: New York, NY, USA, 1908. [Google Scholar]

- Buswell, G.T. Fundamental Reading Habits: A Study of Their Development; American Psychological Association: Worcester, MA, USA, 1922. [Google Scholar]

- Buswell, G.T. How People Look at Pictures: A Study of the Psychology and Perception in Art; American Psychological Association: Worcester, MA, USA, 1935. [Google Scholar]

- Yarbus, A.L. Eye Movements and Vision; Springer: Berlin/Heidelberg, Germany, 2013. [Google Scholar]

- Rayner, K. Eye movements in reading and information processing. Psychol. Bull. 1978, 85, 618. [Google Scholar] [CrossRef]

- Wright, R.D.; Ward, L.M. Orienting of Attention; Oxford University Press: Oxford, UK, 2008. [Google Scholar]

- Posner, M.I. Orienting of attention. Q. J. Exp. Psychol. 1980, 32, 3–25. [Google Scholar] [CrossRef]

- Carpenter, P.A.; Just, M.A. What your eyes do while your mind is reading. In Eye Movements in Reading; Elsevier: Amsterdam, The Netherlands, 1983; pp. 275–307. [Google Scholar]

- Jacob, R.J.; Karn, K.S. Eye tracking in human-computer interaction and usability research: Ready to deliver the promises. In The Mind’s Eye; Elsevier: Amsterdam, The Netherlands, 2003; pp. 573–605. [Google Scholar]

- Aleem, I.S.; Vidal, M.; Chapeskie, J. Systems, Devices, and Methods for Laser Eye Tracking. U.S. Patent Application No. 9,904,051, 27 February 2018. [Google Scholar]

- Naqvi, R.A.; Arsalan, M.; Batchuluun, G.; Yoon, H.S.; Park, K.R. Deep Learning-Based Gaze Detection System for Automobile Drivers Using a NIR Camera Sensor. Sensors 2018, 18, 456. [Google Scholar] [CrossRef] [Green Version]

- Swaminathan, A.; Ramachandran, M. Enabling Augmented Reality Using Eye Gaze Tracking. U.S. Patent Application No. 9,996,15, 12 June 2018. [Google Scholar]

- Vicente, F.; Huang, Z.; Xiong, X.; De la Torre, F.; Zhang, W.; Levi, D. Driver gaze tracking and eyes off the road detection system. IEEE Trans. Intell. Transp. Syst. 2015, 16, 2014–2027. [Google Scholar] [CrossRef]

- Massé, B.; Ba, S.; Horaud, R. Tracking gaze and visual focus of attention of people involved in social interaction. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 2711–2724. [Google Scholar] [CrossRef] [Green Version]

- Ramirez Gomez, A.; Lankes, M. Towards Designing Diegetic Gaze in Games: The Use of Gaze Roles and Metaphors. Multimodal Technol. Interact. 2019, 3, 65. [Google Scholar] [CrossRef] [Green Version]

- World Health Organization. Global Status Report on Road Safety 2013; WHO: Geneva, Switzerland, 2015. [Google Scholar]

- World Health Organization. World Report on Road Traffic Injury Prevention; WHO: Geneva, Switzerland, 2014. [Google Scholar]

- World Health Organization. Global Status Report on Road Safety: Time for Action; WHO: Geneva, Switzerland, 2009. [Google Scholar]

- Bayly, M.; Fildes, B.; Regan, M.; Young, K. Review of crash effectiveness of intelligent transport systems. Emergency 2007, 3, 14. [Google Scholar]

- Society of Automotive Engineers. Operational Definitions of Driving Performance Measures and Statistics; Society of Automotive Engineers: Warrendale, PA, USA, 2015. [Google Scholar]

- Kiefer, P.; Giannopoulos, I.; Raubal, M.; Duchowski, A. Eye tracking for spatial research: Cognition, computation, challenges. Spat. Cogn. Comput. 2017, 17, 1–19. [Google Scholar] [CrossRef]

- Topolšek, D.; Areh, I.; Cvahte, T. Examination of driver detection of roadside traffic signs and advertisements using eye tracking. Transp. Res. F Traffic Psychol. Behav. 2016, 43, 212–224. [Google Scholar] [CrossRef]

- Hansen, D.W.; Pece, A.E.C. Eye tracking in the wild. Comput. Vis. Image Underst. 2005, 98, 155–181. [Google Scholar] [CrossRef]

- Daugman, J. The importance of being random: Statistical principles of iris recognition. Pattern Recognit. 2003, 36, 279–291. [Google Scholar] [CrossRef] [Green Version]

- Young, D.; Tunley, H.; Samuels, R. Specialised Hough Transform and Active Contour Methods for Real-Time Eye Tracking; University of Sussex, Cognitive & Computing Science: Brighton, UK, 1995. [Google Scholar]

- Kyung-Nam, K.; Ramakrishna, R.S. Vision-based eye-gaze tracking for human computer interface. In Proceedings of the IEEE SMC’99 Conference Proceedings, IEEE International Conference on Systems, Man, and Cybernetics, Tokyo, Japan, 12–15 October 1999; pp. 324–329. [Google Scholar]

- Peréz, A.; Córdoba, M.L.; Garcia, A.; Méndez, R.; Munoz, M.; Pedraza, J.L.; Sanchez, F. A Precise Eye-Gaze Detection and Tracking System; Union Agency: Leeds, UK, 2003. [Google Scholar]

- Comaniciu, D.; Ramesh, V.; Meer, P. Kernel-based object tracking. IEEE Trans. Pattern Anal. Mach. Intell. 2003, 25, 564–577. [Google Scholar] [CrossRef] [Green Version]

- Locher, P.J.; Nodine, C.F. Symmetry Catches the Eye. In Eye Movements from Physiology to Cognition; O’Regan, J.K., Levy-Schoen, A., Eds.; Elsevier: Amsterdam, The Netherlands, 1987; pp. 353–361. [Google Scholar]

- Dongheng, L.; Winfield, D.; Parkhurst, D.J. Starburst: A hybrid algorithm for video-based eye tracking combining feature-based and model-based approaches. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05)-Workshops, San Diego, CA, USA, 21–23 September 2005; p. 79. [Google Scholar]

- Yuille, A.L.; Hallinan, P.W.; Cohen, D.S. Feature extraction from faces using deformable templates. Int. J. Comput. Vision 1992, 8, 99–111. [Google Scholar] [CrossRef]

- Kin-Man, L.; Hong, Y. Locating and extracting the eye in human face images. Pattern Recognit. 1996, 29, 771–779. [Google Scholar] [CrossRef]

- Edwards, G.J.; Cootes, T.F.; Taylor, C.J. Face recognition using active appearance models. In European Conference on Computer Vision—ECCV’98; Springer: Berlin/Heidelberg, Germany, 1998; pp. 581–595. [Google Scholar]

- Heo, H.; Lee, W.O.; Shin, K.Y.; Park, K.R. Quantitative Measurement of Eyestrain on 3D Stereoscopic Display Considering the Eye Foveation Model and Edge Information. Sensors 2014, 14, 8577–8604. [Google Scholar] [CrossRef] [Green Version]

- Zhang, L. Estimation of eye and mouth corner point positions in a knowledge-based coding system. In Proceedings of the Digital Compression Technologies and Systems for Video Communications, Berlin, Germany, 16 September 1996; pp. 21–28. [Google Scholar]

- Kampmann, M.; Zhang, L. Estimation of eye, eyebrow and nose features in videophone sequences. In Proceedings of the International Workshop on Very Low Bitrate Video Coding (VLBV 98), Urbana, IL, USA, 8–9 October 1998; pp. 101–104. [Google Scholar]

- Chow, G.; Li, X. Towards a system for automatic facial feature detection. Pattern Recognit. 1993, 26, 1739–1755. [Google Scholar] [CrossRef]

- Herpers, R.; Michaelis, M.; Lichtenauer, K.; Sommer, G. Edge and keypoint detection in facial regions. In Proceedings of the Second International Conference on Automatic Face and Gesture Recognition, Killington, VT, USA, 14–16 October 1996; pp. 212–217. [Google Scholar]

- Li, B.; Fu, H.; Wen, D.; Lo, W. Etracker: A Mobile Gaze-Tracking System with Near-Eye Display Based on a Combined Gaze-Tracking Algorithm. Sensors 2018, 18, 1626. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Vincent, J.M.; Waite, J.B.; Myers, D.J. Automatic location of visual features by a system of multilayered perceptrons. IEE Proc. F Radar Signal Process. 1992, 139, 405–412. [Google Scholar] [CrossRef]

- Reinders, M.J.T.; Koch, R.W.C.; Gerbrands, J.J. Locating facial features in image sequences using neural networks. In Proceedings of the Second International Conference on Automatic Face and Gesture Recognition, Killington, VT, USA, 14–16 October 1996; pp. 230–235. [Google Scholar]

- Kawato, S.; Ohya, J. Two-step approach for real-time eye tracking with a new filtering technique. In Proceedings of the Smc Conference Proceedings, IEEE International Conference on Systems, Man and Cybernetics. ‘Cybernetics Evolving to Systems, Humans, Organizations, and Their Complex Interactions’ (Cat. No. 0), Nashville, TN, USA, 8–11 October 2000; pp. 1366–1371. [Google Scholar]

- Kawato, S.; Ohya, J. Real-time detection of nodding and head-shaking by directly detecting and tracking the “between-eyes”. In Proceedings of the Fourth IEEE International Conference on Automatic Face and Gesture Recognition, Grenoble, France, 28–30 March 2000; pp. 40–45. [Google Scholar]

- Kawato, S.; Tetsutani, N. Detection and tracking of eyes for gaze-camera control. Image Vis. Comput. 2004, 22, 1031–1038. [Google Scholar] [CrossRef]

- Kawato, S.; Tetsutani, N. Real-time detection of between-the-eyes with a circle frequency filter. In Proceedings of the 5th Asian Conference on Computer Vision (ACCV2002), Melbourne, Australia, 23–25 January 2002; pp. 442–447. [Google Scholar]

- Sirohey, S.; Rosenfeld, A.; Duric, Z. A method of detecting and tracking irises and eyelids in video. Pattern Recognit. 2002, 35, 1389–1401. [Google Scholar] [CrossRef]

- Sirohey, S.A.; Rosenfeld, A. Eye detection in a face image using linear and nonlinear filters. Pattern Recognit. 2001, 34, 1367–1391. [Google Scholar] [CrossRef]

- Yang, J.; Stiefelhagen, R.; Meier, U.; Waibel, A. Real-time face and facial feature tracking and applications. In Proceedings of the AVSP’98 International Conference on Auditory-Visual Speech Processing, Sydney Australia, 4–6 December 1998. [Google Scholar]

- Ying-li, T.; Kanade, T.; Cohn, J.F. Dual-state parametric eye tracking. In Proceedings of the Fourth IEEE International Conference on Automatic Face and Gesture Recognition, Grenoble, France, 28–30 March 2000; pp. 110–115. [Google Scholar]

- Lucas, B.D.; Kanade, T. An iterative image registration technique with an application to stereo vision. In Proceedings of the 7th International Joint Conference on Artificial Intelligence-Volume 2, Vancouver, BC, Canada, 24–28 August 1981; pp. 674–679. [Google Scholar]

- Weimin, H.; Mariani, R. Face detection and precise eyes location. In Proceedings of the 15th International Conference on Pattern Recognition, ICPR-2000, Barcelona, Spain, 3–7 September 2000; pp. 722–727. [Google Scholar]

- Samaria, F.; Young, S. HMM-based architecture for face identification. Image Vis. Comput. 1994, 12, 537–543. [Google Scholar] [CrossRef]

- Kovesi, P. Symmetry and asymmetry from local phase. In Proceedings of the Tenth Australian Joint Conference on Artificial Intelligence, Perth, Australia, 30 November–4 December 1997; pp. 2–4. [Google Scholar]

- Lin, C.-C.; Lin, W.-C. Extracting facial features by an inhibitory mechanism based on gradient distributions. Pattern Recognit. 1996, 29, 2079–2101. [Google Scholar] [CrossRef]

- Sela, G.; Levine, M.D. Real-Time Attention for Robotic Vision. Real Time Imaging 1997, 3, 173–194. [Google Scholar] [CrossRef] [Green Version]

- Grauman, K.; Betke, M.; Gips, J.; Bradski, G.R. Communication via eye blinks-detection and duration analysis in real time. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, CVPR 2001, Kauai, HI, USA, 8–14 December 2001. [Google Scholar]

- Crowley, J.L.; Berard, F. Multi-modal tracking of faces for video communications. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Juan, Puerto Rico, USA, 17–19 June 1997; pp. 640–645. [Google Scholar]

- Bala, L.-P. Automatic detection and tracking of faces and facial features in video sequences. In Proceedings of the Picture Coding Symposium, Berlin, Germany, 10–12 September 1997. [Google Scholar]

- Ishikawa, T. Passive Driver Gaze Tracking with Active Appearance Models. 2004. Available online: https://kilthub.cmu.edu/articles/Passive_driver_gaze_tracking_with_active_appearance_models/6557315/1 (accessed on 7 September 2019).

- Matsumoto, Y.; Zelinsky, A. An algorithm for real-time stereo vision implementation of head pose and gaze direction measurement. In Proceedings of the Fourth IEEE International Conference on Automatic Face and Gesture Recognition (Cat. No. PR00580), Grenoble, France, 28–30 March 2000; pp. 499–504. [Google Scholar]

- Xie, X.; Sudhakar, R.; Zhuang, H. On improving eye feature extraction using deformable templates. Pattern Recognit. 1994, 27, 791–799. [Google Scholar] [CrossRef]

- Feris, R.S.; de Campos, T.E.; Marcondes, R.C. Detection and Tracking of Facial Features in Video Sequences; Springer: Berlin/Heidelberg, Germany, 2000; pp. 127–135. [Google Scholar]

- Horng, W.-B.; Chen, C.-Y.; Chang, Y.; Fan, C.-H. Driver fatigue detection based on eye tracking and dynamic template matching. In Proceedings of the IEEE International Conference on Networking, Sensing and Control, Taipei, Taiwan, 21–23 March 2004; pp. 7–12. [Google Scholar]

- Stiefelhagen, R.; Yang, J.; Waibel, A. Tracking eyes and monitoring eye gaze. In Proceedings of the Workshop on Perceptual User Interfaces, Banff, AB, Canada, 20–21 October 1997; pp. 98–100. [Google Scholar]

- Hansen, D.W.; Hansen, J.P.; Nielsen, M.; Johansen, A.S.; Stegmann, M.B. Eye typing using Markov and active appearance models. In Proceedings of the Sixth IEEE Workshop on Applications of Computer Vision (WACV 2002), Orlando, FL, USA, 4 December 2002; pp. 132–136. [Google Scholar]

- Stiefelhagen, R.; Jie, Y.; Waibel, A. A model-based gaze tracking system. In Proceedings of the IEEE International Joint Symposia on Intelligence and Systems, Washington, DC, USA, 4–5 November 1996; pp. 304–310. [Google Scholar]

- Xie, X.; Sudhakar, R.; Zhuang, H. A cascaded scheme for eye tracking and head movement compensation. IEEE Trans. Syst. Man Cybern. Part A Syst. Hum. 1998, 28, 487–490. [Google Scholar] [CrossRef]

- Valenti, R.; Gevers, T. Accurate eye center location and tracking using isophote curvature. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–8. [Google Scholar]

- Morimoto, C.H.; Koons, D.; Amir, A.; Flickner, M. Pupil detection and tracking using multiple light sources. Image Vis. Comput. 2000, 18, 331–335. [Google Scholar] [CrossRef]

- Morimoto, C.H.; Flickner, M. Real-time multiple face detection using active illumination. In Proceedings of the Fourth IEEE International Conference on Automatic Face and Gesture Recognition (Cat. No. PR00580), Grenoble, France, 28–30 March 2000; pp. 8–13. [Google Scholar]

- Ebisawa, Y. Realtime 3D position detection of human pupil. In Proceedings of the 2004 IEEE Symposium on Virtual Environments, Human-Computer Interfaces and Measurement Systems (VCIMS), Boston, MA, USA, 12–14 July 2004; pp. 8–12. [Google Scholar]

- Colombo, C.; Del Bimbo, A. Real-time head tracking from the deformation of eye contours using a piecewise affine camera. Pattern Recognit. Lett. 1999, 20, 721–730. [Google Scholar] [CrossRef]

- Feng, G.C.; Yuen, P.C. Variance projection function and its application to eye detection for human face recognition. Pattern Recognit. Lett. 1998, 19, 899–906. [Google Scholar] [CrossRef]

- Orazio, T.D.; Leo, M.; Cicirelli, G.; Distante, A. An algorithm for real time eye detection in face images. In Proceedings of the 17th International Conference on Pattern Recognition, ICPR 2004, Cambridge, UK, 26–26 August 2004; pp. 278–281. [Google Scholar]

- Hallinan, P.W. Recognizing Human Eyes; SPIE: Bellingham, WA, USA, 1991; Volume 1570. [Google Scholar]

- Hillman, P.M.; Hannah, J.M.; Grant, P.M. Global fitting of a facial model to facial features for model-based video coding. In Proceedings of the 3rd International Symposium on Image and Signal Processing and Analysis, ISPA 2003, Rome, Italy, 18–20 September 2003; pp. 359–364. [Google Scholar]

- Zhu, Z.; Fujimura, K.; Ji, Q. Real-time eye detection and tracking under various light conditions. In Proceedings of the 2002 Symposium on Eye Tracking Research & Applications, New York, NY, USA, 25–27 March 2002; pp. 139–144. [Google Scholar]

- Fasel, I.; Fortenberry, B.; Movellan, J. A generative framework for real time object detection and classification. Comput. Vis. Image Underst. 2005, 98, 182–210. [Google Scholar] [CrossRef]

- Huang, J.; Wechsler, H. Eye location using genetic algorithm. In Proceedings of the 2nd International Conference on Audio and Video-Based Biometric Person Authentication, Washington, DC, USA, 22–23 March 1999. [Google Scholar]

- Hansen, D.W.; Hammoud, R.I. An improved likelihood model for eye tracking. Comput. Vis. Image Underst. 2007, 106, 220–230. [Google Scholar] [CrossRef]

- Cristinacce, D.; Cootes, T.F. Feature detection and tracking with constrained local models. In Proceedings of the British Machine Vision Conference, Edinburgh, UK, 4–7 September 2006; p. 3. [Google Scholar]

- Kimme, C.; Ballard, D.; Sklansky, J. Finding circles by an array of accumulators. Commun. ACM 1975, 18, 120–122. [Google Scholar] [CrossRef]

- Ruddock, K.H. Movements of the Eyes. J. Mod. Opt. 1989, 36, 1273. [Google Scholar] [CrossRef]

- Guestrin, E.D.; Eizenman, M. General theory of remote gaze estimation using the pupil center and corneal reflections. IEEE Trans. Biomed. Eng. 2006, 53, 1124–1133. [Google Scholar] [CrossRef] [PubMed]

- Baluja, S.; Pomerleau, D. Non-Intrusive Gaze Tracking Using Artificial Neural Networks; Carnegie Mellon University: Pittsburgh, PA, USA, 1994. [Google Scholar]

- Wang, J.-G.; Sung, E.; Venkateswarlu, R. Estimating the eye gaze from one eye. Comput. Vis. Image Underst. 2005, 98, 83–103. [Google Scholar] [CrossRef]

- Fridman, L.; Lee, J.; Reimer, B.; Victor, T. ‘Owl’ and ‘Lizard’: Patterns of head pose and eye pose in driver gaze classification. In IET Computer Vision; Institution of Engineering and Technology: Stevenage, UK, 2016; Volume 10, pp. 308–314. [Google Scholar]

- Ohno, T. One-point calibration gaze tracking method. In Proceedings of the 2006 Symposium on Eye Tracking Research & Applications, San Diego, CA, USA, 27 March 2006; p. 34. [Google Scholar]

- Ohno, T.; Mukawa, N.; Yoshikawa, A. FreeGaze: A gaze tracking system for everyday gaze interaction. In Proceedings of the 2002 Symposium on Eye Tracking Research & Applications, New Orleans, LA, USA, 25 March 2002; pp. 125–132. [Google Scholar]

- Villanueva, A.; Cabeza, R. Models for Gaze Tracking Systems. EURASIP J. Image Video Process. 2007, 2007, 023570. [Google Scholar] [CrossRef]

- Coutinho, F.L.; Morimoto, C.H. Free head motion eye gaze tracking using a single camera and multiple light sources. In Proceedings of the 2006 19th Brazilian Symposium on Computer Graphics and Image Processing, Manaus, Brazil, 8–11 October 2006; pp. 171–178. [Google Scholar]

- Shih, S.W.; Wu, Y.T.; Liu, J. A calibration-free gaze tracking technique. In Proceedings of the 15th International Conference on Pattern Recognition, ICPR-2000, Barcelona, Spain, 3–7 September 2000; pp. 201–204. [Google Scholar]

- Villanueva, A.; Cabeza, R.; Porta, S. Eye tracking: Pupil orientation geometrical modeling. Image Vis. Comput. 2006, 24, 663–679. [Google Scholar] [CrossRef]

- Yoo, D.H.; Chung, M.J. A novel non-intrusive eye gaze estimation using cross-ratio under large head motion. Comput. Vis. Image Underst. 2005, 98, 25–51. [Google Scholar] [CrossRef]

- Beymer, D.; Flickner, M. Eye gaze tracking using an active stereo head. In Proceedings of the 2003 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Madison, WI, USA, 18–20 June 2003; p. II-451. [Google Scholar]

- Brolly, X.L.C.; Mulligan, J.B. Implicit Calibration of a Remote Gaze Tracker. In Proceedings of the 2004 Conference on Computer Vision and Pattern Recognition Workshop, Washington, DC, USA, 27 June–2 July 2004; p. 134. [Google Scholar]

- Ohno, T.; Mukawa, N. A free-head, simple calibration, gaze tracking system that enables gaze-based interaction. In Proceedings of the 2004 symposium on Eye Tracking Research & Applications, San Antonio, TX, USA, 22 March 2004; pp. 115–122. [Google Scholar]

- Shih, S.-W.; Liu, J. A novel approach to 3-D gaze tracking using stereo cameras. Trans. Sys. Man Cyber. Part B 2004, 34, 234–245. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Kim, S.M.; Sked, M.; Ji, Q. Non-intrusive eye gaze tracking under natural head movements. In Proceedings of the 26th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, San Francisco, CA, USA, 1–5 September 2004; pp. 2271–2274. [Google Scholar]

- Meyer, A.; Böhme, M.; Martinetz, T.; Barth, E. A Single-Camera Remote Eye Tracker. In International Tutorial and Research Workshop on Perception and Interactive Technologies for Speech-Based Systems; Springer: Berlin/Heidelberg, Germany, 2006; pp. 208–211. [Google Scholar]

- Morimoto, C.H.; Amir, A.; Flickner, M. Detecting eye position and gaze from a single camera and 2 light sources. In Proceedings of the Object Recognition Supported by User Interaction for Service Robots, Quebec, QC, Canada, 11–15 August 2002; pp. 314–317. [Google Scholar]

- Newman, R.; Matsumoto, Y.; Rougeaux, S.; Zelinsky, A. Real-time stereo tracking for head pose and gaze estimation. In Proceedings of the Fourth IEEE International Conference on Automatic Face and Gesture Recognition (Cat. No. PR00580), Grenoble, France, 28–30 March 2000; pp. 122–128. [Google Scholar]

- Noureddin, B.; Lawrence, P.D.; Man, C.F. A non-contact device for tracking gaze in a human computer interface. Comput. Vis. Image Underst. 2005, 98, 52–82. [Google Scholar] [CrossRef]

- Villanueva, A.; Cabeza, R.; Porta, S. Gaze Tracking system model based on physical parameters. Int. J. Pattern Recognit. Artif. Intell. 2007, 21, 855–877. [Google Scholar] [CrossRef]

- Hansen, D.W.; Skovsgaard, H.H.T.; Hansen, J.P.; Møllenbach, E. Noise tolerant selection by gaze-controlled pan and zoom in 3D. In Proceedings of the 2008 Symposium on Eye Tracking Research & Applications, Savannah, GA, USA, 26 March 2008; pp. 205–212. [Google Scholar]

- Vertegaal, R.; Weevers, I.; Sohn, C. GAZE-2: An attentive video conferencing system. In Proceedings of the CHI’02 Extended Abstracts on Human Factors in Computing Systems, Kingston, ON, Canada, 20 April 2002; pp. 736–737. [Google Scholar]

- Ebisawa, Y.; Satoh, S. Effectiveness of pupil area detection technique using two light sources and image difference method. In Proceedings of the 15th Annual International Conference of the IEEE Engineering in Medicine and Biology Societ, San Diego, CA, USA, 31 October 1993; pp. 1268–1269. [Google Scholar]

- Morimoto, C.H.; Mimica, M.R.M. Eye gaze tracking techniques for interactive applications. Comput. Vis. Image Underst. 2005, 98, 4–24. [Google Scholar] [CrossRef]

- Ji, Q.; Yang, X. Real-Time Eye, Gaze, and Face Pose Tracking for Monitoring Driver Vigilance. Real Time Imaging 2002, 8, 357–377. [Google Scholar] [CrossRef] [Green Version]

- Williams, O.; Blake, A.; Cipolla, R. Sparse and Semi-supervised Visual Mapping with the S^ 3GP. In Proceedings of the 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’06), New York, NY, USA, 17–22 June 2006; pp. 230–237. [Google Scholar]

- Bin Suhaimi, M.S.A.; Matsushita, K.; Sasaki, M.; Njeri, W. 24-Gaze-Point Calibration Method for Improving the Precision of AC-EOG Gaze Estimation. Sensors 2019, 19, 3650. [Google Scholar] [CrossRef] [Green Version]

- Hansen, D.W. Committing Eye Tracking; IT University of Copenhagen, Department of Innovation: København, Denmark, 2003. [Google Scholar]

- Stampe, D.M. Heuristic filtering and reliable calibration methods for video-based pupil-tracking systems. Behav. Res. Methods Instrum. Comput. 1993, 25, 137–142. [Google Scholar] [CrossRef] [Green Version]

- Merchant, J.; Morrissette, R.; Porterfield, J.L. Remote Measurement of Eye Direction Allowing Subject Motion Over One Cubic Foot of Space. IEEE Trans. Biomed. Eng. 1974, BME-21, 309–317. [Google Scholar] [CrossRef]

- White, K.P.; Hutchinson, T.E.; Carley, J.M. Spatially dynamic calibration of an eye-tracking system. IEEE Trans. Syst. Man Cybern. 1993, 23, 1162–1168. [Google Scholar] [CrossRef]

- Zhu, Z.; Ji, Q. Eye and gaze tracking for interactive graphic display. Mach. Vis. Appl. 2004, 15, 139–148. [Google Scholar] [CrossRef]

- Zhiwei, Z.; Qiang, J.; Bennett, K.P. Nonlinear Eye Gaze Mapping Function Estimation via Support Vector Regression. In Proceedings of the 18th International Conference on Pattern Recognition (ICPR’06), Hong Kong, China, 20–24 August 2006; pp. 1132–1135. [Google Scholar]

- Kolakowski, S.M.; Pelz, J.B. Compensating for eye tracker camera movement. In Proceedings of the 2006 Symposium on Eye Tracking Research & Applications, San Diego, CA, USA, 27 March 2006; pp. 79–85. [Google Scholar]

- Zhu, Z.; Ji, Q. Novel Eye Gaze Tracking Techniques Under Natural Head Movement. IEEE Trans. Biomed. Eng. 2007, 54, 2246–2260. [Google Scholar] [PubMed]

- Müller, P.U.; Cavegn, D.; d’Ydewalle, G.; Groner, R. A comparison of a new limbus tracker, corneal reflection technique, Purkinje eye tracking and electro-oculography. In Perception and Cognition: Advances in Eye Movement Research; North-Holland/Elsevier Science Publishers: Amsterdam, The Netherlands, 1993; pp. 393–401. [Google Scholar]

- Crane, H.D.; Steele, C.M. Accurate three-dimensional eyetracker. Appl. Opt. 1978, 17, 691–705. [Google Scholar] [CrossRef]

- Xu, L.-Q.; Machin, D.; Sheppard, P. A Novel Approach to Real-time Non-intrusive Gaze Finding. In Proceedings of the British Machine Vision Conference, Southampton, UK, 14–17 September 1998; pp. 1–10. [Google Scholar]

- Wang, J.-G.; Sung, E. Gaze determination via images of irises. Image Vis. Comput. 2001, 19, 891–911. [Google Scholar] [CrossRef]

- Tan, K.-H.; Kriegman, D.J.; Ahuja, N. Appearance-based eye gaze estimation. In Proceedings of the Sixth IEEE Workshop on Applications of Computer Vision (WACV 2002), Orlando, FL, USA, 4 December 2002; pp. 191–195. [Google Scholar]

- Heinzmann, K.; Zelinsky, A. 3-D Facial Pose and Gaze Point Estimation Using a Robust Real-Time Tracking Paradigm. In Proceedings of the 3rd, International Conference on Face & Gesture Recognition, Nara, Japan, 14–16 April 1998; p. 142. [Google Scholar]

- Yamazoe, H.; Utsumi, A.; Yonezawa, T.; Abe, S. Remote gaze estimation with a single camera based on facial-feature tracking without special calibration actions. In Proceedings of the 2008 Symposium on Eye Tracking Research & Applications, Savannah, GA, USA, 26 March 2008; pp. 245–250. [Google Scholar]

- Matsumoto, Y.; Ogasawara, T.; Zelinsky, A. Behavior recognition based on head pose and gaze direction measurement. In Proceedings of the 2000 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2000) (Cat. No.00CH37113), Takamatsu, Japan, 31 October–5 November 2000; pp. 2127–2132. [Google Scholar]

- Li, G.; Li, S.E.; Zou, R.; Liao, Y.; Cheng, B. Detection of road traffic participants using cost-effective arrayed ultrasonic sensors in low-speed traffic situations. Mech. Syst. Signal Process. 2019, 132, 535–545. [Google Scholar] [CrossRef] [Green Version]

- Scott, D.; Findlay, J.M.; Hursley Human Factors Laboratory, W.; Laboratory, I.U.H.H.F. Visual Search, Eye Movements and Display Units; IBM UK Hursley Human Factors Laboratory: Winchester, UK, 1991. [Google Scholar]

- Talmi, K.; Liu, J. Eye and gaze tracking for visually controlled interactive stereoscopic displays. Signal Process. Image Commun. 1999, 14, 799–810. [Google Scholar] [CrossRef]

- Tomono, A.; Iida, M.; Kobayashi, Y. A TV Camera System Which Extracts Feature Points for Non-Contact Eye Movement Detection; SPIE: Bellingham, WA, USA, 1990; Volume 1194. [Google Scholar]

- Harbluk, J.L.; Noy, Y.I.; Eizenman, M. The Impact of Cognitive Distraction on Driver Visual Behaviour and Vehicle Control. 2002. Available online: https://trid.trb.org/view/643031 (accessed on 14 December 2019).

- Sodhi, M.; Reimer, B.; Cohen, J.L.; Vastenburg, E.; Kaars, R.; Kirschenbaum, S. On-road driver eye movement tracking using head-mounted devices. In Proceedings of the 2002 Symposium on Eye Tracking Research & Applications, New Orleans, LA, USA, 25–27 March 2002; pp. 61–68. [Google Scholar]

- Reimer, B.; Mehler, B.; Wang, Y.; Coughlin, J.F. A Field Study on the Impact of Variations in Short-Term Memory Demands on Drivers’ Visual Attention and Driving Performance Across Three Age Groups. Hum. Factors 2012, 54, 454–468. [Google Scholar] [CrossRef]

- Reimer, B.; Mehler, B.; Wang, Y.; Coughlin, J.F. The Impact of Systematic Variation of Cognitive Demand on Drivers’ Visual Attention across Multiple Age Groups. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2010, 54, 2052–2055. [Google Scholar] [CrossRef]

- Liang, Y.; Lee, J.D. Combining cognitive and visual distraction: Less than the sum of its parts. Accid. Anal. Prev. 2010, 42, 881–890. [Google Scholar] [CrossRef]

- Harbluk, J.L.; Noy, Y.I.; Trbovich, P.L.; Eizenman, M. An on-road assessment of cognitive distraction: Impacts on drivers’ visual behavior and braking performance. Accid. Anal. Prev. 2007, 39, 372–379. [Google Scholar] [CrossRef] [PubMed]

- Victor, T.W.; Harbluk, J.L.; Engström, J.A. Sensitivity of eye-movement measures to in-vehicle task difficulty. Transp. Res. Part F Traffic Psychol. Behav. 2005, 8, 167–190. [Google Scholar] [CrossRef]

- Recarte, M.A.; Nunes, L.M. Effects of verbal and spatial-imagery tasks on eye fixations while driving. J. Exp. Psychol. Appl. 2000, 6, 31–43. [Google Scholar] [CrossRef] [PubMed]

- Recarte, M.A.; Nunes, L.M. Mental workload while driving: Effects on visual search, discrimination, and decision making. J. Exp. Psychol. Appl. 2003, 9, 119–137. [Google Scholar] [CrossRef] [PubMed]

- Nunes, L.; Recarte, M.A. Cognitive demands of hands-free-phone conversation while driving. Transp. Res. Part F Traffic Psychol. Behav. 2002, 5, 133–144. [Google Scholar] [CrossRef]

- Kass, S.J.; Cole, K.S.; Stanny, C.J. Effects of distraction and experience on situation awareness and simulated driving. Transp. Res. Part F Traffic Psychol. Behav. 2007, 10, 321–329. [Google Scholar] [CrossRef]

- Engström, J.; Johansson, E.; Östlund, J. Effects of visual and cognitive load in real and simulated motorway driving. Transp. Res. Part F Traffic Psychol. Behav. 2005, 8, 97–120. [Google Scholar] [CrossRef]

- Ahlström, C.; Kircher, K.; Kircher, A. Considerations when calculating percent road centre from eye movement data in driver distraction monitoring. In Proceedings of the Fifth International Driving Symposium on Human Factors in Driver Assessment, Training and Vehicle Design, Big Sky, MT, USA, 22–25 June 2009; pp. 132–139. [Google Scholar]

- Wang, Y.; Reimer, B.; Dobres, J.; Mehler, B. The sensitivity of different methodologies for characterizing drivers’ gaze concentration under increased cognitive demand. Transp. Res. F Traffic Psychol. Behav. 2014, 26, 227–237. [Google Scholar] [CrossRef]

- Endsley, M.R. Toward a Theory of Situation Awareness in Dynamic Systems; Routledge: Abingdon, UK, 2016. [Google Scholar]

- Khan, M.Q.; Lee, S. A Comprehensive Survey of Driving Monitoring and Assistance Systems. Sensors 2019, 19, 2574. [Google Scholar] [CrossRef] [Green Version]

- Martinez, C.M.; Heucke, M.; Wang, F.; Gao, B.; Cao, D. Driving Style Recognition for Intelligent Vehicle Control and Advanced Driver Assistance: A Survey. IEEE Trans. Intell. Transp. Syst. 2018, 19, 666–676. [Google Scholar] [CrossRef] [Green Version]

- Regan, M.A.; Lee, J.D.; Young, K. Driver Distraction: Theory, Effects, and Mitigation; CRC Press: Boca Raton, FL, USA, 2008. [Google Scholar]

- Ranney, T.; Mazzae, E.; Garrott, R.; Goodman, M.; Administration, N.H.T.S. Driver distraction research: Past, present and future. In Proceedings of the 17th International Technical Conference of Enhanced Safety of Vehicles, Amsterdam, The Netherlands, 4–7 June 2001. [Google Scholar]

- Young, K.; Regan, M.; Hammer, M. Driver distraction: A review of the literature. Distracted Driv. 2007, 2007, 379–405. [Google Scholar]

- Stutts, J.C.; Reinfurt, D.W.; Staplin, L.; Rodgman, E. The Role of Driver Distraction in Traffic Crashes; AAA Foundation for Traffic Safety: Washington, DC, USA, 2001. [Google Scholar]

- Zhao, Y.; Görne, L.; Yuen, I.-M.; Cao, D.; Sullman, M.; Auger, D.; Lv, C.; Wang, H.; Matthias, R.; Skrypchuk, L.; et al. An Orientation Sensor-Based Head Tracking System for Driver Behaviour Monitoring. Sensors 2017, 17, 2692. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Khandakar, A.; Chowdhury, M.E.H.; Ahmed, R.; Dhib, A.; Mohammed, M.; Al-Emadi, N.A.M.A.; Michelson, D. Portable System for Monitoring and Controlling Driver Behavior and the Use of a Mobile Phone While Driving. Sensors 2019, 19, 1563. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Ranney, T.A.; Garrott, W.R.; Goodman, M.J. NHTSA Driver Distraction Research: Past, Present, and Future; SAE Technical Paper: Warrendale, PA, USA, 2001. [Google Scholar]

- Fitch, G.M.; Soccolich, S.A.; Guo, F.; McClafferty, J.; Fang, Y.; Olson, R.L.; Perez, M.A.; Hanowski, R.J.; Hankey, J.M.; Dingus, T.A. The Impact of Hand-Held and Hands-Free Cell Phone Use on Driving Performance and Safety-Critical Event Risk; U.S. Department of Transportation, National Highway Traffic Safety Administration: Washington, DC, USA, 2013. [Google Scholar]

- Miller, J.; Ulrich, R. Bimanual Response Grouping in Dual-Task Paradigms. Q. J. Exp. Psychol. 2008, 61, 999–1019. [Google Scholar] [CrossRef] [PubMed]

- Gazes, Y.; Rakitin, B.C.; Steffener, J.; Habeck, C.; Butterfield, B.; Ghez, C.; Stern, Y. Performance degradation and altered cerebral activation during dual performance: Evidence for a bottom-up attentional system. Behav. Brain Res. 2010, 210, 229–239. [Google Scholar] [CrossRef] [Green Version]

- Törnros, J.E.B.; Bolling, A.K. Mobile phone use—Effects of handheld and handsfree phones on driving performance. Accid. Anal. Prev. 2005, 37, 902–909. [Google Scholar] [CrossRef]

- Young, K.L.; Salmon, P.M.; Cornelissen, M. Distraction-induced driving error: An on-road examination of the errors made by distracted and undistracted drivers. Accid. Anal. Prev. 2013, 58, 218–225. [Google Scholar] [CrossRef]

- Chan, M.; Singhal, A. The emotional side of cognitive distraction: Implications for road safety. Accid. Anal. Prev. 2013, 50, 147–154. [Google Scholar] [CrossRef]

- Strayer, D.L.; Cooper, J.M.; Turrill, J.; Coleman, J.; Medeiros-Ward, N.; Biondi, F. Measuring Cognitive Distraction in the Automobile; AAA Foundation for Traffic Safety: Washington, DC, USA, 2013. [Google Scholar]

- Rakauskas, M.E.; Gugerty, L.J.; Ward, N.J. Effects of naturalistic cell phone conversations on driving performance. J. Saf. Res. 2004, 35, 453–464. [Google Scholar] [CrossRef]

- Horberry, T.; Anderson, J.; Regan, M.A.; Triggs, T.J.; Brown, J. Driver distraction: The effects of concurrent in-vehicle tasks, road environment complexity and age on driving performance. Accid. Anal. Prev. 2006, 38, 185–191. [Google Scholar] [CrossRef]

- Awais, M.; Badruddin, N.; Drieberg, M. A Hybrid Approach to Detect Driver Drowsiness Utilizing Physiological Signals to Improve System Performance and Wearability. Sensors 2017, 17, 1991. [Google Scholar] [CrossRef] [Green Version]

- Chien, J.-C.; Chen, Y.-S.; Lee, J.-D. Improving Night Time Driving Safety Using Vision-Based Classification Techniques. Sensors 2017, 17, 2199. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Thum Chia, C.; Mustafa, M.M.; Hussain, A.; Hendi, S.F.; Majlis, B.Y. Development of vehicle driver drowsiness detection system using electrooculogram (EOG). In Proceedings of the 2005 1st International Conference on Computers, Communications, & Signal Processing with Special Track on Biomedical Engineering, Kuala Lumpur, Malaysia, 14–16 November 2005; pp. 165–168. [Google Scholar]

- Sirevaag, E.J.; Stern, J.A. Ocular Measures of Fatigue and Cognitive Factors. Engineering Psychophysiology: Issues and Applications; CRC Press: Boca Raton, FL, USA, 2000; pp. 269–287. [Google Scholar]

- Schleicher, R.; Galley, N.; Briest, S.; Galley, L. Blinks and saccades as indicators of fatigue in sleepiness warnings: Looking tired? Ergonomics 2008, 51, 982–1010. [Google Scholar] [CrossRef] [PubMed]

- Yue, C. EOG Signals in Drowsiness Research. 2011. Available online: https://pdfs.semanticscholar.org/8b77/9934f6ceae3073b3312c947f39467a74828f.pdf (accessed on 14 December 2019).

- Thorslund, B. Electrooculogram Analysis and Development of a System for Defining Stages of Drowsiness; Statens väg-och transportforskningsinstitut: Linköping, Sweden, 2004. [Google Scholar]

- Pohl, J.; Birk, W.; Westervall, L. A driver-distraction-based lane-keeping assistance system. Proc Inst. Mech. Eng. Part I J. Syst. Control Eng. 2007, 221, 541–552. [Google Scholar] [CrossRef] [Green Version]

- Kircher, K.; Ahlstrom, C.; Kircher, A. Comparison of two eye-gaze based real-time driver distraction detection algorithms in a small-scale field operational test. In Proceedings of the Fifth International Driving Symposium on Human Factors in Driver Assessment, Training and Vehicle Design, Big Sky, MT, USA, 22–25 June 2009. [Google Scholar]

- Kim, W.; Jung, W.-S.; Choi, H.K. Lightweight Driver Monitoring System Based on Multi-Task Mobilenets. Sensors 2019, 19, 3200. [Google Scholar] [CrossRef] [Green Version]

- Mavely, A.G.; Judith, J.E.; Sahal, P.A.; Kuruvilla, S.A. Eye gaze tracking based driver monitoring system. In Proceedings of the 2017 IEEE International Conference on Circuits and Systems (ICCS), Thiruvananthapuram, India, 20–21 December 2017; pp. 364–367. [Google Scholar]

- Wollmer, M.; Blaschke, C.; Schindl, T.; Schuller, B.; Farber, B.; Mayer, S.; Trefflich, B. Online Driver Distraction Detection Using Long Short-Term Memory. IEEE Trans. Intell. Transp. Syst. 2011, 12, 574–582. [Google Scholar] [CrossRef] [Green Version]

- Castro, M.J.C.D.; Medina, J.R.E.; Lopez, J.P.G.; Goma, J.C.d.; Devaraj, M. A Non-Intrusive Method for Detecting Visual Distraction Indicators of Transport Network Vehicle Service Drivers Using Computer Vision. In Proceedings of the IEEE 10th International Conference on Humanoid, Nanotechnology, Information Technology, Communication and Control, Environment and Management (HNICEM), Baguio, Philippines, 29 November–2 December 2018; pp. 1–5. [Google Scholar]

- Banaeeyan, R.; Halin, A.A.; Bahari, M. Nonintrusive eye gaze tracking using a single eye image. In Proceedings of the 2015 IEEE International Conference on Signal and Image Processing Applications (ICSIPA), Kuala Lumpur, Malaysia, 19–21 October 2015; pp. 139–144. [Google Scholar]

- Anjali, K.U.; Thampi, A.K.; Vijayaraman, A.; Francis, M.F.; James, N.J.; Rajan, B.K. Real-time nonintrusive monitoring and detection of eye blinking in view of accident prevention due to drowsiness. In Proceedings of the 2016 International Conference on Circuit, Power and Computing Technologies (ICCPCT), Nagercoil, India, 18–19 March 2016; pp. 1–6. [Google Scholar]

- Hirayama, T.; Mase, K.; Takeda, K. Analysis of Temporal Relationships between Eye Gaze and Peripheral Vehicle Behavior for Detecting Driver Distraction. Int. J. Veh. Technol. 2013, 2013, 8. [Google Scholar] [CrossRef]

- Yang, Y.; Sun, H.; Liu, T.; Huang, G.-B.; Sourina, O. Driver Workload Detection in On-Road Driving Environment Using Machine Learning. In Proceedings of ELM-2014 Volume 2; Springer: Berlin/Heidelberg, Germany, 2015; pp. 389–398. [Google Scholar]

- Tango, F.; Botta, M. Real-Time Detection System of Driver Distraction Using Machine Learning. IEEE Trans. Intell. Transp. Syst. 2013, 14, 894–905. [Google Scholar] [CrossRef] [Green Version]

- Mbouna, R.O.; Kong, S.G.; Chun, M. Visual Analysis of Eye State and Head Pose for Driver Alertness Monitoring. IEEE Trans. Intell. Transp. Syst. 2013, 14, 1462–1469. [Google Scholar] [CrossRef]

- Ahlstrom, C.; Kircher, K.; Kircher, A. A Gaze-Based Driver Distraction Warning System and Its Effect on Visual Behavior. IEEE Trans. Intell. Transp. Syst. 2013, 14, 965–973. [Google Scholar] [CrossRef]

- Liu, T.; Yang, Y.; Huang, G.; Yeo, Y.K.; Lin, Z. Driver Distraction Detection Using Semi-Supervised Machine Learning. IEEE Trans. Intell. Transp. Syst. 2016, 17, 1108–1120. [Google Scholar] [CrossRef]

- Yekhshatyan, L.; Lee, J.D. Changes in the Correlation between Eye and Steering Movements Indicate Driver Distraction. IEEE Trans. Intell. Transp. Syst. 2013, 14, 136–145. [Google Scholar] [CrossRef]

- Carsten, O.; Brookhuis, K. Issues arising from the HASTE experiments. Transp. Res. Part F Traffic Psychol. Behav. 2005, 8, 191–196. [Google Scholar] [CrossRef] [Green Version]

- Ebrahim, P. Driver Drowsiness Monitoring Using Eye Movement Features Derived from Electrooculography. Ph.D. Thesis, University of Stuttgart, Stuttgart, Germany, 2016. [Google Scholar]

- Shin, D.U.K.; Sakai, H.; Uchiyama, Y. Slow eye movement detection can prevent sleep-related accidents effectively in a simulated driving task. J. Sleep Res. 2011, 20, 416–424. [Google Scholar] [CrossRef] [PubMed]

- Liang, Y.; Reyes, M.L.; Lee, J.D. Real-Time Detection of Driver Cognitive Distraction Using Support Vector Machines. IEEE Trans. Intell. Transp. Syst. 2007, 8, 340–350. [Google Scholar] [CrossRef]

- Liang, Y.; Lee, J.D. A hybrid Bayesian Network approach to detect driver cognitive distraction. Transp. Res. Part C Emerg. Technol. 2014, 38, 146–155. [Google Scholar] [CrossRef] [Green Version]

- Weller, G.; Schlag, B. A robust method to detect driver distraction. Available online: http://www.humanist-vce.eu/fileadmin/contributeurs/humanist/Berlin2010/4a_Weller.pdf (accessed on 14 December 2019).

- Miyaji, M.; Kawanaka, H.; Oguri, K. Driver’s cognitive distraction detection using physiological features by the adaboost. In Proceedings of the 2009 12th International IEEE Conference on Intelligent Transportation Systems, St. Louis, MO, USA, 4–7 October 2009; pp. 1–6. [Google Scholar]

- Xu, J.; Min, J.; Hu, J. Real-time eye tracking for the assessment of driver fatigue. Healthc. Technol. Lett. 2018, 5, 54–58. [Google Scholar] [CrossRef]

- Tang, J.; Fang, Z.; Hu, S.; Ying, S. Driver fatigue detection algorithm based on eye features. In Proceedings of the 2010 Seventh International Conference on Fuzzy Systems and Knowledge Discovery, Yantai, China, 10–12 August 2010; pp. 2308–2311. [Google Scholar]

- Li, J.; Yang, Z.; Song, Y. A hierarchical fuzzy decision model for driver’s unsafe states monitoring. In Proceedings of the 2011 Eighth International Conference on Fuzzy Systems and Knowledge Discovery (FSKD), Shanghai, China, 26–28 July 2011; pp. 569–573. [Google Scholar]

- Rigane, O.; Abbes, K.; Abdelmoula, C.; Masmoudi, M. A Fuzzy Based Method for Driver Drowsiness Detection. In Proceedings of the IEEE/ACS 14th International Conference on Computer Systems and Applications (AICCSA), Hammamet, Tunisia, 30 October–3 November 2017; pp. 143–147. [Google Scholar]

- Lethaus, F.; Baumann, M.R.; Köster, F.; Lemmer, K. Using pattern recognition to predict driver intent. In International Conference on Adaptive and Natural Computing Algorithms; Springer: Berlin/Heidelberg, Germany, 2011; pp. 140–149. [Google Scholar]

- Xiao, Z.; Hu, Z.; Geng, L.; Zhang, F.; Wu, J.; Li, Y. Fatigue driving recognition network: Fatigue driving recognition via convolutional neural network and long short-term memory units. IET Trans. Intell. Transp. Syst. 2019, 13, 1410–1416. [Google Scholar] [CrossRef]

- Qiang, J.; Zhiwei, Z.; Lan, P. Real-time nonintrusive monitoring and prediction of driver fatigue. IEEE Trans. Veh. Technol. 2004, 53, 1052–1068. [Google Scholar]

- Wang, H.; Song, W.; Liu, W.; Song, N.; Wang, Y.; Pan, H. A Bayesian Scene-Prior-Based Deep Network Model for Face Verification. Sensors 2018, 18, 1906. [Google Scholar] [CrossRef] [Green Version]

- Jain, A.; Koppula, H.S.; Soh, S.; Raghavan, B.; Singh, A.; Saxena, A. Brain4cars: Car that knows before you do via sensory-fusion deep learning architecture. arXiv 2016, arXiv:1601.00740. [Google Scholar]

- Fridman, L.; Langhans, P.; Lee, J.; Reimer, B. Driver Gaze Region Estimation without Use of Eye Movement. IEEE Intell. Syst. 2016, 31, 49–56. [Google Scholar] [CrossRef]

- Li, G.; Yang, Y.; Qu, X. Deep Learning Approaches on Pedestrian Detection in Hazy Weather. IEEE Trans. Ind. Electron. 2019, 2945295. [Google Scholar] [CrossRef]

- Song, C.; Yan, X.; Stephen, N.; Khan, A.A. Hidden Markov model and driver path preference for floating car trajectory map matching. IET Intell. Transp. Syst. 2018, 12, 1433–1441. [Google Scholar] [CrossRef]

- Muñoz, M.; Reimer, B.; Lee, J.; Mehler, B.; Fridman, L. Distinguishing patterns in drivers’ visual attention allocation using Hidden Markov Models. Transp. Res. Part F Traffic Psychol. Behav. 2016, 43, 90–103. [Google Scholar] [CrossRef]

- Hou, H.; Jin, L.; Niu, Q.; Sun, Y.; Lu, M. Driver Intention Recognition Method Using Continuous Hidden Markov Model. Int. J. Comput. Intell. Syst. 2011, 4, 386–393. [Google Scholar] [CrossRef]

- Fu, R.; Wang, H.; Zhao, W. Dynamic driver fatigue detection using hidden Markov model in real driving condition. Expert Syst. Appl. 2016, 63, 397–411. [Google Scholar] [CrossRef]

- Morris, B.; Doshi, A.; Trivedi, M. Lane change intent prediction for driver assistance: On-road design and evaluation. In Proceedings of the 2011 IEEE Intelligent Vehicles Symposium (IV), Baden-Baden, Germany, 5–9 June 2011; pp. 895–901. [Google Scholar]

- Tang, J.; Liu, F.; Zhang, W.; Ke, R.; Zou, Y. Lane-changes prediction based on adaptive fuzzy neural network. Expert Syst. Appl. 2018, 91, 452–463. [Google Scholar] [CrossRef]

- Zhu, W.; Miao, J.; Hu, J.; Qing, L. Vehicle detection in driving simulation using extreme learning machine. Neurocomputing 2014, 128, 160–165. [Google Scholar] [CrossRef]

- Kumar, P.; Perrollaz, M.; Lefevre, S.; Laugier, C. Learning-based approach for online lane change intention prediction. In Proceedings of the 2013 IEEE Intelligent Vehicles Symposium (IV), Gold Coast, Australia, 23–26 June 2013; pp. 797–802. [Google Scholar]

- Beggiato, M.; Pech, T.; Leonhardt, V.; Lindner, P.; Wanielik, G.; Bullinger-Hoffmann, A.; Krems, J. Lane Change Prediction: From Driver Characteristics, Manoeuvre Types and Glance Behaviour to a Real-Time Prediction Algorithm. In UR: BAN Human Factors in Traffic; Springer: Berlin/Heidelberg, Germany, 2018; pp. 205–221. [Google Scholar]

- Krumm, J. A Markov Model for Driver Turn Prediction. Available online: https://www.microsoft.com/en-us/research/publication/markov-model-driver-turn-prediction/ (accessed on 2 October 2019).

- Li, X.; Wang, W.; Roetting, M. Estimating Driver’s Lane-Change Intent Considering Driving Style and Contextual Traffic. IEEE Trans. Intell. Transp. Syst. 2019, 20, 3258–3271. [Google Scholar] [CrossRef]

- Husen, M.N.; Lee, S.; Khan, M.Q. Syntactic pattern recognition of car driving behavior detection. In Proceedings of the 11th International Conference on Ubiquitous Information Management and Communication, Beppu, Japan, 5–7 January 2017; pp. 1–6. [Google Scholar]

- Kuge, N.; Yamamura, T.; Shimoyama, O.; Liu, A. A Driver Behavior Recognition Method Based on a Driver Model Framework; 0148-7191; SAE Technical Paper: Warrendale, PA, USA, 2000. [Google Scholar]

- Doshi, A.; Trivedi, M.M. On the roles of eye gaze and head dynamics in predicting driver’s intent to change lanes. IEEE Trans. Intell. Transp. Syst. 2009, 10, 453–462. [Google Scholar] [CrossRef] [Green Version]

- McCall, J.C.; Trivedi, M.M. Driver behavior and situation aware brake assistance for intelligent vehicles. Proc. IEEE 2007, 95, 374–387. [Google Scholar] [CrossRef]

- Cheng, S.Y.; Trivedi, M.M. Turn-intent analysis using body pose for intelligent driver assistance. IEEE Pervasive Comput. 2006, 5, 28–37. [Google Scholar] [CrossRef]

- Li, G.; Li, S.E.; Cheng, B.; Green, P. Estimation of driving style in naturalistic highway traffic using maneuver transition probabilities. Transp. Res. Part C Emerg. Technol. 2017, 74, 113–125. [Google Scholar] [CrossRef]

- Bergasa, L.M.; Nuevo, J.; Sotelo, M.A.; Barea, R.; Lopez, M.E. Real-time system for monitoring driver vigilance. IEEE Trans. Intell. Transp. Syst. 2006, 7, 63–77. [Google Scholar] [CrossRef] [Green Version]

- Smith, P.; Shah, M.; Lobo, N.D.V. Determining driver visual attention with one camera. IEEE Trans. Intell. Transp. Syst. 2003, 4, 205–218. [Google Scholar] [CrossRef] [Green Version]

- Sigari, M.-H.; Fathy, M.; Soryani, M. A Driver Face Monitoring System for Fatigue and Distraction Detection. Int. J. Veh. Technol. 2013, 2013, 263983. [Google Scholar] [CrossRef] [Green Version]

- Flores, M.; Armingol, J.; de la Escalera, A. Driver Drowsiness Warning System Using Visual Information for Both Diurnal and Nocturnal Illumination Conditions. EURASIP J. Adv. Signal Process. 2010, 2010, 438205. [Google Scholar] [CrossRef] [Green Version]

- Wang, R.-B.; Guo, K.-Y.; Shi, S.-M.; Chu, J.-W. A monitoring method of driver fatigue behavior based on machine vision. In Proceedings of the IEEE IV2003 Intelligent Vehicles Symposium, Proceedings (Cat. No.03TH8683), Columbus, OH, USA, 9–11 June 2003; pp. 110–113. [Google Scholar]

- Zhang, Z.; Zhang, J.S. Driver Fatigue Detection Based Intelligent Vehicle Control. In Proceedings of the 18th International Conference on Pattern Recognition (ICPR’06), Hong Kong, China, 20–24 August 2006; pp. 1262–1265. [Google Scholar]

- Wenhui, D.; Xiaojuan, W. Fatigue detection based on the distance of eyelid. In Proceedings of the 2005 IEEE International Workshop on VLSI Design and Video Technology, Suzhou, China, 28–30 May 2005; pp. 365–368. [Google Scholar]

- Lalonde, M.; Byrns, D.; Gagnon, L.; Teasdale, N.; Laurendeau, D. Real-time eye blink detection with GPU-based SIFT tracking. In Proceedings of the Fourth Canadian Conference on Computer and Robot Vision (CRV ‘07), Montreal, QC, Canada, 28–30 May 2007; pp. 481–487. [Google Scholar]

- Batista, J. A Drowsiness and Point of Attention Monitoring System for Driver Vigilance. In Proceedings of the 2007 IEEE Intelligent Transportation Systems Conference, Seattle, WA, USA, 30 September–3 October 2007; pp. 702–708. [Google Scholar]

- Audi|Luxury Sedans, SUVs, Convertibles, Electric Vehicles & More. Available online: https://www.audiusa.com (accessed on 2 October 2019).

- Bayerische Motoren Werke AG. The International BMW Website|BMW.com. Available online: https://www.bmw.com/en/index.html (accessed on 2 October 2019).

- National Highway Traffic Safety Administration. Crash Factors in Intersection-Related Crashes: An on-Scene Perspective. Nat. Center Stat. Anal.; National Highway Traffic Safety Administration: Washington, DC, USA, 2010; p. 811366. [Google Scholar]

- Ford. Ford–New Cars, Trucks, SUVs, Crossovers & Hybrids|Vehicles Built Just for You|Ford.com. Available online: https://www.ford.com/ (accessed on 2 October 2019).

- Mercedes-Benz International. News, Pictures, Videos & Livestreams. Available online: https://www.mercedes-benz.com/content/com/en (accessed on 2 October 2019).

- New Cars, Trucks, SUVs & Hybrids|Toyota Official Site. Available online: https://www.toyota.com (accessed on 2 October 2019).

- National Highway Traffic Safety Administration. Federal Automated Vehicles Policy: Accelerating the Next Revolution in Roadway Safety; Department of Transportationl: Washington, DC, USA, 2016. [Google Scholar]

- Lee, J.D. Dynamics of Driver Distraction: The process of engaging and disengaging. Ann. Adv. Automot. Med. 2014, 58, 24–32. [Google Scholar]

- Fridman, L.; Brown, D.E.; Glazer, M.; Angell, W.; Dodd, S.; Jenik, B.; Terwilliger, J.; Patsekin, A.; Kindelsberger, J.; Ding, L. MIT advanced vehicle technology study: Large-scale naturalistic driving study of driver behavior and interaction with automation. IEEE Access 2019, 7, 102021–102038. [Google Scholar] [CrossRef]

- Su, D.; Li, Y.; Chen, H. Toward Precise Gaze Estimation for Mobile Head-Mounted Gaze Tracking Systems. IEEE Trans. Ind. Inform. 2019, 15, 2660–2672. [Google Scholar] [CrossRef]

| Technique | Information | Illumination | Robustness | Requirements | References | |||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Pupil | Iris | Corner | Eye | Between-the-Eyes | Indoor | Outdoor | Infrared | Scale | Head Pose | Occlusion | High Resolution | High Contrast | Temporal Dependent | Good Initialization | ||

| Shape-based (circular) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | [30,31,34] | |||||||||

| Shape-based (elliptical) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | [27,28,72] | ||||||||

| Shape-based (elliptical) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | [73,74,75] | |||||||||

| Shape-based (complex) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | [35,39,41,76] | ||||||

| Feature-based | ✓ | ✓ | ✓ | [42,77] | ||||||||||||

| Feature-based | ✓ | ✓ | ✓ | [62,78] | ||||||||||||

| Feature-based | ✓ | ✓ | ✓ | ✓ | [50,51] | |||||||||||

| Feature-based | ✓ | ✓ | ✓ | [53,68,70] | ||||||||||||

| Feature-based | ✓ | ✓ | ✓ | ✓ | ✓ | [46,47,48] | ||||||||||

| Feature-based | ✓ | ✓ | ✓ | [52,60,79,80,81] | ||||||||||||

| Appearance-based | ✓ | ✓ | ✓ | ✓ | ✓ | [82,83,84,85] | ||||||||||

| Symmetry | ✓ | ✓ | ✓ | [57,58,86] | ||||||||||||

| Eye motion | ✓ | ✓ | ✓ | ✓ | [60,61,62] | |||||||||||

| Hybrid | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | [65,69,85] | |||||

| No. of Cameras | No. of Lights | Gaze Information | Head Pose Invariant? | Calibration | Accuracy (Degrees) | Comments | References |

|---|---|---|---|---|---|---|---|

| 1 | 0 | PoR | No. Needs extra unit. | 2–4 | webcam | [27,69,114] | |

| 1 | 0 | LoS/LoG | No. Needs extra unit. | Fully | 1–2 | [90,97,108] | |

| 1 | 0 | LoG | Approximate solution | < 1 | additional markers, iris radius, parallel with screen | [30] | |

| 1 | 1 | PoR | No. Needs extra unit. | 1–2 | Polynomial approximation | [73,119,120] | |

| 1 | 2 | PoR | Yes | Fully | 1–3 | [88,104,105] | |

| 1 + 1 PT camera | 1 | PoR | Yes | Fully | 3 | Mirrors | [107] |

| 1 + 1 PT camera | 4 | PoR | Yes | <2.5 | PT camera used during implementation | [95,98] | |

| 2 | 0 | PoR | Yes | 1 | 3D face model | [106] | |

| 2 + 1 PT camera | 1 | LoG | Yes | 0.7–1 | [134] | ||

| 2 + 2 PT cameras | 2 | PoR | Yes | Fully | 0.6 | [99] | |

| 2 | 2(3) | PoR | Yes | Fully | <2 | extra lights used during implementation, experimentation conducted with three glints | [96,102] |

| 3 | 2 | PoR | Yes | Fully | not reported | [100,135] | |

| 1 | 1 | PoR | No. Needs extra unit. | 0.5–1.5 | Appearance-based | [68,89,128] |

| Measurement | Ability to Detect Distraction | Pros | Cons | ||

|---|---|---|---|---|---|

| Visual | Cognitive | Visual and Cognitive | |||

| Driving Performance | Y | N | N |

|

|

| Physical Measurements | Y | Y | N |

|

|

| Biological Measurements | Y | Y | Y |

|

|

| Subjective Reports | N | Y | N |

|

|

| Hybrid Measurements | Y | Y | Y |

|

|

| Eye Detection | Tracking Method | Used Features | Algorithm for Distraction/Fatigue Detection | Performance | References |

|---|---|---|---|---|---|

| Imaging in the IR spectrum and verification by SVM | Combination of Kalman filter and mean shift | PERCLOS, Head nodding, Head orientation, Eye blink speed, Gaze direction Eye saccadic movement, Yawning | Probability theory (Bayesian network) | Very good | [204] |

| Imaging in the IR Spectrum | Adaptive filters (Kalman filter) | PERCLOS, Eye blink speed, Gaze direction, Head rotation | Probability theory (Bayesian network) | Very Good | [113] |

| Imaging in the IR Spectrum | Adaptive filters (Kalman filter) | PERCLOS, Eye blink rate, Eye saccadic movement, Head nodding, Head orientation | Knowledge-based (Fuzzy expert system) | [226] | |

| Feature-based (binarization) | Combination of 4 hierarchical tracking method | PERCLOS, Eye blink rate, Gaze direction, Yawning, Head orientation | Knowledge-based (Finite State Machine) | Average | [227] |

| Explicitly by Feature-based (projection) | Search window (based on face template matching) | PERCLOS Distance between eyelids, Eye blink rate, Head orientation | Knowledge-based (Fuzzy expert System) | Good | [228] |

| Other methods (elliptical model in daylight and IR imaging in nightlight) | Combination of NN and condensation algorithm | PERCLOS, Eye blink rate, Head orientation | Thresholding | Good | [229] |

| Feature-based (projection) | Search window (based on face template matching) | PERCLOS, Distance between eyelids | Thresholding | Good | [230] |

| Feature-based (projection) | Adaptive filters (UKF) | Continuous eye closure | Thresholding | Average | [231] |

| Feature-based (projection and connected component analysis) | Search window (eye template matching) | Eyelid distance | Thresholding | Very good | [232] |

| Feature-based (projection) | Adaptive filters (Kalman filter) | Eye blink rate | Poor | [233] | |

| Feature-based (variance projection and face model) | Adaptive filters (Kalman filter) | PERCLOS, Eye blink speed, Head rotation | Poor | [234] |

| Make | Technology Brand | Description | Alarm Type | Reference |

|---|---|---|---|---|

| Audi | Rest recommendation system + Audi pre sense | Uses features extracted with the help of far infrared system, camera, radar, thermal camera, lane position, proximity detection to offer features such as collision avoidance assist sunroof and windows closinghigh beam assistturn assistrear cross-path assistexit assist (to warn door opening when a nearby car passes) traffic jam assistnight vision | Audio, display, vibration | [235] |

| BMW | Active Driving Assistant with Attention Assistant | Uses features extracted with the help of radar, camera, thermal camera, lane position, proximity detection to offer features such as lane change warning, night vision, steering and lane control system for semi-automated driving, crossroad warning, assistive parking | Audio, display, vibration | [236] |

| Cadillac | Cadillac Super Cruise | System based on FOVIO vision technology developed by Seeing Machines IR camera on the steering wheel column to accurately determine the driver’s attention state | Audio and visual | [237] |

| Ford | Ford Safe and Smart (Driver alert control) | Uses features extracted with the help of radar, camera, steering sensors, lane position, proximity detection to offer features such as lane-keeping system, adaptive cruise control, forward collision warning with brake support, front rain-sensing windshield wipers, auto high-beam headlamps, blind spot information system, reverse steering | Audio, display, vibration | [238] |

| Mercedez-Benz | MB Pre-safe Technology | Uses features extracted with the help of radar, camera, sensors on the steering column, steering wheel movement and speed to offer features such as driver’s profile and behaviour, accident investigation, pre-safe brake and distronic plus technology, night view assist plus, active lane keeping assist and active blind spot monitoring, adaptive high beam assist, attention assist | Audio, display | [239] |

| Toyota | Toyota Safety Sense | Uses features extracted with the help of radar, charge-coupled camera, eye tracking and head motion, audio, display advanced obstacle detection system, pre-collision system, lane departure alert, automatic high beams, dynamic radar cruise control, pedestrian detection, | Audio, display | [240] |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Khan, M.Q.; Lee, S. Gaze and Eye Tracking: Techniques and Applications in ADAS. Sensors 2019, 19, 5540. https://doi.org/10.3390/s19245540

Khan MQ, Lee S. Gaze and Eye Tracking: Techniques and Applications in ADAS. Sensors. 2019; 19(24):5540. https://doi.org/10.3390/s19245540

Chicago/Turabian StyleKhan, Muhammad Qasim, and Sukhan Lee. 2019. "Gaze and Eye Tracking: Techniques and Applications in ADAS" Sensors 19, no. 24: 5540. https://doi.org/10.3390/s19245540

APA StyleKhan, M. Q., & Lee, S. (2019). Gaze and Eye Tracking: Techniques and Applications in ADAS. Sensors, 19(24), 5540. https://doi.org/10.3390/s19245540