Comparison and Efficacy of Synergistic Intelligent Tutoring Systems with Human Physiological Response

Abstract

1. Introduction

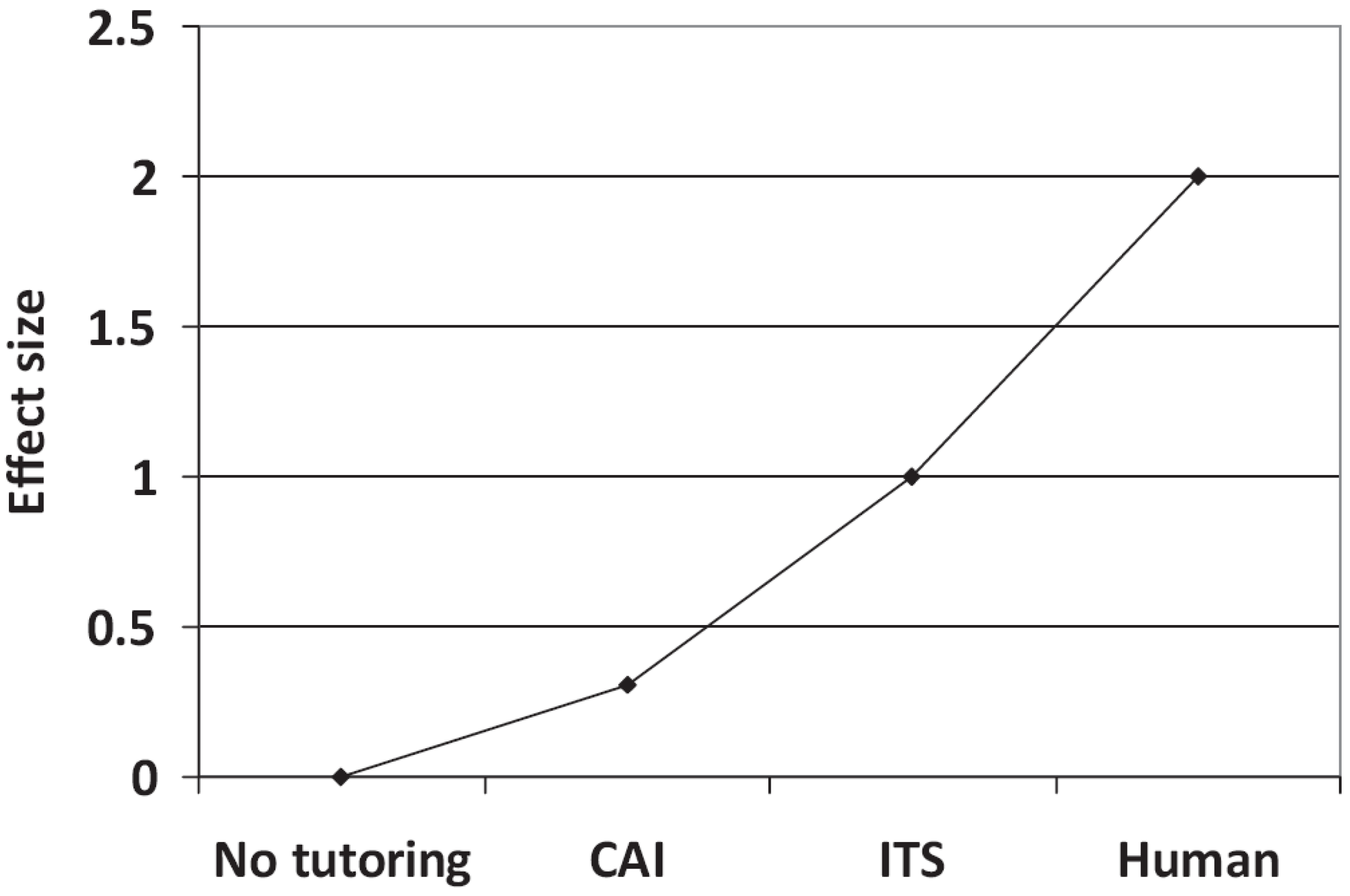

2. Intelligent Tutoring Systems (ITS)

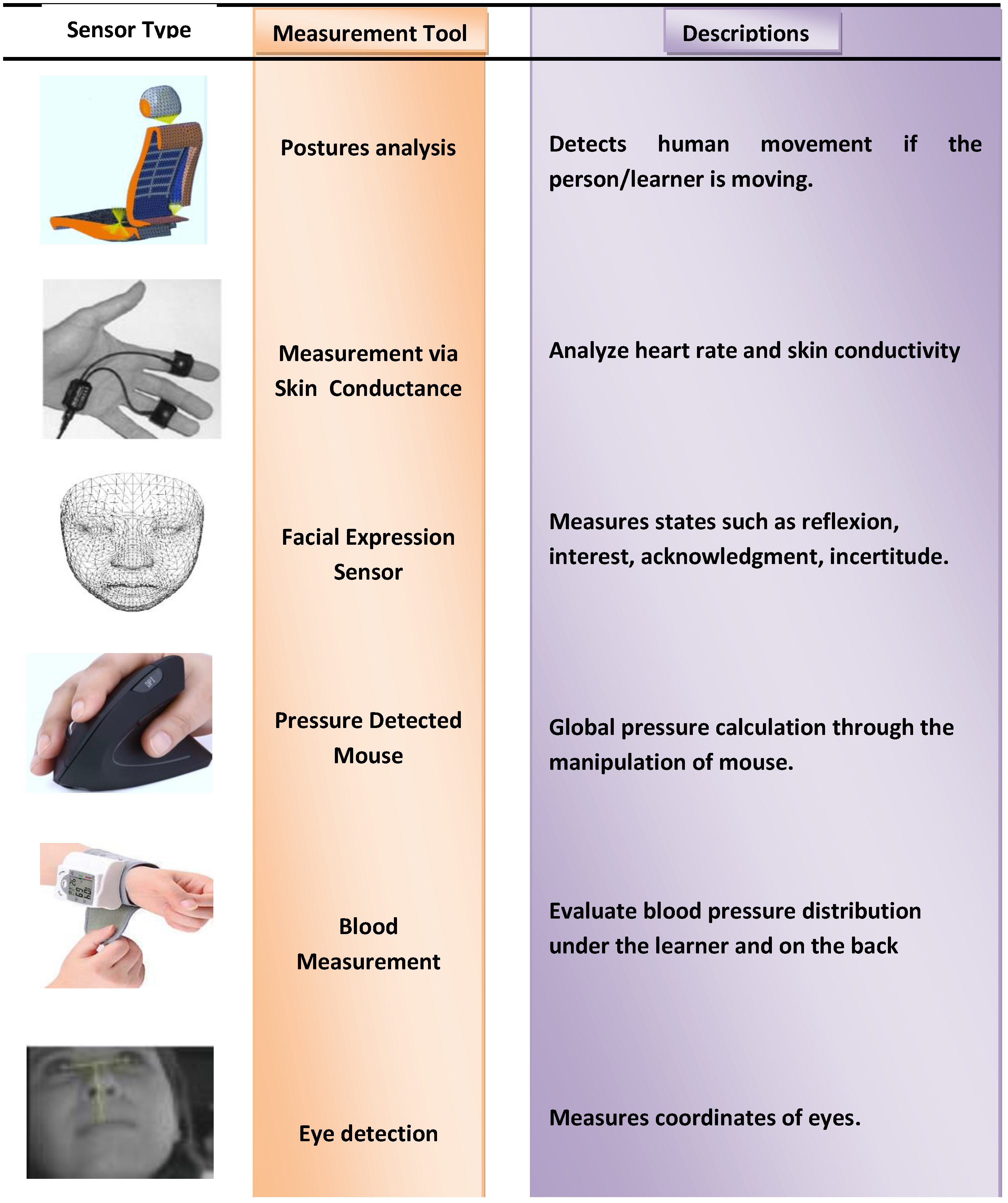

3. Measurement Techniques

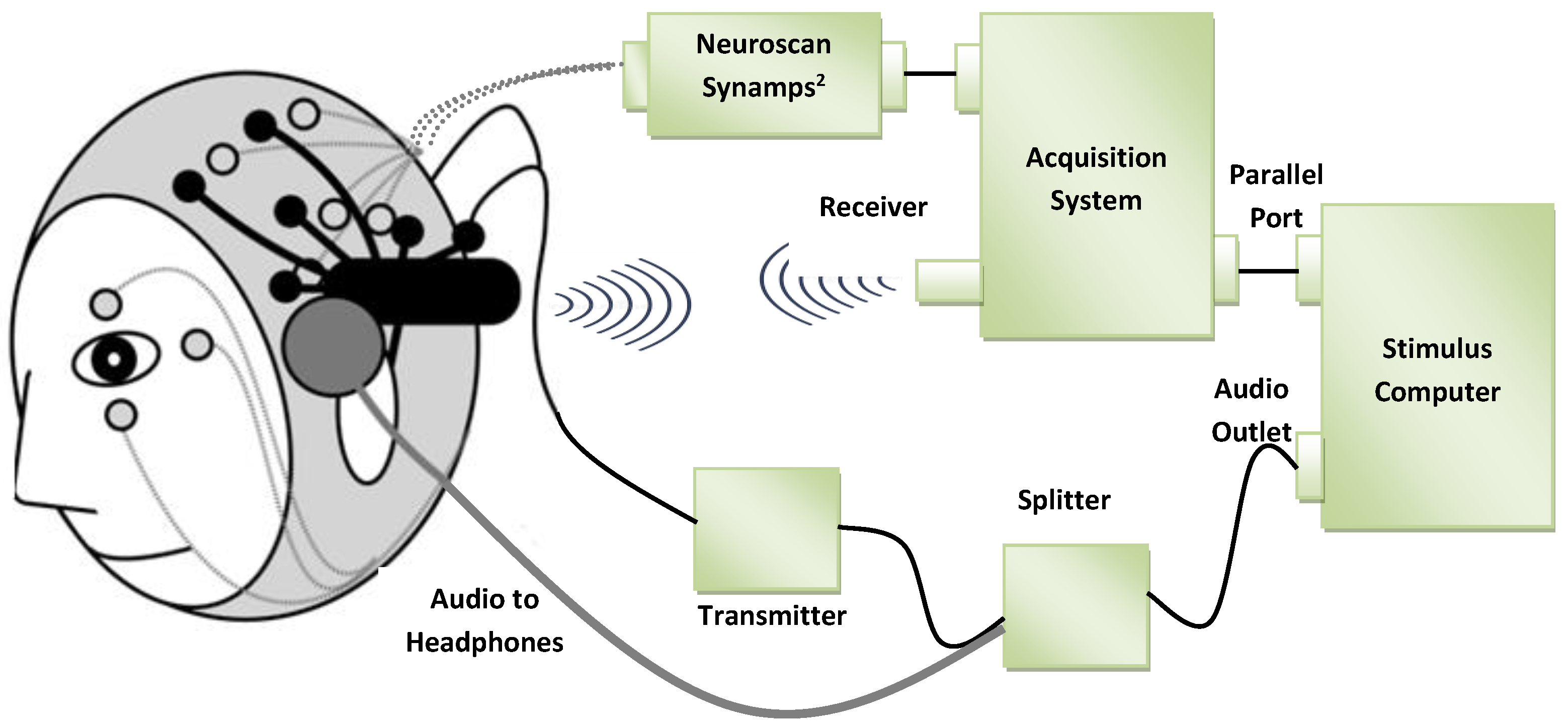

3.1. Electroencephalography and Electrocardiography

3.2. Contactless Measurements

3.3. Performance Analysis

3.4. Physiological Modeling and Classification

3.4.1. Valence-Arousal Model

3.4.2. The Stein and Levine Model

3.4.3. The Kort, Reilly, and Picard Model

3.4.4. The Cognitive Disequilibrium Model

3.5. Classifications of Emotions

3.5.1. Standard Classifiers

3.5.2. Biologically Motivated Classifiers

4. Use of Human–Computer Interaction

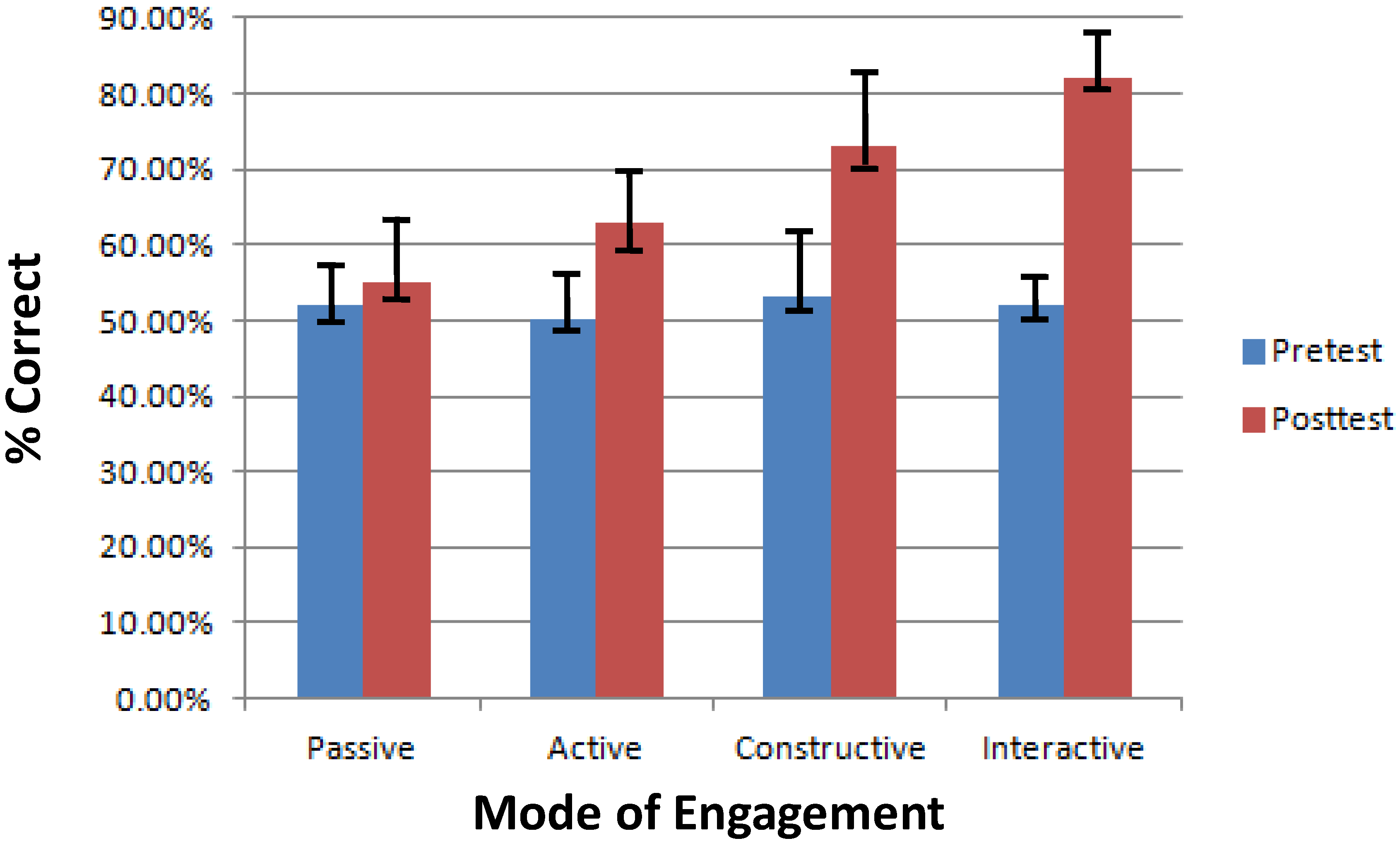

5. Modern Intelligent Tutoring Systems

- Passive learning is where the student listens to or receives the teacher’s instructions without displaying any overt behavior that signifies high intellectual engagement. It is classified as the mode with the lowest receptiveness to learning.

- Active learning is defined as the student taking some action in the learning process, such as choosing one of the presented options or pointing or gesturing to an answer. Active learning is classified as a higher learning mode than passive learning.

- Constructive learning is where a learner contributes to what is being taught through an overt display of attentiveness and active participation in discussion. This can also include, for instance, a student creating a diagram of a text-based solution or giving a presentation on an idea.

- Interactive learning refers to a student’s ability to combine constructive learning with participation in, or interaction with, the environment, be it a group discussion or generating knowledge that goes beyond what has been taught up to that point.
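The four modes above form an ordered hierarchy, which matches the ICAP (Interactive, Constructive, Active, Passive) framework of Chi and Wylie listed in the references. A minimal Python sketch of how an ITS might encode this ordering follows; the enum, the behavior labels, and the `highest_mode` helper are hypothetical illustrations and are not taken from any cited system.

```python
from enum import IntEnum


class EngagementMode(IntEnum):
    """Hypothetical encoding of the four engagement modes, ordered from
    lowest (PASSIVE) to highest (INTERACTIVE) expected learning benefit."""
    PASSIVE = 1
    ACTIVE = 2
    CONSTRUCTIVE = 3
    INTERACTIVE = 4


# Hypothetical mapping from observed learner behaviors to engagement modes,
# based on the examples given for each mode in the list above.
BEHAVIOR_TO_MODE = {
    "listening": EngagementMode.PASSIVE,
    "selecting_option": EngagementMode.ACTIVE,
    "pointing_or_gesturing": EngagementMode.ACTIVE,
    "drawing_diagram": EngagementMode.CONSTRUCTIVE,
    "giving_presentation": EngagementMode.CONSTRUCTIVE,
    "group_discussion": EngagementMode.INTERACTIVE,
}


def highest_mode(behaviors):
    """Return the highest engagement mode among the observed behaviors.

    Unrecognized behaviors are ignored; if nothing is recognized,
    PASSIVE is returned as the default.
    """
    modes = [BEHAVIOR_TO_MODE[b] for b in behaviors if b in BEHAVIOR_TO_MODE]
    return max(modes, default=EngagementMode.PASSIVE)


if __name__ == "__main__":
    session = ["listening", "selecting_option", "drawing_diagram"]
    print(highest_mode(session).name)
```

Using `IntEnum` makes the ordering explicit, so comparisons such as `EngagementMode.ACTIVE > EngagementMode.PASSIVE` hold, and taking the maximum over a session’s observed behaviors gives the highest mode the learner reached.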

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Lynch, T.R.; Chapman, A.L.; Rosenthal, M.Z.; Kuo, J.R.; Linehan, M.M. Mechanisms of change in dialectical behavior therapy: Theoretical and empirical observations. J. Clin. Psychol. 2006, 62, 459–480.

- Posner, M.I.; Rothbart, M.K. Educating the Human Brain; American Psychological Association: Washington, DC, USA, 2007.

- Kwong, K.K.; Belliveau, J.W.; Chesler, D.A.; Goldberg, I.E.; Weisskoff, R.M.; Poncelet, B.P.; Kennedy, D.N.; Hoppel, B.E.; Cohen, M.S.; Turner, R. Dynamic magnetic resonance imaging of human brain activity during primary sensory stimulation. Proc. Natl. Acad. Sci. USA 1992, 89, 5675–5679.

- Hagemann, D.; Waldstein, S.R.; Thayer, J.F. Central and autonomic nervous system integration in emotion. Brain Cogn. 2003, 52, 79–87.

- Engelke, U.; Darcy, D.P.; Mulliken, G.H.; Bosse, S.; Martini, M.G.; Arndt, S.; Antons, J.N.; Chan, K.Y.; Ramzan, N.; Brunnström, K. Psychophysiology-based QoE assessment: A survey. IEEE J. Sel. Top. Signal Process. 2017, 11, 6–21.

- Kunze, K.; Strohmeier, D. Examining subjective evaluation methods used in multimedia Quality of Experience research. In Proceedings of the 2012 Fourth International Workshop on IEEE Quality of Multimedia Experience (QoMEX), Yarra Valley, Australia, 5–7 July 2012; pp. 51–56.

- Levenson, R.W.; Ekman, P.; Heider, K.; Friesen, W.V. Emotion and autonomic nervous system activity in the Minangkabau of West Sumatra. J. Personal. Soc. Psychol. 1992, 62, 972.

- Ekman, P.; Friesen, W.V.; O’Sullivan, M.; Chan, A.; Diacoyanni-Tarlatzis, I.; Heider, K.; Krause, R.; LeCompte, W.A.; Pitcairn, T.; Ricci-Bitti, P.E.; et al. Universals and cultural differences in the judgments of facial expressions of emotion. J. Personal. Soc. Psychol. 1987, 53, 712.

- Parrott, W.G. Emotions in Social Psychology: Essential Readings; Psychology Press: Hove, UK, 2001.

- Russell, J.A. A circumplex model of affect. J. Personal. Soc. Psychol. 1980, 39, 1161.

- Plutchik, R. The nature of emotions: Human emotions have deep evolutionary roots, a fact that may explain their complexity and provide tools for clinical practice. Am. Sci. 2001, 89, 344–350.

- Kulik, J.A.; Fletcher, J. Effectiveness of intelligent tutoring systems: A meta-analytic review. Rev. Educ. Res. 2016, 86, 42–78.

- Ma, W.; Adesope, O.O.; Nesbit, J.C.; Liu, Q. Intelligent tutoring systems and learning outcomes: A meta-analysis. J. Educ. Psychol. 2014, 106, 901.

- Nye, B.D. Intelligent tutoring systems by and for the developing world: A review of trends and approaches for educational technology in a global context. Int. J. Artif. Intell. Educ. 2015, 25, 177–203.

- Ahuja, N.J.; Sille, R. A critical review of development of intelligent tutoring systems: Retrospect, present and prospect. Int. J. Comput. Sci. Issues (IJCSI) 2013, 10, 39.

- Malekzadeh, M.; Mustafa, M.B.; Lahsasna, A. A Review of Emotion Regulation in Intelligent Tutoring Systems. J. Educ. Technol. Soc. 2015, 18, 435–445.

- Steenbergen-Hu, S.; Cooper, H. A meta-analysis of the effectiveness of intelligent tutoring systems on K–12 students’ mathematical learning. J. Educ. Psychol. 2013, 105, 970.

- Steenbergen-Hu, S.; Cooper, H. A meta-analysis of the effectiveness of intelligent tutoring systems on college students’ academic learning. J. Educ. Psychol. 2014, 106, 331.

- Sodhro, A.H.; Luo, Z.; Sangaiah, A.K.; Baik, S.W. Mobile edge computing based QoS optimization in medical healthcare applications. Int. J. Inf. Manag. 2018.

- Leidner, D.E.; Jarvenpaa, S.L. The information age confronts education: Case studies on electronic classrooms. Inf. Syst. Res. 1993, 4, 24–54.

- Smith, D.H. Situational Instruction: A Strategy for Facilitating the Learning Process. Lifelong Learn. 1989, 12, 5–9.

- Bradáč, V.; Kostolányová, K. Intelligent Tutoring Systems. J. Intell. Syst. 2017, 26, 717–727.

- Salaberry, M.R. The use of technology for second language learning and teaching: A retrospective. Mod. Lang. J. 2001, 85, 39–56.

- Brown, E. Mobile learning explorations at the Stanford Learning Lab. Speak. Comput. 2001, 55, 112–120.

- Chinnery, G.M. Emerging technologies. Going to the mall: Mobile assisted language learning. Lang. Learn. Technol. 2006, 10, 9–16.

- Dell, A.G.; Newton, D.A.; Petroff, J.G. Assistive Technology in the Classroom: Enhancing the School Experiences of Students with Disabilities; Pearson Merrill Prentice Hall: Upper Saddle River, NJ, USA, 2008.

- VanLehn, K. The relative effectiveness of human tutoring, intelligent tutoring systems, and other tutoring systems. Educ. Psychol. 2011, 46, 197–221.

- Evens, M.; Michael, J. One-on-One Tutoring by Humans and Machines; Computer Science Department, Illinois Institute of Technology: Chicago, IL, USA, 2006.

- Graesser, A.C.; VanLehn, K.; Rosé, C.P.; Jordan, P.W.; Harter, D. Intelligent tutoring systems with conversational dialogue. AI Magazine, 15 December 2001.

- Core, M.G.; Moore, J.D.; Zinn, C. The role of initiative in tutorial dialogue. In Proceedings of the Tenth Conference on European Chapter of the Association for Computational Linguistics-Volume 1. Association for Computational Linguistics, Budapest, Hungary, 12–17 April 2003; pp. 67–74.

- Lepper, M.R.; Woolverton, M. The wisdom of practice: Lessons learned from the study of highly effective tutors. In Improving Academic Achievement: Impact of Psychological Factors on Education; Academic Press: San Diego, CA, USA, 2002; pp. 135–158.

- VanLehn, K.; Graesser, A.C.; Jackson, G.T.; Jordan, P.; Olney, A.; Rosé, C.P. When are tutorial dialogues more effective than reading? Cognit. Sci. 2007, 31, 3–62.

- VanLehn, K.; Jordan, P.W.; Rosé, C.P.; Bhembe, D.; Böttner, M.; Gaydos, A.; Makatchev, M.; Pappuswamy, U.; Ringenberg, M.; Roque, A.; et al. The architecture of Why2-Atlas: A coach for qualitative physics essay writing. In Proceedings of the International Conference on Intelligent Tutoring Systems, San Sebastián, Spain, 2–7 June 2002; pp. 158–167.

- Nye, B.D.; Graesser, A.C.; Hu, X. AutoTutor and family: A review of 17 years of natural language tutoring. Int. J. Artif. Intell. Educ. 2014, 24, 427–469.

- Koelstra, S.; Yazdani, A.; Soleymani, M.; Muhl, C.; Lee, J.S.; Nijholt, A.; Pun, T.; Ebrahimi, T.; Patras, I. Single trial classification of EEG and peripheral physiological signals for recognition of emotions induced by music videos. In Proceedings of the International Conference on Brain Informatics, Toronto, ON, Canada, 28–30 August 2010; pp. 89–100.

- Koelstra, S.; Muhl, C.; Soleymani, M.; Lee, J.S.; Yazdani, A.; Ebrahimi, T.; Pun, T.; Nijholt, A.; Patras, I. DEAP: A database for emotion analysis: Using physiological signals. IEEE Trans. Affect. Comput. 2012, 3, 18–31.

- Chanel, G.; Kronegg, J.; Grandjean, D.; Pun, T. Emotion assessment: Arousal evaluation using EEG’s and peripheral physiological signals. In Proceedings of the International Workshop on Multimedia Content Representation, Classification and Security, Istanbul, Turkey, 11–13 September 2006; pp. 530–537.

- Zywietz, C. A Brief History of Electrocardiography-Progress through Technology. Available online: https://www.scribd.com/document/289656762/A-Brief-History-of-Electrocardiography-by-Burch-Chr-Zywietz (accessed on 5 December 2018).

- Berntson, G.G.; Thomas Bigger, J.; Eckberg, D.L.; Grossman, P.; Kaufmann, P.G.; Malik, M.; Nagaraja, H.N.; Porges, S.W.; Saul, J.P.; Stone, P.H.; et al. Heart rate variability: Origins, methods, and interpretive caveats. Psychophysiology 1997, 34, 623–648.

- Furness, J.B. The enteric nervous system: Normal functions and enteric neuropathies. Neurogastroenterol. Motil. 2008, 20, 32–38.

- Willis, W. The Autonomic Nervous System and Its Central Control. Available online: https://www.scribd.com/document/362250855/The-Autonomic-Nervous-System-and-Its-Central-Control (accessed on 5 December 2018).

- Goldberger, A.L.; West, B.J. Applications of nonlinear dynamics to clinical cardiology. Ann. N. Y. Acad. Sci. 1987, 504, 195–213.

- Pincus, S.M. Approximate entropy as a measure of system complexity. Proc. Natl. Acad. Sci. USA 1991, 88, 2297–2301.

- Acharya, U.R.; Joseph, K.P.; Kannathal, N.; Lim, C.M.; Suri, J.S. Heart rate variability: A review. Med. Biol. Eng. Comput. 2006, 44, 1031–1051.

- Nemati, E.; Deen, M.J.; Mondal, T. A wireless wearable ECG sensor for long-term applications. IEEE Commun. Mag. 2012, 50, 36–43.

- Badcock, N.; Mousikou, P.; Mahajan, Y.; De Lissa, P.; Thie, J.; McArthur, G. Validation of the Emotiv EPOC® EEG gaming system for measuring research quality auditory ERPs. PeerJ 2013, 1.

- Irani, R. Computer Vision Based Methods for Detection and Measurement of Psychophysiological Indicators. Ph.D. Thesis, Aalborg Universitetsforlag, Aalborg, Denmark, 2017.

- Irani, R.; Nasrollahi, K.; Moeslund, T.B. Improved pulse detection from head motions using DCT. In Proceedings of the 2014 International Conference on IEEE Computer Vision Theory and Applications (VISAPP), Lisbon, Portugal, 5–8 January 2014; Volume 3, pp. 118–124.

- Wusk, G.; Gabler, H. Non-Invasive Detection of Respiration and Heart Rate with a Vehicle Seat Sensor. Sensors 2018, 18, 1463.

- Cardone, D.; Merla, A. New frontiers for applications of thermal infrared imaging devices: Computational psychophysiology in the neurosciences. Sensors 2017, 17, 1042.

- Frasson, C.; Chalfoun, P. Managing learner’s affective states in intelligent tutoring systems. In Studies in Computational Intelligence; Springer: Heidelberg, Germany, 2010.

- Brawner, K.W.; Goldberg, B.S. Real-time monitoring of ECG and GSR signals during computer-based training. In Proceedings of the International Conference on Intelligent Tutoring Systems, Chania, Greece, 14–18 June 2012; pp. 72–77.

- Kim, J.; André, E. Emotion recognition based on physiological changes in music listening. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 2067–2083.

- Pour, P.A.; Hussain, M.S.; AlZoubi, O.; D’Mello, S.; Calvo, R.A. The impact of system feedback on learners’ affective and physiological states. In Proceedings of the International Conference on Intelligent Tutoring Systems, Pittsburgh, PA, USA, 14–18 June 2010; pp. 264–273.

- Hussain, M.S.; Monkaresi, H.; Calvo, R.A. Categorical vs. dimensional representations in multimodal affect detection during learning. In Proceedings of the International Conference on Intelligent Tutoring Systems, Chania, Greece, 14–18 June 2012; pp. 78–83.

- MATLAB and Statistics Toolbox Release 2017b; The MathWorks, Inc.: Natick, MA, USA, 2017.

- Barron-Estrada, M.L.; Zatarain-Cabada, R.; Oramas-Bustillos, R.; Gonzalez-Hernandez, F. Sentiment Analysis in an Affective Intelligent Tutoring System. In Proceedings of the 2017 IEEE 17th International Conference on IEEE Advanced Learning Technologies (ICALT), Timisoara, Romania, 3–7 July 2017; pp. 394–397.

- Arnau, D.; Arevalillo-Herraez, M.; Gonzalez-Calero, J.A. Emulating human supervision in an intelligent tutoring system for arithmetical problem solving. IEEE Trans. Learn. Technol. 2014, 7, 155–164.

- D’Mello, S.K.; Graesser, A. Language and discourse are powerful signals of student emotions during tutoring. IEEE Trans. Learn. Technol. 2012, 5, 304–317.

- Mohanan, R.; Stringfellow, C.; Gupta, D. An emotionally intelligent tutoring system. In Proceedings of the 2017 IEEE Computing Conference, London, UK, 18–20 July 2017; pp. 1099–1107.

- Njeru, A.M.; Paracha, S. Learning analytics: Supporting at-risk student through eye-tracking and a robust intelligent tutoring system. In Proceedings of the 2017 International Conference on IEEE Applied System Innovation (ICASI), Sapporo, Japan, 13–17 May 2017; pp. 1002–1005.

- Jerritta, S.; Murugappan, M.; Nagarajan, R.; Wan, K. Physiological signals based human emotion recognition: A review. In Proceedings of the 2011 IEEE 7th International Colloquium on IEEE Signal Processing and its Applications (CSPA), Penang, Malaysia, 4–6 March 2011; pp. 410–415.

- Kim, K.H.; Bang, S.W.; Kim, S.R. Emotion recognition system using short-term monitoring of physiological signals. Med. Biol. Eng. Comput. 2004, 42, 419–427.

- Glick, G.; Braunwald, E.; Lewis, R.M. Relative roles of the sympathetic and parasympathetic nervous systems in the reflex control of heart rate. Circ. Res. 1965, 16, 363–375.

- Zanstra, Y.J.; Johnston, D.W. Cardiovascular reactivity in real life settings: Measurement, mechanisms and meaning. Biol. Psychol. 2011, 86, 98–105.

- Obrist, P.A. Cardiovascular Psychophysiology: A Perspective; Springer: Berlin/Heidelberg, Germany, 2012.

- Tomaka, J.; Blascovich, J.; Kibler, J.; Ernst, J.M. Cognitive and physiological antecedents of threat and challenge appraisal. J. Personal. Soc. Psychol. 1997, 73, 63.

- Zanstra, Y.J.; Johnston, D.W.; Rasbash, J. Appraisal predicts hemodynamic reactivity in a naturalistic stressor. Int. J. Psychophysiol. 2010, 77, 35–42.

- Vrijkotte, T.G.; Van Doornen, L.J.; De Geus, E.J. Effects of work stress on ambulatory blood pressure, heart rate, and heart rate variability. Hypertension 2000, 35, 880–886.

- Brand, S.; Reimer, T.; Opwis, K. How do we learn in a negative mood? Effects of a negative mood on transfer and learning. Learn. Instr. 2007, 17, 1–16.

- Pekrun, R.; Goetz, T.; Titz, W.; Perry, R.P. Academic emotions in students’ self-regulated learning and achievement: A program of qualitative and quantitative research. Educ. Psychol. 2002, 37, 91–105.

- Picard, R.W. Affective computing: Challenges. Int. J. Hum. Comput. Stud. 2003, 59, 55–64.

- Levine, L.J.; Stein, N.L. Making sense out of emotion: The representation and use of goal-structured knowledge. In Psychological and Biological Approaches to Emotion; Psychology Press: Hove, UK, 2013; pp. 63–92.

- Kort, B.; Reilly, R. Analytical Models of Emotions, Learning and Relationships: Towards an Affect-Sensitive Cognitive Machine. Available online: https://www.media.mit.edu/publications/analytical-models-of-emotions-learning-and-relationships-towards-an-affect-sensitive-cognitive-machine-january/ (accessed on 5 December 2018).

- Han, J.; Pei, J.; Kamber, M. Data Mining: Concepts and Techniques; Elsevier: Amsterdam, The Netherlands, 2011.

- Kozma, R.; Freeman, W.J. Chaotic resonance—Methods and applications for robust classification of noisy and variable patterns. Int. J. Bifurc. Chaos 2001, 11, 1607–1629.

- Kozma, R.; Freeman, W.J. Classification of EEG patterns using nonlinear dynamics and identifying chaotic phase transitions. Neurocomputing 2002, 44, 1107–1112.

- Bal, E.; Harden, E.; Lamb, D.; Van Hecke, A.V.; Denver, J.W.; Porges, S.W. Emotion recognition in children with autism spectrum disorders: Relations to eye gaze and autonomic state. J. Autism Dev. Disord. 2010, 40, 358–370.

- Martinez, R.; de Ipina, K.L.; Irigoyen, E.; Asla, N.; Garay, N.; Ezeiza, A.; Fajardo, I. Emotion elicitation oriented to the development of a human emotion management system for people with intellectual disabilities. In Trends in Practical Applications of Agents and Multiagent Systems; Springer: Berlin/Heidelberg, Germany, 2010; pp. 689–696.

- Kessous, L.; Castellano, G.; Caridakis, G. Multimodal emotion recognition in speech-based interaction using facial expression, body gesture and acoustic analysis. J. Multimodal User Interfaces 2010, 3, 33–48.

- Raouzaiou, A.; Ioannou, S.; Karpouzis, K.; Tsapatsoulis, N.; Kollias, S.; Cowie, R. An intelligent scheme for facial expression recognition. In Artificial Neural Networks and Neural Information Processing—ICANN/ICONIP 2003; Springer: Berlin/Heidelberg, Germany, 2003; pp. 1109–1116.

- Bethel, C.L.; Salomon, K.; Murphy, R.R.; Burke, J.L. Survey of psychophysiology measurements applied to human-robot interaction. In Proceedings of the 16th IEEE International Symposium on IEEE Robot and Human Interactive Communication (RO-MAN 2007), Jeju, Korea, 26–29 August 2007; pp. 732–737.

- Fernandez-Egea, E.; Parellada, E.; Lomena, F.; Falcon, C.; Pavia, J.; Mane, A.; Horga, G.; Bernardo, M. 18FDG PET study of amygdalar activity during facial emotion recognition in schizophrenia. Eur. Arch. Psychiatry Clin. Neurosci. 2010, 260, 69.

- Teeni, D.; Carey, J.M.; Zhang, P. Human-Computer Interaction: Developing Effective Organizational Information Systems; John Wiley & Sons: Hoboken, NJ, USA, 2005.

- Karray, F.; Alemzadeh, M.; Saleh, J.A.; Arab, M.N. Human-Computer Interaction: Overview on State of the Art. Int. J. Smart Sens. Intell. Syst. 2008, 1, 137–159.

- Robles-De-La-Torre, G. The importance of the sense of touch in virtual and real environments. IEEE Multimedia 2006, 13, 24–30.

- Hayward, V.; Astley, O.R.; Cruz-Hernandez, M.; Grant, D.; Robles-De-La-Torre, G. Haptic interfaces and devices. Sens. Rev. 2004, 24, 16–29.

- Vince, J. Introduction to Virtual Reality; Springer: Berlin/Heidelberg, Germany, 2004.

- Sodhro, A.H.; Pirbhulal, S.; Qaraqe, M.; Lohano, S.; Sodhro, G.H.; Junejo, N.U.R.; Luo, Z. Power control algorithms for media transmission in remote healthcare systems. IEEE Access 2018, 6, 42384–42393.

- Magsi, H.; Sodhro, A.H.; Chachar, F.A.; Abro, S.A.K.; Sodhro, G.H.; Pirbhulal, S. Evolution of 5G in Internet of medical things. In Proceedings of the 2018 International Conference on IEEE Computing, Mathematics and Engineering Technologies (iCoMET), Sukkur, Pakistan, 3–4 March 2018; pp. 1–7.

- Sodhro, A.H.; Shaikh, F.K.; Pirbhulal, S.; Lodro, M.M.; Shah, M.A. Medical-QoS based telemedicine service selection using analytic hierarchy process. In Handbook of Large-Scale Distributed Computing in Smart Healthcare; Springer: Berlin/Heidelberg, Germany, 2017; pp. 589–609.

- Sodhro, A.H.; Pirbhulal, S.; Sodhro, G.H.; Gurtov, A.; Muzammal, M.; Luo, Z. A joint transmission power control and duty-cycle approach for smart healthcare system. IEEE Sens. J. 2018.

- Arnau-Gonzalez, P.; Althobaiti, T.; Katsigiannis, S.; Ramzan, N. Perceptual video quality evaluation by means of physiological signals. In Proceedings of the IEEE Ninth International Conference on Quality of Multimedia Experience (QoMEX), Erfurt, Germany, 31 May–2 June 2017; pp. 1–6.

- Wagner, J. Augsburg Biosignal Toolbox (AuBT); University of Augsburg: Augsburg, Germany, 2005.

- Delorme, A.; Makeig, S. EEGLAB: An open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods 2004, 134, 9–21.

- Rigas, G.; Katsis, C.D.; Ganiatsas, G.; Fotiadis, D.I. A user independent, biosignal based, emotion recognition method. In Proceedings of the International Conference on User Modeling, Corfu, Greece, 25–29 July 2007; pp. 314–318.

- Chang, C.Y.; Zheng, J.Y.; Wang, C.J. Based on support vector regression for emotion recognition using physiological signals. In Proceedings of the 2010 International Joint Conference on IEEE Neural Networks (IJCNN), Barcelona, Spain, 18–23 July 2010; pp. 1–7.

- Bracewell, R.N. The Fourier Transform and Its Applications; McGraw-Hill: New York, NY, USA, 1986.

- Daubechies, I. The wavelet transform, time-frequency localization and signal analysis. IEEE Trans. Inf. Theory 1990, 36, 961–1005.

- Davidson, R.J. Affective neuroscience and psychophysiology: Toward a synthesis. Psychophysiology 2003, 40, 655–665.

- Abadi, M.K.; Subramanian, R.; Kia, S.M.; Avesani, P.; Patras, I.; Sebe, N. DECAF: MEG-based multimodal database for decoding affective physiological responses. IEEE Trans. Affect. Comput. 2015, 6, 209–222.

- Li, L.; Chen, J.H. Emotion recognition using physiological signals from multiple subjects. In Proceedings of the IIH-MSP’06 International Conference on Intelligent Information Hiding and Multimedia Signal Processing, Kaohsiung, Taiwan, 21–23 November 2006; pp. 355–358.

- Maaoui, C.; Pruski, A. Emotion recognition through physiological signals for human-machine communication. In Cutting Edge Robotics 2010; InTech: Rijeka, Croatia, 2010.

- Mauldin, M.L. Chatterbots, TinyMUDs, and the Turing test: Entering the Loebner Prize competition. AAAI 1994, 94, 16–21.

- Masche, J.; Le, N.T. A Review of Technologies for Conversational Systems. In Advances in Intelligent Systems and Computing; Springer: Cham, Switzerland, 2017.

- Shah, H. Chatterbox Challenge 2005: Geography of the Modern Eliza. In Proceedings of the 3rd International Workshop on Natural Language Understanding and Cognitive Science (NLUCS 2006), Paphos, Cyprus, 23–27 May 2006; pp. 133–138.

- Hutchens, J.L. Conversation simulation and sensible surprises. In Parsing the Turing Test; Springer: Berlin/Heidelberg, Germany, 2009; pp. 325–342.

- Abdul-Kader, S.A.; Woods, J. Survey on chatbot design techniques in speech conversation systems. Int. J. Adv. Comput. Sci. Appl. 2015, 6, 72–80.

- Bradeško, L.; Mladenić, D. A survey of chatbot systems through a Loebner Prize competition. In Proceedings of the Slovenian Language Technologies Society Eighth Conference of Language Technologies, Ljubljana, Slovenia, 8–9 October 2012; pp. 34–37.

- Graesser, A.C.; Chipman, P.; Haynes, B.C.; Olney, A. AutoTutor: An intelligent tutoring system with mixed-initiative dialogue. IEEE Trans. Educ. 2005, 48, 612–618.

- Ivanova, M. Researching affective computing techniques for intelligent tutoring systems. In Proceedings of the 2013 International Conference on IEEE Interactive Collaborative Learning (ICL), Kazan, Russia, 25–27 September 2013; pp. 596–602.

- Chi, M.T.; Wylie, R. The ICAP framework: Linking cognitive engagement to active learning outcomes. Educ. Psychol. 2014, 49, 219–243.

- Menekse, M.; Stump, G.S.; Krause, S.; Chi, M.T. Differentiated overt learning activities for effective instruction in engineering classrooms. J. Eng. Educ. 2013, 102, 346–374.

- Aleven, V.; Roll, I.; McLaren, B.M.; Koedinger, K.R. Automated, unobtrusive, action-by-action assessment of self-regulation during learning with an intelligent tutoring system. Educ. Psychol. 2010, 45, 224–233.

- Petrovica, S.; Ekenel, H.K. Emotion Recognition for Intelligent Tutoring. In Proceedings of the 4th International Workshop on Intelligent Educational Systems, Technology-Enhanced Learning and Technology Transfer Models (INTEL-EDU), Prague, Czech Republic, 14–16 September 2016.

- Xiaomei, T.; Niu, Q.; Jackson, M.; Ratcliffe, M. Classification of video lecture learners’ cognitive and negative emotional states using a Bayesian belief network. Filomat 2018, 32, 41.

- d Baker, R.S.; Gowda, S.M.; Wixon, M.; Kalka, J.; Wagner, A.Z.; Salvi, A.; Aleven, V.; Kusbit, G.W.; Ocumpaugh, J.; Rossi, L. Towards Sensor-Free Affect Detection in Cognitive Tutor Algebra. In Proceedings of the 5th International Educational Data Mining Society, Chania, Greece, 19–21 June 2012.

- Burleson, W. Affective Learning Companions: Strategies for Empathetic Agents with Real-Time Multimodal Affective Sensing to Foster Meta-Cognitive and Meta-Affective Approaches to Learning, Motivation, and Perseverance. Ph.D. Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 2006.

- Rowe, J.; Mott, B.; McQuiggan, S.; Robison, J.; Lee, S.; Lester, J. Crystal Island: A narrative-centered learning environment for eighth grade microbiology. In Proceedings of the 14th International Conference on Artificial Intelligence in Education, Brighton, UK, 6–10 July 2009; pp. 11–20.

- Alexander, S.; Sarrafzadeh, A.; Hill, S. Easy with Eve: A functional affective tutoring system. In Proceedings of the 8th International Conference on ITS, Jhongli, Taiwan, 26–30 June 2006; pp. 5–12.

- Zakharov, K.; Mitrovic, A.; Ohlsson, S. Feedback Micro-Engineering in EER-Tutor. In Proceedings of the 12th International Conference on Artificial Intelligence in Education, Amsterdam, The Netherlands, 18–22 July 2005.

- Gobert, J. Inq-ITS: Design decisions used for an inquiry intelligent system that both assesses and scaffolds students as they learn. In Handbook of Cognition and Assessment; Wiley/Blackwell: New York, NY, USA, 2015.

- Poel, M.; op den Akker, R.; Heylen, D.; Nijholt, A. Emotion based agent architectures for tutoring systems the INES architecture. In Proceedings of the Workshop on Affective Computational Entities (ACE), Vienna, Austria, 13–16 April 2004.

- Litman, D.J.; Silliman, S. ITSPOKE: An Intelligent Tutoring Spoken Dialogue System; Demonstration Papers at HLT-NAACL 2004; Association for Computational Linguistics: Stroudsburg, PA, USA, 2004; pp. 5–8.

- Arroyo, I.; Wixon, N.; Allessio, D.; Woolf, B.; Muldner, K.; Burleson, W. Collaboration improves student interest in online tutoring. In Proceedings of the International Conference on Artificial Intelligence in Education, Wuhan, China, 28 June–2 July 2017; pp. 28–39.

- Azevedo, R.; Witherspoon, A.M.; Chauncey, A.; Burkett, C.; Fike, A. MetaTutor: A MetaCognitive Tool for Enhancing Self-Regulated Learning. In Proceedings of the AAAI Fall Symposium: Cognitive and Metacognitive Educational Systems, Arlington, VA, USA, 5–7 November 2009.

- Jaques, P.A.; Seffrin, H.; Rubi, G.; de Morais, F.; Ghilardi, C.; Bittencourt, I.I.; Isotani, S. Rule-based expert systems to support step-by-step guidance in algebraic problem solving: The case of the tutor PAT2Math. Expert Syst. Appl. 2013, 40, 5456–5465.

- Conati, C.; Chabbal, R.; Maclaren, H. A study on Using Biometric Sensors for Monitoring User Emotions in Educational Games. Available online: http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.132.3171 (accessed on 5 December 2018).

- Mavrikis, M. Data-driven modelling of students’ interactions in an ILE. In Proceedings of the Educational Data Mining 2008, Montreal, QC, Canada, 20–21 June 2008.

| Source | Measurement Techniques | Objective | Methodology | Results |
|---|---|---|---|---|
| Brawner and Goldberg [52] | ECG and GSR. Measured: heart rate; mean, SD, and energy of the GSR signal | To train learners in bilateral negotiation using an ITS, and to monitor stress and anxiety levels during the learning process | CMT, a web-based prototype. Participants (sample size of 35) interacted via static dialog | Anxiety, boredom, stress, and frustration were assessed using GSR. For well-defined, uninterrupted learning, the average signal densities were 67% lower than for interrupted learning, indicating much lower anxiety and stress. |
| Chanel, et al. [37] | EEG and plethysmograph. Measured: arousal and blood pressure | To assess the impact of stimuli on test subjects using the valence-arousal model and mathematical classifiers | Four test subjects were exposed to various videos; the measured arousal and valence values were compared with a Self-Assessment Manikin (SAM) quiz | The Bayes classifier achieved an average accuracy of 54% between the subjects’ SAM scores and the measured EEG readings. EEG signals were found to be reliable indicators, with up to 72% accuracy for one test subject. |
| Kim and Andre [53] | EMG, ECG, GSR, and respiration. Measured: skin conductivity, heart rate, and breathing rate | Emotion recognition based on the physiological response to music listening | Subjects listened to music, and their responses were classified on the valence-arousal model using a novel emotion-specific multilevel dichotomous classification (EMDC) technique | Emotion recognition on the arousal-valence model reached 95% accuracy for subject-dependent and 70% for subject-independent classification using EMDC, higher than the previously established PLDA classifier. |
| Pour, et al. [54] | ECG, EMG, and GSR. Measured: skin conductivity, heart rate, and facial muscle signals | To assess the correlation between physiological responses and subjects’ self-reported learning when taught with feedback-based learning software covering hardware, the Internet, and operating systems | Autotutor, dialog- and feedback-based learning software. Using Autotutor, 16 learners self-reported their learning progress while their physiological signals were monitored | Using a support vector machine (SVM) classifier, the accuracy of classifying emotions against self-reported learning reached 84%, which demonstrates the feasibility of the approach |
| Hussain, et al. [55] | ECG, EMG, respiration, and GSR. Measured: skin conductivity, heart rate, facial muscle signals, and breathing rate | To establish emotion classification for subjects interacting with an ITS, in both categorical (frustration, confusion, etc.) and dimensional (valence-arousal) terms | Feature extraction from the data was implemented in MATLAB [56] | Baseline accuracy was 33%. For video measurements, accuracy reached 62% for dimensional and 45% for categorical classification. For a combination of video and physiological signals, accuracy reached 64.63% |
| Barron-Estrada, et al. [57] | N/A | To analyze learners’ sentiments and incorporate the results into an ITS | A Sentiment Analysis Module, a computer-based system, collected subjects’ opinions in terms of likes and dislikes and classified them according to self-reported correctness | Of the 178 subject opinions collected, classification accuracy was 80.75% |
| Arnau, et al. [58] | N/A | Learning arithmetical problem-solving via intelligent tutoring, aimed at both expert and novice teachers | Using a search technique, the user’s answers were compared against a database of steps, and math problems were presented and solved step by step. | An overall accuracy of 82.14% was achieved across the 75 subjects. |
| D’Mello and Graesser [59] | Direct measurement of expressions | Predicting student emotions such as boredom and engagement while using an ITS named Autotutor | The research used Autotutor, a validated ITS that helps students learn topics in science and computing through mixed conversational dialog between the student and the tutor. There were 28 subjects in this study | The results showed that detecting affect in text, whether by identifying explicit articulations of emotion or by monitoring the valence of content words, is not always a viable solution. |
| Mohanan, et al. [60] | Face detection | To examine the possibility of integrating an emotion detection system within an ITS | A class of subjects was observed while being taught by a teacher; the samples obtained in this way can be used to train the ITS. The ITS assessed the learner’s knowledge and presented more or less challenging tasks based on this assessment. | Capturing emotions by means of pictures and videos may not be an effective emotion recognition strategy: students who are not very expressive may not find the proposed ITS effective. A more sophisticated way of identifying emotions could improve the quality of the research. |
| Njeru and Paracha [61] | Eye tracking | An ITS using model tracing and knowledge tracing, to assess the feasibility of using eye tracking in learning | Autotutor, a dialog and feedback-based learning software. Using Autotutor, 16 learners were asked to self-report their learning progress while being monitored for physiological signals | The paper explains how eye-tracking data can be used to detect learning behavior, but it does not provide enough evidence to show the feasibility of this method for emotion detection. |
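
Several studies in the table above (e.g., Chanel et al. [37] and Kim and Andre [53]) map physiological features onto the valence-arousal model. The sketch below illustrates the general idea behind a two-level dichotomous scheme in the spirit of EMDC: first decide between high and low arousal, then decide valence with a model trained only on samples from the predicted arousal class. It is a simplified sketch, not the authors’ method: the three features, the toy training values, and the nearest-centroid classifier used at each level are all assumptions chosen for brevity.

```python
import math
from statistics import mean

# Hypothetical features per sample: (skin conductance, heart rate, corrugator EMG),
# each already normalized to [0, 1]. Values are invented for illustration only.
TRAIN_X = [
    (0.90, 0.85, 0.15), (0.85, 0.80, 0.20),  # high arousal, positive valence
    (0.88, 0.90, 0.85), (0.92, 0.82, 0.80),  # high arousal, negative valence
    (0.20, 0.25, 0.10), (0.15, 0.30, 0.20),  # low arousal, positive valence
    (0.25, 0.20, 0.80), (0.18, 0.28, 0.90),  # low arousal, negative valence
]
TRAIN_Y = ([("high", "positive")] * 2 + [("high", "negative")] * 2
           + [("low", "positive")] * 2 + [("low", "negative")] * 2)


def centroid(points):
    """Component-wise mean of a non-empty list of feature vectors."""
    return tuple(mean(column) for column in zip(*points))


def nearest(x, centroids):
    """Return the label whose centroid is closest to x (Euclidean distance)."""
    return min(centroids, key=lambda label: math.dist(x, centroids[label]))


class TwoLevelDichotomousClassifier:
    """Level 1: high vs. low arousal. Level 2: positive vs. negative valence,
    using a separate model trained only on samples of the predicted arousal class."""

    def fit(self, X, y):
        groups = {}  # arousal label -> list of (features, valence label)
        for x, (arousal, valence) in zip(X, y):
            groups.setdefault(arousal, []).append((x, valence))
        # Level-1 model: one centroid per arousal class.
        self.level1 = {a: centroid([x for x, _ in rows]) for a, rows in groups.items()}
        # Level-2 models: one set of valence centroids per arousal class.
        self.level2 = {}
        for a, rows in groups.items():
            by_valence = {}
            for x, v in rows:
                by_valence.setdefault(v, []).append(x)
            self.level2[a] = {v: centroid(pts) for v, pts in by_valence.items()}
        return self

    def predict(self, x):
        arousal = nearest(x, self.level1)
        valence = nearest(x, self.level2[arousal])
        return arousal, valence


clf = TwoLevelDichotomousClassifier().fit(TRAIN_X, TRAIN_Y)
print(clf.predict((0.87, 0.83, 0.82)))  # ('high', 'negative')
print(clf.predict((0.22, 0.26, 0.12)))  # ('low', 'positive')
```

Splitting the four-quadrant problem into two binary decisions lets each second-level model specialize on one arousal class. Roughly speaking, a high-arousal, negative-valence prediction corresponds to states such as frustration, and a low-arousal, negative-valence prediction to states such as boredom.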

| ITS | Sensors Used | Classification | Recognized Emotions |
|---|---|---|---|
| Autotutor [110] | Video camera, pressure-sensitive chair | Extraction of eye patterns and posture; analysis of log files. Classifiers: C4.5 decision trees, neural networks, Naive Bayes, logistic regression, and nearest neighbor. | Frustrated, confused, bored, and neutral. |
| Cognitive BBN [116] | Video camera | Bayesian belief networks | Frustrated, confused, bored, happy, and interested. |
| Algebra Tutor [117] | N/A | Analysis of features such as activity history and student behavior. Classifiers: K* algorithm, J48 decision trees, step regression, Naive Bayes, REP-Trees. | Bored, concentrated, frustrated, and confused. |
| Affective learning companions [118] | Mouse, posture chair, video camera, skin conductance bracelet | Hidden Markov Models, Support Vector Machines, and Dynamic Bayesian Networks | Boredom, confusion, flow, and frustration. |
| Dynamic Bayesian Network (DBN) modeler [119] | N/A | Analysis of log files, interviews, and surveys; emotions are modeled through a DBN. | Enthusiastic, anxious, frustrated, bored, curious, confused, and focused. |
| Easy Eve [120] | Video camera | Analysis of facial features. Classifier: SVM. | Scared, surprised, smiling, laughing, disgusted, neutral, angry, and sad. |
| Arroyo’s ITS [120] | Mouse, posture chair, camera, bracelet | Linear regression. | Confidence, frustration, excitement, and interest. |
| EER-Tutor [121] | Video camera | Extraction and tracking of facial features such as eyebrows and lips. Features are classified by comparing the calculated distance of each facial feature against the neutral face. | Happy, angry, smiling, frustrated, and neutral. |
| Facial Features-based Tutor [115] | Video camera | Extraction and tracking of facial features and Regions of Interest (ROI). Classifiers: fuzzy system and neural network. | Scared, angry, disgusted, happy, sad, neutral, and surprised. |
| Gnu-Tutor [111] | Eye tracker | Analysis of log files; gaze pattern extraction and eye tracking. | Disinterested and bored. |
| InqITS [122] | N/A | Analysis of log files. Classifiers: step regression, J48 decision trees, JRip. | Concentrated, bored, confused, and frustrated. |
| INES-Tutor [123] | N/A | Analysis of the task’s difficulty for the student, activity level, previous progress, number of errors, and error severity. | Confident, worried, sad, and enthusiastic. |
| SPOKE [124] | Microphone | Extraction of acoustic-prosodic, lexical, and dialog features. Answer accuracy is assessed through semantic analysis. | Negative, positive, and neutral emotions. |
| MathSpring [125] | N/A | Analysis of log files, behavior patterns, and self-assessment reports. Classifier: linear regression. | Excited, inactive, confident, worried, interested, bored, satisfied, and frustrated. |
| Meta-Tutor [126] | Eye tracker | Extraction of gaze data features. Classifiers: SVM, random forests, logistic regression, and Naive Bayes. | Bored, curious, and interested. |
| PAT [127] | Video camera | Analysis of log files; tracking and extraction of facial feature points. Emotions are classified via facial action coding. | Disappointed, happy, sad, satisfied, ashamed, grateful, and angry. |
| Prime-Climb [128] | Physiological sensors | Measurement of heart rate, muscle activity, and skin conductivity; analysis of biometric data through unsupervised clustering. | Happy, sad (toward the game); admiration, criticism (toward the pedagogical agent, PA); pride, shame (toward oneself). |
| VALERIE-Tutor [115] | Video camera, microphone, physiological sensors | Measurement of skin conductivity and heart rate; extraction of speech and facial features; analysis of mouse movement. Classifiers: Marquardt back-propagation algorithm, nearest neighbor, linear discriminant function analysis. | Angry, surprised, sad, scared, amused, and frustrated. |
| Mavrikis’s ITS [129] | N/A | Analysis of log files. Classifier: J4.8 decision tree algorithm. | Frustrated, enthusiastic, confused, confident, bored, and happy. |
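
The EER-Tutor row describes classifying facial expressions by comparing tracked features against the learner’s neutral face. A minimal sketch of that idea follows, assuming signed vertical landmark displacement as the per-feature distance and a 1-nearest-neighbor match (nearest neighbor is one of the classifiers listed for other systems in the table) against prototype displacement vectors. The landmark names, pixel coordinates, and prototype values are hypothetical; a real system would track many more points and learn its prototypes from labeled data.

```python
import math

# Hypothetical landmark set; real face trackers define their own landmark schemes.
LANDMARKS = ("left_brow", "right_brow", "left_mouth_corner", "right_mouth_corner", "lower_lip")

# Hypothetical prototype displacement vectors in pixels (positive = moved up),
# invented for illustration; in practice they would be learned from labeled data.
PROTOTYPES = {
    "neutral":   (0, 0, 0, 0, 0),
    "happy":     (0, 0, 4, 4, 0),      # mouth corners lifted
    "surprised": (5, 5, 0, 0, -6),     # brows raised, lower lip dropped
    "angry":     (-3, -3, -1, -1, 0),  # brows lowered, mouth corners slightly down
}


def displacement(neutral, frame):
    """Signed vertical displacement of each landmark relative to the neutral face.

    Landmarks are (x, y) image coordinates with y growing downwards, so a
    landmark that moves up yields a positive value.
    """
    return tuple(neutral[name][1] - frame[name][1] for name in LANDMARKS)


def classify(neutral, frame):
    """Label of the prototype nearest (Euclidean) to the frame's displacement vector."""
    d = displacement(neutral, frame)
    return min(PROTOTYPES, key=lambda label: math.dist(d, PROTOTYPES[label]))


neutral_face = {
    "left_brow": (120, 80), "right_brow": (180, 80),
    "left_mouth_corner": (130, 160), "right_mouth_corner": (170, 160),
    "lower_lip": (150, 170),
}
smiling = dict(neutral_face, left_mouth_corner=(128, 156), right_mouth_corner=(172, 156))
frowning = dict(neutral_face, left_brow=(122, 83), right_brow=(178, 83))

print(classify(neutral_face, smiling))   # happy
print(classify(neutral_face, frowning))  # angry
```

Measuring displacement relative to each learner’s own neutral face, rather than using absolute landmark positions, removes much of the variation due to individual facial geometry, which is the main appeal of the neutral-face comparison.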

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Alqahtani, F.; Ramzan, N. Comparison and Efficacy of Synergistic Intelligent Tutoring Systems with Human Physiological Response. Sensors 2019, 19, 460. https://doi.org/10.3390/s19030460

Alqahtani F, Ramzan N. Comparison and Efficacy of Synergistic Intelligent Tutoring Systems with Human Physiological Response. Sensors. 2019; 19(3):460. https://doi.org/10.3390/s19030460

Chicago/Turabian Style: Alqahtani, Fehaid, and Naeem Ramzan. 2019. "Comparison and Efficacy of Synergistic Intelligent Tutoring Systems with Human Physiological Response" Sensors 19, no. 3: 460. https://doi.org/10.3390/s19030460

APA Style: Alqahtani, F., & Ramzan, N. (2019). Comparison and Efficacy of Synergistic Intelligent Tutoring Systems with Human Physiological Response. Sensors, 19(3), 460. https://doi.org/10.3390/s19030460