1. Introduction

Electroencephalography (EEG) is the standard method for measuring the electrical activity of the brain, with proven efficacy as a tool for understanding cognitive processes and mental disorders. The recent emergence of embedded EEG technology in low-cost wearable devices additionally makes it possible to record EEG in everyday life conditions. Likewise, it offers the possibility of bringing mobile, real-time applications to the consumer, such as neurofeedback, mental fatigue measurement, sleep monitoring or stress reduction [1]. Often running in uncontrolled environments, portable devices are more susceptible than standard EEG systems to contamination by the typical sources of noise, both internal (subject movements, blinks, muscular contraction) and external (electromagnetic interference, power line noise, etc.) [2]. Hence, a fast and robust quality assessment of EEG recordings is crucial in order to provide reliable data for further analysis.

Common ways to assess the quality of an EEG recording system include comparing signal-to-noise ratios (SNR), event-related potentials (ERP) and steady-state visually evoked potentials (SSVEP) simultaneously estimated by different recording systems [3]. Like visual inspection, these approaches apply an off-line strategy that evaluates the general quality of EEG recordings. Although there is no single rhythm, feature, or characteristic of an EEG that must be present to consider it normal, it is generally accepted that normality on an EEG is simply the absence of identifiable abnormalities [4,5]. The statistical definition of a “clear” EEG signal can provide some help in setting threshold values to determine the artefact level of an EEG recording [2]. These thresholds are generally based on the amplitude, skewness and kurtosis of the EEG signal [2,6,7]. Amplifier drifts or instrumental artefacts, for instance, are generally accompanied by large mean shifts of the EEG amplitudes [6]. Some artefacts, like strong muscle activity, have a skewed distribution which can be detected by a kurtosis test [7]. Similar thresholds can also be applied to spectral features to detect instrumental and physiological artefacts on EEG signals [8,9]. Along the same lines, the authors of [10] proposed a method to assess the skin-sensor contact of wearable EEG sensors in several environments such as public parks, offices or homes. Their approach combines several spectral features to establish a decision rule about the quality of this contact, which impacts the EEG quality. Although these threshold-based approaches are commonly used to reject EEG segments, they have two main drawbacks. First, the statistical detection thresholds must be defined manually [7]. Second, the specificity and sensitivity of these procedures in distinguishing between high and low levels of contamination are not straightforward [11].

To address the drawbacks of thresholding, classification-based approaches have been proposed to automatically adapt the decision rule used to detect the artefact contamination level [12,13,14]. In [15], the authors combine EEG and gyroscope signals with support vector machines (SVMs) to detect head movement artefacts. In [16], a fuzzy c-means clustering method is applied to measurements of the fluctuations of the second-order power amplitudes to determine the quality of the EEG signal.

Blind source separation methods like Independent Component Analysis (ICA) can also be used to detect muscle or cardiac artefacts [17], with a visual selection of the component containing the corresponding activity. Appropriate filtering techniques can be applied to physiological recordings (e.g., electrocardiogram or ocular movements) to detect or reduce some artefacts in real time. For instance, motion artefacts can be detected with a gyroscope and subtracted from the raw EEG signal with an appropriate adaptive filter [18]. Similarly, an approach based on a FIR filter [19,20] can distinguish, on a single EEG channel, ocular artefacts, which are detected as irregular spikes. The main disadvantage of these approaches is, however, that they assume that one or more reference channels with the artefact waveforms are available. In other work, such as [21], an ensemble learning approach is used to detect, in an off-line analysis, EEG segments contaminated with muscle artefacts.

Despite the vast number of solutions proposed to reject or reduce artefacts in EEG signals, most of them are applied to classical multi-channel EEG electrode settings. In the context of wearable EEG recording systems with a reduced number of dry electrodes, few methods are capable of distinguishing between “good” quality EEG signals and different types of artefacts.

In this paper, we propose a classifier-based method, combined with a spectral comparison technique, to assess the quality of EEG and to discriminate muscular artefacts. It has been purposely designed for reduced electrode sets (or single EEG channel configuration) from portable devices used in real-life conditions. We specifically used the Melomind device (myBrain Technologies, Paris, France), a new portable EEG system based on two dry electrodes, to validate the method. The performance of our approach is also validated on subsets recorded with standard wet sensors: one from Acticap BrainProducts (GmbH, Gilching, Germany) and another from an artefact-free EEG public database [22] contaminated with simulated artefacts of several types (muscular artefacts, blinks, …). Our method is also compared with another algorithm for artefact detection in single-channel EEG systems [2]. Finally, our method is evaluated for the quality assessment of unlabelled EEG databases.

The remainder of the paper is organized as follows: Section 2 describes the databases and the methods used in the proposed approach. Section 3 presents the statistical assessment of our method in terms of accuracy of artefact detection. Finally, we conclude the paper with a discussion in Section 4.

2. Materials and Methods

2.1. Databases

In this work we consider three levels of artefact contamination:

Low quality level (LOW-Q): EEG data with a very poor quality, corresponding to a signal saturation, a recording during sensor peeling off, etc.

Medium quality level (MED-Q): EEG signal contaminated by standard artefacts like muscular activity, eye blinking, head movements, etc. For this level of contamination, the proposed method also discriminates muscular artefacts (MED-MUSC).

High quality level (HIGH-Q): EEG signals without any type of contamination (head movement, eye blinking or muscular artefacts). These EEG signals are considered as “clean”.

In order to validate our method, we first studied two databases containing EEG signals recorded with different EEG sensors on healthy subjects, whom we asked to deliberately generate different types of artefacts. Thirty seconds of EEG data were recorded for each type of artefact (including eye blinking, head and eye movements, jaw clenching). Very contaminated data (signal saturation and electrode peeling off) were also deliberately produced during 30 s of recording. Finally, 1 min of EEG data was collected while the subjects were asked to be quiet but alert.

The first database (artBA) is composed of EEG signals from three subjects recorded by an Acticap BrainProducts (GmbH, Gilching, Germany) system using 32 wet electrodes in the 10–20 International System. Signals were amplified, digitized at 1000 Hz sampling frequency, then down-sampled to 250 Hz and segmented into one-second non-overlapping windows. For all recordings, the impedance between the skin and the sensors was below 5 kΩ.

The second database (artMM) is composed of EEG signals from 21 subjects recorded by Melomind (myBrain Technologies, Paris, France), a portable and wireless EEG headset equipped with two dry sensors on P3 and P4 positions according to the 10–20 International System. EEG signals were amplified, digitized at 250 Hz and segmented into one-second non-overlapping windows, then corrected to remove DC offset and 50 Hz power line interference by Melomind’s embedded system before being sent via Bluetooth to a mobile device.

We used a third database (publicDB), which comes from the BNCI Horizon 2020 European public repository, dataset 13 [22]. It contains motor imagery-related EEG signals from 9 subjects recorded by a g.tec GAMMAsys system using 30 wet active electrodes (g.LADYbird) and two g.USBamp biosignal amplifiers (Guger Technologies, Graz, Austria). Artefact-free EEG segments were selected to build this database, which was then contaminated with artificially generated artefacts.

Finally, two unlabelled databases containing real EEG activity were also collected from 10 subjects in parietal regions (P3 and P4) with a standard system (Acticap BrainProducts, GmbH, Gilching, Germany) and with a low-cost system (Melomind, myBrain Technologies, Paris, France). One dataset therefore contains EEG recordings made with standard wet electrodes (wetRS), whereas the second one contains the data recorded with the dry sensors (dryRS). In all these EEG recordings, the subjects were asked to be at rest with closed eyes but in an alert condition for 1 min.

In accordance with the Declaration of Helsinki, we obtained written informed consent from all the subjects (of the previously described databases) after explanation of the study, which received approval from the local ethical committee (CPP-IDF-VI, num. 2016-AA00626-45). More details about the composition (the number of EEG segments and type of artefacts) of each database can be found in Table 1.

2.2. Overview of the Method

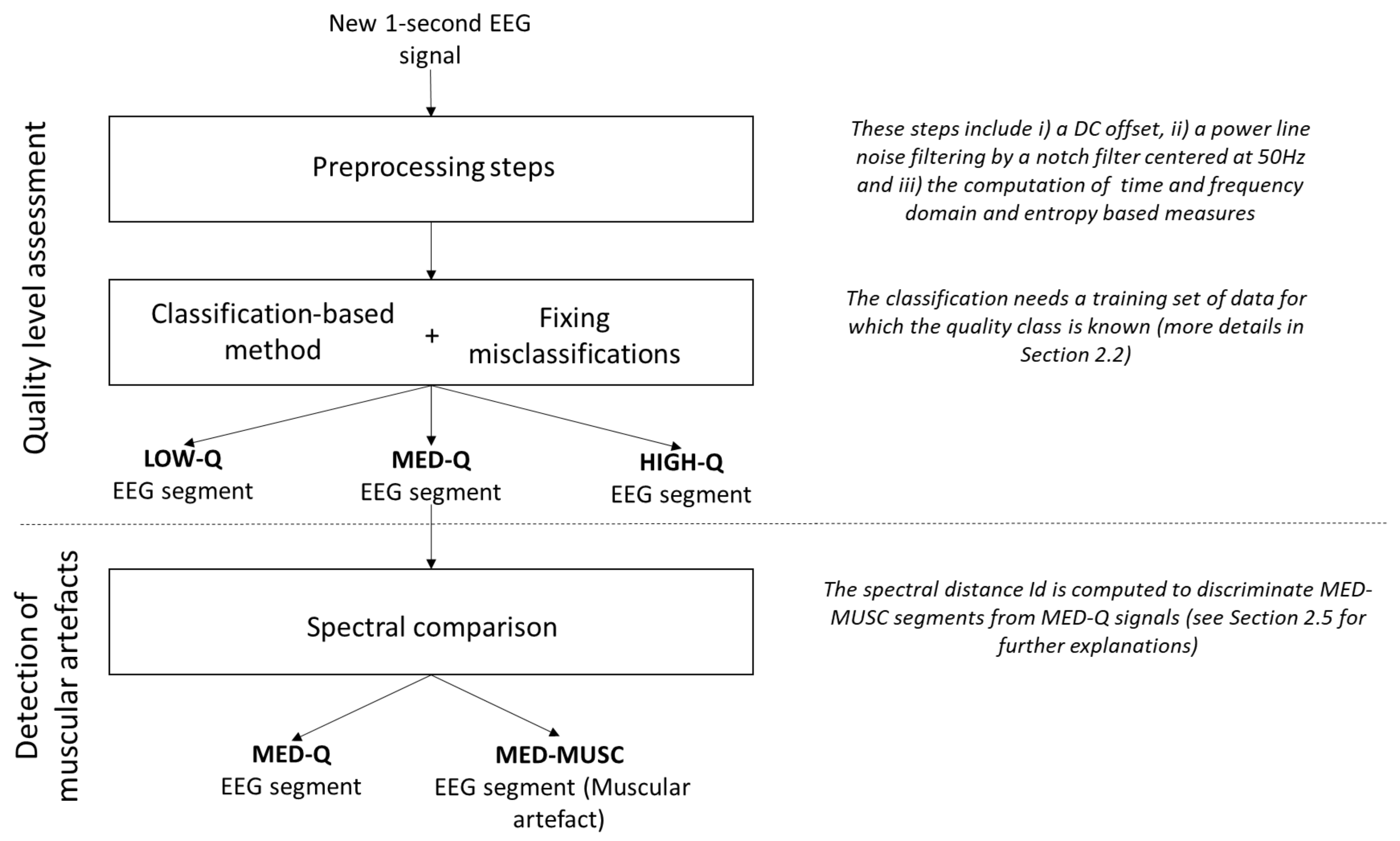

Our method, summarized in Figure 1, includes the following main steps:

Pre-processing: All EEG recordings are segmented into one-second non-overlapping windows. For each segment, the DC offset level is removed and power line noise is suppressed by a notch filter centred at 50 Hz. Then, several time-domain, frequency-domain and entropy-based measures are computed (see Section 2.3).

Quality assessment: Different classifiers are trained (see Section 2.4 below) on a subset of data (training set), for which the quality class is known, to assign each EEG segment of the remaining subset (testing set) to one of the three levels of artefact contamination (low, medium and high). To reduce the number of misclassifications, EEG segments with more than 70% of constant values (saturation and flat signals) and those with extreme values ( V) are considered as low quality data [6].

Discrimination of muscular artefacts: To discriminate muscular artefacts from EEG segments, we compare the spectrum of contaminated segments with a reference spectrum obtained from the training set of clean segments. An EEG segment is considered to include a muscular artefact if the spectral distance exceeds a threshold T (details of the method are described in Section 2.5).
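The segmentation, DC removal and flat-signal rule described in the steps above can be illustrated with a minimal sketch. It is not the implementation used in this work: the function names are hypothetical, the 50 Hz notch filter and the amplitude rule are omitted, and "constant values" is assumed here to mean samples that exactly repeat the previous sample.

```python
import numpy as np

FS = 250               # sampling rate in Hz, as stated for the recordings
FLAT_FRACTION = 0.70   # above this share of constant samples, a segment is LOW-Q


def segment(signal, fs=FS):
    """Split a 1-D recording into non-overlapping one-second windows."""
    n_seg = len(signal) // fs
    return np.asarray(signal[: n_seg * fs], dtype=float).reshape(n_seg, fs)


def remove_dc(segments):
    """Subtract the mean of each segment (DC offset removal)."""
    return segments - segments.mean(axis=1, keepdims=True)


def constant_fraction(seg):
    """Share of samples equal to the previous sample (assumed definition)."""
    return float(np.mean(np.diff(seg) == 0))


def is_flat_or_saturated(seg, max_fraction=FLAT_FRACTION):
    """Apply the 70% rule to one raw segment."""
    return constant_fraction(seg) > max_fraction


# Toy recording: three seconds of noise, with the middle second saturated.
rng = np.random.default_rng(0)
recording = rng.normal(size=3 * FS) + 5.0
recording[FS : 2 * FS] = 100.0

segs = segment(recording)
flags = [is_flat_or_saturated(s) for s in segs]
clean_segs = remove_dc(segs)
```

In this sketch the flat-signal check is applied to the raw windows, so that a saturated window is flagged before any further processing.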

2.3. Features Extraction

The collection of features used to assess the quality of the EEG segments includes a total of 114 parameters obtained from both time and frequency domains that are commonly used in artefact detection from electrophysiological signals [2,6,7,8,10,23,24], or to detect seizures from neonatal EEG [11,25].

Time-domain features include the maximum value, the standard deviation, the kurtosis and the skewness [7,11,26]. Some of these features were extracted from EEG signals filtered in different frequency bands [2]. In this context, a band-pass filter was applied with specific cut-off frequencies according to the EEG frequency bands: –4 Hz for the δ band, 4–8 Hz for the θ band, 8–13 Hz for the α band, 13–28 Hz for the β band and 28–110 Hz for the γ band. See Table A1 (in Appendix A.1) for the full list of time-domain features.
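As an illustration of the four time-domain statistics named above, the following sketch computes them for a single segment. The function name is hypothetical, and the moment conventions (population standard deviation, non-excess kurtosis) are assumptions rather than the definitions of Table A1. The band-filtered variants are not shown.

```python
import numpy as np


def time_features(seg):
    """Maximum, standard deviation, skewness and kurtosis of one segment.

    Assumes a non-flat segment (standard deviation > 0); flat segments are
    expected to have been set aside as low quality beforehand.
    """
    seg = np.asarray(seg, dtype=float)
    mu = seg.mean()
    sigma = seg.std()              # population standard deviation (ddof=0)
    z = (seg - mu) / sigma         # standardized samples
    return {
        "max": float(seg.max()),
        "std": float(sigma),
        "skewness": float(np.mean(z ** 3)),
        "kurtosis": float(np.mean(z ** 4)),   # equals 3 for a Gaussian
    }


features = time_features([-1.0, 1.0, -1.0, 1.0])
```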

Frequency-domain features offer the possibility to quantify changes in the power spectrum. Most of these features were inspired by three studies [10,11,23]. Certain features, originally defined for speech recognition and quality assessment of electromyograms, were adapted for EEG signals. Some parameters (like the log-scale or the relative power spectrum) were extracted directly from the spectrum in the frequency bands used for the time-domain features. The full list of extracted frequency-domain features is given in Table A2 (in Appendix A.2).

Supplementary structural and uncertainty information was extracted from the EEG segments using Shannon entropy, spectral entropy, and singular value decomposition entropy [11].
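The three entropy measures can be sketched as follows. This is one possible formulation, not necessarily the one used in [11]: the histogram bin count, the periodogram-based spectrum, and the embedding `order` and `delay` of the SVD entropy are all assumed parameters, and the function names are hypothetical.

```python
import numpy as np


def _entropy(p):
    """Shannon entropy (in bits) of a discrete distribution, ignoring zeros."""
    p = p[p > 0]
    return float(-np.sum(p * np.log2(p)))


def shannon_entropy(seg, bins=16):
    """Entropy of the amplitude histogram (bin count is an assumption)."""
    counts, _ = np.histogram(seg, bins=bins)
    return _entropy(counts / counts.sum())


def spectral_entropy(seg):
    """Entropy of the normalized periodogram of a non-flat segment."""
    seg = np.asarray(seg, dtype=float)
    psd = np.abs(np.fft.rfft(seg - seg.mean())) ** 2
    return _entropy(psd / psd.sum())


def svd_entropy(seg, order=3, delay=1):
    """Entropy of the normalized singular values of a delay embedding."""
    seg = np.asarray(seg, dtype=float)
    n = len(seg) - (order - 1) * delay
    emb = np.stack([seg[i * delay : i * delay + n] for i in range(order)], axis=1)
    s = np.linalg.svd(emb, compute_uv=False)
    return _entropy(s / s.sum())


# A pure 10 Hz sine is far more "ordered" than white noise.
t = np.arange(250) / 250.0
sine = np.sin(2 * np.pi * 10 * t)
noise = np.random.default_rng(0).normal(size=250)
```

With these definitions, a regular oscillation yields low spectral and SVD entropies, while broadband noise yields values close to the maximum.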

2.4. Classification-Based Methods

In this work, we compare several classifiers to categorize EEG segments into the three quality levels (low, medium and high), using a 5-fold cross-validation. Before the classification, the value of each feature (in every EEG segment) was normalized with the mean and standard deviation obtained from the EEG segments contained in the training set. The classifiers evaluated in this work are the following:

Linear Discriminant Analysis (LDA) is a standard algorithm that finds a linear decision surface to discriminate the classes [27]. This classifier can be derived from simple probabilistic rules which model the class conditional distribution $P(X \mid y = l)$ of an observation $X$ for each class $l$. The class of each new EEG segment is predicted by using Bayes’ theorem [28]:

$$P(y = l \mid X) = \frac{P(X \mid y = l)\,P(y = l)}{\sum_{k=1}^{L} P(X \mid y = k)\,P(y = k)} \qquad (1)$$

where $P(y = l)$ denotes the class priors estimated from the training set by the proportion of instances of class $l$, and $L$ is the number of classes. Although this classifier is easy to interpret and to implement, its performance is sensitive to outliers. We assign to an EEG segment the class $l$ which maximizes the conditional probability and minimizes the misclassification rate [28].
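The Bayes decision rule behind LDA can be illustrated with a small sketch. It assumes Gaussian class-conditional densities with a covariance matrix shared by all classes, which is the textbook LDA model; the function names are hypothetical and this is not the implementation evaluated in this work.

```python
import numpy as np


def fit_lda(X, y):
    """Estimate class priors, class means and the pooled covariance."""
    classes = np.unique(y)
    priors = np.array([np.mean(y == c) for c in classes])
    means = np.array([X[y == c].mean(axis=0) for c in classes])
    centred = X - means[np.searchsorted(classes, y)]
    cov = centred.T @ centred / (len(X) - len(classes))
    return classes, priors, means, np.linalg.pinv(cov)


def lda_posteriors(X, model):
    """Posterior probability of each class for each row of X (Bayes' theorem)."""
    _, priors, means, cov_inv = model
    # log P(X | y = l) + log P(y = l), up to a constant shared by all classes
    scores = np.stack(
        [
            -0.5 * np.einsum("ij,jk,ik->i", X - m, cov_inv, X - m) + np.log(p)
            for m, p in zip(means, priors)
        ],
        axis=1,
    )
    scores -= scores.max(axis=1, keepdims=True)   # numerical stability
    post = np.exp(scores)
    return post / post.sum(axis=1, keepdims=True)


def lda_predict(X, model):
    """Assign each row to the class with the highest posterior."""
    return model[0][np.argmax(lda_posteriors(X, model), axis=1)]


# Two well-separated toy classes in a 2-D feature space.
rng = np.random.default_rng(0)
X_toy = np.vstack([rng.normal(0, 1, (50, 2)), rng.normal(6, 1, (50, 2))])
y_toy = np.array([0] * 50 + [1] * 50)
lda_model = fit_lda(X_toy, y_toy)
```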

Support Vector Machines (SVMs) use a kernel-based transformation to project data into a higher dimensional space. The aim is to find a separating hyper-plane in this space between the two classes [29]. Although infinitely many hyper-planes can discriminate the two classes, SVMs keep the hyper-plane which maximizes the distance between the two classes and minimizes the misclassifications. In our case, a “one-against-one” approach is used to solve our multi-class classification problem. This method builds $L(L-1)/2$ classifiers, where $L$ is the number of classes. Each classifier is trained on data from two classes [30]. In this work, an SVM with a linear kernel function is tested (Linear SVM). SVMs present several advantages: they generally provide good performance with fast computations, over-fitting can be avoided by making use of a regularization parameter, and non-linear classification problems can be handled by choosing an appropriate kernel. However, the setting of such parameters is not straightforward and improper parametrization may result in low performance.

The K-Nearest Neighbours (kNN) classifier is a simple nonparametric algorithm widely used for pattern classification [31]. An object is classified by a majority vote of its neighbours, with the object being assigned to the most common class among its $k$ nearest neighbours in the training set [31]. A neighbour can be defined using many different notions of distance, the most common being the Euclidean distance between the vector $x$ containing the feature values of the tested EEG segment, and the vector $y$ containing the feature values of each EEG segment from the training set, which is defined as Equation (2):

$$d(x, y) = \sqrt{\sum_{i=1}^{n} (x_i - y_i)^2} \qquad (2)$$

where $n$ denotes the number of features computed from each EEG segment.

We evaluated the Euclidean distance kNN (referred to here as “Euclidean kNN”) but also a weighted kNN (referred to as “Weighted kNN”). In the latter, distances are transformed into weights by a distance weighting function using the squared inverse distance, following this equation:

$$w = \frac{1}{d(x, y)^2} \qquad (3)$$

By weighting the contribution of each of the $k$ neighbours according to Equation (3), closer neighbours are assigned a higher weight in the classification decision. The advantage of this weighting scheme lies in making the kNN more global, which overcomes some limitations of the kNN [32]. In general, the main advantages of kNN are that it does not need a training phase, is easy to implement, learns fast and gives results that are easy to interpret [32]. However, this algorithm can be computationally expensive and is prone to bias from the choice of $k$ [32].
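A minimal sketch of the weighted vote is given below, using the Euclidean distance of Equation (2) and the squared inverse distance weights of Equation (3). The function name, the default `k` and the small `eps` added to avoid a division by zero are assumptions made for illustration.

```python
import numpy as np


def weighted_knn_predict(X_train, y_train, x, k=5, eps=1e-12):
    """Classify one feature vector x by a distance-weighted vote of k neighbours."""
    d = np.sqrt(np.sum((X_train - x) ** 2, axis=1))   # Euclidean distance, Eq. (2)
    nearest = np.argsort(d)[:k]
    w = 1.0 / (d[nearest] ** 2 + eps)                 # squared inverse distance, Eq. (3)
    labels = y_train[nearest]
    classes = np.unique(labels)
    votes = np.array([w[labels == c].sum() for c in classes])
    return classes[np.argmax(votes)]


# One feature, five training segments: two clean ones close to the query,
# three contaminated ones further away.
X_train = np.array([[0.0], [0.1], [2.0], [2.1], [2.2]])
y_train = np.array(["HIGH-Q", "HIGH-Q", "MED-Q", "MED-Q", "MED-Q"])
label = weighted_knn_predict(X_train, y_train, np.array([0.2]), k=5)
```

In this toy case an unweighted vote over the five neighbours would return MED-Q (three votes against two), whereas the weighted vote favours the two much closer HIGH-Q segments.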

2.5. Spectral Distance to Distinguish Muscular Artefacts

As mentioned above, our method can discriminate artefacts of muscular origin. For this purpose, a spectral distance is first estimated between all the clean (high-quality or HIGH-Q) segments of the training set. An EEG segment detected with a medium quality (MED-Q) can be further discriminated as a muscular artefact (MED-MUSC) if the distance of its spectrum to the averaged spectrum of clean segments is higher than a threshold T, defined as N standard deviations above the mean distance computed between all clean segments. For each database, N is chosen iteratively so that the accuracy of detection of MED-MUSC segments is maximum in the training set.

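Here, we use the Itakura distance, a statistical distance widely used in spectral analysis [33,34], defined by:

$$d_I(P_1, P_2) = \log\left(\frac{1}{2\pi}\int_{-\pi}^{\pi}\frac{P_1(\omega)}{P_2(\omega)}\,d\omega\right) - \frac{1}{2\pi}\int_{-\pi}^{\pi}\log\frac{P_1(\omega)}{P_2(\omega)}\,d\omega$$

where $P_1(\omega)$ and $P_2(\omega)$ are the spectra to be compared over the frequency range, set here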

Hz as it contains the most relevant information of the EEG.
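The thresholding described in this section can be sketched on discretized spectra. The sketch assumes the common form of the Itakura distance (logarithm of the mean spectral ratio minus the mean of the log ratio) and, as a simplification, measures the distance of each clean segment to the averaged clean spectrum when estimating T. Function names and the toy spectra are hypothetical.

```python
import numpy as np


def itakura_distance(p1, p2):
    """Discrete Itakura distance between two power spectra on the same bins."""
    ratio = np.asarray(p1, dtype=float) / np.asarray(p2, dtype=float)
    return float(np.log(np.mean(ratio)) - np.mean(np.log(ratio)))


def muscular_threshold(clean_spectra, n_std):
    """Reference spectrum and threshold T = mean + N * std of clean distances."""
    ref = clean_spectra.mean(axis=0)
    d = np.array([itakura_distance(s, ref) for s in clean_spectra])
    return ref, float(d.mean() + n_std * d.std())


def is_muscular(spectrum, ref, threshold):
    """Flag a MED-Q segment as MED-MUSC when its distance exceeds T."""
    return itakura_distance(spectrum, ref) > threshold


# Toy data: 1/f-like clean spectra, and one spectrum with extra power at
# high frequencies, as expected for muscular activity.
rng = np.random.default_rng(0)
freqs = np.arange(1, 41, dtype=float)
base = 1.0 / freqs
clean = base * rng.uniform(0.9, 1.1, size=(50, freqs.size))
ref, T = muscular_threshold(clean, n_std=3)
emg_like = base * (1.0 + freqs ** 2 / 10.0)
```

The distance is zero for identical spectra and is insensitive to a global gain, which makes it depend on spectral shape rather than on overall amplitude.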

2.6. Validation Procedure

2.6.1. Generation of Artefacts

To test the performance of the different classifiers under study, we generated contaminated data both in a real (True artefacts) and in an artificial condition (Synthetic artefacts), by controlling the level of contamination with respect to the clean EEG.

True artefacts: The databases obtained from the standard EEG system (wet electrodes) and the low-cost device (dry sensors) are composed of three sets, each containing a data collection with different types of artefacts:

Clean EEG signals without internal or external artefacts recorded while subjects were instructed to remain quiet but alert during 1 min.

EEG signals from subjects instructed to deliberately produce 30 s of different types of artefacts, like eye blinking, head and eye movements and muscular artefacts (jaw clenching), at short intervals.

Very contaminated EEG data recorded while the subjects deliberately produced signal saturation or electrode peeling off during 30 s.

These datasets were visually inspected by trained EEG experts. One-second EEG segments were manually labelled as LOW-Q, MED-Q, MED-MUSC and HIGH-Q to constitute the ground truth of the classification.

Synthetic artefacts: Artificially contaminated EEG signals were simulated using data from the public database described in Section 2.1. Artefacts were generated in three different ways to obtain the following patterns:

Clean EEG signals (selected by visual inspection) and simulated artefacts came from different subjects to ensure that all segments of simulated and real EEG data were independent of each other. Synthetic artefacts $v$ were superimposed on the clean EEG segments $b$ of 1 s duration as follows: $x = b + \lambda v$, where $\lambda$ represents the contribution of the artefact. For each segment and artefact type, the signal-to-noise ratio (SNR) was adjusted by changing the parameter $\lambda$ as follows:

$$\mathrm{SNR} = 10 \log_{10}\left(\frac{\mathrm{RMS}(b)}{\mathrm{RMS}(\lambda v)}\right)$$

where $\mathrm{RMS}(b)$ corresponds to the root mean squared value of the clean segment, and $\mathrm{RMS}(\lambda v)$ denotes the root mean squared value of the synthetic artefact. We generated 300 segments with SNRs between 0 and 15 dB: 200 for pattern 1 (100 for slow eye movements and 100 for blinks, that were labelled MED-Q) and 100 for pattern 2 (labelled MED-MUSC). For low quality EEG (LOW-Q), we generated 300 excerpts for pattern 3 with SNRs from −10 to 0 dB.
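The contamination procedure can be sketched as follows: the artefact is scaled so that the ratio of root mean squared values matches a target SNR, then added to the clean segment. The function names and the scaling variable `lam` are hypothetical, and the decibel convention is an assumption exposed as the `db_factor` parameter (10 here; 20 would correspond to an amplitude-ratio convention).

```python
import numpy as np


def rms(x):
    """Root mean squared value of a segment."""
    return float(np.sqrt(np.mean(np.square(x))))


def mix_at_snr(clean, artefact, snr_db, db_factor=10.0):
    """Superimpose a scaled artefact on a clean segment at a target SNR.

    The scale lam is chosen so that
    db_factor * log10(rms(clean) / rms(lam * artefact)) == snr_db.
    """
    lam = rms(clean) / (rms(artefact) * 10.0 ** (snr_db / db_factor))
    return clean + lam * artefact, lam


# Toy example: a 10 Hz oscillation contaminated by broadband noise at 5 dB.
rng = np.random.default_rng(0)
t = np.arange(250) / 250.0
clean_seg = np.sin(2 * np.pi * 10 * t)
artefact = rng.normal(size=250)
mixed, lam = mix_at_snr(clean_seg, artefact, snr_db=5.0)
achieved_snr = 10.0 * np.log10(rms(clean_seg) / rms(lam * artefact))
```

Lower target SNRs give a larger `lam`, so the negative SNR range used for the low-quality excerpts corresponds to artefacts that dominate the clean signal.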

2.6.2. Measures of Performance

Classification performance was measured in terms of accuracy (percentage of correctly detected artefacts) and area under the Receiver Operating Characteristic (ROC) curve (AUC). Here, we computed one ROC curve for each class selected as positive against the other two classes [36]. To evaluate both measures of performance on the classifiers under test, we applied a 5-fold cross-validation procedure.
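Both measures can be illustrated with a short sketch. The AUC is computed here through its rank interpretation (the probability that a positive segment receives a higher score than a negative one), which equals the area under the ROC curve; the function names and the score layout (one column per class) are assumptions.

```python
import numpy as np


def accuracy(y_true, y_pred):
    """Share of segments assigned to the correct class."""
    return float(np.mean(np.asarray(y_true) == np.asarray(y_pred)))


def auc_binary(scores, positive):
    """AUC as the probability that a positive outranks a negative (ties count 0.5)."""
    scores = np.asarray(scores, dtype=float)
    positive = np.asarray(positive, dtype=bool)
    pos, neg = scores[positive], scores[~positive]
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return float((greater + 0.5 * ties) / (len(pos) * len(neg)))


def one_vs_rest_auc(y_true, class_scores, classes):
    """One AUC per class, with that class as positive against all others."""
    y_true = np.asarray(y_true)
    return {
        c: auc_binary(class_scores[:, i], y_true == c)
        for i, c in enumerate(classes)
    }


classes = ["LOW-Q", "MED-Q", "HIGH-Q"]
y_true = np.array(["LOW-Q", "MED-Q", "HIGH-Q", "HIGH-Q"])
class_scores = np.array([
    [0.8, 0.1, 0.1],
    [0.2, 0.5, 0.3],
    [0.1, 0.2, 0.7],
    [0.1, 0.6, 0.3],
])
aucs = one_vs_rest_auc(y_true, class_scores, classes)
```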

The EEG signals may be influenced by subject-related characteristics (e.g., skin, scalp thickness or hair), or by technical and environmental factors during the recording (e.g., electromagnetic noise levels and humidity levels). EEG recordings are therefore not directly comparable in their quality between subjects or recording times. We notice, however, that the inter-individual variability of resting state as well as of physiological (eye movement and blinks, muscular contamination, …) or environmental artefacts is lower than the intra-individual variability across time (days or weeks) [37,38]. The cross-validation procedure tests the reliability of our algorithm on data collected from different subjects.
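The 5-fold procedure, combined with the feature normalization of Section 2.4, can be sketched as below. The key point is that the mean and standard deviation are estimated on the training folds only and then applied to the test fold. The helper names and the random shuffling of segments are assumptions.

```python
import numpy as np


def kfold_indices(n_samples, n_folds=5, seed=0):
    """Yield (train, test) index arrays for a shuffled k-fold split."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_samples), n_folds)
    for i in range(n_folds):
        train = np.concatenate([folds[j] for j in range(n_folds) if j != i])
        yield train, folds[i]


def zscore_with_train_stats(X_train, X_test):
    """Normalize both sets with the mean and std of the training set only."""
    mu = X_train.mean(axis=0)
    sd = X_train.std(axis=0)
    sd[sd == 0] = 1.0          # leave constant features unscaled
    return (X_train - mu) / sd, (X_test - mu) / sd


# Toy feature matrix: 100 segments, 4 features.
rng = np.random.default_rng(1)
X = rng.normal(5.0, 2.0, size=(100, 4))
splits = list(kfold_indices(len(X)))
for train_idx, test_idx in splits:
    X_tr, X_te = zscore_with_train_stats(X[train_idx], X[test_idx])
    # a classifier would be fitted on X_tr and evaluated on X_te here
```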

4. Discussion and Conclusions

The proposed approach is a classification-based method to evaluate the quality of EEG data that includes a spectral distance to discriminate muscular artefacts from the other types of artefacts. We propose a fast and efficient approach for detecting and characterizing artefacts that can be applied in single-channel EEG configurations. The method was validated on different databases containing real artefacts generated in real conditions, and on one database with artificially generated artefacts superimposed on clean EEG data.

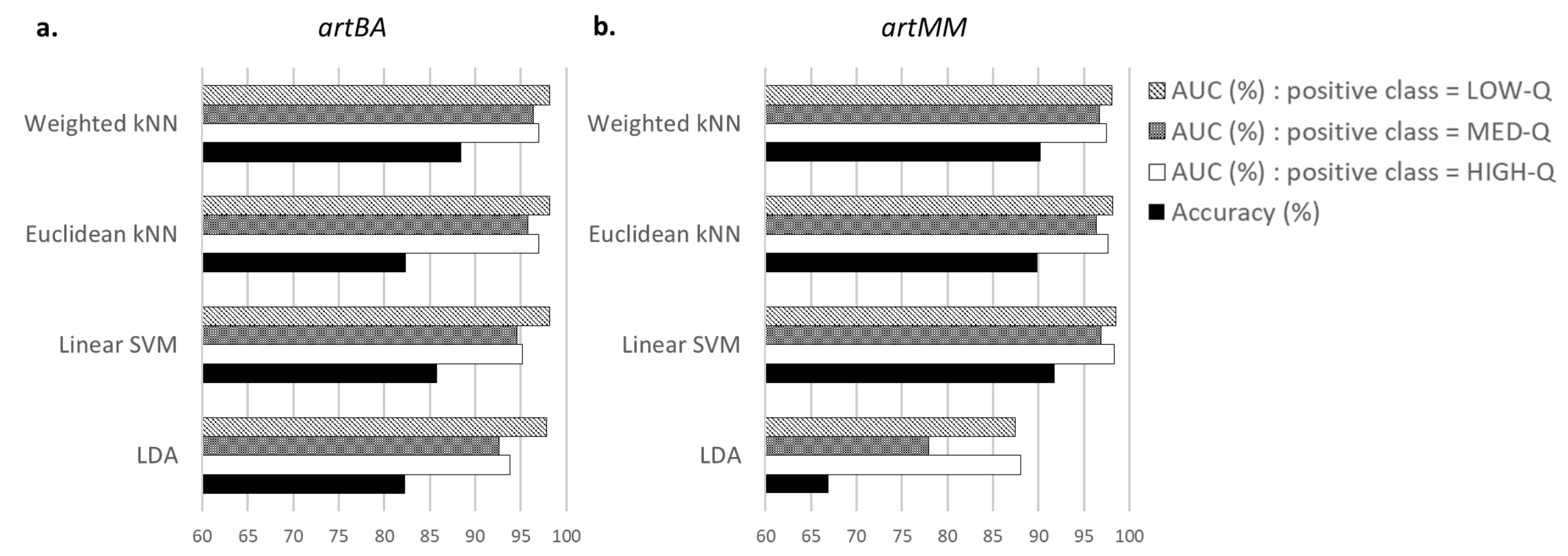

A comparison of performance in terms of accuracy and AUC was made to choose the best classifier among the LDA, Linear SVM, Euclidean kNN and Weighted kNN. Although the Linear SVM obtained slightly better accuracies for one of the tested databases, the Weighted kNN was selected as a good compromise between artefact detection and execution time. Indeed, for each labelled database (for which the quality level of segments was known), the proposed approach with a Weighted kNN correctly classified more than 90% of the EEG segments, taking less than 15 ms for each EEG segment.

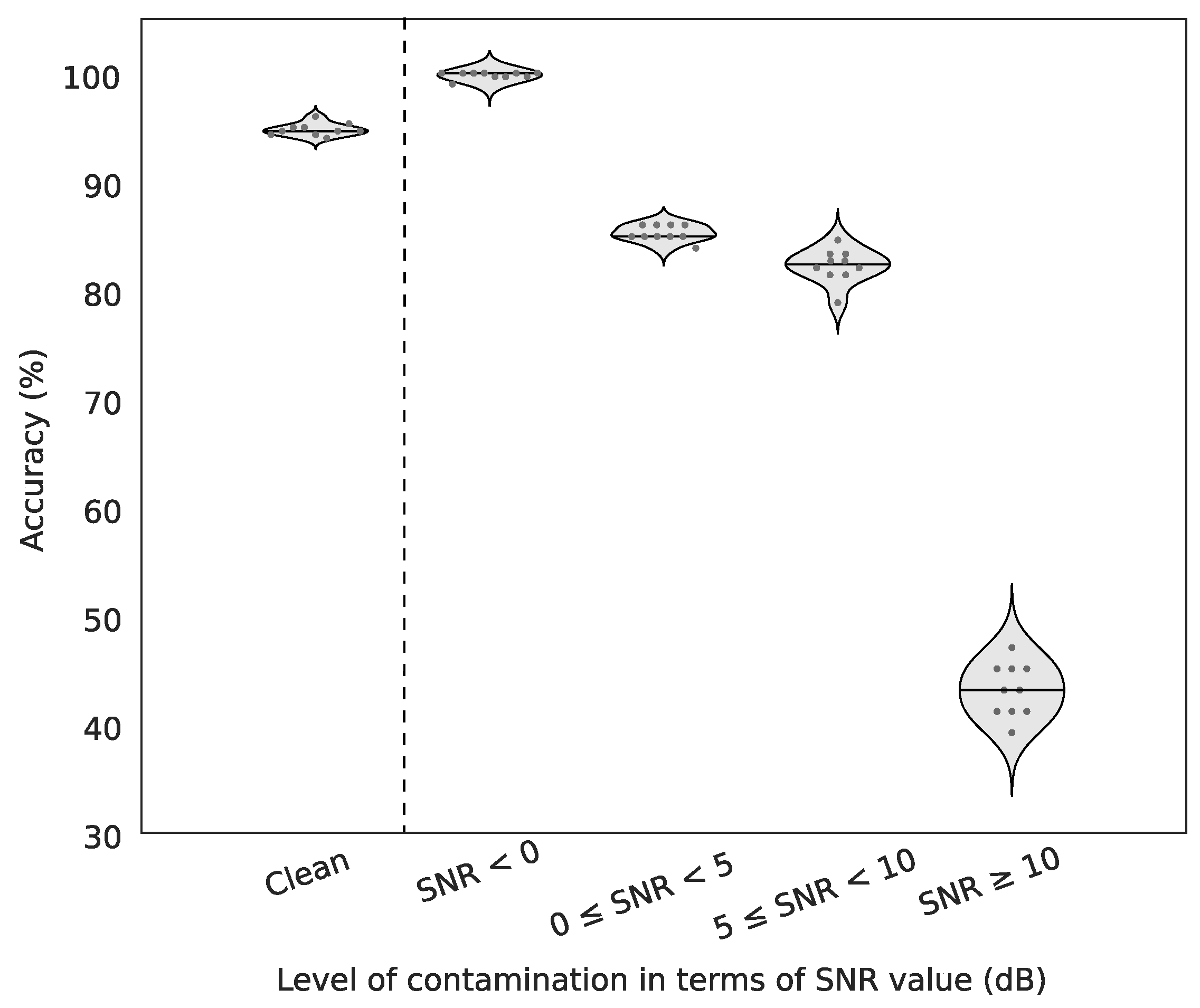

For artificially contaminated EEG signals, we show that our method may yield almost perfect detections in moderate to high artefactual conditions and very fair performance even with high signal-to-noise ratios. Indeed, contaminated signals with SNR between 0 and 10 dB were detected more than 80% of the time although they are hardly recognizable visually. Currently, the algorithm can detect muscular artefacts, but further investigations will be performed to automatically recognize specific patterns of other sources of artefact contamination (blinks, saccades, head movements, …).

When applied to the unlabelled databases, our algorithm detected a similar number of contaminated segments in EEG recordings from both the standard EEG system (with wet electrodes) and the dry sensor device. These results were in full agreement with the high quality of EEG recordings obtained during resting state, where the subjects were asked to be at rest with eyes closed.

Finally, the results presented in this work suggest that our approach is a good EEG quality checker in off-line environments with either dry or wet EEG electrodes. The presented algorithm is not subject-driven and classifiers are trained with data collected from different subjects at different time periods. Although beyond the scope of our study, we notice that an optimization of subject-driven classifiers for longitudinal recordings (weeks or months) might increase classification performance. Results indicate that the proposed method is suitable for real-time applications dealing with embedded EEG in mobile environments, such as the monitoring of cognitive or emotional states, ambulatory healthcare systems [43] or sleep stage scoring. In practice, this method is currently used to provide an efficient, fast and automated quality assessment of EEG signals recorded in uncontrolled environments with Melomind (myBrain Technologies, Paris, France), a low-cost wearable device composed of two dry EEG channels.