An Appearance-Based Tracking Algorithm for Aerial Search and Rescue Purposes †

Abstract

1. Introduction

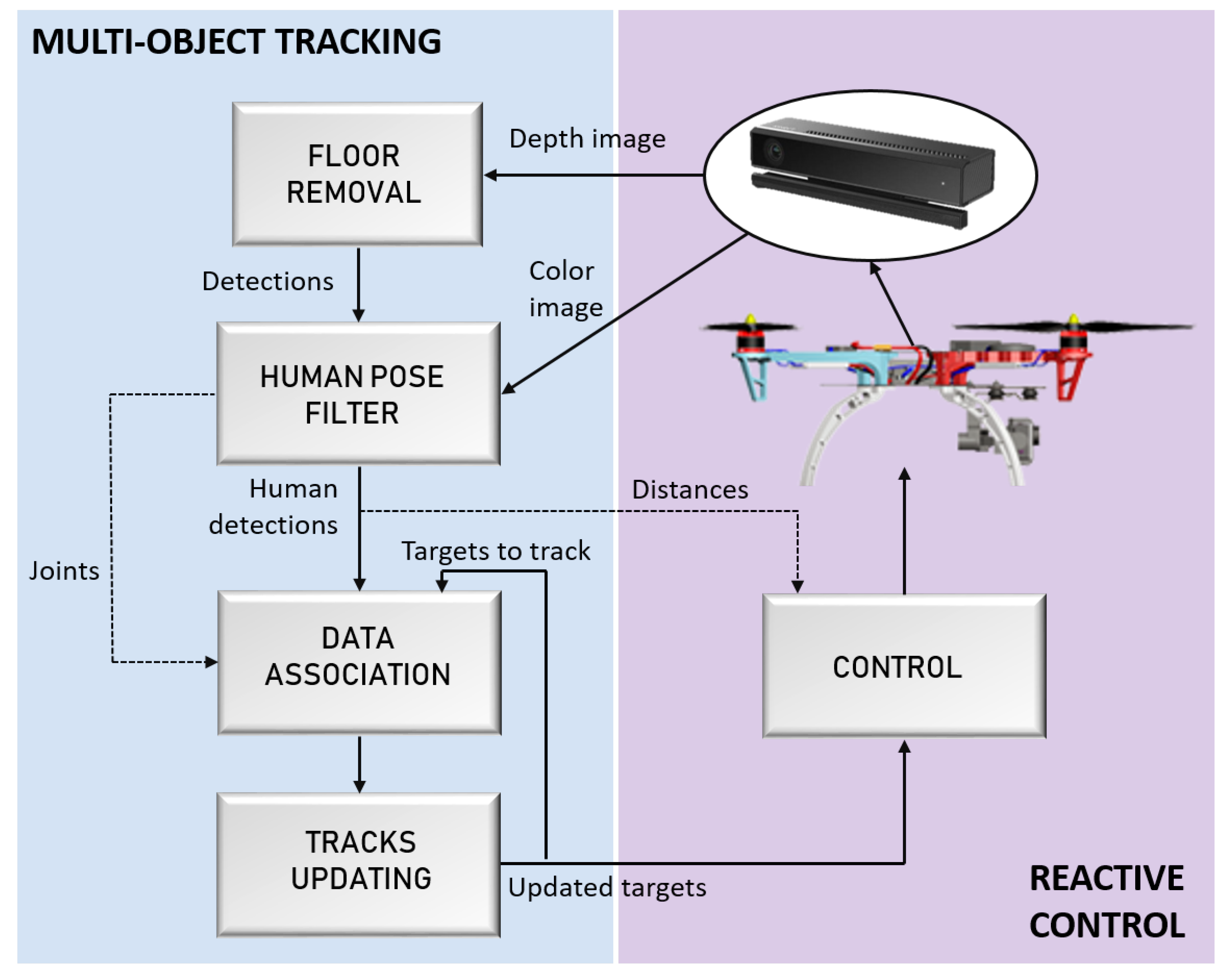

- A human detection stage based on color and depth data, followed by a Human Shape Validation Filter. The filter uses the human joint locations provided by a Convolutional Pose Machine (CPM) [27] to check the skeleton shape of each detection and discard false positives.

- An automatic Multi-Object Tracking algorithm that is invariant to scale, translation, and rotation of the point of view with respect to the target objects.

- A novel matching method based on pose and appearance similarity that can re-identify people after long-term disappearances from the scene.

- A semi-autonomous reactive control that allows the pilot to bring the UAV toward the detected object in a safe and smooth maneuver.
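As a rough illustration of the third contribution, the sketch below associates existing tracks with new detections using a weighted sum of an appearance distance and a pose distance. It is not the authors' method: the feature vectors, the pose normalisation, the `weight` and `max_cost` parameters, and all function names are assumptions made for this example, and the exhaustive search merely stands in for a proper assignment solver such as the Hungarian method. The pose term here is invariant to translation and scale only, not rotation.

```python
import math
from itertools import permutations


def cosine_distance(a, b):
    """Appearance dissimilarity in [0, 2] between two feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 if norm == 0 else 1.0 - dot / norm


def normalise_pose(joints):
    """Centre joints on their centroid and divide by their RMS radius."""
    n = len(joints)
    cx = sum(x for x, _ in joints) / n
    cy = sum(y for _, y in joints) / n
    centred = [(x - cx, y - cy) for x, y in joints]
    scale = math.sqrt(sum(x * x + y * y for x, y in centred) / n) or 1.0
    return [(x / scale, y / scale) for x, y in centred]


def pose_distance(p, q):
    """Mean joint-to-joint distance after translation/scale normalisation."""
    a, b = normalise_pose(p), normalise_pose(q)
    return sum(math.hypot(xa - xb, ya - yb)
               for (xa, ya), (xb, yb) in zip(a, b)) / len(a)


def associate(tracks, detections, weight=0.5, max_cost=0.4):
    """Return ({track_idx: detection_idx}, [unmatched detection indices])."""
    n_t, n_d = len(tracks), len(detections)
    cost = [[weight * cosine_distance(t["feat"], d["feat"])
             + (1.0 - weight) * pose_distance(t["pose"], d["pose"])
             for d in detections] for t in tracks]

    def pair_cost(i, j):
        # Columns n_d and above are dummy slots meaning "track stays unmatched".
        return cost[i][j] if j < n_d else max_cost

    best, best_total = (), math.inf
    # Exhaustive search over assignments: only sensible for a handful of people.
    for perm in permutations(range(n_d + n_t), n_t):
        total = sum(pair_cost(i, j) for i, j in enumerate(perm))
        if total < best_total:
            best, best_total = perm, total
    matches = {i: j for i, j in enumerate(best) if j < n_d}
    unmatched = [j for j in range(n_d) if j not in matches.values()]
    return matches, unmatched
```

Each track and detection is assumed to be a dictionary with a `"feat"` appearance vector and a `"pose"` list of `(x, y)` joints. Tracks left out of `matches` can be kept alive for later re-identification, while unmatched detections would start new tracks.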

2. Related Work

2.1. Sensing

2.2. Perception

2.3. Control

2.4. Motivation

3. System Overview

4. Multi-Object Tracking (MOT) Algorithm

4.1. Floor Removal

4.2. Human Pose Filter (HPF)

4.3. Data Association

4.4. Tracks Updating Strategy

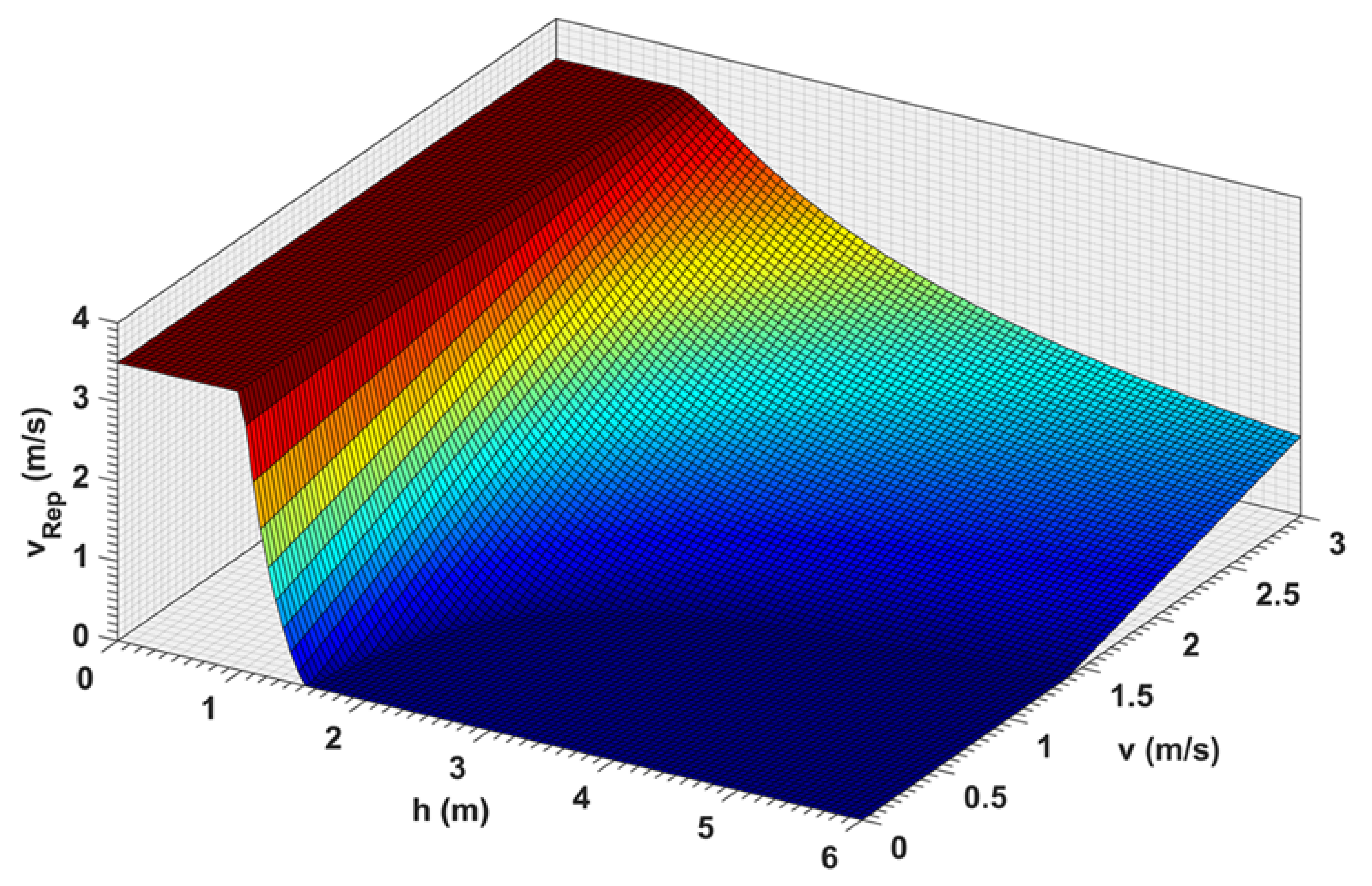

5. Reactive Control

5.1. Dynamic Model

5.1.1. Semi-Automatic Control

- Safe zone: [], where there is no risk of impact and therefore no opposing velocity is perceived.

- Warning zone: [], where low levels of opposing velocity are applied.

- Transition zone: [], where a sudden increase in repulsion makes the pilot aware of imminent impact.

- Collision zone: [], within which impact is unavoidable.
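The four zones can be pictured as a piecewise repulsion profile over the distance to the obstacle. The sketch below is only an illustration of that idea: the zone boundaries (`D_SAFE`, `D_WARNING`, `D_COLLISION`), the `LOW_LEVEL` fraction, the linear ramps, and the way the repulsion is subtracted from the pilot command are all hypothetical choices, since the actual ranges and control law are not given here.

```python
# Hypothetical zone boundaries in metres; the actual ranges are not given.
D_SAFE = 6.0       # at or beyond this distance: safe zone
D_WARNING = 3.0    # between D_WARNING and D_SAFE: warning zone
D_COLLISION = 1.0  # below this distance: collision zone
LOW_LEVEL = 0.2    # assumed fraction of v_max reached at the end of the warning zone


def zone(distance):
    """Name of the zone that a given obstacle distance falls into."""
    if distance >= D_SAFE:
        return "safe"
    if distance >= D_WARNING:
        return "warning"
    if distance >= D_COLLISION:
        return "transition"
    return "collision"


def opposing_velocity(distance, v_max=1.0):
    """Repulsive velocity magnitude: zero, gentle ramp, steep ramp, saturated."""
    z = zone(distance)
    if z == "safe":
        return 0.0
    if z == "warning":
        frac = (D_SAFE - distance) / (D_SAFE - D_WARNING)
        return LOW_LEVEL * v_max * frac
    if z == "transition":
        frac = (D_WARNING - distance) / (D_WARNING - D_COLLISION)
        return (LOW_LEVEL + (1.0 - LOW_LEVEL) * frac) * v_max
    return v_max


def blended_command(pilot_velocity, distance, v_max=1.0):
    """Pilot's approach velocity minus the repulsion, clipped to [-v_max, v_max]."""
    v = pilot_velocity - opposing_velocity(distance, v_max)
    return max(-v_max, min(v_max, v))
```

With these assumed values the slope of the repulsion jumps from about 0.07 to 0.4 (in units of `v_max` per metre) when the UAV crosses from the warning zone into the transition zone, which is one simple way to model the sudden increase the pilot is meant to feel.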

6. Experimental Results

6.1. Platforms

6.2. MOT Results

6.2.1. Dataset

- Urban scenario with the UAV hovering over static human bodies.

- Urban scenario with the UAV hovering over moving human bodies (walking and jumping), including periods of blurred frames.

- Open arena with high-velocity flight over static human bodies.

- Open arena with high-velocity flight, with several disappearances and reappearances of people in the field of view.

6.2.2. MOT Metrics

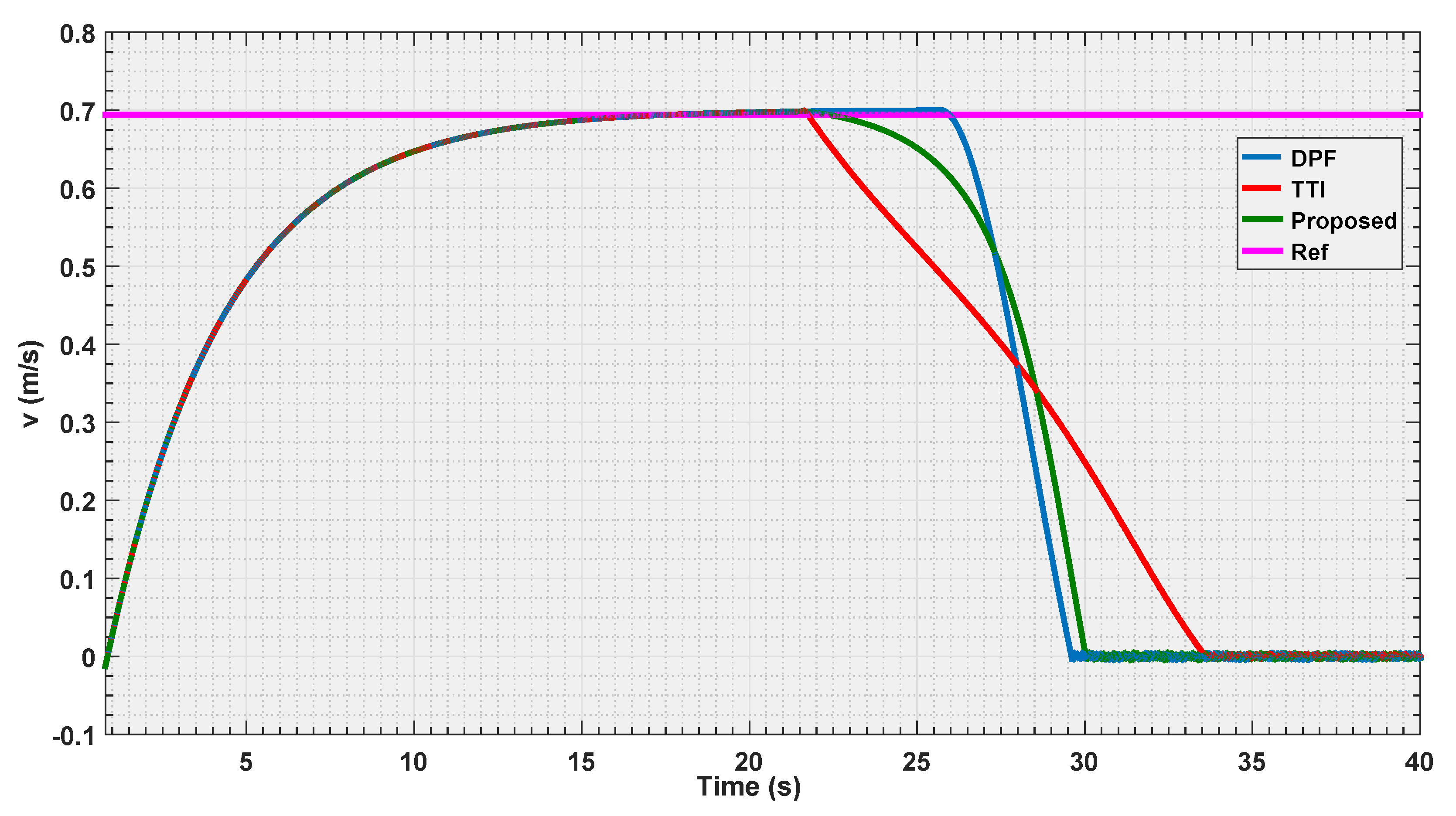

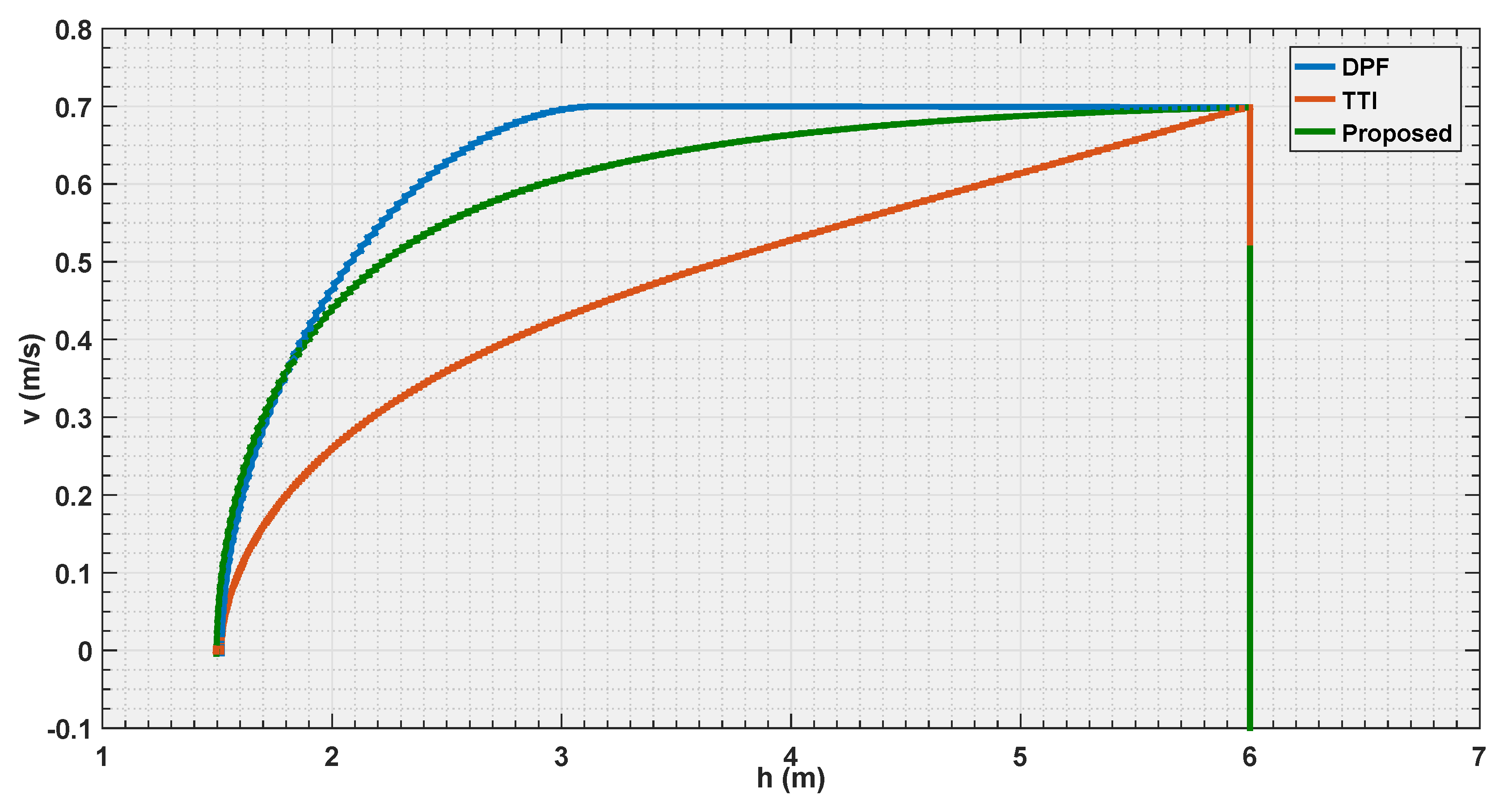

6.3. Reactive Control Results

6.3.1. Experiment 1

6.3.2. Experiment 2

6.3.3. Experiment 3

7. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest

References

- Ahmad, A.; Tahar, K.N.; Udin, W.S.; Hashim, K.A.; Darwin, N.; Hafis, M.; Room, M.; Hamid, N.F.A.; Azhar, N.A.M.; Azmi, S.M. Digital aerial imagery of unmanned aerial vehicle for various applications. In Proceedings of the 2013 IEEE International Conference on Control System, Computing and Engineering (ICCSCE), Mindeb, Malaysia, 29 November–1 December 2013; pp. 535–540.

- Ma, L.; Li, M.; Tong, L.; Wang, Y.; Cheng, L. Using unmanned aerial vehicle for remote sensing application. In Proceedings of the 2013 21st International Conference on Geoinformatics (GEOINFORMATICS), Kaifeng, China, 20–22 June 2013; pp. 1–5.

- Tampubolon, W.; Reinhardt, W. UAV Data Processing for Large Scale Topographical Mapping. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2014, XL-5, 565–572.

- Li, Y.-C.; Ye, D.-M.; Ding, X.-B.; Teng, C.-S.; Wang, G.-H.; Li, T.-H. UAV Aerial Photography Technology in Island Topographic Mapping. In Proceedings of the 2011 International Symposium on Image and Data Fusion (ISIDF), Tengchong, China, 9–11 August 2011; pp. 1–4.

- Rathinam, S.; Almeida, P.; Kim, Z.; Jackson, S.; Tinka, A.; Grossman, W.; Sengupta, R. Autonomous searching and tracking of a river using an UAV. In Proceedings of the American Control Conference, New York, NY, USA, 11–13 July 2007; pp. 359–364.

- Yuan, C.; Liu, Z.; Zhang, Y. UAV-based forest fire detection and tracking using image processing techniques. In Proceedings of the 2015 International Conference on Unmanned Aircraft Systems (ICUAS), Denver, CO, USA, 9–12 June 2015; pp. 639–643.

- Sujit, P.B.; Sousa, J.; Pereira, F.L. Coordination strategies between UAV and AUVs for ocean exploration. In Proceedings of the 2009 European Control Conference (ECC), Budapest, Hungary, 23–26 August 2009; pp. 115–120.

- Govindaraju, V.; Leng, G.; Qian, Z. Visibility-based UAV path planning for surveillance in cluttered environments. In Proceedings of the 2014 IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), Toyako, Japan, 27–30 October 2014; pp. 1–6.

- Lilien, L.T.; ben Othmane, L.; Angin, P.; Bhargava, B.; Salih, R.M.; DeCarlo, A. Impact of Initial Target Position on Performance of UAV Surveillance Using Opportunistic Resource Utilization Networks. In Proceedings of the 2015 IEEE 34th Symposium on Reliable Distributed Systems Workshop (SRDSW), Montreal, QC, Canada, 28 September–1 October 2015; pp. 57–61.

- Qu, Y.; Jiang, L.; Guo, X. Moving vehicle detection with convolutional networks in UAV videos. In Proceedings of the 2016 2nd International Conference on Control, Automation and Robotics (ICCAR), Hong Kong, China, 28–30 April 2016; pp. 225–229.

- Kim, S.W.; Gwon, G.P.; Choi, S.T.; Kang, S.N.; Shin, M.O.; Yoo, I.S.; Lee, E.D.; Frazzoli, E.; Seo, S.W. Multiple vehicle driving control for traffic flow efficiency. In Proceedings of the 2012 IEEE Intelligent Vehicles Symposium (IV), Alcala de Henares, Spain, 3–7 June 2012; pp. 462–468.

- Ke, R.; Kim, S.; Li, Z.; Wang, Y. Motion-vector clustering for traffic speed detection from UAV video. In Proceedings of the IEEE First International Smart Cities Conference (ISC2), Guadalajara, Mexico, 25–28 October 2015; pp. 1–5.

- Kruijff, G.J.M.; Tretyakov, V.; Linder, T.; Pirri, F.; Gianni, M.; Papadakis, P.; Pizzoli, M.; Sinha, A.; Pianese, E.; Corrao, S.; et al. Rescue robots at earthquake-hit Mirandola, Italy: A field report. In Proceedings of the 2012 IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), College Station, TX, USA, 5–8 November 2012; pp. 1–8.

- Erdos, D.; Erdos, A.; Watkins, S. An experimental UAV system for search and rescue challenge. IEEE Aerosp. Electron. Syst. Mag. 2013, 28, 32–37.

- Michael, N.; Shen, S.; Mohta, K.; Mulgaonkar, Y.; Kumar, V.; Nagatani, K.; Okada, Y.; Kiribayashi, S.; Otake, K.; Yoshida, K.; et al. Collaborative mapping of an earthquake-damaged building via ground and aerial robots. J. Field Robot. 2012, 29, 832–841.

- Goodrich, M.A.; Morse, B.S.; Engh, C.; Cooper, J.L.; Adams, J.A. Towards using unmanned aerial vehicles (UAVs) in wilderness search and rescue: Lessons from field trials. Interact. Stud. 2009, 10, 453–478.

- Pestana, J.; Sanchez-Lopez, J.L.; Campoy, P.; Saripalli, S. Vision based gps-denied object tracking and following for unmanned aerial vehicles. In Proceedings of the 2013 IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), Linköping, Sweden, 21–26 October 2013; pp. 1–6.

- Xiao, J.; Yang, C.; Han, F.; Cheng, H. Vehicle and person tracking in aerial videos. In Multimodal Technologies for Perception of Humans; Springer: Berlin, Germany, 2008; pp. 203–214.

- Xiao, J.; Cheng, H.; Sawhney, H.; Han, F. Vehicle detection and tracking in wide field-of-view aerial video. In Proceedings of the 2010 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), San Francisco, CA, USA, 13–18 June 2010; pp. 679–684.

- Prokaj, J.; Zhao, X.; Medioni, G. Tracking many vehicles in wide area aerial surveillance. In Proceedings of the 2012 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Providence, RI, USA, 12–21 June 2012; pp. 37–43.

- Rudol, P.; Doherty, P. Human body detection and geolocalization for UAV search and rescue missions using color and thermal imagery. In Proceedings of the 2008 IEEE Aerospace Conference, Big Sky, MT, USA, 1–8 March 2008; pp. 1–8.

- Portmann, J.; Lynen, S.; Chli, M.; Siegwart, R. People detection and tracking from aerial thermal views. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 1794–1800.

- Schmidt, F.; Hinz, S. A scheme for the detection and tracking of people tuned for aerial image sequences. In Photogrammetric Image Analysis; Springer: Berlin, Germany, 2011; pp. 257–270.

- Burkert, F.; Schmidt, F.; Butenuth, M.; Hinz, S. People tracking and trajectory interpretation in aerial image sequences. Int. Arch. Photogram. Remote Sens. Spat. Inf. Sci. Comm. III (Part A) 2010, 38, 209–214.

- Tao, H.; Sawhney, H.S.; Kumar, R. Object tracking with bayesian estimation of dynamic layer representations. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 75–89.

- Birk, A.; Wiggerich, B.; Bülow, H.; Pfingsthorn, M.; Schwertfeger, S. Safety, security, and rescue missions with an Unmanned Aerial Vehicle (UAV). J. Intell. Robot. Syst. 2011, 64, 57–76.

- Wei, S.E.; Ramakrishna, V.; Kanade, T.; Sheikh, Y. Convolutional pose machines. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4724–4732.

- Al-Kaff, A.; Martín, D.; García, F.; de la Escalera, A.; Armingol, J.M. Survey of computer vision algorithms and applications for unmanned aerial vehicles. Expert Syst. Appl. 2018, 92, 447–463.

- Adams, S.M.; Friedland, C.J. A Survey of Unmanned Aerial Vehicle (UAV) Usage for Imagery Collection in Disaster Research and Management. In Proceedings of the 9th International Workshop on Remote Sensing for Disaster Response, Stanford, CA, USA, 15–16 September 2011; Available online: https://pdfs.semanticscholar.org/fd8e/960ca48e183452335743c273ea41c6930a75.pdf?_ga=2.32925182.1964409590.1549279136-1373315960.1549279136 (accessed on 15 September 2018).

- Nex, F.; Remondino, F. UAV for 3D mapping applications: A review. Appl. Geomat. 2014, 6, 1–15.

- Ferworn, A.; Herman, S.; Tran, J.; Ufkes, A.; Mcdonald, R. Disaster scene reconstruction: Modeling and simulating urban building collapse rubble within a game engine. In Proceedings of the 2013 Summer Computer Simulation Conference, Toronto, ON, Canada, 7–10 July 2013; p. 18.

- Yamazaki, F.; Matsuda, T.; Denda, S.; Liu, W. Construction of 3D models of buildings damaged by earthquakes using UAV aerial images. In Tenth Pacific Conference Earthquake Engineering Building an Earthquake-Resilient Pacific; Australian Earthquake Engineering Society: McKinnon, Australia, 2015; pp. 6–8.

- Verykokou, S.; Doulamis, A.; Athanasiou, G.; Ioannidis, C.; Amditis, A. UAV-based 3D modelling of disaster scenes for urban search and rescue. In Proceedings of the 2016 IEEE International Conference on Imaging Systems and Techniques (IST), Chania, Greece, 4–6 October 2016; pp. 106–111.

- Reardon, C.; Fink, J. Air-ground robot team surveillance of complex 3D environments. In Proceedings of the 2016 IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), Lausanne, Switzerland, 23–27 October 2016; pp. 320–327.

- Giitsidis, T.; Karakasis, E.G.; Gasteratos, A.; Sirakoulis, G.C. Human and fire detection from high altitude uav images. In Proceedings of the 2015 23rd Euromicro International Conference on Parallel, Distributed and Network-Based Processing (PDP), Turku, Finland, 4–6 March 2015; pp. 309–315.

- Kim, I.; Yow, K.C. Object Location Estimation from a Single Flying Camera. In Proceedings of the 2015 9th International Conference on Mobile Ubiquitous Computing, Systems, Services and Technologies (UBICOMM), Nice, France, 19–24 July 2015; pp. 82–88.

- Perez-Grau, F.; Ragel, R.; Caballero, F.; Viguria, A.; Ollero, A. Semi-autonomous teleoperation of UAVs in search and rescue scenarios. In Proceedings of the 2017 International Conference on Unmanned Aircraft Systems (ICUAS), Miami, FL, USA, 13–16 June 2017; pp. 1066–1074.

- Rasmussen, C.; Hager, G.D. Probabilistic data association methods for tracking complex visual objects. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 560–576.

- Kim, C.; Li, F.; Ciptadi, A.; Rehg, J.M. Multiple hypothesis tracking revisited. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 13–16 December 2015; pp. 4696–4704.

- Huang, C.; Wu, B.; Nevatia, R. Robust object tracking by hierarchical association of detection responses. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2008; pp. 788–801.

- Kuhn, H.W. The Hungarian method for the assignment problem. Naval Res. Logist. Q. 1955, 2, 83–97.

- McLaughlin, N.; Del Rincon, J.M.; Miller, P. Enhancing linear programming with motion modeling for multi-target tracking. In Proceedings of the 2015 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 6–9 January 2015; pp. 71–77.

- Siam, M.; ElHelw, M. Robust autonomous visual detection and tracking of moving targets in UAV imagery. In Proceedings of the 2012 IEEE 11th International Conference on Signal Processing (ICSP), Beijing, China, 21–25 October 2012; Volume 2, pp. 1060–1066.

- Gerónimo Gomez, D.; Lerasle, F.; López Peña, A. State-driven particle filter for multi-person tracking. In Advanced Concepts for Intelligent Vision Systems; Springer: Berlin/Heidelberg, Germany, 2012; pp. 467–478.

- Zhang, J.; Presti, L.L.; Sclaroff, S. Online multi-person tracking by tracker hierarchy. In Proceedings of the 2012 IEEE Ninth International Conference on Advanced Video and Signal-Based Surveillance (AVSS), Beijing, China, 18–21 September 2012; pp. 379–385.

- Khan, Z.; Balch, T.; Dellaert, F. MCMC-based particle filtering for tracking a variable number of interacting targets. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1805–1819.

- Andriluka, M.; Roth, S.; Schiele, B. People-tracking-by-detection and people-detection-by-tracking. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–8.

- Dufek, J.; Murphy, R. Visual pose estimation of USV from UAV to assist drowning victims recovery. In Proceedings of the 2016 IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), Lausanne, Switzerland, 23–27 October 2016; pp. 147–153.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Advances in Neural Information Processing Systems; Curran Associates Inc.: Lake Tahoe, NV, USA, 2012; pp. 1097–1105.

- Cheng, G.; Zhou, P.; Han, J. Rifd-cnn: Rotation-invariant and fisher discriminative convolutional neural networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2884–2893.

- Nam, H.; Han, B. Learning multi-domain convolutional neural networks for visual tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4293–4302.

- Cheng, G.; Zhou, P.; Han, J. Learning rotation-invariant convolutional neural networks for object detection in VHR optical remote sensing images. IEEE Trans. Geosci. Remote Sens. 2016, 54, 7405–7415.

- Watanabe, Y.; Calise, A.; Johnson, E. Vision-based obstacle avoidance for UAVs. In Proceedings of the AIAA Guidance, Navigation and Control Conference and Exhibit, Hilton Head, SC, USA, 20–23 August 2007; p. 6829.

- Gageik, N.; Benz, P.; Montenegro, S. Obstacle Detection and Collision Avoidance for a UAV with Complementary Low-Cost Sensors. IEEE Access 2015, 3, 599–609.

- Mettenleiter, M.; Obertreiber, N.; Härtl, F.; Ehm, M.; Baur, J.; Fröhlich, C. 3D laser scanner as part of kinematic measurement systems. In Proceedings of the 1st International Conference on Machine Control & Guidance, Zurich, Switzerland, 61–69 June 2008.

- Fu, C.; Olivares-Mendez, M.A.; Suarez-Fernandez, R.; Campoy, P. Monocular Visual-Inertial SLAM-Based Collision Avoidance Strategy for Fail-Safe UAV Using Fuzzy Logic Controllers: Comparison of Two Cross-Entropy Optimization Approaches. J. Intell. Robot. Syst. 2014, 73, 513–533.

- Du, B.; Liu, S. A common obstacle avoidance module based on fuzzy algorithm for Unmanned Aerial Vehicle. In Proceedings of the 2016 IEEE International Conference on Cyber Technology in Automation, Control, and Intelligent Systems (CYBER), Chengdu, China, 19–22 June 2016; pp. 245–248.

- Hromatka, M. A Fuzzy Logic Approach to Collision Avoidance in Smart UAVs. 2013. Available online: https://digitalcommons.csbsju.edu/honors_theses/13/ (accessed on 15 July 2018).

- Olivares-Mendez, M.A.; Campoy, P.; Mellado-Bataller, I.; Mejias, L. See-and-avoid quadcopter using fuzzy control optimized by cross-entropy. In Proceedings of the 2012 IEEE International Conference on Fuzzy Systems (FUZZ-IEEE), Brisbane, Australia, 10–15 June 2012; pp. 1–7.

- Bradski, G.R. Computer vision face tracking for use in a perceptual user interface. Intel Technol. J. 1998, Q2, 214–219.

- Rubinstein, R.Y.; Kroese, D.P. The Cross-Entropy Method: A Unified Approach to Combinatorial Optimization, Monte-Carlo Simulation and Machine Learning; Springer Science & Business Media: New York, NY, USA, 2013.

- Omrane, H.; Masmoudi, M.S.; Masmoudi, M. Fuzzy Logic Based Control for Autonomous Mobile Robot Navigation. Comput. Intell. Neurosci. 2016, 2016, 9548482.

- Thongchai, S.; Kawamura, K. Application of fuzzy control to a sonar-based obstacle avoidance mobile robot. In Proceedings of the 2000 IEEE International Conference on Control Applications, Anchorage, AK, USA, 25–27 September 2000; pp. 425–430.

- Lian, S.H. Fuzzy logic control of an obstacle avoidance robot. In Proceedings of the Fifth IEEE International Conference on Fuzzy Systems, New Orleans, LA, USA, 8–11 September 1996; Volume 1, pp. 26–30.

- Gageik, N.; Müller, T.; Montenegro, S. Obstacle Detection and Collision Avoidance Using Ultrasonic Distance Sensors for an Autonomous Quadrocopter. 2012. Available online: http://www8.informatik.uni-wuerzburg.de/fileadmin/10030800/user_upload/quadcopter/Paper/Gageik_Mueller_Montenegro_2012_OBSTACLE_DETECTION_AND_COLLISION_AVOIDANCE_USING_ULTRASONIC_DISTANCE_SENSORS_FOR_AN_AUTONOMOUS_QUADROCOPTER.pdf (accessed on 15 September 2018).

- Cheong, M.K.; Bahiki, M.R.; Azrad, S. Development of collision avoidance system for useful UAV applications using image sensors with laser transmitter. IOP Conf. Ser. Mater. Sci. Eng. 2016, 152, 012026.

- Grzonka, S.; Grisetti, G.; Burgard, W. A Fully Autonomous Indoor Quadrotor. IEEE Trans. Robot. 2012, 28, 90–100.

- Yano, K.; Takemoto, A.; Terashima, K. Semi-automatic obstacle avoidance control for operation support system with haptic joystick. In Proceedings of the 2005 IEEE/RSJ International Conference on Intelligent Robots and Systems, Edmonton, AB, Canada, 2–6 August 2005; pp. 1229–1234.

- Lam, T.M.; Mulder, M.; (René) van Paassen, M.M. Haptic Interface For UAV Collision Avoidance. Int. J. Aviat. Psychol. 2007, 17, 167–195.

- Brandt, A.M.; Colton, M.B. Haptic collision avoidance for a remotely operated quadrotor uav in indoor environments. In Proceedings of the 2010 IEEE International Conference on Systems Man and Cybernetics (SMC), Istanbul, Turkey, 10–13 October 2010; pp. 2724–2731.

- Gómez-Silva, M.; Armingol, J.; de la Escalera, A. Multi-Object Tracking with Data Association by a Similarity Identification Model. In Proceedings of the 7th International Conference on Imaging for Crime Detection and Prevention (ICDP 2016), Madrid, Spain, 23–25 November 2016; pp. 25–31.

- Gómez-Silva, M.; Armingol, J.; de la Escalera, A. Multi-Object Tracking Errors Minimisation by Visual Similarity and Human Joints Detection. In Proceedings of the 8th International Conference on Imaging for Crime Detection and Prevention (ICDP 2017), Madrid, Spain, 13–15 December 2017; pp. 25–30.

- Al-Kaff, A.; Moreno, F.M.; San José, L.J.; García, F.; Martín, D.; de la Escalera, A.; Nieva, A.; Garcéa, J.L.M. VBII-UAV: Vision-based infrastructure inspection-UAV. In World Conference on Information Systems and Technologies; Springer: Cham, Switzerland, 2017; pp. 221–231.

- Fisher, R.; Santos-Victor, J.; Crowley, J. CAVIAR: Context Aware Vision Using Image-Based Active Recognition. 2005. Available online: https://homepages.inf.ed.ac.uk/rbf/CAVIAR/ (accessed on 20 January 2019).

- Bernardin, K.; Stiefelhagen, R. Evaluating multiple object tracking performance: The CLEAR MOT metrics. EURASIP J. Image Video Process. 2008, 2008, 246309.

| Sequence | Frame Rate (FPS) | Resolution (px) | Length (Frames) | Duration (s) |
|---|---|---|---|---|
| 1 | 15 | 960 × 540 | 221 | 14 |
| 2 | 30 | 960 × 540 | 251 | 8 |
| 3 | 15 | 960 × 540 | 120 | 8 |
| 4 | 15 | 960 × 540 | 645 | 43 |

| Sequence | Objects | Misses | False Positives | Mismatches | Miss Rate | False Positive Rate | Mismatch Rate | MOTA |
|---|---|---|---|---|---|---|---|---|
| 1 | 440 | 0 | 0 | 0 | 0.000 | 0.000 | 0.000 | 1.000 |
| 2 | 250 | 73 | 0 | 0 | 0.292 | 0.000 | 0.000 | 0.708 |
| 3 | 12 | 3 | 0 | 1 | 0.250 | 0.000 | 0.083 | 0.667 |
| 4 | 190 | 163 | 0 | 1 | 0.858 | 0.000 | 0.005 | 0.137 |
| Total | 892 | 236 | 0 | 2 | 0.265 | 0.000 | 0.002 | 0.733 |
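The ratios in the table above can be reproduced with the CLEAR MOT definitions, under the assumption that its four count columns are, in order, ground-truth objects, misses, false positives, and mismatches. That reading is an interpretation inferred from the numbers rather than something stated alongside the table, and the names `ROWS`, `clear_mot`, and `report` are introduced here only for illustration.

```python
# Rows as printed in the table:
# (sequence, objects, misses, false positives, mismatches) -- assumed column order.
ROWS = [
    ("1", 440, 0, 0, 0),
    ("2", 250, 73, 0, 0),
    ("3", 12, 3, 0, 1),
    ("4", 190, 163, 0, 1),
    ("Total", 892, 236, 0, 2),
]


def clear_mot(objects, misses, false_positives, mismatches):
    """Return (miss rate, false-positive rate, mismatch rate, MOTA)."""
    miss_rate = misses / objects
    fp_rate = false_positives / objects
    mismatch_rate = mismatches / objects
    # MOTA penalises all three error types relative to the number of objects.
    mota = 1.0 - (misses + false_positives + mismatches) / objects
    return miss_rate, fp_rate, mismatch_rate, mota


def report(rows=ROWS):
    """Map each sequence label to its four ratios rounded to three decimals."""
    return {label: tuple(round(v, 3) for v in clear_mot(*counts))
            for label, *counts in rows}
```

Calling `report()` gives, for each row, the same four rounded ratios as the last four columns of the table. The sketch takes every row as printed; under the assumed column reading, the per-sequence misses sum to 239, whereas the Total row lists 236.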

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Al-Kaff, A.; Gómez-Silva, M.J.; Moreno, F.M.; de la Escalera, A.; Armingol, J.M. An Appearance-Based Tracking Algorithm for Aerial Search and Rescue Purposes. Sensors 2019, 19, 652. https://doi.org/10.3390/s19030652
