Apple Fruit Recognition Algorithm Based on Multi-Spectral Dynamic Image Analysis

Abstract

1. Introduction

2. Materials and Methods

2.1. Imaging Acquisition System

2.2. Experimental Environments

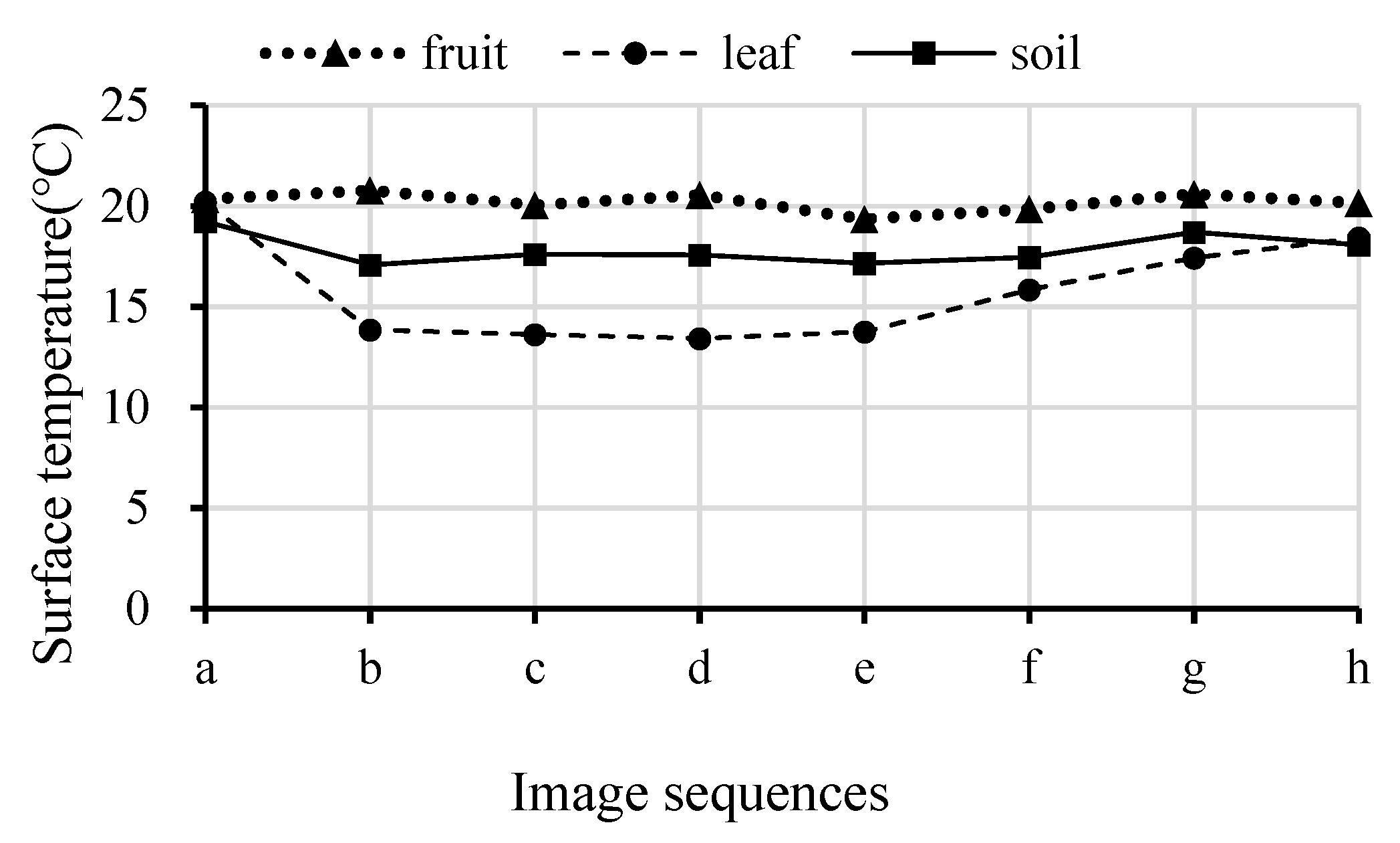

2.3. Fruit Tree Image Characteristics Analysis

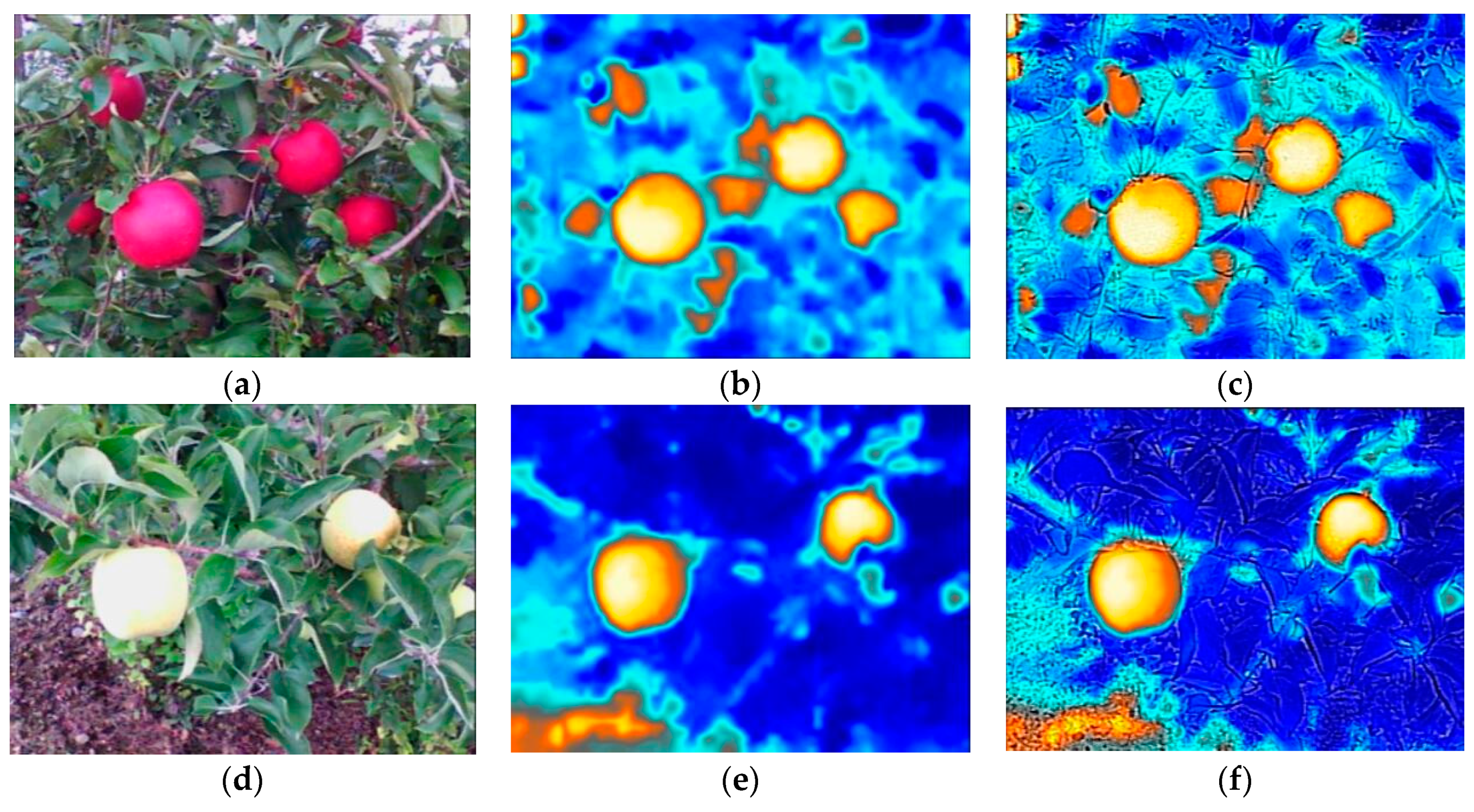

2.3.1. Image Characteristics of Different Apple Varieties

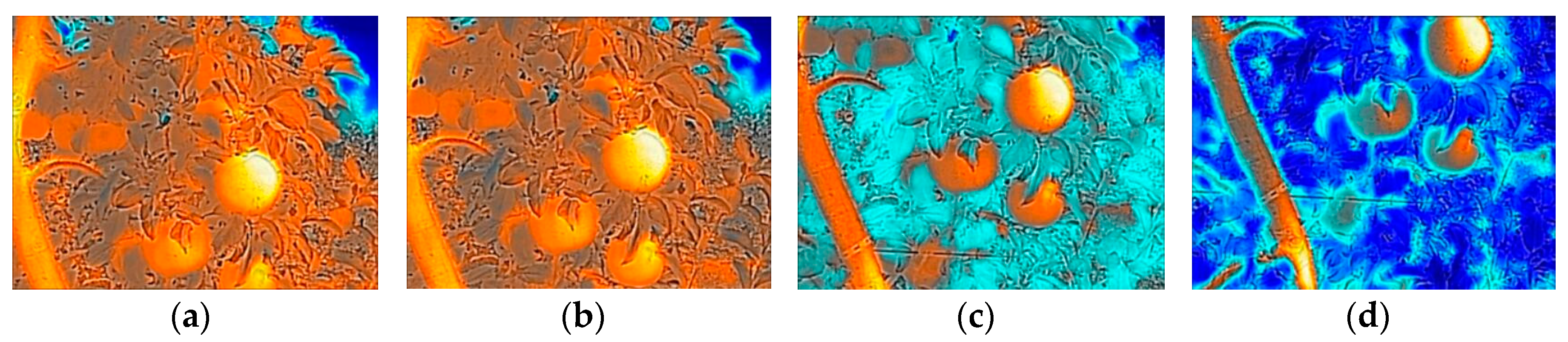

2.3.2. Image Characteristics with Different Camera Shot Angles

2.4. Fruit Recognition Algorithm

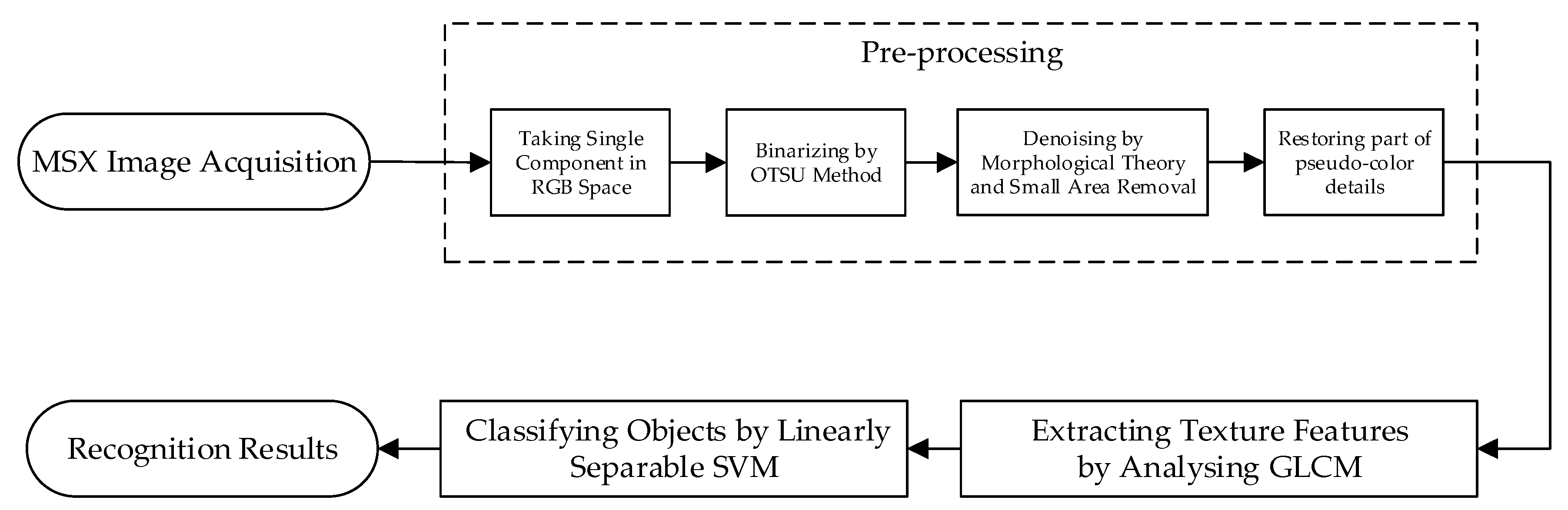

2.4.1. Pre-Processing

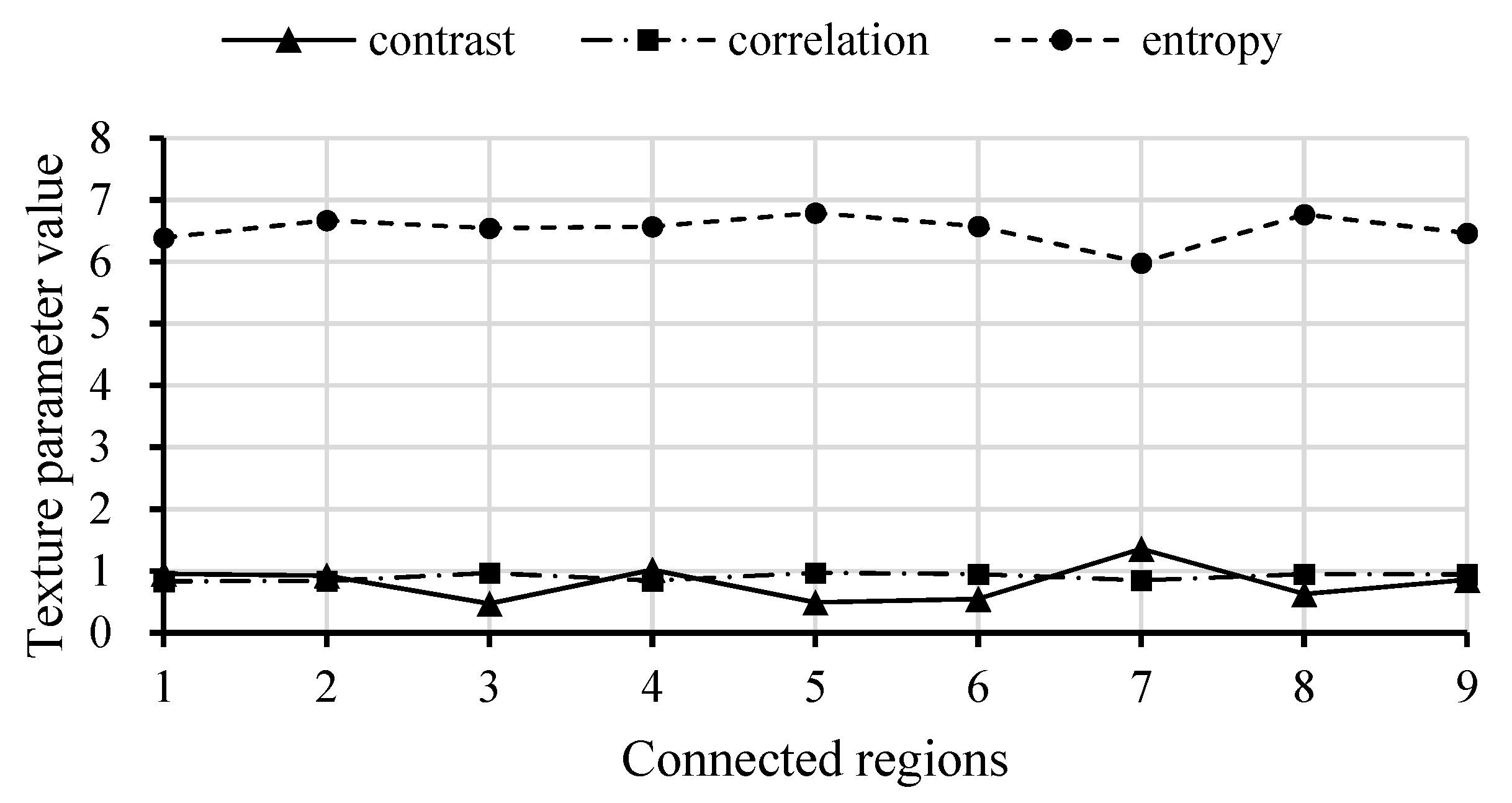

2.4.2. Texture Feature Extraction

2.4.3. Objects Classification

2.5. Measurable Parameters

3. Results and Discussion

3.1. Image Effects Using Different Light Stimulation

3.2. Recognition Results on Different Images

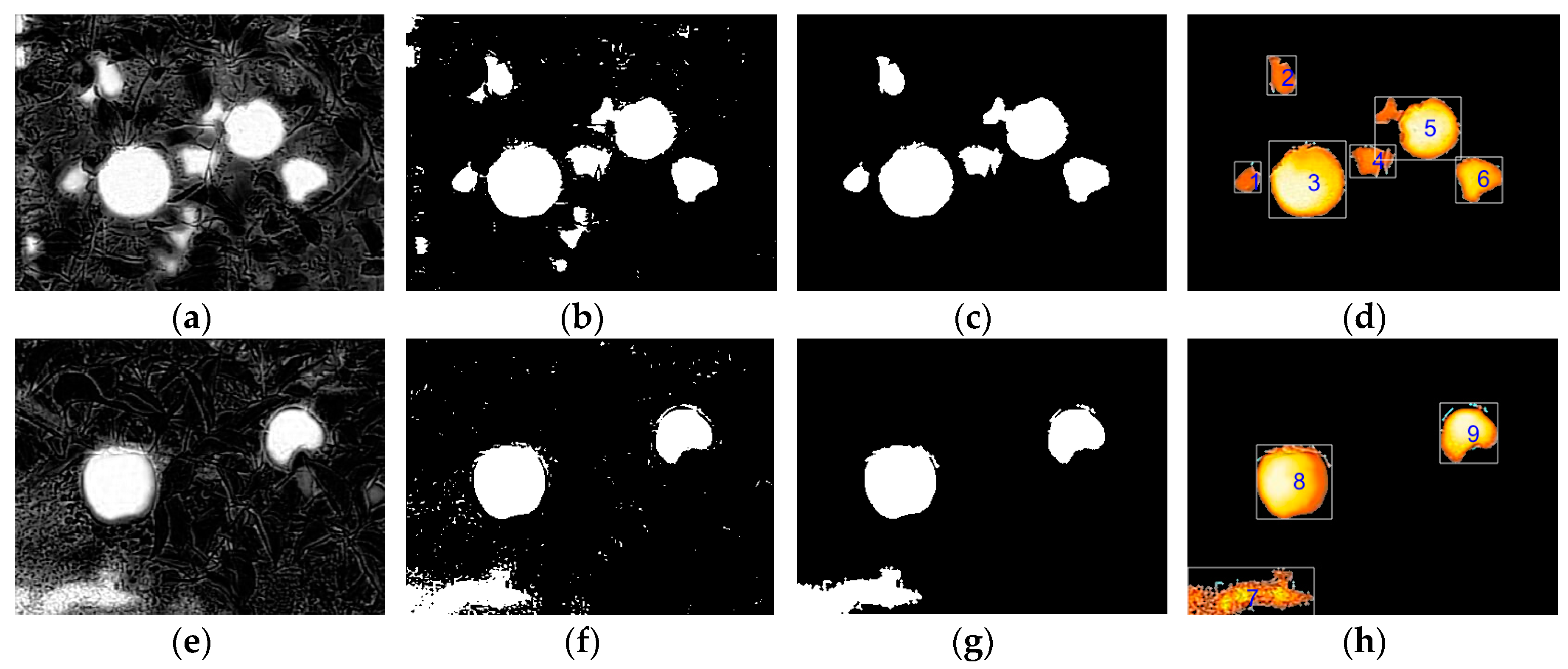

3.2.1. Image Pre-Processing Results

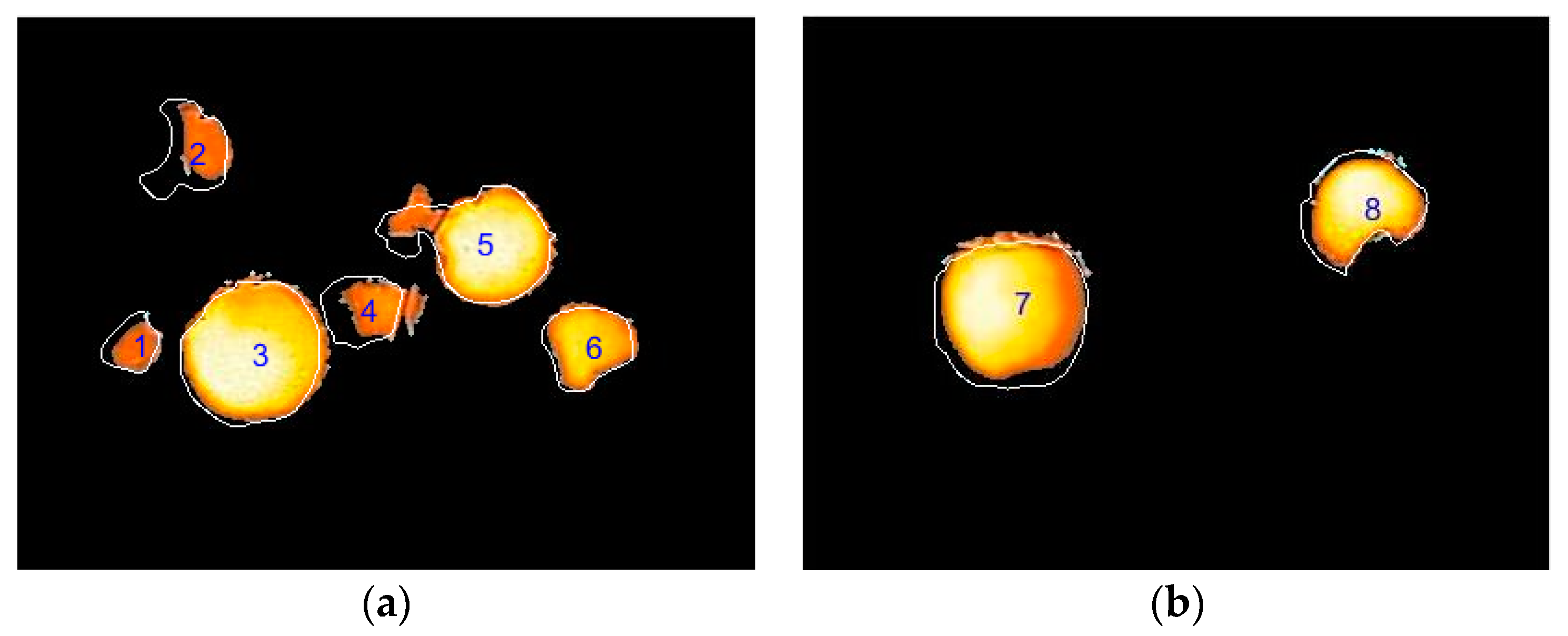

3.2.2. Assessment of Recognition Results

3.3. Time Efficiency Analysis

3.4. Discussion

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Parameter | Complete Fruit Regions | Incomplete Fruit Regions |
|---|---|---|
| No. of regions | 759 | 1012 |
| Average recognition precision | 95.74% | 87.50% |
| Average sensitivity | 92.69% | 72.96% |
| Average relative error | 8.68% | 37.04% |

| Step | Processing Time (ms) |
|---|---|
| Image pre-processing | 725.63 |
| Texture feature extraction | 13.32 |
| Objects classification | 1.09 |
| Total | 740.04 |
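
The processing-time table above can be read as a breakdown of where the per-image time goes. The following is a minimal sketch, not part of the authors' implementation, that recomputes the total and each step's share from the tabulated values; the names `STEP_TIMES_MS` and `time_shares` are hypothetical and introduced only for illustration.

```python
# Step timings in milliseconds, copied from the processing-time table.
# The dictionary and function names are illustrative assumptions.
STEP_TIMES_MS = {
    "Image pre-processing": 725.63,
    "Texture feature extraction": 13.32,
    "Objects classification": 1.09,
}


def time_shares(step_times_ms):
    """Return (total_ms, {step: percent_of_total}) for a timing table."""
    total = sum(step_times_ms.values())
    shares = {step: 100.0 * ms / total for step, ms in step_times_ms.items()}
    return total, shares


total_ms, shares = time_shares(STEP_TIMES_MS)
for step, pct in shares.items():
    print(f"{step}: {pct:.2f}% of {total_ms:.2f} ms")
```

Under these figures, image pre-processing accounts for roughly 98% of the total, so any effort to reduce the per-image time would most plausibly start there.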

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Feng, J.; Zeng, L.; He, L. Apple Fruit Recognition Algorithm Based on Multi-Spectral Dynamic Image Analysis. Sensors 2019, 19, 949. https://doi.org/10.3390/s19040949