1. Introduction

Land Use and Land Cover (LULC) data are important inputs for countries to monitor how their soil and land use are being modified over time [1,2]. LULC data also make it possible to identify the impacts of expanding urban environments on different ecosystems [3,4,5,6], to monitor protected areas, and to track the expansion of deforested areas in tropical forests [1,7,8,9].

Remote sensing data and techniques are used as tools for monitoring changes in environmental protection projects, in most cases reducing surveillance costs. Examples are the LULC approach for monitoring Reduced Emissions from Deforestation and Forest Degradation (REDD+) [10,11] and for ecosystem services (ES) modeling and valuation [12,13,14]. For the latter purpose, LULC mapping has been used to refine the global ES values provided by Costanza et al. [15], which have been revised since their first publication [16,17]. The LULC approach provides land classes that allow ES to be estimated per unit area, making it possible to extrapolate ES estimates and values to larger areas and biomes around the world using the benefit transfer method [1,17,18]. Nonetheless, Song [19] highlights the limitations of these value estimates, since LULC satellite-based products carry uncertainties related to the data.
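At its core, the benefit transfer step multiplies the area of each LULC class by a per-area ES value and sums the results. The following is a minimal sketch of that arithmetic; the class names, areas, and unit values are hypothetical placeholders and are not taken from this study or from Costanza et al.

```python
# Minimal sketch of a benefit transfer calculation (hypothetical inputs):
# ES value = sum over LULC classes of (class area x assumed value per unit area).

def benefit_transfer(areas_ha: dict[str, float],
                     unit_values: dict[str, float]) -> dict[str, float]:
    """Return the ES value of each LULC class and the total.

    areas_ha    -- mapped area of each class, in hectares
    unit_values -- assumed ES value per hectare for each class (e.g., USD/ha/yr)
    """
    values = {cls: area * unit_values[cls] for cls, area in areas_ha.items()}
    values["total"] = sum(values.values())
    return values


# Illustrative placeholder numbers only.
areas = {"primary vegetation": 1200.0, "water": 300.0, "built-up": 500.0}
unit = {"primary vegetation": 100.0, "water": 50.0, "built-up": 0.0}
result = benefit_transfer(areas, unit)
print(result["total"])  # 135000.0
```

Because the total is linear in the class areas, any area misassigned between classes with different unit values shifts the estimate directly. This is one way that uncertainties in LULC products propagate into ES values.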

From an Earth Observation (EO) perspective, free and open data access, such as that offered by the Landsat and Sentinel families, is desirable [20]. When combined (Landsat-5–8 and the optical sensor of the Sentinel family, Sentinel-2, hereafter S-2), these orbital sensors provide a 3-day revisit time over the same point on the Earth's surface [21,22]. However, these sensors have a medium spatial resolution (30 m for Landsat and 10 m for S-2) compared with other data, such as QuickBird (0.6 m) and WorldView (0.5 m), but they can deliver satisfactory results when the correct methodology is applied [23,24,25].

More recently, the United States (US) began to consider reintroducing fees for Landsat product acquisition [26]. This would be a step backwards when we consider that more than 100,000 articles have been published since 2008, when the US made Landsat products available for free. In contrast, S-2 products are becoming more popular and, although their record is not yet long enough to support temporal analyses, their better spatial resolution allows more precise results [22,27,28,29].

For monitoring areas with high cloud cover, such as tropical regions and estuarine areas, some progress has been reported using Synthetic Aperture Radar (SAR) products [30,31,32]. To increase the use of SAR products for environmental monitoring and security, the European Space Agency (ESA) launched Sentinel-1 (hereafter S-1) in 2014 [33]. Like the S-2 products, S-1 data are freely available on the Copernicus platform and cover the entire Earth [34,35].

Recently, processes such as stacking, coregistration, and fusion of optical and radar data have been applied to improve classification quality and accuracy. Although radar polarization may limit the identification of some features, radar imagery is not affected by atmospheric obstacles such as clouds [11,27,36].
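Stacking places coregistered layers on a common grid so that each pixel becomes one feature vector for the classifier. The following is a minimal NumPy sketch of that step. It assumes the S-1 and S-2 layers have already been coregistered and resampled to the same grid; the layer names and random tiles are hypothetical.

```python
import numpy as np


def stack_features(layers: dict[str, np.ndarray]) -> tuple[np.ndarray, list[str]]:
    """Stack coregistered 2-D rasters into a (n_pixels, n_features) matrix.

    The dict keys become the feature names, in insertion order.
    """
    names = list(layers)
    shapes = {layers[name].shape for name in names}
    if len(shapes) != 1:
        # Stacking only makes sense once every layer shares the same grid.
        raise ValueError(f"layers have different shapes: {shapes}")
    cube = np.stack([layers[name] for name in names], axis=-1)  # (rows, cols, features)
    return cube.reshape(-1, len(names)), names


# Hypothetical 4 x 5 tiles standing in for coregistered S-1 and S-2 layers.
rng = np.random.default_rng(42)
tiles = {
    "S1_VV_dB": rng.normal(-12.0, 3.0, (4, 5)),
    "S1_VH_dB": rng.normal(-19.0, 3.0, (4, 5)),
    "S2_B4": rng.uniform(0.0, 0.3, (4, 5)),
    "S2_B8": rng.uniform(0.1, 0.5, (4, 5)),
}
X, feature_names = stack_features(tiles)
print(X.shape)  # (20, 4)
```

Each row of `X` is one pixel and each column is one layer. Derived layers, such as indexes or textures, can be appended simply by adding more entries to the dictionary.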

The synergistic use of optical and radar data is a recognized alternative for urban area studies [37,38,39]. The literature indicates that, despite the limitations of microwave data in detecting the variety of spectral signatures in the urban environment, aggregating such data helps to improve classification accuracy. The importance of radar data has also been emphasized in tropical environmental studies [31,40,41,42], since cloud cover in these areas is high throughout the year, hindering the use of optical images [43,44] and making radar data an alternative for acquiring imagery in every month of the year in these locations.

Another alternative described in the literature for enhancing the accuracy of final classification results is the inclusion of optical indexes of vegetation, soil, water, and other targets [45,46,47,48]. Adding indexes to the data sets widens the range of features in the training data and the statistical possibilities for separating classes, thereby raising the efficacy of the classification algorithms.
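Vegetation and water indexes of this kind are typically normalized differences of two bands. The following is a minimal sketch for S-2 surface reflectance. It assumes NDVI is computed from bands B8 (NIR) and B4 (red), and that the McFeeters NDWI is computed from B3 (green) and B8. The exact indexes used in this study may differ, and the reflectance values are hypothetical.

```python
import numpy as np


def normalized_difference(a, b):
    """(a - b) / (a + b), returning NaN where a + b == 0."""
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    num, den = a - b, a + b
    out = np.full(num.shape, np.nan)
    np.divide(num, den, out=out, where=den != 0)  # skip zero denominators
    return out


def ndvi(nir, red):
    """NDVI; for S-2 commonly computed from B8 (NIR) and B4 (red)."""
    return normalized_difference(nir, red)


def ndwi(green, nir):
    """McFeeters NDWI; for S-2 commonly computed from B3 (green) and B8 (NIR)."""
    return normalized_difference(green, nir)


# Hypothetical reflectances: a vegetated pixel, a water pixel, and a no-data pixel.
b3 = np.array([0.08, 0.06, 0.0])
b4 = np.array([0.05, 0.04, 0.0])
b8 = np.array([0.40, 0.02, 0.0])
print(ndvi(b8, b4))
print(ndwi(b3, b8))
```

The second pixel has a negative NDVI and a positive NDWI, which is the pattern expected for open water. Each index is then stacked as one more feature alongside the original bands.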

Besides choosing suitable products and deciding how to combine different imagery types, the developer of LULC classifications must test different methodologies to select the most accurate one for each landscape analyzed [27,36,49]. In this sense, Machine Learning (ML) algorithms have been presented as effective solutions for supervised classification, since ground measurements are not needed over the entire landscape to determine the LULC classes for the whole area. Several ML algorithms have been used to classify images with low, medium, or high spatial resolution [50,51], among them Random Forest (RF) [52], Support Vector Machine (SVM) [53], Artificial Neural Networks (ANN) [54], and k-nearest neighbor (k-NN) [55]. In addition to selecting suitable satellite imagery, deciding whether to apply data fusion, and choosing the most suitable classification algorithm, one should also select software robust enough to run each of these ML-based LULC classifications [50].

In this perspective, this study aims to investigate the synergistic use of S-1 and S-2 products and to assess their suitability for LULC mapping based on an ML classification approach. In addition, derived products, such as vegetation and water indexes and SAR textural features, were included in the RF classification in order to test all data sets and select the most thematically accurate product for tropical urban environments at a regional spatial scale.

3. Results

The analysis of potentially separable classes using the VV and VH polarizations is presented in Figure 3. Low backscatter values result from surface scattering, with separations on the order of 7 dB, 6 dB, and 9 dB for airport, beaches, and water with sediment, respectively. We could also identify double-bounce backscattering (built-up areas) and volume scattering (primary and secondary vegetation). These distinct scattering mechanisms increase the likelihood that RF identifies these classes. However, for the other classes, whose box plots overlap considerably, the classes are less likely to be distinguished.
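The dB separations quoted above are differences on a logarithmic scale. The following minimal sketch assumes calibrated σ0 values in linear power units and uses the gap between class medians, as read from box plots, as the separation measure. The sample values are hypothetical.

```python
import numpy as np


def to_db(sigma0_linear):
    """Convert calibrated backscatter (sigma nought, linear power) to decibels."""
    return 10.0 * np.log10(np.asarray(sigma0_linear, dtype=np.float64))


def median_gap_db(class_a_linear, class_b_linear):
    """Absolute difference between the two classes' median backscatter, in dB."""
    return float(abs(np.median(to_db(class_a_linear)) - np.median(to_db(class_b_linear))))


# Hypothetical VV samples: smooth water (surface scattering) vs. vegetation (volume scattering).
water = np.array([0.0008, 0.0010, 0.0012])
vegetation = np.array([0.008, 0.010, 0.012])
print(median_gap_db(water, vegetation))  # ≈ 10.0
```

Because the scale is logarithmic, a 10 dB gap means that the median backscattered power differs by a factor of 10. Separations of 6 to 9 dB correspond to factors of roughly 4 to 8.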

For the bands explored in the separability analysis based on the spectral response (surface reflectance) of each class (Figure 4), some classes tend to overlap, since their spectral responses are quite similar across wavelengths. This was the case for agriculture, grassland, primary vegetation, and urban vegetation, whose curves have close values at different wavelengths. High separability was observed for the mining, airport, water with sediments, and water without sediments classes. Classes whose spectral behavior differs from that of the others tend to yield better classification results, because the RF algorithm can build trees that select the variables separating them with higher precision. The analysis of these backscattering and spectral patterns provides information for further monitoring of these classes in the study area.

The JM and TD separability results are shown in the matrix in Table 5. For S-1, good separability values (above 1.8) were found only for the airport class against some other classes, for primary vegetation against water with sediments, and for water with sediments against water without sediments. In contrast, class separability for the S-2 bands was high for many classes, reaching the maximum value for both water categories and primary vegetation. These results confirm that S-2 has considerably greater potential than S-1 to identify a larger number of classes.
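JM and TD are commonly computed under a multivariate Gaussian assumption from each class's mean vector and covariance matrix. Here both measures are scaled to the range 0 to 2 so that the 1.8 threshold applies. The following is a minimal sketch of this common formulation, with JM derived from the Bhattacharyya distance and TD derived from the divergence. The synthetic samples are hypothetical, and the software used in this study may scale the measures differently (for example, TD on a 0 to 2000 scale).

```python
import numpy as np


def class_stats(samples):
    """Mean vector and covariance matrix of an (n_pixels, n_bands) sample array."""
    x = np.asarray(samples, dtype=np.float64)
    if x.ndim == 1:  # single band: treat as one column
        x = x[:, None]
    return x.mean(axis=0), np.atleast_2d(np.cov(x, rowvar=False))


def jm_td(mean_a, cov_a, mean_b, cov_b):
    """Jeffries-Matusita (JM) and Transformed Divergence (TD), both on a 0-2 scale.

    Assumes each class is multivariate Gaussian with the given mean and covariance.
    """
    m1 = np.atleast_1d(np.asarray(mean_a, dtype=np.float64))
    m2 = np.atleast_1d(np.asarray(mean_b, dtype=np.float64))
    s1 = np.atleast_2d(np.asarray(cov_a, dtype=np.float64))
    s2 = np.atleast_2d(np.asarray(cov_b, dtype=np.float64))
    dm = (m1 - m2)[:, None]  # column vector of mean differences
    s = (s1 + s2) / 2.0

    # Bhattacharyya distance B, then JM = 2 * (1 - exp(-B)).
    b = (dm.T @ np.linalg.solve(s, dm)).item() / 8.0 + 0.5 * np.log(
        np.linalg.det(s) / np.sqrt(np.linalg.det(s1) * np.linalg.det(s2)))
    jm = 2.0 * (1.0 - np.exp(-b))

    # Divergence D, then TD = 2 * (1 - exp(-D / 8)).
    i1, i2 = np.linalg.inv(s1), np.linalg.inv(s2)
    d = 0.5 * np.trace((s1 - s2) @ (i2 - i1)) + 0.5 * np.trace((i1 + i2) @ dm @ dm.T)
    td = 2.0 * (1.0 - np.exp(-d / 8.0))
    return float(jm), float(td)


# Hypothetical VV/VH samples (dB) for two well-separated classes.
rng = np.random.default_rng(0)
water = rng.normal([-25.0, -30.0], 1.0, size=(200, 2))
vegetation = rng.normal([-8.0, -14.0], 1.5, size=(200, 2))
print(jm_td(*class_stats(water), *class_stats(vegetation)))  # both ≈ 2.0
```

Identical class distributions give 0 for both measures, and the values saturate at 2 as the classes move apart. This saturation is why pairs above 1.8 are usually treated as well separated.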

Figure 5 illustrates the six LULC classifications produced.

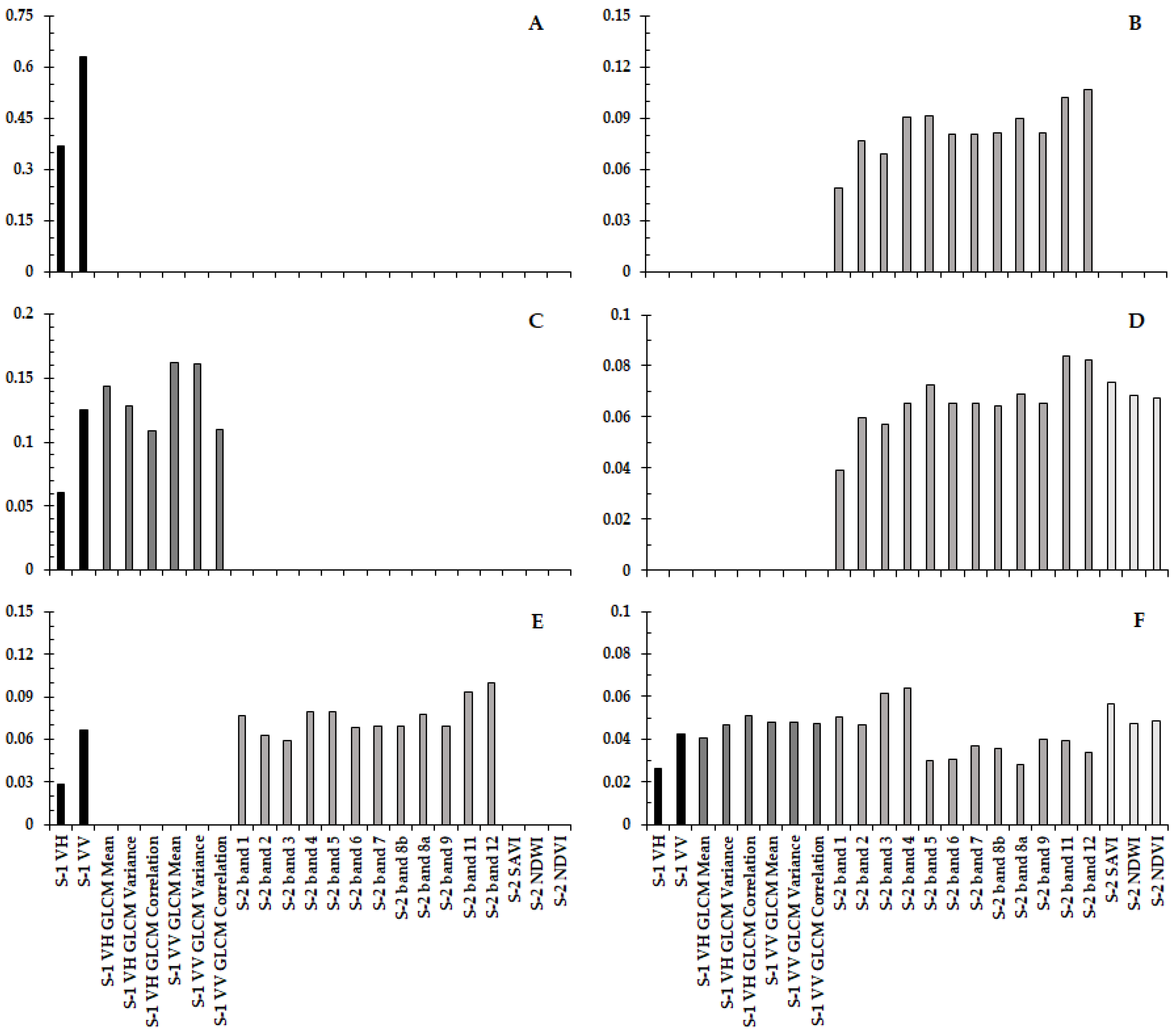

The contribution of each band to the six RF classifications is shown in Figure 6A–F. Band 12 of S-2 (SWIR) was the only band that was the most significant contributor in more than one classification (Figure 6B,E): it was the main contributor to both the S-2-only classification and the integration of S-1 with S-2. Moreover, in every classification that included S-2, at least one S-2 band was among the most significant contributors. For the classification with all products, the S-2 red band had the highest contribution (0.0641); for S-1 with S-2 and for S-2 only, it was S-2 SWIR band 12 (0.1 and 0.1067, respectively); and for S-2 with indexes, it was SWIR band 11 (0.084). For the classifications based only on SAR products, the largest contribution came from S-1 VV (0.6307) in the S-1-only classification and from the S-1 VH GLCM Mean (0.1434) in the S-1 with textures classification.
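The GLCM Mean texture mentioned above is derived from a grey-level co-occurrence matrix. The following minimal sketch computes it for a single window. It assumes the window has been quantized to a few grey levels, uses a single one-pixel horizontal offset, and builds a symmetric matrix. The window size, number of levels, and offsets used in this study are not stated in this section, and in practice the measure is computed in a sliding window over the whole VH image.

```python
import numpy as np


def quantize(window, levels):
    """Linearly rescale values (e.g., VH in dB) to integer grey levels 0..levels-1."""
    x = np.asarray(window, dtype=np.float64)
    lo, hi = x.min(), x.max()
    if hi == lo:
        return np.zeros(x.shape, dtype=np.int64)
    return np.minimum(((x - lo) / (hi - lo) * levels).astype(np.int64), levels - 1)


def glcm(q, levels, offset=(0, 1), symmetric=True):
    """Normalized co-occurrence matrix P[i, j] of a quantized 2-D window."""
    q = np.asarray(q, dtype=np.int64)
    dr, dc = offset
    rows, cols = q.shape
    # Reference pixels and their neighbours at (row + dr, col + dc), both inside the window.
    ref = q[max(0, -dr):rows - max(0, dr), max(0, -dc):cols - max(0, dc)]
    nbr = q[max(0, dr):rows - max(0, -dr), max(0, dc):cols - max(0, -dc)]
    counts = np.zeros((levels, levels), dtype=np.float64)
    np.add.at(counts, (ref.ravel(), nbr.ravel()), 1.0)
    if symmetric:
        counts = counts + counts.T  # count each pair in both directions
    return counts / counts.sum()


def glcm_mean(p):
    """GLCM Mean: sum over i, j of i * P[i, j]."""
    i = np.arange(p.shape[0])[:, None]
    return float((i * p).sum())


# Hypothetical, already-quantized 2 x 3 window with 3 grey levels.
window = np.array([[0, 0, 1],
                   [1, 2, 2]])
print(glcm_mean(glcm(window, levels=3)))  # 1.0
```

For a symmetric GLCM, the row and column means are equal, so a single GLCM Mean value describes the window.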

The PA and UA results are presented in Table 6. The classifiers that combine optical and radar data and those based on optical data only generally achieved better PA. The worst results were found for the classifiers without S-2, namely S-1 only and S-1 with GLCM textures. UA followed a similar trend. The worst per-class results were found for the agriculture and mining classes with the classifier that used radar and its textures, for which both PA and UA were 0%. However, the mining class reached a PA of 100% in all classifiers that included S-2 bands. S-2 only, S-2 with its indexes, and the integration of S-1 with S-2 each had more than one class with a PA of 100%: agriculture (C1) and mining (C8) reached 100% in all three classifications, and in the integration of S-1 and S-2 the airport (C2) class also reached a PA of 100%. The only UA of 100% was obtained for beaches in the S-2 with indexes classification.
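PA and UA are both read from the confusion matrix of the validation samples. The following is a minimal sketch of that calculation. It assumes that rows hold the reference classes and columns hold the mapped classes, although the transposed convention is also common. The three-class matrix is hypothetical.

```python
import numpy as np


def producer_user_accuracy(cm):
    """Per-class producer's (PA) and user's (UA) accuracy.

    cm[i, j] = number of validation samples of reference class i mapped as class j.
    PA = correct / reference total (row sum); UA = correct / mapped total (column sum).
    Classes with an empty row or column get NaN instead of a division by zero.
    """
    cm = np.asarray(cm, dtype=np.float64)
    correct = np.diag(cm)
    ref_totals = cm.sum(axis=1)
    map_totals = cm.sum(axis=0)
    pa = np.full(correct.shape, np.nan)
    ua = np.full(correct.shape, np.nan)
    np.divide(correct, ref_totals, out=pa, where=ref_totals > 0)
    np.divide(correct, map_totals, out=ua, where=map_totals > 0)
    return pa, ua


# Hypothetical three-class matrix (rows: reference, columns: mapped).
cm = [[50, 0, 0],
      [5, 40, 5],
      [0, 10, 40]]
pa, ua = producer_user_accuracy(cm)
print(np.round(100 * pa, 2))
print(np.round(100 * ua, 2))
```

In this example, PA is 100%, 80%, and 80%, while UA is about 90.9%, 80%, and 88.9%. The first class has a PA of 100% because none of its reference samples were missed. Its UA is lower because samples from other classes were also mapped to it. This asymmetry is why PA and UA are reported together.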

Table 7 shows the OA and Kappa coefficients obtained in this research. The integration of the S-1 and S-2 products produced the most accurate product (OA of 91.07% and Kappa of 0.8709) and, as expected, S-1 alone had the worst result of all the analyses (OA of 56.01% and Kappa of 0.4194). Including textures in the S-1 data improved the RF classification (OA of 61.61% and Kappa of 0.4870); by contrast, including vegetation and water indexes in the S-2 data slightly reduced its OA (89.45%) and Kappa coefficient (0.8476) compared with S-2 alone (OA of 89.53% and Kappa of 0.8487). The integration of all the products analyzed produced the worst result among the data combinations involving S-2 (OA of 87.09% and Kappa of 0.8132). Nevertheless, the OA and Kappa values of the four best classifications were similar.
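OA and Kappa summarize the confusion matrix. OA is the fraction of correctly classified validation samples, and Cohen's Kappa discounts the agreement that would be expected by chance given the row and column totals. The following is a minimal sketch of both measures. It assumes that rows are reference classes and columns are mapped classes, although Kappa gives the same value if the matrix is transposed. The matrix is hypothetical.

```python
import numpy as np


def overall_accuracy_and_kappa(cm):
    """Overall accuracy (OA) and Cohen's Kappa from a square confusion matrix."""
    cm = np.asarray(cm, dtype=np.float64)
    n = cm.sum()
    p_observed = np.trace(cm) / n  # this is OA
    # Chance agreement: sum over classes of (row total x column total) / n^2.
    p_expected = float((cm.sum(axis=1) * cm.sum(axis=0)).sum()) / n**2
    kappa = (p_observed - p_expected) / (1.0 - p_expected)
    return float(p_observed), float(kappa)


# Hypothetical three-class matrix (rows: reference, columns: mapped).
cm = [[50, 0, 0],
      [5, 40, 5],
      [0, 10, 40]]
oa, kappa = overall_accuracy_and_kappa(cm)
print(f"OA = {100 * oa:.2f}%, Kappa = {kappa:.4f}")  # OA = 86.67%, Kappa = 0.8000
```

Kappa is lower than OA in this example because, given these class totals, part of the observed agreement would be expected by chance alone.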

4. Discussion

Among the ML methods described in the literature [50], SVM and RF stand out for their good classification accuracy. These methods usually perform well compared with similar methods, such as k-NN, or with more sophisticated ones, such as ANN and Object-Based Image Analysis (OBIA) methods [27,36,49]. Since these two ML algorithms are the most prominent, we focus the discussion on papers that used these algorithms.

We verified that the use of optical radiometric indexes and radar textures is widely accepted as a means to improve ML classification [11,27,36,46,63,78]. However, our OA when all bands were considered was lower than that of S-1 and S-2, S-2 only, and S-2 with indexes. These results show that adding data derived from the optical image did not have a significant impact on the final classification. By contrast, the SAR-derived data improved the classification when only S-1 was considered, but they did not contribute enough to raise OA when all bands were used. Some authors argue that major classification improvements occur only when primary data, such as SRTM elevation data, are added to the dataset [63,64,79].

The lowest OA values were found for the S-1 products, which agrees with the literature [27,36,40,46,80]. SAR products have the advantage of penetrating clouds and therefore consistently imaging the Earth's surface, but they perform poorly when required to distinguish a large number of features (classes) [80,81]. In our study, this is noticeable in Table 5, in which JM and TD values were lower than 1.8 for almost all class pairs. The same limitation has been reported in local studies on the Brazilian Amazon coast, even with radar images of better quality than those of S-1 [82,83]. Discriminating a large number of classes with radar is possible only through advanced techniques, such as SAR polarimetry [84].

Maschler et al. [85] applied the RF classifier to high spatial resolution data (0.4 m, HySpex VNIR 1600 airborne data) and obtained excellent class separability across the electromagnetic spectrum. This good separability also allowed them to achieve an excellent OA of 91.7%. Similar separability and results were observed in our study. Being able to separate the classes using the training and validation samples is fundamental for producing satisfactory LULC classification results.

Among the authors who applied several ML methods (RF, SVM, and k-NN), Clerici et al. [36] considered the fusion of S-1 and S-2 data. They also applied radiometric vegetation indexes (NDVI, Sentinel-2 Red-Edge Position index (S2REP), Green Normalized Difference Vegetation Index (GNDVI), and Modified SAVI) and textural analysis of S-1 in order to interpret their contributions to supervised classification accuracy. Six classes were tested after a segmentation step that grouped pixels with similar values. Their results were considerably worse than ours. For the stacked images, they obtained an OA of 55.50% and a Kappa coefficient of 0.49, and their separate S-1 and S-2 results were also worse than our integration. They recommend SVM for their study area because it yielded higher accuracy.

Whyte et al. [27] applied the RF and SVM algorithms for LULC classification using S-1 and S-2 synergistically. They used the eCognition image processing software to produce segments and ArcGIS 10.3 for the RF and SVM classifications, and they applied derivatives of both S-1 and S-2 to test data combinations for LULC. They selected 15 classes and obtained their best results when using all products (S-1, S-2, and their derivatives) with the RF algorithm, with an OA of 83.3% and a Kappa coefficient of 0.72. Moreover, all the synergy scenarios yielded higher accuracy than optical data alone. This contrasts with our findings, since our results with derivatives were worse in almost all cases, except for the S-1 and GLCM stack.

Zhang & Xu [49] also tested the fusion of optical and radar images with multiple classifiers (RF, SVM, ANN, and Maximum Likelihood). They used Landsat TM and SPOT 5 optical images and ENVISAT ASAR/TSX SAR images. The authors found that the RF and SVM classifications gave the best values, and that fusing optical and SAR data improved the classification, increasing accuracy by 10%.

Deus [46] used ALOS/PALSAR L-band and Landsat 5 TM data synergistically and applied several vegetation indexes and SAR textural analysis. The author applied the SVM algorithm to obtain five LULC classes. The highest OA, 95%, was obtained when only the features with the best classification performance were combined, including both PALSAR and TM bands and their derivatives.

Jhonnerie et al. [86] and Pavanelli et al. [40] also used ALOS/PALSAR and the Landsat family, considering 8 and 17 LULC classes, respectively. Both studies applied the RF algorithm. The former used the ERDAS image-processing software for the RF classification, whereas the latter used the R software. They applied vegetation indexes and GLCM textural analysis. For the RF classification, both studies obtained their highest OA and Kappa coefficient with the hybrid model: 81.1% and 0.760 [86], and 82.96% and 0.81 [40].

Erinjery et al. [11] also used S-1 and S-2 synergistically, together with their derivatives, to compare two classifiers, Maximum Likelihood and RF, on a total of seven classes. For the RF classification, they obtained an OA of 83.5% and a Kappa coefficient of 0.79. They report that including SAR data and textural features in the RF classification improves classification accuracy. As in our study, S-1 alone performed poorly, with an OA below 50% when only the S-1 product was used.

Shao et al. [78] integrated S-1 SAR imagery with GaoFen optical data to apply an RF classification algorithm to six LULC classes. They also produced SAR GLCM textures and vegetation indexes. Their best result, an OA of 95.33% and a Kappa coefficient of 0.91, was obtained with all features stacked. Using S-1 only gave the worst result, with an OA of 68.80% and a Kappa coefficient of 0.35.

Haas & Ban [37] applied the SVM classification method in a data fusion analysis of S-1 and S-2. Considering 14 classes, they obtained an OA of 79.81% and a Kappa coefficient of 0.78. The authors suggested postclassification analysis to improve accuracy, since their final objective was to obtain area values for applying the benefit transfer method of Burkhard et al. [17] to evaluate the urban ecosystem services of an area. Their number of classes was similar to ours, and our accuracy was higher.

In regional [2] and global [87,88] applications of RF classification, the OA values were below ours: 75.17% [88], 63% for South America [87], and 76.64% for the Amazon LULC classification [2]. All these studies used satellite data with a temporal resolution of 16 days and a spatial resolution of 30 m. In contrast, our results were obtained with data having a revisit time of five days for optical and six days for SAR imagery, and a finer spatial resolution (10 m). We also produced a dataset with more classes (12) than the six [87] and seven [88] classes of the global studies.

5. Conclusions

In this work, the best result was obtained with the integration of S-1 and S-2 products. In general, adding the vegetation and water indexes and the SAR textural features decreased the OA and Kappa coefficient. The worst result was found for the S-1-only classification. The results largely agree with the literature. For our best classifications, the OA values were considerably higher than those reported in the literature for global and Amazon applications of RF classification.

In terms of PA, UA, and the Kappa statistic, the integration of S-1 and S-2 gave the best results with the implemented ML technique. However, the GLCM textures increased the accuracy of the SAR product, whereas the inclusion of vegetation and water indexes decreased the accuracy of the optical product, compared with the use of S-1 and S-2 alone, respectively. Lastly, the integration of all products yielded worse results than the combination of S-1 and S-2 only.

Depending on the final aim of the LULC classification, a postclassification analysis may be relevant, because many spectral responses resemble each other and can confuse the ML process. Nevertheless, in the best scenario produced, the accuracy obtained was satisfactory for several types of analysis. Furthermore, the integration of S-1 and S-2 data can be used for LULC classification in tropical regions. It is noteworthy that few studies with a similar methodology were found in the literature for the Southern Hemisphere.

In this sense, the research findings can contribute to current knowledge on urban land classification, since the methodology applied produces more accurate local data. Furthermore, the present results were obtained with a shorter revisit period and a higher number of land classes than previous global studies covering our study area. Further work with S-1 and its derived variables is needed, since long time series of optical data are difficult to obtain in tropical regions. Finally, we encourage the synergistic use of S-1 and S-2 for LULC classification whenever images acquired on close dates are available.