1. Introduction

Due to their high mobility and flexibility, unmanned aerial vehicle (UAV) systems with onboard sensors can be organized to carry out monitoring-like tasks without any human personnel on board [1]. In the past decade, we have witnessed a growing number of military and civilian applications, such as wind estimation, wildfire management, disaster monitoring, remote sensing, traffic monitoring, border surveillance, and mobile wireless coverage [2,3,4,5]. These applications often require a team of cooperating UAVs to continually track one or more moving entities [6,7,8]. For example, in a search-and-rescue task, the rescued agents should be kept under constant observation to ensure their safety. However, since the onboard sensors of UAVs have a limited sensing range and the number of UAVs may be smaller than the number of moving targets, the UAVs should cooperate in information sharing and path planning to maximize the number of targets under observation.

Along the main thread of related research, the problem of moving targets search by UAV swarms has been characterized as a special form of the Cooperative Multi-robot Observation of Multiple Moving Targets (CMOMMT) problem formalized in Ref. [9]. In the CMOMMT model, a team of homogeneous mobile robots is deployed to observe a set of targets moving within a restricted area of interest, and the goal is to keep as many targets as possible under observation by at least one robot. In traditional CMOMMT approaches, robots make decisions based on two separate factors: the targets currently under observation by a robot, and the need for coordination with other robots [9,10]. To the best of our knowledge, all previous works consider these two factors separately, so UAV operating decisions are not derived from a single, well-defined metric.

The original contribution of this paper is a novel distributed algorithm called PAMTS, which stands for Profit-driven Adaptive Moving Targets Search with UAV Swarms. In PAMTS, the search region is first partitioned into a set of equally sized cells, and each UAV maintains its own observation history map over these cells. UAVs within communication range of each other exchange their history maps to enrich their global knowledge, so that they can make better moving decisions. Decision making involves a trade-off between two intentions: the follow intention, which means keeping track of targets currently under observation, and the explore intention, which means searching recently unexplored cells to discover more targets. The weights of the two intentions are implicitly captured by the profit-of-follow (PoF) and profit-of-explore (PoE) of each cell, respectively, and the two are combined into a single metric called observation profit. Once this metric has been calculated for each cell, a UAV decides where to move by picking the destination cell with the highest observation profit.

The rest of this paper is organized as follows. In Section 2, we discuss related studies on multiple moving targets search. The problem formulation is introduced in Section 3. Section 4 describes our algorithm in detail. In Section 5, simulation results are presented. Finally, we conclude the paper in Section 6.

2. Related Studies

Moving targets search with a UAV swarm has attracted increasing research attention [11,12,13,14,15]. In essence, to effectively observe multiple moving targets, UAVs in the swarm should cooperate with each other like teammates [16,17,18].

In a broader context, this can be viewed as a special form of the Cooperative Multi-robot Observation of Multiple Moving Targets (CMOMMT) problem, which is NP-hard [9]. In CMOMMT, robots work in Track or Search mode to maximize their observation coverage of targets, and mode switching is based on the presence of targets in the field of view (FOV) of each robot. On the one hand, when a robot finds one or more targets in its FOV, it changes to Track mode and moves toward the center of mass of all the detected targets. On the other hand, when a robot is not monitoring any target, it switches to Search mode to seek a new target. The local force vectors introduced in Refs. [9,10] model a robot as being attracted by nearby targets and repelled by nearby robots. The calculation of the local force vectors is shown in Figure 1. To reduce overlapping observations of the same target, Parker [10] extends the initial work with a new approach called A-CMOMMT, which is based on weighted local force vectors. In Refs. [19,20], the authors propose a behavioral solution, B-CMOMMT, which adds a Help mode of operation to reduce the risk of losing a target: a robot that is about to lose a target broadcasts a help request to other robots, and robots that receive the request respond by approaching the requester. Furthermore, personality CMOMMT (PCMOMMT) [21] uses information entropy to balance the conflict between individual benefit and collective benefit. More recently, C-CMOMMT [22] proposes a contribution-based approach in which each robot is assigned a contribution value derived from the number of targets assigned to it. Robots with low contribution receive strengthened repulsive forces from all other robots, and robots with high contribution receive weakened attractive forces from low-weighted targets.
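The mode switching and local force vectors described above can be illustrated with a minimal sketch of one decision step of a force-based CMOMMT robot. The force profiles are assumptions chosen for simplicity (attraction growing linearly with distance inside the sensing range, repulsion fading linearly to zero at a hypothetical `repel_radius`); the actual profiles of Refs. [9,10] are the ones depicted in Figure 1, and all function names here are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def attraction(d: float, sense_range: float) -> float:
    """Assumed attraction magnitude: grows linearly with distance inside the
    sensing range, so targets near the FOV edge pull harder; zero outside."""
    return d / sense_range if d <= sense_range else 0.0


def repulsion(d: float, repel_radius: float) -> float:
    """Assumed repulsion magnitude: 1 at zero distance, fading linearly to 0
    at repel_radius."""
    return 1.0 - d / repel_radius if d < repel_radius else 0.0


def force_step(me: Point, targets: List[Point], robots: List[Point],
               sense_range: float, repel_radius: float) -> Tuple[str, Point]:
    """Return the robot's mode and the resultant local force vector.
    `robots` lists the positions of the other robots (not this one)."""
    visible = [p for p in targets if math.dist(me, p) <= sense_range]
    mode = "Track" if visible else "Search"
    fx = fy = 0.0
    for p in visible:  # attraction toward each visible target
        d = math.dist(me, p)
        if d > 0:
            m = attraction(d, sense_range)
            fx += m * (p[0] - me[0]) / d
            fy += m * (p[1] - me[1]) / d
    for q in robots:  # repulsion away from each nearby teammate
        d = math.dist(me, q)
        if d > 0:
            m = repulsion(d, repel_radius)
            fx -= m * (q[0] - me[0]) / d
            fy -= m * (q[1] - me[1]) / d
    return mode, (fx, fy)
```

In this sketch the robot would then take a bounded step along the returned vector; in Search mode, with no visible targets, only the repulsion from nearby robots remains, which spreads the robots apart.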

Besides local force vectors, other techniques have also been investigated. For example, model-predictive control strategies are applied to CMOMMT in Ref. [23], but they incur a much higher computational complexity. The authors in Ref. [24] extend the conventional CMOMMT problem, which assumes a fixed altitude or a fixed FOV size, to multi-scale observations by a multi-MAV system with noisy sensors. The authors in Ref. [25] replace local force vectors with a tracking algorithm based on unsupervised extended Kohonen maps. In Refs. [26,27], the authors present a novel optimization model for CMOMMT scenarios that adds fairness of observation among different targets as an additional objective. The authors in Ref. [28] extend the conventional CMOMMT problem to the case of a limited sensing range and un-directional moving targets. In Ref. [29], the authors combine multi-hop clustering with a dual-pheromone ant-colony model to optimize target detection and tracking. The authors in Ref. [30] use Mixed Integer Linear Programming (MILP) to arrange UAVs for city-scale video monitoring of a set of Points of Interest (PoI).

Since the above algorithms consider the impact of moving targets and that of collaborating UAVs separately, they do not provide a unified framework in which each UAV can trade off target searching against target tracking. In our earlier conference paper [31], we presented our initial efforts to make trade-offs between target searching and tracking in a single framework. In this work, we extend this framework with more comprehensive investigations and experiments. By characterizing each cell with a time-varying observation profit, the impact of moving targets and that of collaborating UAVs are both considered in a unified framework. With this framework, a profit-driven algorithm that makes moving decisions for each UAV can be designed conveniently by picking the observation cells with the best observation profit.

3. Problem Formulation

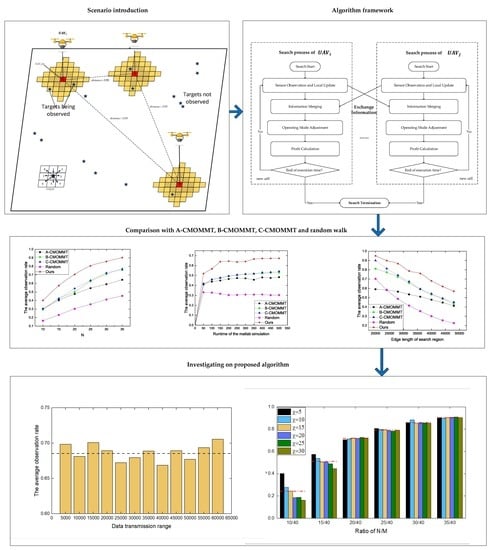

Figure 2 illustrates the problem of Cooperative Multi-UAV Observation of Multiple Moving Targets; its main concepts and terms are introduced as follows.

Search Region. The search region is a bounded 2D area partitioned into C equally sized cells, and each UAV determines the cell it is located in using its onboard GPS sensor.

Time Steps. Time is discretized into steps indexed by t; within each time step, every UAV performs one move-and-observe action.

Targets. The mission is to observe a set of M moving targets in the search region. For target j, let $CT_j^t$ denote the Cell of Target j at time step t. We assume that targets move more slowly than UAVs. More specifically, from time step t to time step t+1, each target either moves into one of the eight neighbors of its current cell or stays in its current cell.
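The target motion model above can be sketched as follows, assuming a rectangular grid of `width` by `height` cells indexed by integer (x, y) coordinates. Choosing uniformly at random among the allowed moves is an assumption for illustration only; the formulation constrains which cells a target may occupy next, not how it chooses among them. Both function names are hypothetical.

```python
import random
from typing import List, Tuple

Cell = Tuple[int, int]


def target_moves(cell: Cell, width: int, height: int) -> List[Cell]:
    """Cells a target may occupy at t+1: its current cell or one of its
    eight neighbors, clipped to the boundaries of the search region."""
    x, y = cell
    return [(x + dx, y + dy)
            for dx in (-1, 0, 1)
            for dy in (-1, 0, 1)
            if 0 <= x + dx < width and 0 <= y + dy < height]


def random_target_step(cell: Cell, width: int, height: int,
                       rng: random.Random) -> Cell:
    """Hypothetical mobility model: pick one allowed move uniformly at random."""
    return rng.choice(target_moves(cell, width, height))


if __name__ == "__main__":
    rng = random.Random(0)
    pos = (0, 0)
    for _ in range(5):
        pos = random_target_step(pos, 10, 10, rng)
    print(pos)
```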

UAVs. A set of N UAVs is deployed in the search region to observe the M targets (we assume $N < M$). Let $CU_i^t$ denote the Cell of UAV i at time step t. With the help of onboard devices, UAVs can act as a team, sharing information and coordinating with each other for more efficient target searching. Each UAV is equipped with the following devices:

A GPS sensor to provide geographical location for the UAV;

An omnidirectional sensor for target observation. All UAVs have the same sensing range, and the field of view (FOV) of UAV i at time step t is a set of contiguous cells centered at the cell of UAV i;

A wireless network interface for communication with other UAVs. We assume that the data transmission range is larger than the sensing range of the UAVs;

An onboard computing unit that executes the search algorithm.
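One way to compute the FOV of a UAV as a set of cells is sketched below. It assumes, for illustration only, that the sensing range is measured in cells and that a cell belongs to the FOV when its center lies within Euclidean distance `sense_range` of the center of the UAV's cell; `fov_cells` is a hypothetical helper name.

```python
from typing import Set, Tuple

Cell = Tuple[int, int]


def fov_cells(center: Cell, sense_range: int, width: int, height: int) -> Set[Cell]:
    """Cells whose centers lie within Euclidean distance sense_range of the
    UAV's cell, clipped to the width-by-height search region."""
    cx, cy = center
    cells: Set[Cell] = set()
    for x in range(max(0, cx - sense_range), min(width, cx + sense_range + 1)):
        for y in range(max(0, cy - sense_range), min(height, cy + sense_range + 1)):
            if (x - cx) ** 2 + (y - cy) ** 2 <= sense_range ** 2:
                cells.add((x, y))
    return cells
```

With `sense_range = 2`, a UAV away from the region boundary covers 13 cells under this assumption; near a border or corner, clipping reduces the count.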

Observation History Map. Each UAV maintains an observation history map that records two pieces of information: (1) the last observation time of each cell c, stored as a timestamp, and (2) the locations of observed targets, if any. At each time step, UAV i observes the cells in its FOV, and the corresponding entries of its observation history map are updated with the latest observation. Besides local observations, the exchange of observation history maps among UAVs also triggers updates to the local map of each UAV. One benefit is that UAV i can avoid wasting effort on observing a subarea that other UAVs have just observed without discovering any target.
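The observation history map and its local update can be captured by a small data structure. The sketch below is an assumption-based illustration rather than the authors' implementation: cells are (x, y) pairs on a `width` by `height` grid, `None` marks a cell that has never been observed, and targets are identified by hypothetical integer ids.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, Optional, Tuple

Cell = Tuple[int, int]


@dataclass
class ObservationHistoryMap:
    """Per-UAV map: last observation time of every cell plus known target cells."""
    width: int
    height: int
    # Last observation time of each cell; None means "never observed".
    last_seen: Dict[Cell, Optional[int]] = field(default_factory=dict)
    # Latest known cell of each observed target, keyed by a hypothetical id.
    targets: Dict[int, Cell] = field(default_factory=dict)

    def __post_init__(self) -> None:
        for x in range(self.width):
            for y in range(self.height):
                self.last_seen.setdefault((x, y), None)

    def local_update(self, t: int, fov: Iterable[Cell],
                     detected: Dict[int, Cell]) -> None:
        """Stamp every FOV cell with time t and record the detected targets."""
        for c in fov:
            self.last_seen[c] = t
        self.targets.update(detected)
```

A UAV that sweeps a subarea without detecting anything still stamps those cells, which is the information that later lets teammates skip them once the maps are exchanged.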

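Objective. A target j can be monitored by more than one UAV at time step t. We define the observation state of target j at time step t, denoted $o_j^t$, as

$$
o_j^t = \begin{cases} 1, & \text{if } CT_j^t \in FOV_i^t \text{ for at least one UAV } i \in \{1, \dots, N\}, \\ 0, & \text{otherwise}, \end{cases} \tag{1}
$$

where $CT_j^t$ is the cell of target j and $FOV_i^t$ is the set of cells in the FOV of UAV i at time step t. That is, $o_j^t = 1$ means that target j is observed by at least one UAV at time t, and $o_j^t = 0$ otherwise.

The objective of this work is to develop an algorithm that maximizes the average observation rate of the M targets over a period of T time steps, characterized by the metric $\bar{O}$:

$$
\bar{O} = \frac{1}{M T} \sum_{t=1}^{T} \sum_{j=1}^{M} o_j^t. \tag{2}
$$

Ideally, $\bar{O}$ reaches its maximum value 1 when $o_j^t = 1$ for every target j at every time step t. However, as given by Formula (1), $o_j^t$ is constrained by the locations of the targets as well as the FOVs of the UAVs; therefore, it is unrealistic to achieve $o_j^t = 1$ for every target j at every time step t. In other words, Formula (1) serves as a constraint on the objective function in Formula (2), and our task is to maximize the number of cases in which $o_j^t = 1$.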
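Under the definitions above, a target counts as observed at step t if its cell lies in the FOV of at least one UAV, and the metric averages this indicator over all M targets and T time steps. The sketch below computes the metric from logged simulation data; the input layout (`target_cells[t][j]`, `fovs[t][i]`) and the function names are hypothetical choices.

```python
from typing import List, Set, Tuple

Cell = Tuple[int, int]


def observation_state(target_cell: Cell, fovs: List[Set[Cell]]) -> int:
    """1 if the target's cell is inside the FOV of at least one UAV, else 0."""
    return 1 if any(target_cell in fov for fov in fovs) else 0


def average_observation_rate(target_cells: List[List[Cell]],
                             fovs: List[List[Set[Cell]]]) -> float:
    """target_cells[t][j]: cell of target j at step t;
    fovs[t][i]: set of cells in the FOV of UAV i at step t."""
    T = len(target_cells)
    M = len(target_cells[0])
    observed = sum(observation_state(target_cells[t][j], fovs[t])
                   for t in range(T) for j in range(M))
    return observed / (M * T)


if __name__ == "__main__":
    cells = [[(0, 0), (5, 5)], [(1, 1), (5, 5)]]
    views = [[{(0, 0)}], [{(1, 1)}, {(5, 5)}]]
    print(average_observation_rate(cells, views))  # 0.75
```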

For ease of reference, the notations introduced above, along with additional notations used in subsequent sections, are summarized in Table A1 in the Appendix of this paper.

4. Algorithm

We formulate our algorithm for the CMOMMT problem as follows. First, the operation of each UAV is characterized as a trade-off between two intentions: the follow intention, which keeps track of targets currently under observation, and the explore intention, which searches recently unexplored cells to discover more targets. Depending on its recent observation history, a UAV adjusts the weights of the two intentions, which are captured by the unified observation profits of the cells. Intuitively, a cell with a higher profit means that the area surrounding it deserves observation more than that of a cell with a lower profit in terms of the follow/explore intentions; the detailed definition and computation of observation profits are given in Section 4.4. Driven by this metric, UAVs move towards cells with higher profits. The high-level framework is shown in Figure 3 and consists of five components: (i) sensor observation and local update; (ii) information merging; (iii) operating mode adjustment; (iv) profit calculation; and (v) path planning. We elaborate on each of these components as follows.

4.1. Sensor Observation and Local Update

At each time step t, each UAV observes the targets within its FOV and updates its observation history map, including the observation times of cells and the positions of the observed targets. After the local update, each UAV broadcasts its observation history map to other UAVs and receives the broadcasts of its teammates, which triggers the information merging process.

4.2. Information Merging

We assume that information exchange is restricted to UAVs within each other's data transmission range. Therefore, the observation history maps may not be consistent across all UAVs. Based on the distances among UAVs, the UAV swarm is divided into several subteams, and UAVs in the same subteam share their information. We enforce the following rules for information merging. First, when a target j is observed by more than one UAV at time t, we designate the closest of these UAVs as its tracker. The tracker takes responsibility for following target j in the next time step, and this responsibility is reflected in its observation profit calculation. Other UAVs in the subteam that also observed target j then delete its position information, so that their decisions are not affected by this target.

Second, when the timestamp of a cell received in a broadcast is newer than the one in the local observation history map, the local timestamp is updated accordingly. Therefore, after information merging, the observation history map of each UAV contains two pieces of information: (1) the observation timestamps of cells, and (2) the targets that the UAV should track in the next time step, together with their positions.

In addition to the observation history map, each UAV i maintains an observation summary derived from information merging, which includes the following items:

The number of targets that UAV i should track at time t;

The number of UAVs in the subteam containing UAV i at time t;

The total number of targets observed at time t by UAV i and the teammates that have exchanged information with it. Note that this number may be smaller than the total number of targets observed by the whole UAV swarm, because observations are not exchanged between UAVs beyond each other's communication range.
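The two merging rules and the resulting observation summary can be sketched as follows. The data layout is assumed: timestamps are a dict from cell to last observation time (`None` if never observed), `sightings[i]` maps the target ids observed by UAV i to their cells, and distances are Euclidean between cell coordinates. Ties in the closest-UAV rule are broken in favor of the UAV encountered first, an arbitrary choice that the text does not specify. All names are hypothetical.

```python
import math
from typing import Dict, Optional, Tuple

Cell = Tuple[int, int]


def merge_timestamps(local: Dict[Cell, Optional[int]],
                     received: Dict[Cell, Optional[int]]) -> None:
    """Rule 2: keep the newer observation time of every cell (None = never)."""
    for c, ts in received.items():
        if ts is not None and (local.get(c) is None or ts > local[c]):
            local[c] = ts


def assign_trackers(uav_cells: Dict[int, Cell],
                    sightings: Dict[int, Dict[int, Cell]]) -> Dict[int, int]:
    """Rule 1: a target observed by several UAVs of the subteam is tracked by
    the closest one. sightings[i] maps target id -> cell seen by UAV i."""
    tracker: Dict[int, int] = {}
    for i, seen in sightings.items():
        for j, cell in seen.items():
            d = math.dist(uav_cells[i], cell)
            if j not in tracker or d < math.dist(uav_cells[tracker[j]], cell):
                tracker[j] = i
    return tracker


def observation_summary(i: int, tracker: Dict[int, int],
                        team_size: int) -> Tuple[int, int, int]:
    """(targets UAV i should track, UAVs in its subteam,
    targets observed by the subteam)."""
    n_track = sum(1 for owner in tracker.values() if owner == i)
    return n_track, team_size, len(tracker)
```

Non-tracker UAVs would then drop every target whose assigned tracker is not themselves, which corresponds to the deletion step in the first rule.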

4.3. Operating Mode Adjustment

In conventional CMOMMT search strategies, when a UAV finds one or more targets in its FOV, it moves toward the virtual center of the observed targets to follow them; otherwise, it performs an explore action on unobserved cells to try to find targets. However, when a small number of UAVs are deployed to observe a larger number of targets, a UAV may need to take on the responsibilities of both following and exploring whenever the number of targets it observes is below a threshold, e.g., $M/N$. Otherwise, if each UAV is dedicated to following a single target, no UAV will spend any effort on discovering the remaining unobserved targets ($M - N$ targets in the worst case).

Instead of switching between follow and explore actions, our framework enables a more flexible adjustment of operating modes. It treats follow and explore as intentions rather than actions, and balances the degrees of the two intentions based on the observation histories of UAVs. Intuitively, a UAV currently observing more targets has a stronger follow intention, which drives it toward cells containing the observed targets. Conversely, a UAV observing very few targets has a stronger explore intention and moves toward areas with more cells that neither it nor its teammates have observed recently.

To make this trade-off quantitative, we introduce two parameters that characterize the follow intention and the explore intention, respectively. Their values depend on two levels of information: the number of targets observed locally by a UAV, and the number of targets observed globally by all UAVs. We take the global level into account because each UAV has a greater responsibility to explore unobserved cells if only a small number of targets are observed by the whole UAV swarm. This means that a UAV observing a given number of targets may spend different amounts of effort on exploring, depending on the total number of targets observed by the whole team.

Table 1 gives details on how the local parameters are decided for each UAV at a specific time t. Since the overall search system is discretized in both time and space, the parameter values take discrete levels as well. In particular, we divide the number of targets observed by a UAV into five levels. If the number of targets observed by UAV i is above $M/N$ (the average number of targets that each UAV should observe), the follow parameter takes its maximum value and the explore parameter is 0. This means that UAV i devotes its entire effort to following the observed targets, which corresponds to level 1 in Table 1. In essence, the fewer targets a UAV observes, the smaller the follow parameter and the larger the explore parameter assigned to it.

At the global level, we use the merged information to adjust the efforts spent on follow and explore, respectively. In particular, we use the total number of targets observed by UAV i and its teammates to adjust its search effort. To reflect the quality of its knowledge about observed targets, the number of UAVs in its subteam is also included to calibrate the calculation. The concrete formulas for the global-level parameters are given as follows:

By combining the parameters at both the local and global levels, the final follow and explore parameters are calculated as follows:

4.4. Profit Calculation

As described earlier, unlike existing techniques that consider UAV-target and UAV-UAV relationships separately, our work provides a single framework that accounts for the impact of both. For this purpose, we introduce a key metric called observation profit, which unifies the follow and explore parameters. This metric is associated not with individual UAVs or targets but with the cells to be observed, and UAVs move toward nearby cells with higher observation profits. The observation profit of each cell is calculated from two aspects: the profit of follow (PoF) and the profit of explore (PoE).

Calculation of PoF. In the CMOMMT problem, due to the high mobility of targets and UAVs, an observed target may escape from a UAV's FOV in the next time step. Thus, targets at different locations contribute different PoF values to the observing UAV. Intuitively, a target in the middle of the UAV's FOV contributes a higher PoF than a target near the edge, as the former has a lower chance of escaping observation.

Figure 4 presents the concrete method for calculating the PoF contribution of a target according to its distance from the UAV. Within an inner distance threshold, targets have no chance to escape observation in the next time step, even in the worst case where the UAV and the target move apart at their maximum speeds. Targets whose distance from the UAV lies between this inner threshold and an outer threshold have increasing chances to escape observation in the next time step, and targets beyond the outer threshold have no chance to be observed; thus, they make no contribution to PoF.

Consider the subset of cells to which UAV i can move in one time step, i.e., the area surrounding its current cell. For each potential destination c in this subset, the PoF value is the sum of the PoF contributions of the targets within the outer distance threshold of c (Figure 4). A higher PoF value for cell c means that UAV i can observe more targets if it moves to c at time t+1. The detailed PoF calculation is described, together with the calculation of another metric, PoE, in Algorithm 1.
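A minimal PoF sketch follows. The exact contribution curve is the one in Figure 4, which is not reproduced here; this sketch assumes, for illustration, a contribution of 1 within the inner threshold `d1`, a linear decrease to 0 at the outer threshold `d2`, and Euclidean distances between cell coordinates. `tracked` stands for the cells of the targets that remain assigned to UAV i after information merging; all names are hypothetical.

```python
import math
from typing import Dict, Iterable, Tuple

Cell = Tuple[int, int]


def pof_contribution(d: float, d1: float, d2: float) -> float:
    """Assumed curve: 1 inside d1 (cannot escape), linear decay to 0 at d2."""
    if d <= d1:
        return 1.0
    if d >= d2:
        return 0.0
    return (d2 - d) / (d2 - d1)


def pof(dest: Cell, tracked: Iterable[Cell], d1: float, d2: float) -> float:
    """PoF of a destination cell: summed contributions of tracked targets."""
    return sum(pof_contribution(math.dist(dest, c), d1, d2) for c in tracked)


def pof_map(reachable: Iterable[Cell], tracked: Iterable[Cell],
            d1: float, d2: float) -> Dict[Cell, float]:
    """PoF for every cell the UAV can reach in one time step."""
    tracked = list(tracked)
    return {c: pof(c, tracked, d1, d2) for c in reachable}
```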

Algorithm 1: PoF and PoE Calculation

Input:

(1) Parameters of the search mission (M, N, etc.);

(2) Observation history map of each UAV (described in Section 3);

(3) Observation summary of each UAV (described in Section 4.2).

Calculation of PoE. In addition to tracking observed targets, another task of UAVs is to discover targets that are not currently observed. Intuitively, a cell that has never been observed should have the highest exploration value, 1, while a cell that has just been observed need not be observed again immediately and thus has an exploration value of 0. As time elapses, the chance that a target has moved into the observed cell increases, and the exploration value of the cell should gradually recover to 1. Since UAV i only knows the last observation time of cell c recorded in its own observation history map, which might be outdated, we define the exploration value of cell c at time t from the viewpoint of UAV i, calculated as follows:

Here, a window parameter decides the number of time steps over which the exploration value of a cell recovers from 0 to 1. This reflects the fact that the longer the time elapsed since the last observation of a cell, the more likely it is that a target has moved into this cell, and thus the higher the exploration value of this cell.

With the exploration values of individual cells defined above, we calculate the profit of explore (PoE) of a destination cell c for UAV i at time t+1 by summing up the exploration values of the cells around c. The higher the PoE of c, the more cells around it deserve exploration by the UAV. Note that these surrounding cells correspond to the FOV of UAV i, assuming it is flying above c. Algorithm 1 gives the details of the PoE calculation, together with the PoF calculation discussed earlier.
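A sketch of the exploration value and PoE is given below. Because the exact recovery formula is not reproduced here, the sketch assumes a linear recovery from 0 to 1 over `window` time steps, with never-observed cells fixed at 1, and approximates the FOV around a destination cell as the cells whose centers lie within `sense_range` of it. Both choices, and all names, are assumptions; `t` is the time at which the UAV would be above `dest`.

```python
from typing import Dict, Optional, Tuple

Cell = Tuple[int, int]


def exploration_value(t: int, last_seen: Optional[int], window: int) -> float:
    """Assumed linear recovery: 0 right after an observation, 1 after
    `window` steps; cells never observed are worth 1."""
    if last_seen is None:
        return 1.0
    return min(1.0, max(0.0, (t - last_seen) / window))


def poe(dest: Cell, t: int, last_seen: Dict[Cell, Optional[int]],
        sense_range: int, window: int) -> float:
    """Sum of exploration values over the cells a UAV above `dest` would see.
    Cells missing from `last_seen` are treated as outside the search region."""
    cx, cy = dest
    total = 0.0
    for dx in range(-sense_range, sense_range + 1):
        for dy in range(-sense_range, sense_range + 1):
            c = (cx + dx, cy + dy)
            if dx * dx + dy * dy <= sense_range ** 2 and c in last_seen:
                total += exploration_value(t, last_seen[c], window)
    return total
```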

Calculation of observation profit. With PoF and PoE calculated, we propose an objective function that calculates the observation profit of each cell c in the subset of cells reachable by UAV i as follows:

In this formula, the trade-off between follow and explore is calibrated by the follow and explore parameters calculated in the operating mode adjustment step.
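The observation profit formula is not reproduced here; the simplest reading of a trade-off "calibrated by the two parameters" is a weighted sum, which the sketch below assumes. `w_follow` and `w_explore` are hypothetical names for the follow and explore parameters, and `pof` and `poe` are assumed to be keyed by the same reachable cells.

```python
from typing import Dict, Tuple

Cell = Tuple[int, int]


def observation_profit(pof: Dict[Cell, float], poe: Dict[Cell, float],
                       w_follow: float, w_explore: float) -> Dict[Cell, float]:
    """Assumed weighted-sum combination of PoF and PoE for each candidate cell."""
    return {c: w_follow * pof[c] + w_explore * poe[c] for c in pof}
```

Since PoF sums over targets while PoE sums over the cells of a FOV, the two terms can live on different scales; normalizing them before weighting is one possible variant, not something stated in the text.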

4.5. Path Planning

Path planning decides a destination cell for UAV i at time t, to which UAV i will fly at time t+1 to make observations. Since the observation profit has already been calculated for each cell that UAV i can reach in one time step, the decision simply consists of choosing the cell with the maximum observation profit.
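Path planning then reduces to an argmax over the reachable cells. In the sketch below, the reachable set is assumed to be all cells within Chebyshev distance `v` of the current cell (consistent with grid moves in eight directions), and ties are broken by preferring to stay in place and then by cell order; both rules and the function names are assumptions.

```python
from typing import Dict, List, Tuple

Cell = Tuple[int, int]


def reachable_cells(cell: Cell, v: int, width: int, height: int) -> List[Cell]:
    """Cells within Chebyshev distance v of `cell` (assumed one-step reach),
    clipped to the search region."""
    x, y = cell
    return [(x + dx, y + dy)
            for dx in range(-v, v + 1)
            for dy in range(-v, v + 1)
            if 0 <= x + dx < width and 0 <= y + dy < height]


def choose_destination(profit: Dict[Cell, float], current: Cell) -> Cell:
    """Return the reachable cell with the highest observation profit.
    Ties: stay in place if possible, else the smallest (x, y) cell."""
    best = max(profit.values())
    candidates = sorted(c for c, p in profit.items() if p == best)
    return current if current in candidates else candidates[0]
```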

4.6. Complexity Analysis

For a given UAV i in cell c at time step t, assuming that the maximum velocity of a UAV is v, it is easy to determine the maximum number of cells in the subset of cells reachable in one time step as a function of v. Similarly, the maximum number of cells in the FOV is a function of the sensing range. According to the proposed algorithm, the computational complexity at each UAV is listed in Table 2.
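To give a feel for the per-step cost, the sketch below counts basic operations under two illustrative assumptions: the reachable set is the (2v+1)-by-(2v+1) square of cells around the UAV, and the FOV is the set of cells within Euclidean distance r of its center. These counts are not the entries of Table 2; they only show how the work grows with v, r, and the number of tracked targets. Function names are hypothetical.

```python
def disk_size(r: int) -> int:
    """Number of cells whose centers lie within distance r (no boundary clipping)."""
    return sum(1 for dx in range(-r, r + 1) for dy in range(-r, r + 1)
               if dx * dx + dy * dy <= r * r)


def per_step_cost(v: int, r: int, tracked: int) -> dict:
    """Operation counts for one decision step of one UAV (assumed model)."""
    reachable = (2 * v + 1) ** 2
    fov = disk_size(r)
    return {
        "reachable": reachable,
        "fov": fov,
        "pof_ops": reachable * tracked,  # one contribution per (destination, target)
        "poe_ops": reachable * fov,      # one lookup per (destination, FOV cell)
        "argmax_ops": reachable,         # one comparison per destination
    }


if __name__ == "__main__":
    print(per_step_cost(v=1, r=2, tracked=3))
```

For v = 1, r = 2, and 3 tracked targets, this gives 9 reachable cells, 13 FOV cells, 27 PoF contributions, and 117 exploration-value lookups per step, so under these assumptions the PoE term dominates.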

As for space complexity, each UAV maintains an observation history map and an observation summary. As mentioned in Section 4.2, the observation summary stores only three numbers, so it consumes negligible memory. As described in Section 3, the observation history map of each UAV contains two sets of information: the observation timestamps consume $O(C)$ memory, and the locations of observed targets consume $O(M)$ memory. Therefore, the total memory consumption at each UAV is $O(C + M)$.