Improved Correlation Filter Tracking with Enhanced Features and Adaptive Kalman Filter

Abstract

1. Introduction

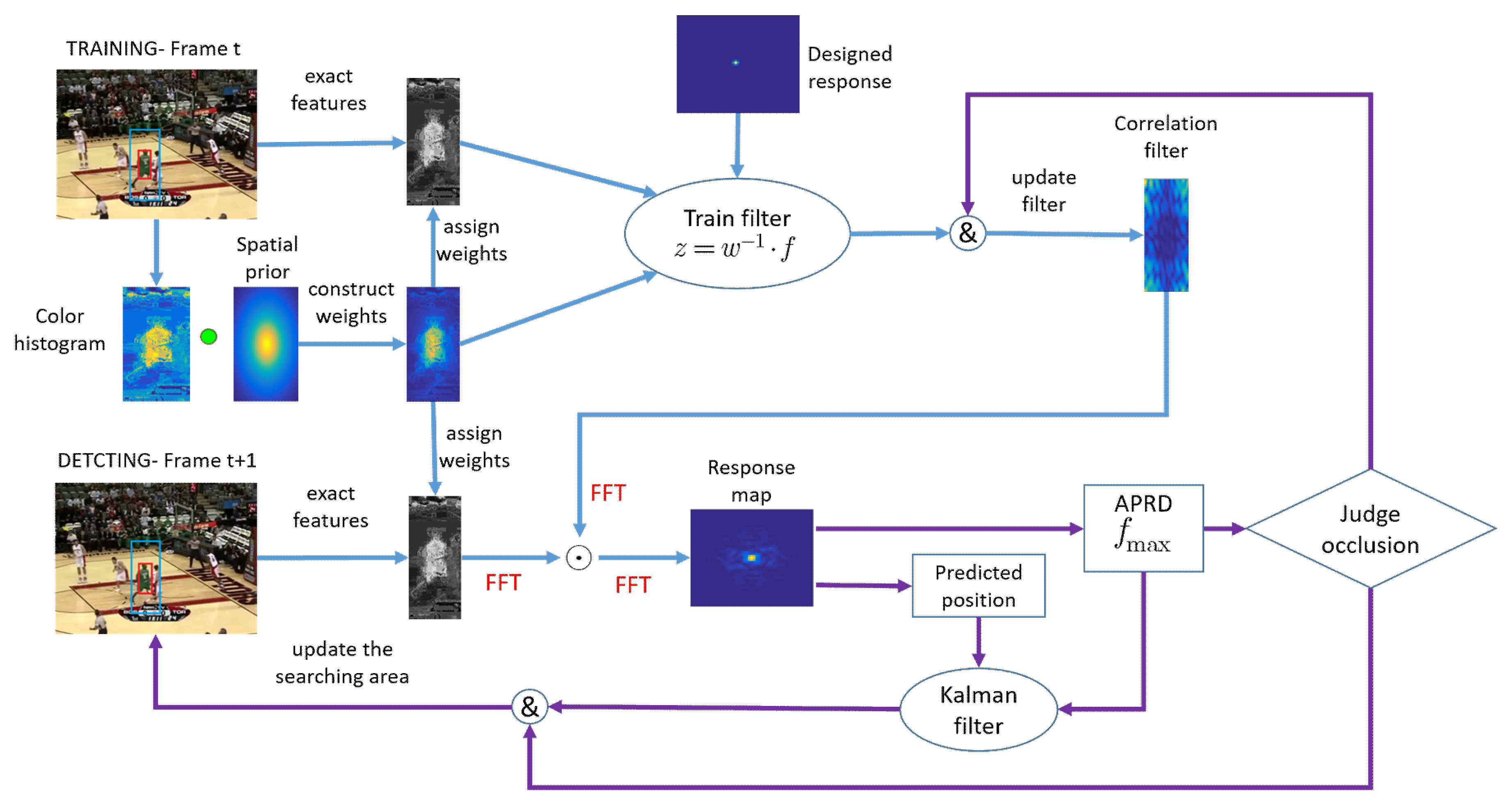

- (1)

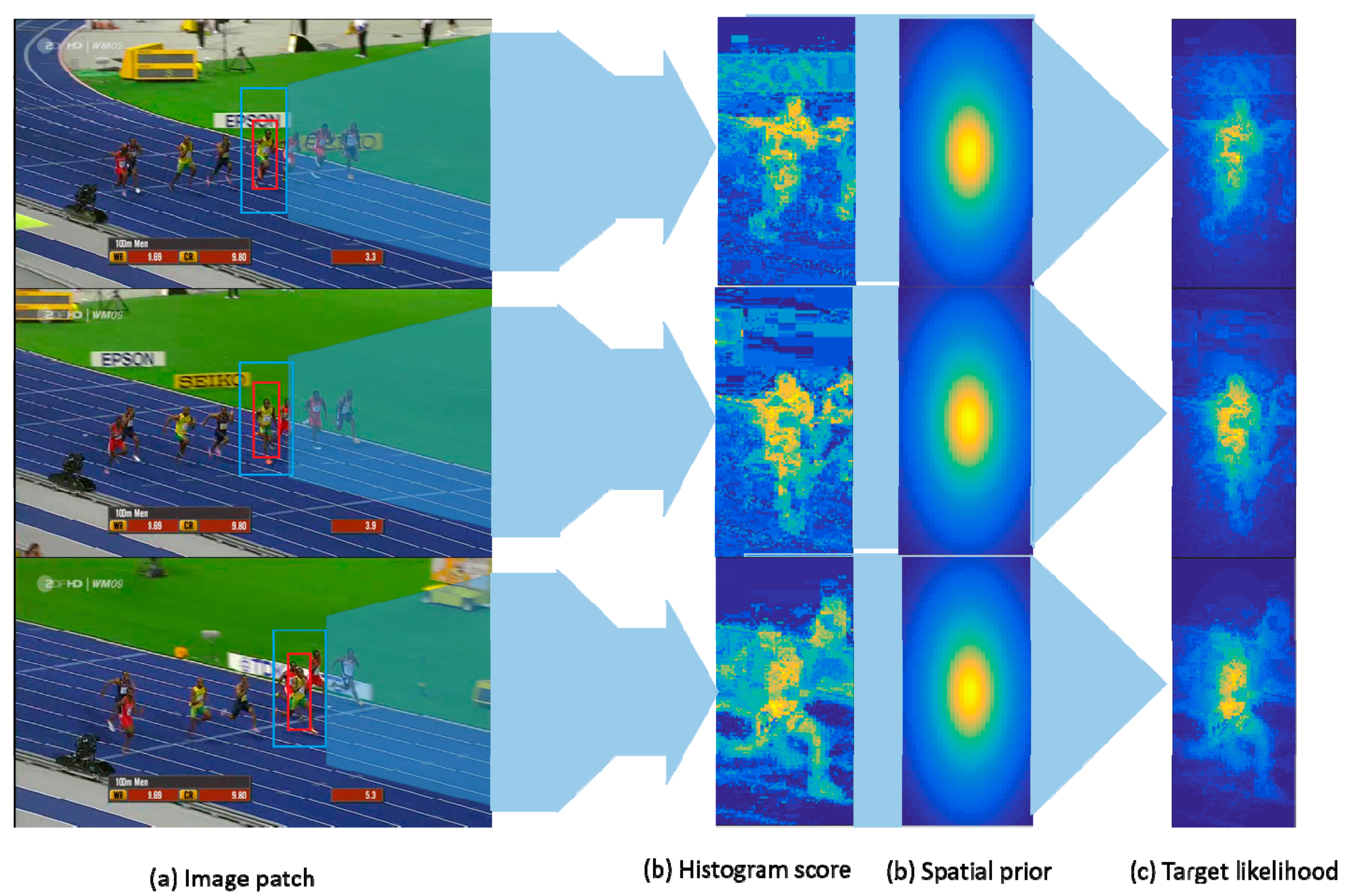

- In order to solve the problem of insufficient expression of texture features (like HOG [18]), a spatial-adaptive weight is constructed by the color-histogram and spatial-prior function, which can increase the discrimination of the texture features between target and background-distractors.

- (2) Building on the correlation filter framework, a feature-enhanced correlation filter tracker (FEDCF) is proposed. The tracker combines the spatially adaptive weights with the correlation filter at the feature level, which enhances target features while suppressing background-distractor features and thus yields a more discriminative filter. The Gauss-Seidel iteration [13] is applied to solve the objective function efficiently.

- (3)

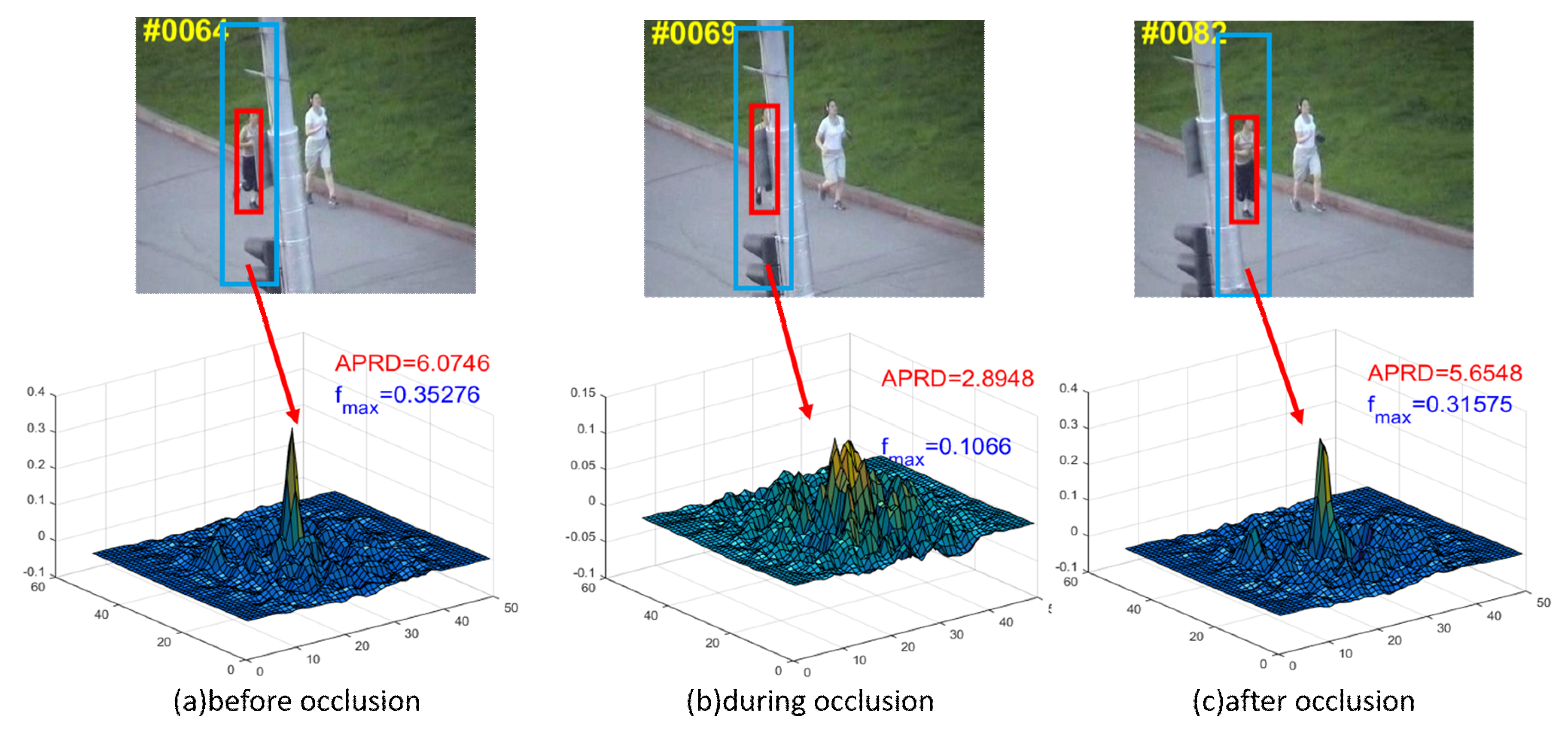

- For target redetection under occlusion, the APRD parameter is proposed, which can be used to reflect the degree of target occlusion and decide model updating. An adaptive Kalman filter based on APRD is proposed to update search area.

- (4) The proposed FEDCF was compared with state-of-the-art trackers on two standard benchmarks, OTB2013 [19] (Figures S1–S24) and OTB2015 [20] (Figures S25–S48). It runs at approximately 5 fps and achieves the top overall rank in terms of accuracy and robustness.
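Contribution (1) can be illustrated with a minimal sketch. It assumes a DAT-style color model in the spirit of Possegger et al. (per-pixel object likelihood from foreground and background color histograms), a hypothetical Gaussian spatial prior, and a feature map already resampled to the patch resolution. The bin count, the Gaussian width `sigma_frac` and the foreground/background split are illustrative assumptions, not the paper's equations (2) and (4).

```python
import numpy as np


def color_bin_index(patch: np.ndarray, bins_per_channel: int = 16) -> np.ndarray:
    """Map every RGB pixel of an (H, W, 3) uint8 patch to one joint-histogram bin."""
    q = (patch.astype(np.int64) * bins_per_channel) // 256  # per-channel bin in [0, bins)
    return (q[..., 0] * bins_per_channel + q[..., 1]) * bins_per_channel + q[..., 2]


def object_likelihood(patch, box, bins_per_channel=16, eps=1e-6):
    """Per-pixel P(object | color) = foreground count / (foreground + background count).

    box = (x0, y0, x1, y1) marks the target; every other pixel in the patch is
    treated as background (an assumption, not necessarily the paper's regions).
    """
    idx = color_bin_index(patch, bins_per_channel)
    n_bins = bins_per_channel ** 3
    x0, y0, x1, y1 = box
    fg = np.zeros(idx.shape, dtype=bool)
    fg[y0:y1, x0:x1] = True
    h_fg = np.bincount(idx[fg], minlength=n_bins).astype(float)
    h_bg = np.bincount(idx[~fg], minlength=n_bins).astype(float)
    return (h_fg / (h_fg + h_bg + eps))[idx]


def gaussian_prior(shape, sigma_frac=0.25):
    """Hypothetical spatial prior: a Gaussian centred on the patch."""
    h, w = shape
    yy, xx = np.meshgrid(np.arange(h) - (h - 1) / 2, np.arange(w) - (w - 1) / 2, indexing="ij")
    return np.exp(-0.5 * ((yy / (sigma_frac * h)) ** 2 + (xx / (sigma_frac * w)) ** 2))


def adaptive_weight(patch, box):
    """Spatially adaptive weight = color likelihood x spatial prior, scaled to [0, 1]."""
    w = object_likelihood(patch, box) * gaussian_prior(patch.shape[:2])
    return w / (w.max() + 1e-12)


def enhance_features(features, weight):
    """Weight an (H, W, C) feature map; assumes it has the same H x W as the weight."""
    return features * weight[..., None]


if __name__ == "__main__":
    patch = np.zeros((40, 40, 3), dtype=np.uint8)
    patch[..., 2] = 255                 # blue background
    patch[10:30, 10:30] = (255, 0, 0)   # red target
    w = adaptive_weight(patch, (10, 10, 30, 30))
    print(round(float(w[20, 20]), 3), float(w[0, 0]))
```

In this toy patch, background-colored pixels receive zero weight and the weight decays away from the centre, so multiplying HOG-like channels by it suppresses background-distractor responses before the filter is trained.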

2. Related Works

2.1. Correlation Filter Based Trackers
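Correlation filter trackers such as MOSSE (Bolme et al.) learn the filter in closed form in the Fourier domain. The sketch below is a minimal single-channel version, assuming a grayscale patch, a Gaussian label of width `sigma` and an arbitrarily chosen regularizer `lam`. It is a baseline illustration only, not the FEDCF filter, which adds spatially adaptive feature weights and is solved iteratively.

```python
import numpy as np


def gaussian_label(shape, sigma=2.0):
    """Desired correlation output: a Gaussian peak at the patch centre (h // 2, w // 2)."""
    h, w = shape
    yy, xx = np.meshgrid(np.arange(h) - h // 2, np.arange(w) - w // 2, indexing="ij")
    return np.exp(-0.5 * (yy ** 2 + xx ** 2) / sigma ** 2)


def train_filter(patch, label, lam=1e-2):
    """Closed-form MOSSE-style filter (conjugate form): G F* / (F F* + lam)."""
    F = np.fft.fft2(patch)
    G = np.fft.fft2(label)
    return (G * np.conj(F)) / (F * np.conj(F) + lam)


def detect(filter_hat, patch):
    """Return the response peak (row, col) and the full correlation response."""
    response = np.real(np.fft.ifft2(filter_hat * np.fft.fft2(patch)))
    peak = np.unravel_index(np.argmax(response), response.shape)
    return (int(peak[0]), int(peak[1])), response


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    patch = rng.standard_normal((32, 32))
    h_hat = train_filter(patch, gaussian_label(patch.shape))
    moved = np.roll(patch, (3, -2), axis=(0, 1))  # target shifted 3 rows down, 2 columns left
    print(detect(h_hat, moved)[0])
```

Because the filter is applied by element-wise multiplication in the Fourier domain, training and detection cost O(N log N) per frame, which is what makes plain correlation filters fast; the offset of the response peak from the centre gives the target translation.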

2.2. Target Redetection

3. Proposed Method

3.1. The FEDCF Framework

3.2. Feature Enhancement Based on Color Histogram

3.3. Learning Improved Correlation Filter
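The introduction states that the FEDCF objective is solved with the Gauss-Seidel iteration [13]. That objective is not reproduced in this excerpt, so the sketch below only shows the generic Gauss-Seidel update on a small, diagonally dominant linear system; the matrix, tolerance and iteration cap are illustrative assumptions. In tracking, warm-starting `x0` from the previous frame's solution is a common choice, since the filter changes little between frames.

```python
import numpy as np


def gauss_seidel(A, b, x0=None, iters=50, tol=1e-10):
    """Solve A x = b by Gauss-Seidel sweeps (converges for diagonally dominant A)."""
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float)
    n = b.shape[0]
    x = np.zeros(n) if x0 is None else np.array(x0, dtype=float)
    for _ in range(iters):
        x_old = x.copy()
        for i in range(n):
            # x[:i] already holds this sweep's values; x[i+1:] still holds the previous ones.
            s = A[i, :i] @ x[:i] + A[i, i + 1:] @ x[i + 1:]
            x[i] = (b[i] - s) / A[i, i]
        if np.linalg.norm(x - x_old, ord=np.inf) < tol:
            break
    return x


if __name__ == "__main__":
    A = np.array([[4.0, 1.0, 0.0], [1.0, 4.0, 1.0], [0.0, 1.0, 4.0]])
    b = np.array([1.0, 2.0, 3.0])
    print(gauss_seidel(A, b), np.linalg.solve(A, b))
```

Unlike Jacobi iteration, each sweep reuses the freshly updated components immediately, which typically halves the number of sweeps needed and lets a few iterations per frame suffice.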

3.4. Target Redetection Mechanism

3.4.1. Model Update

3.4.2. Update the Searching Area

4. Experiments

4.1. Detailed Parameters

4.2. Analysis of the FEDCF

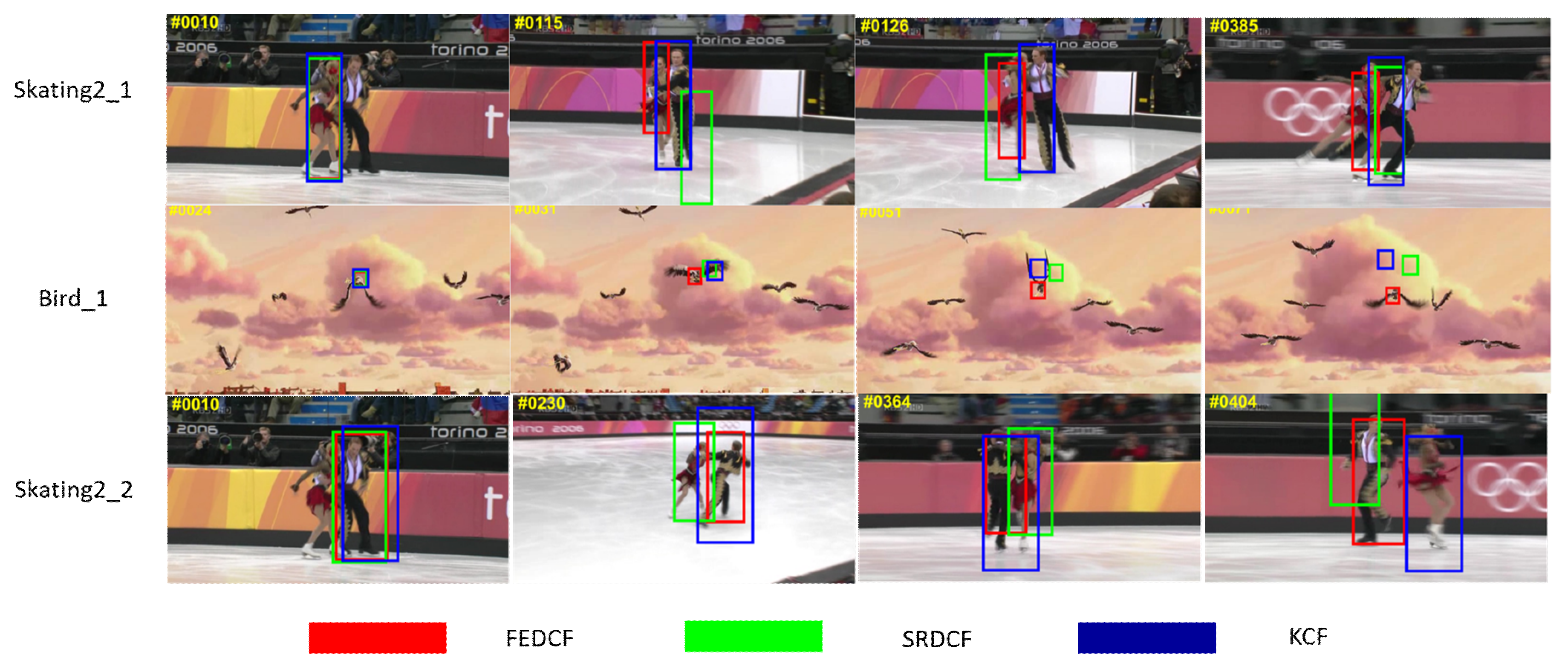

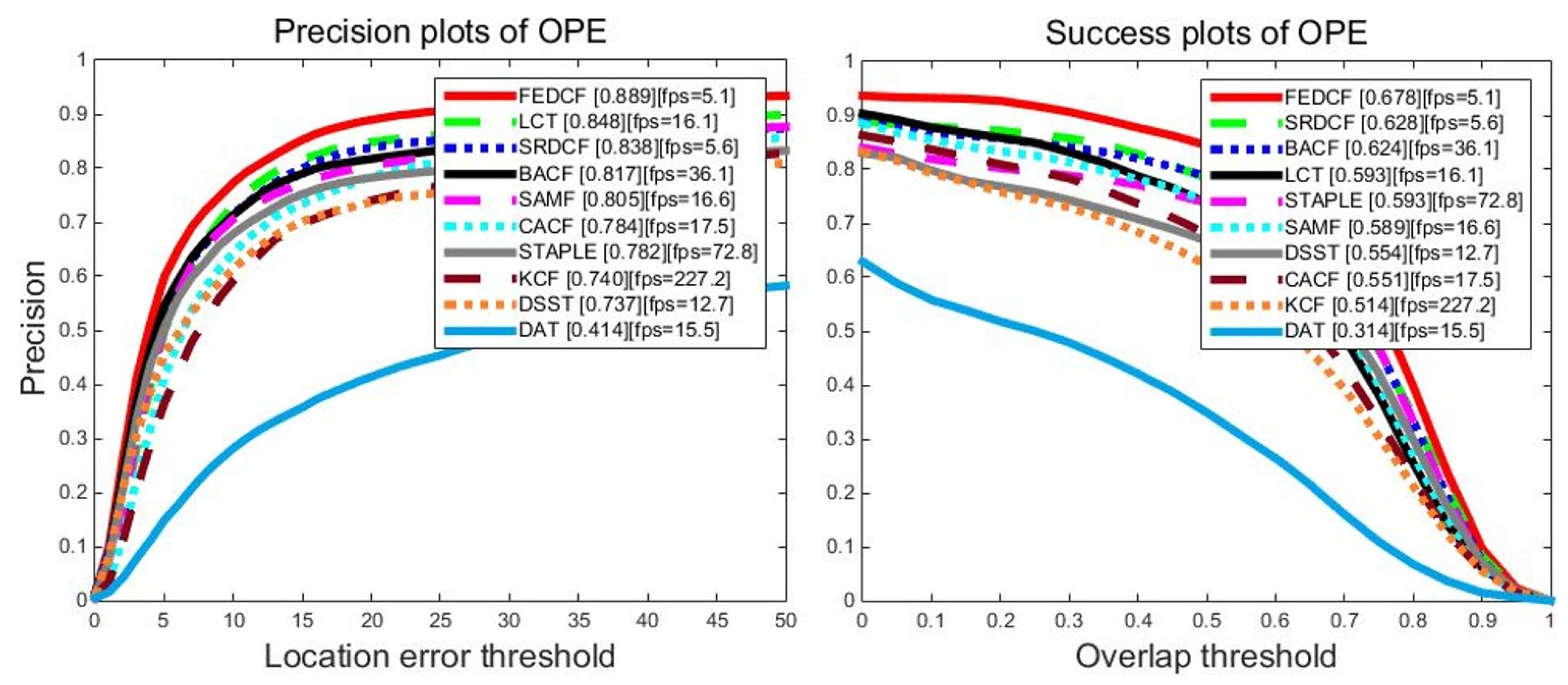

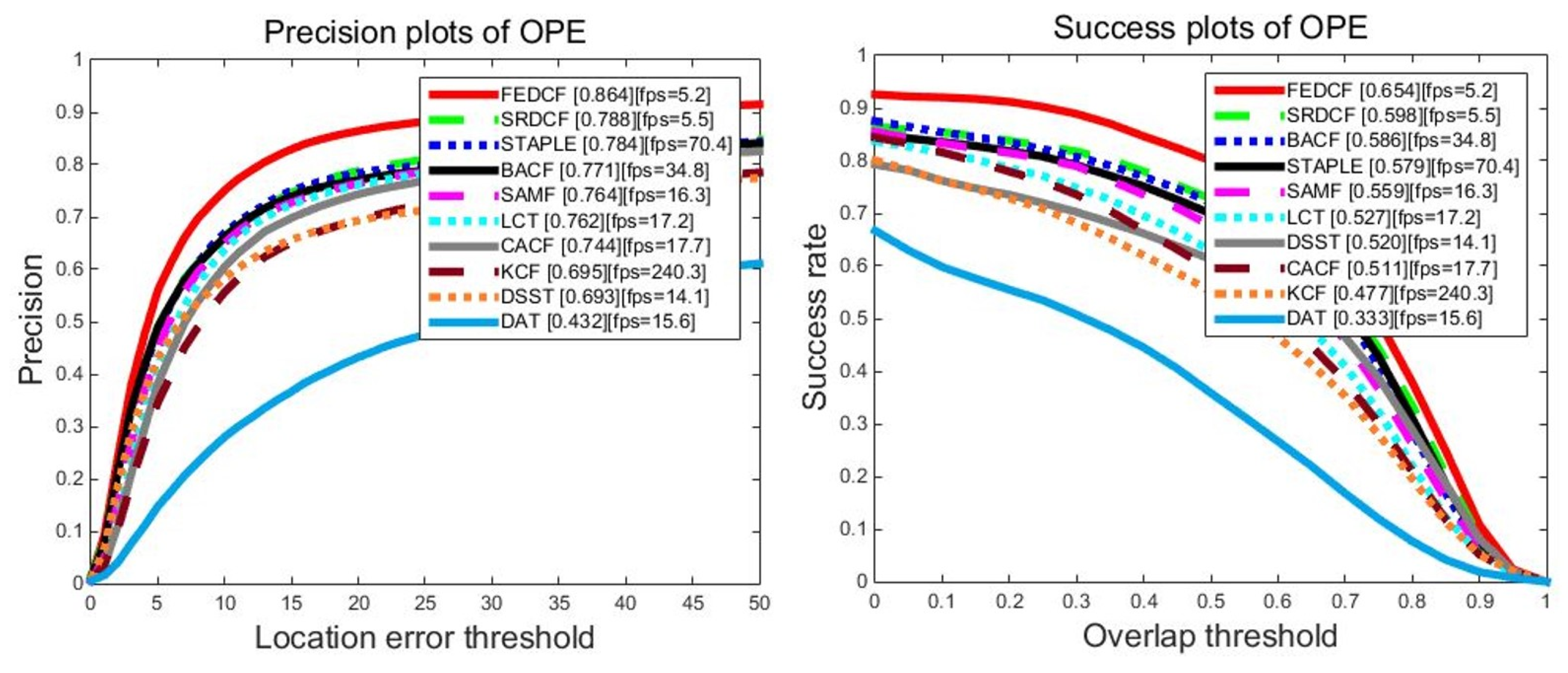

4.3. Evaluation on OTB

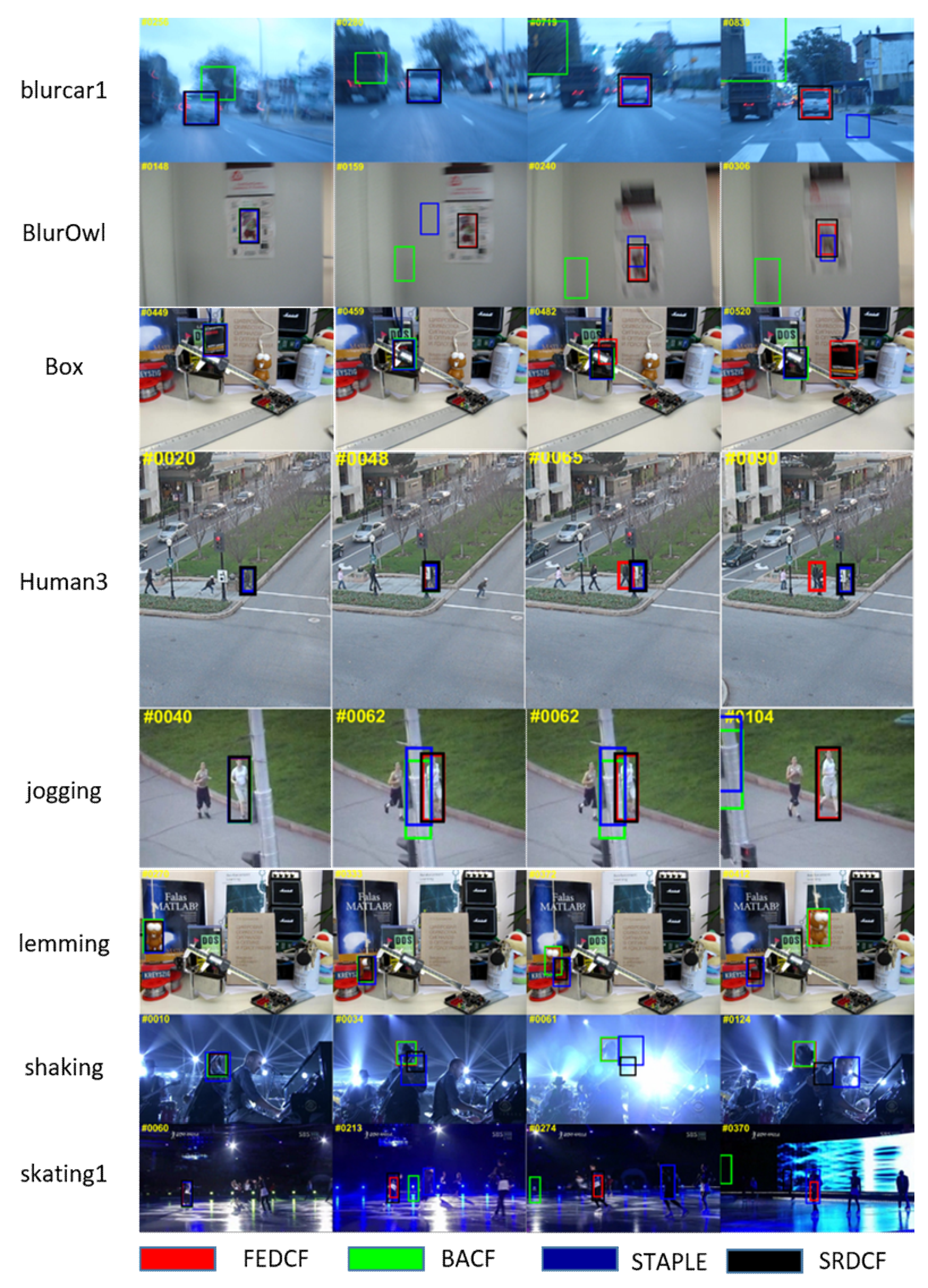

4.3.1. State-of-the-Art Comparison

4.3.2. Attribute-Based Evaluation

5. Discussion and Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 20–25 June 2005; Schmid, C., Soatto, S., Tomasi, C., Eds.; IEEE Computer Society: Los Alamitos, CA, USA, 2005; Volume 1, pp. 886–893.

- Yilmaz, A.; Javed, O.; Shah, M. Object tracking: A survey. ACM Comput. Surv. 2006, 38, 45.

- Lee, K.-H.; Hwang, J.-N. On-Road Pedestrian Tracking Across Multiple Driving Recorders. IEEE Trans. Multimed. 2015, 17, 1429–1438.

- Bolme, D.S.; Beveridge, J.R.; Draper, B.A.; Lui, Y.M. Visual object tracking using adaptive correlation filters. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010.

- Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. High-Speed Tracking with Kernelized Correlation Filters. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 583–596.

- Danelljan, M.; Häger, G.; Khan, F.; Felsberg, M. Accurate scale estimation for robust visual tracking. In Proceedings of the British Machine Vision Conference, Nottingham, UK, 1–5 September 2014.

- Li, Y.; Zhu, J. A Scale Adaptive Kernel Correlation Filter Tracker with Feature Integration. In Computer Vision—ECCV 2014 Workshops; Agapito, L., Bronstein, M.M., Rother, C., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2015; Volume 8926, pp. 254–265.

- Danelljan, M.; Khan, F.S.; Felsberg, M.; van de Weijer, J. Adaptive Color Attributes for Real-Time Visual Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014.

- Kristan, M.; Leonardis, A.; Matas, J.; Felsberg, M. The Visual Object Tracking VOT2017 challenge results. In Proceedings of the 2017 IEEE International Conference on Computer Vision Workshops, Venice, Italy, 22–29 October 2017; pp. 1949–1972.

- Zhang, K.H.; Zhang, L.; Liu, Q.S.; Zhang, D.; Yang, M.H. Fast Visual Tracking via Dense Spatio-temporal Context Learning. In Computer Vision—ECCV 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Lecture Notes in Computer Science; Springer International Publishing AG: Cham, Switzerland, 2014; Volume 8693, pp. 127–141.

- Comaniciu, D.; Meer, P. Mean shift: A robust approach toward feature space analysis. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 603–619.

- Nummiaro, K.; Koller-Meier, E.; Van Gool, L. An adaptive color-based particle filter. Image Vis. Comput. 2003, 21, 99–110.

- Mueller, M.; Smith, N.; Ghanem, B. Context-Aware Correlation Filter Tracking. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; IEEE: New York, NY, USA, 2017; pp. 1387–1395.

- Danelljan, M.; Hager, G.; Khan, F.S.; Felsberg, M. Learning Spatially Regularized Correlation Filters for Visual Tracking. In Proceedings of the 2015 IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 4310–4318.

- Galoogahi, H.K.; Fagg, A.; Lucey, S. Learning Background-Aware Correlation Filters for Visual Tracking. In Proceedings of the 2017 IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1144–1152.

- Possegger, H.; Mauthner, T.; Bischof, H. In Defense of Color-based Model-free Tracking. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015.

- Du, F.; Liu, P.; Zhao, W.; Tang, X.L. Spatial-temporal adaptive feature weighted correlation filter for visual tracking. Signal Process. Image Commun. 2018, 67, 58–70.

- Shen, Y.Q.; Gong, H.J.; Xiong, Y. Application of Adaptive Extended Kalman Filter for Tracking a Moving Target. Comput. Simul. 2007, 24, 210–213.

- Wu, Y.; Lim, J.; Yang, M.-H. Online Object Tracking: A Benchmark. In Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 2411–2418.

- Zaitouny, A.; Stemler, T.; Small, M. Tracking a single pigeon using a shadowing filter algorithm. Ecol. Evol. 2017, 7, 4419–4431.

- Barrett, L.; Henzi, S.P. Particle Filters for Positioning, Navigation and Tracking. IEEE Trans. Signal Process. 2002, 50, 425–437.

- Míguez, J. Analysis of selection methods for cost-reference particle filtering with applications to maneuvering target tracking and dynamic optimization. Digit. Signal Process. 2007, 17, 787–807.

- Zaitouny, A.A.; Stemler, T.; Judd, K. Tracking Rigid Bodies Using Only Position Data: A Shadowing Filter Approach based on Newtonian Dynamics. Digit. Signal Process. 2017, 67, 81–90.

- Zaitouny, A.; Stemler, T.; Algar, S.D. Optimal Shadowing Filter for a Positioning and Tracking Methodology with Limited Information. Sensors 2019, 19, 931.

- Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. Exploiting the Circulant Structure of Tracking-by-Detection with Kernels. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2012; pp. 702–715.

- Tang, M.; Feng, J. Multi-kernel Correlation Filter for Visual Tracking. In Proceedings of the 2015 IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 3038–3046.

- Bibi, A.; Ghanem, B. Multi-Template Scale-Adaptive Kernelized Correlation Filters. In Proceedings of the 2015 IEEE International Conference on Computer Vision Workshops, Santiago, Chile, 7–13 December 2015.

- Choi, J.; Chang, H.J.; Jeong, J.; Demiris, Y.; Choi, J.Y. Visual Tracking Using Attention-Modulated Disintegration and Integration. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4321–4330.

- Li, X.; Liu, Q.; He, Z.; Wang, H.; Zhang, C.; Chen, W.-S. A multi-view model for visual tracking via correlation filters. Knowl.-Based Syst. 2016, 113, 88–99.

- Bertinetto, L.; Valmadre, J.; Golodetz, S.; Miksik, O.; Torr, P.H.S. Staple: Complementary Learners for Real-Time Tracking. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1401–1409.

- Montero, A.S.; Lang, J.; Laganiere, R. Scalable Kernel Correlation Filter with Sparse Feature Integration. In Proceedings of the 2015 IEEE International Conference on Computer Vision Workshops, Santiago, Chile, 7–13 December 2015.

- Danelljan, M.; Hager, G.; Khan, F.S.; Felsberg, M. Discriminative Scale Space Tracking. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1561–1575.

- Galoogahi, H.K.; Sim, T.; Lucey, S. Correlation Filters with Limited Boundaries. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 4630–4638.

- Gundogdu, E.; Alatan, A.A. Spatial windowing for correlation filter based visual tracking. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 1684–1688.

- Lukezic, A.; Vojir, T.; Zajc, L.C.; Matas, J.; Kristan, M. Discriminative Correlation Filter with Channel and Spatial Reliability. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; IEEE: New York, NY, USA, 2017; pp. 4847–4856.

- Hu, H.; Ma, B.; Shen, J.; Shao, L. Manifold Regularized Correlation Object Tracking. IEEE Trans. Neural Netw. Learn. Syst. 2018, 29, 1786–1795.

- Danelljan, M.; Robinson, A.; Khan, F.S.; Felsberg, M. Beyond Correlation Filters: Learning Continuous Convolution Operators for Visual Tracking. In Computer Vision—ECCV 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2016; Volume 9909, pp. 472–488.

- Ma, C.; Huang, J.-B.; Yang, X.; Yang, M.-H. Adaptive Correlation Filters with Long-Term and Short-Term Memory for Object Tracking. Int. J. Comput. Vis. 2018, 126, 771–796.

- Hong, Z.; Chen, Z.; Wang, C.; Mei, X.; Prokhorov, D.; Tao, D. MUlti-Store Tracker (MUSTer): A Cognitive Psychology Inspired Approach to Object Tracking. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 749–758.

- Kalal, Z.; Mikolajczyk, K.; Matas, J. Tracking-Learning-Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 1409–1422.

- Xiang, Y.; Alahi, A.; Savarese, S. Learning to Track: Online Multi-object Tracking by Decision Making. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015.

- Ma, C.; Yang, X.; Zhang, C.; Yang, M.-H. Long-term correlation tracking. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015.

- Kim, H.-U.; Lee, D.-Y.; Sim, J.-Y.; Kim, C.-S. SOWP: Spatially Ordered and Weighted Patch Descriptor for Visual Tracking. In Proceedings of the 2015 IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 3011–3019.

- Wu, Y.; Lim, J.; Yang, M.-H. Object Tracking Benchmark. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1834–1848.

| Step | Operation |
|---|---|
| Input | Frames, initial target location $x_1$, spatial prior $w_p$. |
| Output | Detected target location in each frame. |
| 1 | Repeat: |
| 2 | Crop a search patch $S_t$ centered at $x_t$. |
| 3 | Extract feature samples $V_t$ and construct the color histogram model via (2). |
| 4 | Construct the adaptive weights $w_t$ via (4). |
| 5 | Obtain APRD and $w_f$ according to (15)–(17). |
| 6 | If the target is judged to be occluded via (18): |
| 7 | Predict the target location $x_{t+1}$ with the adaptive Kalman filter via (19)–(23). |
| 8 | Else: |
| 9 | Update the correlation model via (12)–(13). |
| 10 | Detect the target location $x_{t+1,r}$ at the estimated scale via (15). |
| 11 | End if. |
| 12 | Until the end of the video sequence. |
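The occlusion branch of the algorithm table can be sketched as a small loop. This is a minimal sketch under stated assumptions: a constant-velocity Kalman filter on the target centre, and a hypothetical scalar `confidence` in (0, 1] standing in for APRD, whose definition (15)–(17) and occlusion test (18) are not reproduced in this excerpt. The adaptive rule (inflating the measurement noise as confidence drops) and the hypothetical `occlusion_threshold` are illustrative choices, not the paper's equations (19)–(23), and the correlation-model update of step 9 is omitted.

```python
import numpy as np


class AdaptiveKalman2D:
    """Hypothetical constant-velocity Kalman filter on the target centre (x, y)."""

    def __init__(self, x0, y0, dt=1.0, q=1e-2, r0=1.0):
        self.x = np.array([x0, y0, 0.0, 0.0])       # state: [x, y, vx, vy]
        self.P = np.eye(4) * 10.0                    # large initial uncertainty
        self.F = np.array([[1, 0, dt, 0],
                           [0, 1, 0, dt],
                           [0, 0, 1, 0],
                           [0, 0, 0, 1]], dtype=float)
        self.H = np.array([[1, 0, 0, 0], [0, 1, 0, 0]], dtype=float)
        self.Q = np.eye(4) * q
        self.r0 = r0

    def predict(self):
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q
        return self.x[:2].copy()

    def update(self, z, confidence):
        # Assumed adaptive rule: measurement noise R grows as confidence drops.
        R = np.eye(2) * self.r0 / max(confidence, 1e-3)
        innovation = np.asarray(z, dtype=float) - self.H @ self.x
        S = self.H @ self.P @ self.H.T + R
        K = self.P @ self.H.T @ np.linalg.inv(S)
        self.x = self.x + K @ innovation
        self.P = (np.eye(4) - K @ self.H) @ self.P
        return self.x[:2].copy()


def track_step(kf, detection, confidence, occlusion_threshold=0.3):
    """One loop iteration: returns (next search centre, occluded flag)."""
    predicted = kf.predict()
    if confidence < occlusion_threshold:
        # Treated as occluded: ignore the detection and search around the prediction.
        return predicted, True
    return kf.update(detection, confidence), False


if __name__ == "__main__":
    kf = AdaptiveKalman2D(0.0, 0.0)
    for t in range(1, 21):                           # target moves by (2, 1) per frame
        track_step(kf, (2.0 * t, 1.0 * t), confidence=1.0)
    for t in range(21, 24):                          # three occluded frames
        centre, occluded = track_step(kf, (0.0, 0.0), confidence=0.1)
    print(centre, occluded)
```

The design point is that during occlusion the search area keeps moving along the estimated trajectory instead of staying where the target disappeared, and the corrupted appearance is not used to update the model.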

| Tracker | Success Score (%) | Precision Score (%) | Frames Per Second |
|---|---|---|---|
| DCF | 47.7 | 69.5 | 240.3 |
| DCF + enhancement | 55.9 | 76.2 | 14.9 |
| DCF + enhancement + spatial prior | 62.7 | 81.6 | 5.3 |
| FEDCF | 65.4 | 86.4 | 5.2 |
| SRDCF | 59.8 | 78.8 | 5.5 |
| DAT | 33.3 | 43.2 | 15.6 |
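Reading the first four rows of the ablation table as cumulative additions (an interpretation of the row labels), the contribution of each step can be computed directly from the reported values. The helper name `incremental_gains` is hypothetical.

```python
# (tracker, success %, precision %, fps), copied from the ablation table above.
ABLATION = [
    ("DCF", 47.7, 69.5, 240.3),
    ("DCF + enhancement", 55.9, 76.2, 14.9),
    ("DCF + enhancement + spatial prior", 62.7, 81.6, 5.3),
    ("FEDCF", 65.4, 86.4, 5.2),
]


def incremental_gains(rows):
    """Success and precision gain (percentage points) of each row over the previous one."""
    gains = []
    for (_, s0, p0, _), (name, s1, p1, _) in zip(rows, rows[1:]):
        gains.append((name, round(s1 - s0, 1), round(p1 - p0, 1)))
    return gains


if __name__ == "__main__":
    for name, d_success, d_precision in incremental_gains(ABLATION):
        print(f"{name}: success {d_success:+.1f}, precision {d_precision:+.1f}")
```

Under this reading, the color-histogram enhancement gives the largest single jump in success score (+8.2 points), the spatial prior adds +6.8, and the remaining step to the full FEDCF adds +2.7, while the frame rate falls from 240.3 to 5.2 fps.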

| Attribute (no. of sequences) | FEDCF | SRDCF | STAPLE | BACF | CACF | DSST | SAMF | KCF | LCT | DAT |
|---|---|---|---|---|---|---|---|---|---|---|
| IV (37) | 83.7 | 78.1 | 77.8 | 75.8 | 76.0 | 72.0 | 74.0 | 72.4 | 74.3 | 33.4 |
| OPR (63) | 85.0 | 74.0 | 73.8 | 75.3 | 69.2 | 66.5 | 75.0 | 67.6 | 74.6 | 45.4 |
| SV (63) | 84.0 | 74.3 | 72.4 | 75.6 | 69.4 | 65.3 | 72.2 | 63.5 | 67.8 | 41.4 |
| OCC (48) | 81.0 | 72.7 | 72.4 | 69.6 | 66.9 | 60.2 | 73.6 | 63.2 | 67.8 | 42.4 |
| DEF (43) | 84.1 | 73.0 | 74.7 | 72.6 | 69.2 | 56.0 | 67.1 | 61.9 | 68.5 | 46.9 |
| MB (29) | 82.6 | 76.7 | 69.9 | 58.4 | 71.1 | 57.0 | 68.7 | 60.0 | 66.9 | 34.3 |
| FM (39) | 80.2 | 76.9 | 71.0 | 68.9 | 72.7 | 57.5 | 70.6 | 62.1 | 68.1 | 38.1 |
| IPR (51) | 81.1 | 74.2 | 76.8 | 76.0 | 75.1 | 71.1 | 74.4 | 70.1 | 78.1 | 44.3 |
| OV (14) | 76.6 | 60.2 | 66.8 | 74.8 | 57.8 | 48.0 | 67.6 | 49.9 | 59.2 | 36.5 |
| BC (31) | 87.2 | 77.5 | 74.9 | 80.1 | 76.4 | 70.4 | 72.2 | 71.2 | 17.2 | 37.1 |
| LR (9) | 73.7 | 66.3 | 61.0 | 74.1 | 59.4 | 60.2 | 68.4 | 56.0 | 53.7 | 39.3 |
| Overall | 86.4 | 78.8 | 78.4 | 77.1 | 74.4 | 69.3 | 76.4 | 69.5 | 76.2 | 43.2 |
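The Overall row coincides with the precision scores reported for FEDCF, SRDCF and DAT in the ablation table, so these appear to be precision scores (%). A short script makes the per-attribute margin of FEDCF over its strongest competitor explicit; the helper name `margin_over_best_rival` is hypothetical.

```python
TRACKERS = ["FEDCF", "SRDCF", "STAPLE", "BACF", "CACF", "DSST", "SAMF", "KCF", "LCT", "DAT"]

# Scores copied from the attribute table above (same column order as TRACKERS).
SCORES = {
    "IV": [83.7, 78.1, 77.8, 75.8, 76.0, 72.0, 74.0, 72.4, 74.3, 33.4],
    "OPR": [85.0, 74.0, 73.8, 75.3, 69.2, 66.5, 75.0, 67.6, 74.6, 45.4],
    "SV": [84.0, 74.3, 72.4, 75.6, 69.4, 65.3, 72.2, 63.5, 67.8, 41.4],
    "OCC": [81.0, 72.7, 72.4, 69.6, 66.9, 60.2, 73.6, 63.2, 67.8, 42.4],
    "DEF": [84.1, 73.0, 74.7, 72.6, 69.2, 56.0, 67.1, 61.9, 68.5, 46.9],
    "MB": [82.6, 76.7, 69.9, 58.4, 71.1, 57.0, 68.7, 60.0, 66.9, 34.3],
    "FM": [80.2, 76.9, 71.0, 68.9, 72.7, 57.5, 70.6, 62.1, 68.1, 38.1],
    "IPR": [81.1, 74.2, 76.8, 76.0, 75.1, 71.1, 74.4, 70.1, 78.1, 44.3],
    "OV": [76.6, 60.2, 66.8, 74.8, 57.8, 48.0, 67.6, 49.9, 59.2, 36.5],
    "BC": [87.2, 77.5, 74.9, 80.1, 76.4, 70.4, 72.2, 71.2, 17.2, 37.1],
    "LR": [73.7, 66.3, 61.0, 74.1, 59.4, 60.2, 68.4, 56.0, 53.7, 39.3],
    "Overall": [86.4, 78.8, 78.4, 77.1, 74.4, 69.3, 76.4, 69.5, 76.2, 43.2],
}


def margin_over_best_rival(scores, trackers=TRACKERS, ours="FEDCF"):
    """For each attribute: (best competing tracker, our score minus its score)."""
    i = trackers.index(ours)
    result = {}
    for attr, row in scores.items():
        best_score, best_name = max((s, t) for t, s in zip(trackers, row) if t != ours)
        result[attr] = (best_name, round(row[i] - best_score, 1))
    return result


if __name__ == "__main__":
    for attr, (rival, diff) in margin_over_best_rival(SCORES).items():
        print(f"{attr:8s} best rival {rival:7s} margin {diff:+.1f}")
```

With these values FEDCF leads on every attribute except low resolution (LR), where BACF scores 74.1 against 73.7; its smallest positive margin is on out-of-view (OV, +1.8 points over BACF).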

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Yang, H.; Huang, Y.; Xie, Z. Improved Correlation Filter Tracking with Enhanced Features and Adaptive Kalman Filter. Sensors 2019, 19, 1625. https://doi.org/10.3390/s19071625
