Wide Swath and High Resolution Airborne HyperSpectral Imaging System and Flight Validation

Abstract

1. Introduction

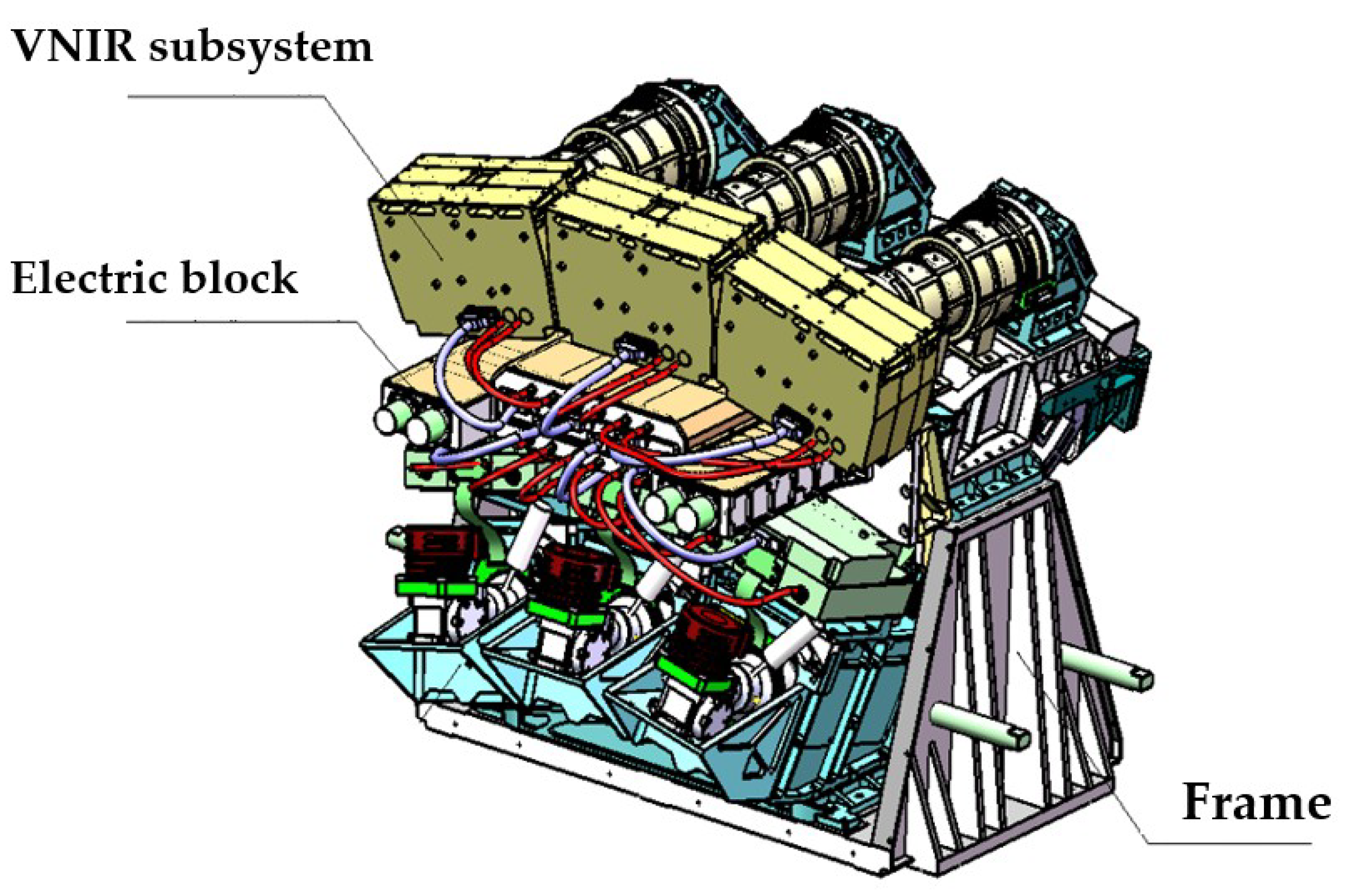

2. WiSHiRaPHI System Introduction

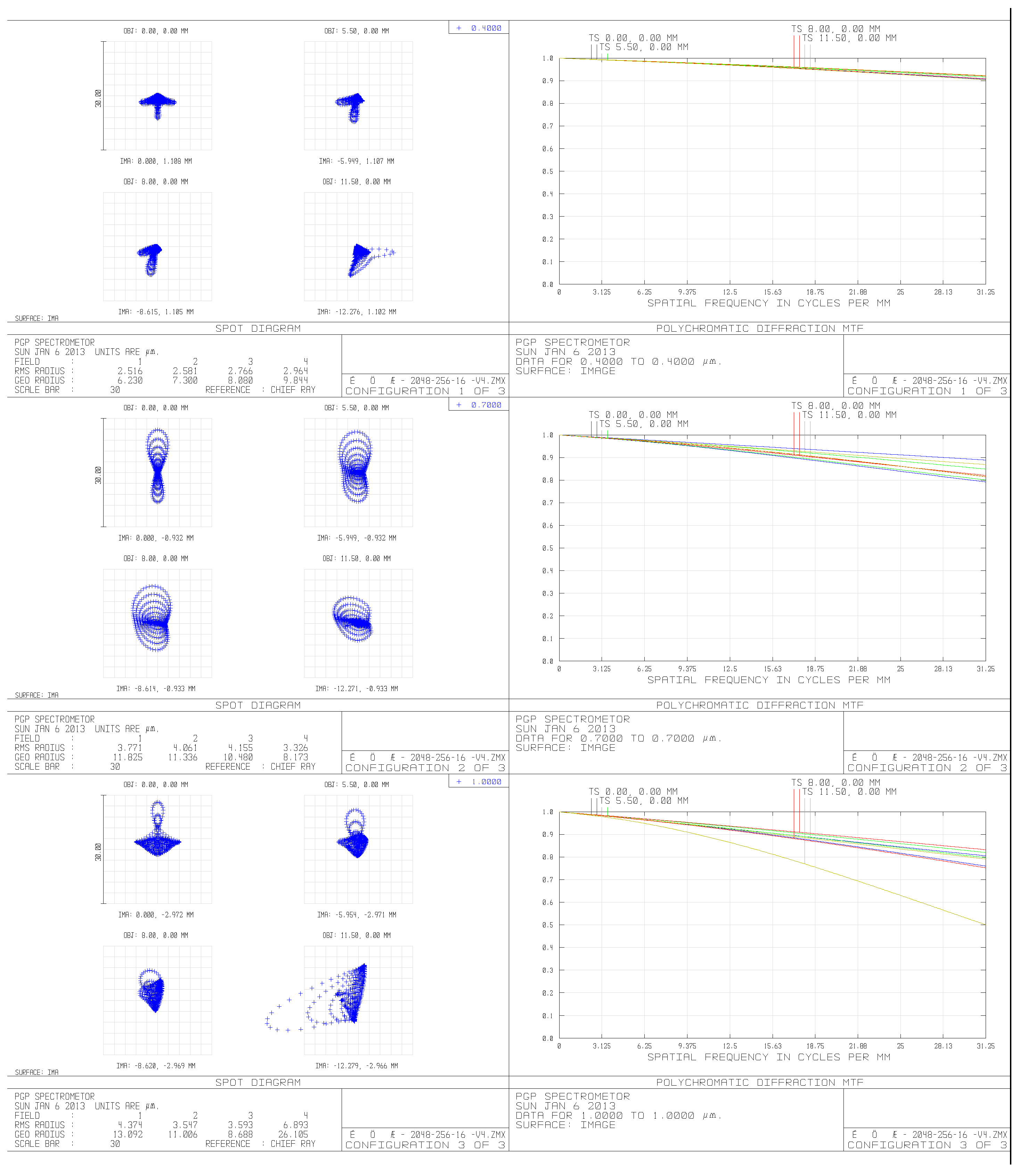

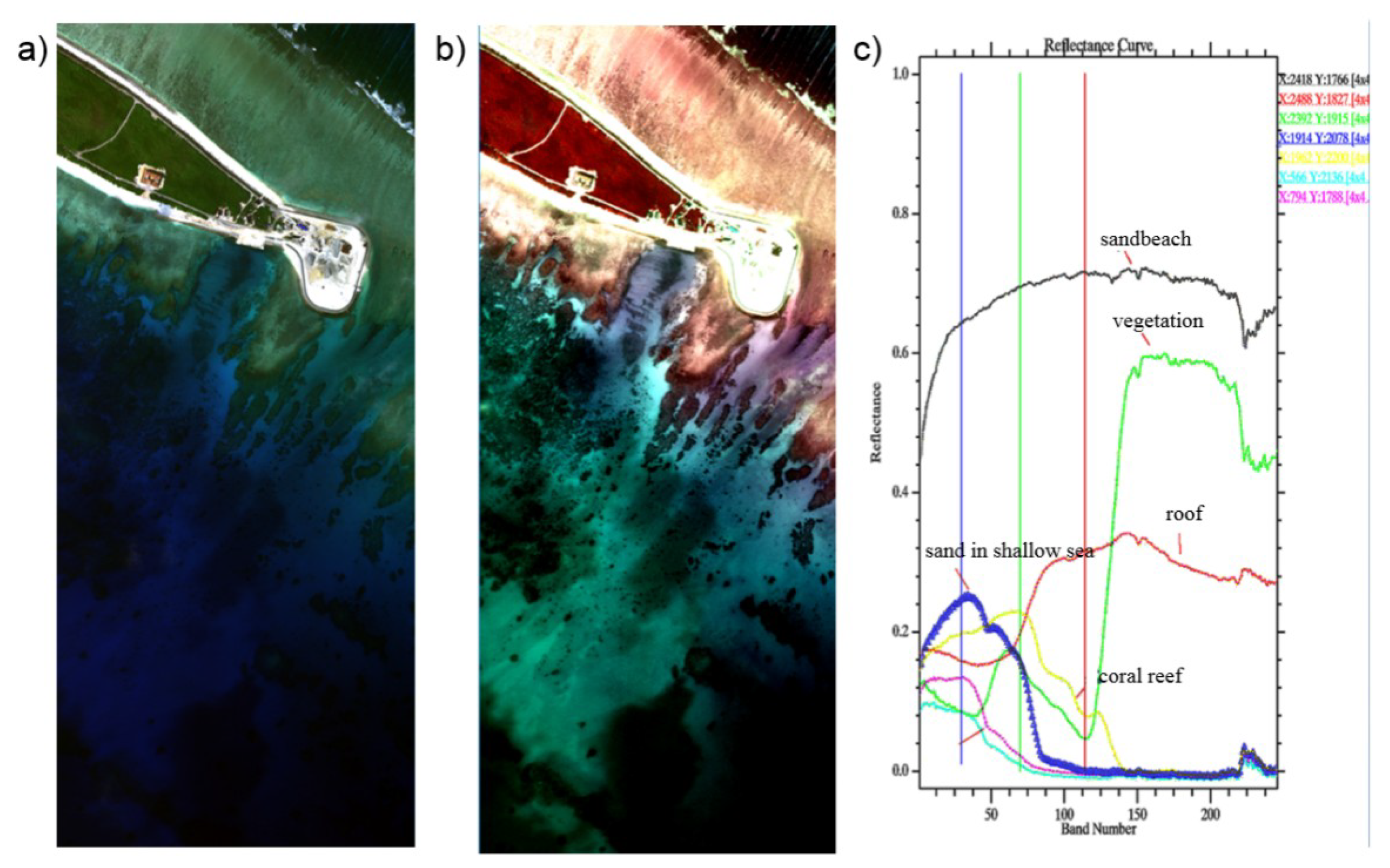

2.1. Optical System

2.2. Photoelectric System

2.3. Block System

3. Laboratory Calibration

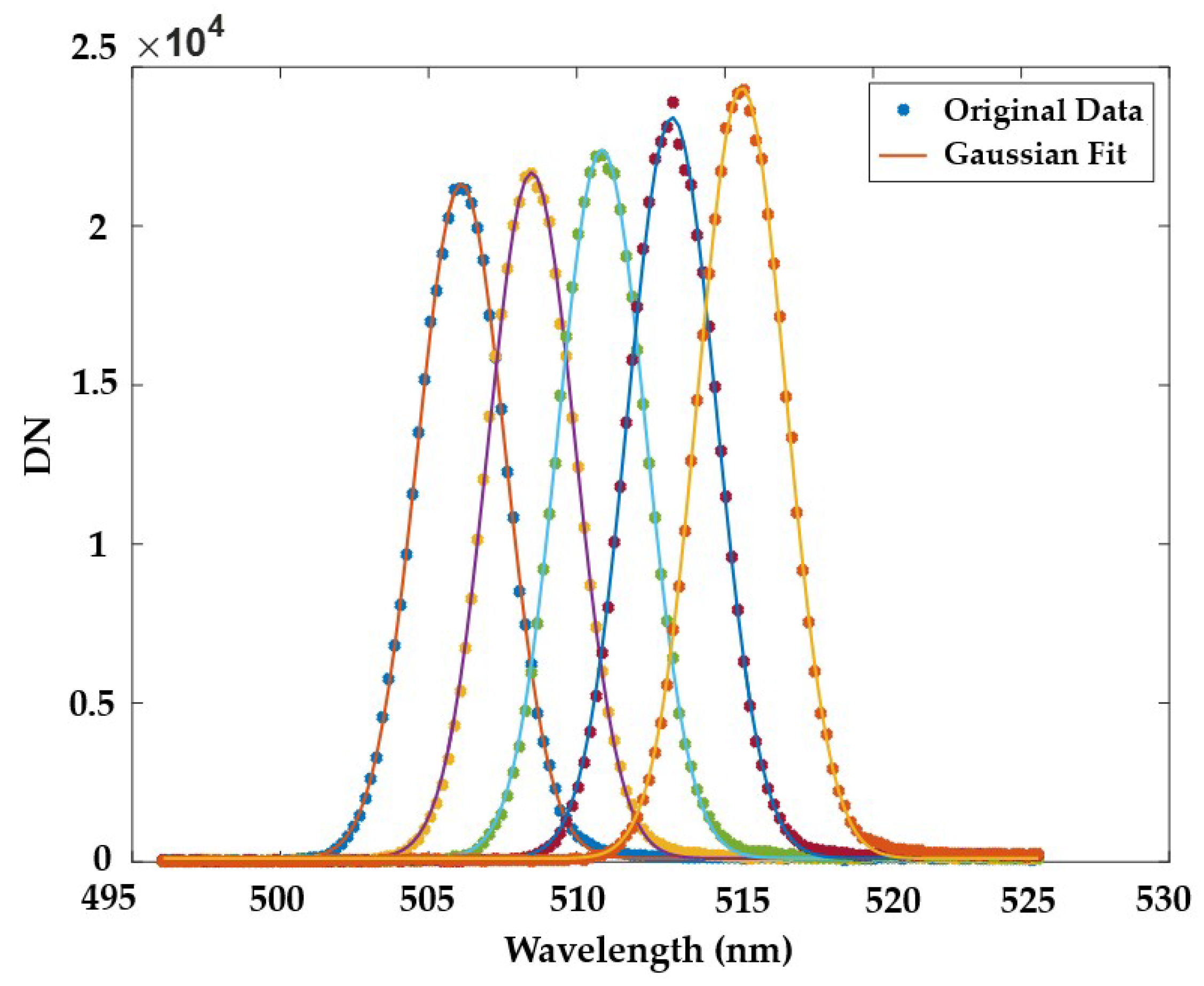

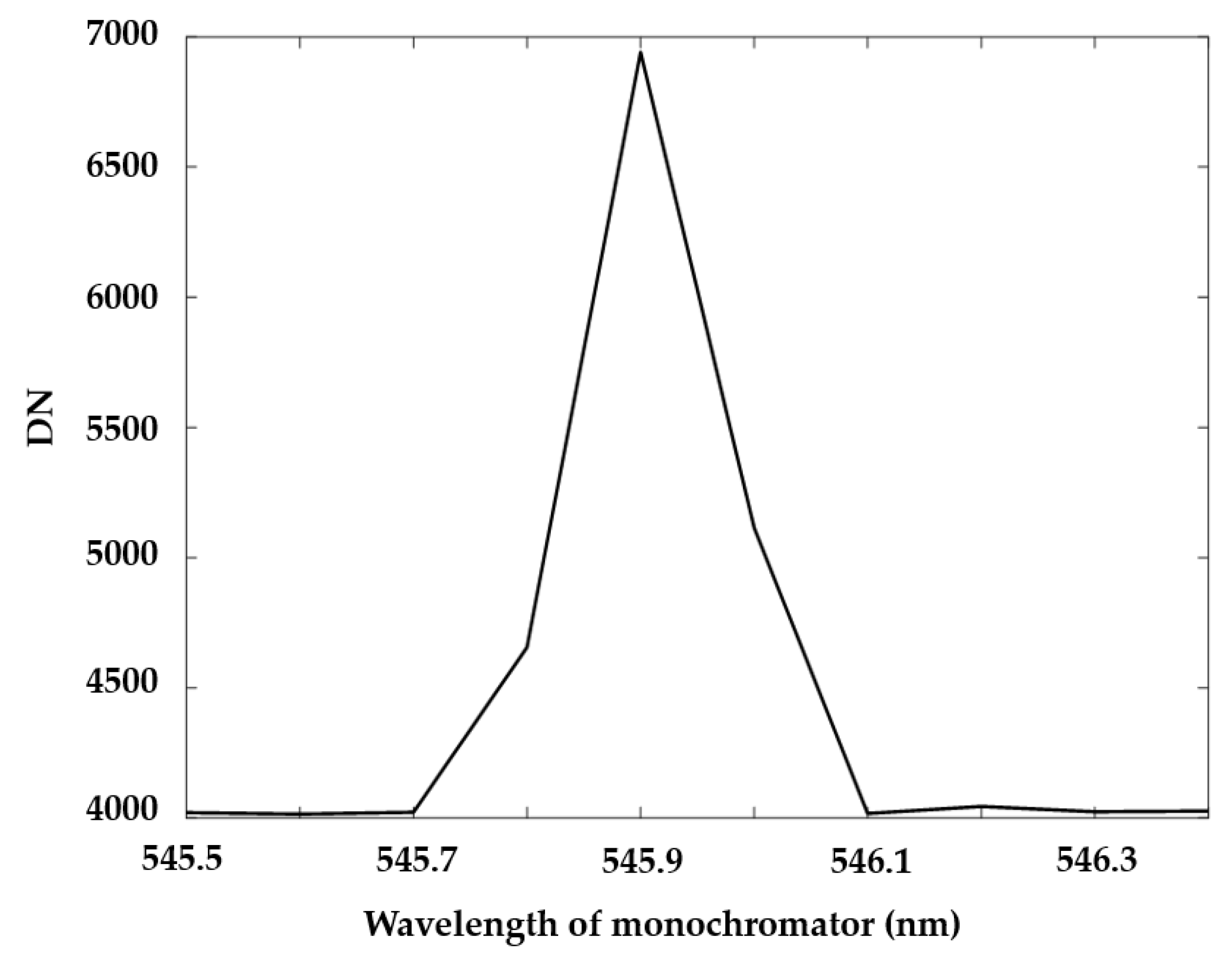

3.1. Spectral Calibration

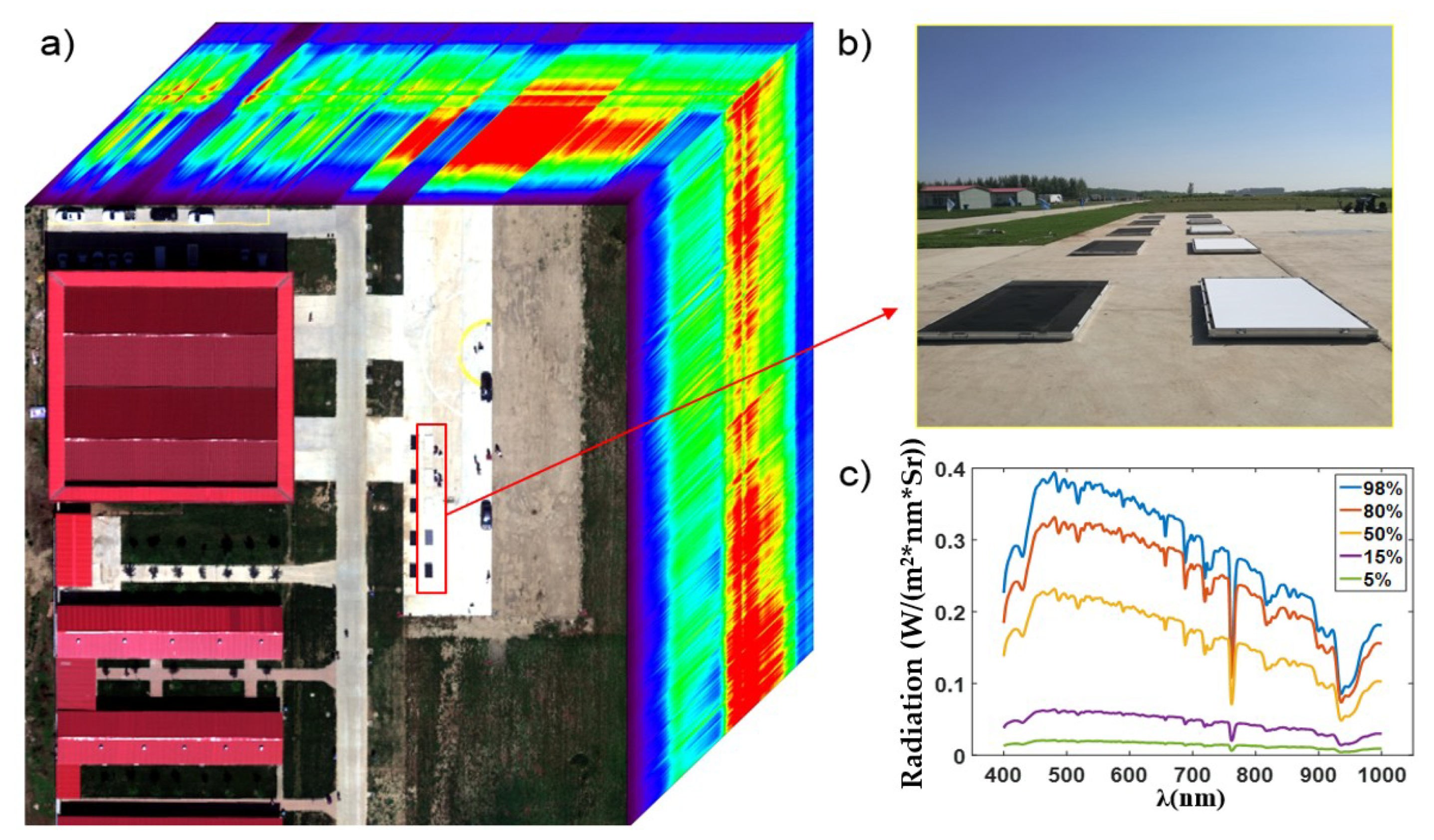

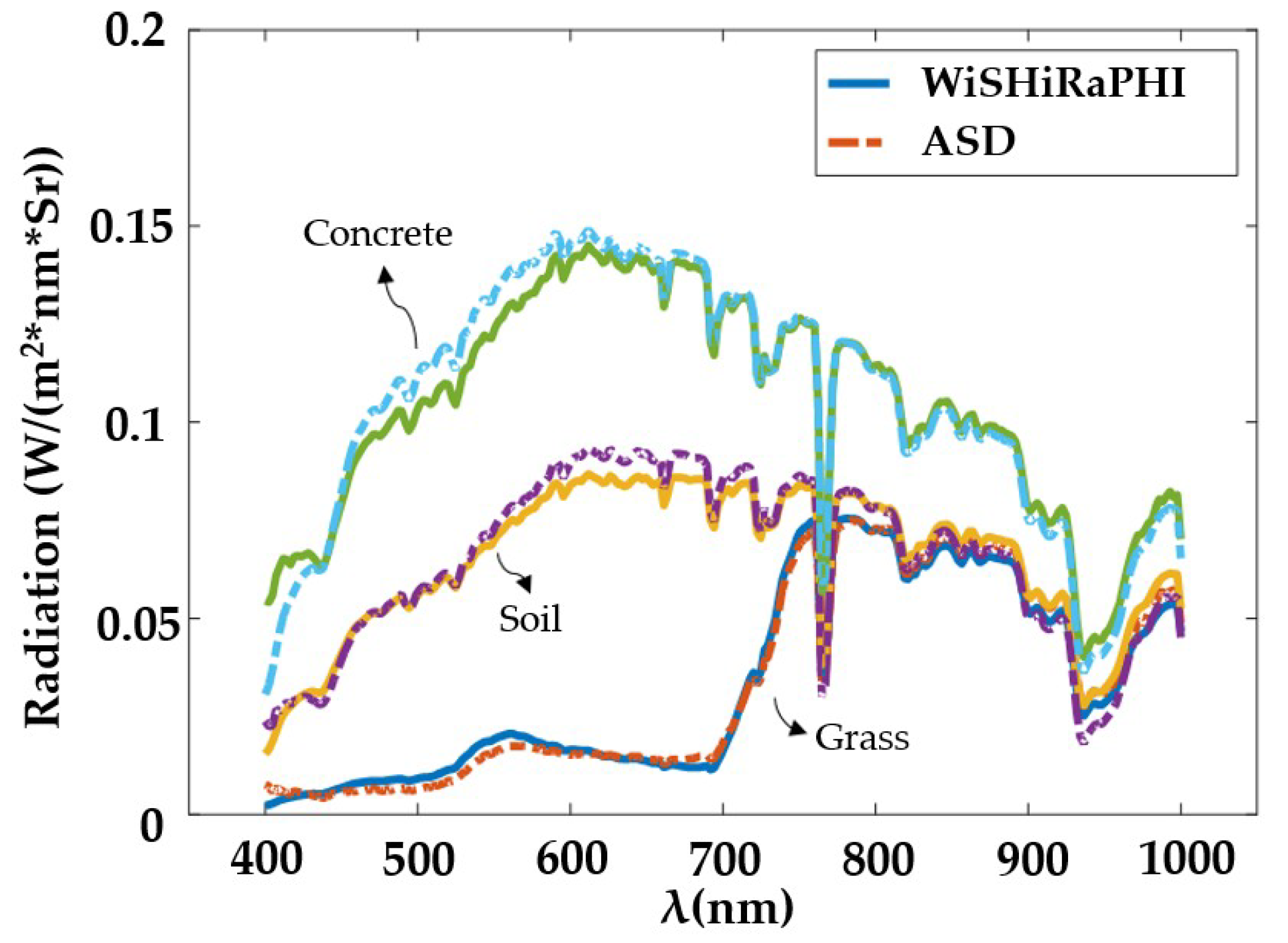

3.2. Radiometric Calibration

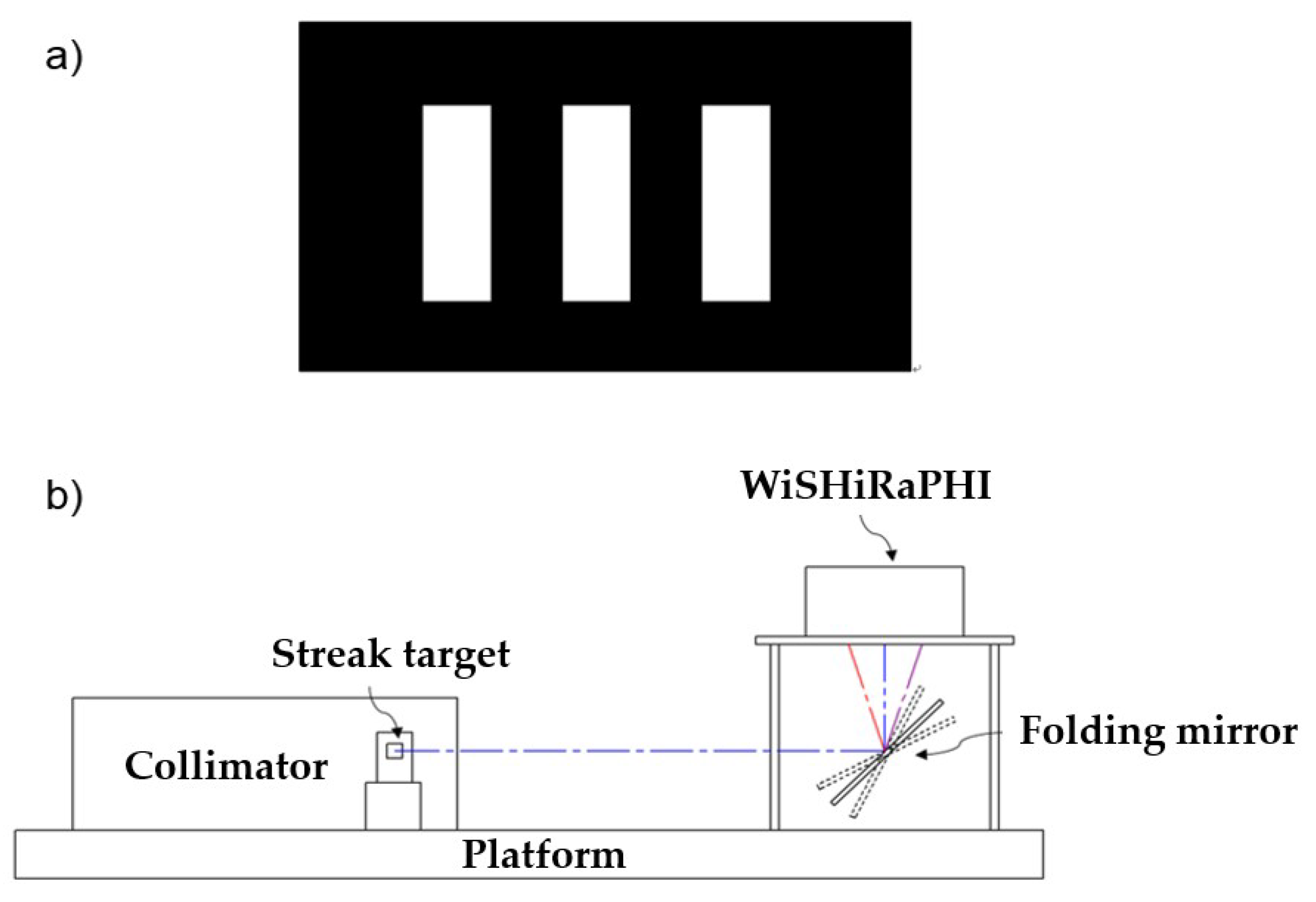

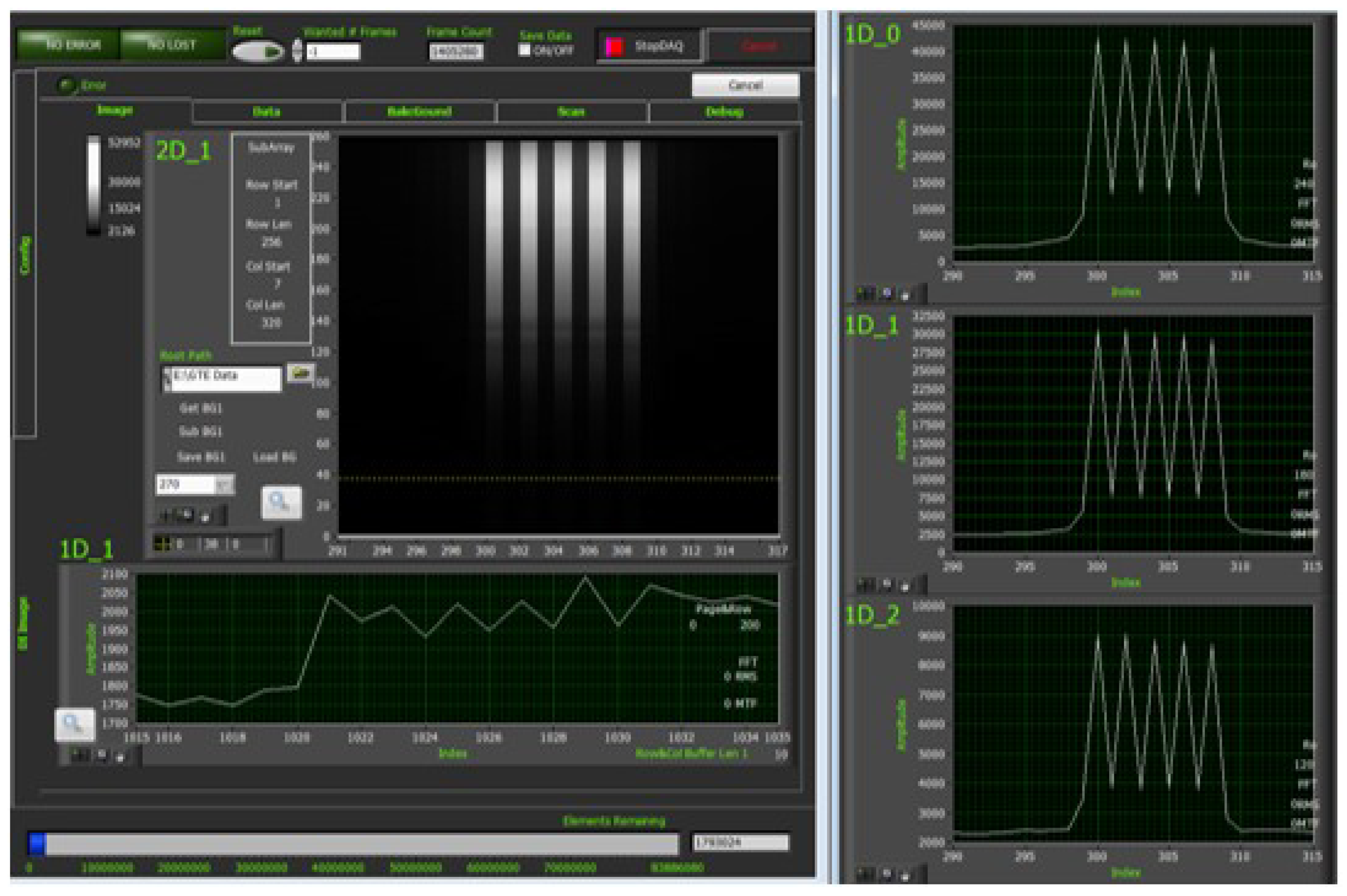

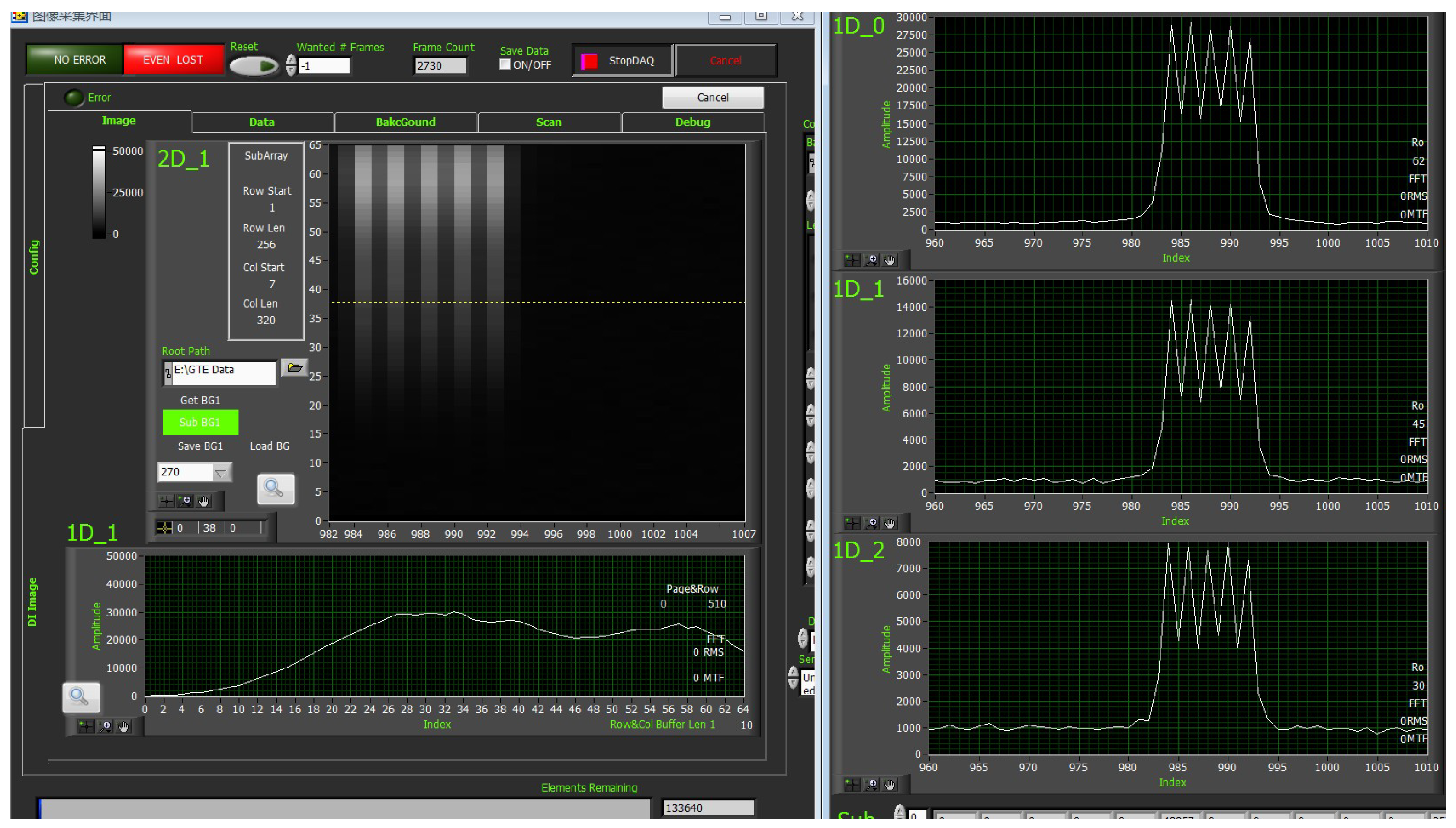

3.3. MTF Determination

3.4. SNR Determination

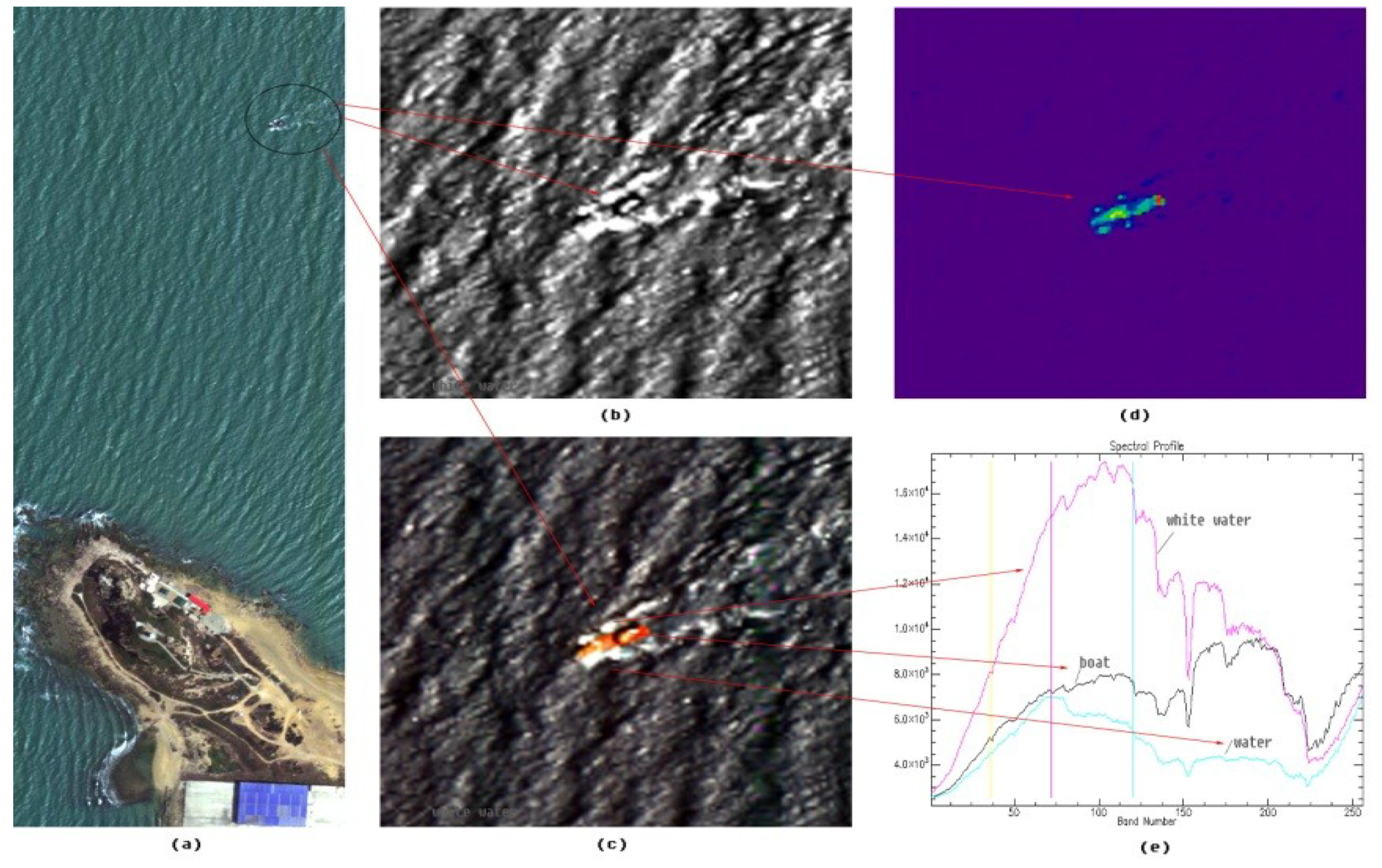

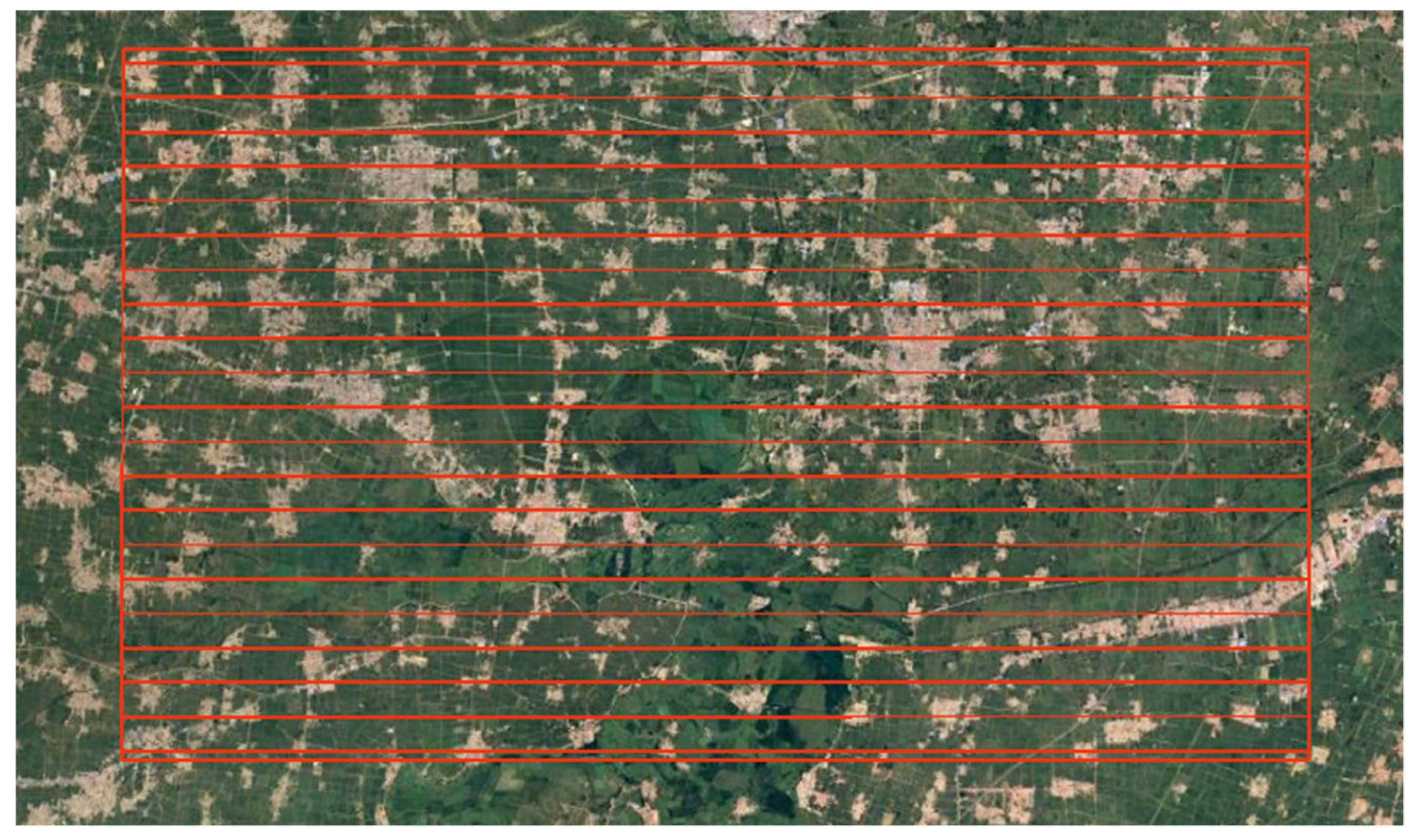

4. Airborne Flight Validation Experiments

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Instrument | Spectral Range (nm) | Spectral Sampling Interval | Number of Channels | FOV (deg) | IFOV (mrad) |
|---|---|---|---|---|---|
| AVIRIS | 400–2500 | 10 nm | 224 | 30 | 1 |
| LEISA | 1000–2500 | 4–10 nm | 432 | 19 | 2 |
| AisaFENIX | 380–2500 | 3–5 nm@380–970 nm; 12 nm@970–2500 nm | 448 | 32.3 | 1.4 |
| OMIS | 100–12,500 | 10 nm@…–1.1 μm; 30 nm@…–1.7 μm; 15 nm@2–2.5 μm; 2 μm@3–5 μm; 600 nm@8–12.5 μm | 128 | 73 | 1.5/3 |
| PHI | 400–850 | 1.8 nm | 244 | 21 | 1.5 |
| HyMap | 400–2500 | 10–20 nm | 128 | 61.3 | 2 × 2.5 |
| CASI/SASI | 400–2500 | 2.4 nm/7.5 nm | 96/200 | 40 | 0.49/0.698 |

| Band (μm) | Characteristic Spectral Line | Band (μm) | Characteristic Spectral Line |
|---|---|---|---|
| 0.46–0.48 | Absorption of renieratene (high) | 0.66–0.68 | Reflectance valley for most plants |
| 0.50–0.52 | Reflectance of chlorophyll (high) | 0.70–0.72 | Red edge of plants |
| 0.54–0.56 | Absorption of Fe²⁺, Fe³⁺ | 0.88–0.90 | Reflectance peak for plants; absorption of Fe³⁺ |
| 0.56–0.62 | Absorption of phycoerythrin | 0.92–0.94 | Absorption of Fe²⁺ |

| Parameter | Index | Parameter | Index |
|---|---|---|---|
| Spectral Range (μm) | 0.4–1.0 | MTF | ≥0.5 |
| FOV | 40° | VHR | 0.02–0.04 |
| Spectral Resolution (nm) | 3.5/9.2, adjustable | Weight | ≤20 kg |
| Number of Channels | 256/64, adjustable | Power Consumption | 60 W |
| IFOV (mrad) | 0.25/0.125, adjustable | Platform | ARJ-21, Y-12, etc. |
| SNR | ≥500 (ρ = 0.3) | | |
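As a rough illustration of how the FOV and IFOV entries above translate into ground coverage, the sketch below computes swath width and nadir ground sample distance over flat terrain. The flight altitude of 2000 m is an assumed value chosen only for illustration, not a figure from the paper, and the function names are hypothetical.

```python
import math


def swath_width(altitude_m: float, fov_deg: float) -> float:
    """Flat-terrain swath width (m) for a nadir-pointing imager."""
    return 2.0 * altitude_m * math.tan(math.radians(fov_deg) / 2.0)


def ground_sample_distance(altitude_m: float, ifov_mrad: float) -> float:
    """Nadir ground sample distance (m), small-angle approximation."""
    return altitude_m * ifov_mrad * 1e-3


ALTITUDE_M = 2000.0  # assumed altitude, for illustration only

swath = swath_width(ALTITUDE_M, 40.0)                       # FOV from the table
gsd_fine = ground_sample_distance(ALTITUDE_M, 0.125)        # finer IFOV setting
gsd_coarse = ground_sample_distance(ALTITUDE_M, 0.25)       # coarser IFOV setting

print(f"swath: {swath:.1f} m, GSD: {gsd_fine:.2f} m / {gsd_coarse:.2f} m")
```

Both quantities scale linearly with altitude, so the ratio of swath to ground sample distance depends only on the FOV and IFOV settings.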

| Item | Parameters |
|---|---|
| Detector scale | 2048 × 256, frame transfer |
| Pixel size | 16 μm × 16 μm |
| Number of output channels | 32 |
| Maximum pixel rate | 25 MHz |
| Full well charge | ≥200,000 e− |
| CCE | 8 μV/e− |
| QE | ≥61% @ 248 nm |
| Noise | ≤55 e− |
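The full-well and noise entries above bound what a single detector pixel can deliver. The following is a minimal sketch, assuming the noise entry is a signal-independent noise floor in electrons and that photon shot noise follows Poisson statistics. It uses the limiting values from the table (200,000 e− and 55 e−), so the results are bounds for one unbinned pixel and not measured system performance. The function names are hypothetical.

```python
import math

FULL_WELL_E = 200_000  # lower bound on full well charge, from the table (e-)
NOISE_FLOOR_E = 55     # upper bound on detector noise, from the table (e-)


def dynamic_range_db(full_well_e: float, noise_floor_e: float) -> float:
    """Dynamic range in dB: full well relative to the noise floor."""
    return 20.0 * math.log10(full_well_e / noise_floor_e)


def pixel_snr(signal_e: float, noise_floor_e: float) -> float:
    """SNR for a signal in electrons with shot noise plus a fixed noise floor.

    Shot-noise variance equals the signal (Poisson assumption); the two
    noise terms are added in quadrature.
    """
    return signal_e / math.sqrt(signal_e + noise_floor_e ** 2)


dr_db = dynamic_range_db(FULL_WELL_E, NOISE_FLOOR_E)
snr_at_full_well = pixel_snr(FULL_WELL_E, NOISE_FLOOR_E)

print(f"dynamic range: {dr_db:.1f} dB, SNR at full well: {snr_at_full_well:.0f}")
```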

| Subsystems | R1 | R2 | R3 | RA |
|---|---|---|---|---|
| Left | 1.89% | 0.29% | 5.01% | 5.36% |
| Middle | 2.37% | 0.28% | 5.01% | 5.55% |
| Right | 1.45% | 0.31% | 5.01% | 5.22% |
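The RA values tabulated above are numerically consistent with a root-sum-square combination of the R1, R2, and R3 columns. The text defining these quantities is not present here, so reading RA as a combined value of three independent components is an assumption. The sketch below only checks the arithmetic; the names `ERROR_BUDGET` and `root_sum_square` are hypothetical.

```python
import math

# Values in percent, copied from the table: (R1, R2, R3, tabulated RA)
ERROR_BUDGET = {
    "Left": (1.89, 0.29, 5.01, 5.36),
    "Middle": (2.37, 0.28, 5.01, 5.55),
    "Right": (1.45, 0.31, 5.01, 5.22),
}


def root_sum_square(components):
    """Combine independent components in quadrature."""
    return math.sqrt(sum(c * c for c in components))


combined = {}
for name, (r1, r2, r3, ra) in ERROR_BUDGET.items():
    combined[name] = root_sum_square((r1, r2, r3))
    print(f"{name}: RSS = {combined[name]:.2f}%, tabulated RA = {ra:.2f}%")
```

Under this reading, the R3 column dominates the combined value for all three subsystems, since the other two components contribute comparatively little in quadrature.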

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhang, D.; Yuan, L.; Wang, S.; Yu, H.; Zhang, C.; He, D.; Han, G.; Wang, J.; Wang, Y. Wide Swath and High Resolution Airborne HyperSpectral Imaging System and Flight Validation. Sensors 2019, 19, 1667. https://doi.org/10.3390/s19071667
