The Effectiveness of Depth Data in Liveness Face Authentication Using 3D Sensor Cameras

Abstract

1. Introduction

2. Background

Liveness Assurance/Detection

3. Face Recognition Datasets

- ZJU eyeblink dataset. This dataset is a video database of 80 AVI clips of 20 candidates, with four clips per subject: the first shows a frontal view of the candidate without glasses, the second a frontal view wearing thin-rim glasses, the third a frontal view wearing black-frame glasses, and the fourth an upward view without glasses. As a liveness indicator, all candidates blinked spontaneously at normal speed in all four clips. Each clip lasts about five seconds and contains between one and six blinks. The data were captured indoors without illumination control and contain 255 blinks in total.

- Print-attack replay dataset. This dataset consists of 200 video clips of 50 candidates recorded under different lighting conditions and 200 real-access videos of the same candidates. The data are split into four subsets (enrolment, training, development, and test data) for validating spoof detection, and each subset contains different candidates. High-resolution images of the candidates are used to generate the photo attacks.

- In-house dataset of the Sant Longowal Institute of Engineering and Technology (SLIET). This dataset was developed by Manminder Singh and A.S. Arora and uses eye blinking and lip and chin movements as liveness indicators. It contains 65 video clips of students at the institute. The video clips, and the photos of the students used to generate photo attacks, were captured under different lighting conditions. The dataset has two categories: the first contains videos of real candidates and the second contains photos of the candidates held up by an attacker.

- Replay-attack database. This dataset contains 1300 video clips of photo and video attacks on 50 candidates, captured under different lighting conditions. The data are split into four subsets (enrolment, training, development, and test data) for validating spoof detection, and each subset contains different candidates.

- NUAA dataset. A public face spoofing database containing recorded bona fide face videos of 15 candidates. Each candidate was recorded in three sessions, each consisting of 500 samples. The dataset also includes high-resolution photos of the candidates printed on A4 paper.

- CASIA face-anti-spoofing database. This dataset contains video recordings of genuine and fake faces of 50 candidates. Genuine faces were captured from real users, while fake faces were captured from high-resolution recordings. Three main spoof attacks were designed for each candidate: warped photo attacks, photo masks, and video attacks. Facial motions and eye blinking were performed to create an illusion of liveness. Data were collected at three resolutions: low, normal, and high.

- The 3D mask attack database (3DMAD). This is a public 3D mask database containing 76,500 frames of 17 candidates, captured with both depth and colour information: each frame contains the depth image, the RGB image, and the eye positions of the candidate. The data were collected in three sessions for all candidates, and the videos were recorded under controlled conditions. Sessions one and two contain genuine access attempts by the candidates, while the third session contains an attacker performing 3D mask attacks. The dataset also includes the face photos used to generate the masks, as well as paper-cut masks.

- Antispoofing dataset created by Patrick P. K. Chan. This dataset consists of 50 candidates (42 male and eight female) aged 18 to 21. Two images were taken of each candidate, one with a flash and one without, a few seconds apart, under different lighting conditions and at various distances. Five types of 2D spoof attacks are included: paper photo attack, iPad photo attack, video attack, 2D mask attack, and curved mask attack. A hot object was placed on top of the spoof papers to raise their temperature and create an illusion of liveness.

- MSU mobile face spoofing database (MSU MFSD). This dataset contains 280 videos of 35 candidates and is divided into training and testing subsets. The spoof attacks are photo and video attacks: printed attacks, and replay attacks generated with a mobile phone and a tablet.

- Replay-mobile database. This dataset consists of 1030 videos of 40 candidates. The spoof attacks comprise print photo attacks, tablet photo attacks, and mobile video attacks. The dataset has three subsets: training, development, and testing.

- IIIT-D. This public dataset consists of 4603 RGB-D images of 106 candidates, collected under normal lighting conditions with few pose and expression variations.

- BUAA Lock3DFace. This public dataset includes 5711 RGB-D video sequences of 509 candidates, recorded with variations in pose, expression, occlusion, and time.

- Dataset created by Jiyun Cui. Jiyun Cui et al. [14] created a dataset using RealSense II. It includes about 845K RGB-D images of 747 candidates with various poses and a few lighting conditions, and the candidates are randomly divided into training and testing sets.

- Silicone mask attack database (SMAD). This dataset, presented in [15], consists of 27,897 frames of video spoof attacks using vivid silicone masks with holes for the eyes and mouth. Some masks have hair, moustaches, and beards to make them look more life-like. The database comprises 130 videos: 65 genuine samples and 65 masked samples. It also includes image samples of real faces and fake silicone faces.

- Oulu-NPU database. This database contains 4950 bona fide and artefact face videos of 55 candidates. The bona fide samples were recorded under different lighting conditions with the front cameras of six mobile devices (Samsung Galaxy S6 edge, HTC Desire EYE, MEIZU X5, ASUS Zenfone Selfie, Sony XPERIA C5 Ultra Dual, and OPPO N3), while the artefact samples were captured using various types of presentation attack instruments (PAIs), including two different printers and a display screen.

- Yale-Recaptured Database. This database is a recaptured and extended version of the Yale Face Database B and consists of 16,128 frames of 28 candidates. Each candidate was photographed in 9 poses under 64 lighting conditions (28 × 9 × 64 = 16,128 frames).

- MS-Face Database. This is a multispectral face artefact database of images collected from 21 candidates under different imaging settings. For the bona fide data, each candidate was captured under five different conditions. The spoof attacks were created by presenting the three best-quality printed images in the visible spectrum under three illumination settings: natural light, ambient lighting, and two spotlights.

- Florence 2D/3D face dataset. This dataset was designed to replicate genuine surveillance conditions. It contains 53 candidates with high-resolution 3D scans and video clips at different resolutions and zoom levels. Each candidate was recorded in three conditions: video clips in a controlled setting, an indoor setting with minimal constraints, and an outdoor setting without constraints. The dataset was not designed for spoofing research, so it includes neither liveness indicators nor spoof attacks.
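Several of the datasets above (for example the print-attack and replay-attack databases) keep the candidates in each subset separate, so that a detector is never evaluated on people it saw during training. A minimal Python sketch of such a subject-disjoint split follows. The `(subject_id, clip_name)` sample format, the function name `subject_disjoint_split`, and the split fractions are hypothetical illustrations, not the official protocol files of any of these databases.

```python
import random
from collections import defaultdict


def subject_disjoint_split(samples, fractions, seed=0):
    """Split (subject_id, clip_name) pairs so that no subject appears in two subsets.

    samples   -- iterable of (subject_id, clip_name) tuples (hypothetical format)
    fractions -- mapping of subset name -> fraction of subjects (insertion order kept)
    """
    # Group clips by subject so that all clips of one person stay together.
    by_subject = defaultdict(list)
    for subject, clip in samples:
        by_subject[subject].append(clip)

    # Shuffle subjects (not individual clips) with a fixed seed for reproducibility.
    subjects = sorted(by_subject)
    random.Random(seed).shuffle(subjects)

    splits, start = {}, 0
    names = list(fractions)
    for i, name in enumerate(names):
        # The last subset takes all remaining subjects, absorbing rounding error.
        if i == len(names) - 1:
            end = len(subjects)
        else:
            end = start + round(fractions[name] * len(subjects))
        splits[name] = [(s, c) for s in subjects[start:end] for c in by_subject[s]]
        start = end
    return splits


# Hypothetical example: 50 candidates with 4 clips each.
data = [(f"subject{i:02d}", f"clip{j}") for i in range(50) for j in range(4)]
parts = subject_disjoint_split(
    data, {"enrolment": 0.1, "training": 0.3, "development": 0.3, "test": 0.3}
)
for name, items in parts.items():
    print(name, len({s for s, _ in items}), "subjects,", len(items), "clips")
```

With 50 candidates and these illustrative fractions, the subsets receive 5, 15, 15 and 15 candidates. The key design choice is shuffling subjects rather than clips: shuffling clips would scatter one person's videos across subsets and let identity cues leak from training into testing.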

4. Related Work

5. Proof-Of-Concept Study

5.1. Method

Authentication System Using Kinect Sensor

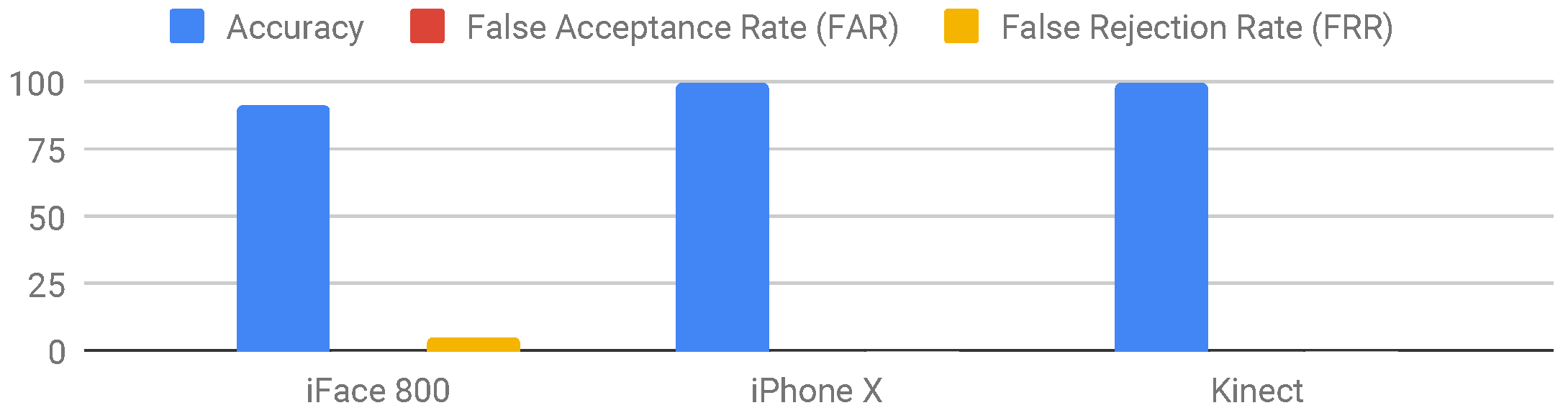

5.2. Results

6. Improvement Suggestions for Real Depth Liveness Authentication

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Albakri, G.; AlGhowinem, S. Investigating Spoofing Attacks for 3D Cameras Used in Face Biometrics. In Intelligent Systems and Applications; Arai, K., Kapoor, S., Bhatia, R., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 907–923.
2. Hasan, T.; Hansen, J.H. A study on universal background model training in speaker verification. IEEE Trans. Audio Speech Lang. Process. 2011, 19, 1890–1899.
3. Povey, D.; Chu, S.M.; Varadarajan, B. Universal background model based speech recognition. In Proceedings of the 2008 IEEE International Conference on Acoustics, Speech and Signal Processing, Las Vegas, NV, USA, 31 March–4 April 2008; pp. 4561–4564.
4. Reynolds, D. Universal background models. In Encyclopedia of Biometrics; Springer: Boston, MA, USA, 2009; pp. 1349–1352.
5. Lasko, T.A.; Bhagwat, J.G.; Zou, K.H. The use of receiver operating characteristic curves in biomedical informatics. J. Biomed. Inform. 2005, 38, 404–415.
6. Zou, K.H.; Hall, W.J. Two transformation models for estimating an ROC curve derived from continuous data. J. Appl. Stat. 2000, 27, 621–631.
7. Sahoo, S.K.; Choubisa, T.; Prasanna, S.M. Multimodal biometric person authentication: A review. IETE Tech. Rev. 2012, 29, 54–75.
8. Li, X.; Chen, J.; Zhao, G.; Pietikäinen, M. Remote heart rate measurement from face videos under realistic situations. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 4264–4271.
9. Li, X.; Komulainen, J.; Zhao, G.; Yuen, P.C.; Pietikäinen, M. Generalized face anti-spoofing by detecting pulse from face videos. In Proceedings of the 2016 23rd International Conference on Pattern Recognition, Cancun, Mexico, 4–8 December 2016; pp. 4244–4249.
10. Galbally, J.; Marcel, S.; Fierrez, J. Image Quality Assessment for Fake Biometric Detection: Application to Iris, Fingerprint, and Face Recognition. IEEE Trans. Image Process. 2014, 23, 710–724.
11. Boulkenafet, Z.; Komulainen, J.; Hadid, A. Face anti-spoofing based on color texture analysis. In Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 27–30 September 2015.
12. Mhou, K.; Van Der Haar, D.; Leung, W.S. Face spoof detection using light reflection in moderate to low lighting. In Proceedings of the 2017 2nd Asia-Pacific Conference on Intelligent Robot Systems, ACIRS 2017, Wuhan, China, 16–18 June 2017; pp. 47–52.
13. Kittler, J.; Hilton, A.; Hamouz, M.; Illingworth, J. 3D Assisted Face Recognition: A Survey of 3D Imaging, Modelling and Recognition Approaches. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR, San Diego, CA, USA, 21–23 September 2005; pp. 114–120.
14. Cui, J.; Zhang, H.; Han, H.; Shan, S.; Chen, X. Improving 2D face recognition via discriminative face depth estimation. In Proceedings of the 2018 International Conference on Biometrics (ICB), Gold Coast, Australia, 20–23 February 2018; pp. 1–8.
15. Shao, R.; Lan, X.; Yuen, P.C. Joint Discriminative Learning of Deep Dynamic Textures for 3D Mask Face Anti-Spoofing. IEEE Trans. Inf. Forensics Secur. 2019, 14, 923–938.
16. Boulkenafet, Z.; Akhtar, Z.; Feng, X.; Hadid, A. Face anti-spoofing in biometric systems. In Biometric Security and Privacy; Springer: Berlin, Germany, 2017; pp. 299–321.
17. Hadid, A. Face biometrics under spoofing attacks: Vulnerabilities, countermeasures, open issues, and research directions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Columbus, OH, USA, 23–28 June 2014; pp. 113–118.
18. Killioǧlu, M.; Taşkiran, M.; Kahraman, N. Anti-spoofing in face recognition with liveness detection using pupil tracking. In Proceedings of the SAMI 2017–IEEE 15th International Symposium on Applied Machine Intelligence and Informatics, Herl’any, Slovakia, 26–28 January 2017; pp. 87–92.
19. Wasnik, P.; Raja, K.B.; Raghavendra, R.; Busch, C. Presentation Attack Detection in Face Biometric Systems Using Raw Sensor Data from Smartphones. In Proceedings of the 2016 12th International Conference on Signal-Image Technology and Internet-Based Systems (SITIS), Naples, Italy, 28 November–1 December 2016; pp. 104–111.
20. Pravallika, P.; Prasad, K.S. SVM classification for fake biometric detection using image quality assessment: Application to iris, face and palm print. In Proceedings of the 2016 International Conference on Inventive Computation Technologies (ICICT), Coimbatore, India, 26–27 August 2016; pp. 1–6.
21. Patil, A.A.; Dhole, S.A. Image Quality (IQ) based liveness detection system for multi-biometric detection. In Proceedings of the 2016 International Conference on Inventive Computation Technologies (ICICT), Coimbatore, India, 26–27 August 2016; pp. 1–5.
22. Kim, Y.; Yoo, J.H.H.; Choi, K. A motion and similarity-based fake detection method for biometric face recognition systems. IEEE Trans. Consum. Electron. 2011, 57, 756–762.
23. Wang, T.; Yang, J.; Lei, Z.; Liao, S.; Li, S.Z. Face liveness detection using 3D structure recovered from a single camera. In Proceedings of the 2013 International Conference on Biometrics, ICB 2013, Madrid, Spain, 4–7 June 2013.
24. Yan, J.; Zhang, Z.; Lei, Z.; Yi, D.; Li, S.Z. Face liveness detection by exploring multiple scenic clues. In Proceedings of the 2012 12th International Conference on Control, Automation, Robotics and Vision, ICARCV 2012, Guangzhou, China, 5–7 December 2012; pp. 188–193.
25. Kollreider, K.; Fronthaler, H.; Bigun, J. Evaluating liveness by face images and the structure tensor. In Proceedings of the Fourth IEEE Workshop on Automatic Identification Advanced Technologies, AUTO ID 2005, Buffalo, NY, USA, 17–18 October 2005; pp. 75–80.
26. Nguyen, H.P.; Retraint, F.; Morain-Nicolier, F.; Delahaies, A. Face spoofing attack detection based on the behavior of noises. In Proceedings of the 2016 IEEE Global Conference on Signal and Information Processing (GlobalSIP), Washington, DC, USA, 7–9 December 2016; pp. 119–123.
27. Karthik, K.; Katika, B.R. Image quality assessment based outlier detection for face anti-spoofing. In Proceedings of the 2017 2nd International Conference on Communication Systems, Computing and IT Applications (CSCITA), Mumbai, India, 7–8 April 2017; pp. 72–77.
28. Lakshminarayana, N.N.; Narayan, N.; Napp, N.; Setlur, S.; Govindaraju, V. A discriminative spatio-temporal mapping of face for liveness detection. In Proceedings of the 2017 IEEE International Conference on Identity, Security and Behavior Analysis, ISBA 2017, New Delhi, India, 22–24 February 2017.
29. Tirunagari, S.; Poh, N.; Windridge, D.; Iorliam, A.; Suki, N.; Ho, A.T. Detection of face spoofing using visual dynamics. IEEE Trans. Inf. Forensics Secur. 2015, 10, 762–777.
30. Menotti, D.; Chiachia, G.; Pinto, A.; Schwartz, W.R.; Pedrini, H.; Falcão, A.X.; Rocha, A. Deep Representations for Iris, Face, and Fingerprint Spoofing Detection. IEEE Trans. Inf. Forensics Secur. 2015, 10, 864–879.
31. Arashloo, S.R.; Kittler, J.; Christmas, W. Face Spoofing Detection Based on Multiple Descriptor Fusion Using Multiscale Dynamic Binarized Statistical Image Features. IEEE Trans. Inf. Forensics Secur. 2015, 10, 2396–2407.
32. Boulkenafet, Z.; Komulainen, J.; Hadid, A. Face Spoofing Detection Using Colour Texture Analysis. IEEE Trans. Inf. Forensics Secur. 2016, 11, 1818–1830.
33. Aziz, A.Z.A.; Wei, H.; Ferryman, J. Face anti-spoofing countermeasure: Efficient 2D materials classification using polarization imaging. In Proceedings of the 2017 5th International Workshop on Biometrics and Forensics, IWBF 2017, Coventry, UK, 4–5 April 2017.
34. Wen, D.; Han, H.; Jain, A.K. Face Spoof Detection With Image Distortion Analysis. IEEE Trans. Inf. Forensics Secur. 2015, 10, 746–761.
35. Raghavendra, R.; Raja, K.B.; Venkatesh, S.; Busch, C. Face Presentation Attack Detection by Exploring Spectral Signatures. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 672–679.
36. Benlamoudi, A.; Samai, D.; Ouafi, A.; Bekhouche, S.E.; Taleb-Ahmed, A.; Hadid, A. Face spoofing detection using local binary patterns and Fisher Score. In Proceedings of the 3rd International Conference on Control, Engineering and Information Technology, CEIT 2015, Tlemcen, Algeria, 25–27 May 2015.
37. Mohan, K.; Chandrasekhar, P.; Jilani, S.A.K. A combined HOG-LPQ with Fuz-SVM classifier for Object face Liveness Detection. In Proceedings of the 2017 International Conference on I-SMAC (IoT in Social, Mobile, Analytics and Cloud) (I-SMAC), Palladam, India, 10–11 February 2017; pp. 531–537.
38. Galbally, J.; Satta, R.; Gemo, M.; Beslay, L. Biometric Spoofing: A JRC Case Study in 3D Face Recognition. Available online: https://pdfs.semanticscholar.org/82be/2bc56b61a66f7ea7c9b93042958008abf24c.pdf (accessed on 4 June 2018).
39. Chan, P.P.; Liu, W.; Chen, D.; Yeung, D.S.; Zhang, F.; Wang, X.; Hsu, C.C. Face Liveness Detection Using a Flash against 2D Spoofing Attack. IEEE Trans. Inf. Forensics Secur. 2018, 13, 521–534.
40. Singh, M.; Arora, A. A Novel Face Liveness Detection Algorithm with Multiple Liveness Indicators. Wirel. Pers. Commun. 2018, 100, 1677–1687.
41. Di Martino, J.M.; Qiu, Q.; Nagenalli, T.; Sapiro, G. Liveness Detection Using Implicit 3D Features. arXiv 2018, arXiv:1804.06702.
42. Rehman, Y.A.U.; Po, L.M.; Liu, M. LiveNet: Improving features generalization for face liveness detection using convolution neural networks. Expert Syst. Appl. 2018, 108, 159–169.
43. Peng, F.; Qin, L.; Long, M. Face presentation attack detection using guided scale texture. Multimed. Tools Appl. 2017, 77, 8883–8909.
44. Alotaibi, A.; Mahmood, A. Deep face liveness detection based on nonlinear diffusion using convolution neural network. Signal Image Video Process. 2017, 11, 713–720.
45. Lai, C.; Tai, C. A Smart Spoofing Face Detector by Display Features Analysis. Sensors 2016, 16, 1136.
46. About—OpenCV Library. Available online: https://opencv.org/about/ (accessed on 1 December 2018).
47. Haneda Airport to Launch Facial Recognition Gates for Japanese Nationals. Available online: https://www.japantimes.co.jp/news/2017/10/14/national/haneda-airport-to-launch-facial-recognition-gates-for-japanese-nationals/ (accessed on 1 December 2018).
48. Davidson, L. Face-Scanning Technology at Orlando Airport Expands to All International Travelers. Available online: https://www.mediapost.com/publications/article/321222/face-scanning-at-orlando-airport-expands-to-all-in.html (accessed on 1 December 2018).
49. Carbone, C. Tokyo 2020 Olympics to Use Facial Recognition in Security First. Available online: https://www.foxnews.com/tech/tokyo-2020-olympics-to-use-facial-recognition-in-security-first (accessed on 1 December 2018).
50. Ashtekar, B. Face Recognition in Videos. Ph.D. Thesis, University of Mumbai, Maharashtra, India, 2010.
51. Oro, D.; Fernandez, C.; Saeta, J.R.; Martorell, X.; Hernando, J. Real-time GPU-based face detection in HD video sequences. In Proceedings of the 2011 IEEE International Conference on Computer Vision Workshops (ICCV Workshops), Barcelona, Spain, 6–13 November 2011.

| Dataset Name | Dataset Size | Dataset File | Spoofing Cases | Liveness Features |
|---|---|---|---|---|
| ZJU Eyeblink | 80 video clips of 20 candidates | Video clips | 4 clips per subject: a frontal view without glasses, a frontal view wearing thin-rim glasses, a frontal view wearing black-frame glasses, and an upward view without glasses | Eye blinking |
| Print-Attack Replay | 200 video clips of 50 clients | Video clips, 2D images | Video attacks of clients, 2D printed-photo attacks of clients | Real-access attempt videos |
| SLIET | 65 video clips | Video clips, 2D images | Videos of authentic users, pictures of valid users held by an attacker | Eye blink, lip and chin movement |
| Replay-Attack Database | 1300 photo and video attack attempts of 50 clients | Video clips, 2D images | Video attacks of clients under different lighting, 2D printed-photo attacks of clients under different lighting, on-screen attacks | 25 general image quality measures; context-based correlation between face motion and background motion |
| NUAA | 12,614 images of live and photographed faces | 2D images | 2D printed photos | Face texture using a Lambertian model; eye blink detection using Conditional Random Fields |
| CASIA | 50 subjects | Video recordings of genuine and fake faces | Warped photo attacks, cut photo attacks, video attacks | Facial motion and eye blinking |
| 3DMAD | 76,500 frames of 17 persons | Depth images, RGB images | Video attacks, 3D mask attacks | Eye position |
| Patrick Chan | 50 subjects, 600 videos | 2D images | Paper photo attack, iPad photo attack, video attack, 2D mask attack, curved mask attack | Temperature |
| MSU MFSD | 280 video clips of photo and video attack attempts on 35 clients | Video clips | Printed attacks, replay attacks generated with a mobile phone and a tablet | Image-distortion-based quality measures |
| Replay-mobile database | 1030 videos of 40 subjects | Video clips | Print photo attack, tablet photo attack, mobile video attack | Image resolution |
| IIIT-D | 4603 RGB-D images of 106 subjects | RGB-D images | Flat images | Pose and expression variation |
| BUAA Lock3DFace | 5711 RGB-D video sequences of 509 subjects | RGB-D videos | Video attacks | Face2D liveness detection |
| Jiyun Cui | RGB-D images of 747 subjects | RGB-D images | Flat images | 2D face recognition |
| SMAD | 130 videos | Video clips | Vivid silicone masks | Dynamic texture for 3D masks |
| Oulu-NPU | 4950 bona fide and artefact face videos of 55 subjects | Video clips, 2D images | Print photo, replay video | Image resolution |
| Yale-Recaptured | 28 subjects | Images | LCD screen | Different resolutions |
| MS-Face | 21 subjects | Images | Printed images | Colour |
| Florence 2D/3D Face | 53 subjects | 3D scans of human faces, videos and images | No spoof attack | N/A |

| Ref | Biometrics | Spoof Attack | Anti-Spoofing (Hardware) | Anti-Spoofing (Software) |
|---|---|---|---|---|
| [18] | Face biometrics | Shaking a regular photograph in front of the camera; real-time video on a mobile phone | Basic hardware equipment | Pupil tracking |
| [10] | Iris, fingerprint, face recognition | Synthetic iris samples; a database of fake fingerprints; a database of short videos of face images | - | Image quality assessment |
| [9] | Face biometrics | 3D mask, print and video | Pulse detection from facial videos | Analysis of colour changes corresponding to heart rate |
| [12] | Face biometrics | Two cameras: one capturing an infrared image and another capturing a normal image | Light reflection (infrared LED) | - |
| [19] | Face biometrics | Images presented on a smartphone screen | - | Raw sensor data |
| [20] | Iris, face, palm images | Fusion technique | - | Image quality assessment |
| [21] | Fingerprint, face | Fake Fevicol fingerprint; mobile images at both high and low resolution; photo print image | - | Image quality assessment |
| [22] | Face biometrics | A regular live video, printed pictures, wearing a mask made of printed pictures, pictures on an LCD monitor, video displayed on mobile devices | - | Similarity measured using the structural similarity index measure (SSIM) and a background motion index (BMI) |
| [23] | Face biometrics | Genuine photo, planar photo, photo warped horizontally and photo warped vertically | - | Analysing 3D structure |
| [24] | Face biometrics | Face images printed on A4 paper | - | Non-rigid motion, face-background consistency, banding effect |
| [25] | Face biometrics | Imitating high-resolution photographs in motion | - | Optical flow of lines |
| [26] | Face biometrics | Fake face images taken from faces printed on paper or displayed on an LCD screen | - | Noise local variance model |
| [27] | Face biometrics | 2D printed photograph presented either warped or unwarped | - | Image quality assessment |
| [28] | Face biometrics | Warped photo attack, cut photo attack, video attack | - | Spectral analysis |
| [29] | Face biometrics | Print and replay attacks from live (valid) videos containing an authentic face | - | Dynamic mode decomposition |
| [30] | Iris, face, fingerprint | Printed images | - | Hyper-parameter optimisation of network architectures (architecture optimisation) |
| [31] | Face biometrics | Spoofing attacks from the CASIA, Replay-Attack, and NUAA databases | - | Multiscale Dynamic Binarized Statistical Image Features |
| [32] | Face biometrics | Printed faces, video displays or masks | - | Colour texture analysis |
| [33] | Face biometrics | A set of polarised images and printed paper masks | Polarised light | |
| [34] | Face biometrics | Image displayed on iPad, image displayed on iPhone, and printed photo | - | Image distortion analysis |
| [35] | Face biometrics | Extended Multispectral Presentation Attack Face Database (EMSPAD) | - | Analysing the spectral signature |
| [36] | Face biometrics | Warped photo attack, cut photo attack and video attack | - | Fisher score |
| [37] | Face biometrics | 2D photographs, video attacks and masks | - | HOG-LPQ and Fuz-SVM classifier |
| [38] | Face biometrics | 3D printed masks | ArtecID 3D Face Log On System | |
| [39] | Face biometrics | A4 paper photo attack, iPad photo attack, video attack, mask attack, and curved mask attack | Flash light | Light illumination detection |
| [40] | Face biometrics | Video and photo attacks | - | Eye blink, lip movement, chin movement, and background changes |
| [41] | Face biometrics | Different environments and lighting conditions | Flash light | Computing 3D features |
| [42] | Face biometrics | Video attacks | - | Deep CNNs |
| [14] | Face biometrics | 2D images | - | Depth estimation |
| [43] | Face biometrics | 2D images, video clip attacks | - | Guided scale space to reduce noise |
| [16] | Face biometrics | Video attacks | - | Colour texture analysis |
| [15] | Face biometrics | 3D mask attacks | - | Deep dynamic texture |
| [44] | Face biometrics | 2D images, video clip attacks | - | Nonlinear diffusion |
| [45] | Face biometrics | 2D images, iPad image display attacks | - | Display characteristics and a probabilistic neural network |

| Spoof Attack | Material |
|---|---|
| Real face | Real face wearing scarf (baseline) |
| Real face | Real face without scarf |
| Real face | Real face wearing glasses (occlusion) |
| Face displayed on device | iPhone 6s screen, Huawei tablet, Lenovo laptop |
| Face printed on A4 image paper | Matte, glossy |
| Printed face mask (bent and worn on the face) | Matte, glossy |
| Video attack (face moving and eyes blinking) | iPhone 6s screen, Huawei tablet, Lenovo laptop |

| | iPhone 6s | Huawei Tablet | Lenovo Laptop |
|---|---|---|---|
| Type | LED-backlit IPS LCD, capacitive touchscreen, 16M colours | IPS LCD, capacitive touchscreen, 16M colours | HD LED TN glare flat eDP |
| Size | 4.7 inches | 7.0 inches | 14 inches |
| Resolution | 750 × 1334 pixels | 600 × 1024 pixels | 1366 × 768 pixels |
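The device table gives each screen's resolution and diagonal size but not its pixel density, which is one property that may influence how visible display artefacts are in a replayed face. As a sketch, density in pixels per inch (ppi) can be derived as the diagonal length in pixels divided by the diagonal size in inches. This assumes square pixels and that the listed sizes are the exact screen diagonals; the function name `pixels_per_inch` is illustrative only.

```python
import math

# (width px, height px, diagonal inches) as listed in the device table.
DEVICES = {
    "iPhone 6s": (750, 1334, 4.7),
    "Huawei Tablet": (600, 1024, 7.0),
    "Lenovo Laptop": (1366, 768, 14.0),
}


def pixels_per_inch(width_px, height_px, diagonal_in):
    """Pixel density: diagonal length in pixels divided by diagonal length in inches."""
    return math.hypot(width_px, height_px) / diagonal_in


for name, (w, h, d) in DEVICES.items():
    print(f"{name}: {pixels_per_inch(w, h, d):.0f} ppi")
```

Under these assumptions the three screens come out at roughly 326, 170 and 112 ppi, so the phone screen packs about three times as many pixels per inch as the laptop; the iPhone 6s figure agrees with Apple's published 326 ppi.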

| Spoof Attack | Material | Ground Truth * | Result: iFace 800 | Result: iPhone X | Result: Kinect |
|---|---|---|---|---|---|
| Real face | Real face with scarf | Accept | Accept | Accept | Accept |
| Real face | Real face wearing glasses | Accept | Reject | Accept | Accept |
| Real face | Real face without scarf | Accept | Reject | Accept | Accept |
| Face displayed on device | iPhone 6s screen | Reject | Reject | Reject | Reject |
| Face displayed on device | Huawei tablet | Reject | Reject | Reject | Reject |
| Face displayed on device | Lenovo laptop | Reject | Reject | Reject | Reject |
| Printed face image | Matte | Reject | Reject | Reject | Reject |
| Printed face image | Glossy | Reject | Reject | Reject | Reject |
| Printed face mask (bent and worn on face) | Matte | Reject | Reject | Reject | Reject |
| Printed face mask (bent and worn on face) | Glossy | Reject | Reject | Reject | Reject |
| Video attack | iPhone 6s screen | Reject | Reject | Reject | Reject |
| Video attack | Huawei tablet | Reject | Reject | Reject | Reject |
| Video attack | Lenovo laptop | Reject | Reject | Reject | Reject |
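The results table reports individual accept/reject decisions rather than error rates. The sketch below summarises it with the two presentation attack detection metrics defined in ISO/IEC 30107-3: APCER (attack presentations wrongly accepted) and BPCER (bona fide presentations wrongly rejected). Two simplifications are assumed: APCER is pooled over all attack types instead of being reported per attack instrument as the standard prescribes, and each table row counts as a single presentation. With only 3 bona fide and 10 attack rows, the figures are illustrative rather than statistically meaningful.

```python
# Rows transcribed from the results table:
# (material, ground truth, iFace 800, iPhone X, Kinect)
ROWS = [
    ("Real face with scarf", "Accept", "Accept", "Accept", "Accept"),
    ("Real face wearing glasses", "Accept", "Reject", "Accept", "Accept"),
    ("Real face without scarf", "Accept", "Reject", "Accept", "Accept"),
    ("Displayed on iPhone 6s screen", "Reject", "Reject", "Reject", "Reject"),
    ("Displayed on Huawei tablet", "Reject", "Reject", "Reject", "Reject"),
    ("Displayed on Lenovo laptop", "Reject", "Reject", "Reject", "Reject"),
    ("Printed image, matte", "Reject", "Reject", "Reject", "Reject"),
    ("Printed image, glossy", "Reject", "Reject", "Reject", "Reject"),
    ("Printed mask, matte", "Reject", "Reject", "Reject", "Reject"),
    ("Printed mask, glossy", "Reject", "Reject", "Reject", "Reject"),
    ("Video on iPhone 6s screen", "Reject", "Reject", "Reject", "Reject"),
    ("Video on Huawei tablet", "Reject", "Reject", "Reject", "Reject"),
    ("Video on Lenovo laptop", "Reject", "Reject", "Reject", "Reject"),
]
SYSTEMS = ("iFace 800", "iPhone X", "Kinect")


def error_rates(rows, col):
    """Return (APCER, BPCER) for the system whose decisions are in column `col`."""
    bona_fide = [r for r in rows if r[1] == "Accept"]
    attacks = [r for r in rows if r[1] == "Reject"]
    # BPCER: share of bona fide presentations the system rejected.
    bpcer = sum(r[col] == "Reject" for r in bona_fide) / len(bona_fide)
    # APCER (pooled over attack types): share of attack presentations the system accepted.
    apcer = sum(r[col] == "Accept" for r in attacks) / len(attacks)
    return apcer, bpcer


for col, name in enumerate(SYSTEMS, start=2):
    apcer, bpcer = error_rates(ROWS, col)
    print(f"{name}: APCER = {apcer:.2f}, BPCER = {bpcer:.2f}")
```

Applied to the table, this gives APCER = 0.00 for all three systems, BPCER = 0.67 for the iFace 800 (two of its three bona fide presentations were rejected), and BPCER = 0.00 for the iPhone X and the Kinect. In other words, every attack in this small test was stopped, and the only errors were the iFace 800 rejecting a genuine user wearing glasses or not wearing a scarf.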

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Albakri, G.; Alghowinem, S. The Effectiveness of Depth Data in Liveness Face Authentication Using 3D Sensor Cameras. Sensors 2019, 19, 1928. https://doi.org/10.3390/s19081928
