A New Integrated System for Assistance in Communicating with and Telemonitoring Severely Disabled Patients

Abstract

1. Introduction

2. Materials and Methods

2.1. Description of Assistive System Hardware

2.1.1. Communication Module

- Switch-type sensors are for patients who can perform controlled muscular contractions. These sensors are connected to the laptop via USB and are chosen to suit the physical condition of the patient. Examples of the switch-type sensors used by this system are illustrated in Figure 2: hand-controlled sensors, including a hand switch-click (Figure 2a), a pal pad flat switch (Figure 2b), a ribbon switch (Figure 2c), and a wobble switch (Figure 2d); foot-controlled sensors, such as a foot switch (Figure 2e); and a sip/puff breeze switch with headset (Figure 2f), the leading sip/puff system for individuals with motor disabilities and limited dexterity.

- The eye-tracking-based interface is for fully immobilized patients who cannot perform any controlled movement except eyeball movement and who are unable to communicate verbally (Figure 3).
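For switch-type sensors, the evaluation questionnaire mentions a configurable "frequency of highlighting the ideograms/keywords", which points to a scanning interface: keywords are highlighted one after another, and a single switch activation selects the one currently highlighted. The sketch below illustrates that idea under stated assumptions; the class `ScanState`, the fixed `scan_period_s`, and the sample keywords are hypothetical and are not taken from the system's implementation.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Sequence


@dataclass
class ScanState:
    """Hypothetical single-switch scanner that highlights keywords in turn."""

    keywords: Sequence[str]
    scan_period_s: float       # assumed: how long each keyword stays highlighted
    start_time_s: float = 0.0  # moment the scan started

    def __post_init__(self) -> None:
        if not self.keywords:
            raise ValueError("need at least one keyword")
        if self.scan_period_s <= 0:
            raise ValueError("scan period must be positive")

    def highlighted_index(self, now_s: float) -> int:
        """Index of the keyword highlighted at now_s; the scan wraps around the list."""
        elapsed = max(0.0, now_s - self.start_time_s)
        return int(elapsed // self.scan_period_s) % len(self.keywords)

    def on_switch_press(self, now_s: float) -> str:
        """A switch activation selects whichever keyword is highlighted at that moment."""
        return self.keywords[self.highlighted_index(now_s)]


if __name__ == "__main__":
    # Hypothetical keywords; the third one is highlighted from 3.0 s to 4.5 s.
    scanner = ScanState(["Water", "Pain", "Turn me", "Call nurse"], scan_period_s=1.5)
    print(scanner.on_switch_press(3.2))
```

A longer `scan_period_s` gives slower patients more time to react, at the cost of a longer wait for keywords near the end of the list.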

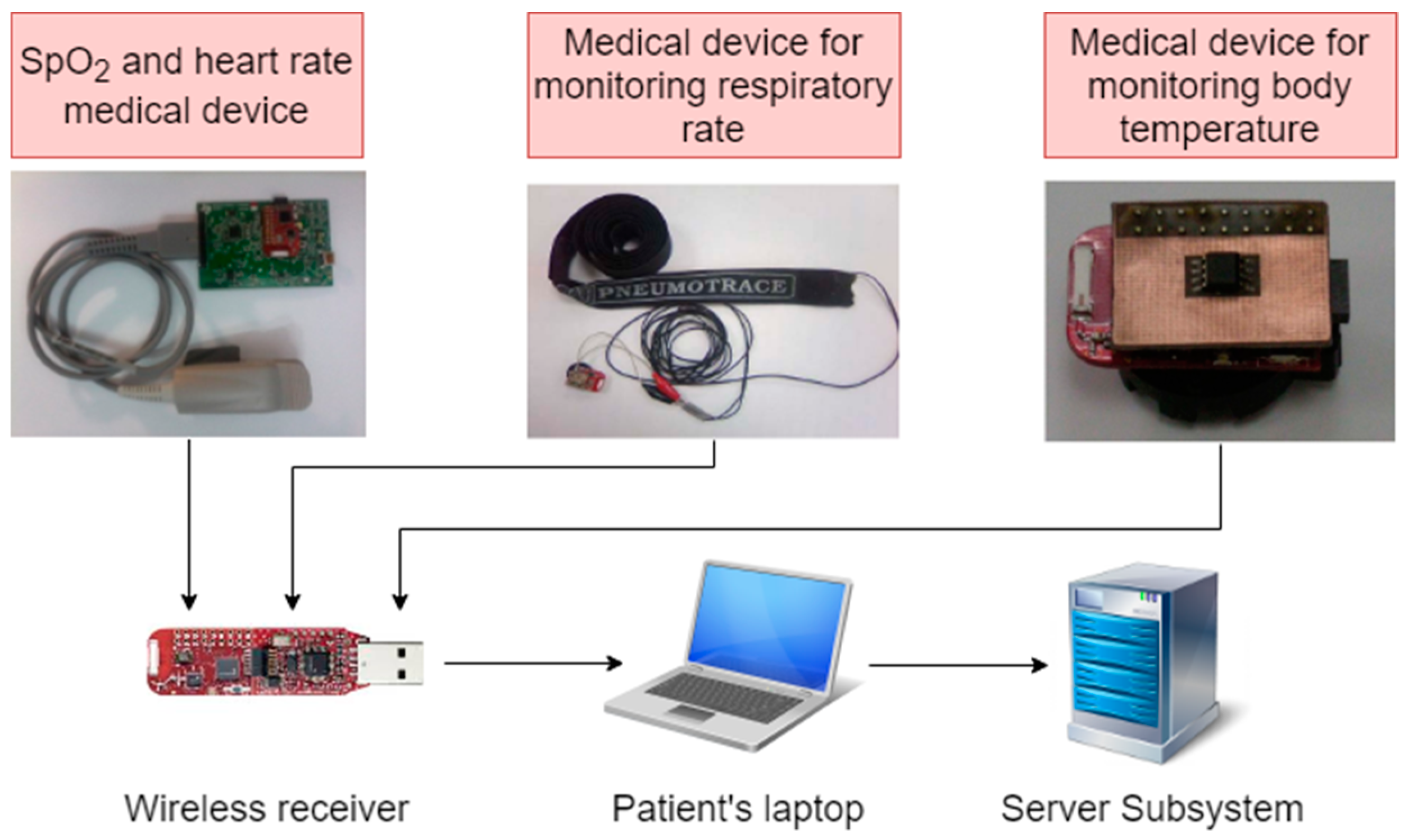

2.1.2. Telemonitoring Module

2.2. Description of Assistive System Software

2.2.1. Software Component of Patient Subsystem

- cursor movement on the user screen following the user's gaze direction, used only for visual inspection of the PWA objects; and

- ideogram/keyword selection by simulating a mouse click when the gaze-controlled cursor is placed in the selection area of the desired object on the screen.
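The second mode, selection by a simulated mouse click, can be realized with a dwell-time rule (dwell time is one of the configurable parameters named in the evaluation questionnaire): a click is emitted once the gaze-controlled cursor has stayed inside one object's selection area for a set time. This is a minimal sketch assuming rectangular selection areas and timestamped gaze samples; `Region`, `DwellSelector`, and the one-click-per-visit rule are illustrative assumptions, not the system's actual code.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Region:
    """Hypothetical rectangular selection area of an on-screen ideogram/keyword."""

    name: str
    x: float
    y: float
    width: float
    height: float

    def contains(self, px: float, py: float) -> bool:
        return self.x <= px < self.x + self.width and self.y <= py < self.y + self.height


class DwellSelector:
    """Emits a simulated click when the gaze cursor stays in one region for dwell_s seconds."""

    def __init__(self, regions: list[Region], dwell_s: float) -> None:
        self.regions = regions
        self.dwell_s = dwell_s
        self._current: Optional[Region] = None
        self._entered_at = 0.0
        self._fired = False

    def update(self, t: float, px: float, py: float) -> Optional[str]:
        """Feed one gaze sample (time in s, cursor position); return the selected name or None."""
        hit = next((r for r in self.regions if r.contains(px, py)), None)
        if hit is not self._current:
            # Cursor entered a different region (or left all regions): restart the dwell timer.
            self._current, self._entered_at, self._fired = hit, t, False
            return None
        if hit is not None and not self._fired and t - self._entered_at >= self.dwell_s:
            self._fired = True  # click only once per visit to avoid repeated selections
            return hit.name
        return None
```

Requiring the cursor to leave and re-enter a region before a second click is one way to prevent a long fixation from selecting the same keyword repeatedly.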

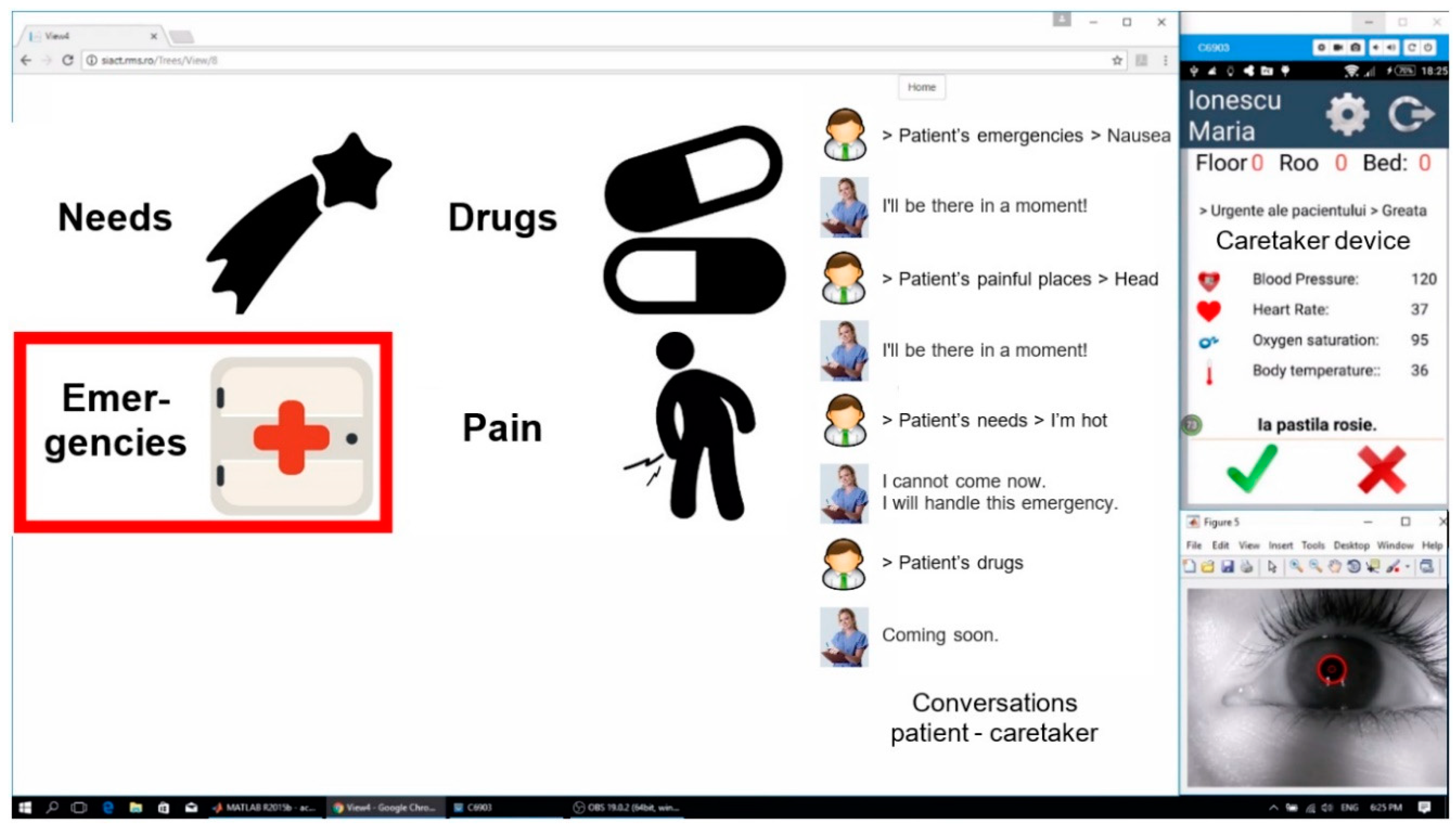

2.2.2. Software Component of Server Subsystem

2.2.3. Software Component of Caretaker Subsystem

- displays the keywords selected and transmitted by the patient in written or audio form;

- sends back the supervisor’s response to the patient;

- displays the values of the patient’s monitored physiological parameters;

- receives an alarm notification in audio and visual forms, triggered by the Server, when the patient's vital physiological parameters fall outside their normal ranges;

- displays the time evolution of the physiological parameters’ values monitored during treatment.
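The alarm behavior listed above amounts to comparing each incoming measurement with a normal range. A minimal sketch follows; the parameter names and the limits in `NORMAL_RANGES` are hypothetical placeholders, not the thresholds used by the Server, and real limits would have to be set clinically for each patient.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class NormalRange:
    low: float
    high: float


# Hypothetical limits for illustration only.
NORMAL_RANGES = {
    "heart_rate_bpm": NormalRange(50, 120),
    "spo2_percent": NormalRange(90, 100),
    "respiratory_rate_bpm": NormalRange(10, 25),
    "body_temperature_c": NormalRange(35.5, 38.0),
}


def check_alarms(sample: dict[str, float],
                 ranges: dict[str, NormalRange] = NORMAL_RANGES) -> list[tuple[str, str]]:
    """Return (parameter, "low"/"high") for every value outside its normal range."""
    alarms = []
    for name, value in sample.items():
        rng = ranges.get(name)
        if rng is None:
            continue  # no range configured for this parameter
        if value < rng.low:
            alarms.append((name, "low"))
        elif value > rng.high:
            alarms.append((name, "high"))
    return alarms
```

Each non-empty result would then be pushed to the caretaker as an audio and visual notification.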

- (a) Normal operation, when the caretaker reads and answers a notification alarm.

- (b) Special situation (1), when the caretaker reads the message but does not answer it.

- (c) Special situation (2), when the caretaker does not read the notification alarm.
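The three alarm-handling scenarios (a), (b), and (c) can be told apart from whether the caretaker read and answered the notification. The sketch assumes a hypothetical timeout `timeout_s` after which an unanswered alarm is treated as a special situation; the timeout value and the action the Server takes in each case are not specified here and are left out.

```python
from __future__ import annotations

from enum import Enum
from typing import Optional


class AlarmOutcome(Enum):
    NORMAL = "a"             # read and answered
    READ_NOT_ANSWERED = "b"  # special situation (1)
    NOT_READ = "c"           # special situation (2)
    PENDING = "pending"      # timeout not yet elapsed


def classify_alarm(sent_at: float, now: float, timeout_s: float,
                   read_at: Optional[float] = None,
                   answered_at: Optional[float] = None) -> AlarmOutcome:
    """Classify a notification from its timestamps (seconds); timeout_s is an assumption."""
    if answered_at is not None:
        return AlarmOutcome.NORMAL
    if now - sent_at < timeout_s:
        return AlarmOutcome.PENDING  # caretaker still has time to react
    if read_at is not None:
        return AlarmOutcome.READ_NOT_ANSWERED
    return AlarmOutcome.NOT_READ
```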

3. System Testing

3.1. System Testing in Laboratory Conditions

3.1.1. Testing the Communication Function

3.1.2. Testing the Telemonitoring Function

3.2. System Testing in Hospital

- Training the patient to use the system. Depending on the patient’s condition, the patient learning time varied between 10 and 20 min.

- Testing both functions of the system: communication using keywords technology and real-time telemonitoring of the patient’s physiological parameters. Depending on the patient’s experience and cooperation, this stage varied between 20 and 30 min.

3.2.1. Participants

3.2.2. Experimental Sessions

4. Results and Discussion

- Do you consider that the system responds to your needs for communication with the supervisor/others?

- How do you evaluate the operating modes of the system?

- How do you evaluate the two functions of the system (communication and telemonitoring of physiological parameters)?

- Do you think that the keywords displayed on the screen meet your needs?

- How do you evaluate the graphics used by the system?

- Do you consider the hierarchically organized database useful?

- How do you rate the selection of keywords/ideograms using the eye-tracking technique?

- How do you rate the selection of keywords/ideograms with the switch-type sensor?

- Do you think the ideograms that accompany the keywords are suggestive?

- Do you consider that you were able to communicate effectively with the supervisor during the tests?

- Do you consider the telemonitoring of the physiological parameters useful?

- Do you think the system is flexible enough to quickly reconfigure to your needs (the frequency of highlighting the ideograms/keywords, modifying dwell time, changing databases to enter or remove keywords, changing icons or sounds that accompany keyword displaying)?

- Do you consider the system tested to be useful in the hospital/at home?

- What was the level of fatigue experienced during testing?

- Did you feel physical discomfort due to the different modules of the system you tested?

5. Conclusions

Ethical Statement

Author Contributions

Funding

Conflicts of Interest


| Telemonitoring Device | Data Transmission Rate | Average Current Consumption | Battery Capacity | Days of Operation |
|---|---|---|---|---|
| HR and SpO2 | 1 measurement/10 s | 6.1 mA | 1250 mAh | 8.50 |
| Respiratory rate | 1 measurement/10 s | 0.14 mA | 240 mAh | 71.0 |
| Body temperature | 1 measurement/10 s | 0.20 mA | 240 mAh | 45.5 |
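The Days of Operation column can be related to the other columns through the usual idealized estimate: runtime in hours equals battery capacity (mAh) divided by average current (mA), then divided by 24 to get days. The sketch applies it to the table values. It ignores self-discharge, converter losses, and battery aging, so it gives an upper bound rather than a measured figure.

```python
def battery_life_days(capacity_mah: float, avg_current_ma: float) -> float:
    """Idealized runtime: capacity / average current (hours), converted to days."""
    if avg_current_ma <= 0:
        raise ValueError("average current must be positive")
    return capacity_mah / avg_current_ma / 24.0


if __name__ == "__main__":
    devices = {
        "HR and SpO2": (1250, 6.1),
        "Respiratory rate": (240, 0.14),
        "Body temperature": (240, 0.20),
    }
    for name, (capacity, current) in devices.items():
        print(f"{name}: {battery_life_days(capacity, current):.1f} days")
```

This yields about 8.5, 71.4, and 50.0 days; the listed 8.50, 71.0, and 45.5 days are at or below these idealized values.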

| Characteristic | Included (n = 27) |
|---|---|
| Age (years) | 72.27 ± 8.23 |
| Female | 20 (76.9%) |
| Cardiovascular diseases | 9 (34.6%) |
| Pulmonary diseases | 1 (3.8%) |
| Stroke | 3 (11.5%) |
| Other neurological diseases | 2 (7.7%) |
| Degenerative osteoarticular diseases | 19 (73.1%) |
| Amputations | 1 (3.8%) |

| Tested System Function | Tested Hardware Components of the Patient Subsystem | Tested Software Components of the Patient Subsystem | Patients' Diseases | Patients' Learning Time |
|---|---|---|---|---|
| Communication | Switch-type sensors: hand switch-click; pal pad switch; ribbon switch; wobble switch; foot switch; sip/puff breeze switch | PWA (keywords technology for switch-type sensors) | Disabled patients who can perform some controlled muscle contractions, such as movement of a finger, forearm, or foot; inspiration/expiration; sip/puff, etc. (partial paralysis, paraplegia, amputations, congestive heart failure, degenerative osteoarticular diseases, stroke, chronic respiratory failure, severe COPD, recovery after major surgery, etc.) | Minimum (10 min) |
| Communication | Eye-tracking interface: head-mounted device; remote device | PWA (keywords technology for eye-tracking interface); eye-tracking algorithm (including virtual keyboard and Internet/e-mail browser) | Severely disabled patients who cannot perform any controlled muscle contractions apart from eyeball movement and blinking (complete paralysis, tetraplegia, myopathies, amyotrophic lateral sclerosis, stroke, severe degenerative osteoarticular disease, severe arthritis in the shoulder, amputations, etc.) | Medium or long (15–20 min) |
| Telemonitoring | Wireless body area network: oxygen saturation; heart rate; respiratory rate; body temperature | SimpliciTI protocol; GUI for telemonitoring the physiological parameters | All patients | - |

| Metric | Communication Function Based on Switch-Type Sensor | Communication Function Based on Eye-Tracking Interface (Head-Mounted) | Communication Function Based on Eye-Tracking Interface (Remote Device) | Telemonitoring Function |
|---|---|---|---|---|
| Task success rate | 96.3% | 81.5% | 88.9% | 98% |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Bozomitu, R.G.; Niţă, L.; Cehan, V.; Alexa, I.D.; Ilie, A.C.; Păsărică, A.; Rotariu, C. A New Integrated System for Assistance in Communicating with and Telemonitoring Severely Disabled Patients. Sensors 2019, 19, 2026. https://doi.org/10.3390/s19092026