Extrinsic Calibration between a Camera and a 2D Laser Rangefinder using a Photogrammetric Control Field

Abstract

1. Introduction

- The proposed calibration scheme requires only one shot of the control field to accurately calibrate the extrinsic parameters. Compared with calibration methods that need multiple shots, this makes data collection easier.

- The proposed calibration scheme is robust. The elaborately designed control field not only avoids degenerate configurations in camera calibration but also provides redundant observations that enhance robustness. In addition, the control field avoids degenerate configurations in LRF calibration and yields a unique solution to the traditional P3P problem by using the 3D right-triangle pyramid formed by the LRF scanning plane and the room corner.

- The proposed calibration scheme is accurate. Camera calibration is based on the accurate coordinates of the control points, which ensures the accuracy of the extrinsic parameters of the camera. Furthermore, robust linear fitting of the LRF points is used to locate the exact intersections between the LRF scanning plane and the room edges, which reduces the impact of noise in the raw LRF points during LRF calibration.
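The robust line fitting mentioned in the last point can be illustrated with a short sketch. The choice of estimator here is an assumption, since this section does not say which one the authors use: RANSAC selects inliers, a total-least-squares refit follows, and two fitted wall lines are intersected in the 2D scanning plane. The function names, the threshold, and the synthetic wall data (in metres) are all hypothetical.

```python
import numpy as np

def fit_line_tls(points):
    """Total-least-squares line through 2D points: (point on line, unit direction)."""
    centroid = points.mean(axis=0)
    _, _, vt = np.linalg.svd(points - centroid)
    return centroid, vt[0]  # first right singular vector = principal direction

def fit_line_ransac(points, threshold=0.01, iterations=200, seed=0):
    """Assumed robust estimator: RANSAC inlier selection, then a TLS refit."""
    rng = np.random.default_rng(seed)
    best_inliers = None
    for _ in range(iterations):
        i, j = rng.choice(len(points), size=2, replace=False)
        direction = points[j] - points[i]
        norm = np.linalg.norm(direction)
        if norm == 0:
            continue
        normal = np.array([-direction[1], direction[0]]) / norm
        distances = np.abs((points - points[i]) @ normal)
        inliers = distances < threshold
        if best_inliers is None or inliers.sum() > best_inliers.sum():
            best_inliers = inliers
    return fit_line_tls(points[best_inliers])

def intersect_lines(p1, d1, p2, d2):
    """Intersection of the lines p1 + s*d1 and p2 + t*d2 (must not be parallel)."""
    s, _ = np.linalg.solve(np.column_stack([d1, -d2]), p2 - p1)
    return p1 + s * d1

# Synthetic scan of two walls meeting at the origin, with noise and outliers.
rng = np.random.default_rng(1)
along = np.linspace(0.5, 2.0, 50)
wall_a = np.column_stack([along, rng.normal(0.0, 0.002, 50)])  # wall on y = 0
wall_b = np.column_stack([rng.normal(0.0, 0.002, 50), along])  # wall on x = 0
wall_a[::10, 1] += 0.3  # gross outliers on wall A
wall_b[::10, 0] += 0.3  # gross outliers on wall B

corner = intersect_lines(*fit_line_ransac(wall_a), *fit_line_ransac(wall_b))
print(corner)  # expected to lie close to the true intersection (0, 0)
```

Intersecting fitted lines rather than picking the nearest raw range sample means that every inlier on both walls contributes to the estimated edge point, which is the noise-reduction effect described above.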

2. Methodology

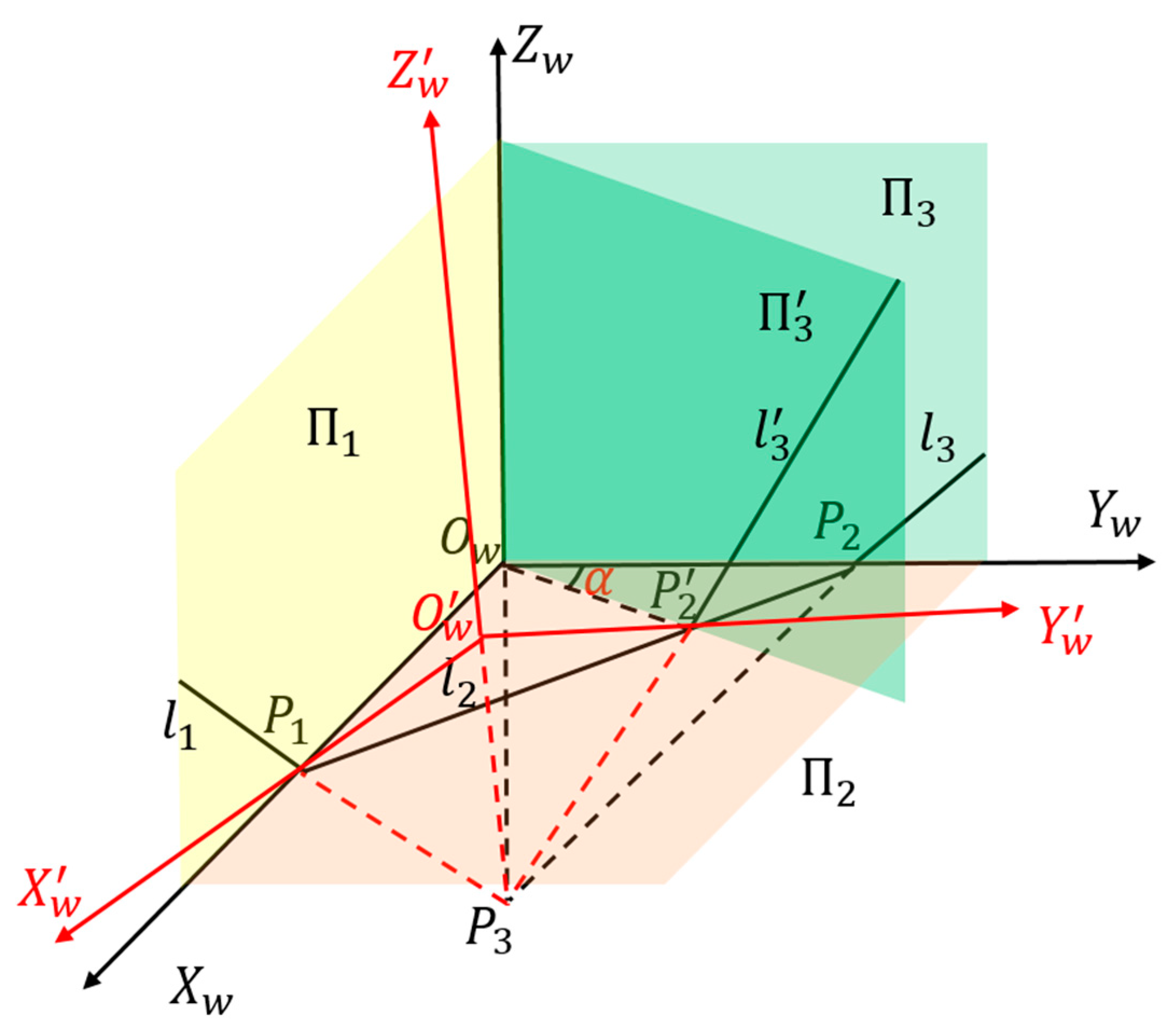

2.1. Mathematical Framework

2.2. Extrinsic Calibration of the LRF

2.3. Extrinsic Calibration of the Camera

3. Experiments

3.1. Experiments with Simulated Data

3.1.1. Performance in Terms of Image Noise

3.1.2. Performance in Terms of Laser Range Noise

3.1.3. Performance in Terms of Outliers

3.1.4. Comparison Experiments

3.2. Experiments with Real Data

4. Conclusions

- By placing chessboards or equivalent targets around the control field and capturing images and laser data from different poses, we can obtain additional constraints, including closed-loop ones, which will improve the accuracy of the extrinsic parameters.

- The accuracy and robustness of LRF calibration can be improved by using redundant intersections. If multiple trirectangular trihedrons are carefully placed in the control field, the laser scanning plane of the LRF can intersect each trirectangular trihedron along three lines simultaneously, which yields redundant intersections.

- We recommend moving the integrated sensor on a vehicle or rotation platform to capture precise movement information of the sensor and better evaluate the accuracy of the extrinsic parameters in the object space.
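To make the trirectangular-trihedron geometry concrete, the sketch below shows how one trihedron constrains the scanning plane. It is a plain geometric illustration under stated assumptions, not necessarily the authors' formulation. Suppose the plane cuts the three mutually perpendicular edges at points A, B, and C, and the LRF measures the side lengths |AB|, |BC|, and |CA|. The Pythagorean theorem on each face then gives a² + b² = |AB|², b² + c² = |BC|², and c² + a² = |CA|², where a, b, and c are the distances from the corner along the edges. The function name and the numeric values are hypothetical.

```python
import numpy as np

def edge_distances(ab, bc, ca):
    """Distances (a, b, c) from the corner to A, B, C along the three edges.

    Solves a^2 + b^2 = ab^2, b^2 + c^2 = bc^2, c^2 + a^2 = ca^2.
    """
    a2 = (ab**2 + ca**2 - bc**2) / 2.0
    b2 = (ab**2 + bc**2 - ca**2) / 2.0
    c2 = (bc**2 + ca**2 - ab**2) / 2.0
    if min(a2, b2, c2) <= 0.0:
        raise ValueError("side lengths are inconsistent with a right-angled corner")
    return np.sqrt([a2, b2, c2])

# Hypothetical corner distances in metres, used to synthesize the LRF side lengths.
a, b, c = 1.2, 0.9, 1.5
ab, bc, ca = np.hypot(a, b), np.hypot(b, c), np.hypot(c, a)

recovered = edge_distances(ab, bc, ca)
print(recovered)  # recovers the distances used above, up to rounding
```

Because the three equations are linear in a², b², and c², the positive solution is unique. In the corner frame the intersections are then A = (a, 0, 0), B = (0, b, 0), and C = (0, 0, c). Each additional trihedron contributes another such triple, which is where the redundancy comes from.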

Author Contributions

Funding

Conflicts of Interest

References

- Wen, C.; Qin, L.; Zhu, Q.; Wang, C.; Li, J. Three-Dimensional Indoor Mobile Mapping With Fusion of Two-Dimensional Laser Scanner and RGB-D Camera Data. IEEE Geosci. Remote Sens. Lett. 2014, 11, 843–847.

- Kanezaki, A.; Suzuki, T.; Harada, T.; Kuniyoshi, Y. Fast object detection for robots in a cluttered indoor environment using integral 3D feature table. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 4026–4033.

- Biber, P.; Andreasson, H.; Duckett, T.; Schilling, A. 3D modeling of indoor environments by a mobile robot with a laser scanner and panoramic camera. In Proceedings of the 2004 IEEE/RSJ International Conference on Intelligent Robots and Systems, Sendai, Japan, 28 September–2 October 2004; pp. 3430–3435.

- Shi, Y.; Ji, S.; Shao, X.; Yang, P.; Wu, W.; Shi, Z.; Shibasaki, R. Fusion of a panoramic camera and 2D laser scanner data for constrained bundle adjustment in GPS-denied environments. Image Vision Comput. 2015, 40, 28–37.

- Zhang, Q.; Pless, R. Extrinsic calibration of a camera and laser range finder (improves camera calibration). In Proceedings of the 2004 IEEE/RSJ International Conference on Intelligent Robots and Systems, Sendai, Japan, 28 September–2 October 2004; pp. 2301–2306.

- Kassir, A.; Peynot, T. Reliable automatic camera-laser calibration. In Proceedings of the 2010 Australasian Conference on Robotics & Automation, Brisbane, Australia, 1–3 December 2010.

- Zhou, L.; Deng, Z. A new algorithm for the extrinsic calibration of a 2D LIDAR and a camera. Meas. Sci. Technol. 2014, 25, 065107.

- Vasconcelos, F.; Barreto, J.P.; Nunes, U. A minimal solution for the extrinsic calibration of a camera and a laser-rangefinder. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2097–2107.

- Wolfe, W.J.; Mathis, D.; Sklair, C.W.; Magee, M. The perspective view of three points. IEEE Trans. Pattern Anal. Mach. Intell. 1991, 13, 66–73.

- Li, S.; Xu, C.; Xie, M. A Robust O(n) Solution to the Perspective-n-Point Problem. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 1444–1450.

- Haralick, R.M.; Lee, C.; Ottenberg, K.; Nölle, M. Review and analysis of solutions of the three point perspective pose estimation problem. Int. J. Comput. Vision 1994, 13, 331–356.

- Li, G.; Liu, Y.; Dong, L.; Cai, X.; Zhou, D. An algorithm for extrinsic parameters calibration of a camera and a laser range finder using line features. In Proceedings of the 2007 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Diego, CA, USA, 29 October–2 November 2007; pp. 3854–3859.

- Wasielewski, S.; Strauss, O. Calibration of a multi-sensor system laser rangefinder/camera. In Proceedings of the Intelligent Vehicles ’95. Symposium, Detroit, MI, USA, 25–26 September 1995; pp. 472–477.

- Sim, S.; Sock, J.; Kwak, K. Indirect Correspondence-Based Robust Extrinsic Calibration of LiDAR and Camera. Sensors 2016, 16, 933.

- Chen, Z.; Zhuo, L.; Sun, K.; Zhang, C. Extrinsic Calibration of a Camera and a Laser Range Finder using Point to Line Constraint. Procedia Eng. 2012, 29, 4348–4352.

- Gomez-Ojeda, R.; Briales, J.; Fernandez-Moral, E.; Gonzalez-Jimenez, J. Extrinsic calibration of a 2d laser-rangefinder and a camera based on scene corners. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation, Seattle, WA, USA, 26–30 May 2015; pp. 3611–3616.

- Hu, Z.; Li, Y.; Li, N.; Zhao, B. Extrinsic Calibration of 2-D Laser Rangefinder and Camera From Single Shot Based on Minimal Solution. IEEE Trans. Instrum. Meas. 2016, 65, 915–929.

- Shi, F.; Zhang, X.; Liu, Y. A new method of camera pose estimation using 2D–3D corner correspondence. Pattern Recogn. Lett. 2004, 25, 1155–1163.

- Zhang, L.; Xu, C.; Lee, K.M.; Koch, R. Robust and Efficient Pose Estimation from Line Correspondences. In Proceedings of the 11th Asian Conference on Computer Vision, Daejeon, Korea, 5–9 November 2012; pp. 217–230.

- Fraser, C.S. Digital camera self-calibration. ISPRS J. Photogramm. Remote Sens. 1997, 52, 149–159.

- Cronk, S.; Fraser, C.; Hanley, H. Automated metric calibration of colour digital cameras. Photogramm. Rec. 2006, 21, 355–372.

- Abbas, M.A.; Lichti, D.D.; Chong, A.K.; Setan, H.; Majid, Z. An on-site approach for the self-calibration of terrestrial laser scanner. Measurement 2014, 52, 111–123.

- Abdel-Aziz, Y.I.; Karara, H.M.; Hauck, M. Direct Linear Transformation from Comparator Coordinates into Object Space Coordinates in Close-Range Photogrammetry. Photogramm. Eng. Remote Sens. 2015, 81, 103–107.

- Zhang, J.; Pan, L.; Wang, S. Photogrammetry, 2nd ed.; Wuhan University Press: Wuhan, China, 2009.

- Allen, P.K. 3d photography: Point based rigid registration. Available online: http://www.cs.hunter.cuny.edu/~ioannis/registerpts_allen_notes.pdf (accessed on 3 October 2008).

- Bopp, H.; Krauss, H. An orientation and calibration method for non-topographic applications. Photogramm. Eng. Remote Sens. 1978, 44, 1191–1196.

- Lawson, C.L.; Hanson, R.J. Solving least squares problems; Prentice-Hall: Englewood Cliffs, NJ, USA, 1974.

- Chen, L.; Armstrong, C.W.; Raftopoulos, D.D. An investigation on the accuracy of three-dimensional space reconstruction using the direct linear transformation technique. J. Biomech. 1994, 27, 493–500.

- Fraser, C.S. Automatic Camera Calibration in Close Range Photogrammetry. Photogramm. Eng. Remote Sens. 2013, 79, 381–388.

- Honkavaara, E.; Ahokas, E.; Hyyppä, J.; Jaakkola, J.; Kaartinen, H.; Kuittinen, R.; Markelin, L.; Nurminen, K. Geometric test field calibration of digital photogrammetric sensors. ISPRS J. Photogramm. Remote Sens. 2006, 60, 387–399.

Mean errors by noise level. Columns r1, r2, and r3 give Mean_ER (degree); column T gives Mean_ET (mm).

| Noise level (Image) | Noise level (Laser) | Our Method r1 | Our Method r2 | Our Method r3 | Our Method T | Hu’s Method r1 | Hu’s Method r2 | Hu’s Method r3 | Hu’s Method T | Zhang’s Method r1 | Zhang’s Method r2 | Zhang’s Method r3 | Zhang’s Method T |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 1 | 0.009 | 0.017 | 0.019 | 0.870 | 0.206 | 0.082 | 0.228 | 15.154 | 0.299 | 0.621 | 0.492 | 27.927 |
| 1 | 15 | 0.047 | 0.249 | 0.253 | 12.648 | 0.216 | 0.266 | 0.371 | 21.589 | 0.449 | 0.914 | 0.720 | 41.039 |
| 1 | 30 | 0.096 | 0.612 | 0.619 | 31.110 | 0.235 | 0.613 | 0.684 | 36.694 | 0.774 | 1.334 | 0.954 | 54.503 |
| 5 | 1 | 0.044 | 0.050 | 0.058 | 2.379 | 1.091 | 0.389 | 1.188 | 80.052 | 1.365 | 3.035 | 2.520 | 139.077 |
| 5 | 15 | 0.065 | 0.255 | 0.261 | 12.920 | 1.030 | 0.466 | 1.172 | 77.240 | 1.602 | 3.174 | 2.472 | 135.330 |
| 5 | 30 | 0.101 | 0.612 | 0.620 | 31.004 | 1.093 | 0.716 | 1.398 | 89.015 | 1.751 | 3.464 | 2.720 | 151.035 |
| 10 | 1 | 0.086 | 0.100 | 0.114 | 4.603 | 2.173 | 0.786 | 2.367 | 158.666 | 3.385 | 5.933 | 4.451 | 246.641 |
| 10 | 15 | 0.097 | 0.284 | 0.294 | 14.313 | 2.157 | 0.813 | 2.367 | 158.466 | 3.307 | 6.235 | 4.815 | 272.386 |
| 10 | 30 | 0.128 | 0.637 | 0.648 | 31.908 | 2.052 | 0.965 | 2.362 | 155.465 | 3.459 | 6.625 | 5.024 | 286.594 |

Standard deviations of the errors by noise level. Columns r1, r2, and r3 give Std_ER (degree); column T gives Std_ET (mm).

| Noise level (Image) | Noise level (Laser) | Our Method r1 | Our Method r2 | Our Method r3 | Our Method T | Hu’s Method r1 | Hu’s Method r2 | Hu’s Method r3 | Hu’s Method T | Zhang’s Method r1 | Zhang’s Method r2 | Zhang’s Method r3 | Zhang’s Method T |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 1 | 0.005 | 0.011 | 0.011 | 0.521 | 0.151 | 0.053 | 0.145 | 9.877 | 0.199 | 0.321 | 0.354 | 19.859 |
| 1 | 15 | 0.027 | 0.184 | 0.182 | 9.297 | 0.157 | 0.195 | 0.197 | 11.089 | 0.335 | 0.485 | 0.505 | 27.727 |
| 1 | 30 | 0.057 | 0.440 | 0.436 | 22.316 | 0.158 | 0.449 | 0.425 | 21.273 | 0.553 | 0.667 | 0.678 | 39.164 |
| 5 | 1 | 0.024 | 0.028 | 0.030 | 1.114 | 0.818 | 0.247 | 0.785 | 54.093 | 0.957 | 1.629 | 1.706 | 92.758 |
| 5 | 15 | 0.037 | 0.181 | 0.179 | 9.011 | 0.748 | 0.311 | 0.719 | 48.512 | 0.979 | 1.449 | 1.650 | 94.119 |
| 5 | 30 | 0.060 | 0.442 | 0.437 | 22.119 | 0.805 | 0.469 | 0.753 | 50.433 | 1.320 | 1.788 | 1.786 | 97.304 |
| 10 | 1 | 0.050 | 0.056 | 0.062 | 2.165 | 1.567 | 0.497 | 1.506 | 102.691 | 2.315 | 2.817 | 2.714 | 151.223 |
| 10 | 15 | 0.056 | 0.188 | 0.185 | 9.043 | 1.591 | 0.521 | 1.531 | 103.585 | 2.433 | 3.228 | 3.200 | 177.354 |
| 10 | 30 | 0.074 | 0.452 | 0.447 | 22.193 | 1.586 | 0.644 | 1.517 | 101.520 | 2.546 | 3.143 | 3.290 | 185.319 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Fan, J.; Huang, Y.; Shan, J.; Zhang, S.; Zhu, F. Extrinsic Calibration between a Camera and a 2D Laser Rangefinder using a Photogrammetric Control Field. Sensors 2019, 19, 2030. https://doi.org/10.3390/s19092030