Automatic Parameter Tuning for Adaptive Thresholding in Fruit Detection

Abstract

1. Introduction

2. Literature Review

2.1. Detection Algorithms in Agriculture

2.2. Color Spaces

3. Materials and Methods

3.1. Databases

3.1.1. Apples

3.1.2. Grapes

3.1.3. Peppers

3.1.4. Performance Measures

3.2. Analyses

- Tuning parameters: Parameters were computed for each database using the procedures defined in Section 4.3 and compared with the previously predefined parameters.

- Color spaces’ analyses: Algorithm performances were tested on all databases for four different color spaces: RGB, HSI, LAB, and NDI.

- Sensitivity analysis: Sensitivity analyses were conducted for all the databases and included:

  - Noise: Noise was created by adding to each pixel in the RGB image a random number drawn from a normal distribution, for noise levels of up to 30%. The artificial noise tests the algorithm's robustness to cameras with more noise and to images captured with different camera settings. Noise levels of 5%, 10%, 20%, and 30% were evaluated.

  - Thresholds learned in the training process: Thresholds were changed by ±5%, ±10%, and ±15% relative to the threshold learned in each region.

  - Stop condition: The selected STD value was changed by 5% and 10% to test the robustness of the algorithm to this parameter.

  - Training vs. testing: The algorithm performances were evaluated while using different percentages of the DB images for the training and testing processes.

- Morphological operations (erosion and dilation) contribution: Performances were tested with and without the morphological operations step.
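The noise and threshold perturbations in the sensitivity analysis can be illustrated with a minimal sketch. The paper does not state how a noise percentage maps to the parameters of the normal distribution, so the sketch assumes zero-mean noise whose standard deviation is the given fraction of the 8-bit range (255). The function names and the region labels (`low`, `medium`, `high`) with their threshold values are hypothetical and serve only as an illustration.

```python
import numpy as np

# Levels taken from the sensitivity analysis description.
NOISE_LEVELS = (0.05, 0.10, 0.20, 0.30)
THRESHOLD_CHANGES = (-0.15, -0.10, -0.05, 0.05, 0.10, 0.15)


def add_gaussian_noise(rgb, level, rng):
    """Add normally distributed noise to every pixel of an 8-bit RGB image.

    Assumption (not from the paper): the noise is zero-mean and its
    standard deviation equals `level` times the full 8-bit range.
    """
    noise = rng.normal(loc=0.0, scale=level * 255.0, size=rgb.shape)
    noisy = rgb.astype(np.float64) + noise
    # Round and clip back to the valid 8-bit range.
    return np.clip(np.rint(noisy), 0, 255).astype(np.uint8)


def perturb_thresholds(thresholds, relative_change):
    """Scale each region's learned threshold by (1 + relative_change)."""
    return {region: t * (1.0 + relative_change) for region, t in thresholds.items()}


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    image = np.full((4, 4, 3), 128, dtype=np.uint8)  # placeholder image
    for level in NOISE_LEVELS:
        noisy = add_gaussian_noise(image, level, rng)
        print(f"noise {level:.0%}: pixel std = {noisy.std():.2f}")

    # Hypothetical per-region thresholds, for illustration only.
    learned = {"low": 100.0, "medium": 120.0, "high": 140.0}
    for change in THRESHOLD_CHANGES:
        print(f"{change:+.0%}: {perturb_thresholds(learned, change)}")
```

In a full sensitivity run, each perturbed image or threshold set would be passed to the detection algorithm and the resulting FPR, TPR, and F values compared with the unperturbed baseline.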

4. Algorithm

4.1. Algorithm Flow

4.2. Morphological Operations: Erosion and Dilation

4.3. Parameter Tuning

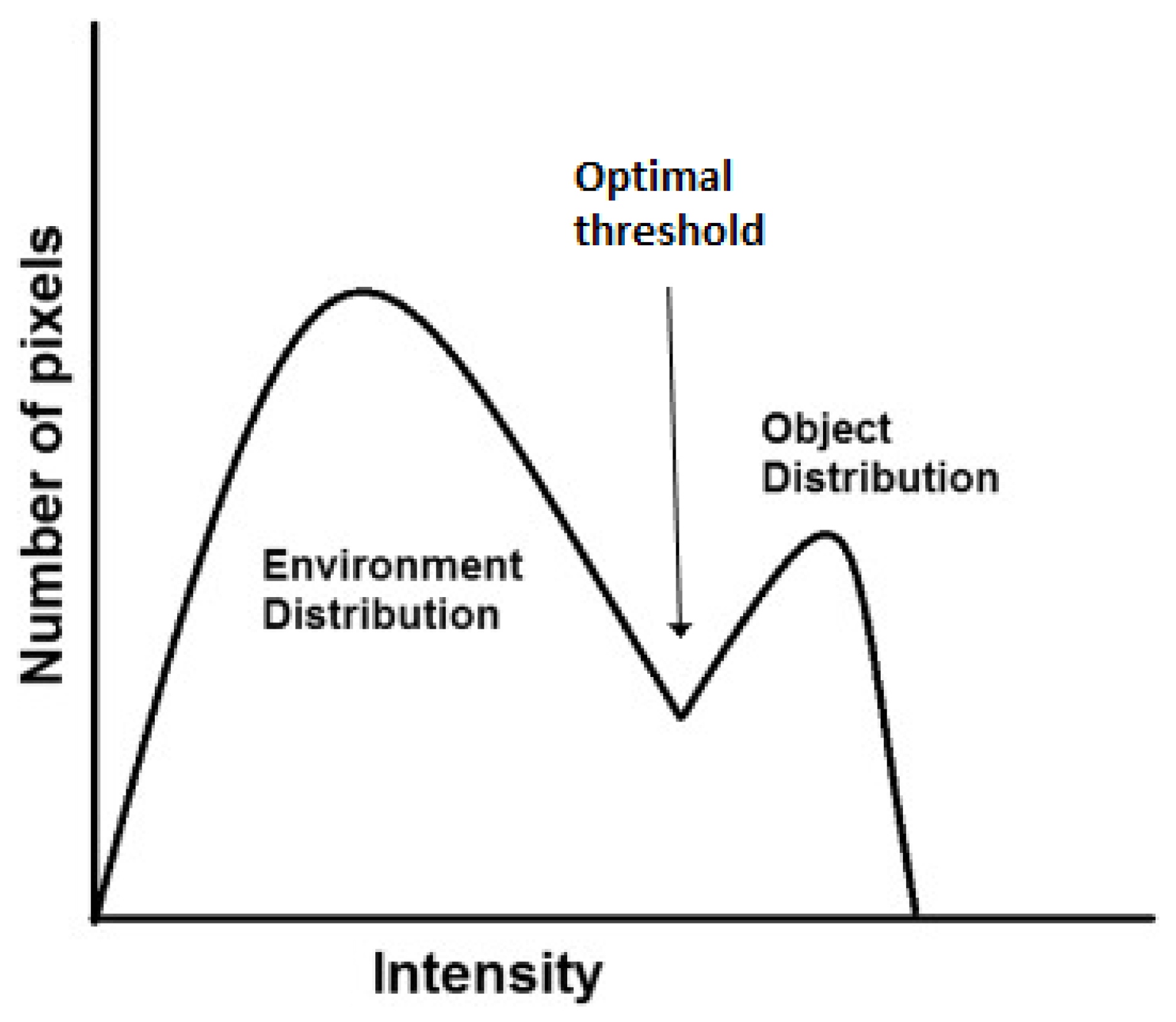

4.3.1. Light Level Thresholds (T1, T2)

4.3.2. Stop Splitting Condition (STD)

4.3.3. Classification Rule Direction (D1, D2, D3)

5. Results and Discussion

5.1. Sub-Image Size vs. STD Value

5.2. Tuning Process

5.2.1. Light Level Distribution

5.2.2. Stop Splitting Condition

5.2.3. Classification Rule Direction

5.3. Color Space Analyses

5.3.1. Apples

5.3.2. Grapes

5.3.3. Peppers

5.4. Sensitivity Analysis

5.4.1. Noise

5.4.2. Thresholds Learned in the Training Process

5.4.3. Stop Condition

5.4.4. Training/Testing

5.5. Morphological Operations

6. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

References

1. Kalantar, A.; Dashuta, A.; Edan, Y.; Gur, A.; Klapp, I. Estimating Melon Yield for Breeding Processes by Machine-Vision Processing of UAV Images. In Proceedings of the Precision Agriculture Conference, Montpellier, France, 8–11 July 2019.

2. Bargoti, S.; Underwood, J.P. Image segmentation for fruit detection and yield estimation in apple orchards. J. Field Robot. 2017, 34, 1039–1060.

3. Stein, M.; Bargoti, S.; Underwood, J. Image based mango fruit detection, localisation and yield estimation using multiple view geometry. Sensors 2016, 16, 1915.

4. Dorj, U.O.; Lee, M.; Yun, S.S. An yield estimation in citrus orchards via fruit detection and counting using image processing. Comput. Electron. Agric. 2017, 140, 103–112.

5. Wang, Q.; Nuske, S.; Bergerman, M.; Singh, S. Automated crop yield estimation for apple orchards. In Experimental Robotics; Springer: Berlin, Germany, 2013; pp. 745–758.

6. Nuske, S.; Achar, S.; Bates, T.; Narasimhan, S.; Singh, S. Yield estimation in vineyards by visual grape detection. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, San Francisco, CA, USA, 25–30 September 2011; pp. 2352–2358.

7. Zaman, Q.; Schumann, A.; Percival, D.; Gordon, R. Estimation of wild blueberry fruit yield using digital color photography. Trans. ASABE 2008, 51, 1539–1544.

8. Oppenheim, D. Object recognition for agricultural applications using deep convolutional neural networks. Master’s Thesis, Ben-Gurion University of the Negev, Beer-Sheva, Israel, 2018.

9. Mack, J.; Lenz, C.; Teutrine, J.; Steinhage, V. High-precision 3D detection and reconstruction of grapes from laser range data for efficient phenotyping based on supervised learning. Comput. Electron. Agric. 2017, 135, 300–311.

10. Pound, M.P.; Atkinson, J.A.; Wells, D.M.; Pridmore, T.P.; French, A.P. Deep learning for multi-task plant phenotyping. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2055–2063.

11. Song, Y.; Glasbey, C.; Horgan, G.; Polder, G.; Dieleman, J.; Van der Heijden, G. Automatic fruit recognition and counting from multiple images. Biosyst. Eng. 2014, 118, 203–215.

12. Oberti, R.; Marchi, M.; Tirelli, P.; Calcante, A.; Iriti, M.; Tona, E.; Hočevar, M.; Baur, J.; Pfaff, J.; Schütz, C.; et al. Selective spraying of grapevines for disease control using a modular agricultural robot. Biosyst. Eng. 2016, 146, 203–215.

13. Payne, A.B.; Walsh, K.B.; Subedi, P.; Jarvis, D. Estimation of mango crop yield using image analysis-segmentation method. Comput. Electron. Agric. 2013, 91, 57–64.

14. Wang, Z.; Verma, B.; Walsh, K.B.; Subedi, P.; Koirala, A. Automated mango flowering assessment via refinement segmentation. In Proceedings of the International Conference on Image and Vision Computing New Zealand (IVCNZ), Palmerston North, New Zealand, 21–22 November 2016; pp. 1–6.

15. Wouters, N.; De Ketelaere, B.; Deckers, T.; De Baerdemaeker, J.; Saeys, W. Multispectral detection of floral buds for automated thinning of pear. Comput. Electron. Agric. 2015, 113, 93–103.

16. Bac, C.W.; van Henten, E.J.; Hemming, J.; Edan, Y. Harvesting robots for high-value crops: State-of-the-art review and challenges ahead. J. Field Robot. 2014, 31, 888–911.

17. Kapach, K.; Barnea, E.; Mairon, R.; Edan, Y.; Ben-Shahar, O. Computer vision for fruit harvesting robots–state of the art and challenges ahead. Int. J. Comput. Vis. Robot. 2012, 3, 4–34.

18. Gongal, A.; Amatya, S.; Karkee, M.; Zhang, Q.; Lewis, K. Sensors and systems for fruit detection and localization: A review. Comput. Electron. Agric. 2015, 116, 8–19.

19. Vitzrabin, E.; Edan, Y. Adaptive thresholding with fusion using a RGBD sensor for red sweet-pepper detection. Biosyst. Eng. 2016, 146, 45–56.

20. Arad, B.; Kurtser, P.; Barnea, E.; Harel, B.; Edan, Y.; Ben-Shahar, O. Controlled Lighting and Illumination-Independent Target Detection for Real-Time Cost-Efficient Applications. The Case Study of Sweet Pepper Robotic Harvesting. Sensors 2019, 19, 1390.

21. Barth, R.; Hemming, J.; van Henten, E. Design of an eye-in-hand sensing and servo control framework for harvesting robotics in dense vegetation. Biosyst. Eng. 2016, 146, 71–84.

22. Arivazhagan, S.; Shebiah, R.N.; Nidhyanandhan, S.S.; Ganesan, L. Fruit recognition using color and texture features. J. Emerg. Trends Comput. Inf. Sci. 2010, 1, 90–94.

23. Sa, I.; Ge, Z.; Dayoub, F.; Upcroft, B.; Perez, T.; McCool, C. Deepfruits: A fruit detection system using deep neural networks. Sensors 2016, 16, 1222.

24. Chen, S.W.; Shivakumar, S.S.; Dcunha, S.; Das, J.; Okon, E.; Qu, C.; Taylor, C.J.; Kumar, V. Counting apples and oranges with deep learning: A data-driven approach. IEEE Robot. Autom. Lett. 2017, 2, 781–788.

25. Kamilaris, A.; Prenafeta-Boldú, F.X. Deep learning in agriculture: A survey. Comput. Electron. Agric. 2018, 147, 70–90.

26. McCool, C.; Perez, T.; Upcroft, B. Mixtures of lightweight deep convolutional neural networks: applied to agricultural robotics. IEEE Robot. Autom. Lett. 2017, 2, 1344–1351.

27. Milioto, A.; Lottes, P.; Stachniss, C. Real-time blob-wise sugar beets vs weeds classification for monitoring fields using convolutional neural networks. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 4, 41.

28. Berenstein, R.; Shahar, O.B.; Shapiro, A.; Edan, Y. Grape clusters and foliage detection algorithms for autonomous selective vineyard sprayer. Intell. Serv. Robot. 2010, 3, 233–243.

29. Van Henten, E.; Van Tuijl, B.V.; Hemming, J.; Kornet, J.; Bontsema, J.; Van Os, E. Field test of an autonomous cucumber picking robot. Biosyst. Eng. 2003, 86, 305–313.

30. Zemmour, E.; Kurtser, P.; Edan, Y. Dynamic thresholding algorithm for robotic apple detection. In Proceedings of the IEEE International Conference on the Autonomous Robot Systems and Competitions (ICARSC), Coimbra, Portugal, 26–28 April 2017; pp. 240–246.

31. Wang, J.; He, J.; Han, Y.; Ouyang, C.; Li, D. An adaptive thresholding algorithm of field leaf image. Comput. Electron. Agric. 2013, 96, 23–39.

32. Jiang, J.A.; Chang, H.Y.; Wu, K.H.; Ouyang, C.S.; Yang, M.M.; Yang, E.C.; Chen, T.W.; Lin, T.T. An adaptive image segmentation algorithm for X-ray quarantine inspection of selected fruits. Comput. Electron. Agric. 2008, 60, 190–200.

33. Arroyo, J.; Guijarro, M.; Pajares, G. An instance-based learning approach for thresholding in crop images under different outdoor conditions. Comput. Electron. Agric. 2016, 127, 669–679.

34. Ostovar, A.; Ringdahl, O.; Hellström, T. Adaptive Image Thresholding of Yellow Peppers for a Harvesting Robot. Robotics 2018, 7, 11.

35. Zhang, Y.J. A survey on evaluation methods for image segmentation. Pattern Recognit. 1996, 29, 1335–1346.

36. Shmmala, F.A.; Ashour, W. Color based image segmentation using different versions of k-means in two spaces. Glob. Adv. Res. J. Eng. Technol. Innov. 2013, 1, 30–41.

37. Zheng, L.; Zhang, J.; Wang, Q. Mean-shift-based color segmentation of images containing green vegetation. Comput. Electron. Agric. 2009, 65, 93–98.

38. Al-Allaf, O.N. Review of face detection systems based artificial neural networks algorithms. Int. J. Multimed. Its Appl. 2014, 6, 1.

39. Sakthivel, K.; Nallusamy, R.; Kavitha, C. Color Image Segmentation Using SVM Pixel Classification Image. World Acad. Sci. Eng. Technol. Int. J. Comput. Electr. Autom. Control Inf. Eng. 2015, 8, 1919–1925.

40. Kurtser, P.; Edan, Y. Statistical models for fruit detectability: Spatial and temporal analyses of sweet peppers. Biosyst. Eng. 2018, 171, 272–289.

41. Barth, R.; IJsselmuiden, J.; Hemming, J.; Van Henten, E.J. Data synthesis methods for semantic segmentation in agriculture: A Capsicum annuum dataset. Comput. Electron. Agric. 2018, 144, 284–296.

42. Park, J.; Lee, G.; Cho, W.; Toan, N.; Kim, S.; Park, S. Moving object detection based on clausius entropy. In Proceedings of the IEEE 10th International Conference on Computer and Information Technology (CIT), Bradford, West Yorkshire, UK, 29 June–1 July 2010; pp. 517–521.

43. Hannan, M.; Burks, T.; Bulanon, D. A real-time machine vision algorithm for robotic citrus harvesting. In Proceedings of the 2007 ASAE Annual Meeting, American Society of Agricultural and Biological Engineers, Minneapolis, MN, USA, 17–20 June 2007; p. 1.

44. Bulanon, D.M.; Burks, T.F.; Alchanatis, V. Fruit visibility analysis for robotic citrus harvesting. Trans. ASABE 2009, 52, 277–283.

45. Gunatilaka, A.H.; Baertlein, B.A. Feature-level and decision-level fusion of noncoincidently sampled sensors for land mine detection. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 577–589.

46. Kanungo, P.; Nanda, P.K.; Ghosh, A. Parallel genetic algorithm based adaptive thresholding for image segmentation under uneven lighting conditions. In Proceedings of the IEEE International Conference on Systems Man and Cybernetics (SMC), Istanbul, Turkey, 10–13 October 2010; pp. 1904–1911.

47. Hall, D.L.; McMullen, S.A. Mathematical Techniques in Multisensor Data Fusion; Artech House: Norwood, MA, USA, 2004.

48. Woebbecke, D.M.; Meyer, G.E.; Von Bargen, K.; Mortensen, D.A. Plant species identification, size, and enumeration using machine vision techniques on near-binary images. In Optics in Agriculture and Forestry; SPIE-International Society for Optics and Photonics: Bellingham, WA, USA, 1993; Volume 1836, pp. 208–220.

49. Shrestha, D.; Steward, B.; Bartlett, E. Segmentation of plant from background using neural network approach. In Proceedings of the Intelligent Engineering Systems through Artificial Neural Networks: Proceedings Artificial Neural Networks in Engineering (ANNIE) International Conference, St. Louis, MO, USA, 4–7 November 2001; Volume 11, pp. 903–908.

50. Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the IEEE Computer Vision and Pattern Recognition, Miami Beach, FL, USA, 20–25 June 2009.

51. Goutte, C.; Gaussier, E. A probabilistic interpretation of precision, recall and F-score, with implication for evaluation. In European Conference on Information Retrieval; Springer: Berlin, Germany, 2005; pp. 345–359.

52. Guyon, I. A Scaling Law for the Validation-Set Training-Set Size Ratio; AT&T Bell Laboratories: Murray Hill, NJ, USA, 1997; pp. 1–11.

53. Siegel, M.; Wu, H. Objective evaluation of subjective decisions. In Proceedings of the IEEE International Workshop on Soft Computing Techniques in Instrumentation, Measurement and Related Applications, Provo, UT, USA, 17 May 2003.

54. Zemmour, E. Adaptive thresholding algorithm for robotic fruit detection. Master’s Thesis, Ben-Gurion University of the Negev, Beer-Sheva, Israel, 2018.

| Paper | Crop | Dataset Size | Detection Level | Algorithm Type | FPR % | TPR % | F % | A % | P % | R % |
|---|---|---|---|---|---|---|---|---|---|---|
| Arad et al., 2019 [20] | Peppers | 156 img | PX | AD | NA | NA | NA | NA | 65(95) | 94(95) |
| | | | W | DL | | | | | 84 | NA |
| Ostovar et al., 2018 [34] | Peppers | 170 img | PX | AD | NA | NA | NA | 91.5 | NA | NA |
| Chen et al., 2017 [24] | Apples | 1749 (21 img) | PX | DL | 5.1 | 95.7 | 95.3 * | NA | NA | NA |
| | Oranges | 7200 (71 img) | | | 3.3 | 96.1 | 96.4 * | | | |
| McCool et al., 2017 [26] | Weed | Pre-train: img tuning and testing: 60 img | PX | D-CNN | NA | NA | NA | 93.9 | NA | NA |
| Milioto et al., 2017 [27] | Weed | 5696 (867 img) / 26,163 (1102 img) | PX | CNN | NA | NA | NA | 96.8 / 99.7 | 97.3 / 96.1 | 98.1 / 96.3 |
| Sa et al., 2016 [23] | Sweet pepper | 122 img | W | DL | NA | NA | 82.8 | NA | NA | NA |
| | Rock melon | 135 img | | | | | 84.8 | | | |
| | Apple | 64 img | | | | | 93.8 | | | |
| | Avocado | 54 img | | | | | 93.2 | | | |
| | Mango | 170 img | | | | | 94.2 | | | |
| | Orange | 57 img | | | | | 91.5 | | | |
| Vitzrabin et al., 2016 [19] | Sweet pepper | 479 (221 img) | PX | AD | 4.6 | 90.0 | 92.6 * | NA | NA | NA |
| Song et al., 2014 [11] | Pepper plants | 1056 img | W | NB+SVM | NA | NA | 65 | NA | NA | NA |
| Nuske et al., 2011 [6] | Grapes | 2973 img | PX | K-NN | NA | NA | NA | NA | 63.7 | 98 |
| Berenstein et al., 2010 [28] | Grapes | 100 img | PX | Morphological | NA | NA | NA | 90 | NA | NA |
| Zheng et al., 2009 [37] | Vegetation | 20 img / 80 img | PX | Mean-Shift | NA | NA | NA | 95.4 / 95.9 | NA | NA |
| Our results | Sweet pepper | 73 (30 img) | PX | AD | 0.81 | 99.43 | 99.31 | NA | NA | NA |
| | Apples | 113 (9 img) | | | 2.59 | 89.45 | 93.17 | | | |
| | Grapes | 1078 (129 img) | | | 33.35 | 89.48 | 73.52 | | | |

| Measure\DB | Apples | Grapes | Peppers |
|---|---|---|---|
| T1 | 84 | 49 | 18 |
| T2 | 140 | 130 | 47 |

| DB\Measure | Mean | Std | Skewness | Kurtosis | Median |
|---|---|---|---|---|---|
| Apples | 118.46 | 28.04 | 0.4 | −0.17 | 116.31 |
| Grapes | 88 | 37.9 | 0.68 | −0.13 | 81.06 |
| Peppers | 32.09 | 18.92 | 3.16 | 15.36 | 26.93 |

| Parameter\DB, color space | Apples, HSI | Apples, LAB | Apples, NDI | Apples, RGB | Grapes, HSI | Grapes, LAB | Grapes, NDI | Grapes, RGB | Peppers, HSI | Peppers, LAB | Peppers, NDI | Peppers, RGB |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| STD (P = 30%) | 20 | 30 | 10 | 20 | 10 | 20 | 60 | 20 | 100 | 10 | 10 | 10 |
| STD (P = 50%) | 20 | 10 | 10 | 30 | 20 | 20 | 70 | 20 | 100 | 20 | 10 | 10 |
| Classification rule direction, D1 | > | < | > | > | < | > | > | > | < | > | > | > |
| Classification rule direction, D2 | > | > | > | < | > | < | > | > | > | > | > | > |
| Classification rule direction, D3 | > | > | > | < | < | > | < | < | > | > | < | < |

| DB | Color Space | Measure\Dimension | 1 | 2 | 3 | 1∩2 | 1∩3 | 2∩3 | 1∩2∩3 |
|---|---|---|---|---|---|---|---|---|---|
| Apples | NDI | % FPR | 2.59 | 40.91 | 31.38 | 1.64 | 1.32 | 2.48 | 0.48 |
| | | % TPR | 89.45 | 83.53 | 68.39 | 78.52 | 64.82 | 54.65 | 54.1 |
| | | % F | 93.17 | 67.85 | 67.8 | 86.75 | 77.6 | 69.67 | 69.69 |
| | LAB | % FPR | 33.58 | 2.45 | 77.08 | 1.78 | 28.55 | 1.55 | 1.07 |
| | | % TPR | 61.26 | 89.34 | 85.26 | 56.59 | 52.95 | 76.27 | 48.8 |
| | | % F | 56.79 | 93.19 | 35.85 | 69.02 | 54.61 | 85.37 | 62.58 |
| Grapes | NDI | % FPR | 35.86 | 33.35 | 52.9 | 4.86 | 5.5 | 32.35 | 4.09 |
| | | % TPR | 44.52 | 89.48 | 89.99 | 38.53 | 37.27 | 87.5 | 36.7 |
| | | % F | 47.19 | 73.52 | 58.05 | 50.12 | 48.93 | 73.2 | 48.65 |
| Peppers High Visibility | HSI | % FPR | 18.27 | 1.52 | 2.16 | 0.81 | 0.64 | 0.17 | 0.14 |
| | | % TPR | 99.96 | 99.43 | 86.42 | 99.43 | 86.41 | 86.23 | 86.23 |
| | | % F | 89.77 | 98.95 | 91.41 | 99.31 | 92.12 | 92.24 | 92.25 |
| Peppers Inc. Low Visibility | NDI | % FPR | 66.57 | 5.24 | 9.23 | 1.42 | 1.24 | 4.57 | 0.99 |
| | | % TPR | 85.61 | 95.93 | 92.49 | 82.2 | 78.64 | 92.33 | 78.61 |
| | | % F | 46.96 | 95.19 | 91.24 | 88.91 | 86.59 | 93.51 | 86.67 |

| DB | Measure\Change in threshold | −15% | −10% | −5% | 0% | 5% | 10% | 15% |
|---|---|---|---|---|---|---|---|---|
| Apples | % FPR | 3.58 | 3.43 | 3.3 | 2.59 | 3.06 | 2.93 | 2.81 |
| | % TPR | 91.47 | 91.28 | 91.07 | 89.45 | 90.75 | 90.57 | 90.44 |
| Grapes | % FPR | 21.59 | 18.4 | 15.53 | 33.35 | 11.00 | 9.23 | 7.72 |
| | % TPR | 78.02 | 72.63 | 66.42 | 89.48 | 50.99 | 43.63 | 36.24 |
| Peppers | % FPR | 0.98 | 0.91 | 0.86 | 0.81 | 0.78 | 0.7 | 0.65 |
| | % TPR | 99.25 | 99.22 | 99.2 | 99.43 | 99.12 | 99.07 | 99.04 |

| DB | Measure\Training % (dataset size: 129 images) | 10 | 20 | 30 | 40 | 50 | 60 | 70 | 80 | 90 |
|---|---|---|---|---|---|---|---|---|---|---|
| Grapes | % FPR | 32.81 | 37.08 | 28.54 | 36.16 | 31.83 | 29.1 | 29.63 | 40.51 | 40.8 |
| | % TPR | 88.79 | 89.62 | 87.01 | 88.44 | 87.58 | 82.55 | 87.14 | 94.53 | 95.85 |
| | % F | 73.35 | 70.2 | 75.19 | 69.41 | 72.49 | 70.24 | 73.92 | 72.55 | 72.54 |

| DB | Measure | Performances Using Previous Params | Performances Using Tuning Process |
|---|---|---|---|
| Apples | % FPR | 2.53 | 2.59 |
| | % TPR | 89.23 | 89.45 |
| | % F | 93.08 | 93.17 |
| Grapes | % FPR | 18.63 | 33.35 |
| | % TPR | 63.7 | 89.48 |
| | % F | 67.3 | 73.52 |
| Peppers | % FPR | 1 | 0.81 |
| | % TPR | 97.97 | 99.43 |
| | % F | 98.47 | 99.31 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zemmour, E.; Kurtser, P.; Edan, Y. Automatic Parameter Tuning for Adaptive Thresholding in Fruit Detection. Sensors 2019, 19, 2130. https://doi.org/10.3390/s19092130