1. Introduction

Aircraft pose estimation is a necessary technology in the field of aeronautical engineering, and accurate pose parameters of the aircraft provide the fundamental information needed in many aerospace tasks, such as flight control system testing [1,2,3], assisted take-off and landing [4], collision avoidance and autonomous navigation [5]. Various sensors are used to determine the position and attitude of aircraft, for instance, GPS sensors, altimeters and inertial measurement units (IMU) [6]. During the past decade, considerable progress has been made in visual sensors and computer vision technologies, and a variety of vision-based methods have been developed for estimating the pose of a target. Compared to systems using GPS and IMU, vision systems are relatively inexpensive and can perform rapid pose estimation with the advantages of low power consumption, high flexibility and accuracy [7,8]. In recent years, performing robust and accurate aircraft pose estimation based on visual sensors has been an attractive research topic and remains quite challenging.

In general, the visual sensors used for aircraft pose estimation include RGB-D (Red Green Blue-Depth) sensors, binocular vision sensors and monocular cameras. RGB-D sensors provide RGB images and depth information [9,10] for pose estimation, binocular vision sensors estimate the pose of a target by extracting 3D information from two images containing overlapping regions [11,12,13], while monocular camera systems use single 2D images to estimate the pose information [14,15,16,17,18]. RGB-D sensors are referred to as active sensors while binocular vision sensors and monocular cameras are passive sensors.

The RGB-D sensor utilizes an active light source for the close-range sensing of 3D environments. Among vision-based pose estimation methods, a simultaneous localization and mapping (SLAM) technique is often used to process the obtained image sequences efficiently [19,20,21,22]. RGB-D SLAM integrates visual and depth data for real-time pose estimation. References [23,24,25,26,27] presented on-board systems for real-time pose estimation and mapping using RGB-D sensors, which enabled autonomous flight of an unmanned aerial vehicle (UAV) in GPS-denied indoor environments with no need for wireless communication. The accurate and computationally inexpensive RGB-D SLAM is performed based on visual odometry, loop closure and pose graph optimization. To reduce the computational cost of RGB-D SLAM further, methods based on signed distance functions (SDF) were proposed in [28,29] for real-time UAV pose estimation. SDFs use the distances to the surface of a 3D model to represent the scene geometry, and the pose parameters of a UAV are estimated by minimizing the error of the depth images on the SDF. By using SDFs, these methods yield pose estimates of comparable accuracy and robustness to RGB-D SLAM at a much higher speed. To increase the long-term accuracy of 3D rigid pose estimation, Gedik and Alatan [30] fused visual and depth data in a probabilistic manner based on an extended Kalman filter (EKF). Liu et al. [31] used multiple RGB-D cameras to estimate the real-time pose of a free flight aircraft in a complex wind tunnel environment. A cross-field of view real-time pose estimation system was established to acquire the 3D sparse points and the accurate pose of the aircraft simultaneously. Although the RGB-D sensor can obtain pose parameters with high precision, its short measuring distance limits its application to aircraft pose estimation in outdoor environments.

Binocular vision systems estimate the pose of the target through feature correspondence and 3D structure computation [32,33,34], and the depth information is usually extracted by stereo matching [35]. To estimate the aircraft absolute pose, 3D models or cooperative marks are also needed [36]. An on-board binocular vision-based system presented in [37] estimated the relative pose between two drones to verify the autonomous aerial refueling of UAVs. Xu et al. [38] proposed a real-time stereo vision-based pose estimation system for an unmanned helicopter landing on a moving target. The geometric features of 2D planar targets are used to simplify the process of feature extraction. The authors in [39] used a stereo vision SLAM to acquire accurate aircraft pose parameters for industrial inspection. This stereo vision SLAM estimates aircraft pose using stereo odometry based on feature tracking (SOFT), and a feature-based pose graph SLAM solution (SOFT-SLAM) is proposed. Many on-board stereo vision-based pose estimation algorithms are developed mainly for applications in which aircraft are close to 3D environments, i.e., the ratio of the stereo camera’s baseline to depth of field is relatively large. For an aircraft flying at high altitudes, it is hard to obtain accurate and robust depth information from stereo image pairs with a small baseline, and aircraft jitter also affects the stereo camera calibration. The baseline length of two cameras is an important parameter which affects the corresponding disparity between two images. A large baseline can improve the accuracy of depth estimation and increase the measurement range [40]. However, as the baseline distance increases, stereo matching becomes quite challenging for traditional binocular vision methods. The authors in [41] proposed a hybrid stereovision method to estimate the motion of UAVs. The hybrid stereovision system consisted of a fisheye camera and a perspective camera, and, unlike classical binocular vision methods, no feature matching between different views is performed. The method first estimates the aircraft’s altitude by a plane-sweeping algorithm; then, the fisheye camera is used to calculate the attitude, and the scale of the translation is obtained by the perspective camera; finally, these pose parameters are combined to acquire the UAV motion robustly.

Compared to the state-of-the-art pose estimation systems based on RGB-D and binocular vision sensors, the monocular vision system is relatively light in weight, computationally less expensive, and also suitable for long-range pose estimation. For an aircraft with an on-board monocular camera, its pose can be determined by sensing the 3D environment. An on-board monocular vision system based on cooperative target detection was established in [42] for aircraft pose estimation. Coplanar rectangular features on a known checkerboard target are extracted to determine the pose parameters, and a continuous frame detection technique is presented to avoid corner confusion. The authors in [43] presented a simple monocular pose estimation strategy for UAV navigation in GNSS-denied environments. Known 3D geometric features in observed scenes are exploited and a perspective-n-point (PnP) algorithm is selected to estimate the pose accurately. Benini et al. [44] proposed an aircraft pose estimation system based on the detection of a known marker. The on-board system performs high-frequency pose estimation for autonomous landing using parallel image processing. For environments without known prior information, monocular SLAM [45,46,47,48,49] or the structure from motion (SfM) [50,51] method can be applied to estimate the relative pose of aircraft in real time.

However, for external monocular vision systems which estimate an aircraft’s absolute pose using its 2D projected images, these on-board vision techniques are not applicable because of the unpredictable motion of aircraft and unknown scale factor. It is quite challenging to obtain accurate pose information from a single 2D image of an aircraft, especially for long distance measurements where the size of the aircraft is relatively small compared to its average distance to the monocular camera.

Prior knowledge about an aircraft’s structure, such as 3D models or synthetic image datasets, is usually needed to facilitate the estimation of aircraft pose parameters. For monocular model-based methods, the pose parameters are calculated by minimizing the distance between corresponding features extracted from 2D images and the 3D model. The authors in [52] exploited 3D shape models to estimate the motion of targets in an unsupervised manner. In this method, a set of feature point trajectories is detected in images and pose estimation is performed by aligning the trajectories and the 3D model. To reduce the problem dimension, subspace clustering is used to select the visible part of the targets’ convex hull as candidate matching points, and a guided sampling procedure is performed to obtain the alignment (i.e., the motion matrix). Reference [53] presented a monocular vision system located on a ship’s deck to estimate the UAV pose for autonomous landing. The 3D CAD (Computer Aided Design) model of the UAV is integrated into a particle filtering framework, and the estimation results of the UAV pose are obtained and optimized based on the likelihood metrics.

To reduce the complexity of model-based pose estimation, features describing the aircraft’s structure are proposed and 3D pose is estimated by pattern matching. Reference [54] extracted rotation invariant moments to describe the aircraft silhouette from monocular image data and converted the detected features to an estimated pose through a nearest neighbor search algorithm. Breuers and Reus [55] used normalized Fourier descriptors for structure extraction and estimated the aircraft’s pose by searching the best match in a reference database. An aircraft pose recognition algorithm was proposed in [56] based on locally linear embedding (LLE) which reduces the dimensionality of the problem. LLE is employed to extract structural features as inputs of a back propagation neural network and the aircraft’s pose is obtained by searching for local neighbors. Reference [57] utilized contour features to describe the aircraft’s structure and estimate the relative pose information. The algorithm first projects a 3D model into 2D images in different views and establishes a contour model dataset; then, the invariant moment and shape context are used for contour matching to recognize the aircraft’s pose. Wang et al. [58] used central moment features to acquire the pose information of commercial aircraft in a runway end safety area. Based on the structure information extracted by the central moments and random sample consensus (RANSAC) algorithm, a two-step feature matching strategy is proposed to identify an aircraft’s pose.

Although many feature-based methods have been presented to reduce the complexity of model-based pose estimation, 3D models are still required to obtain high-quality synthetic aircraft image datasets, which are necessary for reliable feature matching and accurate pose estimation. The use of detailed 3D models or feature matching is storage- and time-consuming, and for different types of aircraft, it is necessary to prepare corresponding 3D models and/or feature datasets, which also reduces flexibility and efficiency.

In this paper, a vision system using two monocular cameras with a large baseline is presented for pose estimation of model-unknown aircraft. In practical applications, our vision system uses two cameras located on the ground to track a free flight aircraft simultaneously and estimate its real-time 3D pose. For every captured image, the camera orientation parameters are recorded and known. Compared to other vision methods based on detailed 3D models or feature datasets, our approach only utilizes a pair of 2D images captured at the same time to estimate the 3D pose of an aircraft robustly and accurately. Some prior assumptions about the general geometric structure of aircraft are exploited to recognize the aircraft’s main structure and obtain 3D/2D feature line correspondences for pose solutions. With a very large baseline distance, our system can perform long-range measurement and enhance the accuracy of pose computation. By utilizing common structural features of aircraft, the line correspondences are established without relying on 3D models, and there is no need for stereo matching, which is very difficult for wide-baseline images.

Compared with other systems using binocular stereo vision sensors for aircraft pose estimation, our vision system enables binocular vision sensors to achieve a larger measuring range by adopting a much wider baseline configuration, and our method can estimate the aircraft pose from the wide-baseline image pairs robustly and accurately. Moreover, our visual sensor system is more flexible in estimating the pose of different types of aircraft as it does not need to preload detailed 3D models or feature database.

In our pose estimation method, the aircraft’s structure in a single image is represented by line features, and the spatial and geometric relationships between extracted line features are exploited for the extraction of the aircraft structure. The line features are detected by a line segment detector (LSD) algorithm, and a mean shift algorithm is used for locating the aircraft’s center. Based on the orientation consistency constraint of line features distributed on the fuselage, a density-based clustering algorithm is used to determine the fuselage reference line by grouping line segments with similar directions. Through analysis of common aircraft wing patterns, we assume that the aircraft is bilaterally symmetric and that the wings are coplanar with the fuselage reference line. Under these prior assumptions, the line segments which correspond to the wing leading edges are extracted by vector analysis. After identifying the projected fuselage reference line and wing edges in a pair of images, line correspondences between the aircraft’s 3D structure and its 2D projection are established and the pose information is obtained using a planes intersection method. As no detailed 3D models and/or feature datasets are required, our vision system provides a common architecture for aircraft pose estimation and can estimate the pose of different aircraft more flexibly and efficiently.

The remainder of the article is organized as follows: Section 2 provides the coordinate system definition and an overview of our vision system. Section 3 describes our pose estimation algorithm in detail. The experimental results are presented in Section 4 to verify the effectiveness and accuracy of our algorithm. Section 5 elaborates on the conclusions.

2. Coordinate System Description and Problem Statement

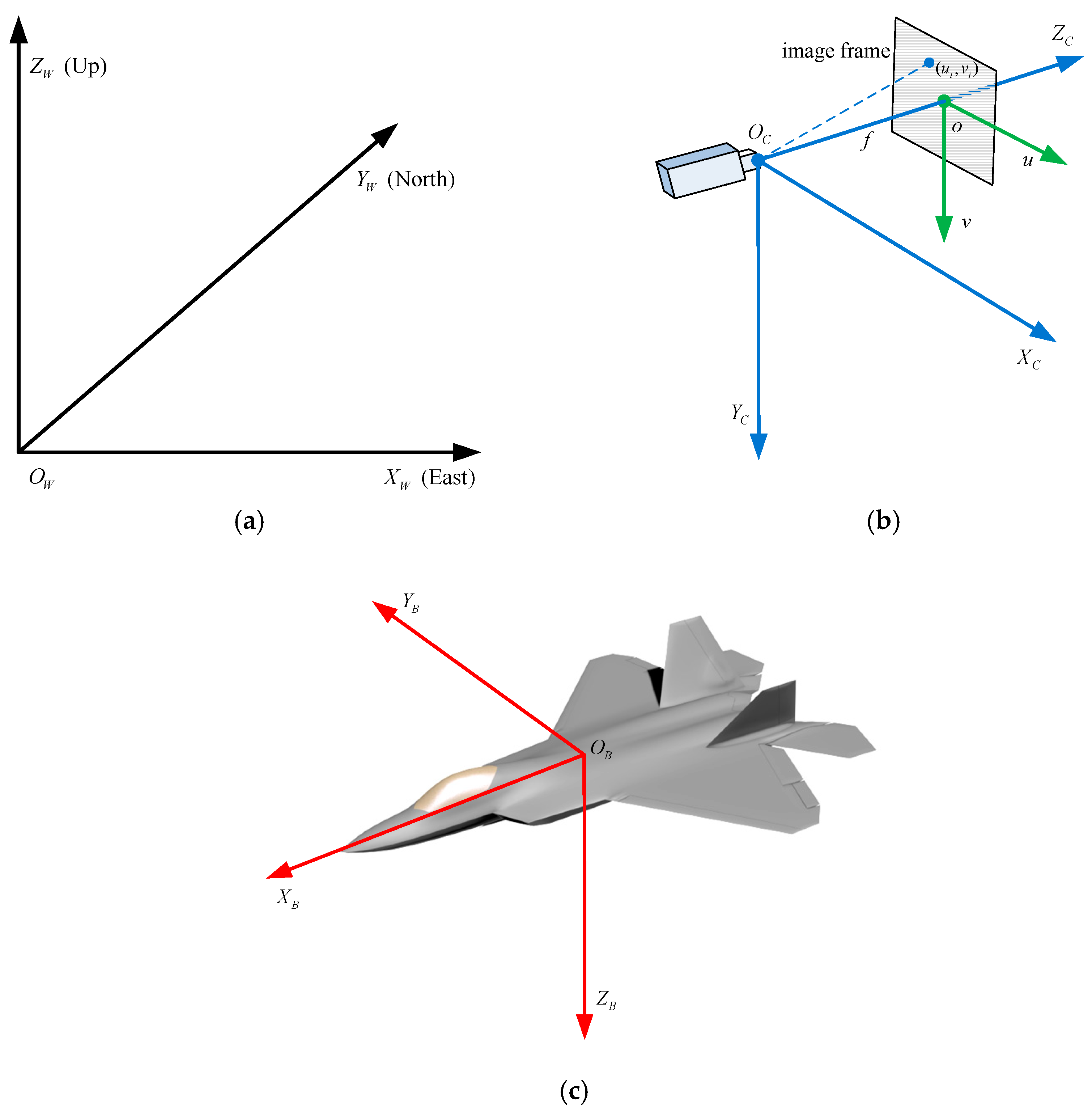

In this section, we briefly introduce the coordinate systems and pose estimation problem. Four major coordinate systems used for pose estimation are shown in Figure 1. Figure 1a shows the world coordinate system in which the camera tracks the aircraft, and the absolute pose of an aircraft is defined with respect to the world frame. In our method, the east–north–up (ENU) coordinate system is used as the world coordinate system. Figure 1b shows the camera coordinate system and image coordinate system. The camera frame is tied to a monocular camera which projects the aircraft onto the image frame. The optical center of the camera is treated as the origin of the camera frame, and the optical axis of the camera lies along the z axis of the camera frame; the horizontal axis (u) of the image frame is parallel to the x axis of the camera coordinate frame and points to the right, and the vertical axis (v) of the image frame is parallel to the y axis of the camera coordinate frame in the right-handed coordinate system.

The camera is calibrated with respect to the world coordinate system and its orientation parameters corresponding to the captured image are recorded, i.e., the interior and exterior orientation parameters of the camera are considered to be known.

Figure 1c shows the body coordinate system of an aircraft. The origin is located at the centroid of the aircraft. The x axis points along the fuselage reference line; the z axis is perpendicular to the plane containing the fuselage reference line and wing leading edge lines and points downward; and the y axis is perpendicular to the bilateral symmetry plane of the aircraft in the right-handed coordinate system. As is shown in Figure 1c, for fixed-wing aircraft, the wings are mounted to the fuselage, and the wing leading edges and fuselage reference line are approximately coplanar.

The schematic representation of the pose estimation problem is shown in Figure 2, where a vision system using two monocular cameras is established for pose estimation of an aircraft. The baseline distance between the two cameras in our vision system is large, and our method only utilizes a wide-baseline image pair captured at the same time to solve the absolute pose of the aircraft robustly and accurately.

The location and orientation of the body frame with respect to the world frame represent the 3D pose parameters of the aircraft, which are the solutions of the pose estimation problem. For many aircraft, bilateral symmetry is an inherent property and the wings are coplanar with the fuselage reference line. Moreover, line features distributed along the fuselage are approximately parallel to the fuselage reference line. These geometric structural features are exploited in our method to facilitate aircraft structure recognition and pose estimation.

3. Pose Estimation Method

For the absolute pose estimation problem, the orientation and location of the aircraft were specified using a rotation matrix and a translation vector, which define the transformation from the body frame to the world frame. The yaw, pitch, and roll angles (the Euler angles) of the aircraft were extracted from the rotation matrix, and the translation vector represented the 3D position of the aircraft in the world coordinate system.
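As a minimal sketch of how Euler angles relate to a rotation matrix, the Python snippet below assumes a Z-Y-X (yaw, pitch, roll) convention with R = Rz(yaw) Ry(pitch) Rx(roll). This convention and the function names `rotation_from_euler`, `euler_from_rotation` and `body_to_world` are illustrative assumptions, not something stated in the text; the exact extraction depends on how the body and world axes are defined.

```python
import math


def rotation_from_euler(yaw, pitch, roll):
    """Build R = Rz(yaw) * Ry(pitch) * Rx(roll); angles in radians (assumed ZYX convention)."""
    cy, sy = math.cos(yaw), math.sin(yaw)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cr, sr = math.cos(roll), math.sin(roll)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def euler_from_rotation(R):
    """Recover (yaw, pitch, roll) from R; valid away from pitch = +/-90 degrees."""
    # Clamp to [-1, 1] to guard against rounding errors before asin.
    pitch = -math.asin(max(-1.0, min(1.0, R[2][0])))
    yaw = math.atan2(R[1][0], R[0][0])
    roll = math.atan2(R[2][1], R[2][2])
    return yaw, pitch, roll


def body_to_world(R, t, p_body):
    """Map a body-frame point to the world frame: p_world = R * p_body + t."""
    return [sum(R[i][j] * p_body[j] for j in range(3)) + t[i] for i in range(3)]
```

Near pitch = ±90° the yaw and roll angles are no longer separately observable (gimbal lock), so a practical implementation would need to treat that case explicitly.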

To obtain the solution for the rotation matrix and translation vector, it was necessary to establish the correspondences between features in the body frame and the world frame. In our method, the feature correspondences were found by structure extraction. With some prior assumptions about the geometric relationships between the fuselage reference line and wing leading edges, our pose estimation method first extracted the fuselage reference line and the wing leading edge lines from a given pair of images to establish the line correspondences, then used a planes intersection method to obtain the pose information. The details of the structure extraction and plane intersection algorithm are described in the following sections.
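The geometric idea behind intersecting planes can be sketched as follows. An image line and the camera's optical center span a plane in space, and the 3D line that produced the image line lies in that plane. With two cameras, the 3D line direction is therefore the cross product of the two plane normals. The snippet assumes the viewing rays through the image line's endpoints have already been expressed in the world frame using the known camera orientation parameters. The helper names are hypothetical, and this is a generic sketch rather than the specific algorithm detailed later in the paper.

```python
import math


def cross(a, b):
    """Cross product of two 3-vectors."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def normalize(v):
    """Scale a vector to unit length; fail loudly on degenerate input."""
    n = math.sqrt(sum(c * c for c in v))
    if n == 0.0:
        raise ValueError("degenerate configuration: zero-length vector")
    return tuple(c / n for c in v)


def backprojected_plane_normal(ray_a, ray_b):
    """Normal of the plane spanned by two viewing rays of one camera.

    ray_a and ray_b are world-frame directions from the optical center
    through the two endpoints of an image line.
    """
    return normalize(cross(ray_a, ray_b))


def line_direction_from_two_views(normal_1, normal_2):
    """Direction of the 3D line lying in both back-projected planes (sign is arbitrary)."""
    return normalize(cross(normal_1, normal_2))
```

The construction breaks down when the two planes coincide, for example when the 3D line is parallel to the baseline and lies in the plane through both optical centers; `normalize` raises an error in that case.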

3.1. Structure Extraction

A novel structure extraction method is presented to detect the fuselage reference line and wing leading edge lines from a 2D image without using 3D models, cooperative markers, or other feature datasets. For the pose estimation of a free flight aircraft at long distance, it was difficult to establish reliable feature point correspondences because of optical blur, ambiguities, and self-occlusions. In these cases, line features were more robust and accurate and were less affected by the unpredictable motion of the aircraft. To acquire accurate and robust pose information from 2D images, line segments were used for structure description and recognition.

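In our algorithm, some assumptions about the generic geometric structure of the aircraft are exploited. The most important assumptions used for structure extraction are as follows:

(1) The aircraft is bilaterally symmetric, and its wing leading edges are approximately coplanar with the fuselage reference line.

(2) Line features distributed along the fuselage are approximately parallel to the fuselage reference line.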

These two assumptions reflect the geometric relationships between line features on the aircraft. Weak perspective projection (scaled orthographic projection), which is a first-order approximation of the perspective projection, was also assumed. As the size of the aircraft is small compared to its average distance from the monocular camera, the weak perspective assumption holds approximately.
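To make the weak perspective assumption concrete, the short Python sketch below projects camera-frame points with a single scale factor, focal length divided by mean depth, instead of dividing each point by its own depth. The function names and the principal-point parameter are illustrative assumptions. Because the mapping is linear in the x and y coordinates, 3D segments that are parallel stay parallel in the image.

```python
def weak_perspective(point_cam, focal, mean_depth, principal=(0.0, 0.0)):
    """Project a camera-frame point (x, y, z) with one shared scale factor.

    Every point is scaled by focal / mean_depth, where mean_depth is the
    average object depth, so the point's own z coordinate is ignored.
    """
    s = focal / mean_depth
    return (s * point_cam[0] + principal[0], s * point_cam[1] + principal[1])


def cross2d(a, b):
    """2D cross product; zero when the two image vectors are parallel."""
    return a[0] * b[1] - a[1] * b[0]


def image_direction(p0_cam, p1_cam, focal, mean_depth):
    """Image-plane direction of the projected segment p0 -> p1."""
    u0, v0 = weak_perspective(p0_cam, focal, mean_depth)
    u1, v1 = weak_perspective(p1_cam, focal, mean_depth)
    return (u1 - u0, v1 - v0)
```

Under full perspective projection the same two segments would generally project to lines that converge toward a vanishing point, which is why the approximation is tied to the object being small relative to its distance.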

Based on these assumptions, the geometric configuration of line features, such as geometric consistency (position, length and orientation) and linear combinations of vectors (directed line segments) were exploited to extract the main structure (fuselage and wing leading edges) of the aircraft and establish line correspondences. Robust and accurate structure extraction can lay a good foundation for pose estimation.

3.1.1. Line Feature Detection

The line features were detected in 2D images using the line segment detector (LSD) algorithm [59], which is a state-of-the-art method for extracting line segments with subpixel accuracy in linear time. Reference [60] compared different line detection methods and concluded that the LSD algorithm performed best under different illumination conditions, scales, and degrees of blur.

Figure 3a shows the result of line feature detection, in which detected line features are expressed by red line segments. As is shown in Figure 3a, the structure information of the aircraft was described by line segments, and the geometric configuration between these line segments was analyzed in the following sections to determine the fuselage reference line and wing leading edge lines. The set of line features detected by the LSD algorithm is denoted by

, and the following structure extraction process is based on the line feature set

.

3.1.2. Extraction of Fuselage Reference Line

Our structure extraction method leveraged spatial, length and orientation consistency constraints of the line features to extract the fuselage reference line. Firstly, the spatial consistency constraint was used to identify the centroid of the aircraft and combined with length constraint to remove irrelevant line features; then, orientation consistency-based line clustering analysis was performed to recognize the aircraft’s structure and estimate the direction of fuselage reference line.

In actual applications, such as flight tests, take-off and landing, our vision system tracked a single aircraft and captured its 2D projected image, so the image area that contained the aircraft was a salient region in which detected line features were concentrated. Compared to unrelated line segments distributed in the background, line segments of the aircraft were close to each other and formed a high-density region of line features. Based on this spatial consistency constraint, the mean shift algorithm [61] was used for line feature clustering to determine the center of the aircraft and exclude irrelevant line features.

Mean shift is a non-parametric mode-seeking technique which locates the maxima of a density function iteratively. In our case, it was suitable for indicating the density model of line features and determining the centroid of the aircraft. Given an initial estimation, mean shift algorithm used a kernel function to determine the weight of adjacent elements and re-estimate the weighted mean of the density. The line feature set

was the input of the mean shift algorithm, each adjacent element was an image pixel

(represented by

, where

and

are the horizontal and vertical coordinates of the pixel respectively) on a line segment in

, and the kernel function is a Gaussian kernel

. The Gaussian kernel

and the weighted mean

are defined as follows:

in which

represents the weight of the Gaussian kernel on the distance and

is the neighborhood of adjacent elements. After the aircraft’s center is determined, line segments within a certain distance from the cluster center are retained and regarded as line features on the aircraft, while other line segments farther from the center are excluded from the set

. The mean shift algorithm can estimate the aircraft center robustly and has a certain tolerance to background clutter. The result of mean shift clustering is shown in Figure 3b, where the cluster center is marked by a green cross and the remaining line features are represented by red line segments. Compared to Figure 3a, we can see that many irrelevant line segments distributed in the background are effectively eliminated by leveraging the spatial geometric constraint.

However, there were still some unrelated line features left in the line feature set

, as we can see in Figure 3b. To further reduce the adverse effect of irrelevant line segments, the length consistency constraint was adopted and only line segments longer than a certain threshold were retained in

.

In general, the lengths of the aircraft’s main structures (fuselage and wings) were larger than the lengths of other parts such as the tail or external mounts, so it is reasonable to exclude shorter line segments from the line feature set. Moreover, the length consistency constraint improved the accuracy of structure extraction. For a line feature detected by the LSD algorithm, the uncertainty of its direction increases as its length decreases; that is, a small pixel coordinate error at an endpoint causes a larger orientation error for a shorter line segment.
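A rough worked example illustrates why short segments are less reliable. If one endpoint is displaced by e pixels perpendicular to a segment of length l, the direction changes by about atan(e / l). The sketch below uses this simplified error model, which is an assumption made for illustration, together with a hypothetical length filter. For a 1-pixel error it gives about 5.7° for a 10-pixel segment but only about 0.57° for a 100-pixel segment.

```python
import math


def orientation_error_deg(length_px, endpoint_error_px=1.0):
    """Approximate direction error (degrees) caused by a perpendicular endpoint error."""
    return math.degrees(math.atan2(endpoint_error_px, length_px))


def filter_by_length(segments, min_length_px):
    """Keep segments (x0, y0, x1, y1) whose length is at least min_length_px."""
    return [
        s for s in segments
        if math.hypot(s[2] - s[0], s[3] - s[1]) >= min_length_px
    ]
```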

Figure 3c shows the result of excluding shorter line segments. As can be seen, the irrelevant line features were further eliminated in comparison with Figure 3b.

After acquiring the estimation of the aircraft’s center and removing irrelevant line features, the orientation consistency constraint was utilized to estimate the direction of the fuselage reference line. Under weak perspective projection, if the line segments are parallel to each other in 3D space, then this geometric constraint of corresponding line features is invariant after the 3D world to 2D image transformation.

For many aircraft, line segments distributed along the fuselage are approximately parallel to the fuselage reference line, and parallel world lines are transformed to parallel image lines by the weak perspective camera. In the projected 2D image, angle values of line features along the fuselage were close to each other and concentrated around the orientation of the fuselage reference line (small standard deviation). Moreover, compared with other parts of the aircraft, the fuselage contains the most parallel line segments. By performing parallel line clustering to represent the aircraft structure, the orientation of the fuselage reference line was determined.

The input of the parallel line clustering analysis was the directions of line features in

, which is represented as

. Based on the orientation consistency constraint, the center of the highest density area of

indicates the orientation of the fuselage. The orientation of a line feature is defined as the angle

of the straight line specified by this line feature, and the orientation of the fuselage reference line is represented as

. To seek regions in

which have a high density and estimate the direction of the fuselage reference line, the density-based spatial clustering of applications with noise (DBSCAN) algorithm [62] was used for the parallel line clustering.

DBSCAN is a density-based clustering algorithm, and the clustering depends solely on the spatial density of the data. Two parameters were used in the DBSCAN algorithm to describe the spatial distribution of the data: the distance threshold

and the minimum number of points

. The distance threshold

measured the proximity of two data points while

determines the minimum number of line features required to form a high-density region. For parallel line clustering,

is the absolute difference between angle values. By using these two parameters, data points are partitioned into three types according to their spatial patterns, as is shown in Figure 4:

Core points (red points in Figure 4, the dense region of a cluster): its

neighborhood contains at least

points;

Border points (green points in Figure 4, the edge region of a cluster): the number of data points in its

neighborhood is less than

, but it can be reached from a core point in its

neighborhood (as displayed by one-way arrows in Figure 4);

Noise points (blue points in Figure 4, isolated outliers): it is neither a core point nor a border point.

To discover the orientation consistent clusters in

, the DBSCAN algorithm first counted the number of points in every data point’s

neighborhood and identified the core points according to

; then core points were partitioned into parallel line clusters leveraging the geometric constraint on the connected graph (see two-way arrows in Figure 4). Finally, for every non-core point, if there was a core point in its

neighborhood, it was considered a border point; otherwise, it was an outlier. The orientation-consistent cluster with the most parallel lines was considered as the representation of the fuselage in the image, and the orientation information of the fuselage reference line was acquired by extracting the center of this line feature cluster. Compared to other clustering approaches such as k-means [63] and the Gaussian mixture model (GMM) [64], the DBSCAN algorithm did not need prior knowledge about the number of clusters and can discover high-density areas of arbitrary shape and size. It was also insensitive to outliers and the ordering of the data points.
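The clustering procedure above can be sketched for one-dimensional angle data in plain Python. The sketch uses the absolute difference between angle values as the distance, counts a point as part of its own neighborhood, and takes the mean of the largest cluster as its center. The function names, the default parameter values and the use of the mean are assumptions made for illustration. Wrap-around between angles near 0° and 180° is not handled.

```python
def dbscan_1d(values, eps, min_pts):
    """Label 1D values with DBSCAN; returns a cluster index per value, -1 for noise."""
    n = len(values)
    # Neighborhood of each point (the point itself is included).
    neighbors = [
        [j for j in range(n) if abs(values[i] - values[j]) <= eps]
        for i in range(n)
    ]
    core = [len(nb) >= min_pts for nb in neighbors]
    labels = [-1] * n
    cluster = 0
    for i in range(n):
        if not core[i] or labels[i] != -1:
            continue
        # Grow a new cluster from an unvisited core point.
        labels[i] = cluster
        stack = [i]
        while stack:
            k = stack.pop()
            for j in neighbors[k]:
                if labels[j] == -1:
                    labels[j] = cluster
                    if core[j]:
                        stack.append(j)  # only core points keep expanding
        cluster += 1
    return labels


def fuselage_orientation(angles_deg, eps=3.0, min_pts=3):
    """Return the mean angle of the largest orientation cluster, or None."""
    labels = dbscan_1d(angles_deg, eps, min_pts)
    clusters = {}
    for angle, label in zip(angles_deg, labels):
        if label != -1:
            clusters.setdefault(label, []).append(angle)
    if not clusters:
        return None
    largest = max(clusters.values(), key=len)
    return sum(largest) / len(largest)
```

Border points are attached to the first cluster that reaches them and never expand it, so isolated orientations such as those from the tail or background remain labeled as noise.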

After obtaining the orientation-consistent cluster which represents the fuselage structure, line features not belonging to the fuselage are excluded from

and form a new set

which was used for the extraction of wing leading edges. As only line features belonging to the fuselage remained in

, it was possible to identify the fuselage’s center with a higher precision, and the image moment method was used to re-estimate the center of

to obtain the estimation of the fuselage center, which is given by:

where

is the estimated center of the fuselage, and

is the number of pixels on the line features in

. In the case of a clean background, the image moment method can also be used to estimate the centroid of the aircraft. The result of parallel line clustering is shown in Figure 3d, in which the estimated centroid of the fuselage is marked by a green cross and the fuselage reference line is indicated by the red line. As is shown in Figure 3d, the fuselage reference line was correctly extracted. The estimated orientation

and center

determine the 2D pose information of the aircraft’s fuselage in the image, and the extracted fuselage line will provide important auxiliary information for the following structure extraction.

3.1.3. Extraction of Wing Leading Edges

To identify the line features which correspond to the wing leading edge lines of the aircraft, it was assumed that the wings were bilaterally symmetrical and approximately coplanar with the fuselage reference line (the fuselage reference line passes through the point of intersection of the wing leading edge lines). By observing the wing patterns of many aircraft, the validity of the assumption was confirmed. Based on the lateral symmetry of the aircraft, the wing leading edges were expressed as two symmetric vectors of equal length. With the additional condition that the wings were coplanar with the fuselage reference line, the sum of these two vectors was parallel to the fuselage reference line.

Since the geometric properties of linear combinations of vectors are invariant under weak perspective projection, after the aircraft in three-space is projected onto the image plane by a weak perspective camera, the sum of the two directed wing leading edges in the image is still parallel to the fuselage reference line. The following is a proof of this geometric invariant property.

In the world coordinate frame, two vectors representing the wing leading edges were denoted by

and

; the sum of these two vectors, which is parallel to the fuselage reference line, is

. While in the image coordinate frame, the corresponding projection vectors were represented as

,

and

. The world coordinates of a vector endpoint were denoted by

and the corresponding image coordinates were

. As the world and image points (

and

) are represented as homogeneous vectors, and depth of field is a positive constant under weak perspective projection, the world to image transformation of every vector endpoint can be represented compactly as:

where

is the projection matrix of the camera. By using Equation (3), the geometric transformations between vectors in the world frame and image frame are established as follows:

As

is the sum of

and

:

By substituting Equation (5) into Equation (4), the geometric invariant property is obtained:

Since

is the projection of

, the sum of

and

is parallel to the fuselage reference line in the image. An intuitive representation of this geometric constraint in the image frame is shown in

Figure 5, where the red line indicates the fuselage reference line and the green arrows indicate the directed wing leading edges. After the 3D world to 2D image transformation under weak perspective projection, the sum of the directed wing leading edges is still parallel to the unit vector of the fuselage reference line.
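The invariance can also be checked numerically. The sketch below is only an illustration under stated assumptions: the function names, the camera parametrization (a scaled orthographic 3 × 4 matrix built from a rotation, a translation and a single scale factor) and the wing geometry parameters `sweep` and `length` are hypothetical and are not taken from the paper. Because a weak perspective camera is affine, the projection of a difference of endpoints is linear in the 3D vector, so the projected wing vectors should sum to a vector parallel to the projected fuselage direction.

```python
import numpy as np

def weak_perspective_matrix(R, t, scale):
    """Build a 3x4 weak perspective (scaled orthographic) camera matrix.

    R is a 3x3 rotation, t a 3-vector, scale the constant focal/depth ratio.
    The last row is (0, 0, 0, 1), which makes the camera affine.
    """
    P = np.zeros((3, 4))
    P[:2, :3] = scale * R[:2, :]
    P[:2, 3] = scale * t[:2]
    P[2, 3] = 1.0
    return P

def project_vector(P, start, end):
    """Project both endpoints of a 3D vector and return the 2D image vector."""
    a = P @ np.append(start, 1.0)
    b = P @ np.append(end, 1.0)
    return (b - a)[:2]  # homogeneous coordinates are both 1, so they cancel

def cross2(a, b):
    """Scalar 2D cross product; zero when a and b are parallel."""
    return a[0] * b[1] - a[1] * b[0]

def check_invariance(P, apex, fuselage_dir, span_dir, sweep=0.6, length=5.0):
    """Return (v1 + v2, projected fuselage vector, their 2D cross product).

    The two wing vectors are symmetric about fuselage_dir and coplanar with it
    (the assumption stated in the text); sweep and length are illustrative.
    """
    V1 = length * (sweep * fuselage_dir + span_dir)
    V2 = length * (sweep * fuselage_dir - span_dir)
    v1 = project_vector(P, apex, apex + V1)
    v2 = project_vector(P, apex, apex + V2)
    f = project_vector(P, apex, apex + fuselage_dir)
    total = v1 + v2
    return total, f, cross2(total, f)
```

A vanishing cross product for an arbitrary rotation and translation is what the proof predicts; under a full perspective camera the same check would generally fail.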

Based on this geometric invariant property, the wing leading edge lines in the image are extracted using vector calculations. We first vectorized line segments in

based on the extracted fuselage reference line. For the vector representation of every line segment, the endpoint of the line segment which was farther from the fuselage reference line was taken as the terminal point of the vector, and the point where the extended line segment intersects the fuselage reference line was taken as the beginning of the vector. Then for every two vectors in the set
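The vectorization step can be sketched as follows. This is a minimal illustration, assuming that each line segment is given by two 2D endpoints and that the fuselage reference line is given by a point on it and a unit direction; the function name `vectorize_segment`, its arguments and the tolerance `eps` are hypothetical.

```python
import numpy as np

def cross2(a, b):
    """Scalar 2D cross product."""
    return a[0] * b[1] - a[1] * b[0]

def vectorize_segment(p, q, c, u, eps=1e-9):
    """Turn a line segment (p, q) into a directed vector (start, end).

    c is a point on the fuselage reference line and u its unit direction.
    start: intersection of the extended segment with the fuselage line.
    end:   the segment endpoint farther from the fuselage line.
    Returns None when the segment is (nearly) parallel to the fuselage line,
    since no intersection exists in that case.
    """
    p, q, c, u = (np.asarray(v, dtype=float) for v in (p, q, c, u))
    d = q - p
    denom = cross2(d, u)
    if abs(denom) < eps:
        return None
    # Solve p + s * d = c + t * u for s by eliminating t with a cross product.
    s = cross2(c - p, u) / denom
    start = p + s * d
    # Perpendicular distances of the endpoints to the fuselage line.
    dist_p = abs(cross2(p - c, u))
    dist_q = abs(cross2(q - c, u))
    end = p if dist_p > dist_q else q
    return start, end
```

Segments parallel to the fuselage reference line are skipped here, which is a design choice of this sketch rather than something stated in the text.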

, vector calculations were performed to obtain the value of an objective function. The objective function was proposed to extract the wing leading edge lines based on the geometric invariance constraints. Let the unit vector giving the direction of the fuselage reference line be

, for two vectors

and

in

, whose beginnings are

and

respectively, the objective function is given by:

where

represents the magnitude of a vector and each item in the objective function is normalized into the range

. The two vectors for which

attains its maximum value are regarded as the correspondences of the wing leading edges and used to establish the line correspondences, i.e., our method extracts the wing leading edges from the image by solving this:

The parallelism term is the cosine of the angle between the sum of the two vectors and the unit vector of the fuselage reference line, and it measures the degree of parallelism between them. If the sum is parallel to the fuselage reference line, this term reaches its maximum value of 1. For two vectors corresponding to the wing leading edges, the sum is approximately parallel to the fuselage reference line, and the value of this term is close to 1.

The half-angle term is the cosine of half the angle between the two vectors. For the wing trailing edges of an aircraft, the sum of the corresponding vectors is also parallel to the fuselage reference line, so this term is used to distinguish between the wing leading edges and trailing edges. As the angle between the wing leading edges is usually smaller than the angle between the wing trailing edges, this term takes a greater value for the wing leading edges.

The span term is a vector magnitude. As the wingspan represents the width of an aircraft, for two vectors corresponding to the wing leading edges this magnitude indicates the distance between the wingtips and reaches its largest value.

The intersection term measures the distance between the beginnings of the two vectors. Since the wing leading edges intersect at a point, if the two vectors correspond to the wing leading edge lines, then the value of this term is close to zero.

The length term is the sum of the magnitudes of the two vectors. Since the wing leading edges are in general longer than the aircraft’s other structures, including the wing trailing edges, this term has a larger value for the vectors corresponding to the wing leading edges.

Based on the above analysis, the objective function, which is the weighted sum of these items, empirically attained its maximum at the two vectors corresponding to the wing leading edges. Three of these items play more important roles and are assigned greater weights. Although the 2D pose information of the fuselage was required, the extraction of the wing leading edges can tolerate the error of fuselage extraction to some extent, and a very high-precision fuselage pose estimation is not necessary.
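A possible shape of such an objective function is sketched below. It is not the paper's exact formula: the normalization by a user-supplied length `scale`, the range [0, 1] for every item, the use of the distance between terminal points as the span item, the conversion of the intersection distance into a reward (1 minus the normalized distance) and the weight vector are all assumptions made for illustration.

```python
import numpy as np
from itertools import combinations

def objective(vec_a, vec_b, u, scale, weights):
    """Weighted sum of five items for a pair of directed segments.

    vec_a, vec_b: (start, end) pairs of 2D points.
    u: unit direction of the fuselage reference line.
    scale: assumed normalizing length (for example, the image diagonal).
    weights: array of five non-negative weights (assumed values).
    """
    (s1, e1), (s2, e2) = vec_a, vec_b
    a, b = e1 - s1, e2 - s2
    na, nb = np.linalg.norm(a), np.linalg.norm(b)
    total = a + b
    nt = np.linalg.norm(total)
    if min(na, nb, nt) == 0.0:
        return -np.inf
    # Parallelism between the sum vector and the fuselage direction.
    parallel = abs(total @ u) / nt
    # Cosine of half the angle between the two vectors (half-angle identity).
    cos_angle = np.clip((a @ b) / (na * nb), -1.0, 1.0)
    half_angle = np.sqrt((1.0 + cos_angle) / 2.0)
    # Distance between terminal points (assumed to stand for the wingtips).
    span = min(np.linalg.norm(e1 - e2) / scale, 1.0)
    # Distance between beginnings, turned into a reward close to 1 when small.
    closeness = 1.0 - min(np.linalg.norm(s1 - s2) / scale, 1.0)
    # Sum of the two magnitudes.
    length = min((na + nb) / (2.0 * scale), 1.0)
    terms = np.array([parallel, half_angle, span, closeness, length])
    return float(np.dot(weights, terms))

def best_pair(vectors, u, scale, weights):
    """Exhaustively score every pair and return ((i, j), score) of the best."""
    scored = ((objective(vectors[i], vectors[j], u, scale, weights), (i, j))
              for i, j in combinations(range(len(vectors)), 2))
    score, pair = max(scored)
    return pair, score
```

The exhaustive search over pairs is quadratic in the number of candidate vectors, which is acceptable when irrelevant line features have already been eliminated.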

By solving Equation (8), the lines to which the wing leading edges belong are detected in the image, and the correspondences between line features in the image and the aircraft structure in 3D space are established. The results of wing leading edge extraction are shown in

Figure 6 where the green lines indicate the wing leading edge lines of the aircraft. As is shown, the wing leading edge lines were extracted correctly.

While the wing trailing edges could also be extracted by adjusting the weights in Equation (7), their line features were vulnerable to interference from other parts of the aircraft. In

Figure 6, the wing trailing edge was occluded by the tail and it was difficult to detect the corresponding line features. Because the line features of the wing leading edges are more stable and less affected by the unpredictable motion of the aircraft, only the wing leading edge lines are extracted for pose estimation.

3.2. Planes Intersection Based on Line Correspondences

After extracting the wing leading edge lines and establishing the line correspondences, the planes intersection method was used for aircraft pose estimation. The geometric configuration of the planes intersection method is explained in

Figure 7. As we can see, the representation of a line in three-space (the wing leading edge line in our method) was determined by the two planes defined by line correspondences.

Although the fuselage reference line was extracted robustly and used to facilitate the wing leading edge extraction, the parameters which identify the fuselage reference line may be less accurate. To perform high-precision pose estimation, only wing leading edge lines in an image pair were utilized in intersection calculation.

In

Figure 7, two monocular cameras are used for plane-plane intersection and indicated by their optical centers

and

, and by image planes (gray regions in

Figure 7). Similar to Equation (3), the camera model under weak perspective projection is represented compactly as:

in which

is the projection matrix of the monocular camera

,

is the world coordinates and

is the corresponding image coordinates.

Our planes intersection method considered the lines as infinite, and the endpoints of line features were not used for pose estimation. Let a 3D line in the world frame represent one of the wing leading edge lines, and let its projected line in each of the two images be given in parametric form. The parameters of the 3D line can be computed by intersecting the two planes defined by these image lines.

The plane

is defined by the image line

and the optical center

, and it can be represented conveniently as a 4-vector:

where

and

are already known, and for a world point

contained in the plane

,

. Based on Equation (10), the 3D line

, which is the intersection of the two planes

and

, is parametrized by the span representation:

Here, the line is represented as a 2 × 4 matrix whose rows are the two planes, and if a world point lies on the line, then its product with this matrix is the zero vector. After obtaining the span representation of the line in 3D space, the normalized vector giving the direction of the line is also determined. Our pose estimation method uses this normalized vector to identify the orientation of a wing’s leading edge in the world frame and to obtain the 3D attitude of the aircraft.
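The plane construction and the span representation can be sketched in a few lines. The sketch assumes the standard back-projection of an image line through a 3 × 4 camera matrix (the plane is the transposed camera matrix times the image line) and takes the line direction as the cross product of the two plane normals; the function names are hypothetical.

```python
import numpy as np

def backproject_line(P, l):
    """Plane (homogeneous 4-vector) back-projected from image line l.

    P is the 3x4 camera matrix and l the image line as a 3-vector, so that
    l @ x = 0 for image points x on the line. Every world point X that
    projects onto the line satisfies (P.T @ l) @ X = 0.
    """
    return P.T @ l

def span_representation(pi_1, pi_2):
    """Stack two planes into the 2x4 matrix W with W @ X = 0 on the line."""
    return np.vstack([pi_1, pi_2])

def line_direction(W):
    """Unit direction of the 3D line: orthogonal to both plane normals."""
    d = np.cross(W[0, :3], W[1, :3])
    norm = np.linalg.norm(d)
    if norm == 0.0:
        raise ValueError("planes are parallel; the line is not determined")
    return d / norm
```

The recovered direction is defined only up to sign, and the computation degenerates when the two back-projected planes are nearly parallel, which happens when the baseline between the cameras is close to the plane containing the line.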

Let

be the right wing leading edge line in 3-space, and

be the left wing leading edge line; the normalized vectors of

and

are represented as

and

respectively. The direction of the

x axis of the body frame is parallel to

, while the direction of the

y axis of the body frame is parallel to

. By using singular value decomposition (SVD) [

65], the rotation matrix between the body frame and world frame which determines the aircraft attitude in three-space is obtained up to a reflective ambiguity.

To resolve this reflective ambiguity, an initial pose constraint is used in our method. The approximate orientation of the aircraft is specified in the initial frame of the image sequence, and the orientation estimated in each frame is then used as the constraint for the next frame. In practice, either the approximate roll angle of the aircraft is provided, or the approximate position of one wing tip is marked on one image of the initial image pair. This pose constraint is easy to provide in the application scenarios of our pose estimation method, for example, take-off, landing, and flight testing.
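One way to assemble the rotation matrix from the two wing directions is sketched below. Several choices here are assumptions rather than the paper's definitions: the body x axis is taken along the sum of the two unit directions, the body y axis along their difference, the sign of each direction is fixed by comparison with an approximate reference direction (standing in for the initial pose constraint), and the SVD is used to project the assembled axes onto the nearest rotation matrix.

```python
import numpy as np

def align_sign(d, d_ref):
    """Flip d if it points away from the approximate reference direction."""
    return d if d @ d_ref >= 0.0 else -d

def attitude_from_wings(d_right, d_left, d_right_ref, d_left_ref):
    """Rotation from body frame to world frame (columns are body axes).

    d_right, d_left: estimated wing leading edge directions, sign unknown.
    d_right_ref, d_left_ref: approximate directions, e.g. from the previous
    frame, used only to resolve the sign ambiguity.
    """
    dr = align_sign(d_right / np.linalg.norm(d_right), d_right_ref)
    dl = align_sign(d_left / np.linalg.norm(d_left), d_left_ref)
    x = dr + dl          # assumed to lie along the fuselage
    y = dr - dl          # assumed to lie along the wingspan
    z = np.cross(x, y)   # completes a right-handed frame
    A = np.column_stack([x / np.linalg.norm(x),
                         y / np.linalg.norm(y),
                         z / np.linalg.norm(z)])
    # Nearest orthogonal matrix in the Frobenius sense (absorbs small noise).
    U, _, Vt = np.linalg.svd(A)
    R = U @ Vt
    if np.linalg.det(R) < 0.0:
        U[:, -1] *= -1.0
        R = U @ Vt
    return R
```

For exact unit directions the sum and difference are already orthogonal, so the SVD step leaves the matrix unchanged; it only matters when the inputs are noisy. The sketch does not handle the degenerate case in which the two directions coincide.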

The point of intersection of

and

indicates the translation vector of the body frame with respect to the world frame. Based on the span representation of

and

, overdetermined equations are established to compute the world coordinates of the point of intersection as follows:

in which

is the point of intersection (a homogeneous 4-vector) of

and

, and

is a 4 × 4 matrix. By performing a factorization of matrix

via the SVD, the singular vector corresponding to the smallest singular value of

is the solution of the overdetermined equations

. In addition, based on the epipolar constraint, an optimal estimator for the point of intersection on the image plane can be used to reduce the geometric errors before the matrix factorization [

66].
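The SVD solution for the point of intersection can be sketched as follows, assuming each wing leading edge line is available as a 2 × 4 span matrix whose rows are planes; the function name `intersect_lines` and the tolerance `eps` are hypothetical. Stacking the two span matrices gives the 4 × 4 system, and the right singular vector of the smallest singular value is its least-squares null vector.

```python
import numpy as np

def intersect_lines(W_right, W_left, eps=1e-12):
    """Least-squares intersection of two 3D lines given as 2x4 span matrices.

    Each row of W_right and W_left is a plane (homogeneous 4-vector) that
    contains the corresponding line. Returns the inhomogeneous 3D point.
    """
    M = np.vstack([W_right, W_left])   # 4x4 system M @ X = 0
    _, _, Vt = np.linalg.svd(M)
    X = Vt[-1]                         # singular vector of smallest value
    if abs(X[3]) < eps:
        raise ValueError("solution is at infinity; lines are parallel")
    return X[:3] / X[3]
```

With noisy measurements the two lines are generally skew, and the returned point is the one that minimizes the algebraic residual of the four plane equations, subject to the homogeneous solution having unit norm.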

As the normalized direction vectors of the two wing leading edge lines determine the rotation matrix and the point of intersection of the two lines identifies the translation vector, the transformation from the body frame to the world frame, which solves the aircraft pose, is acquired. Based on the line correspondences, the planes intersection method obtains the pose information of a model-unknown aircraft. Moreover, our pose estimation method can be easily extended to multi-camera systems.

3.3. Algorithm Pipeline

The pipeline of our pose estimation method is summarized in this section, as shown in Algorithm 1.

| Algorithm 1. | Pose estimation based on geometry structure features and line correspondences. |
| --- | --- |
| Input: | A pair of images captured at the same time, the parameter matrices of the two cameras, and the initial pose constraint. |
| Output: | The pose information of the aircraft. |
| Step 1 | Detect line features in the images using the LSD algorithm; |
| Step 2 | Estimate the location of the aircraft’s center in the images and eliminate irrelevant line features based on the spatial and length consistency constraints; |
| Step 3 | Determine the orientation of the fuselage reference line using parallel line clustering; |
| Step 4 | Extract the wing leading edge lines in the image pair based on generic geometry structure features of aircraft and vector analysis; |
| Step 5 | Acquire the pose information of the aircraft by using the planes intersection method. |