A Robust Real-Time Detecting and Tracking Framework for Multiple Kinds of Unmarked Object

Abstract

1. Introduction

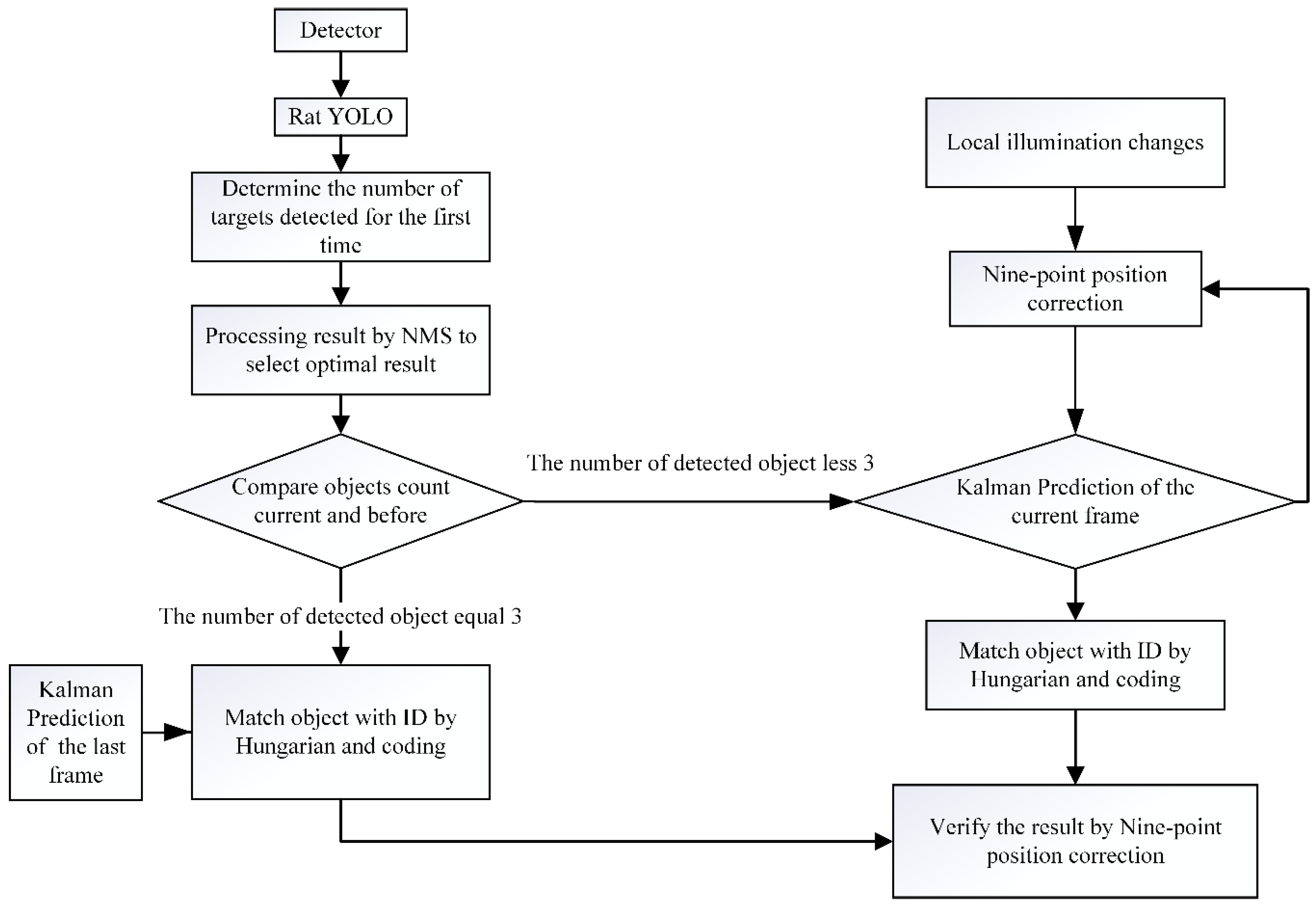

- The real-time rat detector-tracker combines rat-YOLO, the Kalman filter, the Hungarian algorithm, and nine-point fine position correction to identify, predict, and track rats in a fixed scene. It also supports offline object tracking.

- Nine-point fine position correction is proposed in this study to correct the target position. Because the target position predicted by the Kalman filter is not necessarily accurate, the correction algorithm is used to verify it.

- Automatic labeling software for rat images is proposed. The software is limited to generating rat labels in a simple scene, and the labeled dataset can be used for YOLO model training.

- A multithreading local removal highlighting algorithm is proposed in this paper. It removes highlights only within a fixed region, which saves time.
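As a rough illustration of how Kalman prediction can fill frames in which the detector misses the target, the sketch below runs a constant-velocity filter on each image axis for a single target. The class `AxisKalman`, the function `track`, and all noise values are assumptions made for this example, not the paper's model; the Hungarian association and the nine-point correction are not reproduced here.

```python
class AxisKalman:
    """Constant-velocity Kalman filter for one image axis (hypothetical helper)."""

    def __init__(self, position, process_var=1e-2, meas_var=1.0):
        self.p, self.v = float(position), 0.0            # position, velocity
        self.c_pp, self.c_pv, self.c_vv = 10.0, 0.0, 10.0  # covariance entries
        self.q, self.r = process_var, meas_var           # assumed noise levels

    def predict(self, dt=1.0):
        self.p += self.v * dt
        # P = F P F^T + Q with F = [[1, dt], [0, 1]] and diagonal Q
        self.c_pp += dt * (2.0 * self.c_pv + dt * self.c_vv) + self.q
        self.c_pv += dt * self.c_vv
        self.c_vv += self.q
        return self.p

    def update(self, measured):
        s = self.c_pp + self.r                 # innovation variance
        k_p, k_v = self.c_pp / s, self.c_pv / s  # Kalman gain
        residual = measured - self.p
        self.p += k_p * residual
        self.v += k_v * residual
        # P = (I - K H) P with H = [1, 0]
        c_pp, c_pv = self.c_pp, self.c_pv
        self.c_pp = (1.0 - k_p) * c_pp
        self.c_pv = (1.0 - k_p) * c_pv
        self.c_vv -= k_v * c_pv


def track(detections):
    """detections: one (x, y) per frame, or None when the detector missed.

    Returns a trajectory in which missed frames are filled by the prediction.
    """
    first = next(d for d in detections if d is not None)
    kx, ky = AxisKalman(first[0]), AxisKalman(first[1])
    trajectory = []
    for det in detections:
        px, py = kx.predict(), ky.predict()
        if det is None:
            trajectory.append((px, py))        # no measurement: keep prediction
        else:
            kx.update(det[0])
            ky.update(det[1])
            trajectory.append((kx.p, ky.p))
    return trajectory
```

For a target moving at constant speed, a frame passed as `None` is filled with a position close to the one the motion implies, since the filter has already estimated the velocity from earlier frames.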

2. Materials

2.1. Animals Selection

2.2. Hardware Platform

3. Methods

3.1. Rat YOLO Detector and Tracking

- (1) Calculate the area of every bounding box and sort the boxes by score.

- (2) Calculate the intersection over union (IOU) of the boxes, as given in Equation (1).

- (3) If the IOU exceeds the threshold, delete the bounding box with the lower score.
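The three steps above can be sketched in Python as follows. This is a minimal illustration that assumes boxes given as (x1, y1, x2, y2) corners, the standard definition of IOU (intersection area divided by union area), and a threshold of 0.5; the function names and the threshold value are assumptions rather than values taken from the paper.

```python
def area(box):
    """Area of a box given as (x1, y1, x2, y2) with x1 < x2 and y1 < y2."""
    return (box[2] - box[0]) * (box[3] - box[1])


def iou(a, b):
    """Intersection over union of two boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = area(a) + area(b) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, threshold=0.5):
    """Return the indices of the boxes that survive suppression."""
    # Step (1): visit boxes from the highest score to the lowest.
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Steps (2) and (3): drop a box if it overlaps a kept,
        # higher-scoring box by more than the threshold.
        if all(iou(boxes[i], boxes[j]) <= threshold for j in keep):
            keep.append(i)
    return keep
```

With boxes `(0, 0, 10, 10)` and `(1, 1, 11, 11)`, the IOU is 81/119, so the lower-scoring of the two is suppressed at a threshold of 0.5, while a distant box is kept.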

3.2. Nine-Point Position Correction Algorithm

3.3. Rat Kalman-Filter-Model

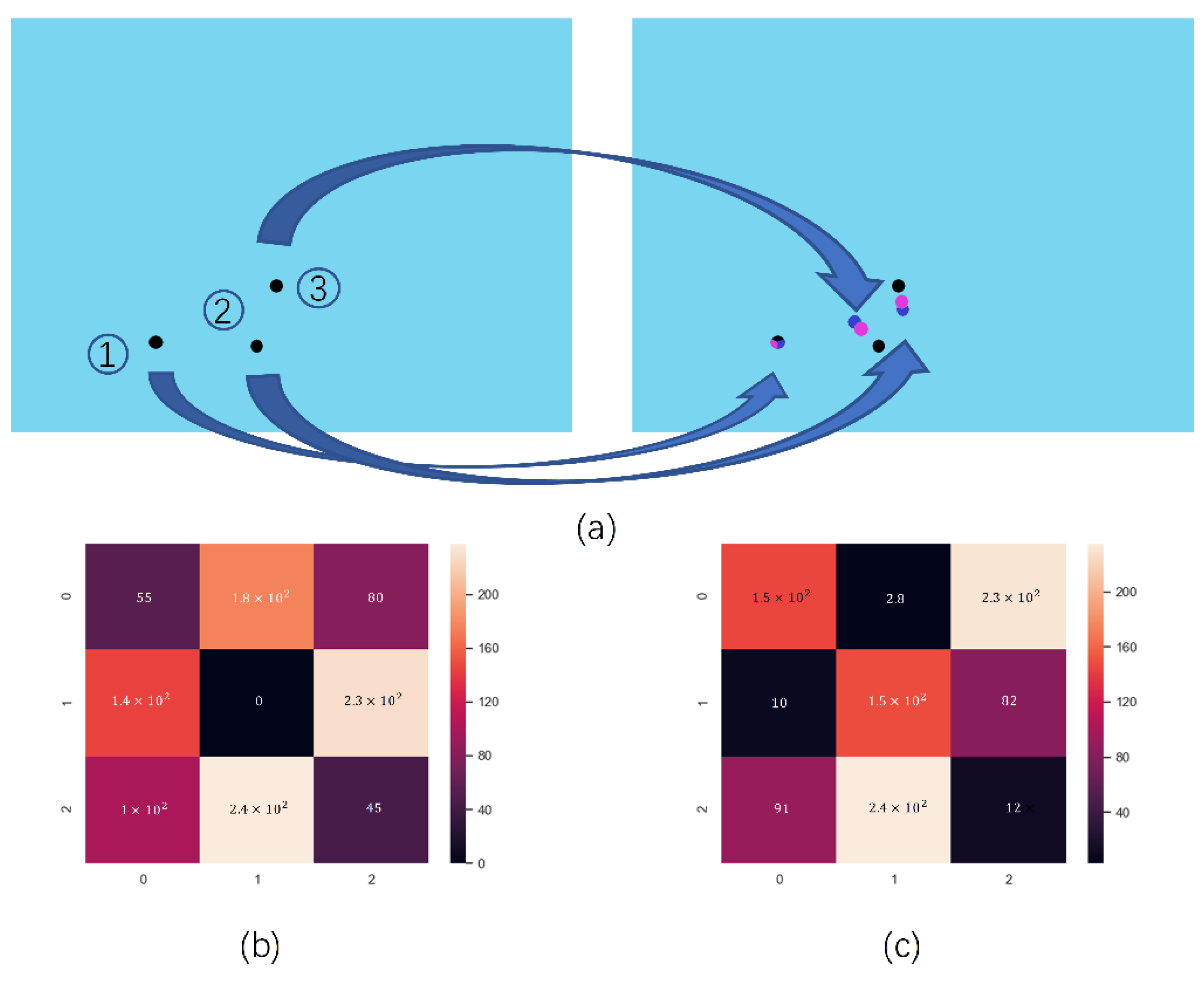

3.4. Improved Hungarian Filter Model

3.5. The Multithreading Local Removal Highlighting Algorithm

| Algorithm 1 The Multithreading Local Removal Highlighting Algorithm |

| begin: |

| 1. read original image, mask image, and channel = 0; |

| for (channel++ < 3): |

| 2. transform the gradient field of the logarithm of the image; |

| 3. solve to reconstruct the logarithm of the image; |

| end |

| end |
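The structure of Algorithm 1 (a fixed region, a mask, and one pass per color channel in the log domain) can be sketched as below. The gradient transform and the solver here are stand-ins chosen for illustration: the gradient is set to zero inside the mask, and the resulting Laplace equation is solved by Jacobi iteration. The function names, the `[channel][row][col]` image layout, and the iteration count are all assumptions, not the paper's implementation.

```python
import math
from concurrent.futures import ThreadPoolExecutor


def fill_channel(channel, mask, iterations=200):
    """Smoothly fill masked pixels of one channel in the log domain.

    channel: 2-D list of intensities; mask: 2-D list of bools (True = highlight).
    """
    log_img = [[math.log1p(v) for v in row] for row in channel]
    h, w = len(log_img), len(log_img[0])
    masked = [(y, x) for y in range(h) for x in range(w) if mask[y][x]]
    for _ in range(iterations):
        new_values = {}
        for y, x in masked:
            # Jacobi step: average of the available 4-neighbours.
            near = [log_img[ny][nx]
                    for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
                    if 0 <= ny < h and 0 <= nx < w]
            new_values[(y, x)] = sum(near) / len(near)
        for (y, x), value in new_values.items():
            log_img[y][x] = value
    return [[math.expm1(v) for v in row] for row in log_img]


def remove_highlights(image, mask, region):
    """image: [channel][row][col]; region: (y0, y1, x0, x1) fixed area to process."""
    y0, y1, x0, x1 = region
    sub_mask = [row[x0:x1] for row in mask[y0:y1]]

    def work(channel):
        patch = [row[x0:x1] for row in channel[y0:y1]]
        return fill_channel(patch, sub_mask)

    # One worker per color channel; only the fixed region is processed.
    with ThreadPoolExecutor(max_workers=3) as pool:
        patches = list(pool.map(work, image))

    result = [[row[:] for row in channel] for channel in image]
    for channel, patch in zip(result, patches):
        for dy, patch_row in enumerate(patch):
            channel[y0 + dy][x0:x1] = patch_row
    return result
```

In pure Python the threads mainly show the per-channel structure; a real speed-up would need per-channel work that releases the interpreter lock, for example inside a native image library.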

3.6. Automatic Generating Labeled Dataset

| Algorithm 2 The Flow of Generating Labeled Dataset |

| begin: |

| 1. Collect a 500-frame video under a fixed scene; |

| while (frame.num++ <= frame.total_num): |

|

| end |

| 11. open the “.xml” files with LabelImg and manually correct the wrong labels; |

| end |
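Since the final step reviews “.xml” files in LabelImg, the sketch below shows one way a label file could be written in the Pascal VOC layout, which is LabelImg's default XML format. The function name `voc_annotation`, the class name "rat", and the example box are hypothetical; how the boxes are obtained from each frame is outside this sketch.

```python
import xml.etree.ElementTree as ET


def voc_annotation(filename, width, height, boxes, label="rat"):
    """Build a Pascal VOC style annotation string for one frame.

    boxes: iterable of (xmin, ymin, xmax, ymax) in pixels.
    """
    root = ET.Element("annotation")
    ET.SubElement(root, "filename").text = filename
    size = ET.SubElement(root, "size")
    for tag, value in (("width", width), ("height", height), ("depth", 3)):
        ET.SubElement(size, tag).text = str(value)
    for xmin, ymin, xmax, ymax in boxes:
        obj = ET.SubElement(root, "object")
        ET.SubElement(obj, "name").text = label
        ET.SubElement(obj, "difficult").text = "0"
        bndbox = ET.SubElement(obj, "bndbox")
        for tag, value in (("xmin", xmin), ("ymin", ymin),
                           ("xmax", xmax), ("ymax", ymax)):
            ET.SubElement(bndbox, tag).text = str(int(value))
    return ET.tostring(root, encoding="unicode")


# Example with a made-up frame name and box.
xml_text = voc_annotation("frame_0001.jpg", 640, 480, [(120, 80, 260, 210)])
```

Writing `xml_text` to a file next to the frame gives one annotation per image, which can then be checked and corrected by hand.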

4. Results and Discussions

4.1. The Results of Automatically Generating a Labeled Dataset

4.2. The Result of Missing Objects Filled by the Kalman Filter and Hungarian Filter Model

4.3. The Accuracy of Our Framework in Rat Tracking and Detecting

4.4. Generate Exploration and Trajectory of the Rat

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest

Appendix A


| | No Rats Detected | One Rat Detected | Two Rats Detected | Three Rats Detected | More than Three Rats Detected | Total Error Frames after Correction | Detected Rats after Our Framework |
|---|---|---|---|---|---|---|---|
| Frames | 25 | 88 | 658 | 2098 | 23 | 139 | 2753 |
| Percentage | 0.864% | 3.043% | 22.752% | 72.545% | 0.795% | 4.806% | 95.194% |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lv, X.; Dai, C.; Chen, L.; Lang, Y.; Tang, R.; Huang, Q.; He, J. A Robust Real-Time Detecting and Tracking Framework for Multiple Kinds of Unmarked Object. Sensors 2020, 20, 2. https://doi.org/10.3390/s20010002