A Framework for Multiple Ground Target Finding and Inspection Using a Multirotor UAS

Abstract

1. Introduction

2. Related Work

3. Framework

3.1. Image Capture Module

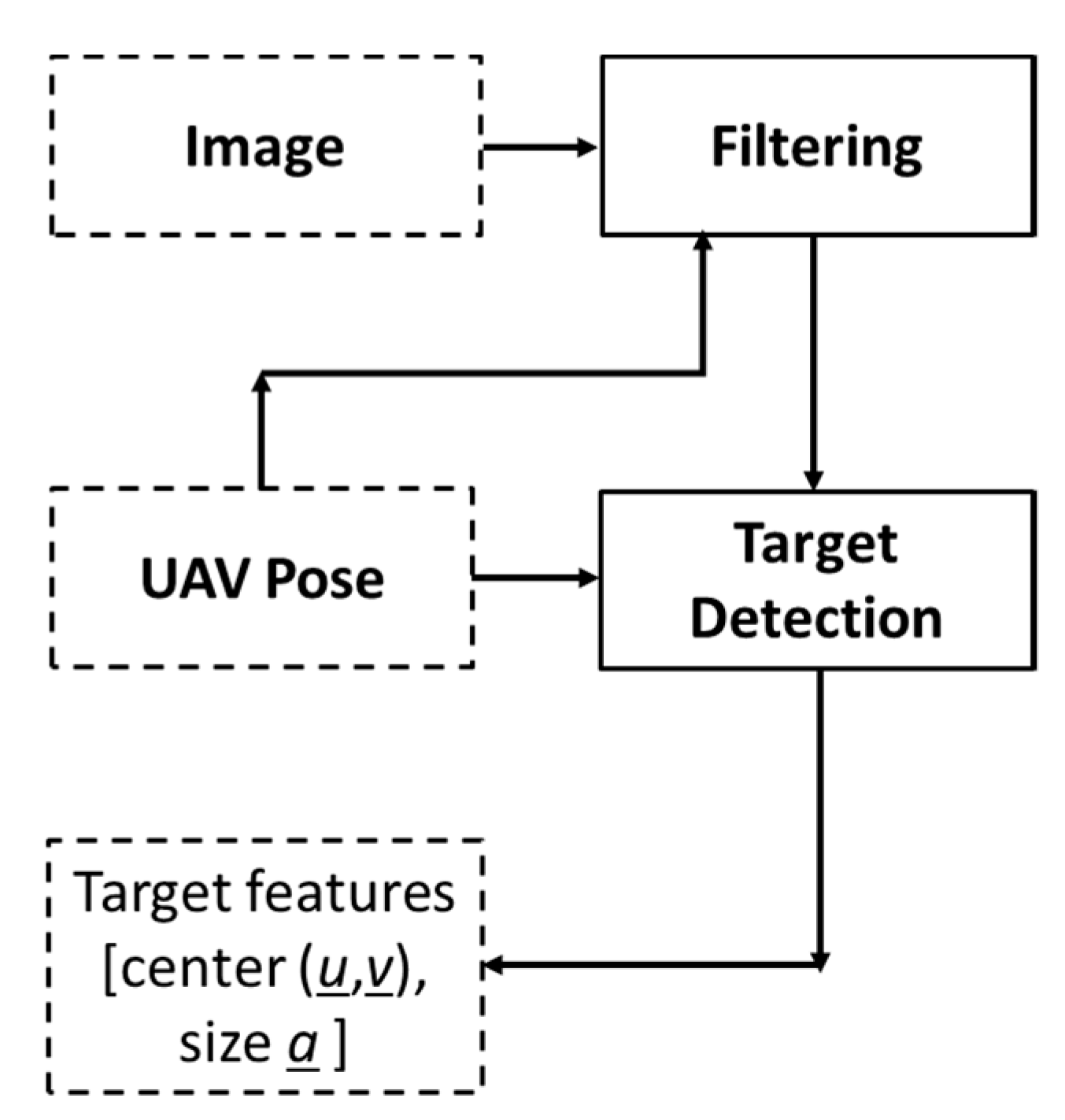

3.2. Target Detection Module

3.3. Mapping Module

3.3.1. Estimate Target Position

3.3.2. Track Targets

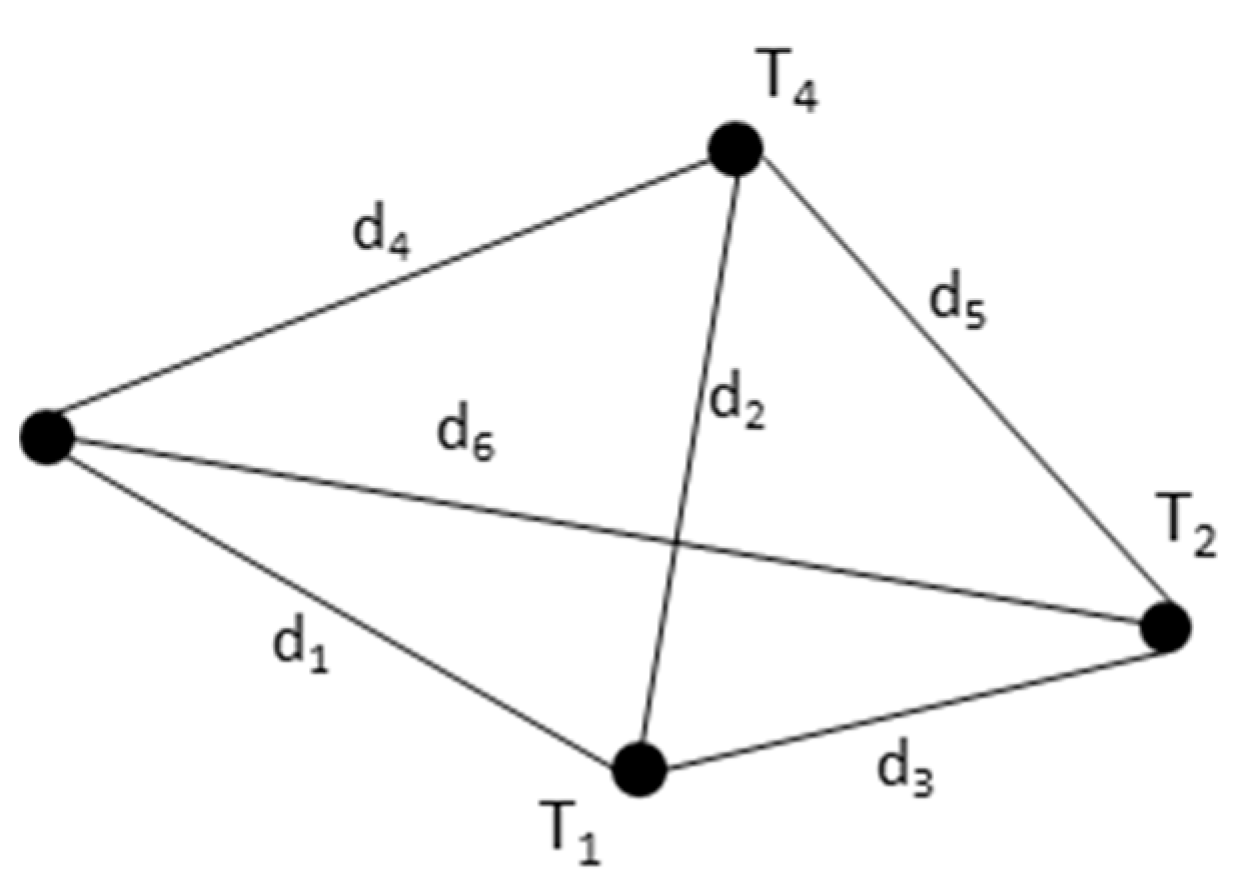

3.3.3. Build Internal Map of Adjacent Targets

3.3.4. Remove Duplicates

3.3.5. Update Position

3.3.6. Remove False Targets

3.4. External Sensors Module

3.5. Main Module

3.6. Autopilot Driver Module

4. Experiments

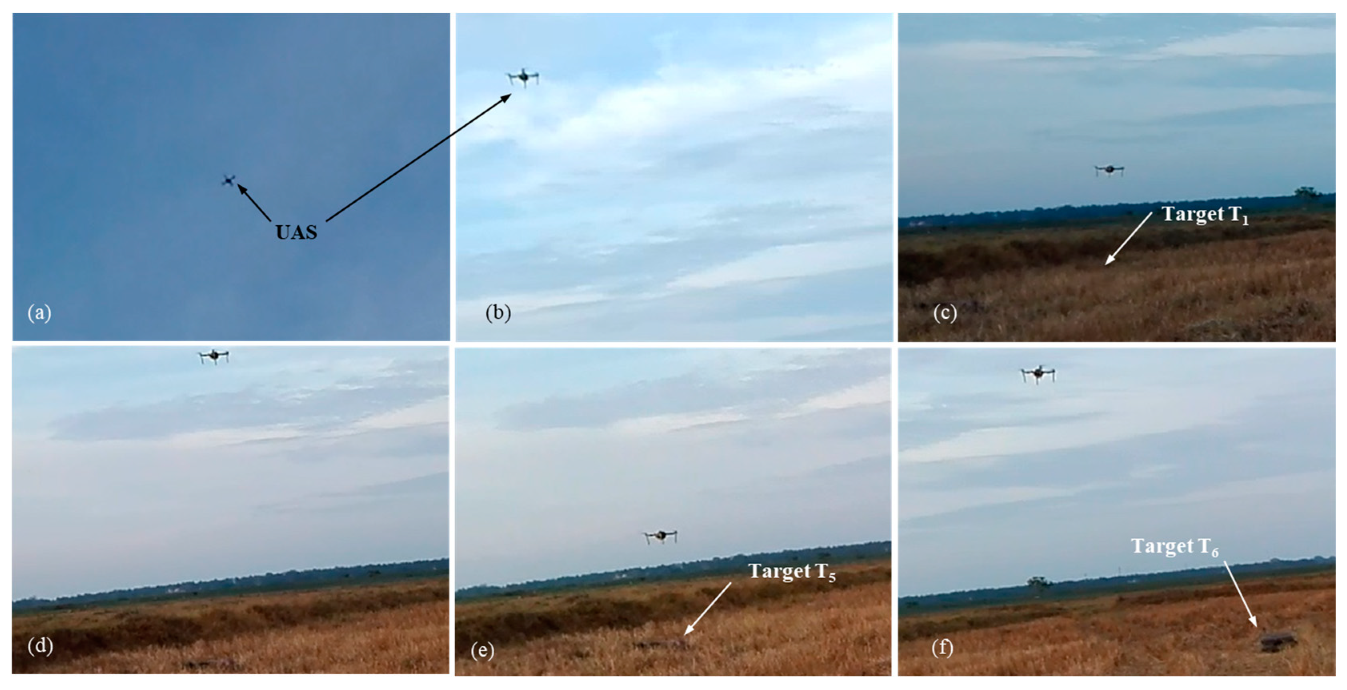

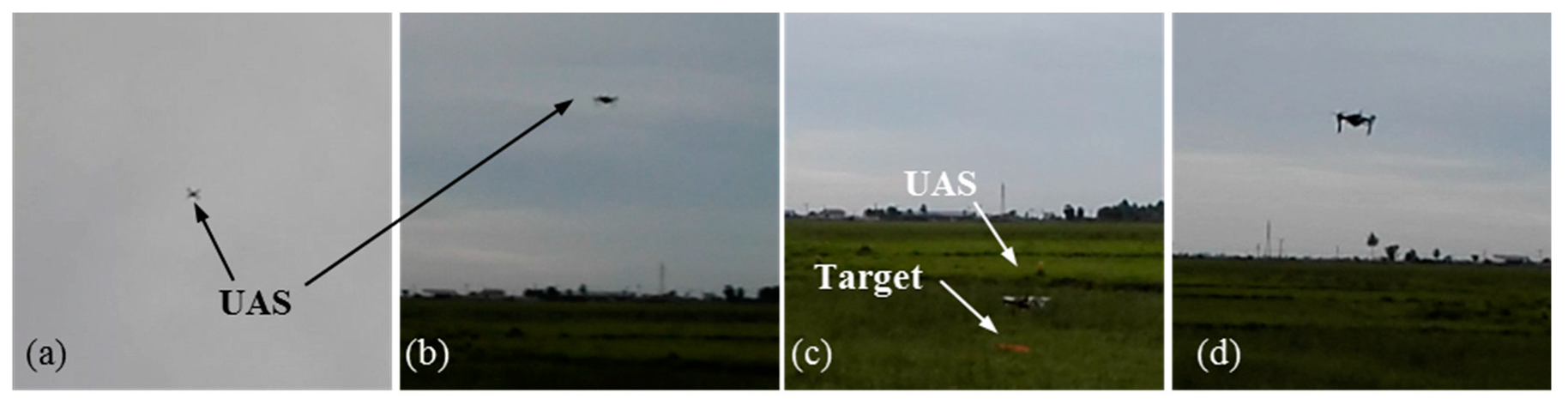

4.1. Hardware System

4.2. Field Experiments

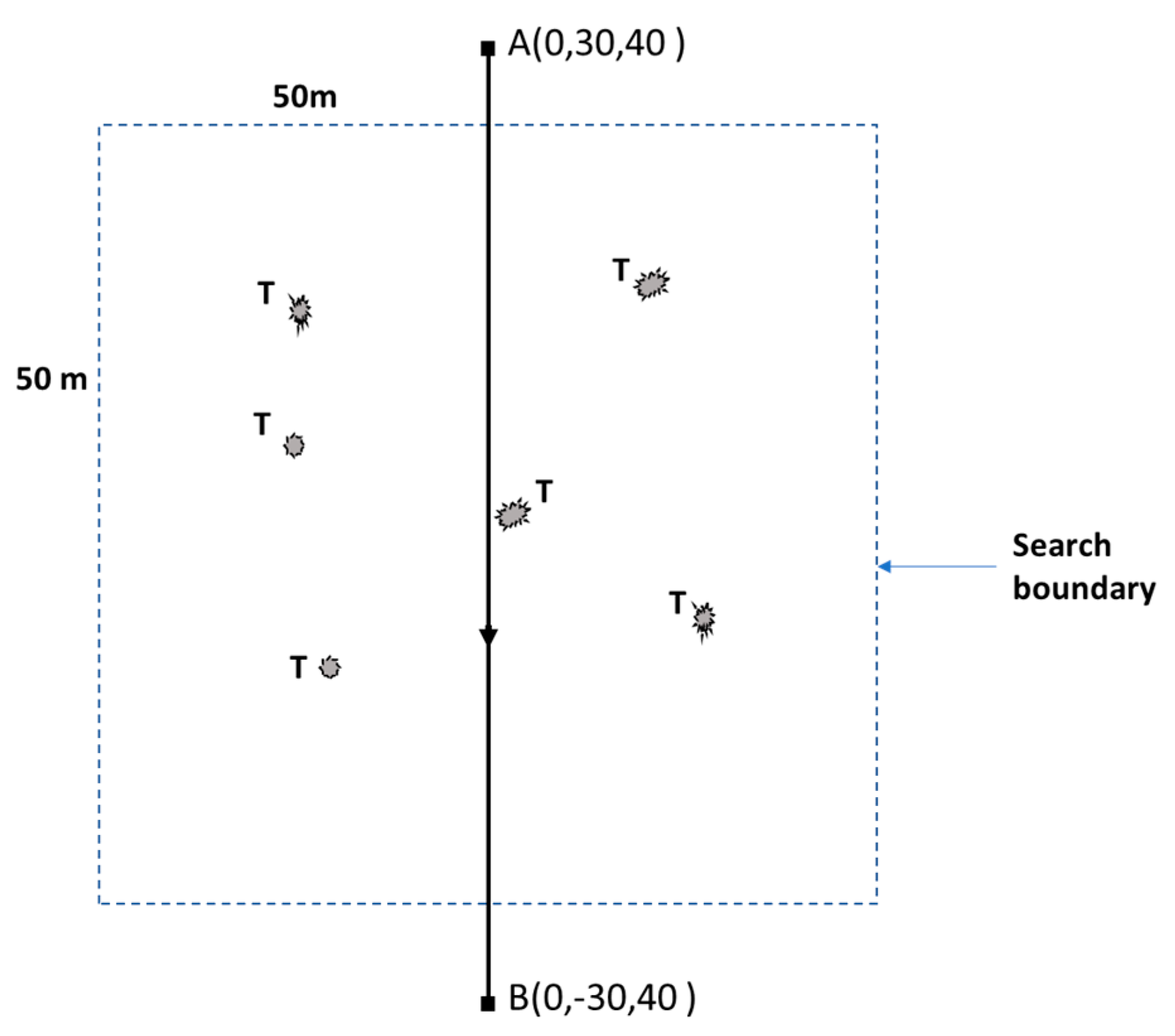

4.2.1. Test Case 1: Finding and Inspection of Objects Scattered on the Ground in Search and Rescue after a Plane Crash

4.2.2. Test Case 2: Finding and Inspection of Multiple Red Color Ground Objects

5. Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Parameter | Value |
|---|---|
| Search height (hs) | 40 m |
| Threshold rotation rate (α) | 0.8 deg/s |
| Gating distance (d) | 2 m |
| Camera frame rate | 10 Hz |
| Camera resolution | 640 × 480 |
| Proportional gain (K) | 2 |
| Votes for a detection () | 1 |
| Votes for a non-detection () | −1 |
| Removal cut-off (G) | −2 |
| Valid target cut-off (H) | 5 |
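
The vote and cut-off values in the parameter table above suggest a simple tallying scheme for removing false targets. The following is a minimal sketch of how such a scheme could work, not the authors' implementation. The names `TrackedTarget`, `update_votes`, and `is_valid` are hypothetical, and two behaviours are assumptions: that a candidate is dropped once its tally falls to G or below, and that it counts as valid once its tally reaches H.

```python
from dataclasses import dataclass

# Values taken from the parameter table.
VOTE_DETECTION = 1
VOTE_NON_DETECTION = -1
REMOVAL_CUTOFF_G = -2
VALID_CUTOFF_H = 5


@dataclass
class TrackedTarget:
    """Hypothetical record for one candidate target in the internal map."""
    target_id: int
    votes: int = 0


def update_votes(targets, detected_ids):
    """Apply one frame of votes and return the candidates that survive.

    Assumption: a candidate is removed once its tally is <= G.
    """
    survivors = []
    for target in targets:
        if target.target_id in detected_ids:
            target.votes += VOTE_DETECTION
        else:
            target.votes += VOTE_NON_DETECTION
        if target.votes > REMOVAL_CUTOFF_G:
            survivors.append(target)
    return survivors


def is_valid(target):
    """Assumption: a candidate is a valid target once its tally reaches H."""
    return target.votes >= VALID_CUTOFF_H


if __name__ == "__main__":
    # Target 1 is detected in every frame; target 2 is never detected.
    candidates = [TrackedTarget(1), TrackedTarget(2)]
    for _ in range(5):
        candidates = update_votes(candidates, detected_ids={1})
    for target in candidates:
        print(target.target_id, target.votes, is_valid(target))
```

Under these assumptions, a candidate that is never re-detected is discarded after two frames, while a consistently detected one needs five frames to be confirmed. A real system would presumably cast a non-detection vote only when the candidate's estimated position lies inside the camera's field of view; that check is omitted here.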

| Test No. | Number of Targets | Number of Targets Visited | Number of Targets Not Inspected (Action Failure) |
|---|---|---|---|
| 1 | 6 | 6 | 1 |
| 2 | 6 | 5 | 0 |
| 3 | 6 | 5 | 0 |
| 4 | 6 | 6 | 1 |
| 5 | 6 | 6 | 1 |
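
As a quick arithmetic check on the results table, the per-test counts can be totalled across the five tests. The short sketch below only sums the columns as printed. The variable names are illustrative, and it makes no assumption about how a visit relates to an action failure.

```python
# Rows copied from the results table:
# (test no., targets, targets visited, targets not inspected).
RESULTS = [
    (1, 6, 6, 1),
    (2, 6, 5, 0),
    (3, 6, 5, 0),
    (4, 6, 6, 1),
    (5, 6, 6, 1),
]

total_targets = sum(row[1] for row in RESULTS)
total_visited = sum(row[2] for row in RESULTS)
total_action_failures = sum(row[3] for row in RESULTS)
visit_rate = total_visited / total_targets

print(
    f"visited {total_visited}/{total_targets} ({visit_rate:.1%}), "
    f"action failures: {total_action_failures}"
)
```

Across the five tests this gives 28 of 30 targets visited and 3 action failures.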

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hinas, A.; Ragel, R.; Roberts, J.; Gonzalez, F. A Framework for Multiple Ground Target Finding and Inspection Using a Multirotor UAS. Sensors 2020, 20, 272. https://doi.org/10.3390/s20010272
