Impact of Think-Aloud on Eye-Tracking: A Comparison of Concurrent and Retrospective Think-Aloud for Research on Decision-Making in the Game Environment

Abstract

1. Introduction

2. Materials and Methods

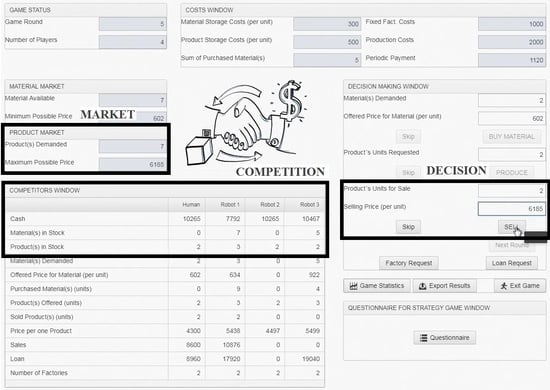

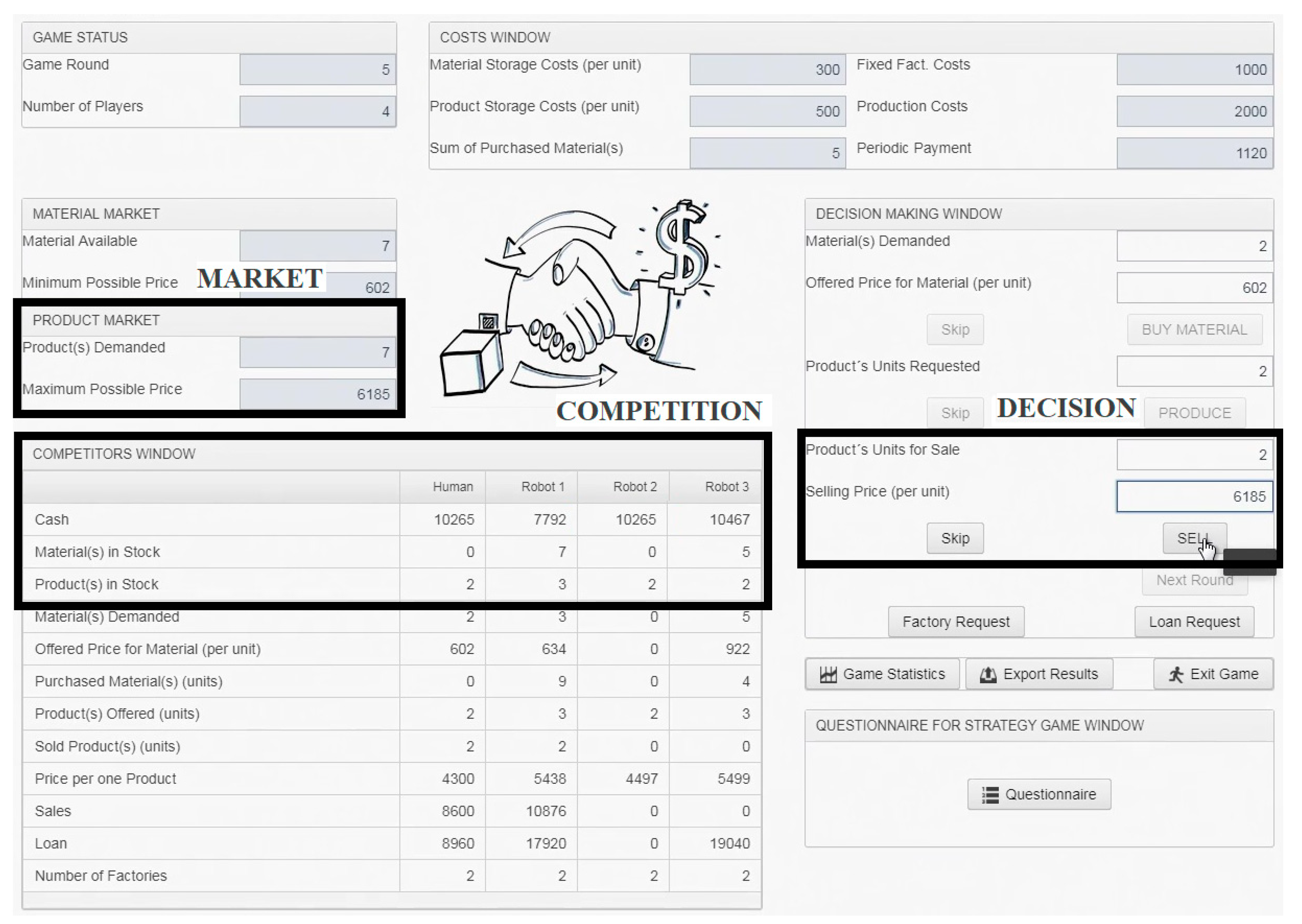

2.1. Simulation Game

2.2. Research Group

2.3. Course of Experiment

2.4. Data Processing

2.4.1. Comparison of Game Results Achieved by RTA and CTA Players

- (1) The amount of “net cash”, the maximization of which is the main goal of the game. This indicator cannot be calculated for games that ended in bankruptcy; therefore, it covers only completed games.

- (2) The number of games that ended in bankruptcy, which is compared as a separate indicator.

- (3) The overall standing of the player at the end of the game. Beating the three competitors is the second goal of the game. However, players with different strategies can reach a different net cash that is nonetheless the highest in their game [73,78]. Therefore, the overall standing, as an indicator, is less dependent on stochastic game conditions.
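
The three indicators above can be derived from per-game records. The following is a minimal sketch, assuming a hypothetical `GameRecord` structure in which a bankrupt game has no net cash value; the field names and sample data are illustrative and not taken from the study.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class GameRecord:
    """Hypothetical record of one played game (not the study's data format)."""
    group: str                 # "CTA" or "RTA"
    net_cash: Optional[float]  # None when the game ended in bankruptcy
    standing: int              # final rank among the four players (1 = best)


def summarize(games, group):
    """Compute the three game-result indicators for one group."""
    selected = [g for g in games if g.group == group]
    # Indicator (1): net cash, restricted to completed (non-bankrupt) games.
    finished = [g.net_cash for g in selected if g.net_cash is not None]
    return {
        "mean_net_cash_finished": mean(finished) if finished else None,
        # Indicator (2): number of games that ended in bankruptcy.
        "bankruptcies": sum(g.net_cash is None for g in selected),
        # Indicator (3): overall standing, available for every game.
        "mean_standing": mean(g.standing for g in selected),
    }


# Illustrative data only.
sample = [
    GameRecord("CTA", 100.0, 1),
    GameRecord("CTA", None, 4),
    GameRecord("CTA", 300.0, 2),
    GameRecord("RTA", 250.0, 1),
]
cta_summary = summarize(sample, "CTA")
```

Keeping bankruptcy as a missing value rather than as zero net cash mirrors the reasoning in the list: the first indicator is simply undefined for those games.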

2.4.2. Comparison of the Impact of CTA and RTA on Eye-Tracking

- Number of fixations. Eye-tracking makes it possible to measure both the number of fixations and the number of saccades, and both counts can be interpreted identically: they show the number of places viewed by the observer [75]. Nevertheless, the number of fixations was preferred in this study, as fixations relate to the cognitive processing of information [24], whereas information is not acquired during saccades [29]. In the game, the players look for cues for the right decision. The number of fixations may indicate how difficult the task is for them because participants tend to show a higher number of fixations during more difficult decisions [79].

- Fixation duration. This metric can be interpreted in two ways. The first presumption is that a longer duration means greater interest in an object. The second is that a longer duration is associated with more complex cognitive processing of information. Therefore, fixation duration can be understood as an index of the degree of cognitive effort needed for information processing [21,80]. The fixation duration is expressed in two ways: (1) as the Average duration of (one) fixation in milliseconds (ms) and (2) as the Total duration of (all) fixations in seconds (s).

- Dwell time ratio. The time spent within an AOI during one visit, from entry to exit (the durations of all fixations and saccades are counted), is called the dwell time [22]. The sum of all dwell times within one AOI is called the total dwell time [21]. Based on this metric, the proposed Dwell time ratio (Rdw) is defined as a ratio of several total dwell times:

  $$R_{dw} = \frac{T_{dwm} + T_{dwc} + T_{dwd}}{T_{dws}}$$

  where the numerator is the sum of three total dwell times (Tdwm, Tdwc, Tdwd), which represent the visits in Market, Competition, and Decision (Figure 1), and the denominator is the total dwell time in the whole game window (Tdws). The result indicates the share of time that the players spent watching the AOIs necessary for the decision. The purpose of this metric is to assess which players are better able to focus their gaze only on the important AOIs.
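
The metrics described above can be illustrated with a short calculation. This is a minimal sketch, assuming that fixation durations are available as a plain list in milliseconds and that the total dwell times are already aggregated per AOI; the function names and sample values are hypothetical and do not reproduce the eye-tracker software used in the study.

```python
def fixation_metrics(durations_ms):
    """Number of fixations, average duration (ms) and total duration (s)."""
    count = len(durations_ms)
    total_ms = sum(durations_ms)
    return {
        "number_of_fixations": count,
        # Average duration of one fixation, in milliseconds.
        "average_duration_ms": total_ms / count if count else 0.0,
        # Total duration of all fixations, converted to seconds.
        "total_duration_s": total_ms / 1000.0,
    }


def dwell_time_ratio(t_dwm, t_dwc, t_dwd, t_dws):
    """Share of the game-window dwell time spent in the three key AOIs.

    t_dwm, t_dwc, t_dwd: total dwell times in the three decision-relevant AOIs.
    t_dws: total dwell time in the whole game window.
    """
    if t_dws <= 0:
        raise ValueError("total dwell time in the game window must be positive")
    return (t_dwm + t_dwc + t_dwd) / t_dws


# Illustrative values only (not data from the study).
metrics = fixation_metrics([200.0, 180.0, 220.0])
ratio = dwell_time_ratio(4.0, 2.0, 2.0, 12.0)
```

With these made-up inputs, two thirds of the viewing time falls inside the three AOIs; a value closer to 1 would indicate a gaze more tightly focused on decision-relevant areas.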

2.4.3. Comparison of the Data Gathered by CTA and RTA

- (1) Valid information: the AOI is watched and its content is verbalized.

- (2) Omitted information: the AOI is watched but its content is not verbalized.

- (3) Fabricated information: the AOI is not watched but its content is verbalized.

- (4) Unidentified information: the AOI is neither watched nor verbalized. This is, in a way, also valid information. However, it is of a different type from the first combination: it provides evidence that the AOI was not included in the decision-making process. Therefore, these cases are placed in a separate category.
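
The categories above amount to a two-by-two classification of each AOI by whether it was watched and whether it was verbalized. A minimal sketch follows, assuming each observation is reduced to two booleans; the label for the "not watched but verbalized" combination follows the "Fabricated information" row of the results table, and the sample observations are hypothetical.

```python
from collections import Counter


def classify(watched: bool, verbalized: bool) -> str:
    """Map one (watched, verbalized) observation to an information category."""
    if watched and verbalized:
        return "valid"
    if watched:
        return "omitted"       # watched, but not verbalized
    if verbalized:
        return "fabricated"    # verbalized, but not watched
    return "unidentified"      # neither watched nor verbalized


# Illustrative observations only: (watched, verbalized) per AOI and round.
observations = [(True, True), (True, False), (True, True), (False, False)]
tally = Counter(classify(w, v) for w, v in observations)
```

Counting the categories per group in this way yields the kind of frequency table on which a test of two proportions can then be run.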

3. Results

3.1. Comparison of Game Results Achieved by RTA and CTA Players

3.2. Overall Comparison of the Impact of CTA and RTA on Eye-Tracking

3.3. Comparison of the Impact of CTA and RTA on Eye-Tracking over Time (in the Game Progress)

3.3.1. The Number of Fixations

3.3.2. Fixation Duration

3.3.3. Dwell Time Ratio

3.4. Comparison of the Data Gathered by CTA and RTA

4. Discussion

4.1. Overall Comparison of the Impact of CTA and RTA on Eye-Tracking

4.2. Comparison of the Impact of CTA and RTA on Eye-Tracking over Time (in the Game Progress)

- The CTA can make participants feel unnatural and confused. It may take some time for them to get used to the task, especially at the beginning of the experiment.

- Both cognitive processes become less demanding as experience is gained. Just as players repeat the task-solving process in each round, they also repeat their verbal comments on what they do. At the beginning of the game, participants must create verbal expressions, name objects and thoughts, and interpret the logical connections among them. This process is optimized during the game: comments are shortened and the verbalization becomes more natural.

- As the task-solving process becomes easier (the originally complex and cognitively demanding decision-making processes become simpler), participants can focus better on their verbalizations. The verbalizations of simpler tasks are more natural, and therefore they coincide with eye movements more frequently.

4.3. Comparison of the Data Gathered by CTA and RTA

4.4. Limitations of the Study

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Grund, C.K.; Meier, M.C. Towards game-based management decision support: Using serious games to improve the decision process. In Proceedings of the Multikonferenz Wirtschaftsinformatik, MKWI 2016, Universitatsverlag Ilmenau, Ilmenau, Germany, 9–11 March 2016; Volume 1, pp. 155–166.

- Zeike, S.; Choi, K.-E.; Lindert, L.; Pfaff, H. Managers’ Well-Being in the Digital Era: Is it Associated with Perceived Choice Overload and Pressure from Digitalization? An Exploratory Study. Int. J. Environ. Res. Public Health 2019, 16, 1746.

- Abubakar, A.M.; Elrehail, H.; Alatailat, M.A.; Elçi, A. Knowledge management, decision-making style and organizational performance. J. Innov. Knowl. 2019, 4, 104–114.

- Trevis Certo, S.; Connelly, B.L.; Tihanyi, L. Managers and their not-so rational decisions. Bus. Horiz. 2008, 51, 113–119.

- Thywissen, C.; Pidun, U.; zu Knyphausen-Aufseß, D. Process matters—The relevance of the decision making process for divestiture outcomes. Long Range Plann. 2018, 51, 267–284.

- Aitken, L.M.; Marshall, A.; Elliott, R.; McKinley, S. Comparison of ‘think aloud’ and observation as data collection methods in the study of decision making regarding sedation in intensive care patients. Int. J. Nurs. Stud. 2011, 48, 318–325.

- Franco-Watkins, A.M.; Johnson, J.G. Decision moving window: Using interactive eye tracking to examine decision processes. Behav. Res. Methods 2011, 43, 853–863.

- Robinson, J.; Sinclair, M.; Tobias, J.; Choi, E. More Dynamic Than You Think: Hidden Aspects of Decision-Making. Adm. Sci. 2017, 7, 23.

- Coovert, M.D.; Winner, J.; Bennett, W.; Howard, D.J. Serious Games are a Serious Tool for Team Research. Int. J. Serious Games 2017, 4.

- Lee, J.Y.; Donkers, J.; Jarodzka, H.; van Merriënboer, J.J.G. How prior knowledge affects problem-solving performance in a medical simulation game: Using game-logs and eye-tracking. Comput. Hum. Behav. 2019, 99, 268–277.

- Prokop, M.; Vrábelová, T.; Nováková, M.; Šímová, T. Evaluation of managerial and decision-making skills of small-scale farmers. In Agrarian Perspectives XXVIII, Business Scale in Relation to Economics, Proceedings of the 28th International Scientific Conference, Prague, Czech Republic, 18–19 September 2019; Czech University Life Sciences Prague: Prague, Czech Republic, 2019; Volume 28, pp. 218–225.

- Shute, V.J.; Wang, L. Measuring Problem Solving Skills in Portal 2. In E-Learning Systems, Environments and Approaches; Isaías, P., Spector, J.M., Ifenthaler, D., Sampson, D.G., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 11–24. ISBN 978-3-319-05824-5.

- Arnold, U.; Söbke, H.; Reichelt, M. SimCity in Infrastructure Management Education. Educ. Sci. 2019, 9, 209.

- Bekebrede, G.; van Bueren, E.; Wenzler, I. Towards a Joint Local Energy Transition Process in Urban Districts: The GO2Zero Simulation Game. Sustainability 2018, 10, 2602.

- Gogg, T.J.; Mott, J.R.A. Introduction to Simulation. In Proceedings of the 25th Conference on Winter Simulation, Los Angeles, CA, USA, 12–15 December 1993; ACM: New York, NY, USA, 1993; pp. 9–17.

- Kato, P.M.; de Klerk, S. Serious Games for Assessment: Welcome to the Jungle. J. Appl. Test. Technol. 2017, 18, 1.

- Peña Miguel, N.; Corral Lage, J.; Mata Galindez, A. Assessment of the Development of Professional Skills in University Students: Sustainability and Serious Games. Sustainability 2020, 12, 1014.

- Ritterfeld, U.; Cody, M.J.; Vorderer, P. (Eds.) Serious Games: Mechanisms and Effects; Routledge: New York, NY, USA, 2009; ISBN 978-0-415-99369-2.

- Cooke, N.J. Varieties of knowledge elicitation techniques. Int. J. Hum. Comput. Stud. 1994, 41, 801–849.

- Schulte-Mecklenbeck, M.; Johnson, J.G.; Böckenholt, U.; Goldstein, D.G.; Russo, J.E.; Sullivan, N.J.; Willemsen, M.C. Process-Tracing Methods in Decision Making: On Growing Up in the 70s. Curr. Dir. Psychol. Sci. 2017, 26, 442–450.

- Berger, T. Using eye-tracking to for analyzing case study materials. Int. J. Manag. Educ. 2019, 17, 304–315.

- Holmqvist, K.; Nystrom, M.; Andersson, R.; Dewhurst, R.; Jarodzka, H.; van de Weijer, J. Eye Tracking: A Comprehensive Guide to Methods and Measures; First Published in Paperback; Oxford University Press: Oxford, UK, 2015; ISBN 978-0-19-873859-6.

- Gegenfurtner, A.; Lehtinen, E.; Säljö, R. Expertise Differences in the Comprehension of Visualizations: A Meta-Analysis of Eye-Tracking Research in Professional Domains. Educ. Psychol. Rev. 2011, 23, 523–552.

- Just, M.A.; Carpenter, P.A. A theory of reading: From eye fixations to comprehension. Psychol. Rev. 1980, 87, 329–354.

- Russo, J.E. Eye fixations as a process trace. In A Handbook of Process Tracing Methods for Decision Research: A Critical Review and User’s Guide; Society for Judgment and Decision Making Series; Psychology Press: New York, NY, USA, 2011; pp. 43–64. ISBN 978-1-84872-864-6.

- Zhan, Z.; Zhang, L.; Mei, H.; Fong, P. Online Learners’ Reading Ability Detection Based on Eye-Tracking Sensors. Sensors 2016, 16, 1457.

- Yamauchi, T.; Seo, J.; Sungkajun, A. Interactive Plants: Multisensory Visual-Tactile Interaction Enhances Emotional Experience. Mathematics 2018, 6, 225.

- Brunyé, T.T.; Gardony, A.L. Eye tracking measures of uncertainty during perceptual decision making. Int. J. Psychophysiol. 2017, 120, 60–68.

- Rayner, K. Eye movements in reading and information processing: 20 years of research. Psychol. Bull. 1998, 124, 372–422.

- Rayner, K. The 35th Sir Frederick Bartlett Lecture: Eye movements and attention in reading, scene perception, and visual search. Q. J. Exp. Psychol. 2009, 62, 1457–1506.

- Balling, L.W. Does good writing mean good reading? An eye-tracking investigation of the effect of writing advice on reading. Fachsprache 2013, 35, 2–23.

- Anson, C.M.; Schwegler, R.A. Tracking the Mind’s Eye: A New Technology for Researching Twenty-First-Century Writing and Reading Processes. Coll. Compos. Commun. 2012, 64, 151.

- Hacker, D.J.; Keener, M.C.; Kircher, J.C. TRAKTEXT: Investigating writing processes using eye-tracking technology. Methodol. Innov. 2017, 10, 205979911668957.

- Berni, A.; Maccioni, L.; Borgianni, Y. Observing Pictures and Videos of Creative Products: An Eye Tracking Study. Appl. Sci. 2020, 10, 1480.

- Lisińska-Kuśnierz, M.; Krupa, M. Suitability of Eye Tracking in Assessing the Visual Perception of Architecture—A Case Study Concerning Selected Projects Located in Cologne. Buildings 2020, 10, 20.

- Popelka, S.; Vondrakova, A.; Hujnakova, P. Eye-tracking Evaluation of Weather Web Maps. ISPRS Int. J. Geo-Inf. 2019, 8, 256.

- Hercegfi, K.; Komlodi, A.; Koles, M.; Tovolgyi, S. Eye-Tracking-based Wizard-of-Oz Usability Evaluation of an Emotional Display Agent Integrated to a Virtual Environment. ACTA Polytech. Hung. 2019, 16, 145–162.

- Burian, J.; Popelka, S.; Beitlova, M. Evaluation of the Cartographical Quality of Urban Plans by Eye-Tracking. ISPRS Int. J. Geo-Inf. 2018, 7, 192.

- Jarodzka, H.; Holmqvist, K.; Gruber, H. Eye tracking in Educational Science: Theoretical frameworks and research agendas. J. Eye Mov. Res. 2017, 10.

- Muir, M.; Conati, C. An Analysis of Attention to Student—Adaptive Hints in an Educational Game. In Intelligent Tutoring Systems; Cerri, S.A., Clancey, W.J., Papadourakis, G., Panourgia, K., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2012; Volume 7315, pp. 112–122. ISBN 978-3-642-30949-6.

- Kővári, A.; Katona, J.; Pop, C.A. Evaluation of Eye-Movement Metrics in a Software Debbuging Task using GP3 Eye Tracker. ACTA Polytech. Hung. 2020, 17, 57–76.

- Sharma, K.; Leftheriotis, I.; Giannakos, M. Utilizing Interactive Surfaces to Enhance Learning, Collaboration and Engagement: Insights from Learners’ Gaze and Speech. Sensors 2020, 20, 1964.

- Liu, X.; Zhang, X.; Chen, W.-W.; Yuan, S.-M. Eye Movement Analysis of Digital Learning Content for Educational Innovation. Sustainability 2020, 12, 2518.

- Sáiz Manzanares, M.C.; Rodríguez Diez, J.J.; Marticorena Sánchez, R.; Zaparaín Yáñez, M.J.; Cerezo Menéndez, R. Lifelong Learning from Sustainable Education: An Analysis with Eye Tracking and Data Mining Techniques. Sustainability 2020, 12, 1970.

- Lai, M.-L.; Tsai, M.-J.; Yang, F.-Y.; Hsu, C.-Y.; Liu, T.-C.; Lee, S.W.-Y.; Lee, M.-H.; Chiou, G.-L.; Liang, J.-C.; Tsai, C.-C. A review of using eye-tracking technology in exploring learning from 2000 to 2012. Educ. Res. Rev. 2013, 10, 90–115.

- Gidlof, K.; Wallin, A.; Dewhurst, R.; Holmqvist, K. Using Eye Tracking to Trace a Cognitive Process: Gaze Behaviour During Decision Making in a Natural Environment. J. Eye Mov. Res. 2013, 6, 1–14.

- Zuschke, N. An analysis of process-tracing research on consumer decision-making. J. Bus. Res. 2019, 305–320.

- Orquin, J.L.; Mueller Loose, S. Attention and choice: A review on eye movements in decision making. Acta Psychol. (Amst.) 2013, 144, 190–206.

- Barrafrem, K.; Hausfeld, J. Tracing risky decisions for oneself and others: The role of intuition and deliberation. J. Econ. Psychol. 2019, 73, 89–110.

- Al-Moteri, M.O.; Symmons, M.; Plummer, V.; Cooper, S. Eye tracking to investigate cue processing in medical decision-making: A scoping review. Comput. Hum. Behav. 2017, 66, 52–66.

- Hyönä, J. The use of eye movements in the study of multimedia learning. Learn. Instr. 2010, 20, 172–176.

- Jiang, T.; Potters, J.; Funaki, Y. Eye-tracking Social Preferences: Eye-tracking Social Preferences. J. Behav. Decis. Mak. 2016, 29, 157–168.

- Van Gog, T.; Paas, F.; van Merriënboer, J.J.G.; Witte, P. Uncovering the Problem-Solving Process: Cued Retrospective Reporting Versus Concurrent and Retrospective Reporting. J. Exp. Psychol. Appl. 2005, 11, 237–244.

- Tanner, S.A.; McCarthy, M.B.; O’Reilly, S.J. Exploring the roles of motivation and cognition in label-usage using a combined eye-tracking and retrospective think aloud approach. Appetite 2019, 135, 146–158.

- Branch, J.L. Investigating the Information-Seeking Processes of Adolescents. Libr. Inf. Sci. Res. 2000, 22, 371–392.

- Ericsson, K.A.; Simon, H.A. Protocol Analysis: Verbal Reports as Data, Rev. ed.; MIT Press: Cambridge, MA, USA, 1993; ISBN 978-0-262-05047-0.

- Alshammari, T.; Alhadreti, O.; Mayhew, P.J. When to Ask Participants to Think Aloud: A Comparative Study of Concurrent and Retrospective Think-Aloud Methods. Int. J. Hum. Comput. Interact. IJHCI 2015, 6, 48–64.

- Gero, J.S.; Tang, H.-H. The differences between retrospective and concurrent protocols in revealing the process-oriented aspects of the design process. Des. Stud. 2001, 22, 283–295.

- Jääskeläinen, R. Verbal Reports. In The Handbook of Translation and Cognition; Schwieter, J.W., Ferreira, A., Eds.; Wiley: Hoboken, NJ, USA, 2017; pp. 213–231. ISBN 978-1-119-24143-0.

- Peute, L.W.P.; de Keizer, N.F.; Jaspers, M.W.M. The value of Retrospective and Concurrent Think Aloud in formative usability testing of a physician data query tool. J. Biomed. Inform. 2015, 55, 1–10.

- Taylor, K.L.; Dionne, J.-P. Accessing problem-solving strategy knowledge: The complementary use of concurrent verbal protocols and retrospective debriefing. J. Educ. Psychol. 2000, 92, 413–425.

- Van den Haak, M.J.; de Jong, M.D.T.; Schellens, P.J. Evaluating municipal websites: A methodological comparison of three think-aloud variants. Gov. Inf. Q. 2009, 26, 193–202.

- Preece, J.; Rogers, Y.; Sharp, H.; Benyon, D.; Holland, S.; Carey, T. Human-Computer Interaction; Addison-Wesley Longman Ltd.: Essex, UK, 1994; ISBN 978-0-201-62769-5.

- Elling, S.; Lentz, L.; de Jong, M. Combining Concurrent Think-Aloud Protocols and Eye-Tracking Observations: An Analysis of Verbalizations and Silences. IEEE Trans. Prof. Commun. 2012, 55, 206–220.

- Cooke, L. Assessing Concurrent Think-Aloud Protocol as a Usability Test Method: A Technical Communication Approach. IEEE Trans. Prof. Commun. 2010, 53, 202–215.

- Van den Haak, M.J.; de Jong, M.D.T. Exploring two methods of usability testing: Concurrent versus retrospective think-aloud protocols. In Proceedings of the IEEE International Professional Communication Conference, Orlando, FL, USA, 21–24 September 2003; IEEE: Orlando, FL, USA, 2003; p. 3.

- Olsen, A.; Smolentzov, L.; Strandvall, T. Comparing different eye tracking cues when using the retrospective think aloud method in usability testing. In Proceedings of the HCI 2010, 24th BCS Conference on Human Computer Interaction, Dundee, UK, 6–10 September 2010.

- Bowers, V.A.; Snyder, H.L. Concurrent versus Retrospective Verbal Protocol for Comparing Window Usability. Proc. Hum. Factors Soc. Annu. Meet. 1990, 34, 1270–1274.

- Cotton, D.; Gresty, K. Reflecting on the think-aloud method for evaluating e-learning. Br. J. Educ. Technol. 2006, 37, 45–54.

- Guan, Z.; Lee, S.; Cuddihy, E.; Ramey, J. The validity of the stimulated retrospective think-aloud method as measured by eye tracking. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems—CHI ’06, Montreal, QC, Canada, 22–27 April 2006; ACM Press: Montreal, QC, Canada, 2006; p. 1253.

- Gerjets, P.; Kammerer, Y.; Werner, B. Measuring spontaneous and instructed evaluation processes during Web search: Integrating concurrent thinking-aloud protocols and eye-tracking data. Learn. Instr. 2011, 21, 220–231.

- Kim, B.; Dong, Y.; Kim, S.; Lee, K.-P. Development of Integrated Analysis System and Tool of Perception, Recognition, and Behavior for Web Usability Test: With Emphasis on Eye-tracking, Mouse-tracking, and Retrospective Think Aloud. In Proceedings of the 2nd International Conference on Usability and Internationalization, Beijing, China, 22–27 July 2007; Springer: Berlin/Heidelberg, Germany, 2007; pp. 113–121.

- Švec, V.; Pavlicek, J.; Ticha, I.; Kreckova, J. FactOrEasy (c): ART AND CRAFT OF MANAGEMENT? In Proceedings of the 13th International Conference Efficiency and Responsibility in Education, Prague, Czech Republic, 2–3 June 2016; Czech University Life Sciences Prague: Prague, Czech Republic, 2016; pp. 567–577.

- Prokop, M.; Švec, V. Flawless Managers: Myth or Reality? In Proceedings of the 15th International Conference Efficiency and Responsibility in Education 2018 (ERIE), Prague, Czech Republic, 7–8 June 2018; Czech University Life Sciences Prague: Prague, Czech Republic, 2018; pp. 281–289.

- Abdi Sargezeh, B.; Tavakoli, N.; Daliri, M.R. Gender-based eye movement differences in passive indoor picture viewing: An eye-tracking study. Physiol. Behav. 2019, 206, 43–50.

- Kovari, A.; Katona, J.; Costescu, C. Quantitative Analysis of Relationship Between Visual Attention and Eye-Hand Coordination. Acta Polytech. Hung. 2020, 17, 77–95.

- Boren, T.; Ramey, J. Thinking aloud: Reconciling theory and practice. IEEE Trans. Prof. Commun. 2000, 43, 261–278.

- Švec, V.; Flegl, M.; Pavlicek, J.; Prokop, M.; Kreckova, J. Factoreasy (r): Do Students Take a Risk? In Proceedings of the 14th International Conference Efficiency and Responsibility in Education 2017 (ERIE), Prague, Czech Republic, 8–9 June 2017; Czech University Life Sciences Prague: Prague, Czech Republic, 2017; pp. 451–459.

- Fiedler, S.; Glöckner, A. The Dynamics of Decision Making in Risky Choice: An Eye-Tracking Analysis. Front Psychol. 2012, 3.

- Franěk, M.; Šefara, D.; Petružálek, J.; Cabal, J.; Myška, K. Differences in eye movements while viewing images with various levels of restorativeness. J. Environ. Psychol. 2018, 57, 10–16.

- Rhenius, D.; Deffner, G. Evaluation of Concurrent Thinking Aloud Using Eye-tracking Data. Proc. Hum. Factors Soc. Annu. Meet. 1990, 34, 1265–1269.

- Nielsen, J.; Clemmensen, T.; Yssing, C. Getting access to what goes on in people’s heads? Reflections on the think-aloud technique. In Proceedings of the 2nd Nordic Conference on Human-Computer Interaction—NordiCHI ’02, Aarhus, Denmark, 19–23 October 2002; ACM Press: Aarhus, Denmark, 2002; p. 101.

- Eger, N.; Ball, L.J.; Stevens, R.; Dodd, J. Cueing retrospective verbal reports in usability testing through eye-movement replay. In Proceedings of the 21st British HCI Group Annual Conference on People and Computers: HCI… But Not as We Know It-Volume 1, Lanchester, UK, 3–7 September 2007; British Computer Society: Swindon, UK, 2007; pp. 129–137.

- Hu, J.; Gao, X.A. Using think-aloud protocol in self-regulated reading research. Educ. Res. Rev. 2017, 22, 181–193.

- Baumann, J.F.; Seifert-Kessell, N.; Jones, L.A. Effect of Think-Aloud Instruction on Elementary Students’ Comprehension Monitoring Abilities. J. Read. Behav. 1992, 24, 143–172.

- Bowles, M.A. The Think-Aloud Controversy in Second Language Research; Second Language Acquisition Research; Monographs on Research Methodology; Routledge: New York, NY, USA, 2010; ISBN 978-0-415-99483-5.

| Round | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CTA | 14 | 14 | 14 | 14 | 13 | 14 | 13 | 14 | 12 | 13 | 11 | 12 | 10 | 10 | 9 | 8 | 9 | 7 |
| RTA | 17 | 17 | 17 | 16 | 17 | 16 | 15 | 15 | 14 | 14 | 15 | 12 | 13 | 15 | 14 | 11 | 12 | 12 |

| Round | 19 | 20 | 21 | 22 | 23 | 24 | 25 | 26 | 27 | 28 | 29 | 30 | 31 | 32 | 33 | 34 | 35 | 36 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CTA | 6 | 6 | 6 | 4 | 6 | 5 | 5 | 5 | 5 | 5 | 5 | 4 | 5 | 3 | 2 | 1 | 2 | 3 |
| RTA | 11 | 11 | 10 | 8 | 9 | 10 | 2 | 3 | 1 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Indicator | CTA Median | CTA Mean | CTA SD | CTA Test of Normality (Shapiro-Wilk) | RTA Median | RTA Mean | RTA SD | RTA Test of Normality (Shapiro-Wilk) | Test of Statistical Difference |
|---|---|---|---|---|---|---|---|---|---|
| Net cash in finished games | 19776 | 19458 | 10540 | W(24) = 0.952, p = 0.300 | 19032 | 17935 | 13288 | W(26) = 0.962, p = 0.438 | t-test: t(48) = −0.447, p = 0.657 |
| Overall standings | 2 | 2.25 | 1.36 | W(36) = 0.730, p < 0.001 | 1.5 | 2.36 | 1.477 | W(36) = 0.730, p < 0.001 | Mann-Whitney: U = 632, p = 0.844 |
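
The t statistic in the net cash row can be checked approximately from the reported summary statistics alone. This is a minimal sketch, assuming the test is a Student's t-test with a pooled variance (which is consistent with the reported 48 degrees of freedom); the function name is hypothetical, and the argument order, RTA first, is an assumption chosen so that the sign matches the table.

```python
import math


def pooled_t(mean1, sd1, n1, mean2, sd2, n2):
    """Student's two-sample t statistic from summary statistics.

    Assumes equal variances (pooled variance estimate).
    Returns the t statistic and the degrees of freedom.
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    standard_error = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / standard_error, df


# Means, SDs and group sizes taken from the net cash row of the table above
# (RTA: 26 finished games, CTA: 24 finished games).
t_stat, dof = pooled_t(17935, 13288, 26, 19458, 10540, 24)
```

Under these assumptions the statistic comes out at roughly −0.45 with 48 degrees of freedom, in line with the table; small deviations are expected because the inputs are rounded.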

| Indicator | CTA Count | CTA % | RTA Count | RTA % | Test of Statistical Difference (Z-Test for 2 Proportions) |
|---|---|---|---|---|---|
| Bankruptcy games | 12 | 33.32% | 10 | 27.78% | z = 0.512; p < 0.610 |
| Total games | 36 | 100% | 36 | 100% | - |
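
The z-test for two proportions used in the table above can be reproduced from the counts. This is a minimal sketch, assuming the pooled-proportion form of the test and a two-sided p-value; the function name is hypothetical, and the source does not state which software or variant was used.

```python
import math


def two_proportion_z(successes1, n1, successes2, n2):
    """Pooled two-proportion z-test; returns (z, two-sided p-value)."""
    p1 = successes1 / n1
    p2 = successes2 / n2
    pooled = (successes1 + successes2) / (n1 + n2)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / standard_error
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Bankruptcy games: 12 of 36 (CTA) versus 10 of 36 (RTA).
z_bankrupt, p_bankrupt = two_proportion_z(12, 36, 10, 36)
```

With the bankruptcy counts, this gives z of about 0.51 and a p-value of about 0.61, which agrees with the reported values and suggests that the pooled form is the one used.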

| Indicator | CTA Median | CTA Mean | CTA SD | CTA Test of Normality (Shapiro-Wilk) | RTA Median | RTA Mean | RTA SD | RTA Test of Normality (Shapiro-Wilk) | Test of Statistical Difference (Mann-Whitney) |
|---|---|---|---|---|---|---|---|---|---|
| Number of fixations | 92 | 128.31 | 116.91 | W(288) = 0.768, p < 0.001 | 64 | 82.29 | 69.68 | W(329) = 0.734, p < 0.001 | U = 34823.5, p < 0.001 |
| Average duration of fixation | 187 ms | 184 ms | 44 ms | W(288) = 0.978, p < 0.001 | 208 ms | 211 ms | 47 ms | W(329) = 0.963, p < 0.001 | U = 33022, p < 0.001 |
| Total fixation duration | 17.02 s | 25.83 s | 25.9 s | W(288) = 0.773, p < 0.001 | 13.04 s | 17.89 s | 17.21 s | W(329) = 0.699, p < 0.001 | U = 39602.5, p < 0.001 |
| Dwell time ratio | 0.67% | 0.66% | 0.16% | W(288) = 0.992, p = 0.106 | 0.75% | 0.72% | 0.17% | W(329) = 0.929, p < 0.001 | U = 35326, p < 0.001 |
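
The Mann-Whitney U statistic reported in the table above can be understood as a count of pairwise comparisons between the two groups. This is a minimal sketch of that definition, assuming ties contribute one half; the sample values are hypothetical, and the quadratic-time loop is meant for illustration rather than for large samples.

```python
def mann_whitney_u(sample_a, sample_b):
    """Return (U_a, U_b) by direct pair counting.

    U_a counts the pairs in which the value from sample_a exceeds the value
    from sample_b; a tied pair contributes 0.5 to each statistic.
    """
    u_a = 0.0
    for a in sample_a:
        for b in sample_b:
            if a > b:
                u_a += 1.0
            elif a == b:
                u_a += 0.5
    u_b = len(sample_a) * len(sample_b) - u_a
    return u_a, u_b


# Illustrative fixation counts only (not data from the study).
u_cta, u_rta = mann_whitney_u([92, 64, 130], [64, 80])
```

Half-integer values of U, like some of those in the table, arise from tied observations. Which of the two statistics a given package reports varies, so the reported U is best compared against both.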

| Data Assessment | CTA Count | CTA % | RTA Count | RTA % | Test of Statistical Difference (Z-Test for 2 Proportions) |
|---|---|---|---|---|---|
| Valid information | 770 | 89.1% | 715 | 72.4% | z = 8.988; p < 0.001 |
| Omitted information | 90 | 10.4% | 268 | 27.2% | z = 9.095; p < 0.001 |
| Fabricated information | 0 | 0% | 0 | 0% | N/A |
| Unidentified information | 4 | 0.5% | 4 | 0.4% | z = 0.189; p = 0.85 |
| Total | 864 | 100% | 987 | 100% | - |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Prokop, M.; Pilař, L.; Tichá, I. Impact of Think-Aloud on Eye-Tracking: A Comparison of Concurrent and Retrospective Think-Aloud for Research on Decision-Making in the Game Environment. Sensors 2020, 20, 2750. https://doi.org/10.3390/s20102750
