An Improved Pulse-Coupled Neural Network Model for Pansharpening

Abstract

1. Introduction

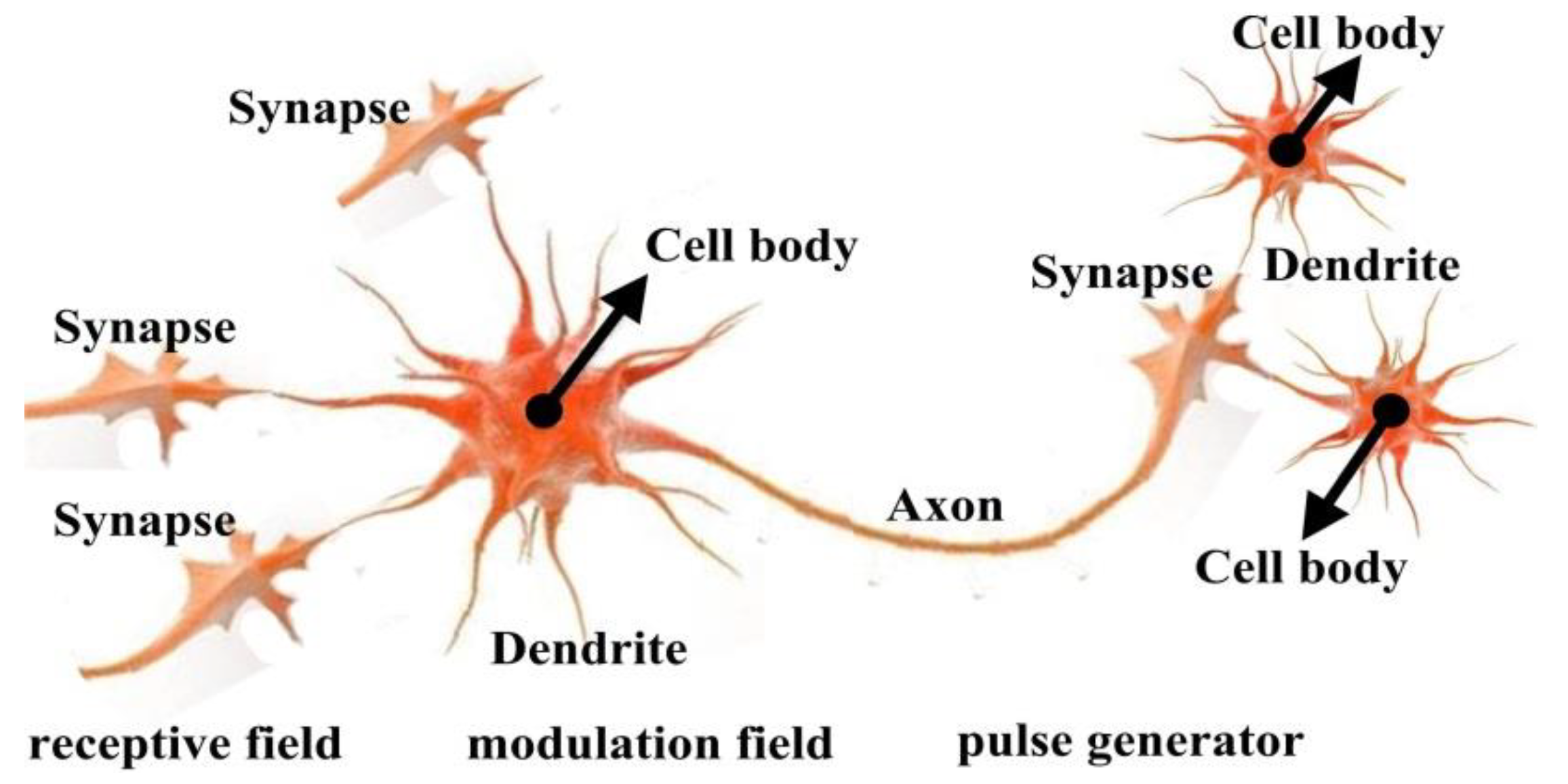

2. PCNN and PPCNN Models

2.1. Standard PCNN Model

2.2. Proposed PPCNN Model

2.3. Implementation of PPCNN Model

- (1) Initialize the matrices and parameters: Y[0] = F[0] = L[0] = β[0] = U[0] = 0, and E[0] = VE. The iteration number n starts at 1. P denotes the spatial detail image. I and P are both normalized to the range between 0 and 1. The other parameters (VF, VL, VE, M, W, and αE) are set empirically for each application.

- (2) Compute F[n] = VF × (Y[n − 1] ⊗ M) + I, L[n] = VL × (Y[n − 1] ⊗ W) + P, and U[n] = F[n − 1] + β[n − 1]•L[n − 1].

- (3) If Uij[n] > Eij[n], then Yij[n] = 1; otherwise, Yij[n] = 0.

- (4) Update E[n + 1] = e^(−αE) × E[n] + VE × Y[n].

- (5) Stop iterating once all neurons have been stimulated; the internal activity U is then the final fusion result. Otherwise, let n = n + 1 and return to Step (2).
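The iteration above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names `conv2_same` and `ppcnn`, the `max_iter` guard, and all default parameter values are hypothetical. The adaptive rule for β belongs to Section 2.2 and is not reproduced in this section, so `beta` is passed in as a fixed scalar or array as a stand-in. The index lag in U[n] follows Step (2) exactly as listed.

```python
import numpy as np


def conv2_same(img, kernel):
    """Zero-padded 2-D convolution with an odd-sized kernel (same output size)."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(img, ((ph, ph), (pw, pw)), mode="constant")
    flipped = kernel[::-1, ::-1]  # flip the kernel so this is a true convolution
    out = np.zeros(img.shape, dtype=float)
    for r in range(kh):
        for c in range(kw):
            out += flipped[r, c] * padded[r:r + img.shape[0], c:c + img.shape[1]]
    return out


def ppcnn(I_in, P_in, beta, M, W, VF=0.1, VL=0.1, VE=1.0, alpha_E=0.5, max_iter=200):
    """Sketch of the listed iteration; returns (U, number of iterations used)."""
    I_in = np.asarray(I_in, dtype=float)
    P_in = np.asarray(P_in, dtype=float)

    # Step (1): zero initial state, threshold E[0] = VE.
    Y = np.zeros_like(I_in)
    F = np.zeros_like(I_in)
    L = np.zeros_like(I_in)
    U = np.zeros_like(I_in)
    b = np.zeros_like(I_in)          # beta[0] = 0
    E = np.full_like(I_in, VE)
    fired = np.zeros(I_in.shape, dtype=bool)

    n = 0
    for n in range(1, max_iter + 1):
        # Step (2): U[n] uses the previous F, L and beta, as written in the text.
        U = F + b * L
        F = VF * conv2_same(Y, M) + I_in   # uses Y[n - 1]
        L = VL * conv2_same(Y, W) + P_in   # uses Y[n - 1]
        b = beta                           # assumption: fixed stand-in for the adaptive beta

        # Step (3): a neuron fires when its activity exceeds its threshold.
        Y = (U > E).astype(float)
        fired |= Y > 0

        # Step (4): decay the threshold and raise it where neurons fired.
        E = np.exp(-alpha_E) * E + VE * Y

        # Step (5): stop once every neuron has fired at least once.
        if fired.all():
            break
    return U, n
```

The `max_iter` guard is there because a pixel whose activity never becomes positive can never exceed the decaying but strictly positive threshold, so the stopping rule alone would not terminate for such inputs.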

2.4. Analysis of PPCNN Model

3. PPCNN Based Pansharpening Approach

- (1) Interpolate the multispectral image to the size of the PAN image using the even cubic kernel [41] to obtain the input MIk.

- (2) Obtain Ik and PN according to Equations (19) and (20).

- (3) Perform histogram matching between PN and Ik to obtain PNk, the matched version of the PAN image.

- (4) Obtain the reduced-resolution version PNL of PN by using a low-pass filter that matches the modulation transfer function (MTF) of the given satellite [42]. Calculate Pk = PNk − PNLk, where k = 1, …, K.

- (5) Set k = 1.

- (6) Run the PPCNN model with Ik and Pk as the external stimuli to obtain the updated internal activity Uk.

- (7) If k < K, let k = k + 1 and return to Step (6); otherwise, end the procedure.

- (8) Obtain the fusion result R by applying the inverse normalization transformation Rk = φ × Uk.
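The histogram matching and detail extraction steps can be illustrated with a short NumPy sketch. Everything here is an assumption made for illustration: histogram matching is approximated by matching mean and standard deviation, a Gaussian blur stands in for the MTF-matched low-pass filter of [42], and the low-pass filter is applied to the matched image PNk (one possible reading of the step). The names `match_moments`, `gaussian_lowpass`, `detail_images` and the parameter `sigma` are hypothetical.

```python
import numpy as np


def match_moments(pan, ref):
    """Rescale pan so its mean and standard deviation equal those of ref."""
    pan_std = pan.std()
    if pan_std == 0:
        return np.full_like(pan, ref.mean(), dtype=float)
    return (pan - pan.mean()) * (ref.std() / pan_std) + ref.mean()


def gaussian_lowpass(img, sigma=2.0):
    """Separable Gaussian blur with reflect padding (stand-in for an MTF filter)."""
    radius = int(3 * sigma)
    x = np.arange(-radius, radius + 1)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    k /= k.sum()  # normalize so flat regions are preserved
    padded = np.pad(img, radius, mode="reflect")
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)


def detail_images(I_bands, PN, sigma=2.0):
    """Return P with shape (K, H, W), where P[k] = PNk - lowpass(PNk)."""
    details = []
    for Ik in I_bands:
        PNk = match_moments(PN, Ik)          # matched PAN for band k
        PNLk = gaussian_lowpass(PNk, sigma)  # reduced-resolution version
        details.append(PNk - PNLk)           # spatial detail for band k
    return np.stack(details)
```

Each `P[k]` would then be passed, together with `I_bands[k]`, to the PPCNN model as the pair of external stimuli.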

4. Experiments

4.1. Quality Evaluation Criteria
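The result tables in this article report Q4, SAM, ERGAS and SCC. As a rough guide to how two of these are computed, the sketch below uses the commonly cited definitions of SAM (mean spectral angle, in degrees) and ERGAS. It is not taken from the authors' code: the function names, the (K, H, W) array layout, the `eps` guard and the default `ratio=4` (MS pixel size divided by PAN pixel size) are assumptions.

```python
import numpy as np


def sam_degrees(ref, fused, eps=1e-12):
    """Mean spectral angle in degrees between two (K, H, W) images."""
    dot = (ref * fused).sum(axis=0)
    norms = np.linalg.norm(ref, axis=0) * np.linalg.norm(fused, axis=0)
    cos = np.clip(dot / (norms + eps), -1.0, 1.0)  # clip guards against rounding
    return float(np.degrees(np.arccos(cos)).mean())


def ergas(ref, fused, ratio=4):
    """ERGAS = (100 / ratio) * sqrt(mean_k((RMSE_k / mean_k)^2)); lower is better."""
    rmse = np.sqrt(((ref - fused) ** 2).mean(axis=(1, 2)))  # per-band RMSE
    means = ref.mean(axis=(1, 2))                           # per-band reference mean
    return float(100.0 / ratio * np.sqrt(((rmse / means) ** 2).mean()))
```

Both measures are zero for a perfect reconstruction. SAM is insensitive to a per-pixel scaling of the spectrum, while ERGAS penalizes it.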

4.2. Datasets

4.3. Initialize PPCNN Parameters

5. Experimental Results and Discussions

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Huang, B.; Zhao, B.; Song, Y. Urban land-use mapping using a deep convolutional neural network with high spatial resolution multispectral remote sensing imagery. Remote Sens. Environ. 2018, 214, 73–86.

- Fu, H.; Zhou, T.; Sun, C. Object-based shadow index via illumination intensity from high resolution satellite images over urban areas. Sensors 2020, 20, 1077.

- Frick, A.; Tervooren, S. A framework for the long-term monitoring of urban green volume based on multi-temporal and multi-sensoral remote sensing data. J. Geovis. Spat. Anal. 2019, 3, 6–16.

- Wang, M.; Zhang, X.; Niu, X.; Wang, F.; Zhang, X. Scene classification of high-resolution remotely sensed image based on resnet. J. Geovis. Spat. Anal. 2019, 3, 1–9.

- Bovolo, F.; Bruzzone, L.; Capobianco, L.; Garzelli, A.; Marchesi, S. Analysis of the effects of pansharpening in change detection on VHR images. IEEE Geosci. Remote Sens. Lett. 2010, 7, 53–57.

- Mohammadzadeh, A.; Tavakoli, A.; Zoej, M.J.V. Road extraction based on fuzzy logic and mathematical morphology from pan-sharpened IKONOS images. Photogramm. Rec. 2006, 21, 44–60.

- Gašparović, M.; Jogun, T. The effect of fusing Sentinel-2 bands on land-cover classification. Int. J. Remote Sens. 2018, 39, 822–841.

- Laporterie-Déjean, F.; Boissezon, H.; Flouzat, G.; Lefèvre-Fonollosaa, M. Thematic and statistical evaluations of five panchromatic/multispectral fusion methods on simulated PLEIADES-HR images. Inf. Fusion 2005, 6, 193–212.

- Vivone, G.; Alparone, L.; Chanussot, J.; Mura, M.D.; Garzelli, A. A critical comparison among pansharpening algorithms. IEEE Trans. Geosci. Remote Sens. 2015, 53, 2565–2586.

- Amro, I.; Mateos, J.; Vega, M.; Molina, R.; Katsaggelos, A.K. A survey of classical methods and new trends in pansharpening of multispectral images. EURASIP J. Adv. Signal Process. 2011, 2011, 1–22.

- Thomas, C.; Ranchin, T.; Wald, L.; Chanussot, J. Synthesis of multispectral images to high spatial resolution: A critical review of fusion methods based on remote sensing physics. IEEE Trans. Geosci. Remote Sens. 2008, 46, 1301–1312.

- Wang, Z.; Ziou, D.; Armenakis, C.; Li, D.; Li, Q. A comparative analysis of image fusion methods. IEEE Trans. Geosci. Remote Sens. 2005, 43, 1391–1402.

- Tu, T.M.; Su, S.C.; Shyu, H.C.; Huang, P.S. A new look at IHS like image fusion methods. Inf. Fusion 2001, 2, 177–186.

- Carper, W.; Lillesand, T.; Kiefer, R. The use of intensity-hue-saturation transformations for merging SPOT panchromatic and multispectral image data. Photogramm. Eng. Remote Sens. 1990, 56, 459–467.

- Chavez, P.S., Jr.; Sides, S.C.; Anderson, J.A. Comparison of three different methods to merge multiresolution and multispectral data: Landsat TM and SPOT panchromatic. Photogramm. Eng. Remote Sens. 1991, 57, 295–303.

- Chavez, P.S.; Kwarteng, A.Y. Extracting spectral contrast in Landsat thematic mapper image data using selective principal component analysis. Photogramm. Eng. Remote Sens. 1989, 55, 339–348.

- Laben, C.A.; Brower, B.V. Process for Enhancing the Spatial Resolution of Multispectral Imagery Using Pan-Sharpening. U.S. Patent 6011875, 4 January 2000.

- Mallat, S.G. A Theory for Multiresolution Signal Decomposition: The Wavelet Representation; IEEE Computer Society: Washington, DC, USA, 1989.

- Nason, G.P.; Silverman, B.W. The Stationary Wavelet Transform and Some Statistical Applications; Springer: New York, NY, USA, 1995.

- Vivone, G.; Restaino, R.; Mura, M.D.; Licciardi, G.; Chanussot, J. Contrast and error-based fusion schemes for multispectral image pansharpening. IEEE Geosci. Remote Sens. Lett. 2013, 11, 930–934.

- Burt, P.J.; Adelson, E.H. The Laplacian pyramid as a compact image code. IEEE Trans. Commun. 1983, 31, 532–540.

- Restaino, R.; Vivone, G.; Mura, M.D.; Chanussot, J. Fusion of multispectral and panchromatic images based on morphological operators. IEEE Trans. Image Process. 2016, 25, 2882–2895.

- Scarpa, G.; Vitale, S.; Cozzolino, D. Target-adaptive cnn-based pansharpening. IEEE Trans. Geosci. Remote Sens. 2018, 56, 1–15.

- Masi, G.; Cozzolino, D.; Verdoliva, L.; Scarpa, G. Pansharpening by convolutional neural networks. Remote Sens. 2016, 8, 594.

- Liu, J.; Ma, J.; Fei, R.; Li, H.; Zhang, J. Enhanced back-projection as postprocessing for pansharpening. Remote Sens. 2019, 11, 712.

- Li, H.; Jing, L.; Tang, Y.; Ding, H. An improved pansharpening method for misaligned panchromatic and multispectral data. Sensors 2018, 18, 557.

- Aiazzi, B.; Alparone, L.; Baronti, S. Twenty-Five Years of Pansharpening: A critical Review and New Developments. In Signal and Image Processing for Remote Sensing; CRC Press: Boca Raton, FL, USA, 2012; pp. 533–548.

- Wang, N.; Jiang, W.; Lei, C.; Qin, S.; Wang, J. A robust image fusion method based on local spectral and spatial correlation. IEEE Geosci. Remote Sens. Lett. 2014, 11, 454–458.

- Ma, Y.; Zhan, K.; Wang, Z. Applications of Pulse-Coupled Neural Networks; Higher Education Press: Beijing, China, 2010.

- Wang, Z.; Ma, Y. Medical image fusion using m-PCNN. Inf. Fusion 2008, 9, 176–185.

- Zhu, S.; Wang, L.; Duan, S. Memristive pulse coupled neural network with applications in medical image processing. Neurocomputing 2017, 227, 149–157.

- Dong, Z.; Lai, C.; Qi, D.; Zhao, X.; Li, C. A general memristor-based pulse coupled neural network with variable linking coefficient for multi-focus image fusion. Neurocomputing 2018, 308, 172–183.

- Huang, C.; Tian, G.; Lan, Y.; Peng, Y.; Ng, E.Y.K. A new pulse coupled neural network (pcnn) for brain medical image fusion empowered by shuffled frog leaping algorithm. Front. Neurosci. 2019, 13, 1–10.

- Cheng, B.; Jin, L.; Li, G. Infrared and visual image fusion using LNSST and an adaptive dual-channel PCNN with triple-linking strength. Neurocomputing 2018, 310, 135–147.

- Ma, J.; Xu, H.; Jiang, J.; Mei, X.; Zhang, X. DDcGAN: A dual-discriminator conditional generative adversarial network for multi-resolution image fusion. IEEE Trans. Image Process. 2020, 29, 4980–4995.

- Ma, J.; Yu, W.; Liang, P.; Li, C.; Jiang, J. FusionGAN: A generative adversarial network for infrared and visible image fusion. Inf. Fusion 2019, 48, 11–26.

- Ma, J.; Liang, P.; Yu, W.; Chen, C.; Guo, X. Infrared and visible image fusion via detail preserving adversarial learning. Inf. Fusion 2020, 54, 85–98.

- Shi, C.; Miao, Q.G.; Xu, P.F. A novel algorithm of remote sensing image fusion based on Shearlets and PCNN. Neurocomputing 2013, 117, 47–53.

- Johnson, J.L.; Padgett, M.L. PCNN models and applications. IEEE Trans. Neural Netw. 1999, 10, 480–498.

- Lindblad, T.; Kinser, J.M. Image Processing Using Pulse-Coupled Neural Networks; Springer: Berlin/Heidelberg, Germany, 2005.

- Aiazzi, B.; Baronti, S.; Selva, M.; Alparone, L. Bi-cubic interpolation for shift-free pan-sharpening. ISPRS J. Photogramm. Remote Sens. 2013, 86, 65–76.

- Aiazzi, B.; Alparone, L.; Baronti, S.; Garzelli, A. MTF-tailored multiscale fusion of high-resolution MS and pan imagery. Photogramm. Eng. Remote Sens. 2006, 72, 591–596.

- Goetz, A.; Boardman, W.; Yunas, R. Discrimination among semi-arid landscape endmembers using the Spectral Angle Mapper (SAM) algorithm. In Proceedings of the Summaries 3rd Annual JPL Airborne Geoscience Workshop, Pasadena, CA, USA, 1–5 June 1992; pp. 147–149.

- Wald, L. Data Fusion: Definitions and Architectures: Fusion of Images of Different Spatial Resolutions; Presses Des MINES: Paris, France, 2002.

- Alparone, L.; Baronti, S.; Garzelli, A.; Nencini, F. A global quality measurement of pan-sharpened multispectral imagery. IEEE Geosci. Remote Sens. Lett. 2004, 1, 313–317.

- Garzelli, A.; Nencini, F. Hypercomplex quality assessment of multi/hyperspectral images. IEEE Geosci. Remote Sens. Lett. 2009, 6, 662–665.

- Otazu, X.; Gonzalez-Audicana, M.; Fors, O.; Nunez, J. Introduction of sensor spectral response into image fusion methods. Application to wavelet-based methods. IEEE Trans. Geosci. Remote Sens. 2005, 43, 2376–2385.

- Wald, L.; Ranchin, T.; Mangolini, M. Fusion of satellite images of different spatial resolutions: Assessing the quality of resulting images. Photogramm. Eng. Remote Sens. 1997, 63, 691–699.

- Gillespie, A.R.; Kahle, A.B.; Walker, R.E. Color enhancement of highly correlated images. II. Channel ratio and “chromaticity” transformation techniques. Remote Sens. Environ. 1987, 22, 343–365.

- Vivone, G.; Alparone, L.; Garzelli, A.; Lolli, S. Fast reproducible pansharpening based on instrument and acquisition modeling: AWLP revisited. Remote Sens. 2019, 11, 2315.

- Vivone, G.; Restaino, R.; Chanussot, J. Full scale regression-based injection coefficients for panchromatic sharpening. IEEE Trans. Image Process. 2018, 27, 3418–3431.

| Experiments | Datasets | Satellites | Size | Spatial Resolution (PAN/MS) | Region |
|---|---|---|---|---|---|
| Pan and MS | Washington dataset | WV-2 | PAN: 2048 × 2048; MS: 512 × 512 | 0.5 m/2 m | Washington, D.C., USA |
| | Sichuan dataset | IKONOS-2 | PAN: 2048 × 2048; MS: 512 × 512 | 1 m/4 m | Sichuan, China |
| | Boulder dataset | QuickBird | PAN: 2048 × 2048; MS: 512 × 512 | 0.7 m/2.8 m | Boulder, USA |
| | Lanzhou dataset | GF-2 | PAN: 2048 × 2048; MS: 512 × 512 | 0.81 m/3.24 m | Lanzhou, China |
| | Xinjiang dataset | GF-2 | PAN: 2048 × 2048; MS: 512 × 512 | 0.81 m/3.24 m | Xinjiang, China |

| Criteria | PPCNN | GS | PCA | BT | IHS | ATWT | AWLP | CBD | MOF | RAWLP | FSR |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Q4 | 0.8884 | 0.7822 | 0.7438 | 0.7764 | 0.7841 | 0.8626 | 0.8600 | 0.8696 | 0.8651 | 0.8731 | 0.8795 |
| SAM (°) | 7.7527 | 8.4126 | 9.6313 | 8.1936 | 8.6529 | 7.9265 | 7.9247 | 8.5065 | 7.9248 | 7.7967 | 8.3575 |
| ERGAS | 4.9416 | 6.5617 | 7.5797 | 6.7480 | 6.6710 | 5.5633 | 5.7873 | 5.4875 | 5.4889 | 5.3147 | 5.0657 |
| SCC | 0.8375 | 0.8349 | 0.7933 | 0.8372 | 0.8175 | 0.8317 | 0.8187 | 0.7812 | 0.8309 | 0.8369 | 0.8271 |

| Criteria | PPCNN | GS | PCA | BT | IHS | ATWT | AWLP | CBD | MOF | RAWLP | FSR |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Q4 | 0.8959 | 0.8354 | 0.8368 | 0.7086 | 0.5658 | 0.8772 | 0.8714 | 0.8762 | 0.8810 | 0.8917 | 0.8926 |
| SAM (°) | 1.3857 | 1.7843 | 1.7942 | 2.7454 | 4.2208 | 1.5599 | 2.9800 | 1.4627 | 1.5765 | 1.3983 | 1.3927 |
| ERGAS | 1.1769 | 1.4884 | 1.4911 | 1.8771 | 2.8338 | 1.2873 | 1.7519 | 1.3027 | 1.2960 | 1.1865 | 1.1819 |
| SCC | 0.9552 | 0.9525 | 0.9520 | 0.9085 | 0.7201 | 0.9509 | 0.9343 | 0.9515 | 0.9476 | 0.9540 | 0.9542 |

| Criteria | PPCNN | GS | PCA | BT | IHS | ATWT | AWLP | CBD | MOF | RAWLP | FSR |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Q4 | 0.9126 | 0.8511 | 0.8513 | 0.8291 | 0.8084 | 0.9057 | 0.9050 | 0.8972 | 0.9026 | 0.9062 | 0.9097 |
| SAM (°) | 0.9747 | 1.3354 | 1.5113 | 1.1640 | 1.4448 | 1.0470 | 1.2062 | 1.0305 | 1.1257 | 1.1903 | 1.1528 |
| ERGAS | 0.8049 | 1.0992 | 1.0346 | 1.1104 | 1.2317 | 0.8957 | 0.9731 | 1.0052 | 0.9763 | 0.9312 | 0.9441 |
| SCC | 0.9557 | 0.9411 | 0.9411 | 0.9467 | 0.9328 | 0.9432 | 0.9346 | 0.9369 | 0.9465 | 0.9393 | 0.9392 |

| Criteria | PPCNN | GS | PCA | BT | IHS | ATWT | AWLP | CBD | MOF | RAWLP | FSR |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Q4 | 0.9107 | 0.8230 | 0.7763 | 0.8126 | 0.8097 | 0.8980 | 0.8961 | 0.7957 | 0.8835 | 0.9000 | 0.8982 |
| SAM (°) | 1.4304 | 1.7992 | 2.3502 | 1.4307 | 1.6756 | 1.4545 | 1.4480 | 2.2117 | 1.4583 | 1.6917 | 1.4550 |
| ERGAS | 1.6697 | 2.2794 | 2.8436 | 2.2208 | 2.2514 | 1.7911 | 1.7683 | 3.2100 | 1.9758 | 1.7373 | 1.7963 |
| SCC | 0.8944 | 0.8713 | 0.8516 | 0.8717 | 0.8777 | 0.8844 | 0.8771 | 0.8021 | 0.8829 | 0.8822 | 0.8878 |

| Criteria | PPCNN | GS | PCA | BT | IHS | ATWT | AWLP | CBD | MOF | RAWLP | FSR |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Q4 | 0.9253 | 0.8650 | 0.8479 | 0.8514 | 0.8621 | 0.8956 | 0.8836 | 0.8479 | 0.8802 | 0.9181 | 0.8864 |
| SAM (°) | 0.9940 | 1.2560 | 1.3230 | 1.0468 | 1.0672 | 1.1253 | 1.1277 | 1.5497 | 1.1718 | 1.0129 | 1.1674 |
| ERGAS | 1.1784 | 1.6340 | 1.7619 | 1.5944 | 1.6054 | 1.3765 | 1.3733 | 2.0711 | 1.5450 | 1.2622 | 1.4706 |
| SCC | 0.9195 | 0.8814 | 0.8778 | 0.8820 | 0.8918 | 0.8904 | 0.8830 | 0.8444 | 0.8896 | 0.9142 | 0.8847 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, X.; Yan, H.; Xie, W.; Kang, L.; Tian, Y. An Improved Pulse-Coupled Neural Network Model for Pansharpening. Sensors 2020, 20, 2764. https://doi.org/10.3390/s20102764
