Differentially Private Mobile Crowd Sensing Considering Sensing Errors

Abstract

1. Introduction

2. Models

2.1. Application Model

2.2. Motivating Example

2.3. Privacy Metric

2.4. Utility Metric

2.5. Problem

3. Related Work

3.1. Privacy-Preserving Mobile Crowdsensing

3.2. Privacy Metrics

3.3. Incentive Mechanism and Trustworthiness for Mobile Crowdsensing

4. Method

4.1. Overview

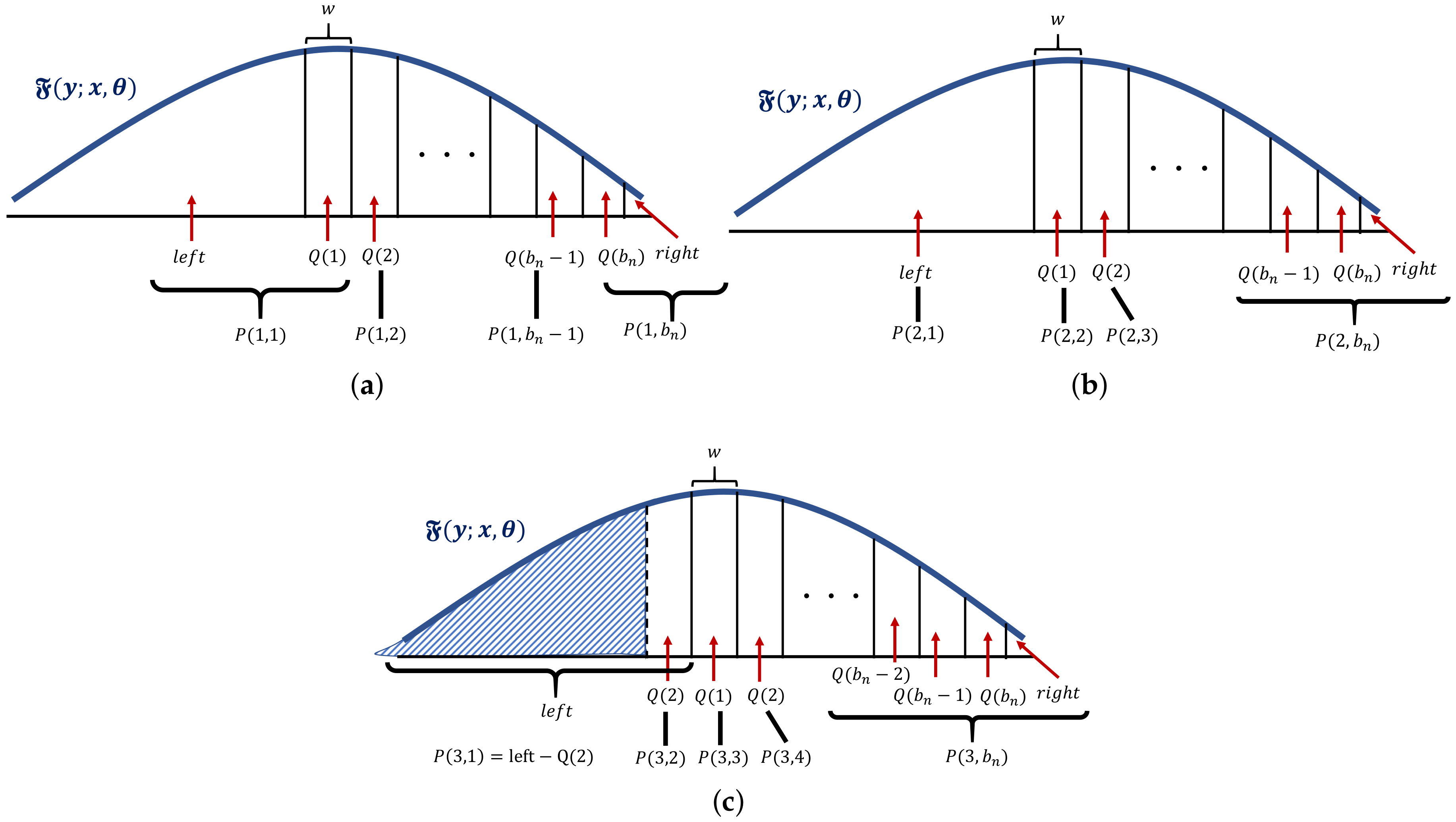

4.2. PDE for Participants

| Algorithm 1 Anonymization Algorithm. |
|---|
| Input: , , , , , , . Output: Report value v and standard deviation of sensing error. |
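As a rough illustration of the kind of perturbation the paper's notation suggests (Laplace noise with one scale factor for the sensing value and another for the standard deviation), the following Python sketch adds Laplace noise to both quantities. It is not a transcription of Algorithm 1, whose inputs did not survive in this copy. The function names, the clamping to a reporting range, and all parameter names are assumptions made for illustration only.

```python
import random


def laplace_noise(scale, rng):
    """Draw one sample from Laplace(0, scale).

    The difference of two independent exponential variables with mean
    `scale` follows a Laplace distribution with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def clamp(x, lo, hi):
    """Restrict x to the closed interval [lo, hi]."""
    return max(lo, min(hi, x))


def perturb_report(value, sigma, value_scale, sigma_scale,
                   value_range, sigma_range, rng=None):
    """Return (noisy value, noisy sigma).

    Hypothetical helper: each quantity gets its own Laplace noise and is
    then clamped to an assumed reporting range (lo, hi).
    """
    rng = rng or random.Random()
    noisy_value = clamp(value + laplace_noise(value_scale, rng), *value_range)
    noisy_sigma = clamp(sigma + laplace_noise(sigma_scale, rng), *sigma_range)
    return noisy_value, noisy_sigma
```

A call such as `perturb_report(20.0, 1.5, 2.0, 0.5, (0.0, 40.0), (0.0, 5.0))` returns a randomized pair that always lies inside the two assumed ranges. How the scale factors relate to the privacy budget is defined by the paper, not by this sketch.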

4.3. ETE for Estimation

| Algorithm 2 Estimation Algorithm. |
|---|
| Input: Y, , , , , , , , , Output: |

5. Evaluation

5.1. Evaluation of Synthetic Data

5.2. Evaluation of Real Data

5.2.1. Location Data

5.2.2. Deep Neural Network’s Output Data

6. Discussion

7. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

| Methods | Output of the Method |
|---|---|
| Existing methods | The estimated distribution of sensing data containing errors. |
| Our method | The estimated distribution of true data without errors. |

| Symbol | Description |
|---|---|
| N | Number of participants. |
| | True sensing value of participant i. |
| | Reported sensing value of participant i. |
| X | . |
| Y | . |
| | Set of standard deviations of the normal distributions of the sensing errors of all participants. |
| | Number of bins of the histogram. |
| | Maximum value of the sensing data. |
| | Minimum value of the sensing data. |
| | Maximum value of the reported data. |
| | Minimum value of the reported data. |
| | Maximum value of the standard deviation. |
| | Minimum value of the standard deviation. |
| | Scale factor of the Laplace noise with regard to the sensing value. |
| | Scale factor of the Laplace noise with regard to the standard deviation. |
| | Number of participants whose reported values were categorized into the ith bin. |
| | Estimated number of participants whose true values were categorized into the ith bin. |
| † | . |
| ‡ | . |
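The bin counts in the notation above (the number of participants whose reported values fall into the ith bin) can be illustrated with a small Python sketch. The equal-width binning, the function names, and the example values are assumptions; the paper's own binning rule is not given in this copy.

```python
def bin_index(value, lo, hi, n_bins):
    """Map a value in [lo, hi] to a bin index in 0..n_bins-1.

    Bins have equal width; the upper bound `hi` is placed in the last bin.
    """
    if not lo <= value <= hi:
        raise ValueError("value outside [lo, hi]")
    width = (hi - lo) / n_bins
    return min(int((value - lo) / width), n_bins - 1)


def histogram(values, lo, hi, n_bins):
    """Count how many values fall into each of n_bins equal-width bins."""
    counts = [0] * n_bins
    for v in values:
        counts[bin_index(v, lo, hi, n_bins)] += 1
    return counts
```

For example, `histogram([0.0, 0.5, 1.0, 2.5, 4.0], 0.0, 4.0, 4)` returns `[2, 1, 1, 1]`. In practice the bounds would be the minimum and maximum reported values rather than the true sensing range, since added noise can push reports outside the latter.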

| Layer ID | Description of Each Layer |
|---|---|
| 1 | Input Layer |
| 2 | Convolutional Layer |
| 3 | Convolutional Layer |
| 4 | Max Pooling Layer |
| 5 | Convolutional Layer |
| 6 | Convolutional Layer |
| 7 | Max Pooling Layer |
| 8 | Convolutional Layer |
| 9 | Convolutional Layer |
| 10 | Convolutional Layer |
| 11 | Max Pooling Layer |
| 12 | Convolutional Layer |
| 13 | Convolutional Layer |
| 14 | Convolutional Layer |
| 15 | Max Pooling Layer |
| 16 | Convolutional Layer |
| 17 | Convolutional Layer |
| 18 | Convolutional Layer |
| 19 | Max Pooling Layer |
| 20 | Convolutional Layer |
| 21 | Convolutional Layer |
| 22 | Fully Connected Layer |
| 23 | Output Layer |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Sei, Y.; Ohsuga, A. Differentially Private Mobile Crowd Sensing Considering Sensing Errors. Sensors 2020, 20, 2785. https://doi.org/10.3390/s20102785
