EEG Headset Evaluation for Detection of Single-Trial Movement Intention for Brain-Computer Interfaces

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

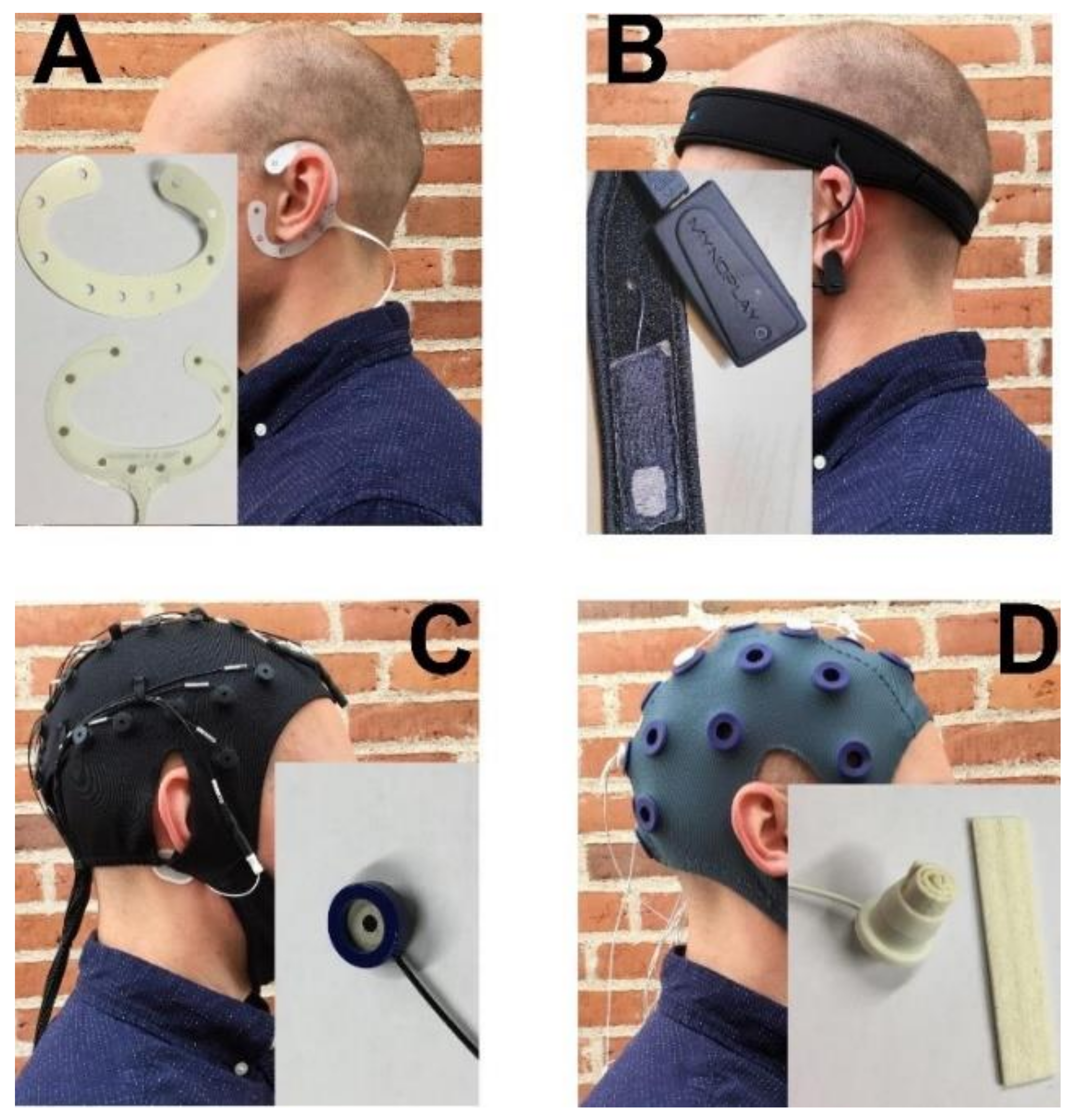

2.2. EEG Headsets

2.2.1. cEEGrid: TMSi

2.2.2. MyndBand: MyndPlay

2.2.3. Quick-Cap: Compumedics

2.2.4. Water-Based Electrodes: TMSi

2.3. Experimental Procedure

2.4. Data Analysis

2.4.1. Pre-Processing

2.4.2. Signal-to-Noise Ratio, Epoch Rejection, and Peak Amplitudes

2.4.3. Feature Extraction and Classification

2.5. Test–Retest Reliability

2.6. Statistics

3. Results

3.1. Signal Quality

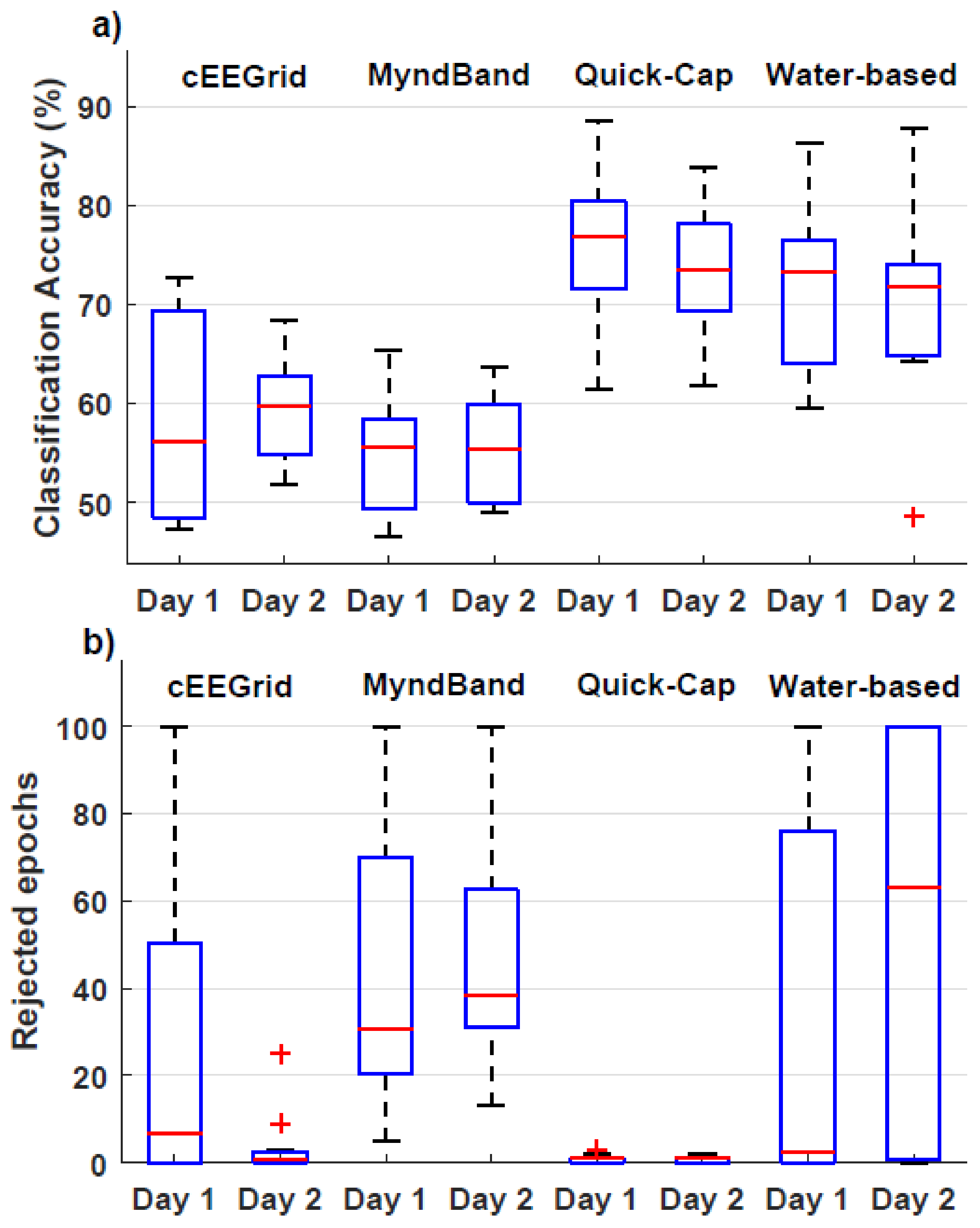

3.2. Movement Intention vs. Idle Classification

3.3. Test–Retest Reliability

4. Discussion

Limitations and Future Perspectives

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Headset | SNR, Day 1 | SNR, Day 2 | Amplitude (µV), Day 1 | Amplitude (µV), Day 2 | # Rejected Epochs, Day 1 | # Rejected Epochs, Day 2 | # Excluded Participants, Day 1 | # Excluded Participants, Day 2 |
|---|---|---|---|---|---|---|---|---|
| cEEGrid | 0.8/1.2/1.4 | 0.6/0.9/1.6 | −4.9/−0.8/0.9 | −3.6/−1.6/0.6 | 2/6/65 | 1/3/7 | 2 | 0 |
| MyndBand | 0.6/0.9/1.1 | 0.7/0.8/0.9 | −0.7/0.3/0.9 | −0.6/0.2/0.6 | 7/19/45 | 18/28/36 | 2 | 1 |
| Quick-Cap | 1.1/1.5/2.5 | 1.4/1.7/2.2 | −3.4/−2.6/−0.9 | −2.6/−1.0/0.0 | 0/1/2 | 0/0/2 | 0 | 0 |
| Water-based | 0.8/1.3/2.0 | 1.0/1.4/2.7 | −7.4/−2.9/1.9 | −4.3/−2.7/−0.3 | 2/2/6 | 2/3/9 | 0 | 0 |

Values except # Excluded Participants are given as 25th percentile / median / 75th percentile.
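Most cells in the signal-quality table above pack three summary statistics into one entry. A minimal sketch of how such a cell can be produced from per-participant values follows, assuming NumPy's default (linear-interpolation) percentile definition; the percentile definition used in the study is not stated here, and other tools interpolate differently, so recomputed values may differ slightly. The helper name `quartile_summary` and the example values are hypothetical, not data from the study.

```python
import numpy as np


def quartile_summary(values, decimals: int = 1) -> str:
    """Format per-participant values as '25th/median/75th' percentiles.

    Hypothetical helper; uses NumPy's default linear-interpolation percentiles.
    """
    q25, q50, q75 = np.percentile(np.asarray(values, dtype=float), [25, 50, 75])
    return f"{q25:.{decimals}f}/{q50:.{decimals}f}/{q75:.{decimals}f}"


if __name__ == "__main__":
    # Hypothetical SNR values for five participants (not data from the study)
    print(quartile_summary([1, 2, 3, 4, 5]))  # 2.0/3.0/4.0
```

Reporting quartiles rather than mean ± SD keeps a few outlying participants (for example, the 65 rejected cEEGrid epochs at the 75th percentile on Day 1) from dominating the summary. Note that Python formats negative numbers with an ASCII hyphen rather than the "−" used in the table.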

| Headset | Classification Accuracy (%), Day 1 | Classification Accuracy (%), Day 2 | # Rejected Epochs, Day 1 | # Rejected Epochs, Day 2 | # Excluded Participants, Day 1 | # Excluded Participants, Day 2 |
|---|---|---|---|---|---|---|
| cEEGrid | 48/56/70 | 55/60/63 | 0/7/54 | 0/1/3 | 2 | 0 |
| MyndBand | 49/56/59 | 50/56/60 | 19/31/85 | 31/39/70 | 3 | 2 |
| Quick-Cap | 70/77/82 | 69/74/78 | 0/1/1 | 0/1/1 | 0 | 0 |
| Water-based | 64/73/78 | 65/72/75 | 0/3/88 | 0/63/100 | 3 | 4 |

Values except # Excluded Participants are given as 25th percentile / median / 75th percentile.

Intraclass Correlation Coefficient (ICC)

| Headset | ICC, SNR | ICC, Amplitude | ICC, Classification Accuracy |
|---|---|---|---|
| cEEGrid | −0.3 | 0.32 | 0.63 |
| MyndBand | 0.43 | −0.21 | 0.33 |
| Quick-Cap | 0.78 | 0.83 | 0.59 |
| Water-based | −0.29 | 0.06 | −0.11 |
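The ICC table above summarizes test–retest agreement between the two recording days for each headset. The ICC model behind these values is not stated in this section; the sketch below assumes ICC(2,1) (two-way random effects, absolute agreement, single measurement, in Shrout and Fleiss notation), which is one common choice for test–retest designs. The function name `icc_2_1` and the example scores are hypothetical. The sketch also shows why several table entries are negative: the estimate falls below zero whenever the residual (within-participant) mean square exceeds the between-participant mean square.

```python
import numpy as np


def icc_2_1(scores) -> float:
    """ICC(2,1): two-way random effects, absolute agreement, single measurement.

    `scores` is a complete matrix of shape (n_participants, k_sessions),
    e.g. one column per recording day. Hypothetical helper, not from the paper.
    """
    y = np.asarray(scores, dtype=float)
    n, k = y.shape
    grand_mean = y.mean()

    # Between-participant (row) sum of squares and mean square
    ss_rows = k * np.sum((y.mean(axis=1) - grand_mean) ** 2)
    ms_rows = ss_rows / (n - 1)

    # Between-session (column) sum of squares and mean square, e.g. Day 1 vs. Day 2
    ss_cols = n * np.sum((y.mean(axis=0) - grand_mean) ** 2)
    ms_cols = ss_cols / (k - 1)

    # Residual mean square: total variation minus participant and session effects
    ss_total = np.sum((y - grand_mean) ** 2)
    ss_err = ss_total - ss_rows - ss_cols
    ms_err = ss_err / ((n - 1) * (k - 1))

    return float(
        (ms_rows - ms_err)
        / (ms_rows + (k - 1) * ms_err + k * (ms_cols - ms_err) / n)
    )


if __name__ == "__main__":
    # Hypothetical scores: three participants, two days, constant Day 2 offset
    example = np.array([[1.0, 2.0], [2.0, 3.0], [3.0, 4.0]])
    print(round(icc_2_1(example), 3))  # 0.667
```

In the usage example the participants keep their rank order perfectly, but the constant Day 2 offset lowers the absolute-agreement ICC to 2/3; a consistency-type ICC(3,1) would give 1.0 for the same data. The formula needs a complete matrix, so participants excluded on either day would have to be dropped before computing it.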

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Jochumsen, M.; Knoche, H.; Kjaer, T.W.; Dinesen, B.; Kidmose, P. EEG Headset Evaluation for Detection of Single-Trial Movement Intention for Brain-Computer Interfaces. Sensors 2020, 20, 2804. https://doi.org/10.3390/s20102804
