Recognition of Human Activities Using Depth Maps and the Viewpoint Feature Histogram Descriptor

Abstract

:1. Introduction

2. Related Work and Contribution

- Proposition of an approach for recognition of activities with using sequences of point clouds and the VFH descriptor.

- Verification of the method on two representative, large datasets using k-NN and BiLSTM classifiers.

- Reduction of classification time for k-NN by introducing a two-tier model.

- Improvement of BiLSTM-based classification via transfer learning and combining multiple networks by fuzzy integrals.

3. Viewpoint Feature Histogram (VFH)

4. Classification

4.1. DTW

4.2. BiLSTM

5. Datasets

5.1. UTD Multimodal HUMAN Action Dataset

5.2. MSR-Action 3D Dataset

- Action Set 1 (AS1): horizontal arm wave, hammer, forward punch, high throw, hand clap, bend, tennis serve, pickup & throw.

- Action Set 2 (AS2): high arm wave, hand catch, draw X, draw tick, draw circle, two hand wave, forward kick. side boxing.

- Action Set 3 (AS3): high throw, forward kick, side kick, jogging, tennis swing, tennis serve, golf swing, pickup & throw.

6. Activity Recognition System

- (1)

- Segmentation of the human figure;

- (2)

- Conversion of the depth map of the segmented human figure to the point cloud and downsampling the point cloud;

- (3)

- Building the smallest possible axis aligned cuboid that entirely embraces the point cloud of the segmented human figure (bounding box);

- (4)

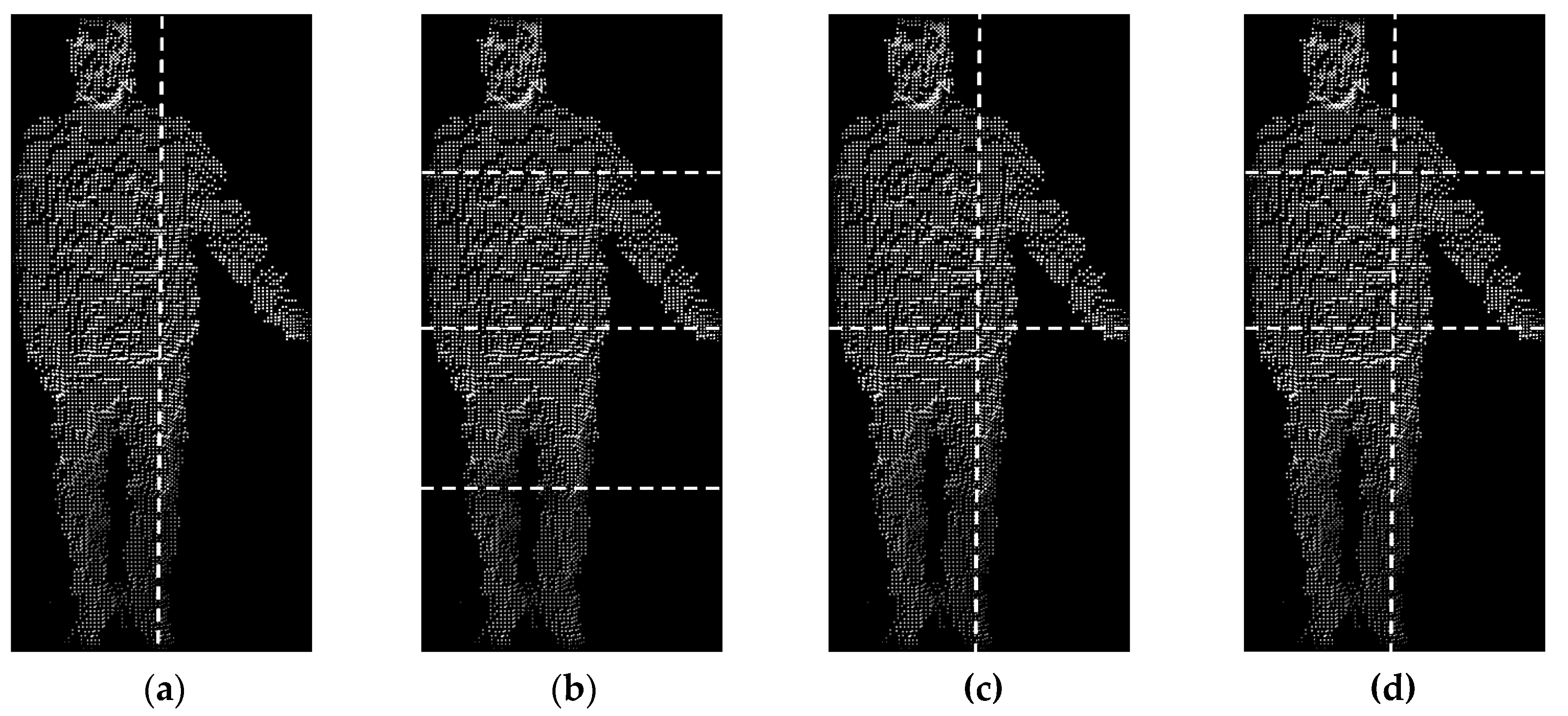

- Dividing the bounding box into several cuboidal cells to increase the distinctiveness of features to be determined in the next steps;

- (5)

- Computing the VFH descriptors for each cell and representing the histograms by their mean values m(.) and standard deviations s(.);

- (6)

- Concatenation of the obtained m(.) and s(.) values into a feature vector.

7. Experiments

7.1. Activity Recognition Using DTW

- Step 1:

- The number k1 of the nearest neighbors of the classified activity is determined in a reduced training set composed of representatives of all activities.

- Step 2:

- For a given number k = k2, the answer of the k-NN classifier is determined based on the training set containing all the realizations, i.e., before reduction, of the activities identified among k1 neighbors determined in step 1.

7.2. Activity Recognition Using the BiLSTM Network

7.3. The use of BiLSTM Networks Fusion Using the Fuzzy Integral Method

- The degree of importance of the classifier was determined as with denoting the recognition rate of the classifier i in the LOSO test.

- The fuzzy integral factor , ϵ (−1, +∞) was determined on the basis of the parameter from the quadratic equation:

- Assuming that the output of the classifier corresponding to a class is and where , specifies the classifier number, a class resulting from the fusion of three classifiers was determined based on the relations:

8. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

References

- Warchoł, D.; Kapuściński, T.; Wysocki, M. Recognition of Fingerspelling Sequences in Polish Sign Language Using Point Clouds Obtained from Depth Images. Sensors 2019, 19, 1078. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Xu, K.; Shi, Y.; Zheng, L.; Zhang, J.; Liu, M.; Huang, H.; Su, H.; Cohen-Or, D.; Chen, B. 3D attention-driven depth acquisition for object identification. ACM Trans. Graph. (TOG) 2016, 35, 1–14. [Google Scholar] [CrossRef]

- Michel, D.; Panagiotakis, C.; Argyros, A.A. Tracking the articulated motion of the human body with two RGBD camera. Mach. Vis. Appl. 2014, 26, 41–54. [Google Scholar] [CrossRef]

- Kapuściński, T.; Oszust, M.; Wysocki, M.; Warchoł, D. Recognition of Hand Gestures Observed by Depth Cameras. Int. J. Adv. Robot. Syst. 2015, 12, 36. [Google Scholar] [CrossRef]

- Junsong, Y.; Zicheng, L.; Ying, W. Discriminative Subvolume Search for Efficient Action Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2009), Miami, FL, USA, 22–24 June 2009. [Google Scholar]

- Chen, C.; Jafari, R.; Kehtarnavaz, N. A Real-Time Human Action Recognition System Using Depth and Inertial Sensor Fusion. IEEE Sens. J. 2016, 16, 773–781. [Google Scholar] [CrossRef]

- Point Cloud Library (PCL). Available online: http://pointclouds.org (accessed on 14 March 2020).

- Chen, C.; Jafari, R.; Kehtarnavaz, N. UTD-MHAD: A Multimodal Dataset for Human Action Recognition Utilizing a Depth Camera and a Wearable Inertial Sensor. In Proceedings of the IEEE International Conference on Image Processing, Quebec City, QC, Canada, 27–30 September 2015. [Google Scholar]

- Chen, C.; Jafari, R.; Kehtarnavaz, N. UTD Multimodal Human Action Dataset (UTD-MHAD). Available online: http://www.utdallas.edu/~kehtar/UTD-MHAD.html (accessed on 14 March 2020).

- Wang, J. MSR Action 3D. Available online: http://users.eecs.northwestern.edu/~jwa368/my_data.html (accessed on 14 March 2020).

- Wanqing, L.; Zhengyou, Z.; Zicheng, L. Action Recognition Based on A Bag of 3D Points. In Proceedings of the IEEE International Workshop on CVPR for Human Communicative Behavior Analysis (in conjunction with CVPR2010), San Francisco, CA, USA, 13–18 June 2010. [Google Scholar]

- Vieira, A.; Nascimento, E.; Oliveira, G.; Liu, Z.; Campos, M. Stop: Space-time occupancy patterns for 3d action recognition from depth map sequences. In Proceedings of the Progress in Pattern Recognition, Image Analysis, Computer Vision, and Applications, Buenos Aires, Argentina, 3–6 September 2012; Volume 7441, pp. 52–59. [Google Scholar]

- Wang, J.; Liu, Z.; Chorowski, J.; Chen, Z.; Wu, Y. Robust 3d action recognition with random occupancy patterns. In Proceedings of the European Conference on Computer Vision (ECCV), Florence, Italy, 7–13 October 2012; pp. 872–885. [Google Scholar]

- Yang, X.; Zhang, C.; Tina, Y. Recognizing actions using depth motion maps based histograms of oriented gradients. In Proceedings of the International Conference on Multimedia, Nara, Japan, 29 October–2 November 2012. [Google Scholar]

- Chen, C.; Liu, K.; Kehtarnavaz, N. Real-time human action recognition based depth motion maps. J. Real-Time Image Process. 2013, 12, 155–163. [Google Scholar] [CrossRef]

- Oreifej, O.; Liu, Z. Hon4d: Histogram of oriented 4d normals for activity recognition from depth sequences. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Portland, OR, USA, 23–28 June 2013; pp. 716–723. [Google Scholar]

- Kim, D.; Yun, W.-H.; Yoon, H.-S.; Kim, J. Action recognition with depth maps using HOG descriptors of multi-view motion appearance and history. In Proceedings of the Eighth International Conference on Mobile Ubiquitous Computing, Systems, Services and Technologies, Rome, Italy, 24–28 August 2014; pp. 126–130. [Google Scholar]

- Wang, P.; Li, W.; Li, C.; Hou, Y. Action Recognition Based on Joint Trajectory Maps with Convolutional Neural Networks. IEEE Trans. Cybern. 2016, 158, 43–53. [Google Scholar]

- Kamel, A.; Sheng, B.; Yang, P.; Li, P. Deep Convolutional Neural Networks for Human Action Recognition Using Depth Maps and Postures. IEEE Trans. Syst. Man Cybern. Syst. 2018, 49, 1806–1819. [Google Scholar] [CrossRef] [Green Version]

- Hou, Y.; Li, Z.; Wang, P.; Li, W. Skeleton Optical Spectra-Based Action Recognition Using Convolutional Neural Networks. IEEE Trans. Circuits Syst. Video Technol. 2018, 28, 807–811. [Google Scholar] [CrossRef]

- Wang, J.; Liu, Z.; Wu, Y.; Yuan, J. Mining action let ensemble for action recognition with depth cameras. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 1290–1297. [Google Scholar]

- Yang, X.; Tian, Y.L. EigenJoints-based action recognition using Naïve–Bayes-Nearest-Neighbor. In Proceedings of the 2012 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Providence, RI, USA, 16–21 June 2012; pp. 14–19. [Google Scholar]

- Luo, J.; Wang, W.; Qi, H. Group sparsity and geometry constrained dictionary learning for action recognition from depth maps. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Sydney, Australia, 1–8 December 2013; pp. 1809–1816. [Google Scholar]

- Pugeault, N. ASL Finger Spelling Dataset. Available online: http://empslocal.ex.ac.uk/people/staff/np331/index.php?section=FingerSpellingDataset (accessed on 6 May 2020).

- Rusu, R.B.; Bradski, G.; Thibaux, R. Fast 3D recognition and pose using the Viewpoint Feature Histogram, Intelligent Robots and Systems (IROS). In Proceedings of the 2010 IEEE/RSJ International Conference on Intelligent Robots and Systems, Taipei, Taiwan, 18–22 October 2010; pp. 2155–2162. [Google Scholar]

- Rusu, R.B.; Blodow, N.; Beetz, M. Fast point feature histograms (FPFH) for 3D registration, Robotics and Automation. In Proceedings of the 2009 IEEE International Conference on Robotics and Automation, Kobe, Japan, 12–17 May 2009; pp. 3212–3217. [Google Scholar]

- Müller, M. Dynamic Time Warping. In Information Retrieval for Music and Motion; Springer: Berlin/Heidelberg, Germany, 2007; pp. 69–84. [Google Scholar]

- Hochreiter, S.; Schmidhuber, J. Lstm can solve hard long time lag problems. In Proceedings of the Advances in Neural Information Processing Systems, Denver, CO, USA, 2–5 December 1996; pp. 473–479. [Google Scholar]

- Long Short-Term Memory Networks. Available online: https://www.mathworks.com/help/deeplearning/ug/long-short-term-memory-networks.html (accessed on 14 March 2020).

- Graves, A.; Schmidhuber, J. Framewise phoneme classification with bidirectional LSTM and other neural network architectures. Neural Netw. 2005, 18, 602–610. [Google Scholar] [CrossRef] [PubMed]

- Li, W.; Zhang, Z.; Liu, Z. Action Recognition Based on A Bag of 3D Points. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition—Workshops, San Francisco, CA, USA, 13–18 June 2010; pp. 914–920. [Google Scholar]

- Madany, N.E.D.E.; He, Y.; Guan, L. Human action recognition via multiview discriminative analysis of canonical correlations. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 4170–4174. [Google Scholar]

- Xu, L.; Krzyżak, A.; Suen, C.Y. Methods of combining multiple classifiers and their applications to hand writing recognition. IEEE Trans. SMC 1992, 22, 418–435. [Google Scholar]

- Cho, S.-B.; Kim, J.H. Combining multiple neural networks by fuzzy integral for robust classification. IEEE Trans. SMC 1995, 25, 380–384. [Google Scholar]

- Cho, S.-B.; Kim, J.H. Multiple network fusion using fuzzy logic. IEEE Trans. Neural Netw. 1995, 6, 497–501. [Google Scholar] [PubMed]

- Tahani, H.; Keller, J.M. Information fusion in computer vision using the fuzzy integral. IEEE Trans. SMC 1990, 20, 733–741. [Google Scholar] [CrossRef]

- Gou, J.; Ma, H.; Ou, W.; Zheng, S.; Rao, Y. A generalized mean distance-based k-nearest neighbor classifier. Expert Syst. Appl. 2019, 115, 356–372. [Google Scholar] [CrossRef]

- Gou, J.; Wang, L.; Hou, B.; Lv, J.; Yuan, Y.; Mao, Q. Two-phase probabilistic collaborative representation-based classification. Expert Syst. Appl. 2019, 133, 9–20. [Google Scholar] [CrossRef]

- Gou, J.; Wanga, L.; Yi, Z.; Yuan, Y.; Ou, W.; Maoa, O. Weighted discriminative collaborative competitive representation for robust image classification. Neural Netw. 2020, 125, 104–120. [Google Scholar] [CrossRef] [PubMed]

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation. In Proceedings of the IEEE Conf. on Computer Vision and Pattern Recognition CVPR 2017, Honolulu, HI, USA, 22–25 July 2017; pp. 77–85. [Google Scholar]

| Work | Method | Classifier | Dataset | Efficiency [%] |

|---|---|---|---|---|

| Wanging et al. [11] | Action graph: models the dynamics; its nodes (bag of 3D points) represent salient postures. | bi-gram with maximum likelihood decoding (BMLD) | MSR Action 3D * | AS1:72.9 AS2:71.9 AS3:79.2 |

| Vieira et al. [12] | Space–Time Occupancy Patterns: space and time axes divided into segments define a 4D grid for each depth map sequence. | SVM | MSR Action 3D * | AS1:84.7 AS2:81.3 AS3:88.4 |

| Wang et al. [13] | Random Occupancy Patterns: a sampling scheme that effectively explores very large sampling spaces; the features robustly encoded by sparse coding. | SVM | MSR Action 3D ** Gesture3D | 86.2 88.5 |

| Yang et al. [14] | Depth Motion Maps: accumulate sequences of depths and histograms of oriented gradients. | SVM | MSR Action 3D * | AS1:96.2 AS2: 84.1 AS3: 94.6 |

| Chen et al. [6,15] | Depth Motion Maps: accumulate sequences of depths and histograms of oriented gradients. | Collaborative Representation classifier | MSR Action 3D * | AS1: 96.2 AS2: 83.2 AS3: 92 |

| Oreifej et al. [16] | Histogram of Oriented 4D Normals: represents the distribution of the surface normal orientation in the space of time, depth, and spatial coordinates. | SVM | MSR Action 3D ** | 88.89 |

| MSR Hand Gesture | 92.45 | |||

| 3D Action Pairs | 96.67 | |||

| Kim et al. [17] | Depth Motion Appearance, Depth Motion History: local appearances and shapes are represented by histogram of oriented gradients. | SVM | MSR Action 3D * | 90.45 |

| Wanget al. [18] | Joint Trajectory Maps: the skeleton trajectory is projected to the three Cartesian planes and processed by three respective CNNs. | CNN | MSRC-12 Kinect Gesture * | 93.12 |

| G3D Dataset * | 94.24 | |||

| UTD-MHAD * | 85.81 | |||

| Kamel et al. [19] | Depth Motion Image: accumulates depth maps, Moving Joints Descriptor: represents motion of body joints; three CNN channels: DMI, (DMI+MJD), MJD. | CNN | MSR Action 3D * UTD-MHAD * MAD * | 94.51 88.14 91.86 |

| Hou et al. [20] | Skeleton Optical Spectra: encode the spatiotemporal information of a skeleton sequence into color texture images; desirable features are learned by three CNNs – for front, side, and top view. | CNN | MSR Action 3D * UTD-MHAD * MAD * | 94.51 88.14 91.86 |

| Wang et al. [21] | 3D Skeletons: a joint descriptor (joint position, local space around it), Fourier Temporal Pyramid as a joint motion representation. | SVM | MSR Action 3D * MSRDailyActivity3D* CMU MoCap* | 88.2 85.75 98.13 |

| Yang, Tian, [22] | EigenJoints: features based on position differences of joints, combine static posture, motion, and offset. | Naïve-Bayes- Nearest-Neighbor Classifier | MSR Action3D * | AS1:74.5 AS2: 76.1 AS3: 96.4 |

| Luo et al. [23] | Temporal Pyramid Matching: representation of temporal information in depth sequences; discriminative dictionary learning for sparse coding of the 3D joint features. | SVM | MSR Action3D ** | 96.7 |

| MSR DailyActivity 3D * | AS1: 97.2 AS2: 95.5 AS3: 99.1 |

| Number of the Nearest Neighbors | Recognition Rate [%] | ||||

|---|---|---|---|---|---|

| Without Division | Vertical Division into 2 Cells | Cross Division into 4 Cells | Horizontal Division into 4 Cells | Division into 6 Cells | |

| k = 1 | 74.78 | 80.24 | 82.34 | 85.48 | 86.37 |

| k = 2 | 72.80 | 81.52 | 81.99 | 83.37 | 84.20 |

| k = 3 | 75.59 | 81.40 | 82.10 | 85.59 | 86.63 |

| k = 4 | 76.99 | 80.82 | 84.19 | 86.75 | 87.56 |

| k = 5 | 78.96 | 80.83 | 83.97 | 87.10 | 88.15 |

| k = 6 | 79.43 | 83.26 | 83.96 | 87.42 | 88.03 |

| k = 7 | 79.08 | 82.91 | 83.27 | 87.55 | 86.98 |

| k = 8 | 78.50 | 83.02 | 83.15 | 88.49 | 86.64 |

| k = 9 | 79.77 | 83.37 | 85.01 | 87.67 | 87.57 |

| k =10 | 79.43 | 83.60 | 84.54 | 87.90 | 88.58 |

| Number of the Nearest Neighbors | Recognition Rate [%] | ||||

|---|---|---|---|---|---|

| Without Division | Vertical Division into 2 Cells | Cross Division into 4 Cells | Horizontal Division into 4 Cells | Division into 6 Cells | |

| k = 1 | 59.56 | 69.66 | 69.75 | 69.20 | 77.09 |

| k = 2 | 52.55 | 67.89 | 68.68 | 64.66 | 75.42 |

| k = 3 | 58.07 | 73.89 | 73.63 | 69.91 | 79.78 |

| k = 4 | 60.41 | 71.56 | 73.15 | 70.28 | 81.30 |

| k = 5 | 59.93 | 72.30 | 73.90 | 71.16 | 81.19 |

| k = 6 | 61.21 | 74.96 | 73.15 | 69.21 | 80.51 |

| k = 7 | 63.53 | 74.73 | 73.60 | 71.20 | 81.05 |

| k = 8 | 63.54 | 75.49 | 73.61 | 70.40 | 80.08 |

| k = 9 | 62.87 | 75.68 | 74.90 | 70.85 | 80.29 |

| k =10 | 64.62 | 77.09 | 74.62 | 71.92 | 79.43 |

| Method | Recognition Rate [%] |

|---|---|

| Wang et al. [18] | 85.81 |

| Hou et al. [20] | 86.97 |

| Kamel et al. [19] | 88.14 |

| Our work | 88.58 |

| Method | Recognition Rate [%] |

|---|---|

| Chen et. al. [6] | 85.10 |

| Mandany et. al. [32] | 93.26 |

| Our work | 99.30 |

| Data Set | Chen et al. [15] | Proposed Method | ||||

|---|---|---|---|---|---|---|

| Test A | Test B | Test C | Test A | Test B | Test C | |

| AS1 | 97.3 | 98.6 | 96.2 | 100 | 95.3 | 87.8 |

| AS2 | 96.1 | 98.7 | 83.2 | 94.9 | 93.5 | 86.2 |

| AS3 | 98.7 | 100 | 92 | 100 | 94.7 | 90.5 |

| Average | 97.4 | 99.1 | 90.5 | 98.3 | 94.5 | 88.1 |

| Division of the Bounding Box | Average Classification Time [ms] | |

|---|---|---|

| UTD-MHAD | MSR-Action 3D | |

| Without division | 81.2 | 38.8 |

| Vertical division into 2 cells | 86.0 | 41.3 |

| Cross division into 4 cells | 95.5 | 52.5 |

| Horizontal division into 4 cells | 97.1 | 53.2 |

| Division into 6 cells | 112.9 | 57.4 |

| Number of the Nearest Neighbors | Recognition Rate [%] | ||

|---|---|---|---|

| All Features (Based on ) | V1: Features Based on | V2: Features Based on | |

| k = 1 | 86.37 | 86.28 | 85.59 |

| k = 2 | 84.20 | 84.20 | 85.13 |

| k = 3 | 86.63 | 87.80 | 85.71 |

| k = 4 | 87.56 | 87.45 | 86.52 |

| k = 5 | 88.15 | 87.92 | 87.23 |

| k = 6 | 88.03 | 87.68 | 87.34 |

| k = 7 | 86.98 | 87.46 | 87.45 |

| k = 8 | 86.64 | 88.73 | 88.39 |

| k = 9 | 87.57 | 87.92 | 88.50 |

| k = 10 | 88.58 | 87.92 | 87.92 |

| Number of the Nearest Neighbors | Recognition Rate [%] | ||

|---|---|---|---|

| All Features (Based on ) | V1: Features Based on | V2: Features Based on | |

| k = 1 | 77.09 | 80.21 | 79.03 |

| k = 2 | 75.42 | 75.40 | 75.66 |

| k = 3 | 79.78 | 78.84 | 80.97 |

| k = 4 | 81.30 | 79.96 | 80.56 |

| k = 5 | 81.19 | 79.79 | 81.45 |

| k = 6 | 80.51 | 79.65 | 82.89 |

| k = 7 | 81.05 | 79.87 | 82.10 |

| k = 8 | 80.08 | 80.13 | 80.45 |

| k = 9 | 80.29 | 81.77 | 82.09 |

| k =10 | 79.43 | 72.46 | 82.62 |

| Number of the Nearest Neighbors k2 | Recognition Rate [%] | |||||

|---|---|---|---|---|---|---|

| k1 = 5 | k1 = 10 | |||||

| All Features | V1 | V2 | All Features | V1 | V2 | |

| 1 | 85.94 | 86.28 | 86.29 | 85.24 | 86.40 | 85.59 |

| 2 | 84.90 | 85.36 | 85.71 | 84.43 | 85.24 | 85.12 |

| 3 | 86.63 | 87.80 | 85.71 | 86.51 | 87.21 | 85.24 |

| 4 | 86.87 | 87.46 | 87.22 | 87.10 | 87.33 | 85.71 |

| 5 | 87.91 | 88.38 | 87.46 | 87.45 | 88.04 | 86.18 |

| 6 | 87.45 | 87.46 | 87.57 | 87.56 | 87.57 | 86.64 |

| 7 | 86.64 | 88.03 | 86.76 | 86.52 | 86.53 | 86.06 |

| 8 | 85.59 | 87.33 | 87.11 | 86.87 | 87.57 | 86.76 |

| 9 | 86.98 | 86.76 | 87.69 | 86.99 | 86.87 | 86.99 |

| 10 | 86.87 | 87.45 | 86.99 | 86.98 | 86.41 | 86.87 |

| Number of the Nearest Neighbors k2 | Recognition Rate [%], k1 = 5 | ||

|---|---|---|---|

| All Features | V1 | V2 | |

| 1 | 83.12 | 83.07 | 83.05 |

| 2 | 83.86 | 80.86 | 81.18 |

| 3 | 85.23 | 83.94 | 83.19 |

| 4 | 84.64 | 84.32 | 82.05 |

| 5 | 84.82 | 85.06 | 83.45 |

| 6 | 84.55 | 84.50 | 83.45 |

| 7 | 84.55 | 84.28 | 83.83 |

| 8 | 83.74 | 84.66 | 83.78 |

| 9 | 84.32 | 85.00 | 83.18 |

| 10 | 83.95 | 84.87 | 83.45 |

| AS1 | AS2 | AS3 | ||||||

|---|---|---|---|---|---|---|---|---|

| All Features | V1 | V2 | All Features | V1 | V2 | All Features | V1 | V2 |

| 84.4 | 87.0 | 88.2 | 82.6 | 82.5 | 80.9 | 91.7 | 89.6 | 86.0 |

| Variants of the Features | Average Classification Time Using Representatives [ms] | ||

|---|---|---|---|

| UTD-MHAD | MSR Action 3D | ||

| k1 = 5 | k1 = 10 | k1 = 5 | |

| All features | 46.2 | 59.1 | 22.5 |

| V1 | 40.9 | 53.3 | 16.9 |

| V2 | 37.9 | 49 | 11.3 |

| Training 1 (Random Starting Weights) | Training 2 (Starting Weights Based on Training 1) | Training 3 (Starting Weights Based on Training 2) | |

|---|---|---|---|

| All the features | 80.70 | 82.68 | 82.45 |

| Variant V1 | 80.24 | 83.14 | 84.48 |

| Variant V2 | 81.98 | 83.27 | 82.33 |

| First Training | Second Training | Third Training |

|---|---|---|

| AS1 (random starting weights) | AS1 (starting weights taken from the first training on AS3) | AS1 (starting weights taken from the second training on AS3) |

| 83.60/83.58/88.22 | 85.19/87.31/86.59 | 85.72/86.89/87.73 |

| AS2 (starting weights taken from the first training on AS1) | AS2 (starting weights taken from the second training on set AS1) | AS2 (starting weights taken from the third training on AS1) |

| 83.43/83.24/85.63 | 81.16/82.43/82.41 | 84.11/82.01/8455 |

| AS3 (starting weights taken from the first training on AS2) | AS3 (starting weights taken from the second training on AS2) | AS3 (starting weights taken from the third training on AS2) |

| 87.64 /87.24/86.27 | 86.90/87.51/84.62 | 88.03/89.34/87.14 |

| Title 1 | k-NN | BiLSTM | BiLSTM + Fuzzy Integral | ||||||

|---|---|---|---|---|---|---|---|---|---|

| All Features | V1 | V2 | All Features | V1 | V2 | All Features | V1 | V2 | |

| AS1 | 87.80 | 89.89 | 90.83 | 85.72 | 87.31 | 88.22 | 85.26 | 87.77 | 90.34 |

| AS2 | 86.23 | 85.85 | 84.40 | 84.11 | 83.24 | 85.63 | 87.48 | 85.36 | 86.23 |

| AS3 | 90.5 | 92.28 | 92.50 | 88.03 | 89.34 | 87.14 | 90.21 | 88.49 | 89.30 |

| UTD-MHAD | 88.58 | 88.73 | 88.50 | 82.68 | 83.48 | 83.27 | 84.89 | 84.77 | 84.77 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sidor, K.; Wysocki, M. Recognition of Human Activities Using Depth Maps and the Viewpoint Feature Histogram Descriptor. Sensors 2020, 20, 2940. https://doi.org/10.3390/s20102940

Sidor K, Wysocki M. Recognition of Human Activities Using Depth Maps and the Viewpoint Feature Histogram Descriptor. Sensors. 2020; 20(10):2940. https://doi.org/10.3390/s20102940

Chicago/Turabian StyleSidor, Kamil, and Marian Wysocki. 2020. "Recognition of Human Activities Using Depth Maps and the Viewpoint Feature Histogram Descriptor" Sensors 20, no. 10: 2940. https://doi.org/10.3390/s20102940