Quality Control and Pre-Analysis Treatment of the Environmental Datasets Collected by an Internet Operated Deep-Sea Crawler during Its Entire 7-Year Long Deployment (2009–2016) †

Abstract

1. Introduction

2. Materials and Methods

2.1. The Crawler and the Study Site

2.2. Data Collection, Quality Control, and Treatment

- Instrument Level tests (real-time) can indicate sensor failure or a loss of calibration.

- Regional Level tests (real-time) identify extreme values not associated with North East Pacific waters below 300 m depth, possibly due to sensor drift or biofouling.

- Station Level tests (real-time) further narrow the acceptable data range based on previous, adequate crawler data.

- Spike tests (delayed-mode) based on the result of Equation (1) not exceeding a variable-specific threshold, with V being the value of the tested variable at three consecutive time slots t1, t2, and t3:

  $$\left|V_{t_2} - \frac{V_{t_3} + V_{t_1}}{2}\right| - \left|\frac{V_{t_3} - V_{t_1}}{2}\right| \tag{1}$$

- Gradient tests (delayed-mode) based on the result of Equation (2) not exceeding a variable-specific threshold, with V being the value of the tested variable at three consecutive time slots t1, t2, and t3:

  $$\left|V_{t_2} - \frac{V_{t_3} + V_{t_1}}{2}\right| \tag{2}$$

- Stuck Value tests (delayed-mode) detect non-changing scalar values within a given time period.

- Absence of quality control (quality flag 0).

- Differential range and scale between distinct sensors and deployment periods for the same variable.

- Presence of underlying short- or long-term trends in values.

- Presence of non-realistic peaks and lows in values.
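The range, spike, gradient, and stuck-value tests listed above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the observatory's actual implementation: the function names, the sample values, and every threshold below are hypothetical, and NumPy is assumed to be available. The spike and gradient statistics follow Equations (1) and (2) directly.

```python
import numpy as np


def range_flags(v, lo, hi):
    """True where a value lies outside the accepted [lo, hi] range
    (the idea behind the instrument, regional, and station level tests)."""
    v = np.asarray(v, dtype=float)
    return (v < lo) | (v > hi)


def spike_statistic(v):
    """Equation (1) for every interior point; NaN at the two end points."""
    v = np.asarray(v, dtype=float)
    out = np.full(v.shape, np.nan)
    prev, mid, nxt = v[:-2], v[1:-1], v[2:]
    out[1:-1] = np.abs(mid - (nxt + prev) / 2) - np.abs((nxt - prev) / 2)
    return out


def gradient_statistic(v):
    """Equation (2) for every interior point; NaN at the two end points."""
    v = np.asarray(v, dtype=float)
    out = np.full(v.shape, np.nan)
    prev, mid, nxt = v[:-2], v[1:-1], v[2:]
    out[1:-1] = np.abs(mid - (nxt + prev) / 2)
    return out


def stuck_flags(v, window):
    """True where a value belongs to a run of at least `window`
    identical consecutive readings."""
    v = np.asarray(v, dtype=float)
    flags = np.zeros(v.shape, dtype=bool)
    run_start = 0
    for i in range(1, len(v) + 1):
        # Close the current run at the end of the series or when the value changes.
        if i == len(v) or v[i] != v[run_start]:
            if i - run_start >= window:
                flags[run_start:i] = True
            run_start = i
    return flags


# Hypothetical temperature readings (degrees C) and hypothetical thresholds.
temps = [3.1, 3.2, 9.0, 3.3, 3.3, 3.3, 3.3, 3.4]
spike = spike_statistic(temps)
spike_idx = np.where(spike > 1.0)[0]      # points failing the spike test
stuck = stuck_flags(temps, window=4)      # runs of 4 or more equal readings
out_of_range = range_flags(temps, lo=0.0, hi=8.0)
```

In a real workflow each test would use its own variable-specific threshold, and the resulting boolean masks would be mapped to the quality flags of the data archive rather than used to delete values outright.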

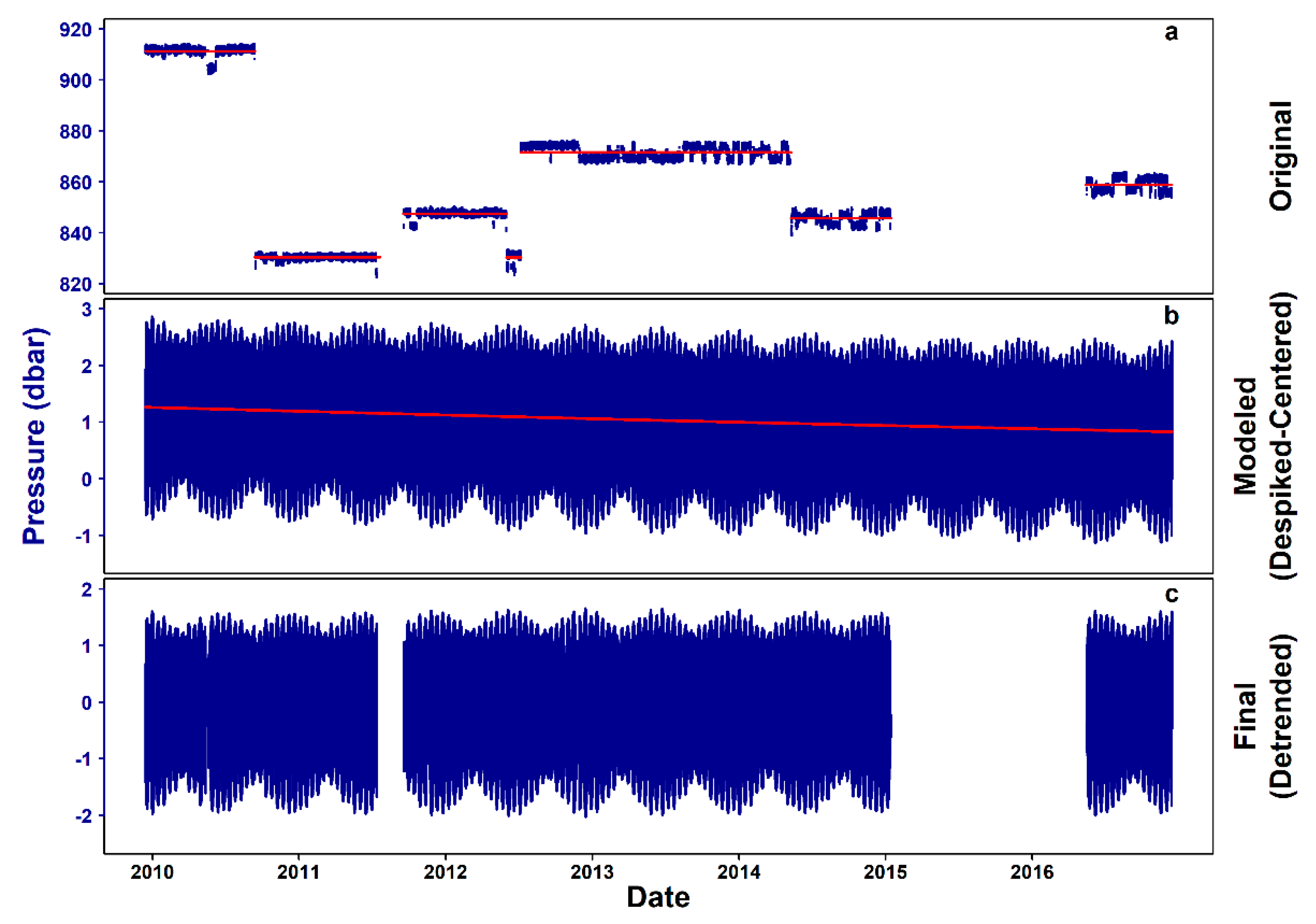

2.2.1. Pressure

2.2.2. Temperature

2.2.3. Conductivity and Salinity

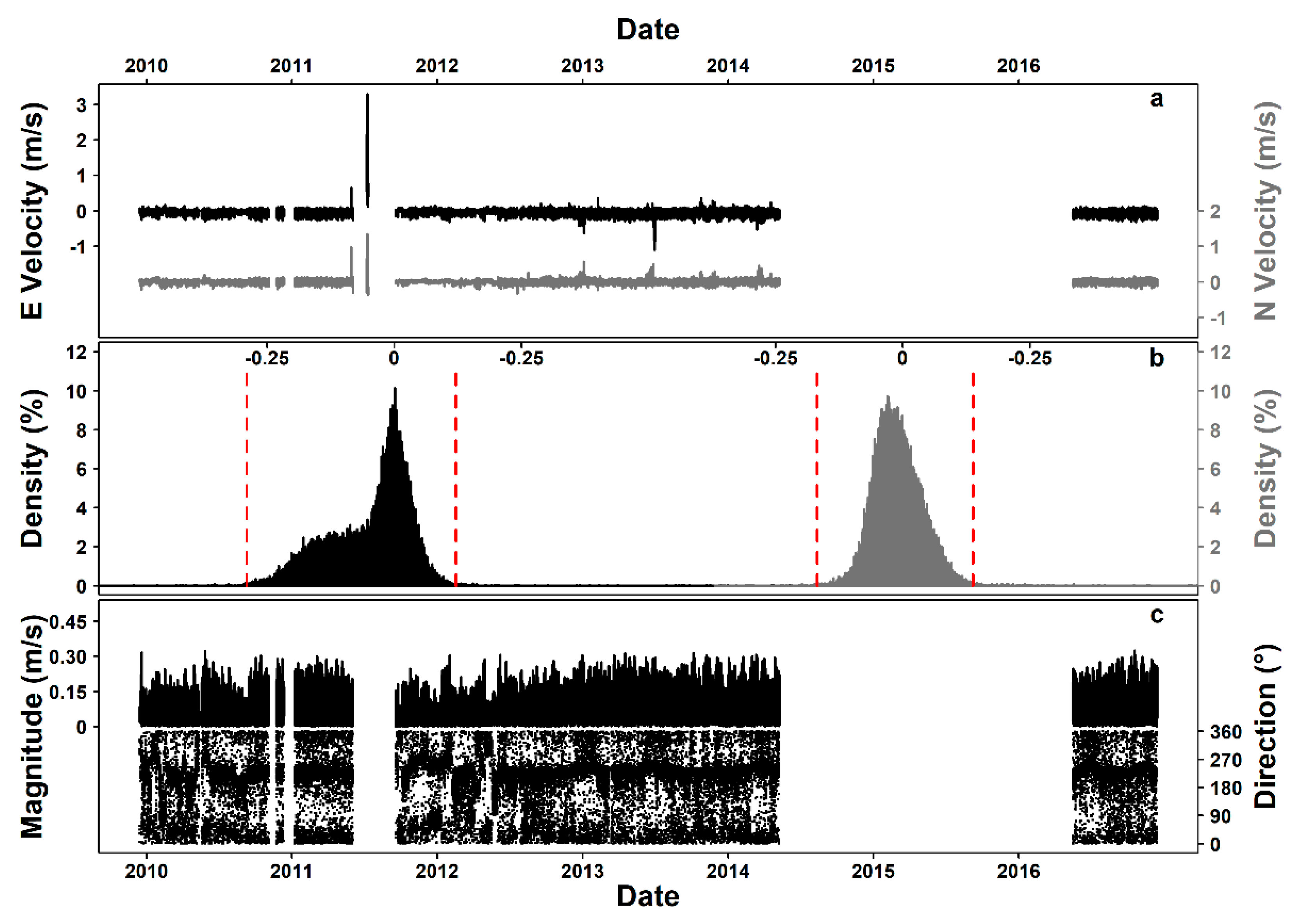

2.2.4. Flow

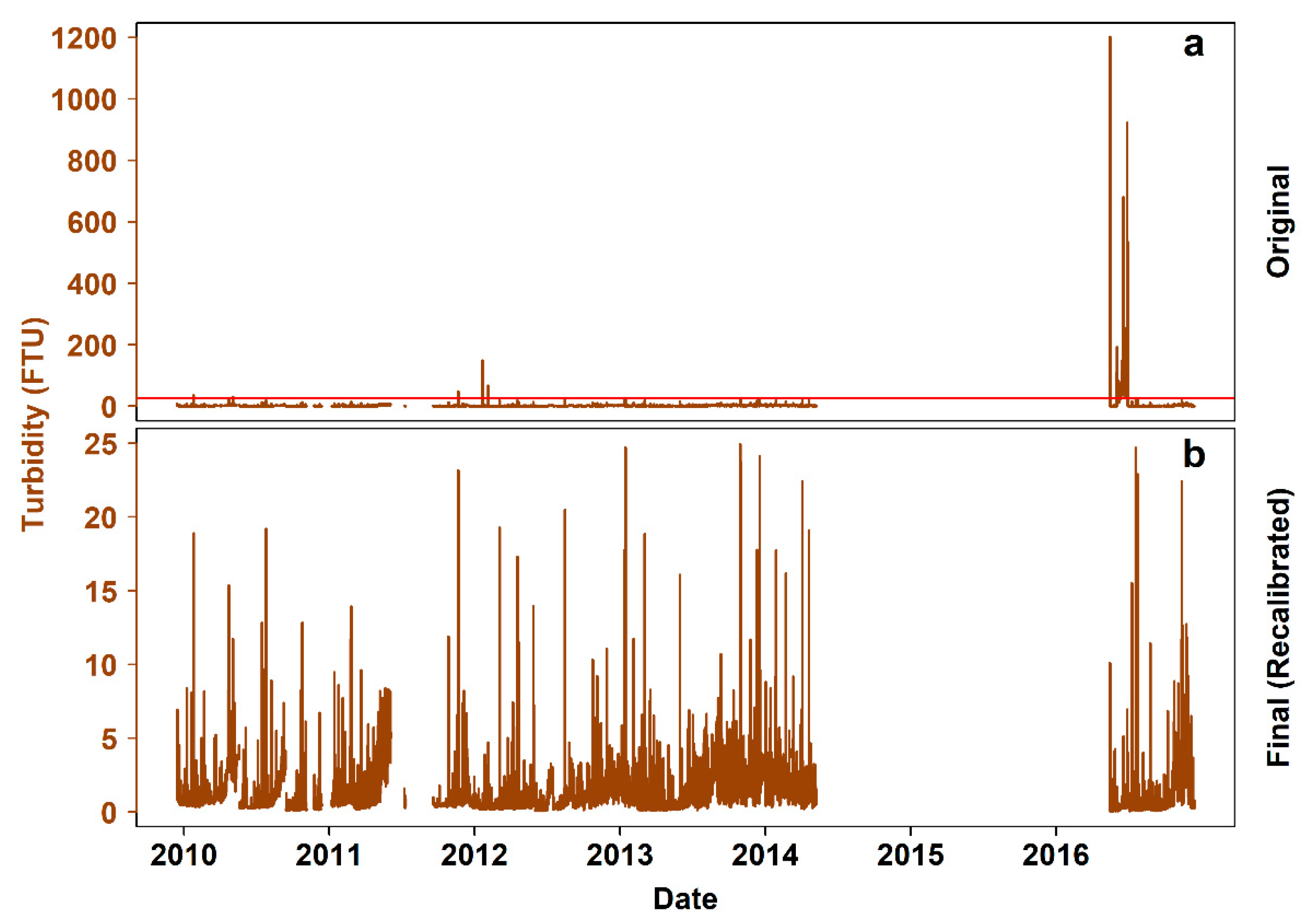

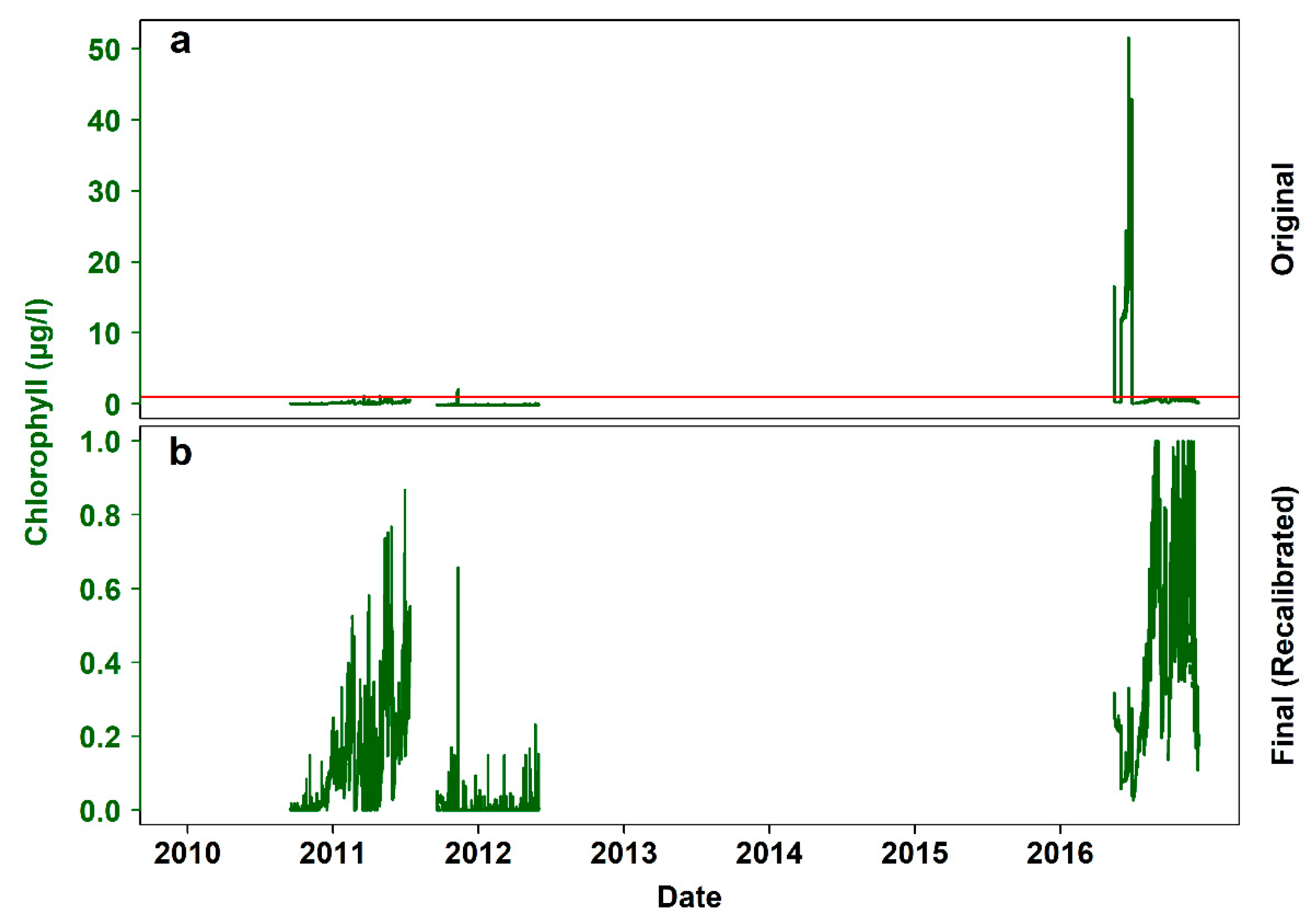

2.2.5. Turbidity and Chlorophyll

3. Results

3.1. Pressure

3.2. Temperature

3.3. Conductivity and Salinity

3.4. Flow

3.5. Turbidity and Chlorophyll

4. Discussion

4.1. General Remarks

4.2. Remarks on Individual Environmental Variables

4.3. Automated and Manual Data Quality Control and Validation

- Are subsets of the time series wrongly scaled?

- Are there implausible gradients in the time series?

- Do the time series contain implausible spikes?

- Are the time series compromised by unnatural noise?

- Is the variable characterized by marked periodicities or other patterns?

- Can the variable time series be modeled?

- Are the values of the variable positive by definition, or can they take any value in ℝ (i.e., the real numbers)?

4.4. Future Steps and Perspectives

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

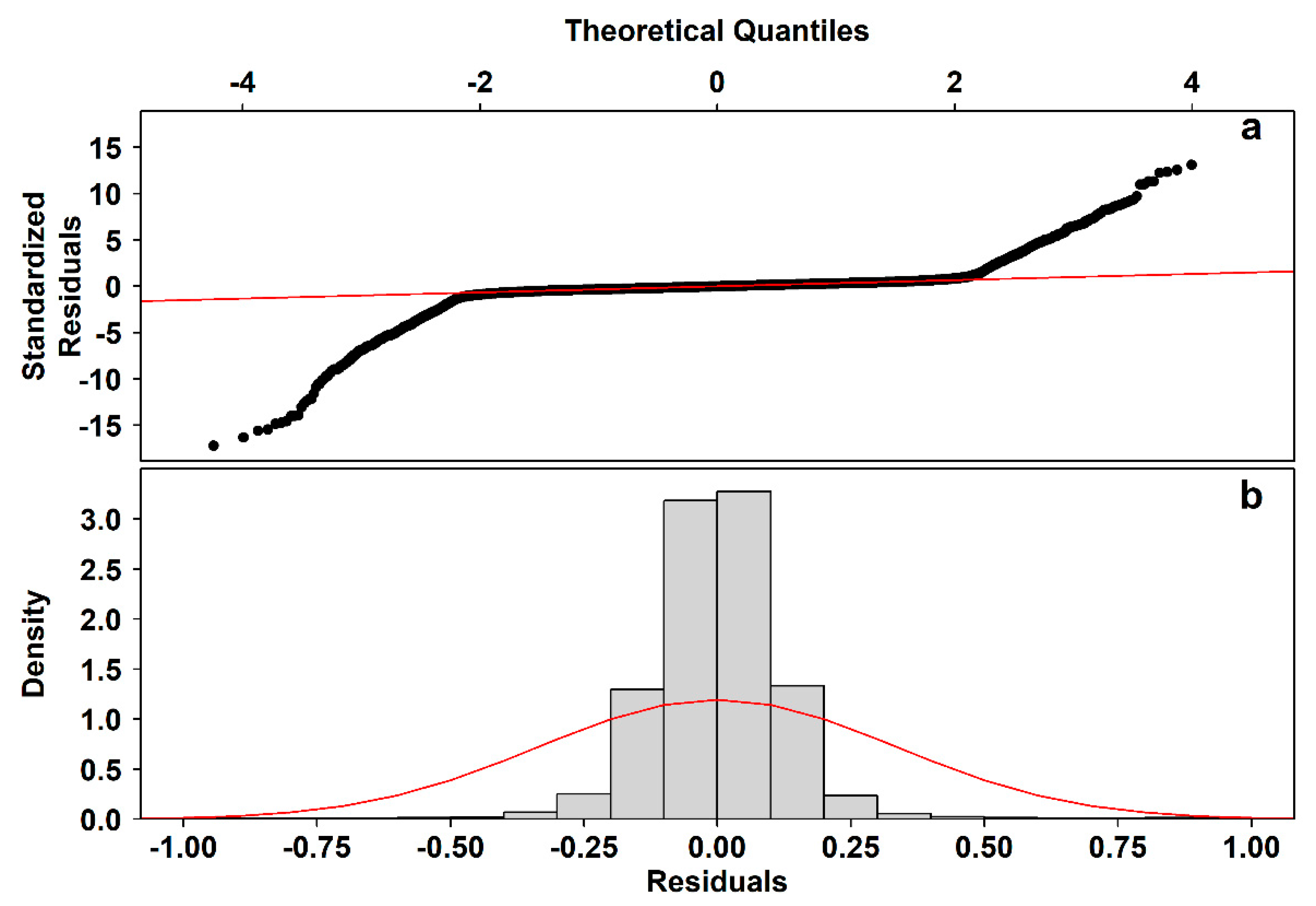

Appendix A.1. Tidal Model Residual Analysis

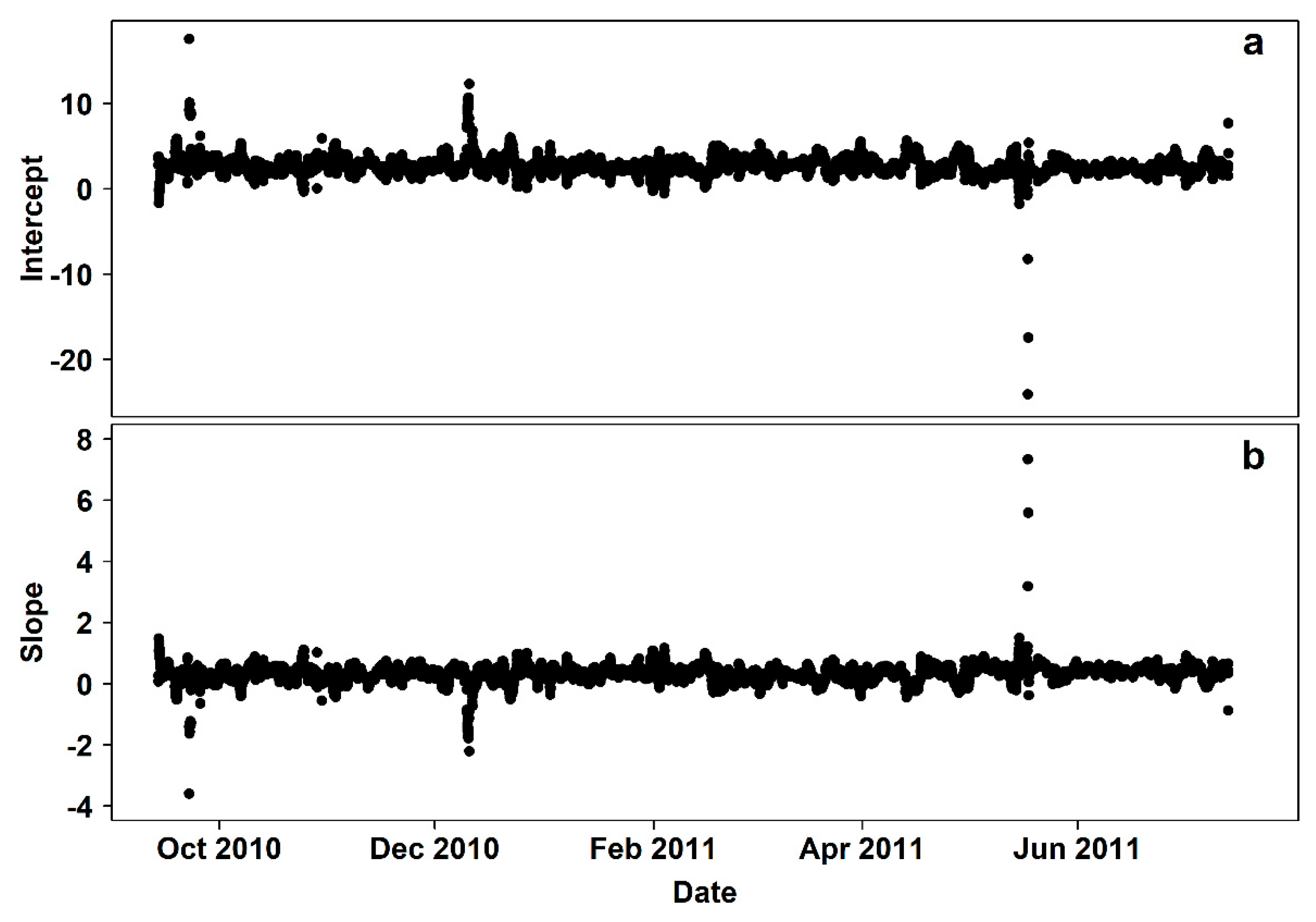

Appendix A.2. Hydrates—Mid-Canyon East Temperature Comparison

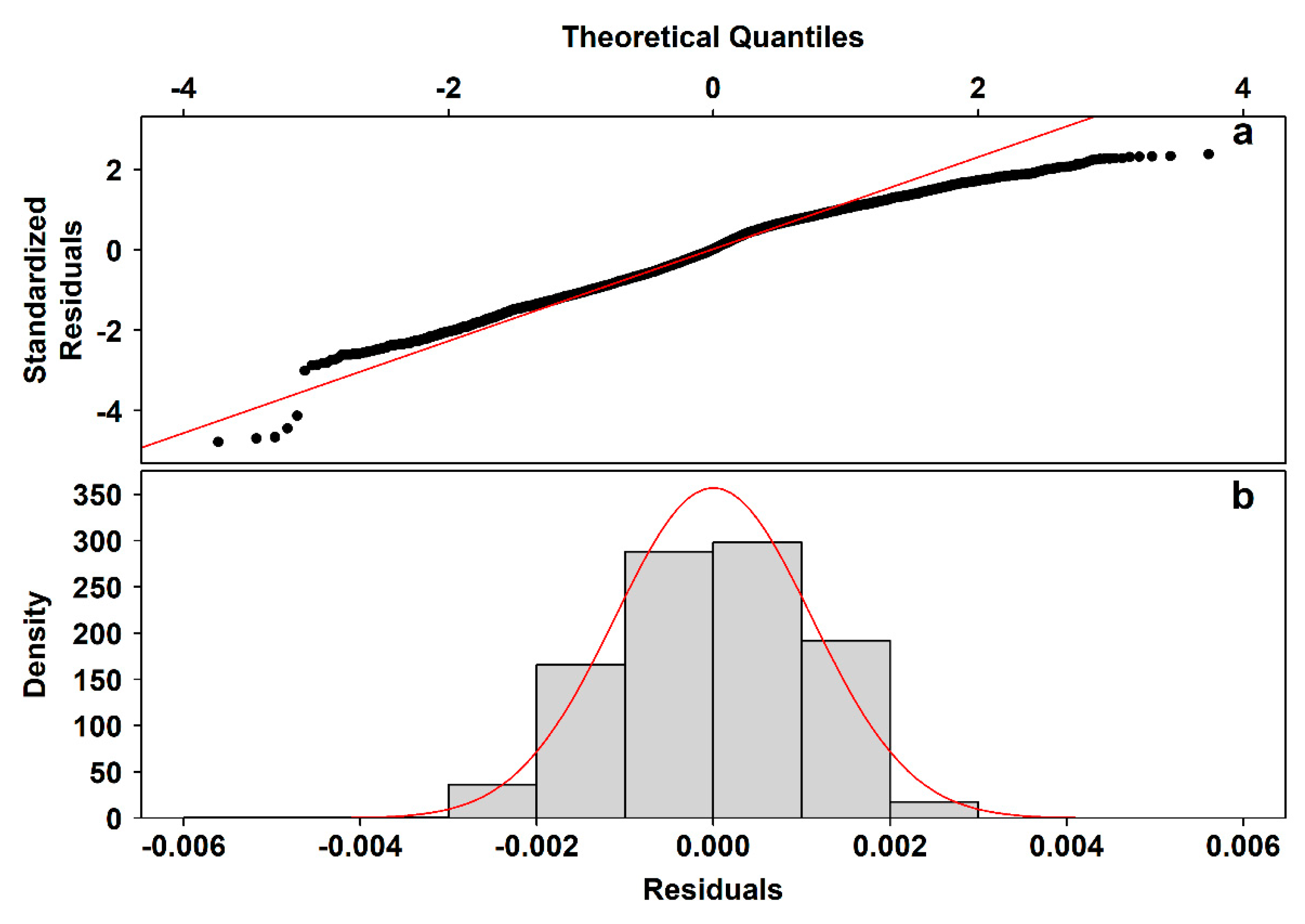

Appendix A.3. Conductivity—Temperature Model Residual Analysis

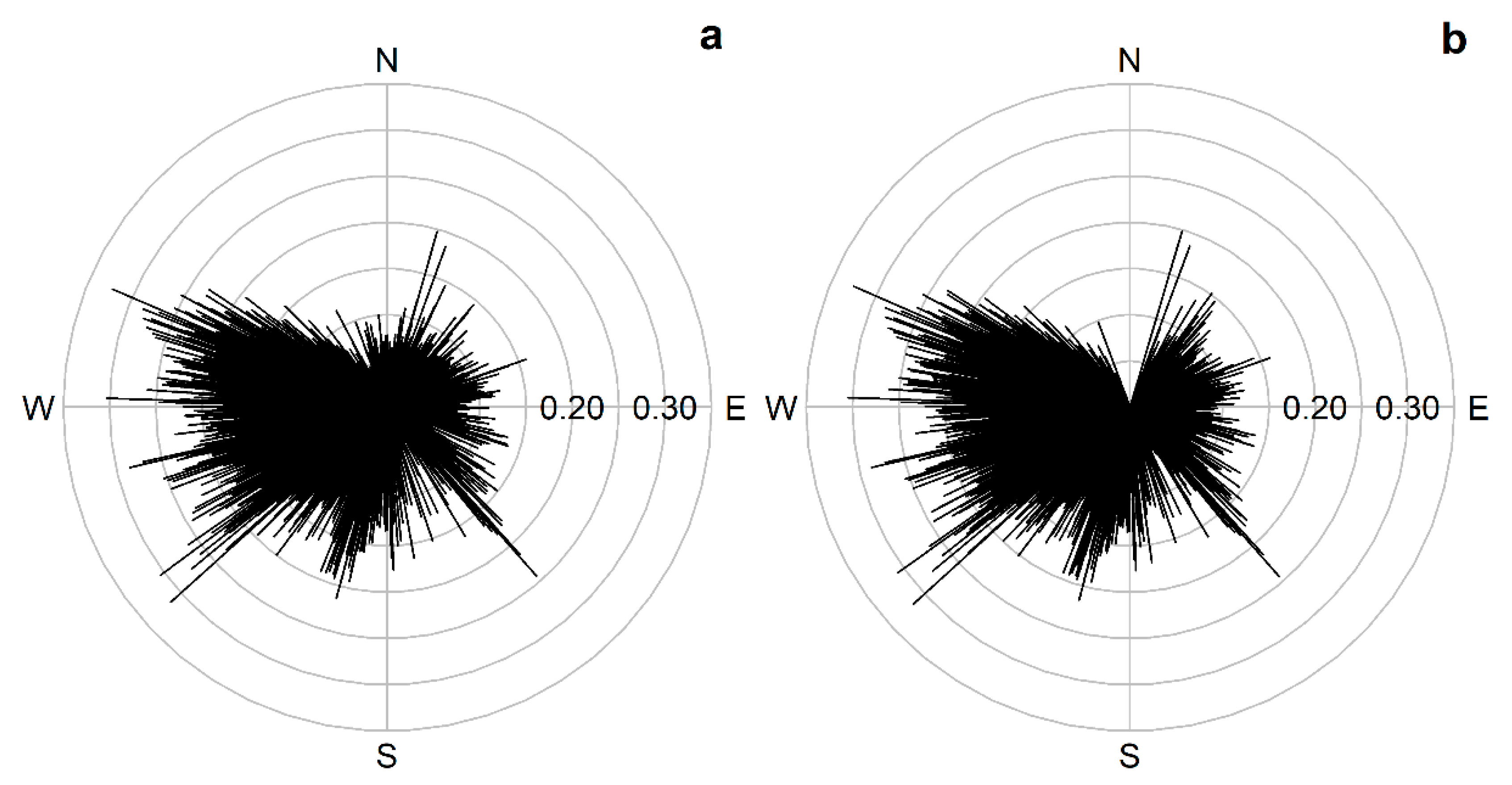

Appendix A.4. Current Meter Flow Component Comparison


| Type | Constituent | Period (h) |
|---|---|---|
| lunar diurnal | O1 | 25.82 |
| solar diurnal | P1 | 24.07 |
| lunar diurnal | K1 | 23.93 |
| smaller lunar elliptic diurnal | J1 | 23.10 |
| lunar elliptical semi-diurnal second-order | 2N2 | 12.91 |
| larger lunar evectional | NU2 | 12.63 |
| principal lunar semi-diurnal | M2 | 12.42 |
| principal solar semi-diurnal | S2 | 12.00 |
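To illustrate how the constituent periods tabulated above could enter a tidal model, the following is a minimal least-squares harmonic fit. It is a sketch under assumptions, not the authors' procedure: the function name `fit_harmonics`, the synthetic series, and the choice of an ordinary least-squares solver are all hypothetical, and NumPy is assumed. Only the periods are taken from the table.

```python
import numpy as np

# Constituent periods in hours, copied from the table above.
PERIODS_H = {
    "O1": 25.82, "P1": 24.07, "K1": 23.93, "J1": 23.10,
    "2N2": 12.91, "NU2": 12.63, "M2": 12.42, "S2": 12.00,
}


def fit_harmonics(t_hours, values, periods=PERIODS_H):
    """Fit a mean plus one cosine/sine pair per constituent by least squares.

    Returns the amplitude of each constituent and the residual series
    (observations minus fitted tidal signal).
    """
    t = np.asarray(t_hours, dtype=float)
    y = np.asarray(values, dtype=float)
    columns = [np.ones_like(t)]                 # constant (mean) term
    for period in periods.values():
        omega = 2 * np.pi / period              # angular frequency, rad per hour
        columns += [np.cos(omega * t), np.sin(omega * t)]
    design = np.column_stack(columns)
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    residual = y - design @ coef
    amplitudes = {
        name: float(np.hypot(coef[1 + 2 * k], coef[2 + 2 * k]))
        for k, name in enumerate(periods)
    }
    return amplitudes, residual


# Synthetic hourly series (hypothetical): 400 days with an M2 and a K1 signal.
t = np.arange(0, 24 * 400, dtype=float)
y = 5.0 + 2.0 * np.cos(2 * np.pi * t / 12.42) + 0.5 * np.sin(2 * np.pi * t / 23.93)
amps, resid = fit_harmonics(t, y)
```

Constituents with nearly identical periods, such as P1 and K1, can only be separated when the record is long (roughly half a year or more for this pair), which is why the synthetic series spans 400 days. The residual series is what a residual analysis would then inspect for remaining structure.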

| Test | Statistic | p Value |
|---|---|---|
| Wallraff | 373.2 (4 df) | $< 2.2 \times 10^{-16}$ |
| Watson-Wheeler | 13.68 (2 df) | $1.07 \times 10^{-3}$ |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Chatzievangelou, D.; Aguzzi, J.; Scherwath, M.; Thomsen, L. Quality Control and Pre-Analysis Treatment of the Environmental Datasets Collected by an Internet Operated Deep-Sea Crawler during Its Entire 7-Year Long Deployment (2009–2016). Sensors 2020, 20, 2991. https://doi.org/10.3390/s20102991
