Autonomous Toy Drone via Coresets for Pose Estimation

Abstract

:1. Introduction and Motivation

- (i)

- Introduce coresets to the robotics community and show how their theory can be applied in real-time systems and not only in the context of machine learning or theoretical computer science.

- (ii)

- Suggest novel coresets for real-time kinematic systems, where the motivation is to improve the running time of an algorithm, by selecting a small subset of the moving points only once and then tracking and processing them (not the entire set) during the movement of the coreset in the next observed frames.

- (iii)

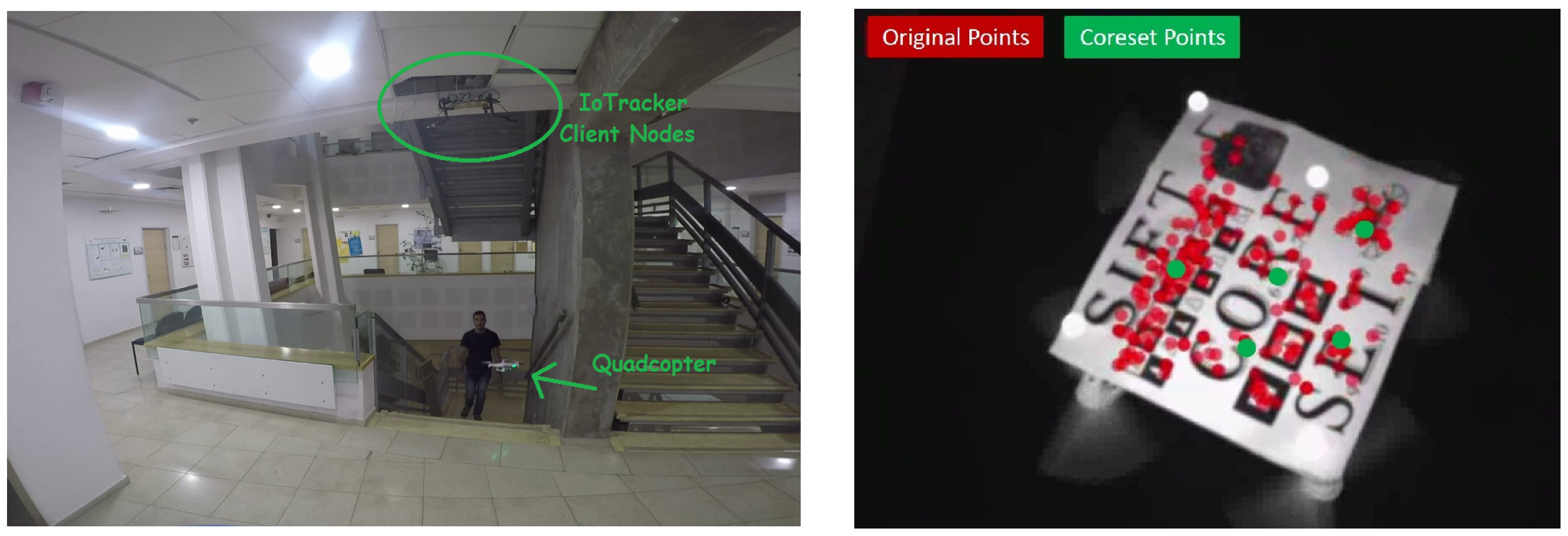

- Provide a wireless and low-cost tracking system, IoTracker, that is based on mini-computers (“Internet of things”) that run coresets.

2. Related Work and Comparison

3. Warm Up: Mean Coreset

- (i)

- Numerical stability: Every d linearly independent points in P span their mean. However, this coreset yields huge positive and negative coefficients that canceled each other and resulted in high numerical error. Our requirement that the coreset weights will have positive weights whose average is 1 makes these phenomena disappear in practice.

- (ii)

- Efficiency: A small coreset allows us to compute the mean of a kinematic (moving) set of points faster, by computing the mean of the small coreset in each frame, instead of the complete set of points. This also reduces the time and probability of failure of other tasks such as matching points between frames. This is explained in Section 1.

- (iii)

- Kinematic Tracking: In the next sections we track the orientation of an object (robot or a set of vectors) by tracking a kinematic representative set (coreset) of markers during many frames. This coreset is computed once for the many following frames. Such tracking is impossible when the coreset is not a subset of the tracked points.

| Algorithm 1: |

|

| Algorithm 2: |

|

3.1. Example Applications

4. Application for Kinematic Data: Kabsch Coreset

| Algorithm 3: Kabsch-Coreset() |

|

5. From Theory to Real Time Tracking System

6. Experimental Results

6.1. Synthetic Data

6.2. IoTracker: A Multicamera Wireless Low-Cost Tracking System

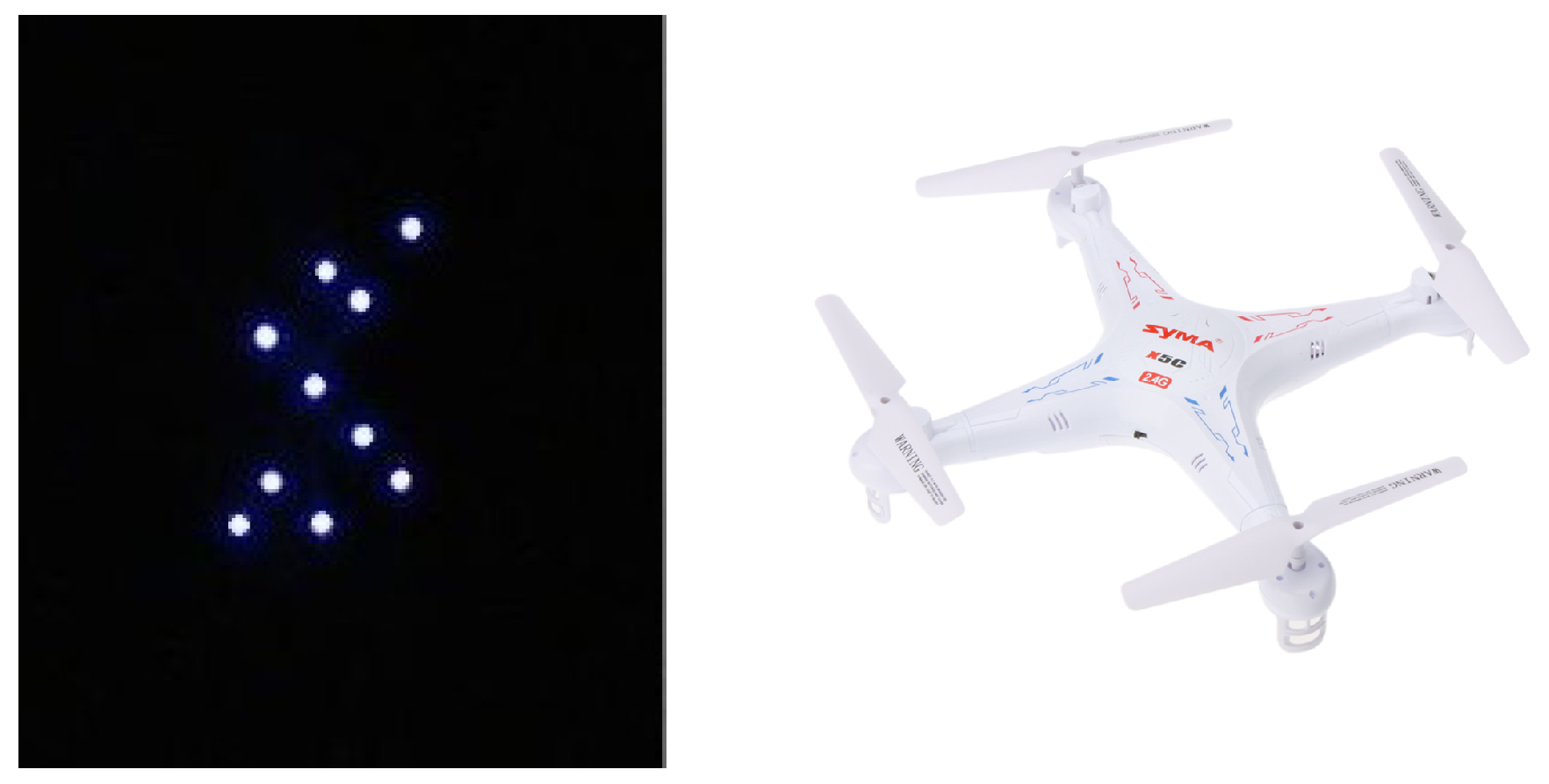

Autonomous Quadcopter

7. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Agarwal, P.K.; Procopiuc, C.M. Approximation algorithms for projective clustering. In Proceedings of the Eleventh Annual ACM-SIAM Symposium on Discrete Algorithms (SODA), San Francisco, CA, USA, 9–11 January 2000; pp. 538–547. [Google Scholar]

- Agarwal, P.K.; Procopiuc, C.M.; Varadarajan, K.R. Approximation Algorithms for k-Line Center. In Proceedings of the 10th Annual European Symposium on Algorithms (ESA), Rome, Italy, 17–21 September 2002; Lecture Notes in Computer Science. Springer: Berlin, Germany, 2002; Volume 2461, pp. 54–63. [Google Scholar]

- Har-Peled, S. Clustering Motion. Discrete Comput. Geom. 2004, 31, 545–565. [Google Scholar] [CrossRef] [Green Version]

- Feldman, D.; Monemizadeh, M.; Sohler, C. A PTAS for k-means clustering based on weak coresets. In Proceedings of the 23rd Annual Symposium on Computational Geometry (SoCG ’07), Gyeongju, Korea, 6–8 June 2007. [Google Scholar]

- Agarwal, P.K.; Har-Peled, S.; Varadarajan, K.R. Geometric Approximations via Coresets. In Combinatorial and Computational Geometry; MSRI Publications: Berkeley, CA, USA, 2005; Volume 52, pp. 1–30. [Google Scholar]

- Czumaj, A.; Sohler, C. Sublinear-time approximation algorithms for clustering via random sampling. Random Struct. Algorithms (RSA) 2007, 30, 226–256. [Google Scholar] [CrossRef]

- Phillips, J.M. Coresets and Sketches, Near-Final Version of Chapter 49. In Handbook on Discrete and Computational Geometry, 3rd ed.; CRC Press LLC: Boca Raton, FL, USA, 2016. [Google Scholar]

- Czumaj, A.; Ergün, F.; Fortnow, L.; Magen, A.; Newman, I.; Rubinfeld, R.; Sohler, C. Approximating the weight of the euclidean minimum spanning tree in sublinear time. SIAM J. Comput. 2005, 35, 91–109. [Google Scholar] [CrossRef] [Green Version]

- Frahling, G.; Indyk, P.; Sohler, C. Sampling in Dynamic Data Streams and Applications. Int. J. Comput. Geometry Appl. 2008, 18, 3–28. [Google Scholar] [CrossRef]

- Buriol, L.S.; Frahling, G.; Leonardi, S.; Sohler, C. Estimating Clustering Indexes in Data Streams. In Proceedings of the 15th Annual European Symposium on Algorithms (ESA), Eilat, Israel, 8–10 October 2007; Lecture Notes in Computer Science. Springer: Berlin, Germany, 2007; Volume 4698, pp. 618–632. [Google Scholar]

- Frahling, G.; Sohler, C. Coresets in dynamic geometric data streams. In Proceedings of the Thirty-Seventh Annual ACM Symposium on Theory of Computing, Baltimore, MD, USA, 22 May 2005; ACM: New York, NY, USA, 2005; pp. 209–217. [Google Scholar]

- Feldman, D.; Volkov, M.; Rus, D. Dimensionality Reduction of Massive Sparse Datasets Using Coresets. In Advances in Neural Information Processing Systems (NIPS); MIT Press: Cambridge, MA, USA, 2016. [Google Scholar]

- Feldman, D.; Faulkner, M.; Krause, A. Scalable training of mixture models via coresets. In Advances in Neural Information Processing Systems (NIPS); MIT Press: Cambridge, MA, USA, 2011; pp. 2142–2150. [Google Scholar]

- Tsang, I.W.; Kwok, J.T.; Cheung, P.M. Core vector machines: Fast SVM training on very large data sets. J. Mach. Learn. Res. 2005, 6, 363–392. [Google Scholar]

- Lucic, M.; Bachem, O.; Krause, A. Strong coresets for hard and soft Bregman clustering with applications to exponential family mixtures. In Proceedings of the 19th International Conference on Artificial Intelligence and Statistics, Cadiz, Spain, 7–11 May 2016; pp. 1–9. [Google Scholar]

- Bachem, O.; Lucic, M.; Hassani, S.H.; Krause, A. Approximate k-means++ in sublinear time. In Proceedings of the Conference on Artificial Intelligence (AAAI), Phoenix Convention Center, Phoenix, AZ, USA, 12–17 February 2016. [Google Scholar]

- Lucic, M.; Ohannessian, M.I.; Karbasi, A.; Krause, A. Tradeoffs for Space, Time, Data and Risk in Unsupervised Learning. arXiv 2015, arXiv:1605.00529. [Google Scholar]

- Bachem, O.; Lucic, M.; Krause, A. Coresets for Nonparametric Estimation—The Case of DP-Means. In Proceedings of the International Conference on Machine Learning (ICML), Lille, France, 6–11 July 2015. [Google Scholar]

- Huggins, J.H.; Campbell, T.; Broderick, T. Coresets for Scalable Bayesian Logistic Regression. arXiv 2016, arXiv:1605.06423. [Google Scholar]

- Rosman, G.; Volkov, M.; Feldman, D.; Fisher, J.W., III; Rus, D. Coresets for k-segmentation of streaming data. In Advances in Neural Information Processing Systems (NIPS); MIT Press: Cambridge, MA, USA, 2014; pp. 559–567. [Google Scholar]

- Reddi, S.J.; Póczos, B.; Smola, A. Communication efficient coresets for empirical loss minimization. In Proceedings of the Conference on Uncertainty in Artificial Intelligence (UAI), Amsterdam, The Netherlands, 12–16 July 2015. [Google Scholar]

- Sung, C.; Feldman, D.; Rus, D. Trajectory clustering for motion prediction. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Algarve, Portugal, 7–12 October 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 1547–1552. [Google Scholar]

- Feldman, D.; Sugaya, A.; Sung, C.; Rus, D. iDiary: From GPS signals to a text-searchable diary. In Proceedings of the 11th ACM Conference on Embedded Networked Sensor Systems, Roma, Italy, 11–15 November 2013; ACM: New York, NY, USA, 2013; p. 6. [Google Scholar]

- Feldman, D.; Xian, C.; Rus, D. Private Coresets for High-Dimensional Spaces; Technical Report; ACM Digital Library: New York, NY, USA, 2016. [Google Scholar]

- Feigin, M.; Feldman, D.; Sochen, N. From high definition image to low space optimization. In Proceedings of the International Conference on Scale Space and Variational Methods in Computer Vision, Ein-Gedi, Israel, Germany, 29 May–2 June 2011; Springer: Berlin/Heidelberg, Germany, 2011; pp. 459–470. [Google Scholar]

- Feldman, D.; Feigin, M.; Sochen, N. Learning big (image) data via coresets for dictionaries. J. Mathem. Imaging Vis. 2013, 46, 276–291. [Google Scholar] [CrossRef]

- Alexandroni, G.; Moreno, G.Z.; Sochen, N.; Greenspan, H. Coresets versus clustering: Comparison of methods for redundancy reduction in very large white matter fiber sets. In Medical Imaging 2016: Image Processing; SPIE Medical Imaging; International Society for Optics and Photonics: Bellingham, WA, USA, 2016; p. 97840A. [Google Scholar]

- Stanway, M.J.; Kinsey, J.C. Rotation Identification in Geometric Algebra: Theory and Application to the Navigation of Underwater Robots in the Field. J. Field Robot. 2015, 32, 632–654. [Google Scholar] [CrossRef] [Green Version]

- MIT Senseable City Lab. SkyCall Video. 2016. Available online: https://www.youtube.com/watch?v=mB9NfEJ0ZVs (accessed on 9 May 2020).

- Nasser, S.; Jubran, I.; Feldman, D. System Demonstration Video. 2020. Available online: https://drive.google.com/open?id=1HN1iY2Ti_d-akUXKJgckDKh7rmZUyXWG (accessed on 9 May 2020).

- Wahba, G. A least squares estimate of satellite attitude. SIAM Rev. 1965, 7, 409. [Google Scholar] [CrossRef]

- Kabsch, W. A solution for the best rotation to relate two sets of vectors. Acta Crystallogr. Sect. A Cryst. Phys. Diff. Theor. Gen. Crystallogr. 1976, 32, 922–923. [Google Scholar] [CrossRef]

- Lepetit, V.; Moreno-Noguer, F.; Fua, P. Epnp: An accurate O(n) solution to the pnp problem. Int. J. Comput. Vis. 2009, 81, 155–166. [Google Scholar] [CrossRef] [Green Version]

- Besl, P.J.; McKay, N.D. Method for registration of 3-D shapes. In Robotics-DL Tentative; International Society for Optics and Photonics, SPIE Digital Library: Bellingham, WA, USA, 1992; pp. 586–606. [Google Scholar]

- Wang, L.; Sun, X. Comparisons of Iterative Closest Point Algorithms. In Ubiquitous Computing Application and Wireless Sensor; Springer: Berlin, Germany, 2015; pp. 649–655. [Google Scholar]

- Friedman, J.H.; Bentley, J.L.; Finkel, R.A. An algorithm for finding best matches in logarithmic expected time. ACM Trans. Math. Softw. (TOMS) 1977, 3, 209–226. [Google Scholar] [CrossRef]

- Zhang, Z. Iterative point matching for registration of free-form curves and surfaces. Int. J. Comput. Vis. 1994, 13, 119–152. [Google Scholar] [CrossRef]

- Pulli, K.; Shapiro, L.G. Surface reconstruction and display from range and color data. Graph. Models 2000, 62, 165–201. [Google Scholar] [CrossRef] [Green Version]

- Ahuja, R.K.; Magnanti, T.L.; Orlin, J.B. Network Flows: Theory, Algorithms, and Applications; Physica-Verlag: Wurzburg, Gemany, 1993. [Google Scholar]

- Valencia, C.E.; Vargas, M.C. Optimum matchings in weighted bipartite graphs. In Boletín de la Sociedad Matemática Mexicana; Springer: Berlin, Germany, 2015. [Google Scholar]

- Batson, J.; Spielman, D.A.; Srivastava, N. Twice-ramanujan sparsifiers. SIAM J. Comput. 2012, 41, 1704–1721. [Google Scholar] [CrossRef] [Green Version]

- Cohen, M.B.; Nelson, J.; Woodruff, D.P. Optimal approximate matrix product in terms of stable rank. arXiv 2015, arXiv:1507.02268. [Google Scholar]

- Ghashami, M.; Liberty, E.; Phillips, J.M.; Woodruff, D.P. Frequent directions: Simple and deterministic matrix sketching. SIAM J. Comput. 2016, 45, 1762–1792. [Google Scholar] [CrossRef] [Green Version]

- Cohen, M.B.; Elder, S.; Musco, C.; Musco, C.; Persu, M. Dimensionality reduction for k-means clustering and low rank approximation. In Proceedings of the Forty-Seventh Annual ACM on Symposium on Theory of Computing, Portland, OR, USA, 15–17 June 2015; ACM: New York, NY, USA, 2015; pp. 163–172. [Google Scholar]

- Feldman, D.; Schmidt, M.; Sohler, C. Turning big data into tiny data: Constant-size coresets for k-means, pca and projective clustering. In Proceedings of the Twenty-Fourth Annual ACM-SIAM Symposium on Discrete Algorithms, New Orleans, LA, USA, 6–8 January 2013; SIAM: Philadelphia, PA, USA, 2013; pp. 1434–1453. [Google Scholar]

- De Carli Silva, M.K.; Harvey, N.J.A.; Sato, C.M. Sparse Sums of Positive Semidefinite Matrices. arXiv 2011, arXiv:1107.0088v2. [Google Scholar]

- Carathéodory, C. Über den Variabilitätsbereich der Fourier’schen Konstanten von positiven harmonischen Funktionen. In Rendiconti del Circolo Matematico di Palermo (1884–1940); Springer: Berlin/Heidelberg, Germany, 1911; Volume 32, pp. 193–217. [Google Scholar]

- Indyk, P.; Mahabadi, S.; Mahdian, M.; Mirrokni, V.S. Composable core-sets for diversity and coverage maximization. In Proceedings of the 33rd ACM SIGMOD-SIGACT-SIGART Symposium on Principles of Database Systems, Snowbird, UT, USA, 22–27 July 2014; ACM: New York, NY, USA, 2014; pp. 100–108. [Google Scholar]

- Mirrokni, V.; Zadimoghaddam, M. Randomized composable core-sets for distributed submodular maximization. In Proceedings of the Forty-Seventh Annual ACM on Symposium on Theory of Computing, Portland, OR, USA, 15–17 June 2015; ACM: New York, NY, USA, 2015; pp. 153–162. [Google Scholar]

- Aghamolaei, S.; Farhadi, M.; Zarrabi-Zadeh, H. Diversity Maximization via Composable Coresets. In Proceedings of the 27th Canadian Conference on Computational Geometry (CCCG 2015), Kingston, ON, Canada, 10–12 August 2015. [Google Scholar]

- Agarwal, P.K.; Cormode, G.; Huang, Z.; Phillips, J.M.; Wei, Z.; Yi, K. Mergeable summaries. ACM Trans. Database Syst. (TODS) 2013, 38, 26. [Google Scholar] [CrossRef]

- Har-Peled, S.; Mazumdar, S. On coresets for k-means and k-median clustering. In Proceedings of the Thirty-Sixth Annual ACM Symposium on Theory of Computing, Chicago, IL, USA, 13–15 June 2004; ACM: New York, NY, USA, 2004; pp. 291–300. [Google Scholar]

- Har-Peled, S.; Kushal, A. Smaller coresets for k-median and k-means clustering. Discret. Comput. Geom. 2007, 37, 3–19. [Google Scholar] [CrossRef] [Green Version]

- Chen, K. On coresets for k-median and k-means clustering in metric and euclidean spaces and their applications. SIAM J. Comput. 2009, 39, 923–947. [Google Scholar] [CrossRef]

- Langberg, M.; Schulman, L.J. Universal ε-approximators for integrals. In Proceedings of the Twenty-First Annual ACM-SIAM Symposium on Discrete Algorithms, Austin, TX, USA, 17–19 January 2010; SIAM: Philadelphia, PA, USA, 2010; pp. 598–607. [Google Scholar]

- Feldman, D.; Langberg, M. A Unified Framework for Approximating and Clustering Data. In Proceedings of the 34th Annual ACM Symposium on Theory of Computing (STOC), San Jose, CA, USA, 6–8 June 2011. [Google Scholar]

- Barger, A.; Feldman, D. k-Means for Streaming and Distributed Big Sparse Data. In Proceedings of the 2016 SIAM International Conference on Data Mining (SDM’16), Miami, FL, USA, 5–7 May 2016. [Google Scholar]

- Feldman, D.; Tassa, T. More constraints, smaller coresets: Constrained matrix approximation of sparse big data. In Proceedings of the 21th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD’15), Sydney, Australia, 10–13 August 2015; ACM: New York, NY, USA, 2015; pp. 249–258. [Google Scholar]

- Kjer, H.M.; Wilm, J. Evaluation of Surface Registration Algorithms for PET Motion Correction. Ph.D. Thesis, Technical University of Denmark, Lyngby, Denmark, 2010. [Google Scholar]

| Without Coreset | Using Coreset | |

|---|---|---|

| With matching, without rotation | ||

| Without matching, with rotation | ||

| Noisy matching |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Nasser, S.; Jubran, I.; Feldman, D. Autonomous Toy Drone via Coresets for Pose Estimation. Sensors 2020, 20, 3042. https://doi.org/10.3390/s20113042

Nasser S, Jubran I, Feldman D. Autonomous Toy Drone via Coresets for Pose Estimation. Sensors. 2020; 20(11):3042. https://doi.org/10.3390/s20113042

Chicago/Turabian StyleNasser, Soliman, Ibrahim Jubran, and Dan Feldman. 2020. "Autonomous Toy Drone via Coresets for Pose Estimation" Sensors 20, no. 11: 3042. https://doi.org/10.3390/s20113042