MRI Segmentation and Classification of Human Brain Using Deep Learning for Diagnosis of Alzheimer’s Disease: A Survey

Abstract

1. Introduction

- Provide an overview of the current deep learning approaches for brain MRI segmentation and classification of AD.

- Identify the application challenges in the segmentation of brain structure MRI and classification of AD.

- Show that MRI segmentation of the brain structure can improve the accuracy of diagnosing AD.

2. MRI Dataset for Brain Analysis

2.1. Public Dataset for Brain MRI

2.1.1. OASIS

2.1.2. ADNI

2.1.3. IBSR

2.1.4. MICCAI

2.2. Pre-Processing for Brain MRI Analysis

3. Review of Brain MRI Segmentation and Diagnosis

3.1. Overview of CNN Architecture

- Deep learning used in big data analytics: The major challenge is obtaining a dataset large enough to train the model properly and improve its accuracy. Deep learning has difficulty dealing with the volume (high-dimensional decision space and a large number of objectives), variety (modeling using heterogeneous data and knowledge transfer between problems), variability (robustness over time and online knowledge acquisition), and veracity (noisy fitness evaluations and surrogate-assisted optimization) of big data [50]. To overcome this problem, the authors of [51] suggested various optimization techniques, such as global optimization, which reuse the knowledge extracted from the vast amount of high-dimensional, heterogeneous, and noisy data. Complex optimization techniques, in turn, provide efficient solutions by formulating new insights and methodologies for optimization problems that take advantage of deep learning approaches when dealing with big data problems. Traditional machine learning approaches perform better when less input data is available. As the amount of data increases beyond a certain critical point, the performance of traditional machine learning approaches plateaus, whereas the performance of deep learning approaches tends to keep increasing [51]. Deep learning architectures, such as deep neural networks, deep belief networks, and recurrent neural networks, have been applied to research fields including medical image analysis, bioinformatics, and computer vision, where they often produce impressive results that are comparable to, and in some cases superior to, those of human experts.

- Scalability of deep learning approaches: Scalability plays a vital role in deep learning, and assessing it requires considering not only accuracy but also several measures of computational resources. As data expands in terms of variability, variety, veracity, and volume, it becomes increasingly difficult to scale computing performance using enterprise-class servers and storage in line with the increase. Scalability can be achieved by implementing deep learning techniques on a high-performance computing (HPC) system (supercomputing, clusters, and sometimes cloud computing), which offers immense potential for data-intensive business computing [50]. The ability to generate data is also important where data is not available for training the system (especially for computer vision tasks, such as inverse graphics).

- Multi-task, transfer learning, or multi-module learning: Learning simultaneously from several domains or with various models is one of the significant challenges in deep learning. Currently, one of the most significant limitations of transfer learning is the problem of negative transfer. Transfer learning only works if the initial and target problems are similar enough for the first round of training to be relevant. If the initial problem is too different from the target problem, the model may perform worse than if it had never been pre-trained at all. There are no clear standards on what types of training are sufficiently related, or how this should be measured.
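The negative-transfer effect described in the last item can be illustrated with a deliberately small toy problem. The sketch below is not taken from any of the surveyed works: it assumes a one-parameter linear model trained by gradient descent, and the names `fit`, `mse`, `transfer`, the slopes 2.0, 1.8, and -10.0, and the step counts are all hypothetical choices made for illustration. Pre-training on a related source task helps when only a few fine-tuning steps are available, whereas pre-training on an unrelated task leaves the model worse off than training from scratch.

```python
def fit(xs, ys, w, steps, lr=0.01):
    """Gradient descent on the mean squared error of the 1-D model y = w * x."""
    n = len(xs)
    for _ in range(steps):
        grad = 2.0 * sum(x * (w * x - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad
    return w


def mse(xs, ys, w):
    """Mean squared error of y = w * x on the given data."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Hypothetical tasks: each task is fully described by its slope.
xs = [1.0, 2.0, 3.0, 4.0]
target_ys = [2.0 * x for x in xs]        # target task
related_ys = [1.8 * x for x in xs]       # source task close to the target
unrelated_ys = [-10.0 * x for x in xs]   # source task far from the target

FINE_TUNE_STEPS = 5  # small budget of training steps on the target task


def transfer(source_ys):
    """Pre-train on a source task, fine-tune briefly on the target, return target loss."""
    w_pretrained = fit(xs, source_ys, 0.0, steps=200)
    w_finetuned = fit(xs, target_ys, w_pretrained, steps=FINE_TUNE_STEPS)
    return mse(xs, target_ys, w_finetuned)


loss_scratch = mse(xs, target_ys, fit(xs, target_ys, 0.0, steps=FINE_TUNE_STEPS))
loss_related = transfer(related_ys)
loss_unrelated = transfer(unrelated_ys)

print(f"from scratch:     {loss_scratch:.4f}")
print(f"related source:   {loss_related:.4f}")
print(f"unrelated source: {loss_unrelated:.4f}")
```

In this toy setting the ordering of the three losses depends only on how far the pre-trained weight starts from the target weight, which is one simple way to read "similar enough"; real networks offer no such direct measure, which is the gap the item above points out.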

3.2. Segmentation of Brain MRI Using Deep Learning

- Brain anatomical structures vary widely with phenotype, age, gender, and disease, so it is difficult to apply one specific segmentation method to all phenotypic categories with reliable performance [77].

- It is challenging to process cytoarchitectural variations, such as gyral folds, sulci depths, thin tissue structures, and smooth boundaries between different tissues. These variations can lead to confusing categorical labeling of distinct tissue classes, which is difficult even for human experts.

- The low contrast of anatomical structures in the T1, T2, and FLAIR modalities results in low segmentation performance.

- Manual segmentation of brain MRI is laborious, subjective, and time-consuming, and requires sophisticated knowledge of brain anatomy. Thus, it is difficult to obtain a sufficient amount of ground truth data for building a segmentation model.

- The noisy background in an ordinary image makes segmentation challenging because it is hard to assign an accurate label to each pixel/voxel with learned features.

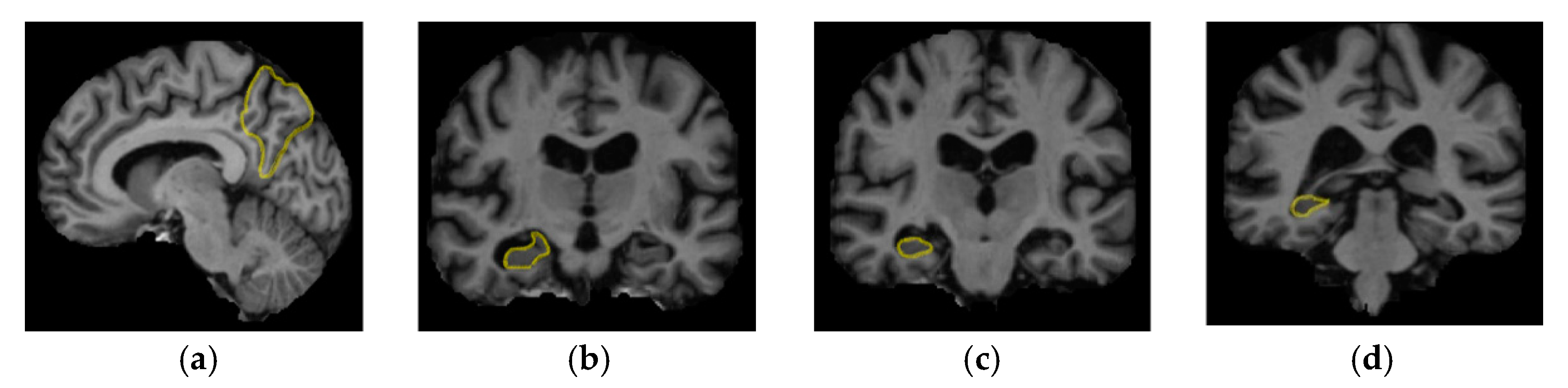

- The segmentation of the hippocampus, which is one of the most important biomarkers for AD, is difficult due to its small size and volume [65], as well as its anatomical variability, partial volume effects, low contrast, low signal-to-noise ratio, indistinct boundaries, and proximity to the amygdaloid body.
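One way to see why small structures such as the hippocampus are hard to segment well is to look at how an overlap score reacts to a boundary error of a single voxel. The following is a minimal sketch under stated assumptions: it uses the Dice similarity coefficient, a widely used overlap measure that the list above does not itself name, and it replaces real anatomy with hypothetical cubic masks (`cube`, `dice`, and `dice_after_one_voxel_shift` are illustrative helpers, not part of any surveyed method).

```python
from itertools import product


def cube(origin, size):
    """Set of voxel coordinates of an axis-aligned cube with the given edge length."""
    ox, oy, oz = origin
    return {(ox + i, oy + j, oz + k) for i, j, k in product(range(size), repeat=3)}


def dice(a, b):
    """Dice similarity coefficient 2|A ∩ B| / (|A| + |B|) between two voxel sets."""
    if not a and not b:
        return 1.0
    return 2.0 * len(a & b) / (len(a) + len(b))


def dice_after_one_voxel_shift(size):
    """Dice score when the predicted cube is displaced by one voxel along x."""
    truth = cube((0, 0, 0), size)
    predicted = cube((1, 0, 0), size)
    return dice(truth, predicted)


small_structure = dice_after_one_voxel_shift(4)    # toy stand-in for a small region
large_structure = dice_after_one_voxel_shift(32)   # toy stand-in for a large region

print(f"edge  4 voxels: Dice = {small_structure:.4f}")
print(f"edge 32 voxels: Dice = {large_structure:.4f}")
```

For a cube of edge length s, the one-voxel shift gives a Dice score of (s - 1) / s, so the same boundary error costs a small structure far more than a large one. Partial volume effects and indistinct boundaries make such one-voxel disagreements common in practice.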

3.3. Brain MRI Classification of AD Diagnosis Using Deep Learning

- The automatic classification of AD is quite challenging due to the low contrast of the anatomical structure in MRI. The presence of noisy or outlier pixels in MRI scans due to various scanning conditions may also reduce classification accuracy.

- A major challenge in AD is that it is difficult to track and investigate a patient’s pathology over the long term, so it is not easy to track the transition of AD status. In the ADNI dataset [16], there are only 152 transitions in total out of the entire dataset of 2731 MRIs. Because so few MRIs track the transition of AD status, the model is likely to overfit without generalizing the distinctions between different stages of AD.

- It is well known that AD is characterized not only by the clinical stages observed in brain MRI but also by abnormal amyloid β peptide (Aβ) and tau (τ) protein activity around neurons, and by the temporal relationship of this activity with the different stages of AD. These factors should be considered as multi-modal biomarkers alongside brain MRI. The complexity of treating AD is thus due to the diverse factors regulating its pathology.

- Data multimodality in the diagnosis of AD raises two issues:

- Heterogeneity issue: Neuroimaging data (i.e., MRI or PET) and genetic data (single nucleotide polymorphisms (SNPs)) have different data distributions, different numbers of features, and different levels of discriminative ability for AD diagnosis (e.g., SNP data in their raw form are less effective in AD diagnosis). As a result of this heterogeneity, simple concatenation of the features from multimodality data will result in an inaccurate prediction model [105,106].

- High dimensionality issue: One neuroimage scan normally contains millions of voxels, while the genetic data of a subject has thousands of AD-related SNPs.
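The figures quoted in the list above can be put into proportion with a few lines of arithmetic. The transition and scan counts are the ADNI numbers stated above; the volume shape and the SNP count are hypothetical values, chosen only to be consistent with "millions of voxels" and "thousands of SNPs", and are not taken from any specific dataset.

```python
# Figures quoted in the list above for the ADNI dataset.
ADNI_TRANSITIONS = 152
ADNI_SCANS = 2731
transition_fraction = ADNI_TRANSITIONS / ADNI_SCANS

# Hypothetical sizes (assumptions for illustration only).
VOLUME_SHAPE = (256, 256, 256)  # voxels per axis of one assumed MRI volume
N_SNPS = 5000                   # assumed number of AD-related SNPs per subject

n_voxels = 1
for dim in VOLUME_SHAPE:
    n_voxels *= dim

# Share of a naively concatenated feature vector that comes from the image.
imaging_share = n_voxels / (n_voxels + N_SNPS)

print(f"transitions per scan:        {transition_fraction:.2%}")
print(f"voxels in one volume:        {n_voxels:,}")
print(f"imaging share after concat:  {imaging_share:.4%}")
```

Under these assumptions, fewer than 6% of the scans capture a change of AD status, and raw voxels make up more than 99.9% of a directly concatenated feature vector, which gives a sense of why the counts alone already argue against naive concatenation.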

3.4. The Segmentation of Brain MRI Improves the Classification of AD

4. Evaluation Metrics for Brain MRI Segmentation

5. Discussion and Future Directions

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Miriam, R.; Malin, B.; Jan, A.; Patrik, B.; Fredrik, B.; Jorgen, R.; Ingrid, L.; Lennart, B.; Richard, P.; Martin, R.; et al. PET/MRI and PET/CT hybrid imaging of rectal cancer–description and initial observations from the RECTOPET (Rectal Cancer trial on PET/MRI/CT) study. Cancer Imaging 2019, 19, 52. [Google Scholar]

- Rebecca, S.; Diana, M.; Eric, J.; Choonsik, L.; Heather, F.; Michael, F.; Robert, G.; Randell, K.; Mark, H.; Douglas, M.; et al. Use of Diagnostic Imaging Studies and Associated Radiation Exposure for Patients Enrolled in Large Integrated Health Care Systems, 1996–2010. JAMA 2012, 307, 2400–2409. [Google Scholar]

- Hsiao, C.-J.; Hing, E.; Ashman, J. Trends in electronic health record system use among office-based physicians: United States, 2007–2012. Natl. Health Stat. Rep. 2014, 1, 1–18. [Google Scholar]

- Despotović, I.; Goossens, B.; Philips, W. MRI Segmentation of the Human Brain: Challenges, Methods, and Applications. Comput. Math. Methods Med. 2015, 2015, 1–23. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Akkus, Z.; Galimzianova, A.; Hoogi, A.; Rubin, D.L.; Erickson, B.J. Deep Learning for Brain MRI Segmentation: State of the Art and Future Directions. J. Digit. Imaging 2017, 30, 449–459. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Mulder, E.R.; De Jong, R.A.; Knol, D.L.; Van Schijndel, R.A.; Cover, K.S.; Visser, P.J.; Barkhof, F.; Vrenken, H.; Alzheimer’s Disease Neuroimaging Initiative. Hippocampal volume change measurement: Quantitative assessment of the reproducibility of expert manual outlining and the automated methods FreeSurfer and FIRST. NeuroImage 2014, 92, 169–181. [Google Scholar] [CrossRef]

- Corso, J.J.; Sharon, E.; Dube, S.; El-Saden, S.; Sinha, U.; Yuille, A. Efficient Multilevel Brain Tumor Segmentation with Integrated Bayesian Model Classification. IEEE Trans. Med. Imaging 2008, 27, 629–640. [Google Scholar] [CrossRef] [Green Version]

- Yang, C.; Sethi, M.; Rangarajan, A.; Ranka, S. Supervoxel-Based Segmentation of 3D Volumetric Images. Appl. E Comput. 2017, 10111, 37–53. [Google Scholar]

- Killiany, R.J.; Hyman, B.T.; Gómez-Isla, T.; Moss, M.B.; Kikinis, R.; Jolesz, F.; Tanzi, R.; Jones, K.; Albert, M.S. MRI measures of entorhinal cortex vs hippocampus in preclinical AD. Neurology 2002, 58, 1188–1196. [Google Scholar] [CrossRef]

- Ryu, S.Y.; Kwon, M.J.; Lee, S.B.; Yang, D.W.; Kim, T.W.; Song, I.U.; Yang, P.S.; Kim, H.J.; Lee, A.Y. Measurement of Precuneal and Hippocampal Volumes Using Magnetic Resonance Volumetry in Alzheimer’s Disease. J. Clin. Neurol. 2010, 6, 196–203. [Google Scholar] [CrossRef] [Green Version]

- Lin, D.; Vasilakos, A.V.; Tang, Y.; Yao, Y. Neural networks for computer-aided diagnosis in medicine: A review. Neurocomputing 2016, 216, 700–708. [Google Scholar] [CrossRef]

- Kooi, T.; Litjens, G.; Van Ginneken, B.; Gubern-Mérida, A.; Sánchez, C.I.; Mann, R.M.; Heeten, A.D.; Karssemeijer, N. Large scale deep learning for computer aided detection of mammographic lesions. Med. Image Anal. 2017, 35, 303–312. [Google Scholar] [CrossRef] [PubMed]

- Cheng, J.-Z.; Ni, N.; Chou, Y.-H.; Qin, J.; Tiu, C.-M.; Chang, Y.-C.; Huang, C.-S.; Shen, D.; Chen, C.-M. Computer-Aided Diagnosis with Deep Learning Architecture: Applications to Breast Lesions in US Images and Pulmonary Nodules in CT Scans. Sci. Rep. 2016, 6, 2445. [Google Scholar] [CrossRef] [Green Version]

- Geert, L.; Clara, S.; Nadya, T.; Meyke, H.; Iris, N.; Iringo, K.; Christina, H.; Peter, B.; Bram, G.; Jeroen, L. Deep learning as a tool for increased accuracy and efficiency of histopathological diagnosis. Sci. Rep. 2016, 6, 26286. [Google Scholar]

- Marcus, D.; Wang, T.H.; Parker, J.; Csernansky, J.G.; Morris, J.C.; Buckner, R.L. Open Access Series of Imaging Studies (OASIS): Cross-sectional MRI Data in Young, Middle Aged, Nondemented, and Demented Older Adults. J. Cogn. Neurosci. 2007, 19, 1498–1507. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Jack, C.R.; Bernstein, M.A.; Fox, N.; Thompson, P.; Alexander, G.; Harvey, D.; Borowski, B.; Britson, P.J.; Whitwell, J.L.; Ward, C.; et al. The Alzheimer’s disease neuroimaging initiative (ADNI): MRI methods. J. Magn. Reson. Imaging 2008, 27, 685–691. [Google Scholar] [CrossRef] [Green Version]

- Warfield, S.; Landman, B. MICCAI 2012 Workshop on Multi-Atlas Labeling, in: MICCAI Grand Challenge and Workshop on Multi-Atlas Labeling; CreateSpace Independent Publishing Platform: Scotts Valley, CA, USA, 2012. [Google Scholar]

- IBSR Dataset. Available online: https://www.nitrc.org/projects/ibsr (accessed on 4 June 2020).

- Morris, C. The clinical dementia rating (CDR). Neurology 1993, 43, 2412. [Google Scholar] [CrossRef]

- Rubin, E.H.; Storandt, M.; Miller, J.P.; Kinscherf, D.A.; Grant, E.A.; Morris, J.C.; Berg, L. A prospective study of cognitive function and onset of dementia in cognitively healthy elders. Arch. Neurol. 1998, 55, 395–401. [Google Scholar] [CrossRef]

- Duta, N.; Sonka, M. Segmentation and interpretation of MR brain images. An improved active shape model. IEEE Trans. Med. Imaging 1998, 17, 1049–1062. [Google Scholar] [CrossRef]

- Rogowska, J. Overview and fundamentals of medical image segmentation. In Handbook of Medical Image Processing and Analysis; Elsevier: Amsterdam, The Netherlands, 2000. [Google Scholar]

- Vovk, U.; Pernus, F.; Likar, B. A Review of Methods for Correction of Intensity Inhomogeneity in MRI. IEEE Trans. Med. Imaging 2007, 26, 405–421. [Google Scholar] [CrossRef]

- Coupe, P.; Yger, P.; Prima, S.; Hellier, P.; Kervrann, C.; Barillot, C. An optimized blockwise nonlocal means denoising filter for 3-D magnetic resonance images. IEEE Trans. Med. Imaging 2008, 27, 425–441. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Arno, K.; Jesper, A.; Babak, A.; John, A.; Brian, A.; Ming-Chang, C.; Gary, C.; Louis, C.; James, G.; Pierre, H.; et al. Evaluation of 14 nonlinear deformation algorithms applied to human brain MRI registration. NeuroImage 2009, 46, 786–802. [Google Scholar]

- Zhang, Y.-D.; Dong, Z.; Wu, L.; Wang, S. A hybrid method for MRI brain image classification. Expert Syst. Appl. 2011, 38, 10049–10053. [Google Scholar] [CrossRef]

- Neffati, S.; Ben Abdellafou, K.; Jaffel, I.; Taouali, O.; Bouzrara, K. An improved machine learning technique based on downsized KPCA for Alzheimer’s disease classification. Int. J. Imaging Syst. Technol. 2018, 29, 121–131. [Google Scholar] [CrossRef]

- Saraswathi, S.; Mahanand, B.; Kloczkowski, A.; Suresh, S.; Sundararajan, N. Detection of onset of Alzheimer’s disease from MRI images using a GA-ELM-PSO classifier. In Proceedings of the 2013 Fourth International Workshop on Computational Intelligence in Medical Imaging (CIMI), Singapore, 16–19 April 2013; pp. 42–48. [Google Scholar]

- Ding, Y.; Zhang, C.; Lan, T.; Qin, Z.; Zhang, X.; Wang, W. Classification of Alzheimer’s disease based on the combination of morphometric feature and texture feature. In Proceedings of the 2015 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Washington, DC, USA, 9–12 November 2015; pp. 409–412. [Google Scholar]

- Jha, D.; Kim, J.-I.; Kwon, G.-R. Diagnosis of Alzheimer’s Disease Using Dual-Tree Complex Wavelet Transform, PCA, and Feed-Forward Neural Network. J. Health Eng. 2017, 2017, 1–13. [Google Scholar] [CrossRef]

- LeCun, Y.; Boser, B.; Denker, J.S.; Henderson, D.; Howard, R.E.; Hubbard, W.; Jackel, L.D. Backpropagation Applied to Handwritten Zip Code Recognition. Neural Comput. 1989, 1, 541–551. [Google Scholar] [CrossRef]

- Deng, J.; Dong, W.; Socher, R.; Li, L.; Li, K.; Li, F. Imagenet: A large-scale hierarchical image database. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255. [Google Scholar]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Proceedings of the NIPS, IEEE Neural Information Processing System Foundation, Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105. [Google Scholar]

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 2015, 115, 211–252. [Google Scholar] [CrossRef] [Green Version]

- Zhang, W.; Li, R.; Deng, H.; Wang, L.; Lin, W.; Ji, S.; Shen, D. Deep convolutional neural networks for multi-modality isointense infant brain image segmentation. NeuroImage 2015, 108, 214–224. [Google Scholar] [CrossRef]

- Brebisson, A.; Montana, G. Deep neural networks for anatomical brain segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Boston, MA, USA, 7 June 2015. [Google Scholar]

- Moeskops, P.; Benders, M.; Chiţǎ, S.M.; Kersbergen, K.J.; Groenendaal, F.; De Vries, L.S.; Viergever, M.A.; Išgum, I. Automatic segmentation of MR brain images of preterm infants using supervised classification. NeuroImage 2015, 118, 628–641. [Google Scholar] [CrossRef]

- Dong, N.; Wang, L.; Gao, Y.; Shen, D. Fully convolutional networks for multi-modality isointense infant brain image segmentation. In Proceedings of the 2016 IEEE 13th International Symposium on Biomedical Imaging (ISBI), Prague, Czech Republic, 13–16 April 2016; pp. 1342–1345. [Google Scholar]

- Brosch, T.; Tang, L.Y.W.; Yoo, Y.; Li, D.K.B.; Traboulsee, A.; Tam, R. Deep 3D Convolutional Encoder Networks with Shortcuts for Multiscale Feature Integration Applied to Multiple Sclerosis Lesion Segmentation. IEEE Trans. Med. Imaging 2016, 35, 1229–1239. [Google Scholar] [CrossRef]

- Shakeri, M.; Tsogkas, S.; Ferrante, E.; Lippe, S.; Kadoury, S.; Paragios, N.; Kokkinos, I. Sub-cortical brain structure segmentation using F-CNNs. In Proceedings of the 2016 IEEE 13th International Symposium on Biomedical Imaging (ISBI), Prague, Czech Republic, 13–16 April 2016. [Google Scholar]

- Kong, Z.; Luo, J.; Xu, S.; Li, T. Automatical and accurate segmentation of cerebral tissues in fMRI dataset with combination of image processing and deep learning. Opt. Biophotonics Low-Resour. Settings IV 2018, 10485. [Google Scholar] [CrossRef]

- Milletari, F.; Ahmadi, S.-A.; Kroll, C.; Plate, A.; Rozanski, V.; Maiostre, J.; Levin, J.; Dietrich, O.; Ertl-Wagner, B.; Bötzel, K.; et al. Hough-CNN: Deep learning for segmentation of deep brain regions in MRI and ultrasound. Comput. Vis. Image Underst. 2017, 164, 92–102. [Google Scholar] [CrossRef] [Green Version]

- Mehta, R.; Majumdar, A.; Sivaswamy, J. Brainsegnet: A convolutional neural network architecture for automated segmentation of human brain structures. J. Med. Imaging 2017, 4, 024003. [Google Scholar] [CrossRef] [PubMed]

- Kamnitsas, K.; Ledig, C.; Newcombe, V.; Simpson, J.P.; Kane, A.; Menon, D.; Rueckert, D.; Glocker, B. Efficient multi-scale 3D CNN with fully connected CRF for accurate brain lesion segmentation. Med. Image Anal. 2017, 36, 61–78. [Google Scholar] [CrossRef]

- Pereira, S.; Pinto, A.; Alves, V.; Silva, C. Brain Tumor Segmentation Using Convolutional Neural Networks in MRI Images. IEEE Trans. Med. Imaging 2016, 35, 1240–1251. [Google Scholar] [CrossRef]

- Havaei, M.; Davy, A.; Warde-Farley, D.; Biard, A.; Courville, A.; Bengio, Y.; Pal, C.; Jodoin, P.-M.; LaRochelle, H. Brain tumor segmentation with Deep Neural Networks. Med. Image Anal. 2017, 35, 18–31. [Google Scholar] [CrossRef] [Green Version]

- Dou, Q.; Chen, H.; Yu, L.; Zhao, L.; Qin, J.; Wang, D.; Mok, V.C.-T.; Shi, L.; Heng, P.A. Automatic Detection of Cerebral Microbleeds from MR Images via 3D Convolutional Neural Networks. IEEE Trans. Med. Imaging 2016, 35, 1182–1195. [Google Scholar] [CrossRef]

- Chen, H.; Dou, Q.; Yu, L.; Qin, J.; Heng, P.A. VoxResNet: Deep voxelwise residual networks for brain segmentation from 3D MR images. NeuroImage 2018, 170, 446–455. [Google Scholar] [CrossRef]

- Lyksborg, M.; Puonti, O.; Agn, M.; Larsen, R. An Ensemble of 2D Convolutional Neural Networks for Tumor Segmentation. Appl. E Comput. 2015, 9127, 201–211. [Google Scholar]

- Chen, X.-W.; Lin, X. Big Data Deep Learning: Challenges and Perspectives. IEEE Access 2014, 2, 514–525. [Google Scholar] [CrossRef]

- Alom, Z.; Taha, T.M.; Yakopcic, C.; Westberg, S.; Sagan, V.; Nasrin, M.S.; Hasan, M.; Van Essen, B.C.; Awwal, A.A.S.; Asari, V.K. A State-of-the-Art Survey on Deep Learning Theory and Architectures. Electronics 2019, 8, 292. [Google Scholar] [CrossRef] [Green Version]

- Srhoj-Egekher, V.; Benders, M.; Viergever, M.A.; Išgum, I. Automatic neonatal brain tissue segmentation with MRI. SPIE Med. Imaging 2013, 8669, 86691K. [Google Scholar] [CrossRef]

- Anbeek, P.; Išgum, I.; Van Kooij, B.J.M.; Mol, C.P.; Kersbergen, K.J.; Groenendaal, F.; Viergever, M.A.; De Vries, L.S.; Benders, M. Automatic Segmentation of Eight Tissue Classes in Neonatal Brain MRI. PLoS ONE 2013, 8, e81895. [Google Scholar] [CrossRef]

- Vrooman, H.; Cocosco, C.; Lijn, F.; Stokking, R.; Ikram, M.; Vernooij, M.; Breteler, M.; Niessen, W.J. Multi-spectral brain tissue segmentation using automatically trained k-nearest neighbor classification. NeuroImage 2007, 37, 71–81. [Google Scholar] [CrossRef] [PubMed]

- Makropoulos, A.; Gousias, I.S.; Ledig, C.; Aljabar, P.; Serag, A.; Hajnal, J.V.; Edwards, A.D.; Counsell, S.; Rueckert, D. Automatic Whole Brain MRI Segmentation of the Developing Neonatal Brain. IEEE Trans. Med. Imaging 2014, 33, 1818–1831. [Google Scholar] [CrossRef] [PubMed]

- Christensen, G.E.; Rabbitt, R.D.; Miller, M.I. Deformable templates using large deformation kinematics. IEEE Trans. Image Process. 1996, 5, 1435–1447. [Google Scholar] [CrossRef]

- Davatzikos, C.; Prince, J. Brain image registration based on curve mapping. In Proceedings of the IEEE Workshop on Biomedical Image Analysis, Seattle, WA, USA, 24–25 June 1994; pp. 245–254. [Google Scholar]

- Carmichael, O.T.; Aizenstein, H.J.; Davis, S.W.; Becker, J.T.; Thompson, P.M.; Meltzer, C.C.; Liu, Y. Atlas-based hippocampus segmentation in Alzheimer’s disease and mild cognitive impairment. NeuroImage 2005, 27, 979–990. [Google Scholar] [CrossRef] [Green Version]

- Wang, L.; Gao, Y.; Shi, F.; Li, G.; Gilmore, J.; Lin, W.; Shen, D. Links: Learning-based multi-source integration framework for segmentation of infant brain images. NeuroImage 2014, 108, 734–746. [Google Scholar]

- Chiţǎ, S.M.; Benders, M.; Moeskops, P.; Kersbergen, K.J.; Viergever, M.A.; Išgum, I. Automatic segmentation of the preterm neonatal brain with MRI using supervised classification. SPIE Med. Imaging 2013, 8669, 86693. [Google Scholar]

- Cuingnet, R.; Gerardin, E.; Tessieras, J.; Auzias, G.; Lehéricy, S.; Habert, M.-O.; Chupin, M.; Benali, H.; Colliot, O. Automatic classification of patients with Alzheimer’s disease from structural MRI: A comparison of ten methods using the ADNI database. NeuroImage 2011, 56, 766–781. [Google Scholar] [CrossRef] [Green Version]

- Wolz, R.; Julkunen, V.; Koikkalainen, J.; Niskanen, E.; Zhang, D.P.; Rueckert, D.; Soininen, H.; Lötjönen, J.M.P.; The Alzheimer’s Disease Neuroimaging Initiative. Multi-Method Analysis of MRI Images in Early Diagnostics of Alzheimer’s Disease. PLoS ONE 2011, 6, e25446. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Braak, H.; Braak, E. Staging of Alzheimer’s disease-related neurofibrillary changes. Neurobiol. Aging 1995, 16, 271–278. [Google Scholar] [CrossRef]

- Dubois, B.; Feldman, H.H.; Jacova, C.; DeKosky, S.T.; Barberger-Gateau, P.; Cummings, J.; Delacourte, A.; Galasko, U.; Gauthier, S.; Jicha, G.; et al. Research criteria for the diagnosis of Alzheimer’s disease: Revising the NINCDS–ADRDA criteria. Lancet Neurol. 2007, 6, 734–746. [Google Scholar] [CrossRef]

- Maryam, H.; Bashir, B.; Jamshid, D.; Tim, E. Segmentation of the Hippocampus for Detection of Alzheimer’s Disease, Advances in Visual Computing; Springer: Berlin/Heidelberg, Germany, 2012; pp. 42–50. [Google Scholar]

- Claude, I.; Daire, J.-L.; Sebag, G. Fetal Brain MRI: Segmentation and Biometric Analysis of the Posterior Fossa. IEEE Trans. Biomed. Eng. 2004, 51, 617–626. [Google Scholar] [CrossRef] [PubMed]

- Wang, Y.; Zhu, Y.; Gao, W.; Xu, H.; Lin, Z. The measurement of the volume of stereotaxic MRI hippocampal formation applying the region growth algorithm based on seeds. In Proceedings of the IEEE/ICME International Conference on Complex Medical Engineering, Harbin, China, 22–25 May 2011. [Google Scholar]

- Tan, P. Hippocampus Region Segmentation for Alzheimer’s Disease Detection; Technische Universiteit Eindhoven: Eindhoven, The Netherlands, 2009. [Google Scholar]

- Bao, S.; Chung, A. Multi-scale structured cnn with label consistency for brain MR image segmentation. Comput. Methods Biomech. Biomed. Eng. Imaging Vis. 2016, 6, 1–5. [Google Scholar] [CrossRef]

- Dolz, J.; Desrosiers, C.; Ben Ayed, I. 3D fully convolutional networks for subcortical segmentation in MRI: A large-scale study. NeuroImage 2018, 170, 456–470. [Google Scholar] [CrossRef] [Green Version]

- Wachinger, C.; Reuter, M.; Klein, T. DeepNAT: Deep convolutional neural network for segmenting neuroanatomy. NeuroImage 2018, 170, 434–445. [Google Scholar] [CrossRef]

- Khagi, B.; Kwon, G.; Lama, R. Comparative analysis of Alzheimer’s disease classification by CDR level using CNN, feature selection, and machine-learning techniques. Int. J. Imaging Syst. Technol. 2019, 29, 297–310. [Google Scholar] [CrossRef]

- Bernal, J.; Kushibar, K.; Cabezas, M.; Valverde, S.; Oliver, A.; Llado, X. Quantitative Analysis of Patch-Based Fully Convolutional Neural Networks for Tissue Segmentation on Brain Magnetic Resonance Imaging. IEEE Access 2019, 7, 89986–90002. [Google Scholar] [CrossRef]

- Jiong, W.; Zhang, Y.; Wang, K.; Tang, X. Skip Connection U-Net for White Matter Hyperintensities Segmentation from MRI. IEEE Access 2019, 7, 155194–155202. [Google Scholar] [CrossRef]

- Chen, C.-C.C.; Chai, J.-W.; Chen, H.-C.; Wang, H.C.; Chang, Y.-C.; Wu, Y.-Y.; Chen, W.-H.; Chen, H.-M.; Lee, S.-K.; Chang, C.-I. An Iterative Mixed Pixel Classification for Brain Tissues and White Matter Hyperintensity in Magnetic Resonance Imaging. IEEE Access 2019, 7, 124674–124687. [Google Scholar] [CrossRef]

- Pengcheng, L.; Zhao, Y.; Liu, Y.; Chen, Q.; Liu, F.; Gao, C. Temporally Consistent Segmentation of Brain Tissue from Longitudinal MR Data. IEEE Access 2020, 8, 3285–3293. [Google Scholar] [CrossRef]

- Pulkit, K.; Pravin, N.; Chetan, A. U-SegNet: Fully Convolutional Neural Network based Automated Brain tissue segmentation Tool. In Proceedings of the 2018 25th IEEE International Conference on Image Processing, Athens, Greece, 7–10 October 2018. [Google Scholar]

- Wortmann, M. World Alzheimer’s report 2014: Dementia and risk reduction. Alzheimer’s Dement. 2015, 11, 837. [Google Scholar] [CrossRef]

- Morra, J.H.; Tu, Z.; Apostolova, L.G.; Green, A.E.; Toga, A.W.; Thompson, P.M. Comparison of AdaBoost and Support Vector Machines for Detecting Alzheimer’s Disease Through Automated Hippocampal Segmentation. IEEE Trans. Med. Imaging 2009, 29, 30–43. [Google Scholar] [CrossRef] [Green Version]

- Brookmeyer, R.; Johnson, E.; Ziegler-Graham, K.; Arrighi, H.M. Forecasting the global burden of Alzheimer’s disease. Alzheimer’s Dement. 2007, 3, 186–191. [Google Scholar] [CrossRef] [Green Version]

- Siqi, L.; Liu, S.; Cai, W.; Pujol, S.; Kikinis, R.; Feng, D.D. Early diagnosis of Alzheimer’s disease with deep learning. In Proceedings of the 2014 IEEE 11th International Symposium on Biomedical Imaging (ISBI), Beijing, China, 29 April–2 May 2014; pp. 1015–1018. [Google Scholar]

- Suk, H.; Lee, S.; Shen, D. Hierarchical feature representation and multimodal fusion with deep learning for AD-MCI diagnosis. NeuroImage 2014, 101, 569–582. [Google Scholar] [CrossRef] [Green Version]

- Payan, A.; Montana, G. Predicting Alzheimer’s disease: A neuroimaging study with 3D convolutional neural networks. In Proceedings of the ICPRAM 2015-4th International Conference on Pattern Recognition Applications and Methods, Lisbon, Portugal, 10–12 January 2015. [Google Scholar]

- Andres, O.; Munilla, J.; Gorriz, J.; Ramirez, J. Ensembles of Deep Learning Architectures for the Early Diagnosis of the Alzheimer’s Disease. Int. J. Neural Syst. 2016, 26, 1650025. [Google Scholar] [CrossRef]

- Hosseini, E.; Gimel’farb, G.; El-Baz, A. Alzheimer’s disease diagnostics by a deeply supervised adaptable 3D convolutional network, Computational and Mathematical Methods in Medicine. arXiv 2016, arXiv:1607.00556. [Google Scholar]

- Sarraf, S.; Tofighi, G. Classification of Alzheimer’s disease using fMRI data and deep learning convolutional neural networks. arXiv 2016, arXiv:1603.08631. [Google Scholar]

- Liu, M.; Zhang, J.; Adeli, E.; Shen, D. Landmark-based deep multi-instance learning for brain disease diagnosis. Med. Image Anal. 2017, 43, 157–168. [Google Scholar] [CrossRef]

- Aderghal, K.; Benois-Pineau, J.; Karim, A.; Gwenaelle, C. Fuseme: Classification of sMRI images by fusion of deep CNNs in 2D+E projections. In Proceedings of the 15th International Workshop on Content-Based Multimedia Indexing, Florence, Italy, 19–21 June 2017. [Google Scholar]

- Shi, J.; Zheng, X.; Li, Y.; Zhang, Q.; Ying, S. Multimodal Neuroimaging Feature Learning with Multimodal Stacked Deep Polynomial Networks for Diagnosis of Alzheimer’s Disease. IEEE J. Biomed. Health Inform. 2018, 22, 173–183. [Google Scholar] [CrossRef] [PubMed]

- Korolev, S.; Safiullin, A.; Belyaev, M.; Dodonova, Y. Residual and plain convolutional neural networks for 3D brain MRI classification. In Proceedings of the 2017 IEEE 14th International Symposium on Biomedical Imaging (ISBI 2017), Melbourne, Australia, 18–21 April 2017. [Google Scholar]

- Jyoti, I.; Zhang, Y. Brain MRI analysis for Alzheimer’s disease diagnosis using an ensemble system of deep convolutional neural networks. Brain Inform. 2018, 5, 2. [Google Scholar] [CrossRef]

- Lu, D.; Popuri, K.; Ding, W.; Balachandar, R.; Faisal, M. Multimodal and multiscale deep neural networks for the early diagnosis of Alzheimer’s disease using structural MRI and FDG-PET images. Sci. Rep. 2017, 8, 1–13. [Google Scholar]

- Khvostikov, A.; Aderghal, K.; Benois-Pineau, J.; Krylov, A.; Catheline, G. 3D CNN-based classification using sMRI and md-DTI images for Alzheimer disease studies. arXiv 2018, arXiv:1801.05968. [Google Scholar]

- Aderghal, K.; Khvostikov, A.; Krylov, A.; Benois-Pineau, J.; Afdel, K.; Catheline, G. Classification of Alzheimer Disease on Imaging Modalities with Deep CNNs Using Cross-Modal Transfer Learning. In Proceedings of the 2018 IEEE 31st International Symposium on Computer-Based Medical Systems (CBMS), Karlstad, Sweden, 18–21 June 2018. [Google Scholar]

- Lian, C.; Liu, M.; Zhang, J.; Shen, D. Hierarchical Fully Convolutional Network for Joint Atrophy Localization and Alzheimer’s Disease Diagnosis Using Structural MRI. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 880–893. [Google Scholar] [CrossRef]

- Liu, M.; Cheng, D.; Wang, K.; Wang, Y.; The Alzheimer’s Disease Neuroimaging Initiative. Multi-Modality Cascaded Convolutional Neural Networks for Alzheimer’s Disease Diagnosis. Neuroinformatics 2018, 16, 295–308. [Google Scholar] [CrossRef]

- Lee, B.; Ellahi, W.; Choi, J.Y. Using Deep CNN with Data Permutation Scheme for Classification of Alzheimer’s Disease in Structural Magnetic Resonance Imaging (sMRI). IEICE Trans. Inf. Syst. 2019, E102, 1384–1395. [Google Scholar] [CrossRef] [Green Version]

- Feng, C.; ElAzab, A.; Yang, P.; Wang, T.; Zhou, F.; Hu, H.; Xiao, X.; Lei, B. Deep Learning Framework for Alzheimer’s Disease Diagnosis via 3D-CNN and FSBi-LSTM. IEEE Access 2019, 7, 63605–63618. [Google Scholar] [CrossRef]

- Mefraz, K.; Abraham, N.; Hon, M. Transfer Learning with Intelligent Training Data Selection for Prediction of Alzheimer’s Disease. IEEE Access 2019, 7, 72726–72735. [Google Scholar] [CrossRef]

- Ruoxuan, C.; Liu, M. Hippocampus Analysis by Combination of 3-D DenseNet and Shapes for Alzheimer’s Disease Diagnosis. IEEE J. Biomed. Health Inform. 2019, 23, 2099–2107. [Google Scholar] [CrossRef]

- Ahmed, S.; Choi, K.Y.; Lee, J.J.; Kim, B.C.; Kwon, G.-R.; Lee, K.H.; Jung, H.Y.; Goo-Rak, K. Ensembles of Patch-Based Classifiers for Diagnosis of Alzheimer Diseases. IEEE Access 2019, 7, 73373–73383. [Google Scholar] [CrossRef]

- Fang, X.; Liu, Z.; Xu, M. Ensemble of deep convolutional neural networks based multi-modality images for Alzheimer’s disease diagnosis. IET Image Process. 2020, 14, 318–326. [Google Scholar] [CrossRef]

- Kam, T.-E.; Zhang, H.; Jiao, Z.; Shen, D. Deep Learning of Static and Dynamic Brain Functional Networks for Early MCI Detection. IEEE Trans. Med. Imaging 2020, 39, 478–487. [Google Scholar] [CrossRef] [PubMed]

- Shi, Y.; Suk, H.-I.; Gao, Y.; Lee, S.-W.; Shen, D. Leveraging Coupled Interaction for Multimodal Alzheimer’s Disease Diagnosis. IEEE Trans. Neural Networks Learn. Syst. 2020, 31, 186–200. [Google Scholar] [CrossRef]

- Di Paola, M.; Di Iulio, F.; Cherubini, A.; Blundo, C.; Casini, A.R.; Sancesario, G.; Passafiume, D.; Caltagirone, C.; Spalletta, G. When, where, and how the corpus callosum changes in MCI and AD: A multimodal MRI study. Neurology 2010, 74, 1136–1142. [Google Scholar] [CrossRef] [PubMed]

- Liu, S.; Liu, S.; Cai, W.; Che, H.; Pujol, S.; Kikinis, R.; Feng, D.; Fulham, M.; ADNI. Multimodal neuroimaging feature learning for multiclass diagnosis of Alzheimer’s disease. IEEE Trans. Biomed. Eng. 2014, 62, 1132–1140. [Google Scholar] [CrossRef] [Green Version]

- Loveman, E.; Green, C.; Fleming, J.; Takeda, A.; Picot, J.; Payne, E.; Clegg, A. The Clinical and Cost-effectiveness of Donepezil, Rivastigmine, Galantamine and Memantine for Alzheimer’s Disease; Health Technology Assessment: Winchester, UK, 2006. [Google Scholar]

- Braak, H.; Braak, E. Neuropathological staging of Alzheimer-related changes. Acta Neuropath. Appl. Neurobiol. 1991, 14, 39–44. [Google Scholar] [CrossRef]

- Fox, N.C.; Warrington, E.K.; Freeborough, P.; Hartikainen, P.; Kennedy, A.M.; Stevens, J.M.; Rossor, M. Presymptomatic hippocampal atrophy in Alzheimer’s disease. A longitudinal MRI study. Brain 1996, 119, 2001–2007. [Google Scholar] [CrossRef]

- Rose, S. Loss of connectivity in Alzheimer’s disease: An evaluation of white matter tract integrity with color coded MR diffusion tensor imaging. J. Neurol. Neurosurg. Psychiatry 2000, 69, 528–530. [Google Scholar] [CrossRef] [Green Version]

- Petersen, R.; Doody, R.; Kurz, A.; Mohs, R.; Morris, J.; Rabins, P.; Ritchie, K.; Rossor, M.; Thal, L.; Winblad, B. Current concepts in mild cognitive impairment. Arch. Neurol. 2002, 58, 1985–1992. [Google Scholar] [CrossRef]

- Perri, R.; Serra, L.; Carlesimo, G.; Caltagirone, C. Preclinical dementia: An Italian multicenter study on amnestic mild cognitive impairment. Dement. Geriatr. Cogn. Disord. 2007, 23, 289–300. [Google Scholar] [CrossRef] [PubMed]

- Bozzali, M.; Franceschi, M.; Falini, A.; Pontesilli, S.; Cercignani, M.; Magnani, G.; Scotti, G.; Comi, G.; Filippi, M. Quantification of tissue damage in AD using diffusion tensor and magnetization transfer MRI. Neurology 2001, 57, 1135–1137. [Google Scholar] [CrossRef] [PubMed]

- Bozzali, M.; Falini, A.; Franceschi, M.; Cercignani, M.; Zuffi, M.; Scotti, G.; Comi, G.; Filippi, M. White matter damage in Alzheimer’s disease assessed in vivo using diffusion tensor magnetic resonance imaging. J. Neurol. Neurosurg. Psychiatry 2002, 72, 742–746. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Kloppel, S.; Stonnington, C.; Chu, C.; Draganski, B.; Scahill, R.; Rohrer, J.; Fox, N.; Jack, C.; Ashburner, J.; Frackowiak, R. Automatic classification of MR scans in Alzheimer’s disease. Brain 2008, 131, 681–689. [Google Scholar] [CrossRef]

- Jack, C.R.; Wiste, H.J.; Vemuri, P.; Weigand, S.D.; Senjem, M.L.; Zeng, G.; Bernstein, M.A.; Gunter, J.L.; Pankratz, V.S.; Aisen, P.S.; et al. Brain beta-amyloid measures and magnetic resonance imaging atrophy both predict time-to-progression from mild cognitive impairment to Alzheimer’s disease. Brain 2010, 133, 3336–3348. [Google Scholar] [CrossRef]

- Klein-Koerkamp, Y.; Heckemann, R.; Baraly, K.; Moreaud, O.; Keignart, S.; Krainik, A.; Hammers, A.; Baciu, M.; Hot, P. Amygdalar atrophy in early Alzheimer’s disease. Curr. Alzheimer Res. 2014, 11, 239–252. [Google Scholar] [CrossRef] [Green Version]

- Colliot, O.; Chételat, G.; Chupin, M.; Desgranges, B.; Magnin, B.; Benali, H.; Dubois, B.; Garnero, L.; Eustache, F.; Lehéricy, S. Discrimination between Alzheimer Disease, Mild Cognitive Impairment, and Normal Aging by Using Automated Segmentation of the Hippocampus. Radiology 2008, 248, 194–201. [Google Scholar] [CrossRef] [Green Version]

- Dickerson, B.; Goncharova, I.; Sullivan, M.; Forchetti, C.; Wilson, R.; Bennett, D.; Beckett, L.; Morrell, L. MRI-derived entorhinal and hippocampal atrophy in incipient and very mild Alzheimer’s disease. Neurobiol. Aging 2001, 22, 747–754. [Google Scholar] [CrossRef]

- Wang, L.; Swank, J.; Glick, I.; Gado, M.; Miller, M.; Morris, J.; Csernansky, J. Changes in hippocampal volume and shape across time distinguish dementia of the Alzheimer’s type from healthy aging. NeuroImage 2003, 20, 667–682. [Google Scholar] [CrossRef]

- Barnes, J.; Foster, J.; Boyes, R.; Pepple, T.; Moore, E.; Schott, J.; Frost, C.; Scahill, R.; Fox, N. A comparison of methods for the automated calculation of volumes and atrophy rates in the hippocampus. NeuroImage 2008, 40, 1655–1671. [Google Scholar] [CrossRef]

- Wolz, R.; Heckemann, R.A.; Aljabar, P.; Hajnal, J.V.; Hammers, A.; Lötjönen, J.M.P.; Rueckert, D. Measurement of hippocampal atrophy using 4D graph-cut segmentation: Application to ADNI. NeuroImage 2010, 52, 109–118. [Google Scholar] [CrossRef] [PubMed]

- Leung, K.K.; Barnes, J.; Ridgway, G.; Bartlett, J.; Clarkson, M.J.; Macdonald, K.; Schuff, N.; Fox, N.; Ourselin, S.; The Alzheimer’s Disease Neuroimaging Initiative. Automated cross-sectional and longitudinal hippocampal volume measurement in mild cognitive impairment and Alzheimer’s disease. NeuroImage 2010, 51, 1345–1359. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Shen, D.; Moffat, S.; Resnick, S.M.; Davatzikos, C. Measuring Size and Shape of the Hippocampus in MR Images Using a Deformable Shape Model. NeuroImage 2002, 15, 422–434. [Google Scholar] [CrossRef] [Green Version]

- Chupin, M.; Gerardin, E.; Cuingnet, R.; Boutet, C.; Lemieux, L.; Lehéricy, S.; Benali, H.; Garnero, L.; Colliot, O.; The Alzheimer’s Disease Neuroimaging Initiative. Fully automatic hippocampus segmentation and classification in Alzheimer’s disease and mild cognitive impairment applied on data from ADNI. Hippocampus 2009, 19, 579–587. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Lötjönen, J.M.P.; Wolz, R.; Koikkalainen, J.; Thurfjell, L.; Waldemar, G.; Soininen, H.; Rueckert, D.; The Alzheimer’s Disease Neuroimaging Initiative. Fast and robust multi-atlas segmentation of brain magnetic resonance images. NeuroImage 2010, 49, 2352–2365. [Google Scholar] [CrossRef] [PubMed]

- Wolz, R.; Aljabar, P.; Hajnal, J.V.; Hammers, A.; Rueckert, D.; The Alzheimer’s Disease Neuroimaging Initiative. LEAP: Learning embeddings for atlas propagation. NeuroImage 2009, 49, 1316–1325. [Google Scholar] [CrossRef] [Green Version]

- Coupe, P.; Eskildsen, S.F.; Manjón, J.V.; Fonov, V.S.; Pruessner, J.C.; Allard, M.; Collins, D.L.; The Alzheimer’s Disease Neuroimaging Initiative. Scoring by nonlocal image patch estimator for early detection of Alzheimer’s disease. NeuroImage Clin. 2012, 1, 141–152. [Google Scholar] [CrossRef] [PubMed]

- Tong, T.; Wolz, R.; Gao, Q.; Hajnal, J.; Rueckert, D. Multiple instance learning for classification of dementia in brain MRI. Med. Image Anal. 2013, 16, 599–606. [Google Scholar]

- Davatzikos, C.; Bhatt, P.; Shaw, L.; Batmanghelich, K.; Trojanowski, J. Prediction of MCI to AD conversion, via MRI, CSF biomarkers, and pattern classification. Neurobiol. Aging 2011, 32, 2322.e19–2322.e27. [Google Scholar] [CrossRef] [Green Version]

- Eskildsen, S.F.; Coupe, P.; García-Lorenzo, D.; Fonov, V.S.; Pruessner, J.C.; Collins, D.L.; The Alzheimer’s Disease Neuroimaging Initiative. Prediction of Alzheimer’s disease in subjects with mild cognitive impairment from the ADNI cohort using patterns of cortical thinning. NeuroImage 2012, 65, 511–521. [Google Scholar] [CrossRef] [Green Version]

- Ashburner, J.; Friston, K. Voxel-based morphometry-the methods. NeuroImage 2000, 11, 805–821. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Ashburner, J.; Friston, K.J. Nonlinear spatial normalization using basis functions. Hum. Brain Mapp. 1999, 7, 254–266. [Google Scholar] [CrossRef]

- Koikkalainen, J.; Lötjönen, J.; Thurfjell, L.; Rueckert, D.; Waldemar, G.; Soininen, H.; The Alzheimer’s Disease Neuroimaging Initiative. Multi-template tensor-based morphometry: Application to analysis of Alzheimer’s disease. NeuroImage 2011, 56, 1134–1144. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Good, C.D.; Johnsrude, I.S.; Ashburner, J.; Henson, R.N.; Friston, K.J.; Frackowiak, R. A Voxel-Based Morphometric Study of Ageing in 465 Normal Adult Human Brains. NeuroImage 2001, 14, 21–36. [Google Scholar] [CrossRef] [Green Version]

- Bozzali, M.; Filippi, M.; Magnani, G.; Cercignani, M.; Franceschi, M.; Schiatti, E.; Falini, A. The contribution of voxel-based morphometry in staging patients with mild cognitive impairment. Neurology 2006, 67, 453–460. [Google Scholar] [CrossRef]

- Chételat, G.; Landeau, B.; Eustache, F.; Mézenge, F.; Viader, F.; De La Sayette, V.; Desgranges, B.; Baron, J.C. Using voxel-based morphometry to map the structural changes associated with rapid conversion in MCI: A longitudinal MRI study. NeuroImage 2005, 27, 934–946. [Google Scholar] [CrossRef]

- Kinkingnéhun, S.; Sarazin, M.; Lehericy, S.; Guichart-Gomez, E.; Hergueta, T.; Dubois, B. VBM anticipates the rate of progression of Alzheimer disease: A 3-year longitudinal study. Neurology 2008, 70, 2201–2211. [Google Scholar] [CrossRef]

- Serra, L.; Cercignani, M.; Lenzi, D.; Perri, R.; Fadda, L.; Caltagirone, C.; Macaluso, E.; Bozzali, M. Grey and White Matter Changes at Different Stages of Alzheimer’s Disease. J. Alzheimer’s Dis. 2010, 19, 147–159. [Google Scholar] [CrossRef]

- Yang, X.; Tan, M.Z.; Qiu, A. CSF and Brain Structural Imaging Markers of the Alzheimer’s Pathological Cascade. PLoS ONE 2012, 7, e47406. [Google Scholar] [CrossRef] [Green Version]

- Liu, M.; Li, F.; Yan, H.; Wang, K.; Ma, Y.; Shen, L.; Xu, M. A multi-model deep convolutional neural network for automatic hippocampus segmentation and classification in Alzheimer’s disease. NeuroImage 2020, 208, 116459. [Google Scholar] [CrossRef]

- Cao, L.; Li, L.; Zheng, J.; Fan, X.; Yin, F.; Shen, H.; Zhang, J. Multi-task neural networks for joint hippocampus segmentation and clinical score regression. Multimedia Tools Appl. 2018, 77, 29669–29686. [Google Scholar] [CrossRef]

- Choi, H.; Jin, K. Predicting cognitive decline with deep learning of brain metabolism and amyloid imaging. Behav. Brain Res. 2018, 344, 103–109. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Vansteenkiste, E. Quantitative Analysis of Ultrasound Images of the Preterm Brain. Ph.D. Thesis, Ghent University, Ghent, Belgium, 2007. [Google Scholar]

- Carass, A.; Roy, S.; Jog, A.; Cuzzocreo, J. Longitudinal multiple sclerosis lesion segmentation: Resource and challenge. NeuroImage 2017, 148, 72–102. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Dice, L.R. Measures of the Amount of Ecologic Association between Species. Ecology 1945, 26, 297–302. [Google Scholar] [CrossRef]

- Crum, W.; Camara, O.; Hill, D.L. Generalized overlap measures for evaluation and validation in medical image analysis. IEEE Trans. Med. Imaging 2006, 25, 1451–1461. [Google Scholar] [CrossRef]

- Rathore, S.; Habes, M.; Iftikhar, M.A.; Shacklett, A.; Davatzikos, C. A review on neuroimaging-based classification studies and associated feature extraction methods for Alzheimer’s disease and its prodromal stages. NeuroImage 2017, 155, 530–548. [Google Scholar] [CrossRef]

- Hinrichs, C.; Singh, V.; Mukherjee, L.; Xu, G.; Chung, M.K.; Johnson, S.C.; The Alzheimer’s Disease Neuroimaging Initiative. Spatially augmented LPboosting for AD classification with evaluations on the ADNI dataset. NeuroImage 2009, 48, 138–149. [Google Scholar] [CrossRef] [Green Version]

- Salvatore, C.; Cerasa, A.; Battista, P.; Gilardi, M.C.; Quattrone, A.; Castiglioni, I. Magnetic resonance imaging biomarkers for the early diagnosis of Alzheimer’s disease: A machine learning approach. Front. Mol. Neurosci. 2015, 9, 270. [Google Scholar] [CrossRef] [Green Version]

- Kleesiek, J.; Urban, G.; Hubert, A.; Schwarz, D.; Maier-Hein, K.; Bendszus, M.; Biller, A. Deep MRI brain extraction: A 3D convolutional neural network for skull stripping. NeuroImage 2016, 129, 460–469. [Google Scholar] [CrossRef]

- Islam, J.; Zhang, Y. An Ensemble of Deep Convolutional Neural Networks for Alzheimer’s Disease Detection and Classification. In Proceedings of the 31st Conference on Neural Information Processing System (NIPS), Long Beach, CA, USA, 4–9 December 2017. [Google Scholar]

- Lavin, A.; Gray, S. Fast algorithms for convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015. [Google Scholar]

- Vemuri, P.; Gunter, J.L.; Senjem, M.; Whitwell, J.L.; Kantarci, K.; Knopman, D.S.; Boeve, B.F.; Petersen, R.C.; Jack, C.R. Alzheimer’s disease diagnosis in individual subjects using structural MR images: Validation studies. NeuroImage 2007, 39, 1186–1197. [Google Scholar] [CrossRef] [Green Version]

- Paine, T.; Jin, H.; Yang, J.; Lin, Z.; Huang, T. GPU asynchronous stochastic gradient descent to speed up neural network training. arXiv 2013, arXiv:1312.6186. [Google Scholar]

- Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; Van Der Laak, J.A.; Van Ginneken, B.; Sánchez, C.I. A survey on deep learning in medical image analysis. Med. Image Anal. 2017, 42, 60–88. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Jia, Y.; Shelhamer, E.; Donahue, J.; Karayev, S.; Long, J.; Girshick, R.; Guadarrama, S.; Darrell, T. Caffe: Convolutional architecture for fast feature embedding. In Proceedings of the MM 2014-Proceedings of the 2014 ACM Conference on Multimedia, New York, NY, USA, 3–7 November 2014. [Google Scholar]

- Collobert, R.; Kavukcuoglu, K.; Farabet, C. Torch7: A Matlab-Like Environment for Machine Learning. In Proceedings of the NIPS 2011 Workshop on Algorithms, Systems, and Tools for Learning at Scale, Sierra Nevada, Spain, 16–17 December 2011. [Google Scholar]

- Abadi, M.; Agarwal, A.; Barham, P.; Brevdo, E. TensorFlow: Large-scale machine learning on heterogeneous distributed systems. arXiv 2015, arXiv:1603.04467. [Google Scholar]

- Bastien, F.; Lamblin, P.; Pascanu, R.; Bergstra, J.; Goodfellow, I.; Bergeron, A.; Bouchard, N.; Warde-Farley, D.; Bengio, Y. Theano: New features and speed improvements. arXiv 2012, arXiv:1211.5590. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Delving deep into rectifiers: Surpassing human-level performance on imagenet classification. In Proceedings of the IEEE International Conference on Computer Vision (ICCV 2015), Santiago, Chile, 7–13 December 2015. [Google Scholar]

- Cho, J.; Lee, K.; Shin, E.; Choy, G.; Do, S. How much data is needed to train a medical image deep learning system to achieve necessary high accuracy. arXiv 2015, arXiv:1511.06348. [Google Scholar]

- Lekadir, K.; Galimzianova, A.; Betriu, A.; Vila, M.D.M.; Igual, L.; Rubin, D.L.; Fernandez, E.; Radeva, P.; Napel, S. A Convolutional Neural Network for Automatic Characterization of Plaque Composition in Carotid Ultrasound. IEEE J. Biomed. Health Inform. 2017, 21, 48–55. [Google Scholar] [CrossRef] [Green Version]

| Dataset | Class | # of Subjects | Sex: M | Sex: F | Age: Mean | Age: Std | MMSE: Mean | MMSE: Std | # of MRI Scans |
|---|---|---|---|---|---|---|---|---|---|
| OASIS | AD | 100 | 41 | 59 | 76.76 | 7.11 | 24.32 | 4.16 | 100 |
| OASIS | HC | 316 | 119 | 197 | 45.09 | 23.11 | 29.63 | 0.83 | 316 |
| ADNI | AD | 192 | 101 | 91 | 75.3 | 7.5 | 23.3 | 2.1 | 530 |
| ADNI | MCI | 398 | 257 | 141 | 74.7 | 7.4 | 27.0 | 1.8 | 1126 |
| ADNI | HC | 229 | 120 | 109 | 75.8 | 5.0 | 29.1 | 1.0 | 877 |
| IBSR | HC | 18 | 14 | 4 | 71 | - | - | - | 18 |
| MICCAI | HC | 35 | - | - | - | - | - | - | 35 |

| Strategies | Authors | Description |

|---|---|---|

| Semantic-wise | Dong [38] Brosch [39] Shakeri [40] Zhenglun [41] Milletari [42] Raghav [43] | The main objective of the semantic-wise segmentation is to link each pixel of an image with its class label. It is called dense prediction because every pixel is predicted from the whole input image. Later, segmentation labels are mapped with the input image in a way that minimizes the loss function. |

| Patch-wise | Kamnitsas [44] Pereira [45] Havaei [46] Zhang [35] Brebisson [36] Moeskops [37] | Patch-wise segmentation handles high-resolution images by splitting the input image into local patches. An N×N patch is extracted from the input image. The network is trained on these patches and provides class labels to identify normal or abnormal brain images. The network design consists of convolution layers, transfer functions, pooling and sub-sampling layers, and fully connected layers. |

| Cascaded | Dou [47] | The cascaded architecture types are used to combine two different CNN architectures. The output of the first architecture is fed into the second architecture to get classification results. The first architecture is used to train the model with the initial prediction of class labels, and later for fine-tuning. |

| Single-modality | Moeskops [37] Brebisson [36] Raghav [43] Milletari [42] Shakeri [40] | This type of modality refers to single-source information and is adaptable to different scenarios. A single modality is commonly used in the public datasets for tissue-type segmentation in brain MRI (mainly T1-W images). |

| Multi-modality | Zhang [35] Chen [48] Lyksborg [49] | Multi-source information can be used, and it might require a larger number of parameters than using a single modality. The advantage of using multi-modality is to gain valuable contrast information. Furthermore, using multi-path configurations, the imaging sequences can be processed in parallel (e.g., T1 and T2, fluid-attenuated inversion recovery (FLAIR)). |
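The patch-wise strategy described in the table above starts by cutting the input into N×N patches. The following is a minimal sketch of that step only, assuming a 2D NumPy array as a stand-in for an MRI slice. The function name `extract_patches` and the `stride` parameter are illustrative choices and are not taken from any of the cited works.

```python
import numpy as np


def extract_patches(image, patch_size, stride):
    """Cut a 2D slice into square patches of side `patch_size`.

    `stride` (an assumption of this sketch) controls how far the window
    moves between patches; stride < patch_size gives overlapping patches.
    """
    height, width = image.shape
    patches = []
    for top in range(0, height - patch_size + 1, stride):
        for left in range(0, width - patch_size + 1, stride):
            patches.append(image[top:top + patch_size, left:left + patch_size])
    # Result has shape (number_of_patches, patch_size, patch_size).
    return np.stack(patches)


# Toy 8x8 array standing in for one MRI slice.
slice_2d = np.arange(64, dtype=np.float32).reshape(8, 8)
patches = extract_patches(slice_2d, patch_size=4, stride=2)
print(patches.shape)
```

In a real pipeline each patch would be paired with the label of its centre voxel (or a label patch) before being passed to the network; that pairing is omitted here.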

| No. | Authors | Year | Strategies | Dimension | Key Method | Classifier | Dataset |

|---|---|---|---|---|---|---|---|

| 1 | Zhang [35] | 2015 | Patch-wise | 2D | CNN | Soft-max | Clinical data |

| 2 | Brebisson [36] | 2015 | Patch-wise | 2D/3D | CNN | Soft-max | MICCAI 2012 |

| 3 | Moeskops [37] | 2016 | Patch-wise | 2D/3D | CNN | Soft-max | NeoBrainS12 |

| 4 | Bao [69] | 2016 | Patch-wise | 2D | CNN | Soft-max | IBSR/LPBA40 |

| 5 | Dong [38] | 2016 | Semantic-wise | 2D | CNN | Soft-max | Clinical data |

| 6 | Shakeri [40] | 2016 | Semantic-wise | 2D | FCNN | Soft-max | IBSR data |

| 7 | Raghav [43] | 2017 | Semantic-wise | 2D/3D | M-Net + CNN | Soft-max | IBSR/ MICCAI 2012 |

| 8 | Milletari [42] | 2017 | Semantic-wise | 2D/3D | Hough-CNN | Soft-max | MICCAI 2012 |

| 9 | Dolz [70] | 2018 | Semantic-wise | 3D | CNN | Soft-max | IBSR/ABIDE |

| 10 | Wachinger [71] | 2018 | Patch-Based | 3D | CNN | Soft-max | MICCAI 2012 |

| 11 | Zhenglun [41] | 2018 | Semantic-wise | 2D | Wavelet + CNN | Soft-max | Clinical data |

| 12 | Khagi [72] | 2018 | Semantic-wise | 2D | SegNet + CNN | Soft-max | OASIS Dataset |

| 13 | Bernal [73] | 2019 | Patch-Based | 2D/3D | FCNN | Soft-max | IBSR, MICCAI2012 & iSeg2017 |

| 14 | Jiong [74] | 2019 | Semantic-wise | 2D | U-net | Soft-max | MICCAI2017 |

| 15 | Chen [75] | 2019 | Semantic-wise | 2D | LCMV | - | BrainWeb |

| 16 | Pengcheng [76] | 2020 | Semantic-wise | 3D/4D | Fuzzy C-mean | - | BLSA |

| Authors | Methods | Application: Key Features |

|---|---|---|

| Zhang [35] | CNN | Tissue segmentation: multi-modal 2D segmentation for isointense brain tissues using the deep CNN architecture. |

| Brebisson [36] | CNN | Anatomical segmentation: fusing multi-scale 2D patches with a 3D patch using a CNN. |

| Moeskops [37] | CNN | Tissue segmentation: CNN trained on multiple patches and kernel sizes to extract information from each voxel. |

| Bao [69] | CNN | Anatomical segmentation: multi-scale late fusion CNN with a random walker as a novel label consistency method. |

| Dong [38] | CNN | Tissue segmentation: FCN with a late fusion method on different modalities. |

| Shakeri [40] | FCNN | Anatomical segmentation: FCN followed by Markov random fields, whose topology corresponds to a volumetric grid. |

| Raghav [43] | M-Net + CNN | Tissue segmentation: the 3D contextual information of a given slice is converted into a 2D slice using CNN. |

| Milletari [42] | Hough-CNN | Anatomical segmentation: Hough-voting to acquire mapping from CNN features to full patch segmentations. |

| Dolz [70] | CNN | Anatomical segmentation: 3D CNN architecture for the segmentation of subcortical MRI brain structure. |

| Wachinger [71] | CNN | Anatomical segmentation: neuroanatomy in T1-W MRI segmentation using deep CNN. |

| Zhenglun [41] | Wavelet + CNN | Tissue segmentation: pre-processing is performed with the wavelet multi-scale transformation, and then, CNN is applied for the segmentation of brain MRI. |

| Bernal [73] | FCNN | Tissue segmentation: the quantitative analysis of patch-based FCNN. |

| Jiong [74] | U-net | Tissue segmentation: skip-connection U-net for WM hyperintensities segmentation. |

| Chen [75] | LCMV | Tissue segmentation: new iterative linearly constrained minimum variance (LCMV) classification-based method developed for hyperspectral classification. |

| Pengcheng [76] | Fuzzy C-mean | Tissue segmentation: fuzzy C-means framework to improve the temporal consistency of adults’ brain tissue segmentation. |

| No. | Authors | Year | Content | Modalities | Key Method | Classifier | Data (Size) |

|---|---|---|---|---|---|---|---|

| 1 | Siqi [81] | 2014 | Full brain | MRI | Auto-encoder | Soft-max | ADNI (311) |

| 2 | Suk [82] | 2015 | Full brain | MRI + PET | CNN | Soft-max | ADNI (204) |

| 3 | Payan [83] | 2015 | Full brain | MRI | CNN | Soft-max | ADNI (755) |

| 4 | Andres [84] | 2016 | Gray matter | MRI + PET | Deep Belief Network | NN | ADNI (818) |

| 5 | Hosseini [85] | 2016 | Full brain | fMRI | CNN | Soft-max | ADNI (210) |

| 6 | Saraf [86] | 2016 | Full brain | fMRI | CNN | Soft-max | ADNI (58) |

| 7 | Mingxia [87] | 2017 | Full brain | MRI | CNN | Soft-max | ADNI (821) |

| 8 | Aderghal [88] | 2017 | Hippocampus | MRI + DTI | CNN | Soft-max | ADNI (1026) |

| 9 | Shi [89] | 2017 | Full brain | MRI + PET | Auto-encoder | Soft-max | ADNI (207) |

| 10 | Korolev [90] | 2017 | Full brain | MRI | CNN | Soft-max | ADNI (821) |

| 11 | Jyoti [91] | 2018 | Full brain | MRI | CNN | Soft-max | OASIS (416) |

| 12 | Donghuan [92] | 2018 | Full brain | MRI | CNN | Soft-max | ADNI (626) |

| 13 | Khvostikov [93] | 2018 | Hippocampus | MRI + DTI | CNN | Soft-max | ADNI (214) |

| 14 | Aderghal [94] | 2018 | Hippocampus | MRI + DTI | CNN | Soft-max | ADNI (815) |

| 15 | Lian [95] | 2018 | Full brain | MRI | FCN | Soft-max | ADNI (821) |

| 16 | Liu [96] | 2018 | Full brain | MRI + PET | CNN | Soft-max | ADNI (397) |

| 17 | Lee [97] | 2019 | Full brain | MRI | CNN | Alex-Net | ADNI (843), OASIS (416) |

| 18 | Feng [98] | 2019 | Full brain | MRI + PET | CNN | Soft-max | ADNI (397) |

| 19 | Mefraz [99] | 2019 | Full brain | MRI | Transfer learning | Soft-max | ADNI (50) |

| 20 | Ruoxuan [100] | 2019 | Hippocampus | MRI | CNN | Soft-max | ADNI (811) |

| 21 | Ahmed [101] | 2019 | Full brain | MRI | CNN | Soft-max | ADNI (352) GARD (326) |

| 22 | Fang [102] | 2020 | Full brain | MRI + PET | CNN | Adaboost | ADNI (352) |

| 23 | Kam [103] | 2020 | Full brain | MRI | CNN | Soft-max | ADNI (352) |

| 24 | Shi [104] | 2020 | Full brain | MRI + PET + CSF | Machine learning | Adaboost | ADNI (202) |

| Authors | Methods | Applications: Key Features |

|---|---|---|

| Siqi [81] | Auto-encoder | AD/HC classification: deep learning architecture contains sparse auto-encoders and a softmax regression layer for the classification of AD |

| Suk [82] | CNN | AD/MCI/HC classification: neuroimaging modalities for latent hierarchical feature representation from extracted patches using CNN |

| Payan [83] | CNN | AD/MCI/HC classification: 3D CNN pre-trained with sparse auto-encoders |

| Andres [84] | Deep Belief Network | AD/HC classification: automated anatomical labeling brain regions for the construction of classification techniques using deep learning architecture |

| Hosseini [85] | CNN | AD/MCI/HC classification: 3D CNN pre-trained with a 3D convolutional auto-encoder on MRI data |

| Saraf [86] | CNN | AD/HC classification: adapted Lenet-5 architecture on fMRI data |

| Mingxia [87] | CNN | AD/MCI/HC classification: landmark-based deep multi-instance learning framework for brain disease diagnosis |

| Aderghal [88] | CNN | AD/HC classification: separate CNN base classifier to form an ensemble of CNNs, each trained with a corresponding plane of MRI brain data |

| Shi [89] | Auto-encoder | AD/MCI/HC classification: multi-modal stacked deep polynomial networks with an SVM classifier on top layer using MRI and PET |

| Korolev [90] | CNN | AD/MCI/HC classification: residual and plain CNNs for 3D brain MRI |

| Jyoti [91] | CNN | AD/HC classification: deep CNN model for resolving an imbalanced dataset to identify AD and recognize the disease stages. |

| Donghuan [92] | CNN | AD/MCI classification: early diagnosis of AD by combining multiple different modalities using multiscale and multimodal deep neural networks. |

| Khvostikov [93] | CNN | AD/HC classification: multi-modality fusion on hippocampal ROI using CNN |

| Aderghal [94] | CNN | AD/HC classification: diffusion tensor imaging modality from MRI using the transfer learning method |

| Lian [95] | FCN | AD/MCI/HC classification: CNN to discriminate the local patches in the brain MRI and multi-scale features are fused to construct hierarchical classification models for the diagnosis of AD. |

| Liu [96] | CNN | AD/MCI/HC classification: CNN to learn multi-level and multimodal features of MRI and PET brain images. |

| Lee [97] | CNN | AD/MCI/HC classification: data permutation scheme for the classification of AD in MRI using deep CNN. |

| Feng [98] | CNN | AD/MCI/HC classification: 3D-CNN designed to extract deep feature representation from both MRI and PET. Fully stacked bidirectional long short-term memory (FSBi-LSTM) applied to the hidden spatial information from deep feature maps to improve the performance. |

| Mefraz [99] | Transfer learning | AD/MCI/HC classification: transfer learning with intelligent training data selection for the prediction of AD and CNN pre-trained with VGG architecture. |

| Ruoxuan [100] | CNN | AD/MCI/HC classification: a new hippocampus analysis method combining the global and local features of the hippocampus by 3D densely connected CNN. |

| Ahmed [101] | CNN | AD/HC classification: ensembles of patch-based classifiers for the diagnosis of AD. |

| Fang [102] | CNN | AD/MCI/HC classification: an ensemble of deep CNNs with multi-modality images for the diagnosis of AD. |

| Kam [103] | CNN | AD/MCI/HC classification: CNN framework to simultaneously learn embedded features from brain functional networks (BFNs). |

| Shi [104] | Machine Learning | AD/MCI/HC classification: MRI, PET, and CSF are used as multimodal data. Coupled boosting and coupled metric ensemble scheme to model and learn an informative feature projection from the different modalities. |

| Metrics of Segmentation Quality | Mathematical Description |

|---|---|

| True positive rate, TPR (Sensitivity) | |

| Positive predictive value, PPV (Precision) | |

| Negative predictive value, NPV | |

| Dice similarity coefficient, DSC | |

| Volume difference rate, VDR | |

| Lesion-wise true positive rate, LTPR | |

| Lesion-wise positive predictive value, LPPV | |

| Specificity | |

| F1 score | |

| Accuracy | |

| Balanced Accuracy | |
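The mathematical descriptions in the table above are not present in this text, so the sketch below uses the standard confusion-matrix definitions of the voxel-wise metrics; the notation may differ from the original. The function name `confusion_metrics` and the example counts are hypothetical. The volume difference rate and the lesion-wise rates are left out, since their exact definitions are not stated here.

```python
def confusion_metrics(tp, fp, tn, fn):
    """Standard metrics from true/false positive/negative counts.

    Assumes every denominator is non-zero (no guard for empty classes).
    """
    tpr = tp / (tp + fn)          # sensitivity / recall
    ppv = tp / (tp + fp)          # precision
    npv = tn / (tn + fn)
    specificity = tn / (tn + fp)
    return {
        "TPR": tpr,
        "PPV": ppv,
        "NPV": npv,
        "Specificity": specificity,
        "DSC": 2 * tp / (2 * tp + fp + fn),
        "F1": 2 * ppv * tpr / (ppv + tpr),
        "Accuracy": (tp + tn) / (tp + fp + tn + fn),
        "Balanced accuracy": (tpr + specificity) / 2,
    }


# Hypothetical counts, for illustration only.
metrics = confusion_metrics(tp=80, fp=10, tn=90, fn=20)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

For a binary decision the Dice similarity coefficient and the F1 score reduce to the same expression, which is why both entries print the same value.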

Values are DSC and JI per tissue class (CSF, GM, WM) for each dataset.

| No. | Authors | MICCAI [17]: CSF | MICCAI [17]: GM | MICCAI [17]: WM | OASIS [15]: CSF | OASIS [15]: GM | OASIS [15]: WM | Clinical/IBSR [18]: CSF | Clinical/IBSR [18]: GM | Clinical/IBSR [18]: WM |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Zhang [35] | - | - | - | - | - | - | 83.5 † | 85.2 † | 86.4 † |
| 2 | Brebisson [36] | 72.5 † | 72.5 † | 72.5 † | - | - | - | - | - | - |
| 3 | Moeskops [37] | 73.5 † | 73.5 † | 73.5 † | - | - | - | - | - | - |
| 4 | Bao [69] | - | - | - | - | - | - | 82.2 † | 85.0 † | 82.2 † |
| 5 | Dong [38] | - | - | - | - | - | - | 85.5 † | 87.3 † | 88.7 † |
| 6 | Zhenglun [41] | - | - | - | - | - | - | 94.3 * | 90.2 * | 91.4 * |
| 7 | Khagi [72] | - | - | - | 72.2 † 73.0 * | 74.6 † 74.0 * | 81.9 † 85.0 * | - | - | - |
| 8 | Shakeri [40] | - | - | - | - | - | - | 82.4 † | 82.4 † | 82.4 † |
| 9 | Raghav [43] | 74.3 † | 74.3 † | 74.3 † | - | - | - | 84.4 † | 84.4 † | 84.4 † |
| 10 | Milletari [42] | - | - | - | - | - | - | 77.0 † | 77.0 † | 77.0 † |
| 11 | Dolz [70] | - | - | - | - | - | - | 90.0 † | 90.0 † | 90.0 † |
| 12 | Wachinger [71] | 90.6 † | 90.6 † | 90.6 † | - | - | - | - | - | - |
| 13 | Chen [75] | - | - | - | - | - | - | 93.6 † | 94.8 † | 97.5 † |
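The scores in the table above are overlap measures between a predicted and a reference segmentation. As a rough illustration of how a per-tissue DSC and JI can be obtained, the sketch below compares two small label maps. The integer label coding (1 = CSF, 2 = GM, 3 = WM), the toy arrays, and the function name `dice_and_jaccard` are all assumptions made for this example.

```python
import numpy as np


def dice_and_jaccard(pred, ref, label):
    """Return (DSC, JI) for one tissue label in two label maps."""
    pred_mask = pred == label
    ref_mask = ref == label
    intersection = np.logical_and(pred_mask, ref_mask).sum()
    union = np.logical_or(pred_mask, ref_mask).sum()
    size_sum = pred_mask.sum() + ref_mask.sum()
    # Convention of this sketch: two empty masks count as perfect overlap.
    dsc = 2.0 * intersection / size_sum if size_sum else 1.0
    ji = intersection / union if union else 1.0
    return float(dsc), float(ji)


LABELS = {"CSF": 1, "GM": 2, "WM": 3}  # hypothetical label coding

ref = np.array([[1, 1, 2, 2],
                [1, 2, 2, 3],
                [3, 3, 3, 3]])
pred = np.array([[1, 1, 2, 2],
                 [2, 2, 2, 3],
                 [3, 3, 3, 1]])

scores = {name: dice_and_jaccard(pred, ref, label)
          for name, label in LABELS.items()}
for name, (dsc, ji) in scores.items():
    print(f"{name}: DSC={dsc:.3f}, JI={ji:.3f}")
```

The two measures are monotonically related by JI = DSC / (2 − DSC), so a ranking of methods by one measure is the same as a ranking by the other.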

| No. | Authors | Subjects | AD vs. HC: ACC | AD vs. HC: SEN | AD vs. HC: SPE | AD vs. HC: AUC | pMCI vs. sMCI: ACC | pMCI vs. sMCI: SEN | pMCI vs. sMCI: SPE | pMCI vs. sMCI: AUC |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Siqi [81] | 204HC + 180AD | 0.79 | 0.83 | 0.87 | 0.78 | - | - | - | - |
| 2 | Suk [82] | 101HC + 128sMCI + 76pMCI + 93AD | 0.92 | 0.92 | 0.95 | 0.97 | 0.72 | 0.37 | 0.91 | 0.73 |
| 3 | Korolev [90] | 61HC + 77sMCI + 43pMCI + 50AD | 0.80 | - | - | 0.87 | 0.52 | - | - | 0.52 |
| 4 | Khvostikov [93] | 58HC + 48AD | 0.85 | 0.88 | 0.90 | - | - | - | - | - |
| 5 | Lian [95] | 429HC + 465sMCI + 205pMCI + 358AD | 0.90 | 0.82 | 0.97 | 0.95 | 0.81 | 0.53 | 0.85 | 0.78 |
| 6 | Mingxia [87] | 229HC + 226sMCI + 167pMCI + 203AD | 0.91 | 0.88 | 0.93 | 0.95 | 0.76 | 0.42 | 0.82 | 0.77 |
| 7 | Andres [84] | 68HC + 70AD | 0.90 | 0.86 | 0.94 | 0.95 | - | - | - | - |
| 8 | Aderghal [84] | 228HC + 188AD | 0.85 | 0.84 | 0.87 | - | - | - | - | - |
| 9 | Donghuan [92] | 360HC + 409sMCI + 217pMCI | - | - | - | - | 0.75 | 0.73 | 0.76 | - |
| 10 | Shi [89] | 52NC + 56sMCI + 43pMCI + 51AD | 0.95 | 0.94 | 0.96 | 0.96 | 0.75 | 0.63 | 0.85 | 0.72 |
| 11 | Payan [83] | 755 subjects (AD, MCI, HC) | 0.95 | - | - | - | - | - | - | - |
| 12 | Hosseini [85] | 70HC + 70AD | 0.99 | - | 0.98 | - | - | - | - | - |
| 13 | Lee [97] | 843 subjects (AD, MCI, HC) | 0.98 | 0.96 | 0.97 | - | - | - | - | - |
| 14 | Liu [96] | 397 subjects (AD, MCI, HC) | 0.93 | 0.92 | 0.93 | 0.95 | - | - | - | - |
| 15 | Feng [98] | 397 subjects (AD, MCI, HC) | 0.94 | 0.97 | 0.92 | 0.96 | - | - | - | - |
| 16 | Ruoxuan [100] | 811 subjects (AD, MCI, HC) | 0.90 | 0.86 | 0.92 | 0.92 | 0.73 | 0.69 | 0.75 | 0.76 |
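Unlike ACC, SEN, and SPE, the AUC column in the table above does not depend on a single decision threshold. One common way to compute it, sketched below, is as the fraction of (positive, negative) subject pairs in which the positive subject receives the higher classifier score, with ties counted as one half. The labels and scores are made up for illustration, and the function name `roc_auc` is hypothetical; the cited studies may have used other tooling.

```python
import numpy as np


def roc_auc(labels, scores):
    """Area under the ROC curve via pairwise comparison of scores.

    `labels` holds 1 for the positive class (e.g., AD) and 0 for the
    negative class (e.g., HC); `scores` holds the classifier outputs.
    """
    labels = np.asarray(labels, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    pos = scores[labels]
    neg = scores[~labels]
    # Difference between every positive score and every negative score.
    diff = pos[:, None] - neg[None, :]
    wins = (diff > 0).sum() + 0.5 * (diff == 0).sum()
    return float(wins / (pos.size * neg.size))


# Made-up example: three positive and three negative subjects.
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.5, 0.3, 0.2]
auc = roc_auc(labels, scores)
print(f"AUC = {auc:.3f}")
```

The pairwise form needs memory proportional to the number of positive times negative subjects, which is acceptable for cohort sizes like those listed above but not for voxel-level evaluation.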

| Author | Dataset | Scanner | Hardware | Software | Training Time |

|---|---|---|---|---|---|

| Zhang [35] | Clinical data | 3 T Siemens | Tesla K20c GPU with 2496 cores | iBEAT toolbox; ITK-SNAP toolbox | <1 day |

| Brebisson [36] | MICCAI 2012 | - | NVIDIA Tesla K40 GPU with 12 GB memory | Python with Theano framework | - |

| Moeskops [37] | NeoBrainS12 | 3 T Philips Achieva | - | BET toolbox; FMRIB Software Library | - |

| Bao [69] | IBSR; LPBA40 | 1.5 T GE | - | FLIRT toolbox | - |

| Dong [38] | Clinical data | 3 T Siemens | - | Python with Caffe framework; iBEAT toolbox | - |

| Raghav [43] | IBSR; MICCAI 2012; LPBA40; Hammers67n20 | -; -; 1.5 T GE; 1 T Philips HPQ | NVIDIA K40 GPU with 12 GB of RAM | Python with Keras packages; BET toolbox | - |

| Milletari [42] | Clinical data | - | Intel i7 quad-core workstations with 32 GB of RAM and NVIDIA GTX 980 (4 GB RAM) | Python with Caffe framework | - |

| Dolz [70] | IBSR; ABIDE | - | Intel(R) Core(TM) i7-6700K 4.0 GHz CPU and NVIDIA GeForce GTX 960 GPU with 2 GB of memory | Python with Theano framework; FreeSurfer 5.1 tool; Medical Imaging Interaction Toolkit (MITK) | 2.5 days |

| Wachinger [71] | MICCAI 2012 | - | NVIDIA Tesla K40 and TITAN X with 12 GB GPU memory | Python with Caffe framework; FreeSurfer tool | 1 day (train); 1 h (test) |

| Bernal [73] | IBSR; MICCAI 2012; iSeg2017 | - | Ubuntu 16.04 with 128 GB RAM and TITAN X Pascal GPU with 8 GB RAM | Python with Keras packages | - |

| Jiong [74] | MICCAI 2017 | - | Ubuntu 16.04 with 32 GB RAM and GTX 1080 Ti GPUs | Python with Keras packages | - |

| Chen [75] | BrainWeb | 1.5 T Siemens | Windows 7 computer with Intel(R) Xeon(R) E5-2620 v3 @ 2.40 GHz CPU and 32 GB RAM | - | - |

| Pengcheng [76] | BLSA | - | - | FSL software; 3D Slicer | - |

| Hosseini [85] | ADNI | 1.5 T Siemens Trio | Amazon EC2 g2.8xlarge instance with GRID K520 GPU | Python with Theano framework | - |

| Saraf [86] | ADNI | 3 T Siemens Trio | NVIDIA GPU | Python with Caffe framework; BET toolbox; FMRIB Software Library v5.0 | - |

| Mingxia [87] | ADNI-1; ADNI-2; MIRIAD | 1.5 T Siemens Trio; 3 T Siemens Trio; 1.5 T Signa GE | NVIDIA GTX TITAN 12 GB GPU | MIPAV software; FSL software | 27 h; <1 s (test) |

| Aderghal [88] | ADNI | 1.5 T Siemens Trio | Intel® Xeon® E5-2680 v2 CPU @ 2.80 GHz and Tesla K20Xm GPU with 2496 CUDA cores | Python with Caffe framework | 2 h 3 min |

| Jyoti [91] | OASIS | 1.5 T Siemens | Linux x86-64 with AMD A8 CPU, 16 GB RAM, and NVIDIA GeForce GTX 770 | Python with TensorFlow and Keras library | - |

| Khvostikov [93] | ADNI | 1.5 T Siemens Trio | Intel Core i7-6700HQ CPU with NVIDIA GeForce GTX 960M GPU; Intel Core i7-7700HQ CPU with NVIDIA GeForce GTX 1070 GPU | Python with TensorFlow framework; BET toolbox | - |

| Lian [95] | ADNI-1; ADNI-2 | 1.5 T Siemens Trio; 3 T Siemens Trio | NVIDIA GTX TITAN 12 GB GPU | Python with Keras packages | - |

| Liu [96] | ADNI | 1.5 T Siemens Trio | NVIDIA GTX 1080 GPU | Python with Theano framework and Keras packages | - |

| Lee [97] | ADNI; OASIS | 1.5 T Siemens Trio; 1.5 T Siemens | NVIDIA GTX 1080 Ti GPU | - | - |

| Feng [98] | ADNI | 1.5 T Siemens Trio | Windows with NVIDIA TITAN Xt GPU | MIPAV software; Keras library with TensorFlow as backend | - |

| Ruoxuan [100] | ADNI | 1.5 T Siemens Trio | Ubuntu 14.04 x64 with NVIDIA GeForce GTX 1080 Ti GPU | FreeSurfer tool; Keras library with TensorFlow as backend | - |

| Ahmed [101] | ADNI; GARD | 1.5 T Siemens Trio | Intel(R) Xeon(R) E5-1607 v4 CPU @ 3.10 GHz, 32 GB RAM, and NVIDIA Quadro M4000 | Keras library with TensorFlow as backend | - |

| Fung [102] | ADNI | 1.5 T Siemens Trio | Desktop PC with Intel Core i7, 8 GB memory, and 8 × NVIDIA P100 GPUs (16 GB) | Ubuntu 16.04; Keras library with TensorFlow; MATLAB 2014b with SPM | - |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Yamanakkanavar, N.; Choi, J.Y.; Lee, B. MRI Segmentation and Classification of Human Brain Using Deep Learning for Diagnosis of Alzheimer’s Disease: A Survey. Sensors 2020, 20, 3243. https://doi.org/10.3390/s20113243