1. Introduction

Unmanned aerial vehicle (UAV) information acquisition technology has been applied in agricultural operations and plays an important role in the development of precision agriculture [1,2,3]. UAVs have been used for agricultural information acquisition [4,5,6,7,8], pesticide spraying [9,10,11], and disease detection [12,13,14]. In recent years, UAV research has focused on navigation and control, within which precision landing is a critical topic [15,16,17,18].

Visual navigation, as an effective navigation technology, is receiving increasing attention. It mainly uses computer vision to obtain positioning parameters [19,20]. This technology can obtain information through common low-cost devices and has broad application prospects in UAV navigation. Sharp et al. [21] from the University of California at Berkeley studied aircraft landing based on a computer vision system in 2001 and reported that computer vision is applicable to UAV landing control. Anitha et al. [22] designed and implemented automatic fixed-point landing of a UAV based on visual navigation. The fixed-point landing marker had a well-defined geometric shape; after image processing, the position of the marker in the image was obtained and the UAV’s flight attitude was adjusted to achieve landing. Sun and Hao [23] controlled UAV autonomous landing based on the geometrical features of a landmark, and simulation under laboratory conditions showed that the method had high stability and feasibility. Ye et al. [24] proposed a field scene recognition algorithm that combines local pyramid features with convolutional neural network (CNN) learned features to identify complex autonomous landing scenes for low-small-slow UAVs. For autonomous landing of a UAV on a stationary target, Sudevan et al. [25] used the speeded up robust features (SURF) method to detect key points and compute their descriptors. The fast library for approximate nearest neighbors (FLANN) was then used to match the template image with the captured images and determine the target position.

Most previous UAV landing studies focused on a fixed location. In real agricultural operations (e.g., agricultural information collection and pesticide spraying), producers may want UAVs to land on a mobile platform/apron in a timely manner (e.g., for battery charging, data downloading, or pesticide refilling) to improve operational efficiency. In addition, in poultry or animal houses, UAVs may be used for monitoring indoor air quality and animal information. Precision control of landing on a mobile or a fixed apron is therefore necessary. The objectives of this study were (1) to design a precision method for automatic landing control of a UAV on a mobile apron; (2) to verify the operation of the method under different scenarios (e.g., high altitude and low altitude); and (3) to simulate the operation of the UAV in an agricultural facility (i.e., a poultry house).

2. Materials and Methods

2.1. Hardware of Autonomous Precision Landing System

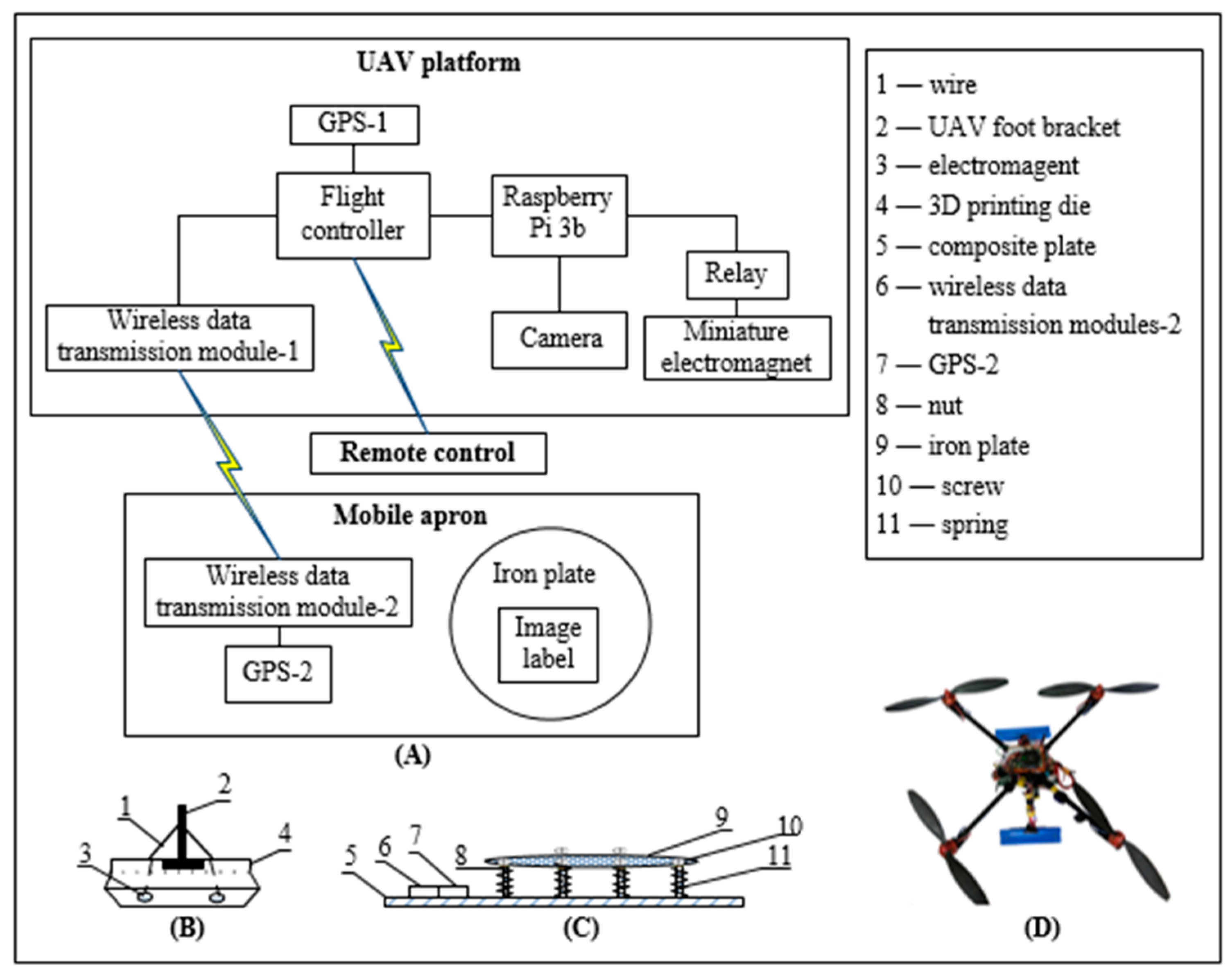

The system mainly consists of two parts: the UAV platform and the mobile apron (the overall structure is shown in Figure 1). A four-rotor UAV was tested in the study. The UAV platform consisted of the following units: a flight controller (Pixhawk 2.4.8) with its own GPS-1 unit; a Raspberry Pi 3B with its own camera (field angle: 72°; sensor: 1080p, 5 million pixels); wireless data transmission module-1 (WSN-02); and a relay (ZD-3FF-1Z-05VDC) and a miniature electromagnet (ZS-20/15, DC 12 V), which together formed the electromagnet circuit. Wireless data transmission module-1 received the GPS-2 signal from the apron and transmitted it to the flight controller. The flight controller combined this signal with its own GPS-1 signal to obtain the relative position of the UAV and the apron and to adjust the flight attitude of the UAV. The camera captured images and sent them to the Raspberry Pi, where the image recognition program extracted the position of the image label and transmitted it to the flight controller, which further adjusted the flight attitude. The distance information acquired by the image recognition program was used to trigger the electromagnet circuit.

The mobile apron consisted of wireless data transmission module-2 (WSN-02), a GPS-2 unit (NiRen-SIM868-V1.0), an iron plate (1 m in diameter and 2 mm thick), and an image label (35 cm × 35 cm). GPS-2 was connected to wireless data transmission module-2 to transmit the GPS-2 signals. The image label served as the target for UAV image detection.

The electromagnet was embedded in a 3D-printed mold and connected by wire. When the electromagnet circuit was triggered and energized, the electromagnet attracted the iron plate. The iron plate was fixed with six screws and six nuts, evenly distributed under the plate, with the screws embedded in the composite plate. Springs connecting the iron plate and the composite plate acted as shock absorbers, and the spacing between the iron plate and the composite plate was about 2 cm.

2.2. UAV Landing Test Arrangement

Considering the actual flight, the experimental site, and the landing situation of the UAV, the test was conducted in three stages: (1) UAV landing at high operating ranges. The flight controller adjusted the UAV’s flight attitude by receiving and analyzing the GPS-2 signals of the apron and its own GPS-1 signals to approach the target; (2) UAV landing at low operating ranges. The image recognition method was used to detect the image label on the mobile apron and obtain the relative position of the UAV and the apron, which was used to adjust the flight parameters of the UAV to approach the mobile apron; and (3) UAV landing process. The landing fixation device was triggered according to the image detection-based distance information to achieve a stable landing.

2.3. GPS Remote Positioning

When the UAV flew high and started to return (e.g., over 5 m from the apron), the GPS-2 signal of the apron was received for coarse positioning. If the UAV was far away from the apron, the image label in the field-of-view was small, which hindered image feature extraction because detection is easily disturbed by external environmental factors. Occasionally, the image label was not in the field-of-view at all. From the longitude and latitude transmitted by the GPS-2 signals of the apron and the longitude and latitude from the UAV’s own GPS-1 signals, the relative position of the two can be quantified. The adjusted horizontal speed of the UAV could then be obtained according to the principle of triangular synthesis (Figure 2).
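As a rough illustration of how two GPS fixes can be turned into a relative position, the sketch below converts the latitude and longitude of the UAV (GPS-1) and the apron (GPS-2) into a local east/north offset in meters. The equirectangular approximation, the Earth radius constant, and the function names `relative_position_m` and `bearing_and_distance` are assumptions made for illustration; the text does not state how the conversion was implemented on the flight controller.

```python
import math

EARTH_RADIUS_M = 6371000.0  # mean Earth radius (assumed constant)


def relative_position_m(uav_lat, uav_lon, apron_lat, apron_lon):
    """Return the (east, north) offset in meters from the UAV to the apron.

    Uses an equirectangular approximation, which is only reasonable for
    short distances such as a UAV returning to a nearby apron.
    """
    mean_lat = math.radians((uav_lat + apron_lat) / 2.0)
    d_lat = math.radians(apron_lat - uav_lat)
    d_lon = math.radians(apron_lon - uav_lon)
    north = EARTH_RADIUS_M * d_lat
    east = EARTH_RADIUS_M * d_lon * math.cos(mean_lat)
    return east, north


def bearing_and_distance(east, north):
    """Return horizontal distance (m) and bearing (deg clockwise from north)."""
    distance = math.hypot(east, north)
    bearing = math.degrees(math.atan2(east, north)) % 360.0
    return distance, bearing
```

The resulting offset plays the role of the horizontal component of ΔP; how it is combined with the relative speed is governed by Equations (1) and (2), which this sketch does not attempt to reproduce.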

To handle the situation in which the UAV and the mobile apron have the same horizontal speed and direction while the UAV is not vertically above the apron, the relative position and the relative speed were combined to adjust the horizontal speed and descending speed of the UAV, as shown in Equations (1) and (2) [26,27].

where,

VF2 is the adjusted horizontal speed of the UAV.

VF1 is the horizontal speed of the UAV in the previous moment.

θ is the angle between ΔP and UAV horizontal plane. ΔP is the actual distance vector from the UAV to the apron.

Vb0 is the adjusted vertical speed of the UAV.

Vh0 = 10 cm/s.

VF2 and VF1 are vector values.

To improve the positioning accuracy, the longitude and latitude information obtained by the flight controller was continuously updated by the dynamic mean method. The schematic diagram is shown in Figure 3.

To reduce the GPS positioning deviation, the average of 20 consecutive longitude and latitude readings was used as the longitude and latitude input of the moving apron at the current moment, as described in Equation (3).

where,

x is the latitude.

y is the longitude.

is the longitude and latitude finally obtained by flight controller.

is the longitude and latitude detected in real time.
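The dynamic mean described above can be sketched as a sliding-window average over the most recent 20 fixes. The class name `DynamicMean` and the behavior before 20 samples have arrived (averaging whatever is available) are assumptions made for illustration; the text only specifies that 20 consecutive readings are averaged.

```python
from collections import deque


class DynamicMean:
    """Sliding mean of the most recent `window` (latitude, longitude) fixes."""

    def __init__(self, window=20):
        self.window = window
        # A deque with maxlen drops the oldest fix automatically.
        self._fixes = deque(maxlen=window)

    def update(self, lat, lon):
        """Add a new real-time fix and return the mean (lat, lon) of the window."""
        self._fixes.append((lat, lon))
        n = len(self._fixes)
        mean_lat = sum(fix[0] for fix in self._fixes) / n
        mean_lon = sum(fix[1] for fix in self._fixes) / n
        return mean_lat, mean_lon
```

Each call to `update` corresponds to one new real-time reading from GPS-2; the returned pair would be the smoothed apron position passed on to the speed adjustment step.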

2.4. Accurate Positioning and Landing Based on Image Detection

When the UAV was about 5 m above the apron, the image recognition module was turned on. If there was no image label in the field-of-view, the descending speed was set to zero and the horizontal speed of the UAV was adjusted according to Equation (1) until the image label appeared in the field-of-view. The flight attitude was then adjusted to approach the apron based on the results of image detection. As shown in Figure 1, the camera transmitted images to the Raspberry Pi, and the information extracted by the Raspberry Pi was transmitted to the flight controller to adjust the control inputs. Given the actual installation position of the camera, the electromagnet circuit was triggered in this study when the measured distance was less than 15 cm.
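The switching logic between GPS guidance, image-based guidance, and electromagnet triggering can be summarized as a small decision function. This is a minimal sketch under stated assumptions: the function name `select_action`, the action labels, and the exact comparison operators are hypothetical, while the 5 m and 15 cm thresholds are taken from the text.

```python
GPS_TO_VISION_HEIGHT_M = 5.0       # image recognition is turned on at about 5 m
MAGNET_TRIGGER_DISTANCE_M = 0.15   # electromagnet triggers below 15 cm


def select_action(height_m, label_visible, label_distance_m=None):
    """Pick the control mode for the current step.

    height_m: estimated height of the UAV above the apron.
    label_visible: True if the image label is detected in the field-of-view.
    label_distance_m: distance to the label from image detection, if any.
    """
    if height_m > GPS_TO_VISION_HEIGHT_M:
        # Coarse positioning from the GPS-1 and GPS-2 signals.
        return "gps_approach"
    if not label_visible:
        # Descending speed is zero; only the horizontal speed is adjusted.
        return "hover_and_search"
    if label_distance_m is not None and label_distance_m < MAGNET_TRIGGER_DISTANCE_M:
        return "trigger_electromagnet"
    # Label is visible: approach using image detection results.
    return "vision_approach"
```

For example, `select_action(4.0, False)` yields the search mode in which the UAV holds its height until the label enters the field-of-view.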

2.4.1. Camera Calibration

The camera mounted on the UAV was calibrated to reduce distortion. In this study, a chessboard pattern with 10 rows and 7 columns was printed on A4 paper and fixed on flat cardboard as the calibration board, following a published method [28].

Since the focal length of the camera no longer changed after the camera was installed, the current field angle needed to be measured. According to Equations (4) and (5), the horizontal field angle θ1 and the vertical field angle θ2 could be obtained.

where,

θ1 is the horizontal field angle of camera.

θ2 is the vertical field angle of camera.

d1 is the actual distance from the center of left boundary to the center of the field-of-view.

d2 is the actual distance from the center of upper boundary to the center of the field-of-view.

h is the actual distance from the camera to the field-of-view.
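To make the roles of d1, d2, and h concrete, the sketch below computes a field angle from a boundary offset and the camera distance. It assumes that each field angle is the angle between the optical axis and the midpoint of the corresponding boundary, i.e., θ = arctan(d/h). That form is an assumption inferred from the symbol definitions, not a statement of Equations (4) and (5); the function names are hypothetical.

```python
import math


def field_angle_deg(d, h):
    """Angle (deg) between the view center and a boundary midpoint.

    d: distance from the boundary midpoint to the center of the field-of-view.
    h: distance from the camera to the field-of-view plane (same unit as d).
    """
    return math.degrees(math.atan2(d, h))


def boundary_ratio(theta1_deg, theta2_deg):
    """Ratio d2/d1 implied by a horizontal angle theta1 and vertical angle theta2."""
    return math.tan(math.radians(theta2_deg)) / math.tan(math.radians(theta1_deg))
```

Under this assumption, the ratio implied by the mean angles reported in Section 3.1 (25.18833° and 19.73093°) comes out at roughly 0.76, close to the 0.75 aspect ratio of a 640 × 480 image, which is one reason the arctangent form seems plausible.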

2.4.2. Image Label Detection

In this paper, the AprilTag visual fiducial system proposed by Olson [29] was used for image label detection. To speed up the algorithm, the color image was converted to a grayscale image, and the image resolution was set to 640 pixels × 480 pixels. The image label is composed of a large icon (35 cm × 35 cm) and a small icon (4 cm × 4 cm), as shown in Figure 4. When the UAV was close to the apron, the large icon could not be captured completely; the relative position was then obtained by detecting the small icon. The horizontal speed of the UAV was adjusted by Equation (1). Since the vertical speed of the UAV should not be too high when it is about to land, Equation (6) was used to adjust the vertical speed [26,27].

where,

θ is the angle between ΔP and UAV horizontal plane. ΔP is the actual distance vector from the UAV to the apron.

Vb1 is the adjusted vertical speed of the UAV.

Vh1 = 5 cm/s.

2.4.3. UAV Landing Fixation

The UAV tended to roll over when landing because of uneven tilting or sliding of the apron. To solve this problem, a landing fixture based on an electromagnet was designed (see Figure 1). A 3D-printed mold sized to the UAV was designed to hold the electromagnet, and the apron was an iron plate. The distance between the UAV and the apron, obtained from image detection, was used to determine whether the UAV was about to land. If so, the electromagnet circuit was triggered so that the UAV completed the landing safely.

2.5. Error Calculation

The error of using the label side length as a reference standard was estimated with Equation (7):

where,

δ is the calculation error.

D1 is the actual distance from the center of the label to the center of the field-of-view.

D2 is the pixel distance from the center of the label to the center of the field-of-view.

S1 is the actual side length of the label.

S2 is the average of the four side lengths of the label in pixels.
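One way to read these definitions is as a relative error between the measured distance D1 and the distance estimated from pixels via the scale S1/S2. The sketch below implements that reading; the relative-error form and the function names are assumptions made for illustration and should not be taken as the exact form of Equation (7).

```python
def scale_per_pixel(s1, s2_px):
    """Real-world length represented by one pixel (S1 / S2)."""
    return s1 / s2_px


def calculation_error(d1, d2_px, s1, s2_px):
    """Relative error of estimating D1 as D2 * (S1 / S2).

    d1: measured real distance from label center to field-of-view center.
    d2_px: the same distance measured in pixels.
    s1: real side length of the label (same unit as d1).
    s2_px: mean side length of the label in pixels.
    """
    estimated = d2_px * scale_per_pixel(s1, s2_px)
    return abs(d1 - estimated) / d1
```

With hypothetical values `d1=100`, `d2_px=48`, `s1=80`, and `s2_px=40`, the estimated distance is 96 and the relative error is 0.04.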

2.6. Poultry House UAV Landing Simulation

In poultry or livestock houses, environmental quality monitoring and animal imaging are important for evaluating whether animals have a good production environment and how their health and welfare are affected in commercial production systems [30,31]. Traditional methods (e.g., installing sensors or cameras in fixed places) have limitations in selecting representative data collection locations because of the large size of poultry houses (e.g., 150 m long and 30 m wide) [32]. Therefore, equipping a UAV with air quality sensors and cameras could promote precision sensing of the poultry housing environment and animal health/welfare in the future.

In this study, we simulated UAV landing control using the physical environment of a commercial poultry house as a model to evaluate the effect of running speed on landing accuracy and how the size of the apron can be optimized for precision landing control. The simulated house measured 135 L × 15 W × 3 H m, as shown in Figure 5. In the simulation, the UAV flew a round trip hourly and returned to an apron at a fixed location. The image label was assumed to be a large icon (35 cm × 35 cm) and a small icon (4 cm × 4 cm). The apron was assumed to be a circular iron plate (1 m in diameter) placed 1 m above the floor at the end of the poultry house. The running speed of the UAV was set to 2 km/h, 3 km/h, and 4 km/h to evaluate the effect of running speed on landing accuracy, and the UAV running height was set to 2 m. The flying posture of the UAV could be adjusted automatically according to the detected image label and θ (e.g., θ needs to be ≥ 45° for control) based on the method in Equations (1) and (6).

3. Results and Discussion

3.1. Measured Field Angle

Figure 6 shows the measurement setup when the distance (h) between the camera and the wall was 313.6 cm. During the test, the center of the camera display (marked by a red UK-flag-shaped pattern) was aligned with a point on the wall while the camera was kept parallel to the wall (Figure 6). Black tapes were attached at the centers of the left and upper edges to mark the boundary of the field-of-view. On this basis, the actual distances d1 and d2 from the centers of the left and upper boundaries to the center point of the UK-flag-shaped pattern were measured. To improve the measurement accuracy of the field angle, the measurement was performed at different distances h. The results are shown in Table 1 and Table 2. The measured horizontal and vertical field angles changed slightly at different distances h. The mean and standard deviation of the horizontal field angle were 25.18833° and 0.30308°, respectively, and the maximum and minimum differences between the measured horizontal field angle and the mean were 0.56142° and 0.04219°, respectively. The mean and standard deviation of the vertical field angle were 19.73093° and 0.28117°, respectively, and the maximum and minimum differences between the measured vertical field angle and the mean were 0.59921° and 0.06851°, respectively. Although the field angle measured at different distances changed slightly, the change was negligible, so the average could be used as the true field angle of the camera. The image size of the camera is 640 pixels × 480 pixels (a height-to-width ratio of 0.75). The average field-of-view ratio from the experimental measurements was 0.76273, and the maximum and minimum fluctuation values were 0.02111 and 0.00580, respectively.

3.2. Image Label Detection and Distance Model

3.2.1. Image Label Detection

To verify the image detection method, image labels at different positions and rotations in the field-of-view were tested, as shown in Figure 7a. In addition, anti-interference experiments were performed, as shown in Figure 7b.

According to Figure 7a, the image detection method could detect the image label effectively even when the label was at different positions or rotated. In addition, Figure 7b shows that the image detection method was highly resistant to interference. Therefore, this method is applicable to target detection during the test.

3.2.2. Constructed Distance Model

In the test, the actual length of an edge of the image label was recorded as S1, and the number of pixels along the same edge was recorded as S2. The actual distance corresponding to one pixel could then be obtained as S1/S2, and this was used as the calculation standard. Once the pixel distance from the center of the label to the center of the field-of-view was measured, the true distance could be obtained from the calculation standard. To verify the feasibility of the calculation standard, image labels with side lengths of 80 mm and 64 mm were tested at different distances h, as shown in Table 3 and Table 4.

Table 3 shows that the maximum and minimum calculation errors were 0.04 and 0.01, respectively. In Table 4, the maximum and minimum calculation errors were 0.06 and 0.01, respectively.

D1/D2 could be represented by S1/S2, which could be used as the reference standard to calculate the actual distance from the center of the label to the center of the field-of-view. In addition, the actual edge length of the image label is known, and the edge length of the image label in pixels can be calculated automatically from the geometric relationship, so S1/S2 is feasible as a calculation standard.

In addition, Table 3 and Table 4 show that the S1/S2 values at different distances h were quite different, while the S1/S2 values at the same distance h were relatively close. The actual distance from the center of the image label (80 mm × 80 mm as an example) to the center of the field-of-view was varied at different distances h to obtain a series of data (Figure 8).

As shown in Figure 8, the pixel distance was linearly related to the actual distance when the distance h from the camera to the wall was constant. The S1/S2 value at this distance h can then be calculated. The actual distance from the center of the image label to the center of the field-of-view can be calculated as the pixel distance from the center of the image label to the center of the field-of-view multiplied by S1/S2. In the same way, the actual length of the whole field-of-view was obtained, and the height was calculated by combining it with the field angle.
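The distance model above can be sketched in a few lines: the scale S1/S2 converts pixel offsets to real offsets, and the real half-width of the field-of-view combined with the field angle gives the height. The sketch assumes that the reported horizontal field angle is measured from the view center to the left boundary, so that height = half-width / tan(θ1); this interpretation, the default constants, and the function names are assumptions for illustration.

```python
import math

IMAGE_WIDTH_PX = 640                 # image width used for detection
HORIZONTAL_FIELD_ANGLE_DEG = 25.18833  # mean horizontal field angle (Section 3.1)


def offset_from_center(pixel_offset, s1, s2_px):
    """Real distance of the label center from the field-of-view center."""
    return pixel_offset * s1 / s2_px


def estimate_height(s1, s2_px,
                    image_width_px=IMAGE_WIDTH_PX,
                    field_angle_deg=HORIZONTAL_FIELD_ANGLE_DEG):
    """Estimate camera height from the label's real and pixel side lengths.

    s1: real side length of the label (e.g., in meters).
    s2_px: mean side length of the detected label in pixels.
    """
    # Real half-width of the field-of-view at the label plane.
    half_width = (image_width_px / 2.0) * (s1 / s2_px)
    return half_width / math.tan(math.radians(field_angle_deg))
```

For instance, a 0.35 m label that spans 70 pixels gives a scale of 0.005 m per pixel, a half-width of 1.6 m, and an estimated height of about 3.4 m under these assumptions.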

Through the above process, the flying height of the UAV was obtained continuously and automatically. The height was then compared with 5 m, and the flying speed of the UAV was adjusted according to either Equations (1) and (2) or Equations (1) and (6) until the UAV landed.

3.3. UAV Landing Accuracy

To evaluate the accuracy of the precision method for UAV landing control, fixed-point and mobile landing experiments were conducted in an open area. The landing error (i.e., the actual horizontal distance from the center axis of the UAV to the center of the image label) was quantified. Figure 9 and Figure 10 show the error distributions of fixed-point landing and mobile landing (apron moving at 3 km/h).

The average errors of fixed-point landing and mobile landing (3 km/h) were 6.78 cm and 13.29 cm, respectively, indicating that the newly developed method could control UAV landing on a mobile apron relatively precisely. However, the landing errors increased as the moving speed of the apron increased (>3 km/h). This warrants further studies on optimizing the control algorithms so that the UAV can land more precisely at different running speeds.

3.4. Simulation Results for the Poultry House

We simulated UAV landing control using a real poultry house as a model to evaluate the effect of running speed on landing accuracy when applying the UAV in a poultry house (e.g., to monitor indoor environmental quality and animal health, welfare, or behaviors). A round trip of the UAV in the poultry house was estimated to be 270 m. Because the poultry house was only 3 m high (from floor to ceiling), a flight height of 1.5–2.5 m was recommended for the UAV considering the facility restrictions.

Table 5 shows the effect of UAV running speed on landing accuracy. The UAV running at a lower speed had a higher landing accuracy. In animal houses, a lower running speed is recommended because the facilities and environment are more complex than in an open field. Precision landing control provides a basis for optimizing the size of the apron to reduce space use in the poultry house. Work is ongoing to study the effect of UAV weight on landing control efficiency in order to quantify how many sensors and cameras a UAV may carry.

4. Conclusions

In the current study, a UAV landing control method was developed and tested for the agricultural application under three scenarios:

- (1) UAV landing at high operating altitude based on the GPS signal of the mobile apron;

- (2) UAV landing at low operating altitude based on the image recognition on the mobile apron;

- (3) UAV landing progress control based on the fixed landing device and image detection.

According to the landing tests, the average errors of fixed-point landing and mobile landing at a speed of 3 km/h were 6.78 cm and 13.29 cm, respectively. The UAV landing was smoothly and firmly controlled. Only limited parameters were tested in the current study for mobile apron landing (e.g., a single speed of 3 km/h). Further studies are warranted to improve UAV landing control (e.g., detection, positioning, and control method of the mobile apron) by testing more parameters (e.g., speed and curved motion).

The simulation study for UAV application in agricultural facilities was conducted by using a commercial poultry house as a model (135 L × 15 W × 3 H m). In the simulation, the landing errors were controlled at 6.22 ± 2.59 cm, 6.79 ± 3.26 cm, and 7.14 ± 2.41 cm at the running speed of 2 km/h, 3 km/h, and 4 km/h, respectively. For the simulation work, we will continue to identify the effect of the UAV weight on landing control efficiency to promote the design of sensors and cameras in UAV for the real-time monitoring task in poultry houses. This study provides the technical basis for applying the UAV to collect data (e.g., air quality and animal information) in a poultry or animal house that requires more precise landing control than open fields.

Author Contributions

D.H. was the project principal investigator; Y.G., D.H., and L.C. conceived the research idea; Y.G., J.G., and L.C. developed the research methodology; Y.G. and D.H. managed research resources; Y.G., J.G., C.L., and H.X. developed the algorithm for landing control and data analysis; Y.G., J.G., C.L., and H.X. collected the original data; Y.G., J.G., C.L., H.X., and L.C. analyzed the data; D.H. verified the results; Y.G., J.G., and L.C. wrote the manuscript; L.C. submitted the manuscript for review. All authors have read and agreed to the submission of the manuscript.

Funding

This research was funded by Technology Integration and Service of Accurate Acquisition of Farmland Information on Multi-Remote Sensing Platform in Dry Areas (grant number 2012BAH29B04).

Acknowledgments

Y.G. thanks the China Scholarship Council (CSC) for sponsoring study at the University of Georgia, USA. The authors also thank Kaixuan Zhao, Ziru Zhang, and Jinyu Niu for their help in this study.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

References

1. Zhang, Z.Y.; Li, C. Application of Unmanned Aerial Vehicle Technology in Modern Agriculture. Agric. Eng. 2016, 6, 23–25.

2. Wang, P.; Luo, X.W.; Zhou, Z.Y.; Zang, Y.; Hu, L. Key technology for remote sensing information acquisition based on micro UAV. Trans. Chin. Soc. Agric. Eng. 2014, 30, 1–12.

3. Shi, Z.; Liang, Z.Z.; Yang, Y.Y.; Guo, Y. Status and Prospect of Agricultural Remote Sensing. Trans. Chin. Soc. Agric. Mach. 2015, 46, 247–260.

4. Sankaran, S.; Khot, L.R.; Carter, A.H. Field-based crop phenotyping: Multispectral aerial imaging for evaluation of winter wheat emergence and spring stand. Comput. Electron. Agric. 2015, 118, 372–379.

5. Sankaran, S.; Khot, L.R.; Espinoza, C.Z.; Jarolmasjed, S.; Sathuvalli, V.R.; Vandemark, G.J.; Miklas, P.N.; Carter, A.H.; Pumphrey, M.O.; Knowles, N.R.; et al. Low-altitude, high-resolution aerial imaging systems for row and field crop phenotyping: A review. Eur. J. Agron. 2015, 70, 112–123.

6. Sankaran, S.; Zhou, J.; Khot, L.R.; Trapp, J.J.; Mndolwa, E.; Miklas, P.N. High-throughput field phenotyping in dry bean using small unmanned aerial vehicle based multispectral imagery. Comput. Electron. Agric. 2018, 151, 84–92.

7. Xu, R.; Li, C.; Paterson, A.H.; Jiang, Y.; Sun, S.; Robertson, J.S. Aerial images and convolutional neural network for cotton bloom detection. Front. Plant Sci. 2018, 8, 2235.

8. Liu, J.G.; Zhao, C.J.; Yang, G.J.; Yu, H.; Zhao, X.; Xu, B.; Niu, Q. Review of field-based phenotyping by unmanned aerial vehicle remote sensing platform. Trans. Chin. Soc. Agric. Eng. 2016, 32, 98–106.

9. Bae, Y.; Koo, Y.M. Flight Attitudes and Spray Patterns of a Roll-Balanced Agricultural Unmanned Helicopter. Appl. Eng. Agric. 2013, 29, 675–682.

10. Faiçal, B.S.; Freitas, H.; Gomes, P.H.; Mano, L.Y.; Pessin, G.; de Carvalho, A.C.; Krishnamachari, B.; Ueyama, J. An adaptive approach for UAV-based pesticide spraying in dynamic environments. Comput. Electron. Agric. 2017, 138, 210–223.

11. Zhang, D.Y.; Lan, Y.B.; Chen, L.P.; Wang, X.; Liang, D. Current Status and Future Trends of Agricultural Aerial Spraying Technology in China. Trans. Chin. Soc. Agric. Mach. 2014, 45, 53–59.

12. Wang, Z.; Chu, G.K.; Zhang, H.J.; Liu, S.X.; Huang, X.C.; Gao, F.R.; Zhang, C.Q.; Wang, J.X. Identification of diseased empty rice panicles based on Haar-like feature of UAV optical image. Trans. Chin. Soc. Agric. Eng. 2018, 34, 73–82.

13. Albetis, J.; Duthoit, S.; Guttler, F.; Jacquin, A.; Goulard, M.; Poilvé, H.; Féret, J.B.; Dedieu, G. Detection of Flavescence dorée Grapevine Disease Using Unmanned Aerial Vehicle (UAV) Multispectral Imagery. Remote Sens. 2017, 9, 308.

14. Dash, J.P.; Watt, M.S.; Pearse, G.D.; Heaphy, M.; Dungey, H.S. Assessing very high resolution UAV imagery for monitoring forest health during a simulated disease outbreak. ISPRS J. Photogramm. 2017, 131, 1–14.

15. Xuan-Mung, N.; Hong, S.-K. Improved Altitude Control Algorithm for Quadcopter Unmanned Aerial Vehicles. Appl. Sci. 2019, 9, 2122.

16. Wang, L.; Yang, Q.Q.; Guo, S.X. Recognition Algorithm of the Apron for Unmanned Aerial Vehicle Based on Image Corner Points. Laser J. 2016, 08, 71–74.

17. Olivares-Mendez, M.A.; Mondragón, I.; Campoy, P. Autonomous Landing of an Unmanned Aerial Vehicle using Image-Based Fuzzy Control. In Proceedings of the Second Workshop on Research, Development and Education on Unmanned Aerial Systems (RED-UAS 2013), Compiegne, France, 20–22 November 2013.

18. Amaral, T.G.; Pires, V.F.; Crisostomo, M.M. Autonomous Landing of an Unmanned Aerial Vehicle. In Proceedings of the International Conference on Computational Intelligence for Modelling, Control & Automation, & International Conference on Intelligent Agents, Web Technologies & Internet Commerce (CIMCA-IAWTIC’06), Vienna, Austria, 28–30 November 2005; Volume 2, pp. 530–536.

19. Bu, C.; Ai, Y.; Du, H. Vision-based autonomous landing for rotorcraft unmanned aerial vehicle. In Proceedings of the IEEE International Conference on Vehicular Electronics and Safety (ICVES), Beijing, China, 10–12 July 2016; pp. 1–6.

20. Kong, W.; Zhou, D.; Zhang, D.; Zhang, J. Vision-based autonomous landing system for unmanned aerial vehicle: A survey. In Proceedings of the International Conference on Multisensor Fusion and Information Integration for Intelligent Systems (MFI), Beijing, China, 28–29 September 2014; pp. 1–8.

21. Sharp, C.S.; Shakernia, O.; Sastry, S.S. A vision system for landing an unmanned aerial vehicle. In Proceedings of the ICRA IEEE International Conference on Robotics and Automation (Cat. No. 01CH37164), Seoul, Korea, 21–26 May 2001; pp. 1720–1727.

22. Anitha, G.; Kumar, R.N.G. Vision Based Autonomous Landing of an Unmanned Aerial Vehicle. Procedia Eng. 2012, 38, 2250–2256.

23. Sun, W.G.; Hao, Y.G. Autonomous landing of unmanned helicopter based on landmark’s geometrical feature. J. Comput. Appl. 2012, 32, 179–181.

24. Ye, L.H.; Wang, L.; Zhao, L.P. Field scene recognition method for low-small-slow unmanned aerial vehicle landing. J. Comput. Appl. 2017, 37, 2008–2013.

25. Sudevan, V.; Shukla, A.; Karki, H. Vision based Autonomous Landing of an Unmanned Aerial Vehicle on a Stationary Target. In Proceedings of the 17th International Conference on Control, Automation and Systems (ICCAS), Ramada Plaza, Jeju, Korea, 18–21 October 2017; pp. 362–367.

26. Hu, M.; Li, C.T. Application in real-time planning of UAV based on velocity vector field. In Proceedings of the International Conference on Electrical and Control Engineering, Wuhan, China, 25 June 2010; pp. 5022–5026.

27. Guanglei, M.; Jinlong, G.; Fengqin, S.; Feng, T. UAV real-time path planning using dynamic RCS based on velocity vector field. In Proceedings of the 26th Chinese Control and Decision Conference (2014 CCDC), Changsha, China, 31 May–2 June 2014; pp. 1376–1380.

28. Zhang, Z. Flexible Camera Calibration by Viewing a Plane from Unknown Orientations. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Kerkyra, Greece, 20–27 September 1999; pp. 666–673.

29. Olson, E. AprilTag: A robust and flexible visual fiducial system. In Proceedings of the IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 3400–3407.

30. Chai, L.; Xin, H.; Wang, Y.; Oliveira, J.; Wang, K.; Zhao, Y. Mitigating particulate matter generations of a commercial cage-free henhouse. Trans. ASABE 2019, 62, 877–886.

31. Guo, Y.; Chai, L.; Aggrey, S.E.; Oladeinde, A.; Johnson, J.; Zock, G. A Machine Vision-Based Method for Monitoring Broiler Chicken Floor Distribution. Sensors 2020, 20, 3179.

32. Chai, L.L.; Ni, J.Q.; Chen, Y.; Diehl, C.A.; Heber, A.J.; Lim, T.T. Assessment of long-term gas sampling design at two commercial manure-belt layer barns. J. Air. Waste Manag. 2010, 60, 702–710.

Figure 1.

The schematic diagram of unmanned aerial vehicle (UAV) platform: (A) system overall hardware structure diagram; (B) schematic diagram of electromagnet installation structure; (C) mobile apron structural diagram; and (D) overhead view of the built UAV.

Figure 2.

Triangular synthesis schematic diagram. The blue circle represents the UAV and the apron at time t1. The red circle represents the UAV and the apron at time t2, and the green dotted circle is the position of the apron in the UAV coordinate system. V0, V1, VF, ∆V, and ∆P are vectors, which have both magnitude and direction. The horizontal speed of the UAV was synthesized from VF and ∆V.

Figure 3.

Principle of the dynamic mean method (e.g., the 20th GPS value is the average of GPS readings 1–20).
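The averaging described in this caption can be sketched as a cumulative mean. This is a minimal illustration, not the authors' implementation: the function name `dynamic_mean`, the (latitude, longitude) tuple layout, and the reading of "dynamic mean" as an average over all fixes received so far (rather than a fixed-length window) are assumptions.

```python
from typing import Iterable, List, Tuple


def dynamic_mean(fixes: Iterable[Tuple[float, float]]) -> List[Tuple[float, float]]:
    """Return the cumulative mean of (latitude, longitude) fixes.

    Assumption: the k-th output is the plain average of fixes 1..k,
    matching the caption's example (20th value = mean of fixes 1-20).
    """
    smoothed: List[Tuple[float, float]] = []
    lat_sum = 0.0
    lon_sum = 0.0
    for count, (lat, lon) in enumerate(fixes, start=1):
        # Accumulate running sums so each new mean costs O(1).
        lat_sum += lat
        lon_sum += lon
        smoothed.append((lat_sum / count, lon_sum / count))
    return smoothed


if __name__ == "__main__":
    # Hypothetical readings, for illustration only.
    readings = [(1.0, 10.0), (3.0, 30.0), (5.0, 50.0)]
    for index, mean_fix in enumerate(dynamic_mean(readings), start=1):
        print(index, mean_fix)
```

Keeping running sums avoids re-reading earlier fixes each time a new GPS reading arrives, which suits an onboard loop that smooths positions as they stream in.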

Figure 4.

Image label. The image label is composed of a large icon (35 cm × 35 cm) and a small icon (4 cm × 4 cm).

Figure 5.

The poultry house model used for simulating UAV landing and control (the house measured 135 m (L) × 15 m (W) × 3 m (H); the photo was taken by the authors in a commercial broiler breeder house in Georgia, USA).

Figure 6.

Measurement of the field angle. The blue frame is the wall, the yellow frame is the field of view of the camera, and h is the vertical distance from the camera to the center of the field of view. The center of the red UK-flag-shaped mark is the center of the field of view, and black tape is attached at the midpoints of the upper and left boundaries of the field of view.

Figure 7.

Image detection: (a) detection results for images at different positions or rotations; (b) detection results of the anti-interference test.

Figure 8.

Distance relationship at different heights. The legend shows the actual distance from the camera to the wall. The horizontal axis is the actual distance from the image label to the center of the field of view. The vertical axis is the pixel distance from the image label to the center of the field of view.

Figure 9.

The error distribution of fixed-point landing. The horizontal axis is the experiment number. The vertical axis is the distance from the center of the UAV landing position to the center of the image label. Blue dots are the distance errors. Orange dots are the average error.

Figure 10.

The error distribution of landing when the apron moves at 3 km/h. The horizontal axis is the experiment number. The vertical axis is the distance from the center of the UAV landing position to the center of the image label. Red dots are the distance errors. Green dots are the average error.

Table 1.

Measurement results of field angle at different distances h.

| Number | Distance h (cm) | Length d1 (cm) | Width d2 (cm) | d2/d1 | Horizontal Field Angle θ1 (°) | Vertical Field Angle θ2 (°) |
|---|---|---|---|---|---|---|
| 1 | 73.60 | 35.50 | 26.60 | 0.74930 | 25.74975 | 19.87053 |
| 2 | 133.60 | 62.60 | 49.50 | 0.79073 | 25.10607 | 20.33014 |
| 3 | 193.60 | 92.50 | 68.60 | 0.74162 | 25.53799 | 19.51123 |
| 4 | 253.60 | 119.50 | 91.60 | 0.76653 | 25.23052 | 19.85967 |
| 5 | 313.60 | 147.20 | 110.90 | 0.75340 | 25.14481 | 19.47540 |
| 6 | 373.60 | 172.00 | 134.50 | 0.78198 | 24.72068 | 19.79944 |
| 7 | 433.60 | 202.00 | 152.90 | 0.75693 | 24.97929 | 19.42415 |
| 8 | 493.60 | 230.50 | 175.50 | 0.76139 | 25.03153 | 19.57291 |
| Mean ± SD | | | | 0.76273 ± 0.01548 | 25.18833 ± 0.30308 | 19.73093 ± 0.28117 |
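The angles in Table 1 are numerically consistent with θ = arctan(d/h), which suggests that d1 and d2 are measured from the center of the field of view to its boundary. The sketch below recomputes two rows under that assumption; the relation is inferred from the tabulated values rather than stated in the table, and the function name `field_angle_deg` is hypothetical.

```python
import math


def field_angle_deg(extent_cm: float, distance_cm: float) -> float:
    """Field angle in degrees, assuming theta = arctan(extent / distance).

    extent_cm   -- d1 or d2 from Table 1 (assumed measured from the
                   field-of-view center to its boundary)
    distance_cm -- camera-to-wall distance h
    """
    return math.degrees(math.atan(extent_cm / distance_cm))


if __name__ == "__main__":
    # (h, d1, d2) for rows 1 and 8 of Table 1, in centimeters.
    rows = [(73.60, 35.50, 26.60), (493.60, 230.50, 175.50)]
    for h, d1, d2 in rows:
        theta1 = field_angle_deg(d1, h)  # horizontal field angle
        theta2 = field_angle_deg(d2, h)  # vertical field angle
        print(f"h={h:.2f} cm  theta1={theta1:.3f} deg  theta2={theta2:.3f} deg")
```

Under this reading the angles stay close to 25° and 20° across all eight distances, which is what the small standard deviations in the last row of the table indicate.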

Table 2.

Variation of the field-of-view parameters.

| Difference between d2/d1 and the Mean | Difference between θ1 and the Mean (°) | Difference between θ2 and the Mean (°) |
|---|---|---|
| −0.01343 | 0.56142 | 0.13960 |
| 0.02800 | −0.08226 | 0.59921 |
| −0.02111 | 0.34966 | −0.21970 |
| 0.00380 | 0.04219 | 0.12874 |
| −0.00933 | −0.04352 | −0.25553 |
| 0.01925 | −0.46765 | 0.06851 |
| −0.00580 | −0.20904 | −0.30678 |
| −0.00134 | −0.15680 | −0.15802 |

Table 3.

Distance test results of the image label (80 mm × 80 mm).

D1 and D2 give the distance from the label center to the field-of-view center; S1 and S2 give the label edge length.

| Distance h (mm) | Real Distance D1 (mm) | Pixel Distance D2 (pixel) | D1/D2 (mm/pixel) | True Side Length S1 (mm) | Average Pixel Length S2 (pixel) | S1/S2 (mm/pixel) | Error δ (mm/pixel) |
|---|---|---|---|---|---|---|---|
| 736.00 | 141.40 | 269.00 | 0.53 | 80.00 | 153.00 | 0.52 | 0.01 |
| 1936.00 | 707.10 | 482.00 | 1.47 | 80.00 | 55.00 | 1.45 | 0.01 |
| 1936.00 | 500.00 | 341.00 | 1.47 | 80.00 | 55.00 | 1.45 | 0.01 |
| 2536.00 | 707.10 | 369.00 | 1.92 | 80.00 | 42.00 | 1.90 | 0.01 |
| 2536.00 | 500.00 | 271.00 | 1.85 | 80.00 | 44.00 | 1.82 | 0.01 |
| 3136.00 | 707.10 | 297.00 | 2.38 | 80.00 | 35.00 | 2.29 | 0.04 |
| 3136.00 | 500.00 | 209.00 | 2.39 | 80.00 | 34.50 | 2.32 | 0.03 |
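Table 3 compares two estimates of the image scale: D1/D2 from a known offset, and S1/S2 from the known label edge. A minimal sketch of how the label edge could be used to convert a pixel offset into millimeters follows; the function names are hypothetical, and the calculation assumes the scale is uniform across the image at a given distance h.

```python
def mm_per_pixel(side_mm: float, side_px: float) -> float:
    """Image scale estimated from the label edge (S1 / S2 in Table 3)."""
    return side_mm / side_px


def pixel_offset_to_mm(offset_px: float, side_mm: float, side_px: float) -> float:
    """Convert a pixel offset from the field-of-view center into millimeters.

    Assumes one uniform scale over the whole image at this distance.
    """
    return offset_px * mm_per_pixel(side_mm, side_px)


if __name__ == "__main__":
    # First row of Table 3: S1 = 80 mm, S2 = 153 px, D2 = 269 px, D1 = 141.40 mm.
    scale = mm_per_pixel(80.00, 153.00)
    estimate = pixel_offset_to_mm(269.00, 80.00, 153.00)
    print(f"scale = {scale:.4f} mm/pixel")
    print(f"estimated offset = {estimate:.2f} mm (measured D1 = 141.40 mm)")
```

Because the label edge is visible in every frame, this scale can be re-estimated at each height without a separate distance measurement.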

Table 4.

Distance test results of the image label (64 mm × 64 mm).

D1 and D2 give the distance from the label center to the field-of-view center; S1 and S2 give the label edge length.

| Distance h (mm) | Real Distance D1 (mm) | Pixel Distance D2 (pixel) | D1/D2 (mm/pixel) | True Side Length S1 (mm) | Average Pixel Length S2 (pixel) | S1/S2 (mm/pixel) | Error δ (mm/pixel) |
|---|---|---|---|---|---|---|---|
| 736.00 | 145.00 | 286.00 | 0.51 | 64.00 | 124.50 | 0.51 | 0.01 |
| 736.00 | 191.40 | 357.00 | 0.54 | 64.00 | 120.00 | 0.53 | 0.01 |
| 1936.00 | 707.10 | 486.00 | 1.45 | 64.00 | 46.50 | 1.38 | 0.06 |
| 1936.00 | 500.00 | 374.00 | 1.34 | 64.00 | 47.00 | 1.36 | 0.02 |
| 2536.00 | 707.10 | 369.00 | 1.92 | 64.00 | 34.00 | 1.88 | 0.02 |
| 2536.00 | 500.00 | 285.00 | 1.75 | 64.00 | 36.00 | 1.78 | 0.01 |
| 3136.00 | 707.10 | 297.00 | 2.38 | 64.00 | 28.00 | 2.29 | 0.04 |
| 3136.00 | 500.00 | 232.00 | 2.16 | 64.00 | 28.50 | 2.25 | 0.04 |

Table 5.

Effect of UAV running speed on landing accuracy (number of simulations n = 15).

| Horizontal Running Speed | 2 km/h | 3 km/h | 4 km/h |
|---|---|---|---|
| Mean ± SD | 6.22 ± 2.59 cm | 6.79 ± 3.26 cm | 7.14 ± 2.41 cm |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).