A Hough-Space-Based Automatic Online Calibration Method for a Side-Rear-View Monitoring System

Abstract

1. Introduction

2. Related Works

2.1. Offline Calibration

2.2. Online Calibration with Additional Devices

2.3. Online Calibration without Additional Devices

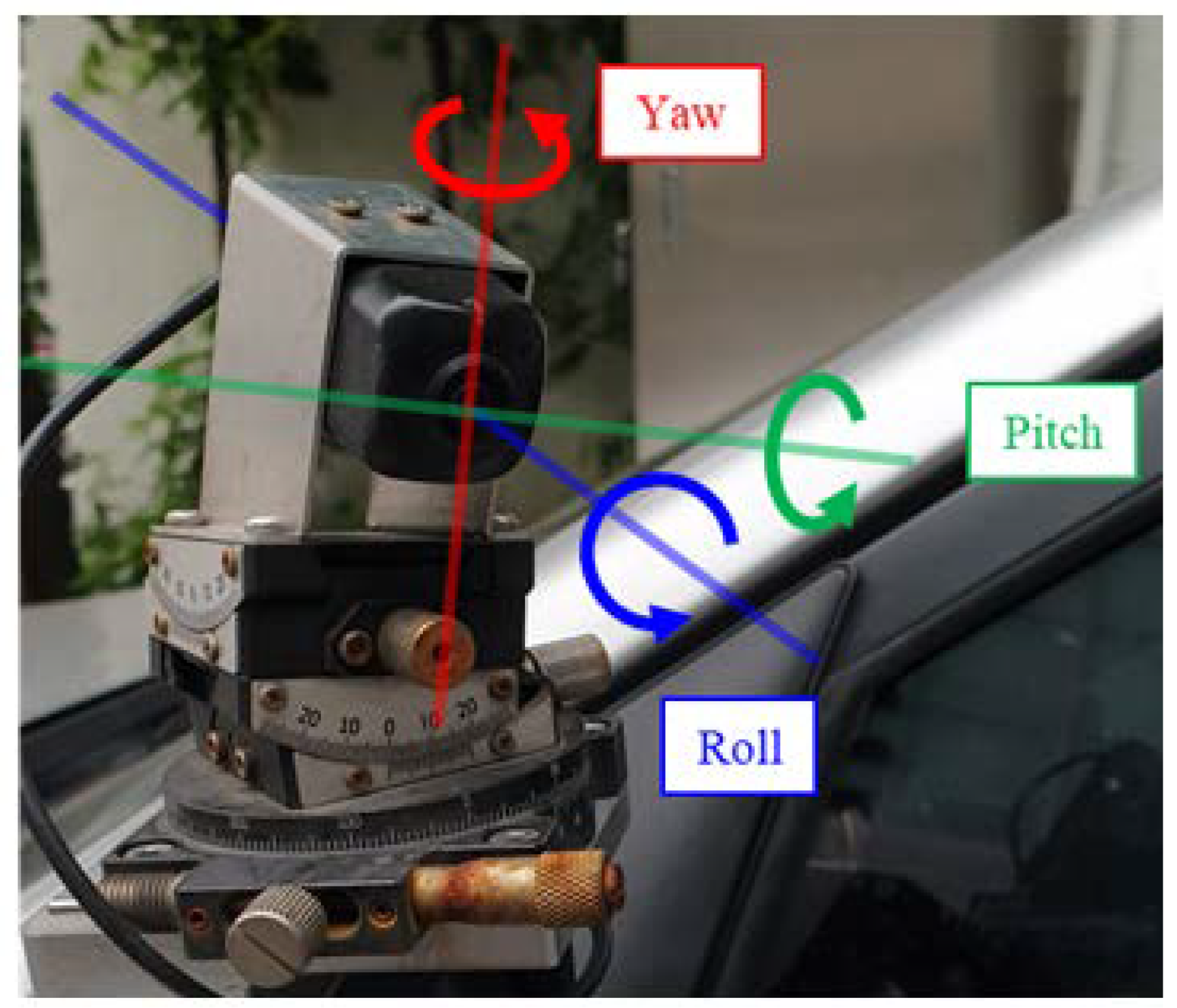

3. Automatic Online Calibration

3.1. Reflected-Vehicle Area Detection

3.2. RVA Comparative Analysis to Estimate Parameters

4. Simulation and Experimental Results

4.1. Experiments for Determining an Appropriate Number of Captured Images

4.2. Field Experiments for Quantitative and Qualitative Evaluation

4.2.1. Precision, Recall, and RMSE

4.2.2. Experiments with Various Cameras

4.3. Comparison with Previous Methods

4.4. Limitation of Calibration

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

| | Actual positive | Actual negative |
|---|---|---|
| Predicted positive | TP | FP |
| Predicted negative | FN | TN |
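
The precision and recall values reported in the evaluation follow from the confusion-matrix counts above by the standard definitions, precision = TP / (TP + FP) and recall = TP / (TP + FN). The following is a minimal sketch of that arithmetic; the function name `precision_recall` and the counts passed to it are hypothetical and are not taken from the paper's experiments.

```python
from typing import Tuple


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """Return (precision, recall) computed from confusion-matrix counts."""
    if tp + fp == 0 or tp + fn == 0:
        # Both ratios are undefined when their denominator is zero.
        raise ValueError("precision/recall undefined for a zero denominator")
    precision = tp / (tp + fp)  # share of predicted positives that are correct
    recall = tp / (tp + fn)     # share of actual positives that are found
    return precision, recall


# Hypothetical counts, chosen only to illustrate the arithmetic.
precision, recall = precision_recall(tp=90, fp=10, fn=30)
print(f"precision={precision:.4f}, recall={recall:.4f}")
```

TN does not enter either ratio, so both measures are insensitive to how large the correctly rejected region is.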

| | (Degree) | (Pixel) | (Pixel) | Precision with Calibration | Recall with Calibration | Precision without Calibration | Recall without Calibration |
|---|---|---|---|---|---|---|---|
| Average | −1.4000 | −124.6400 | −55.3000 | 0.9758 | 0.9239 | 0.6715 | 0.5929 |
| RMSE | 0.6164 | 4.9041 | 13.4763 | - | - | - | - |
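
The RMSE row can be read as the root-mean-square deviation of repeated parameter estimates from a reference value. The sketch below shows that computation for one parameter. It assumes a single scalar reference per parameter (the text available here does not state which reference the authors used), and the function name `rmse` and the sample values are hypothetical.

```python
import math
from typing import Sequence


def rmse(estimates: Sequence[float], reference: float) -> float:
    """Root-mean-square error of repeated estimates against one reference value."""
    if not estimates:
        raise ValueError("at least one estimate is required")
    squared_errors = [(e - reference) ** 2 for e in estimates]
    return math.sqrt(sum(squared_errors) / len(estimates))


# Hypothetical rotation estimates in degrees; the reference is an assumption.
angles = [-1.0, -2.0, -1.0, -2.0]
print(rmse(angles, reference=-1.5))
```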

| Method | Driver’s Convenience | Product Cost | Calibration Constraint |
|---|---|---|---|
| Offline calibration | Poor | Poor | Fair |
| Online calibration with additional devices | Good | Fair | Poor |
| Online calibration without additional devices (previous methods) | Good | Good | Poor |
| Online calibration without additional devices (proposed method) | Good | Good | Good |

| Camera Condition | Method | (Degree) | (Pixel) | (Pixel) |
|---|---|---|---|---|
| 150° FOV with lens distortion | proposed | 0 | 89 | −25 |
| 150° FOV with lens distortion | offline | 1 | 75 | 0 |
| 115° FOV with lens distortion | proposed | −1 | 96 | −71 |
| 115° FOV with lens distortion | offline | 1 | 103 | −6 |
| 115° FOV without lens distortion | proposed | 2 | 93 | −33 |
| 115° FOV without lens distortion | offline | 0 | 117 | −38 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lee, J.H.; Lee, D.-W. A Hough-Space-Based Automatic Online Calibration Method for a Side-Rear-View Monitoring System. Sensors 2020, 20, 3407. https://doi.org/10.3390/s20123407