A Cost-Effective In Situ Zooplankton Monitoring System Based on Novel Illumination Optimization

Abstract

1. Introduction

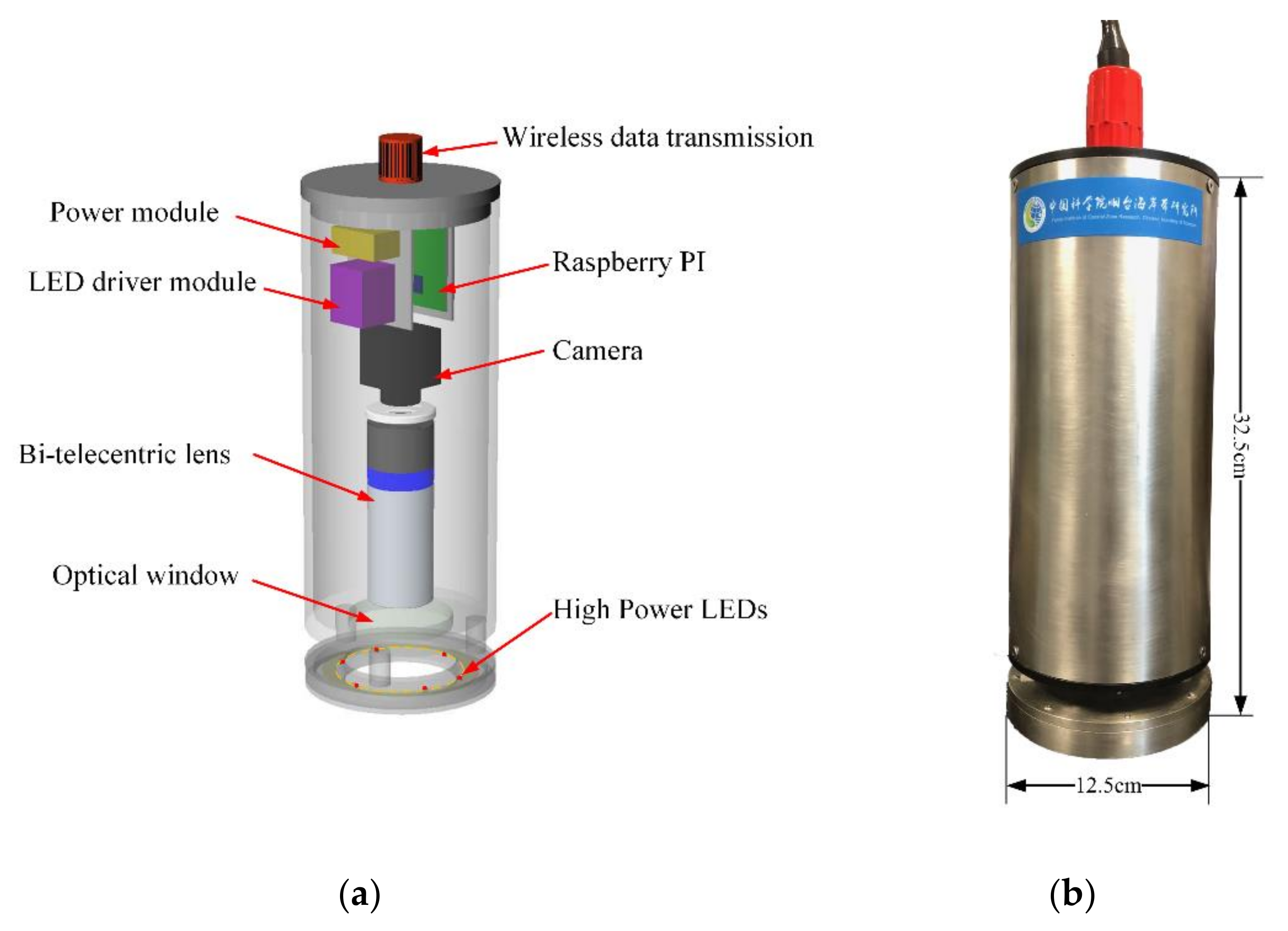

2. Experimental Section

2.1. Materials

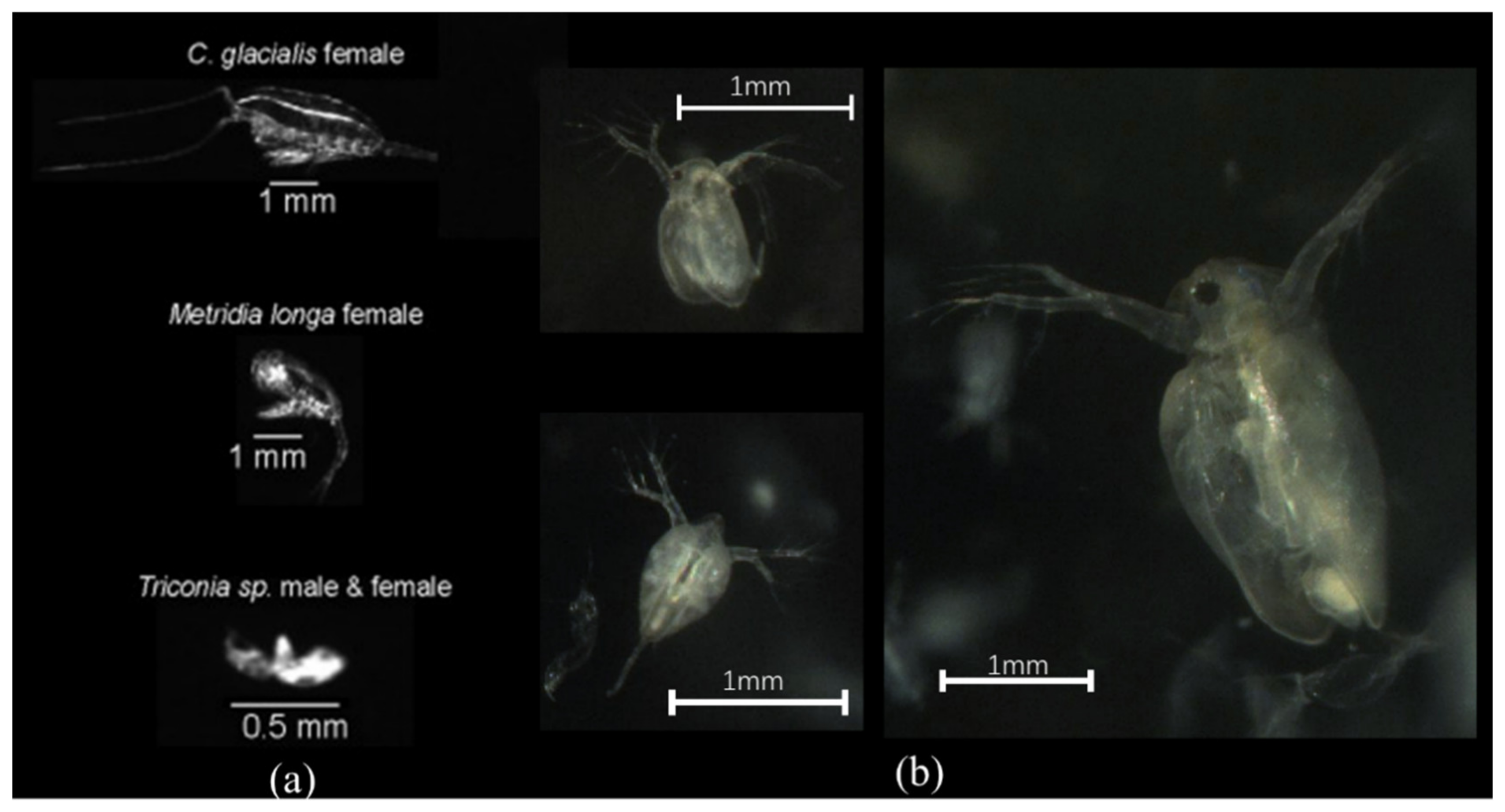

2.2. Underwater Optical Imaging of Zooplankton

2.3. Design of a Zooplankton Imaging System

2.4. Design of an Optimized Illuminator

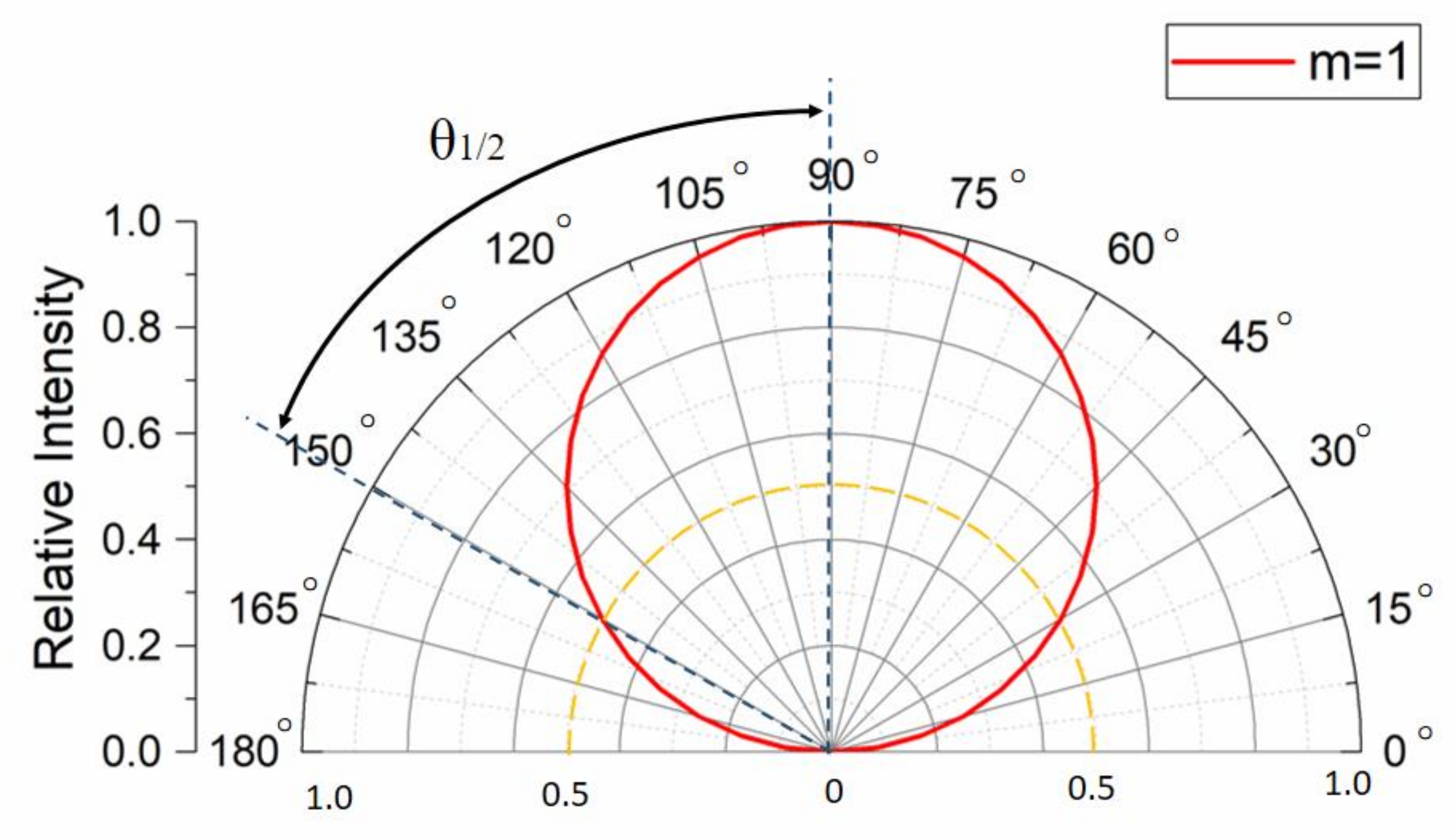

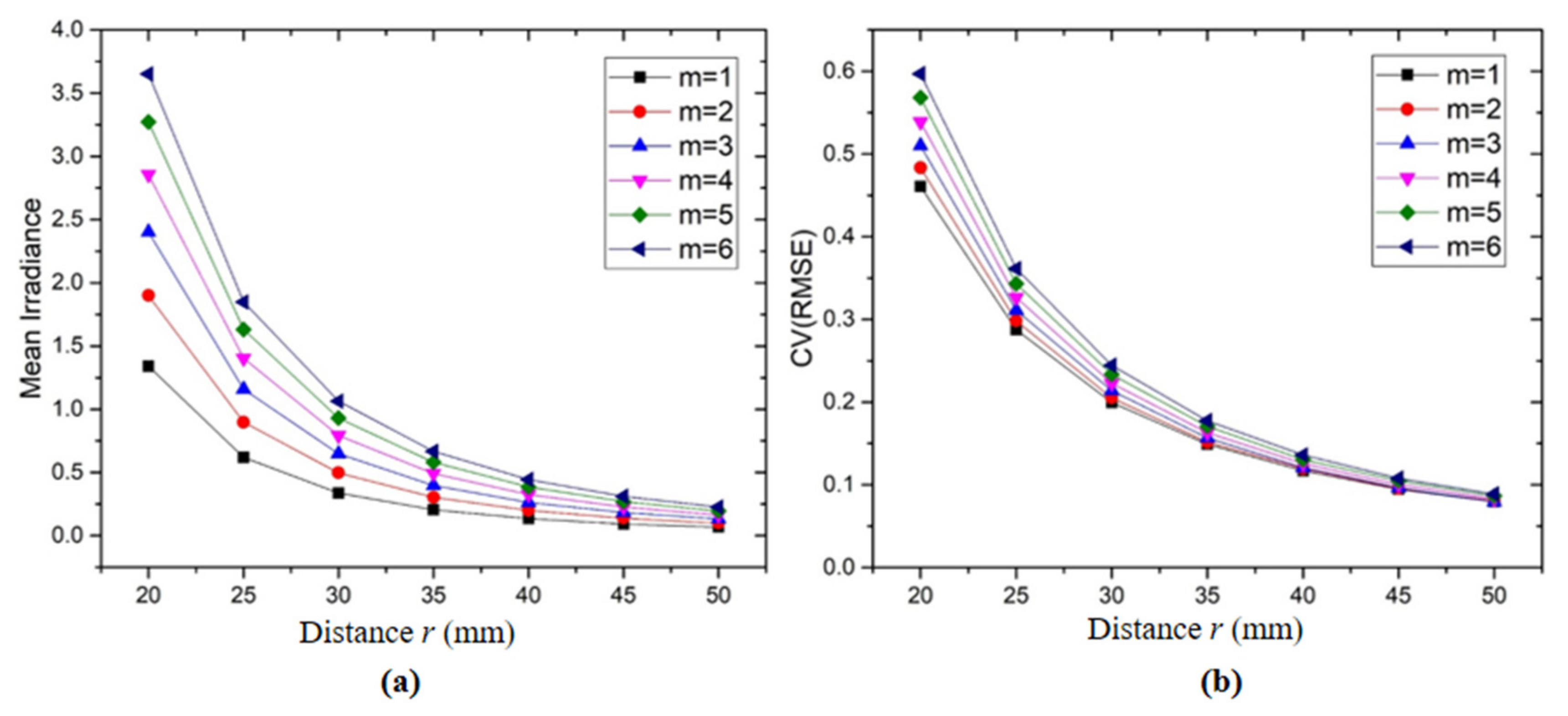

2.4.1. Analysis of the Illumination Properties of the LED Array

(1) Illumination Analysis of a Single LED

(2) Illumination Analysis of Grouped LEDs
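Single-LED and multi-LED illumination analyses of this kind commonly start from the generalized Lambertian emitter model, I(θ) = I₀ cos^m θ with Lambertian order m = −ln 2 / ln cos θ½ (θ½ is the half-intensity half-angle), and then add the irradiances of the mutually incoherent LEDs. The minimal sketch below evaluates that model on a flat target parallel to the LED board and reports min/max uniformity. The ring layout, the 30 mm working distance, the 20 mm × 20 mm target and the min/max uniformity metric are all assumptions for illustration, not the configuration described in this paper.

```python
import math

def lambertian_order(half_angle_deg: float) -> float:
    """Lambertian order m from the half-intensity half-angle (degrees)."""
    return -math.log(2) / math.log(math.cos(math.radians(half_angle_deg)))

def irradiance_single(x, y, led_x, led_y, z, i0, m):
    """Irradiance at (x, y) on a plane a distance z from an LED at (led_x, led_y).

    Assumed model: I(theta) = i0 * cos^m(theta). On a plane parallel to the LED
    board the incidence cosine adds one factor, so
    E = i0 * cos^(m+1)(theta) / r^2 = i0 * z^(m+1) / r^(m+3), since cos(theta) = z / r.
    """
    r2 = (x - led_x) ** 2 + (y - led_y) ** 2 + z ** 2
    return i0 * z ** (m + 1) / r2 ** ((m + 3) / 2)

def irradiance_array(x, y, leds, z, i0, m):
    """LEDs are mutually incoherent, so their irradiances simply add."""
    return sum(irradiance_single(x, y, lx, ly, z, i0, m) for lx, ly in leds)

def ring_positions(n_leds, radius):
    """Hypothetical layout: n_leds equally spaced on a circle of the given radius."""
    return [(radius * math.cos(2 * math.pi * k / n_leds),
             radius * math.sin(2 * math.pi * k / n_leds)) for k in range(n_leds)]

def uniformity(leds, z, i0, m, half_width, n_grid=21):
    """Min/max irradiance ratio over an n_grid x n_grid sample of a square target."""
    step = 2 * half_width / (n_grid - 1)
    values = [irradiance_array(-half_width + i * step, -half_width + j * step, leds, z, i0, m)
              for i in range(n_grid) for j in range(n_grid)]
    return min(values) / max(values)

if __name__ == "__main__":
    m = lambertian_order(60.0)  # 120-degree viewing angle gives m of about 1
    for radius in (0.0, 10.0, 20.0):
        u = uniformity(ring_positions(8, radius), z=30.0, i0=1.0, m=m, half_width=10.0)
        print(f"ring radius {radius:4.1f} mm -> uniformity {u:.3f}")
```

Sweeping a layout parameter such as the ring radius in this way shows how strongly uniformity depends on LED placement, which is what motivates searching the configuration space automatically rather than by hand.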

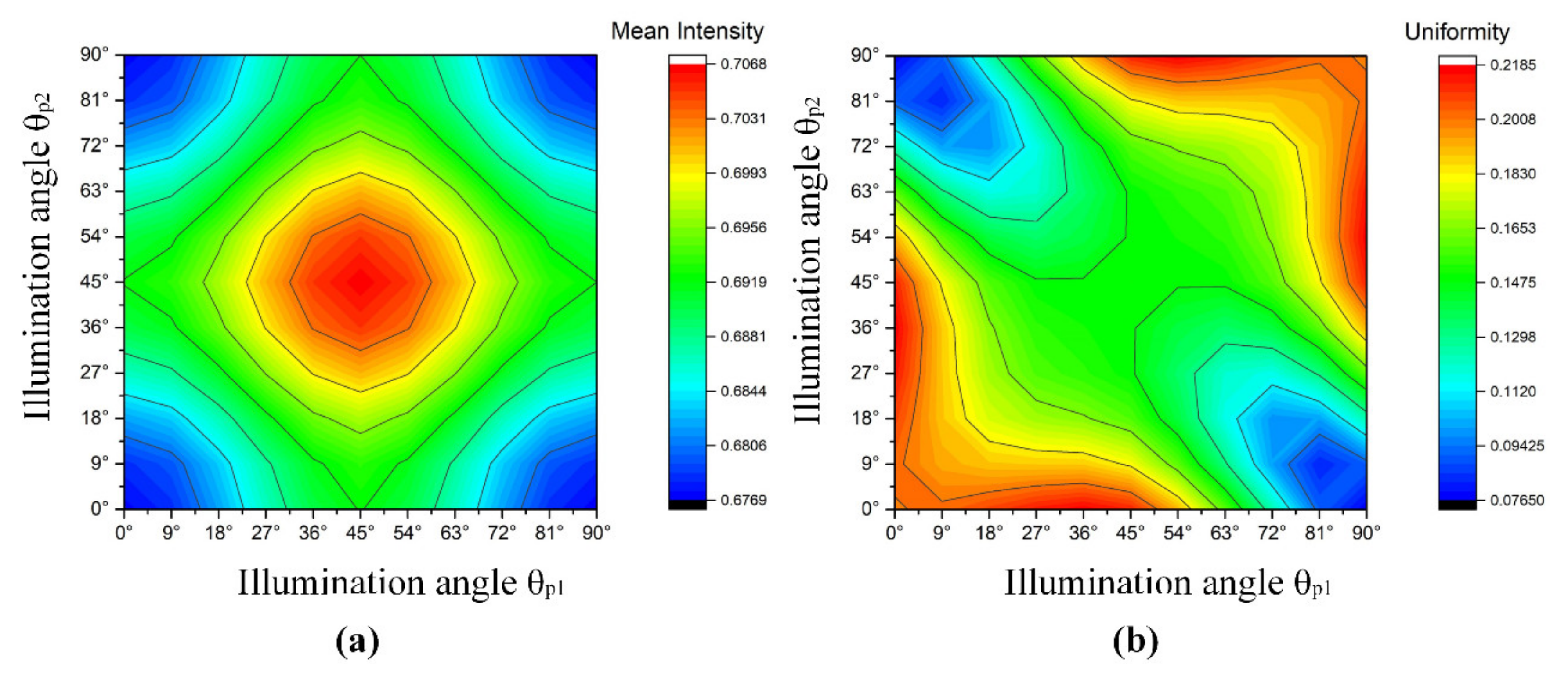

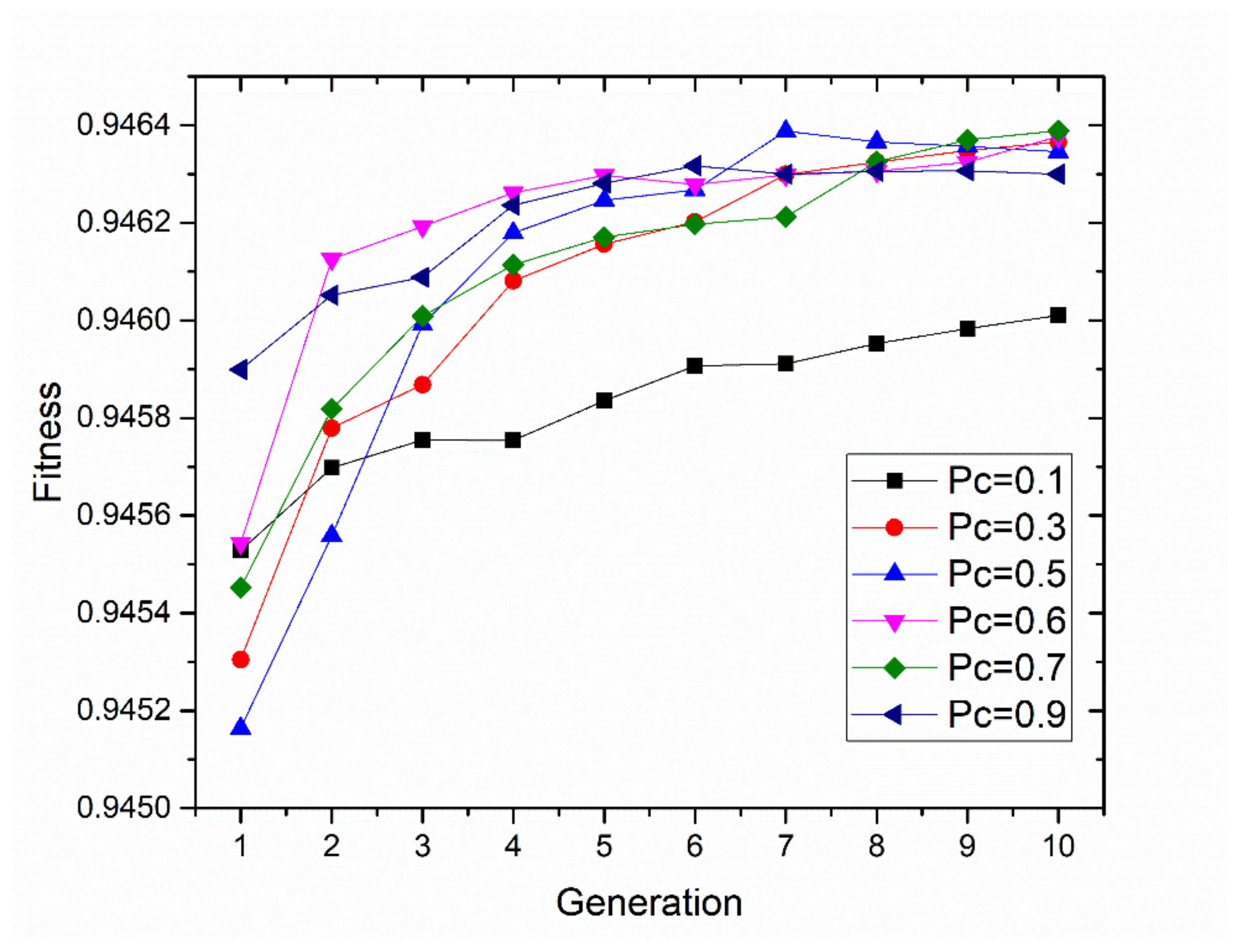

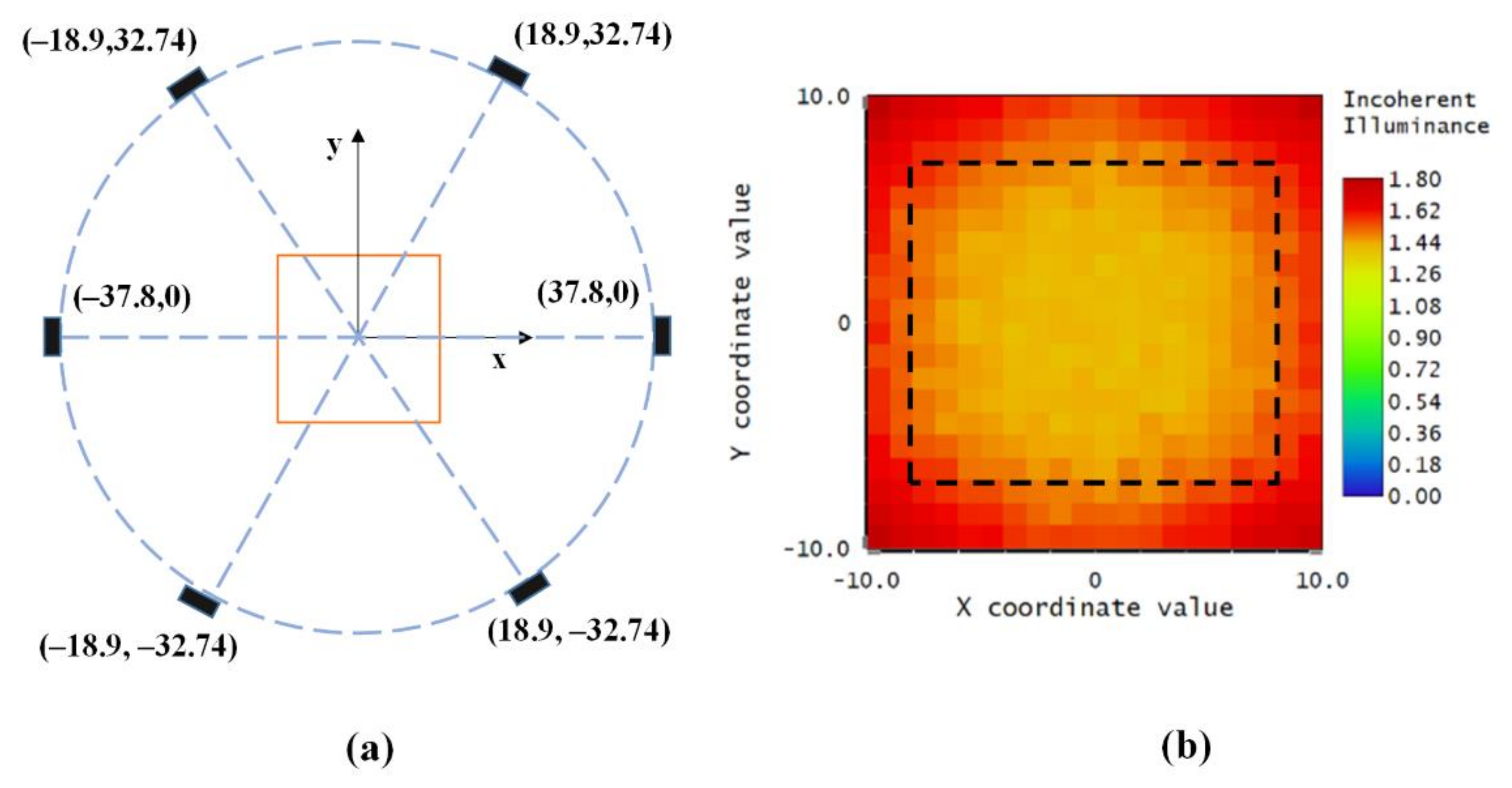

2.4.2. Optimizing the Illumination Configuration by Using the Genetic Algorithm

3. Results and Discussion

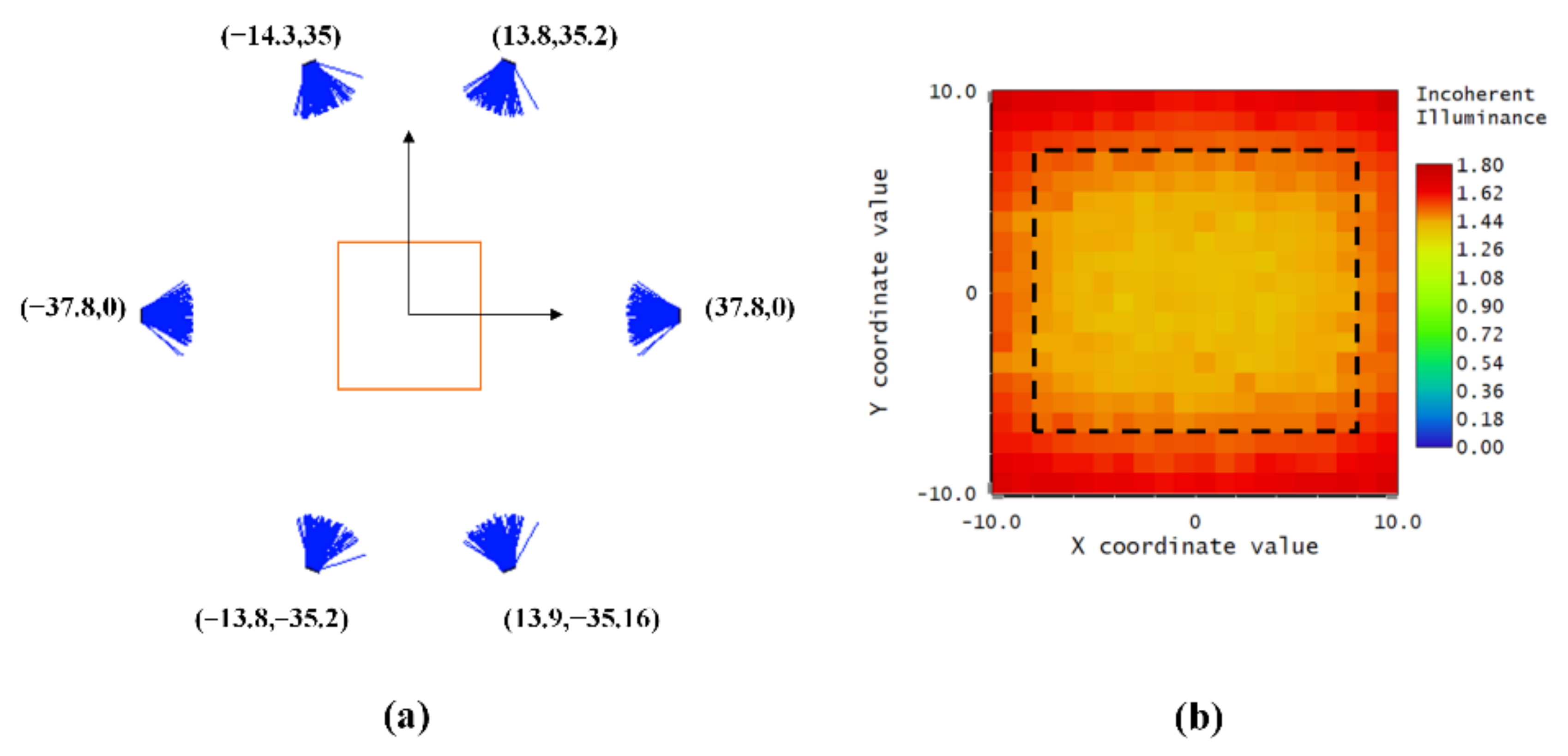

3.1. Design of an LED Array Based on the Genetic Algorithm

(1) Chromosomes

(2) Fitness Function

(3) Genetic Operators
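The three genetic-algorithm ingredients listed above (chromosomes, fitness function, genetic operators) can be illustrated with a compact real-coded GA. Everything specific in this sketch is an assumption: the chromosome is taken to be the radii of two hypothetical concentric rings of eight LEDs, the fitness is the min/max irradiance uniformity over a 20 mm × 20 mm target 30 mm from the LEDs (generalized Lambertian model with m = 1), and the operators are tournament selection, arithmetic crossover, Gaussian mutation and single-individual elitism. The encoding, fitness function and operator settings used in the paper may differ.

```python
import math
import random

# Hypothetical design constants (assumptions, not the paper's values)
Z = 30.0                 # LED-to-target distance, mm (held fixed)
M = 1.0                  # Lambertian order (m = 1: ideal Lambertian emitter)
HALF_WIDTH = 10.0        # half-width of the square target region, mm
LEDS_PER_RING = 8
R_BOUNDS = (0.0, 40.0)   # allowed ring radius range, mm

def irradiance(x, y, leds):
    """Sum of Lambertian contributions E = I0 * z^(m+1) / r^(m+3), with I0 = 1."""
    total = 0.0
    for lx, ly in leds:
        r2 = (x - lx) ** 2 + (y - ly) ** 2 + Z ** 2
        total += Z ** (M + 1) / r2 ** ((M + 3) / 2)
    return total

def decode(chrom):
    """Chromosome = [r_inner, r_outer]: radii of two concentric LED rings."""
    leds = []
    for radius in chrom:
        for k in range(LEDS_PER_RING):
            a = 2 * math.pi * k / LEDS_PER_RING
            leds.append((radius * math.cos(a), radius * math.sin(a)))
    return leds

def fitness(chrom, n_grid=11):
    """Uniformity = min / max irradiance over an n_grid x n_grid sample of the target."""
    leds = decode(chrom)
    step = 2 * HALF_WIDTH / (n_grid - 1)
    values = [irradiance(-HALF_WIDTH + i * step, -HALF_WIDTH + j * step, leds)
              for i in range(n_grid) for j in range(n_grid)]
    return min(values) / max(values)

def clip(gene):
    return min(max(gene, R_BOUNDS[0]), R_BOUNDS[1])

def tournament(pop, scores, rng, k=3):
    """Tournament selection: the fittest of k randomly drawn individuals."""
    contenders = rng.sample(range(len(pop)), k)
    return pop[max(contenders, key=lambda i: scores[i])]

def crossover(a, b, rng):
    """Arithmetic crossover: the child is a random convex combination of the parents."""
    w = rng.random()
    return [w * ga + (1 - w) * gb for ga, gb in zip(a, b)]

def mutate(chrom, rng, rate=0.2, sigma=2.0):
    """Gaussian mutation of each gene with probability `rate`, clipped to bounds."""
    return [clip(g + rng.gauss(0.0, sigma)) if rng.random() < rate else g for g in chrom]

def run_ga(pop_size=20, generations=30, seed=0):
    rng = random.Random(seed)
    pop = [[rng.uniform(*R_BOUNDS) for _ in range(2)] for _ in range(pop_size)]
    for _ in range(generations):
        scores = [fitness(c) for c in pop]
        elite = pop[max(range(pop_size), key=lambda i: scores[i])]
        children = [elite[:]]  # elitism: carry the best individual over unchanged
        while len(children) < pop_size:
            p1 = tournament(pop, scores, rng)
            p2 = tournament(pop, scores, rng)
            children.append(mutate(crossover(p1, p2, rng), rng))
        pop = children
    scores = [fitness(c) for c in pop]
    best = max(range(pop_size), key=lambda i: scores[i])
    return pop[best], scores[best]

if __name__ == "__main__":
    best, score = run_ga()
    print(f"ring radii (mm): {best[0]:.2f}, {best[1]:.2f}  uniformity: {score:.3f}")
```

Elitism guarantees that the best fitness never decreases from one generation to the next. The working distance is held fixed here because uniformity alone can be improved by moving the LEDs farther from the target, at the cost of irradiance; a practical fitness function might therefore also penalise low mean irradiance.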

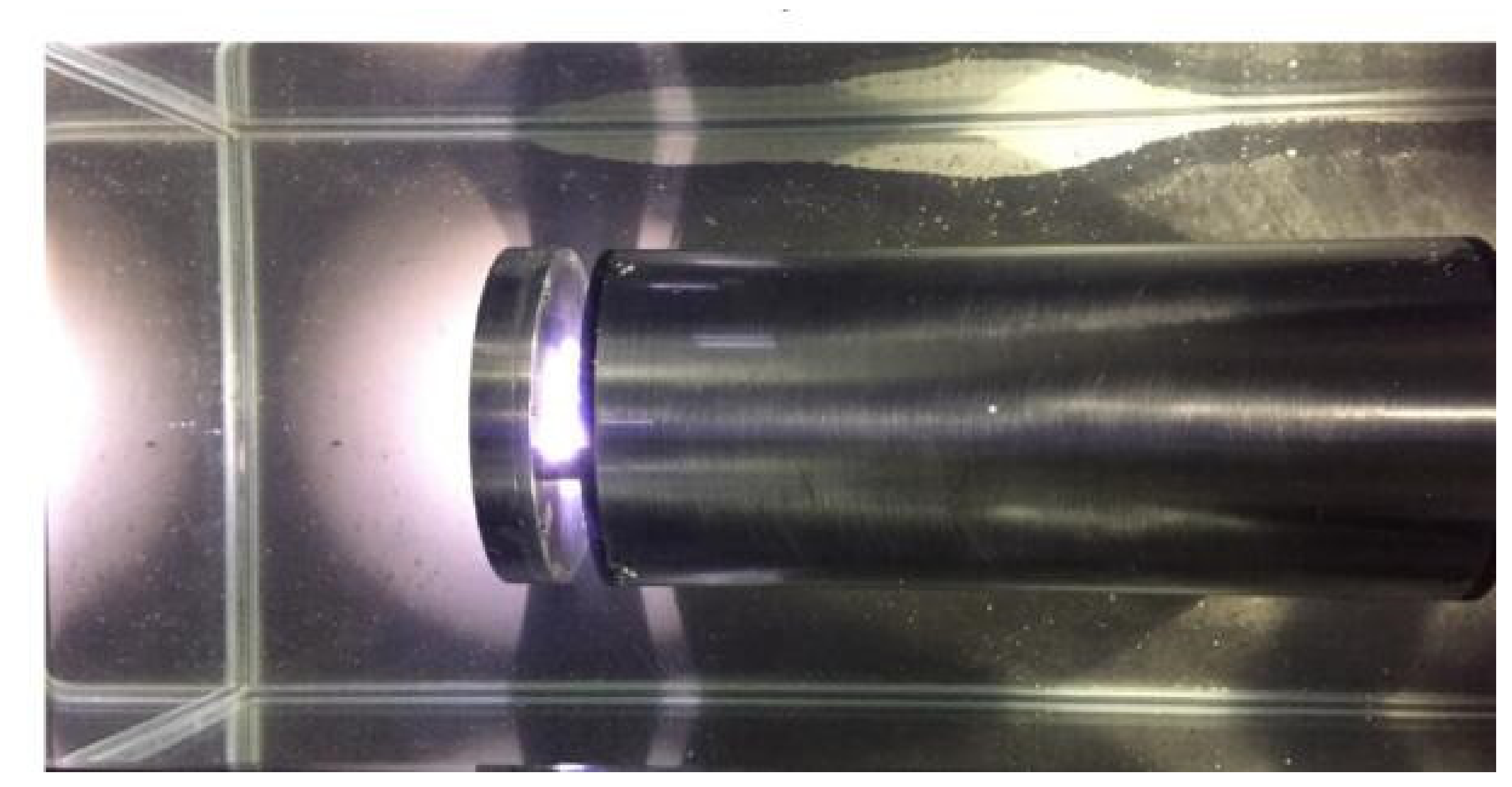

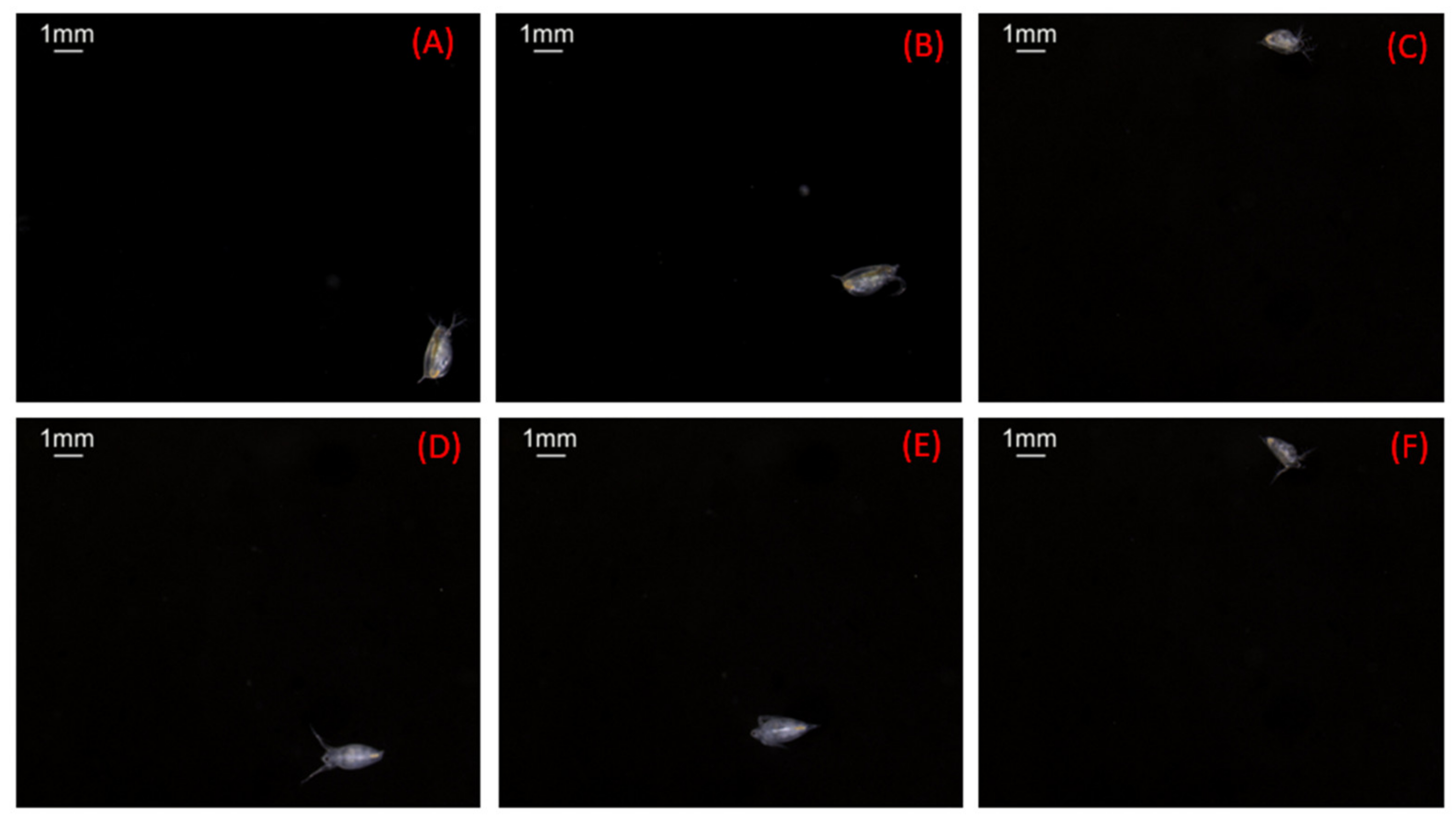

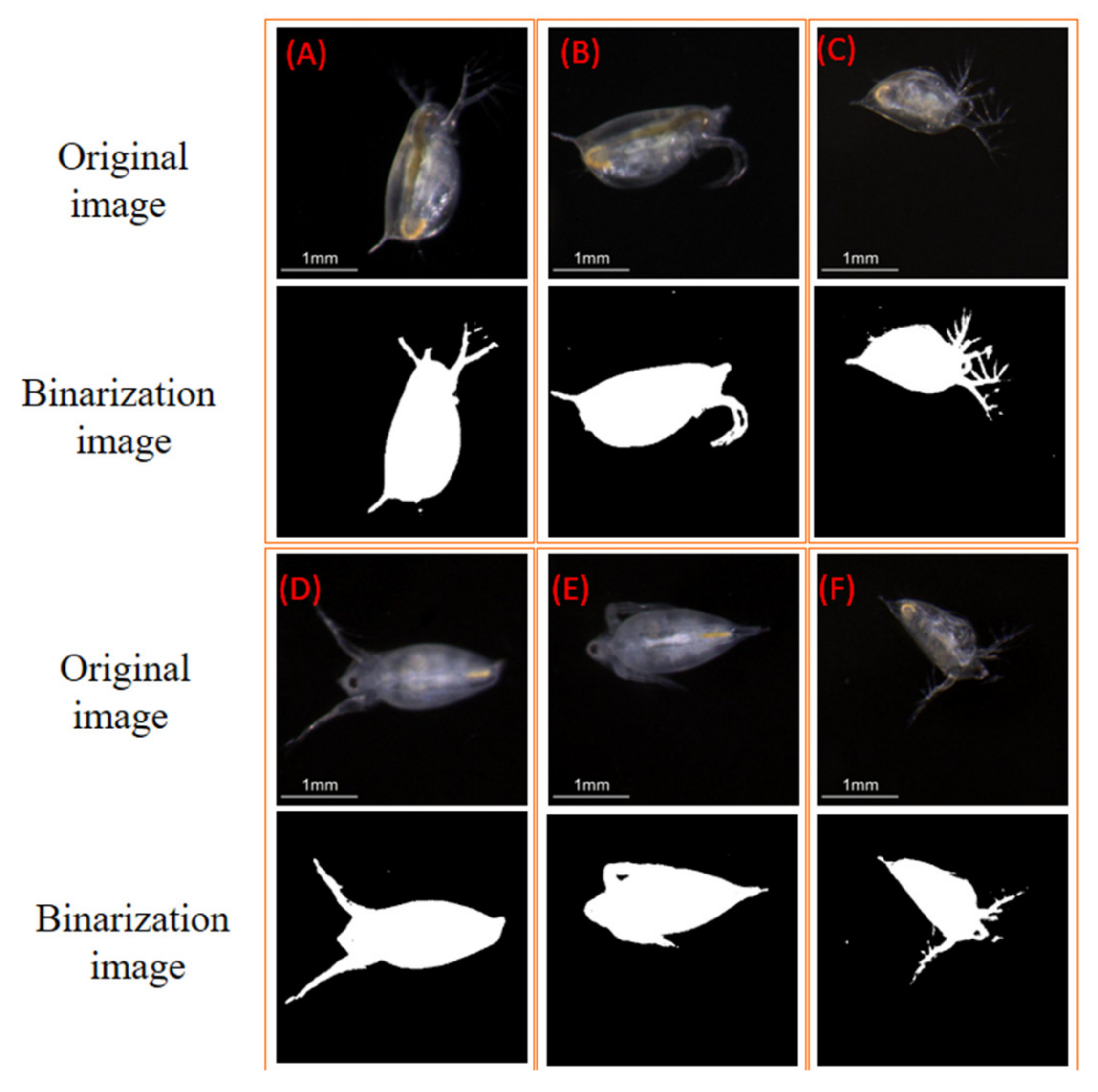

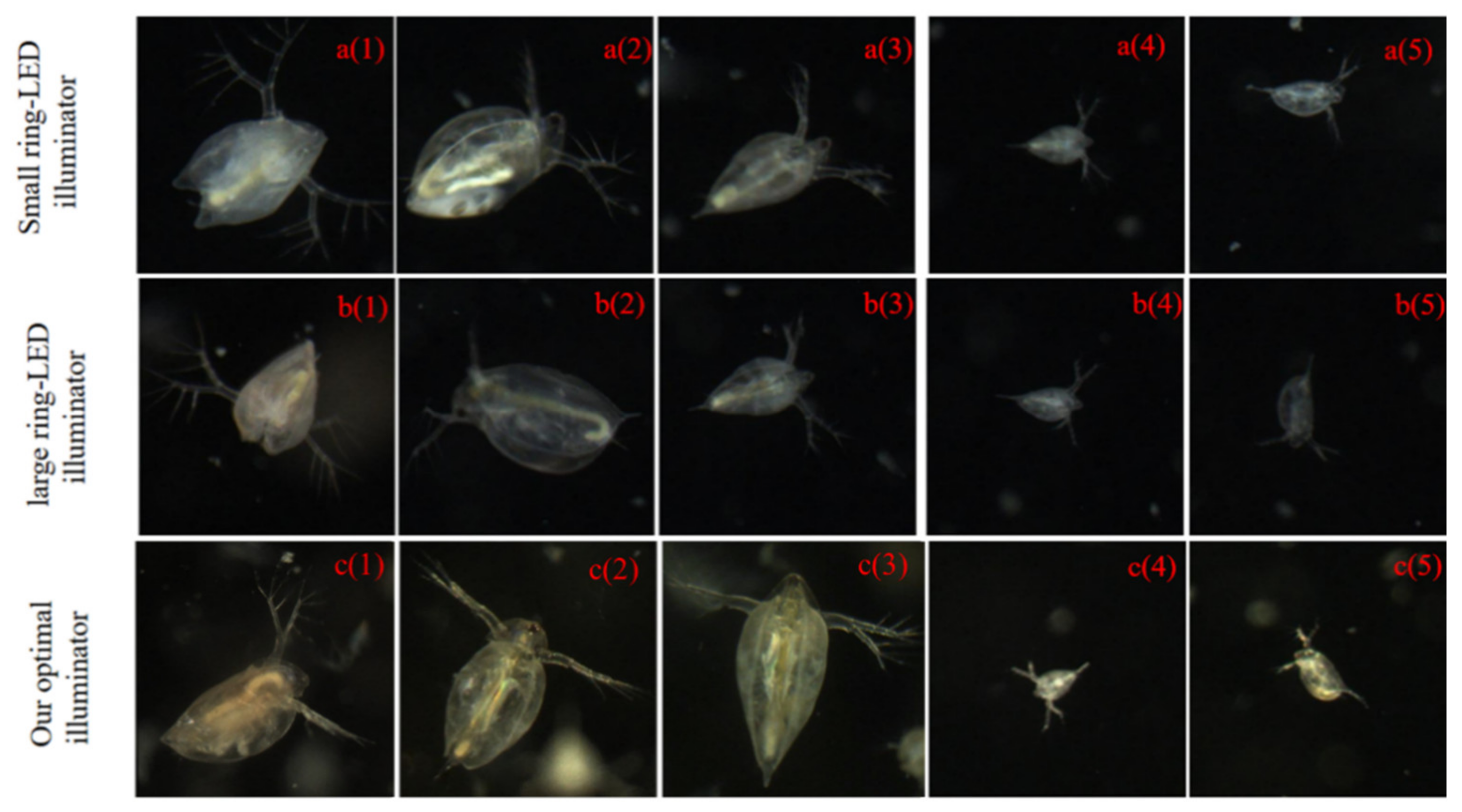

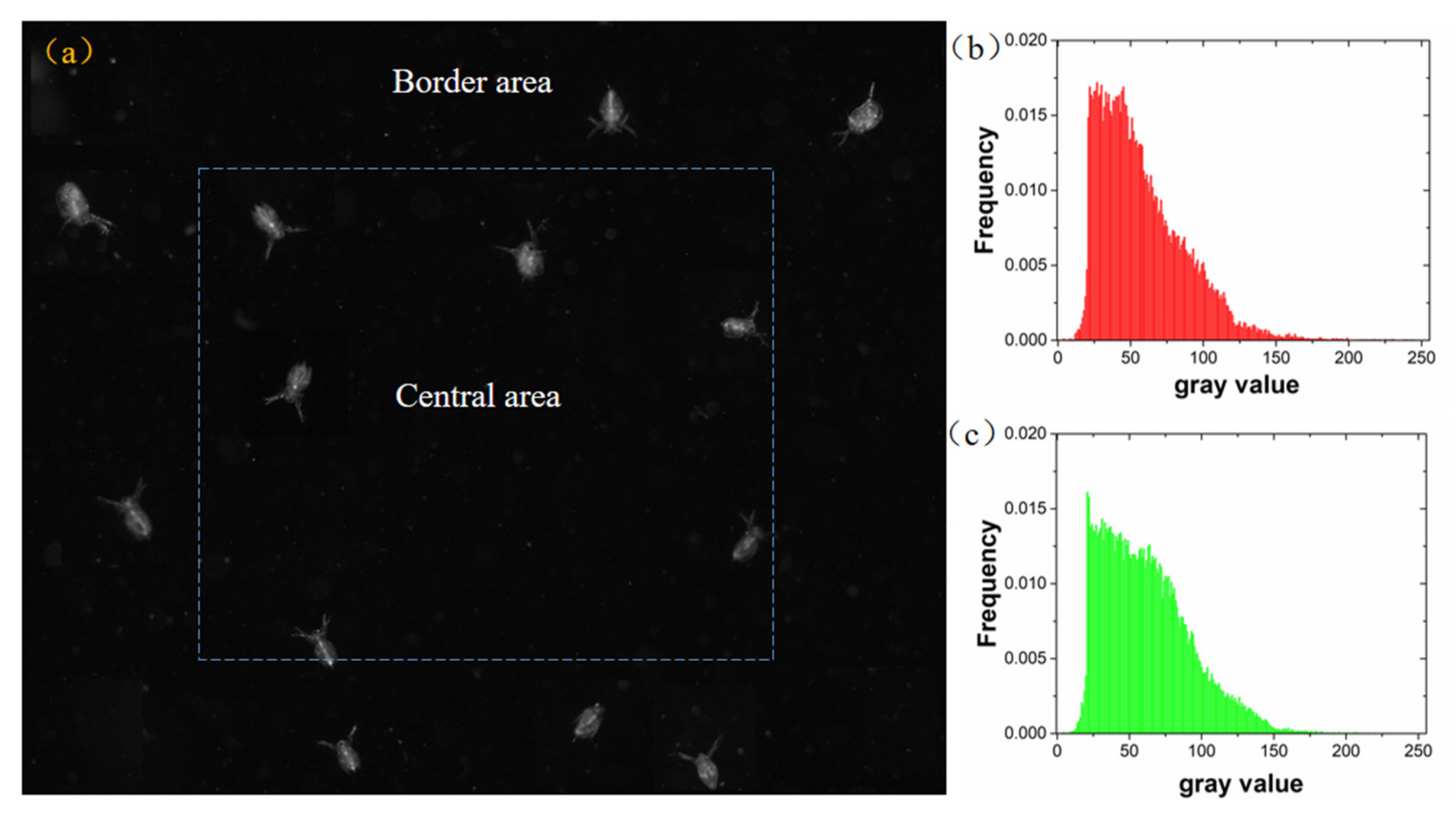

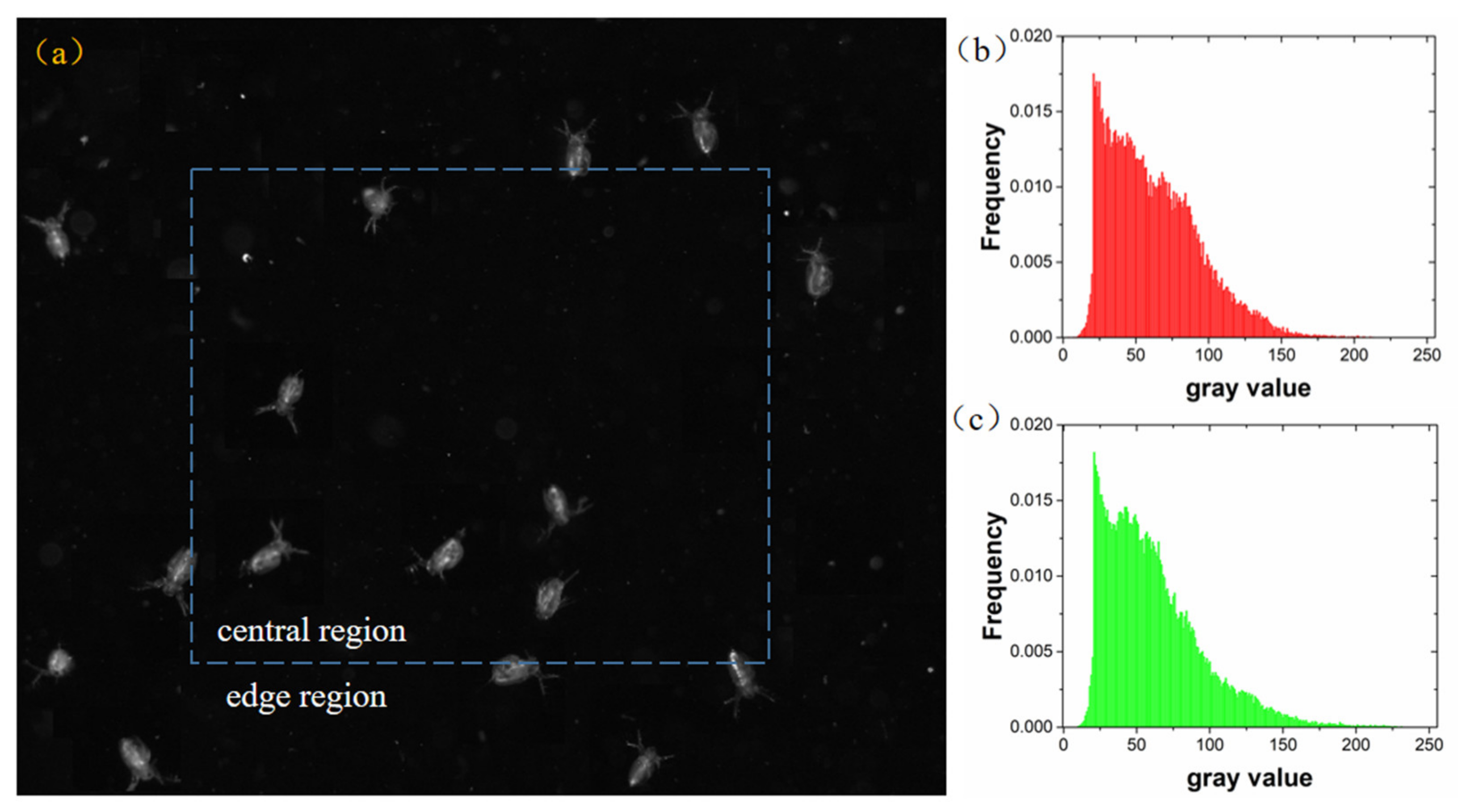

3.2. Experiments with the Zooplankton Imaging System

4. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Parameter | A | B | C | D | E | F |
|---|---|---|---|---|---|---|
| Area (mm²) | 1.663 | 1.673 | 1.177 | 1.626 | 1.526 | 1.080 |
| Major axis length (mm) | 2.382 | 2.308 | 1.856 | 2.504 | 2.055 | 1.671 |
| Minor axis length (mm) | 1.040 | 1.076 | 0.928 | 1.122 | 1.012 | 1.205 |
| Equivalent diameter (mm) | 1.455 | 1.459 | 1.198 | 1.439 | 1.394 | 1.173 |
| Perimeter (mm) | 9.778 | 7.789 | 9.633 | 9.770 | 6.473 | 8.692 |
| Mean brightness | 95.04 | 76.24 | 73.94 | 83.99 | 78.93 | 68.44 |
| Standard deviation of brightness | 42.68 | 34.77 | 40.75 | 37.02 | 35.03 | 35.10 |
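The equivalent diameter in the morphological table appears to follow the standard definition, the diameter of a circle with the same area A as the segmented specimen, d_eq = √(4A/π), with A in mm²: the tabulated values for specimens A, B, D, E and F agree with it to within rounding. The short check below assumes that definition; the dictionary simply copies those table entries.

```python
import math

def equivalent_diameter(area_mm2: float) -> float:
    """Diameter (mm) of the circle whose area equals the specimen's area (mm^2)."""
    return math.sqrt(4.0 * area_mm2 / math.pi)

# (area in mm^2, tabulated equivalent diameter in mm), copied from the table
TABLE = {"A": (1.663, 1.455), "B": (1.673, 1.459), "D": (1.626, 1.439),
         "E": (1.526, 1.394), "F": (1.080, 1.173)}

if __name__ == "__main__":
    for name, (area, d_tab) in TABLE.items():
        print(f"{name}: computed {equivalent_diameter(area):.4f} mm, tabulated {d_tab} mm")
```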

| Method | Formula |
|---|---|
| Energy | |
| Contrast | |
| Entropy | |
| Homogeneity | |
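The texture features named in the table above (energy, contrast, entropy, homogeneity) are usually computed from a normalized gray-level co-occurrence matrix (GLCM) p(i, j). A minimal sketch using the commonly cited definitions: energy = Σ p², contrast = Σ (i − j)² p, homogeneity = Σ p / (1 + |i − j|), entropy = −Σ p log₂ p. The pixel offset, the number of gray levels, the log base and the homogeneity denominator are assumptions here and may not match the settings behind the reported values; some libraries report √(Σ p²) as energy, and some use 1 + (i − j)² in the homogeneity denominator.

```python
import math
from collections import Counter

def glcm(image, levels, dx=1, dy=0, symmetric=True):
    """Normalized GLCM for pixel offset (dx, dy).

    image: 2-D list of ints already quantized to range(levels).
    """
    counts = Counter()
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = image[r][c], image[r2][c2]
                counts[(i, j)] += 1
                if symmetric:  # count each pair in both directions
                    counts[(j, i)] += 1
    total = sum(counts.values())
    p = [[0.0] * levels for _ in range(levels)]
    for (i, j), n in counts.items():
        p[i][j] = n / total
    return p

def texture_features(p):
    """Energy, contrast, homogeneity and entropy of a normalized GLCM."""
    cells = [(i, j, v) for i, row in enumerate(p) for j, v in enumerate(row)]
    return {
        "energy": sum(v * v for _, _, v in cells),  # angular second moment
        "contrast": sum((i - j) ** 2 * v for i, j, v in cells),
        "homogeneity": sum(v / (1 + abs(i - j)) for i, j, v in cells),
        "entropy": -sum(v * math.log2(v) for _, _, v in cells if v > 0),
    }

if __name__ == "__main__":
    checkerboard = [[0, 1], [1, 0]]
    print(texture_features(glcm(checkerboard, levels=2)))
```

A flat image concentrates the whole GLCM in one diagonal cell (energy 1, contrast 0, entropy 0), whereas strong pixel-to-pixel variation spreads probability off the diagonal, raising contrast and entropy and lowering energy and homogeneity.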

| Image | Energy | Contrast | Homogeneity | Entropy |
|---|---|---|---|---|
| a1 | 0.6478 | 0.0270 | 0.9866 | 4.6817 |
| b1 | 0.6663 | 0.0291 | 0.9857 | 5.1094 |
| c1 | 0.5906 | 0.0359 | 0.9825 | 5.3417 |
| a2 | 0.6184 | 0.0368 | 0.9823 | 4.7674 |
| b2 | 0.6600 | 0.0168 | 0.9916 | 4.4639 |
| c2 | 0.6842 | 0.0738 | 0.9738 | 4.9676 |
| a3 | 0.7594 | 0.0162 | 0.9919 | 4.0554 |
| b3 | 0.8475 | 0.0103 | 0.9948 | 3.6599 |
| c3 | 0.6181 | 0.0651 | 0.9727 | 4.8811 |
| a4 | 0.9283 | 0.0054 | 0.9973 | 2.8527 |
| b4 | 0.9375 | 0.0054 | 0.9973 | 3.1140 |
| c4 | 0.9381 | 0.0145 | 0.9946 | 3.4211 |
| a5 | 0.9191 | 0.0099 | 0.9951 | 3.4720 |
| b5 | 0.9376 | 0.0046 | 0.9977 | 2.9578 |
| c5 | 0.8881 | 0.0190 | 0.9923 | 3.9563 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Du, Z.; Xia, C.; Fu, L.; Zhang, N.; Li, B.; Song, J.; Chen, L. A Cost-Effective In Situ Zooplankton Monitoring System Based on Novel Illumination Optimization. Sensors 2020, 20, 3471. https://doi.org/10.3390/s20123471


Du, Zhiqiang, Chunlei Xia, Longwen Fu, Nan Zhang, Bowei Li, Jinming Song, and Lingxin Chen. 2020. "A Cost-Effective In Situ Zooplankton Monitoring System Based on Novel Illumination Optimization." Sensors 20, no. 12: 3471. https://doi.org/10.3390/s20123471