GAS-GCN: Gated Action-Specific Graph Convolutional Networks for Skeleton-Based Action Recognition

Abstract

1. Introduction

- We propose an action-specific graph convolutional module that combines structural and implicit edges and automatically learns the ratio between them from the input skeleton data.

- To filter out useless and redundant temporal information, we propose a gated CNN along the temporal dimension. Channel-wise attention is adopted in both the GCN and the gated CNN for information filtering.

- A residual, cascaded framework integrates these two major components, enhancing and fusing their strengths. The proposed method outperforms state-of-the-art methods on the NTU-RGB + D, NTU-RGB + D 120, and Kinetics datasets for skeleton-based action recognition.
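As a rough illustration of the first contribution, the NumPy sketch below mixes a fixed structural adjacency (the skeleton's bones) with a data-dependent implicit adjacency, using a ratio predicted from the input. It is a minimal sketch under stated assumptions, not the authors' formulation: the embedded dot-product similarity, the sigmoid gate on mean-pooled features, and all names (`w_theta`, `w_phi`, `w_gate`, `w_out`, the 5-joint chain) are hypothetical choices made only for this example.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row maximum for numerical stability.
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def normalize_adjacency(a):
    # Add self-loops, then row-normalize so every row sums to 1.
    a = a + np.eye(a.shape[0])
    return a / a.sum(axis=1, keepdims=True)

def implicit_edges(x, w_theta, w_phi):
    # Assumed form of implicit edges: softmax over embedded joint similarity.
    # x: (V, C) joint features -> (V, V) row-stochastic matrix.
    scores = (x @ w_theta) @ (x @ w_phi).T
    return softmax(scores, axis=1)

def mixing_ratio(x, w_gate):
    # Hypothetical gate: a scalar in (0, 1) from mean-pooled joint features.
    pooled = x.mean(axis=0)
    return 1.0 / (1.0 + np.exp(-(pooled @ w_gate)))

def action_specific_gconv(x, a_struct, w_theta, w_phi, w_gate, w_out):
    a_s = normalize_adjacency(a_struct)       # structural edges
    a_i = implicit_edges(x, w_theta, w_phi)   # implicit edges
    r = mixing_ratio(x, w_gate)               # input-dependent ratio
    a = r * a_s + (1.0 - r) * a_i             # convex combination of both
    return a @ x @ w_out, a, r

# Toy skeleton: 5 joints connected in a chain, 3 input channels per joint.
rng = np.random.default_rng(0)
num_joints, c_in, c_embed, c_out = 5, 3, 4, 8
a_struct = np.zeros((num_joints, num_joints))
for j in range(num_joints - 1):
    a_struct[j, j + 1] = a_struct[j + 1, j] = 1.0

x = rng.normal(size=(num_joints, c_in))
out, a_mix, ratio = action_specific_gconv(
    x,
    a_struct,
    rng.normal(size=(c_in, c_embed)),
    rng.normal(size=(c_in, c_embed)),
    rng.normal(size=c_in),
    rng.normal(size=(c_in, c_out)),
)
print(out.shape, float(ratio))
```

Because both adjacency matrices are row-stochastic and the ratio lies in (0, 1), the mixed matrix stays row-stochastic, so each joint's output remains a weighted average over the joints it is connected to.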

2. Related Work

3. Methods

3.1. Graph Convolutional Networks

3.2. Action-Specific Graph Convolutional Module

3.3. Gated Convolutional Neural Networks
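The gating idea behind a temporal gated CNN can be sketched in the style of the gated linear unit of Dauphin et al. (listed in the references): one convolution over time produces candidate features, and a second one, passed through a sigmoid, decides how much of each feature to keep. The NumPy code below is a minimal sketch assuming that form; the kernel size, the shapes, and the names `w_val` and `w_gate` are hypothetical, and the channel-wise attention mentioned in the contribution list is not included.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def temporal_conv(x, w, b):
    # Zero-padded 1D convolution over time (cross-correlation, as is usual
    # in deep learning). x: (T, C_in), w: (K, C_in, C_out) with odd K,
    # b: (C_out,). Returns an array of shape (T, C_out).
    k = w.shape[0]
    pad = k // 2
    xp = np.pad(x, ((pad, pad), (0, 0)))
    t = x.shape[0]
    out = np.empty((t, w.shape[2]))
    for i in range(t):
        window = xp[i:i + k]                          # (K, C_in) frames
        out[i] = np.einsum("kc,kco->o", window, w) + b
    return out

def gated_temporal_conv(x, w_val, b_val, w_gate, b_gate):
    value = temporal_conv(x, w_val, b_val)            # candidate features
    gate = sigmoid(temporal_conv(x, w_gate, b_gate))  # values in (0, 1)
    return value * gate, gate

# Toy sequence: 6 frames, 3 input channels, 4 output channels, kernel size 3.
rng = np.random.default_rng(0)
x = rng.normal(size=(6, 3))
w_val = rng.normal(size=(3, 3, 4))
w_gate = rng.normal(size=(3, 3, 4))
b_val = np.zeros(4)
b_gate = np.zeros(4)

y, gate = gated_temporal_conv(x, w_val, b_val, w_gate, b_gate)

# A strongly negative gate bias closes the gate and suppresses the output.
y_closed, _ = gated_temporal_conv(
    x, w_val, b_val, np.zeros_like(w_gate), np.full(4, -50.0)
)
print(y.shape, float(np.abs(y_closed).max()))
```

The second call shows the filtering behaviour: when the gate saturates near zero, the corresponding temporal features are almost entirely removed from the output.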

3.4. The Gated Action-Specific Convolutional Networks

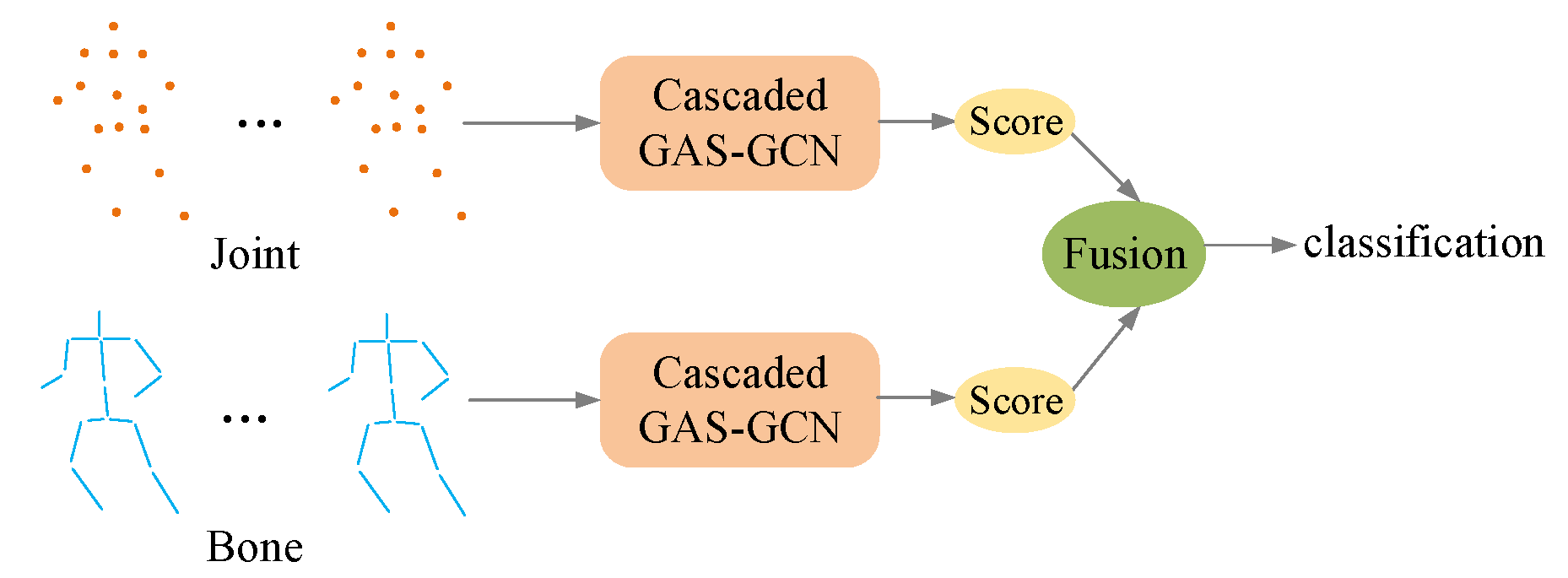

3.5. The Architecture of the Networks

4. Experiments

4.1. Datasets

4.2. Experimental Details

4.3. Comparisons with the State of the Art

4.3.1. NTU-RGB + D Dataset

4.3.2. Kinetics Dataset

4.4. Ablation Experiments

4.4.1. Ablation Results

4.4.2. The Results of Two-Stream Fusion

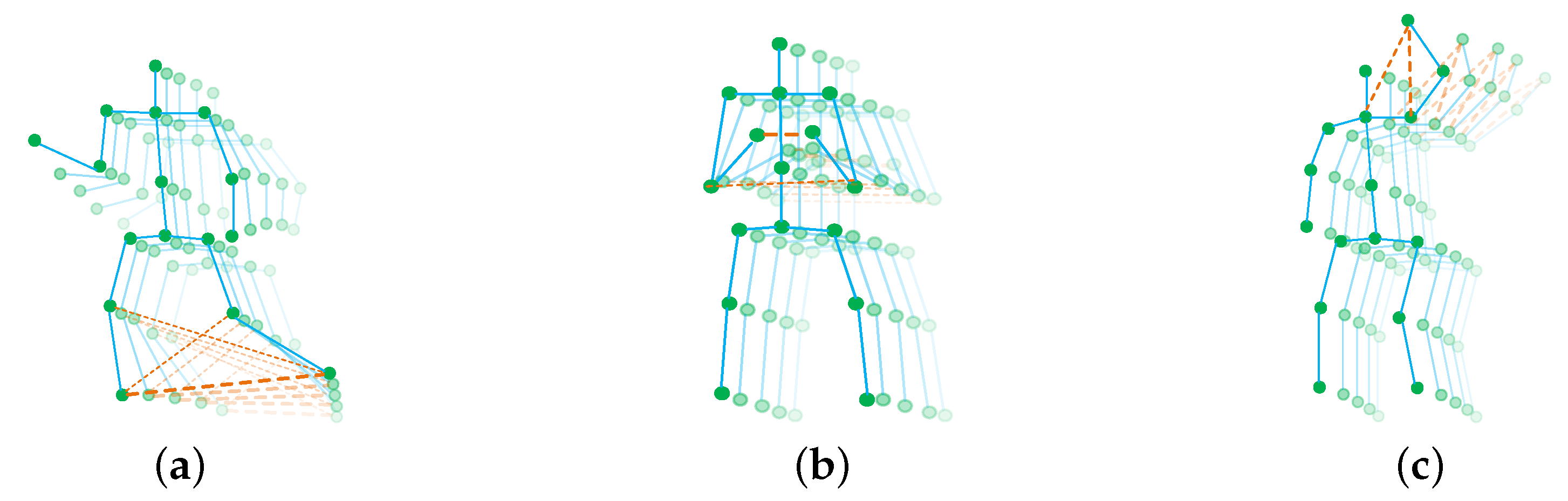

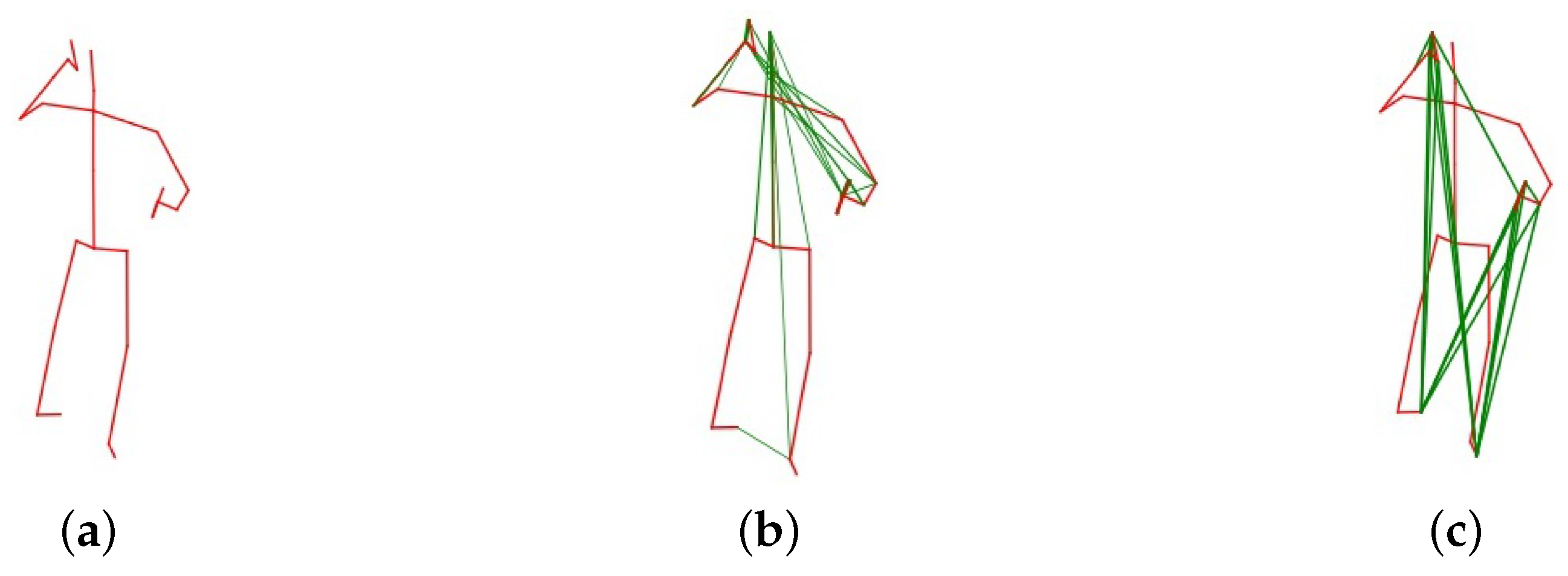

4.4.3. Visualization of Action-Specific Graph Edges

4.5. Results on NTU-RGB + D 120 Dataset

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- [1] Vemulapalli, R.; Arrate, F.; Chellappa, R. Human action recognition by representing 3D skeletons as points in a Lie group. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 588–595.

- [2] Song, S.; Lan, C.; Xing, J.; Zeng, W.; Liu, J. An end-to-end spatio-temporal attention model for human action recognition from skeleton data. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

- [3] Yan, S.; Xiong, Y.; Lin, D. Spatial temporal graph convolutional networks for skeleton-based action recognition. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018.

- [4] Thakkar, K.; Narayanan, P. Part-based graph convolutional network for action recognition. In Proceedings of the British Machine Vision Conference, Newcastle, UK, 3–6 September 2018.

- [5] Si, C.; Chen, W.; Wang, W.; Wang, L.; Tan, T. An Attention Enhanced Graph Convolutional LSTM Network for Skeleton-Based Action Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 1227–1236.

- [6] Shi, L.; Zhang, Y.; Cheng, J.; Lu, H. Two-Stream Adaptive Graph Convolutional Networks for Skeleton-Based Action Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 12026–12035.

- [7] Shi, L.; Zhang, Y.; Cheng, J.; Lu, H. Skeleton-Based Action Recognition with Directed Graph Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 7912–7921.

- [8] Liu, M.; Liu, H.; Chen, C. Enhanced skeleton visualization for view invariant human action recognition. Pattern Recognit. 2017, 68, 346–362.

- [9] Kim, T.S.; Reiter, A. Interpretable 3D human action analysis with temporal convolutional networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Honolulu, HI, USA, 21–27 July 2017; pp. 1623–1631.

- [10] Yang, F.; Wu, Y.; Sakti, S.; Nakamura, S. Make Skeleton-based Action Recognition Model Smaller, Faster and Better. In Proceedings of the ACM Multimedia Asia, Beijing, China, 16–18 December 2019; pp. 1–6.

- [11] Zhu, W.; Lan, C.; Xing, J.; Zeng, W.; Li, Y.; Shen, L.; Xie, X. Co-occurrence feature learning for skeleton based action recognition using regularized deep LSTM networks. In Proceedings of the Thirtieth AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016.

- [12] Wang, H.; Wang, L. Beyond joints: Learning representations from primitive geometries for skeleton-based action recognition and detection. IEEE Trans. Image Process. 2018, 27, 4382–4394.

- [13] Li, M.; Chen, S.; Chen, X.; Zhang, Y.; Wang, Y.; Tian, Q. Actional-Structural Graph Convolutional Networks for Skeleton-based Action Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 3595–3603.

- [14] Si, C.; Jing, Y.; Wang, W.; Wang, L.; Tan, T. Skeleton-based action recognition with spatial reasoning and temporal stack learning. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 103–118.

- [15] Dauphin, Y.N.; Fan, A.; Auli, M.; Grangier, D. Language modeling with gated convolutional networks. In Proceedings of the 34th International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; pp. 933–941.

- [16] Shahroudy, A.; Liu, J.; Ng, T.T.; Wang, G. NTU RGB+D: A large scale dataset for 3D human activity analysis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1010–1019.

- [17] Liu, J.; Shahroudy, A.; Perez, M.; Wang, G.; Duan, L.Y.; Kot, A.C. NTU RGB+D 120: A Large-Scale Benchmark for 3D Human Activity Understanding. IEEE Trans. Pattern Anal. Mach. Intell. 2019.

- [18] Kay, W.; Carreira, J.; Simonyan, K.; Zhang, B.; Hillier, C.; Vijayanarasimhan, S.; Viola, F.; Green, T.; Back, T.; Natsev, P.; et al. The Kinetics human action video dataset. arXiv 2017, arXiv:1705.06950.

- [19] Xia, L.; Chen, C.C.; Aggarwal, J.K. View invariant human action recognition using histograms of 3D joints. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Providence, RI, USA, 16–21 June 2012; pp. 20–27.

- [20] Ke, Q.; Bennamoun, M.; An, S.; Sohel, F.; Boussaid, F. A new representation of skeleton sequences for 3D action recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3288–3297.

- [21] Kipf, T.N.; Welling, M. Semi-supervised classification with graph convolutional networks. In Proceedings of the International Conference on Learning Representations, Toulon, France, 24–26 April 2017.

- [22] Xu, K.; Hu, W.; Leskovec, J.; Jegelka, S. How powerful are graph neural networks? In Proceedings of the International Conference on Learning Representations, New Orleans, LA, USA, 6–9 May 2019.

- [23] Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Lio, P.; Bengio, Y. Graph attention networks. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

- [24] Chen, J.; Ma, T.; Xiao, C. FastGCN: Fast learning with graph convolutional networks via importance sampling. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

- [25] Li, G.; Muller, M.; Thabet, A.; Ghanem, B. DeepGCNs: Can GCNs go as deep as CNNs? In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 9267–9276.

- [26] Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

- [27] Paszke, A.; Gross, S.; Chintala, S.; Chanan, G.; Yang, E.; DeVito, Z.; Lin, Z.; Desmaison, A.; Antiga, L.; Lerer, A. Automatic differentiation in PyTorch. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

- [28] Du, Y.; Wang, W.; Wang, L. Hierarchical recurrent neural network for skeleton based action recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1110–1118.

- [29] Liu, J.; Shahroudy, A.; Xu, D.; Wang, G. Spatio-temporal LSTM with trust gates for 3D human action recognition. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 10–16 October 2016; pp. 816–833.

- [30] Wang, H.; Wang, L. Modeling temporal dynamics and spatial configurations of actions using two-stream recurrent neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 499–508.

- [31] Lee, I.; Kim, D.; Kang, S.; Lee, S. Ensemble deep learning for skeleton-based action recognition using temporal sliding LSTM networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1012–1020.

- [32] Zhang, P.; Lan, C.; Xing, J.; Zeng, W.; Xue, J.; Zheng, N. View adaptive recurrent neural networks for high performance human action recognition from skeleton data. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2117–2126.

- [33] Tang, Y.; Tian, Y.; Lu, J.; Li, P.; Zhou, J. Deep progressive reinforcement learning for skeleton-based action recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 5323–5332.

- [34] Li, C.; Zhong, Q.; Xie, D.; Pu, S. Co-occurrence feature learning from skeleton data for action recognition and detection with hierarchical aggregation. In Proceedings of the 27th International Joint Conference on Artificial Intelligence, Stockholm, Sweden, 13–19 July 2018; pp. 786–792.

- [35] Xie, C.; Li, C.; Zhang, B.; Chen, C.; Han, J.; Liu, J. Memory attention networks for skeleton-based action recognition. In Proceedings of the 27th International Joint Conference on Artificial Intelligence, Stockholm, Sweden, 13–19 July 2018; pp. 1639–1645.

- [36] Song, Y.F.; Zhang, Z.; Wang, L. Richly Activated Graph Convolutional Network for Action Recognition with Incomplete Skeletons. In Proceedings of the 26th IEEE International Conference on Image Processing, Taipei, Taiwan, China, 22–25 September 2019.

- [37] Fernando, B.; Gavves, E.; Oramas, J.M.; Ghodrati, A.; Tuytelaars, T. Modeling video evolution for action recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 5378–5387.

| Methods | Year | Cross-Subject Accuracy (%) | Cross-View Accuracy (%) |
|---|---|---|---|
| Lie Group [1] | 2014 | 50.1 | 52.8 |
| H-RNN [28] | 2015 | 59.1 | 64.0 |
| Part-aware LSTM [16] | 2016 | 62.9 | 70.3 |
| ST-LSTM [29] | 2016 | 69.2 | 77.7 |
| Two-stream RNN [30] | 2017 | 71.3 | 79.5 |
| STA-LSTM [2] | 2017 | 73.4 | 81.2 |
| Ensemble TS-LSTM [31] | 2017 | 74.6 | 81.3 |
| Visualization CNN [8] | 2017 | 76.0 | 82.6 |
| C-CNN + MTLN [20] | 2017 | 79.6 | 84.8 |
| Temporal Conv [9] | 2017 | 74.3 | 83.1 |
| VA-LSTM [32] | 2017 | 79.4 | 87.6 |
| Beyond Joints [12] | 2018 | 79.5 | 87.6 |
| ST-GCN [3] | 2018 | 81.5 | 88.3 |
| DPRL [33] | 2018 | 83.5 | 89.8 |
| HCN [34] | 2018 | 86.5 | 91.1 |
| SR-TSL [14] | 2018 | 84.8 | 92.4 |
| MAN [35] | 2018 | 82.7 | 93.2 |
| PB-GCN [4] | 2018 | 87.5 | 93.2 |
| RA-GCN [36] | 2019 | 85.9 | 93.5 |
| AS-GCN [13] | 2019 | 86.8 | 94.2 |
| AGC-LSTM [5] | 2019 | 89.2 | 95.0 |
| 2s-AGCN [6] | 2019 | 88.5 | 95.1 |
| DGNN [7] | 2019 | 89.9 | 96.1 |
| GAS-GCN (Ours) | 2020 | 90.4 | 96.5 |

| Methods | Year | Top-1 Accuracy (%) | Top-5 Accuracy (%) |
|---|---|---|---|
| Feature Enc [37] | 2015 | 14.9 | 25.8 |
| Deep LSTM [16] | 2016 | 16.4 | 35.3 |
| Temporal Conv [9] | 2017 | 20.3 | 40.0 |
| ST-GCN [3] | 2018 | 30.7 | 52.8 |
| AS-GCN [13] | 2019 | 34.8 | 56.5 |
| 2s-AGCN [6] | 2019 | 36.1 | 58.7 |
| DGNN [7] | 2019 | 36.9 | 59.6 |
| GAS-GCN (Ours) | 2020 | 37.8 | 60.7 |
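Top-1 and Top-5 in the table above are the standard ranking metrics: a sample counts as correct under Top-k if its true class is among the k highest-scoring classes. The short NumPy sketch below computes both on a made-up score matrix; the function name `top_k_accuracy` and the toy data are illustrative only and are not taken from the paper.

```python
import numpy as np

def top_k_accuracy(scores, labels, k):
    # scores: (N, num_classes) class scores; labels: (N,) true class indices.
    # Returns the percentage of samples whose true class is in the top k.
    top_k = np.argsort(-scores, axis=1)[:, :k]
    hits = (top_k == labels[:, None]).any(axis=1)
    return 100.0 * hits.mean()

# Four made-up samples over three classes.
scores = np.array([
    [0.1, 0.7, 0.2],   # true class 1: ranked first
    [0.5, 0.3, 0.2],   # true class 1: ranked second
    [0.2, 0.3, 0.5],   # true class 0: ranked third
    [0.6, 0.1, 0.3],   # true class 0: ranked first
])
labels = np.array([1, 1, 0, 0])

top1 = top_k_accuracy(scores, labels, k=1)
top2 = top_k_accuracy(scores, labels, k=2)
print(top1, top2)  # 50.0 75.0
```

With 400 action classes in Kinetics, Top-5 is the more forgiving of the two metrics, which is why every method in the table scores higher on it than on Top-1.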

| Stream | Model | Accuracy (%) |
|---|---|---|
| Joint stream only | AGCN | 93.83 |
| Joint stream only | PG | 94.34 |
| Joint stream only | PG + AG | 94.59 |
| Joint stream only | PG + GN | 94.75 |
| Joint stream only | PG + AG + GN | 95.14 |
| Two stream | AGCN | 95.10 |
| Two stream | GAS-GCN | 96.50 |

| Methods | Cross-Subject (%) | Cross-View (%) |
|---|---|---|
| GAS-GCN (Joint) | 87.9 | 95.1 |
| GAS-GCN (Bone) | 88.2 | 95.0 |
| GAS-GCN (Joint&Bone) | 90.4 | 96.5 |

| Methods | Accuracy (%) |
|---|---|
| GAS-GCN (Joint) | 36.1 |
| GAS-GCN (Bone) | 35.6 |
| GAS-GCN (Joint&Bone) | 37.8 |

| Methods | Cross-Subject (%) | Cross-Setup (%) |
|---|---|---|
| GAS-GCN (Joint) | 83.0 | 85.0 |
| GAS-GCN (Bone) | 83.4 | 85.0 |
| GAS-GCN (Joint&Bone) | 86.4 | 88.0 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chan, W.; Tian, Z.; Wu, Y. GAS-GCN: Gated Action-Specific Graph Convolutional Networks for Skeleton-Based Action Recognition. Sensors 2020, 20, 3499. https://doi.org/10.3390/s20123499
