Image-Processing-Based Low-Cost Fault Detection Solution for End-of-Line ECUs in Automotive Manufacturing

Abstract

1. Introduction

2. Materials and Methods

2.1. Experimental Model

- Four Raspberry Pi 3 boards. One board is the master and the other 3 boards are slaves.

- Four V2 Pi cameras. One Pi camera is attached to each Raspberry Pi and placed inside an enclosure. Each Pi camera is connected to its Raspberry Pi through the Camera Serial Interface (CSI) ribbon connector, with the camera's control commands carried over the Inter-Integrated Circuit (I2C) bus. A 2× telephoto lens is mounted on each enclosure. The lenses are placed 31 cm above the upper surface of the ECU, with a tolerance of ±0.5 cm on the vertical axis.

- One unmanaged 5-port Ethernet switch. Four ports are used for internal communication between the master and the slaves (the IP addresses are set manually for each device), and one port is reserved for external communication within the factory network.

- One barcode scanner. The barcode scanner is a standard linear scanner that transmits the barcode over an RS232 serial interface through the USB port. Although the authors also implemented a barcode reader software module as an alternative, the external scanner with an interfacing and wrapping software module was preferred, because it leaves more freedom in aligning the cameras and further reduces the processing time.

- Detection of crooked pins;

- Detection of missing pins;

- Detection of extra pins;

- Detection of wrong clips disposal or damaged clips;

- Detection of cracks on boards;

- Reporting of the whole process;

- Collecting and marking faults, aggregating data on the master equipment, creating logs, selecting users and boards, running the debug procedure, etc., within the graphical user interface;

- Managing the configuration of existing boards;

- Learning new board configurations;

- Reading barcodes;

- Data exchange between the four microcomputers and communication with the higher-level traceability application.

- The beige-white blocks are specialized modules dedicated to specific types of operations. Each of them is unique to its node and shares no code with the other nodes.

- The yellow blocks are distributed across several nodes and have similar functionalities.

- The green blocks (light or dark) represent the modules that handle the communication between the nodes, specifically sending, analyzing, prioritizing, and interpreting requests. These modules also maintain the keep-alive connections and execute the command tasks on each node.
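The keep-alive maintenance handled by the green communication blocks can be illustrated with a small piece of bookkeeping on the master. This is a minimal sketch, not the authors' implementation: it assumes that each slave sends periodic heartbeats and that the master flags a slave as lost once it has been silent longer than a timeout. The class name `KeepAliveTracker` and the 5 s default are hypothetical.

```python
import time


class KeepAliveTracker:
    """Hypothetical keep-alive bookkeeping for the master node."""

    def __init__(self, slave_ids, timeout_s=5.0, clock=time.monotonic):
        self.timeout_s = timeout_s
        self.clock = clock  # injectable so the logic can be tested without waiting
        # No heartbeat received yet: every slave starts as "never seen".
        self.last_seen = {slave_id: None for slave_id in slave_ids}

    def heartbeat(self, slave_id):
        """Record that a keep-alive message arrived from slave_id."""
        if slave_id not in self.last_seen:
            raise KeyError(f"unknown slave: {slave_id}")
        self.last_seen[slave_id] = self.clock()

    def lost_slaves(self):
        """Return the slaves never seen or silent for longer than the timeout."""
        now = self.clock()
        return sorted(
            slave_id
            for slave_id, seen in self.last_seen.items()
            if seen is None or now - seen > self.timeout_s
        )


if __name__ == "__main__":
    fake_time = [100.0]
    tracker = KeepAliveTracker(["slave1", "slave2", "slave3"], timeout_s=5.0,
                               clock=lambda: fake_time[0])
    tracker.heartbeat("slave1")
    tracker.heartbeat("slave2")
    fake_time[0] = 103.0
    tracker.heartbeat("slave2")
    fake_time[0] = 106.0
    print(tracker.lost_slaves())  # ['slave1', 'slave3']
```

Injecting the clock keeps the timeout logic deterministic; in a running system the tracker would be polled from a background thread and a lost slave reported in the user interface.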

2.1.1. Master Node

- I/O Drivers: the component that handles the communication between the microprocessor and the peripherals (camera, barcode reader).

- Functionality: The component contains the functional modules of the master node, ensuring image processing, data interpretation and processing, image capture from the peripherals, barcode scan interpretation, etc. The Functionality component depends on the I/O Drivers component and sits one level above it. Components above Functionality cannot communicate directly with the drivers. Besides Python itself, the component depends on the following external modules:

  - numpy: library used for n-dimensional array computation, higher-level mathematical functions, linear algebra, and generic multidimensional data.
  - pynput: library used to control and monitor input devices (keyboard and mouse).
  - xmlrpclib: Python 2 library implementing the XML-RPC protocol, used for communication over Ethernet.
  - opencv2: the OpenCV library, used for image processing.
  - PyQt4: library used for user interaction and for creating interfaces.

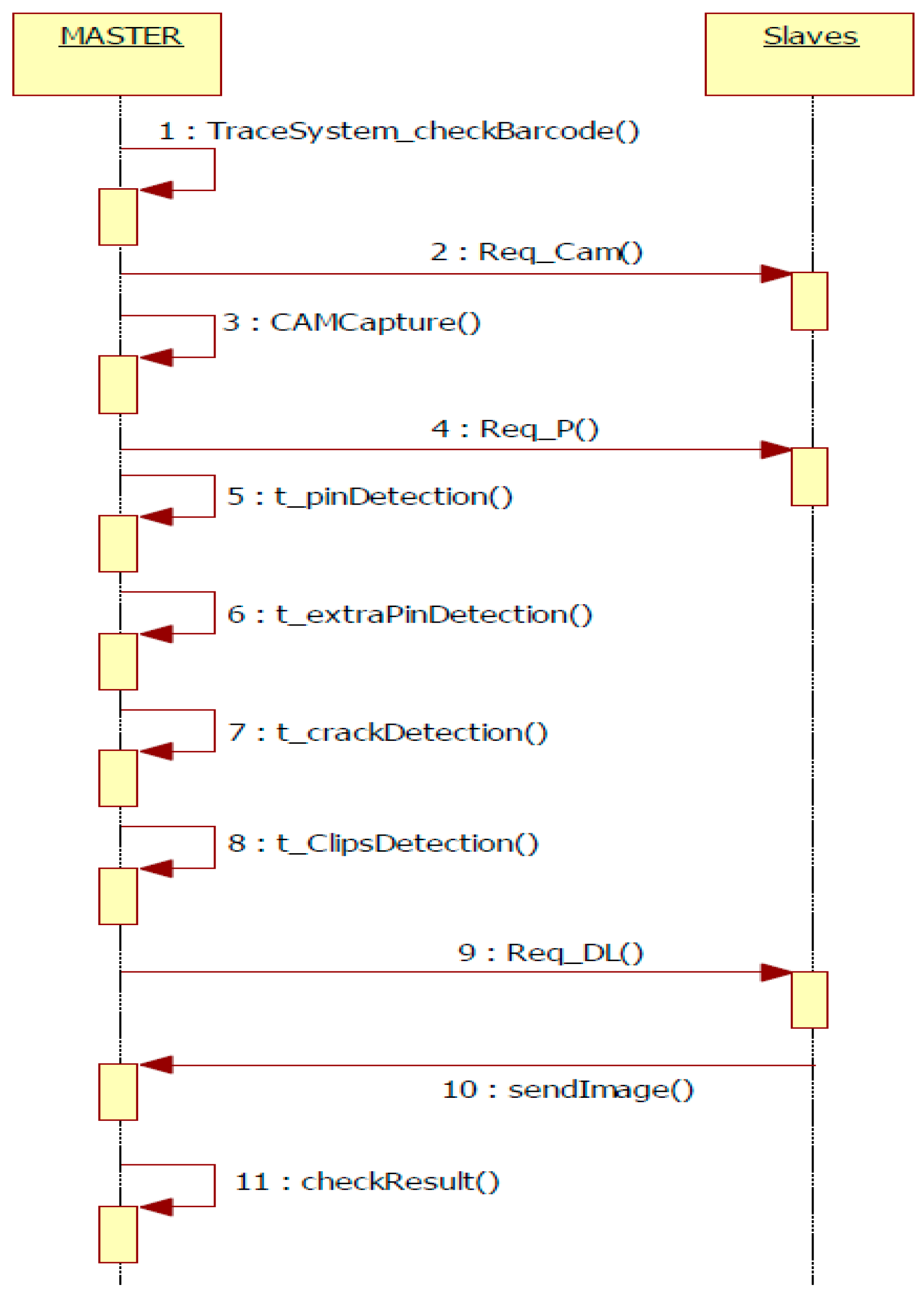

- RPC Requests: The component that handles the communication with the slaves. These requests are issued by the master and executed by the slaves. Through the Local Area Network (LAN), the master transmits the following requests:

  - REQ_CAM: request for capturing an image.
  - REQ_P: request for ECU analysis (pin detection, clip detection, crack detection).
  - REQ_DL: request for downloading the final results after the slaves have completed their analysis. The results downloaded to the master are displayed in the user interface and added to the final analysis report.

- UI (User Interface): All implementation details and all procedures that produce outputs reside in the Functionality component. From the UI, the user sends commands that trigger the Functionality component. These commands cover all the components of the master node, and the answers are returned to the component at the highest level of the architecture. The UI also launches the requests to the other nodes of the system in parallel.

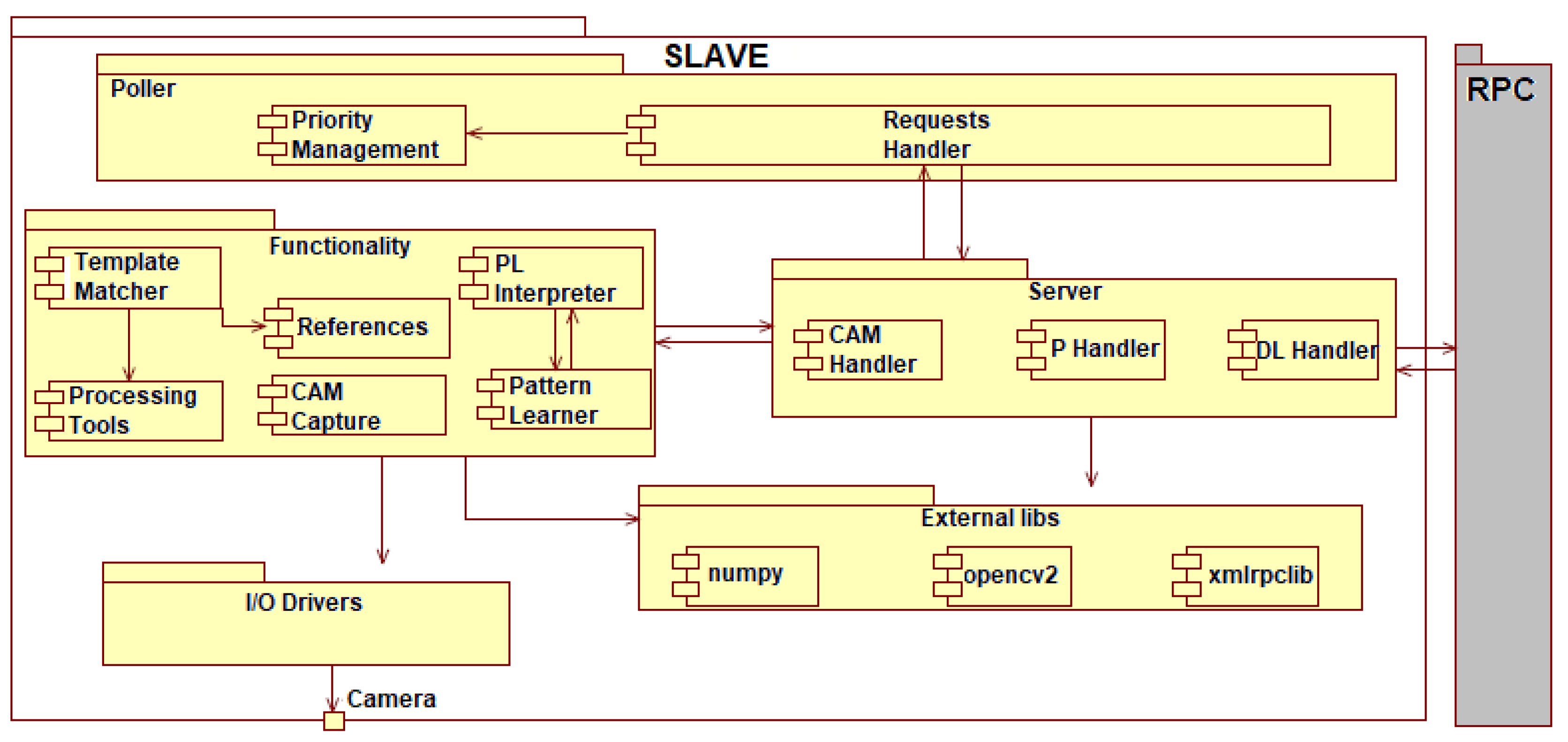

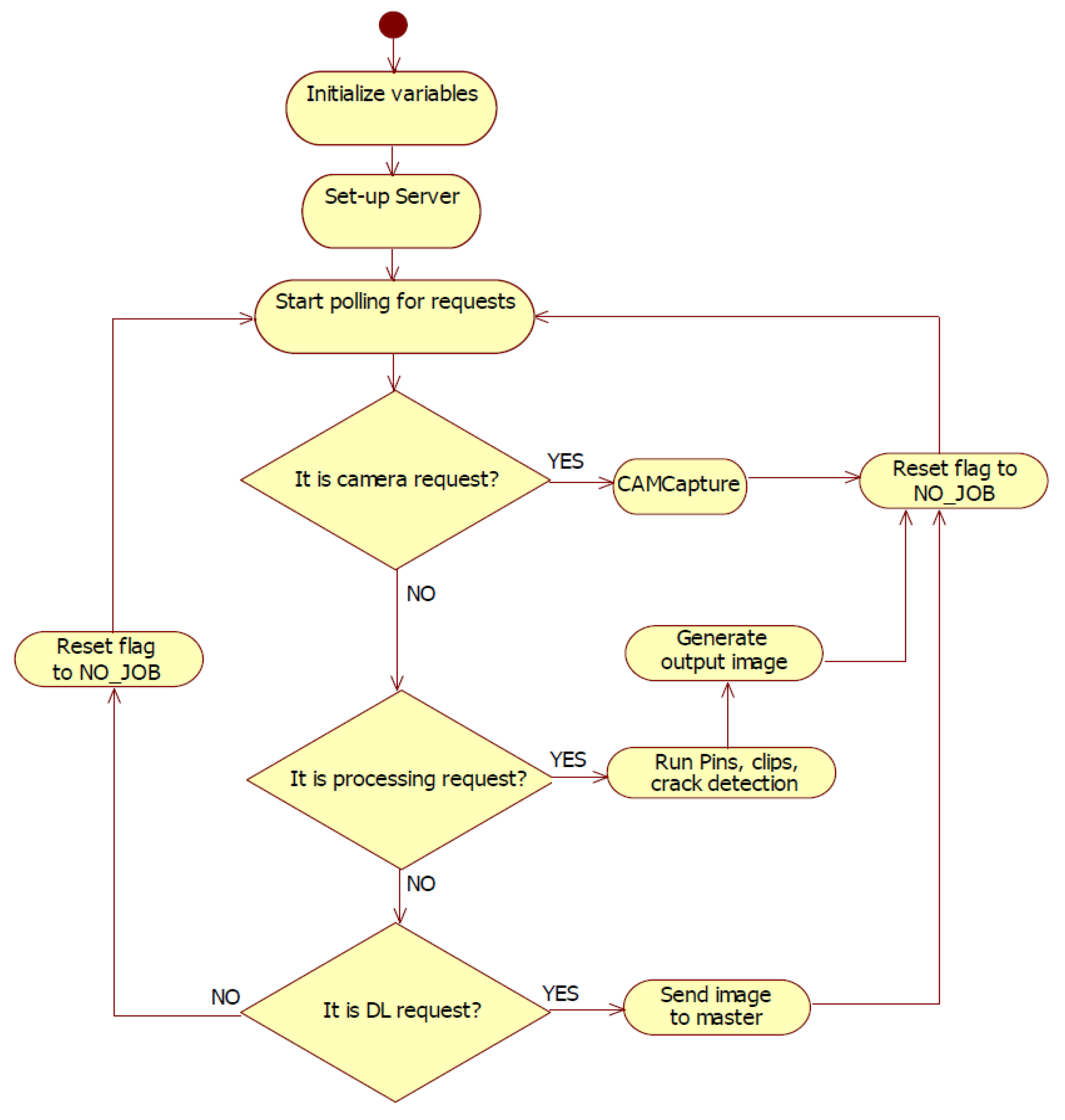
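The parallel launch of requests from the master can be sketched as follows. This is a minimal sketch under stated assumptions, not the authors' code: each slave receives REQ_CAM, REQ_P, and REQ_DL in that order, the slaves are served concurrently with a thread pool (a design choice made here for illustration), and the transport is abstracted as a hypothetical `send(slave, request)` callable that, in the real system, would wrap an XML-RPC call.

```python
from concurrent.futures import ThreadPoolExecutor

# Request sequence described in the text: capture, analyze, download results.
REQUEST_SEQUENCE = ("REQ_CAM", "REQ_P", "REQ_DL")


def run_slave_cycle(slave, send):
    """Send the three requests to one slave in order and return the REQ_DL answer."""
    result = None
    for request in REQUEST_SEQUENCE:
        result = send(slave, request)  # hypothetical transport (e.g., XML-RPC)
    return result


def run_inspection(slaves, send):
    """Run the request sequence on all slaves in parallel.

    Returns {slave: REQ_DL answer}, ready to be aggregated on the master.
    An exception raised for any slave is re-raised here by future.result().
    """
    with ThreadPoolExecutor(max_workers=max(1, len(slaves))) as pool:
        futures = {slave: pool.submit(run_slave_cycle, slave, send) for slave in slaves}
        return {slave: future.result() for slave, future in futures.items()}


if __name__ == "__main__":
    def fake_send(slave, request):
        # Stand-in for a network call; echoes what a slave might answer.
        return f"{slave}:{request}:ok"

    print(run_inspection(["slave1", "slave2", "slave3"], fake_send))
```

Because each slave's requests run sequentially inside its own worker, the capture-analyze-download order is preserved per slave while the slow image processing steps overlap across slaves.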

2.1.2. Slave Node

- CAM Handler: Accesses the CAMCapture module from the Functionality component and captures the image.

- P Handler: Accesses the processing modules (Template Matcher, Processing Tools) and performs the pin, clip, and crack detection. All results are marked on the captured image.

- DL Handler: Converts the processed image into binary data and transfers it to the master node.
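A slave that exposes the three handlers over RPC can be sketched with Python 3's standard `xmlrpc` package (the successor of the Python 2 `xmlrpclib` module listed above). This is a minimal sketch, not the authors' implementation: the camera capture and the detection results are simulated, and `SlaveNode`, its method names, and the returned dictionary layout are hypothetical. The DL handler Base64-encodes the image so that it travels as ASCII text, as the Base64 module description explains.

```python
import base64
import threading
import xmlrpc.client
from xmlrpc.server import SimpleXMLRPCServer


class SlaveNode:
    """Hypothetical slave state; camera and processing are simulated."""

    def __init__(self):
        self.image = b""
        self.faults = []

    def req_cam(self):
        # CAM Handler: the real system would capture a frame via CAMCapture here.
        self.image = bytes(range(16))  # stand-in for the captured image bytes
        return True

    def req_p(self):
        # P Handler: pin, clip, and crack detection would run here.
        self.faults = ["connector X1: pin 3 missing"]  # simulated result
        return self.faults

    def req_dl(self):
        # DL Handler: Base64-encode the image so it is sent as ASCII text.
        return {
            "image_b64": base64.b64encode(self.image).decode("ascii"),
            "faults": self.faults,
        }


def start_slave_server(node, host="127.0.0.1", port=0):
    """Serve only the three registered request names on a background thread."""
    server = SimpleXMLRPCServer((host, port), logRequests=False, allow_none=True)
    server.register_function(node.req_cam, "REQ_CAM")
    server.register_function(node.req_p, "REQ_P")
    server.register_function(node.req_dl, "REQ_DL")
    # Calls to any other method name are rejected with an XML-RPC Fault.
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


if __name__ == "__main__":
    server = start_slave_server(SlaveNode())  # port 0: the OS picks a free port
    url = "http://%s:%d" % server.server_address
    with xmlrpc.client.ServerProxy(url) as proxy:
        proxy.REQ_CAM()
        proxy.REQ_P()
        answer = proxy.REQ_DL()
    image = base64.b64decode(answer["image_b64"])
    print(len(image), answer["faults"])  # 16 ['connector X1: pin 3 missing']
    server.shutdown()
    server.server_close()
```

The standard library also offers `xmlrpc.client.Binary`, which performs the Base64 wrapping automatically; the explicit encoding above simply mirrors the separate Base64 module described in the text.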

2.1.3. Main Modules

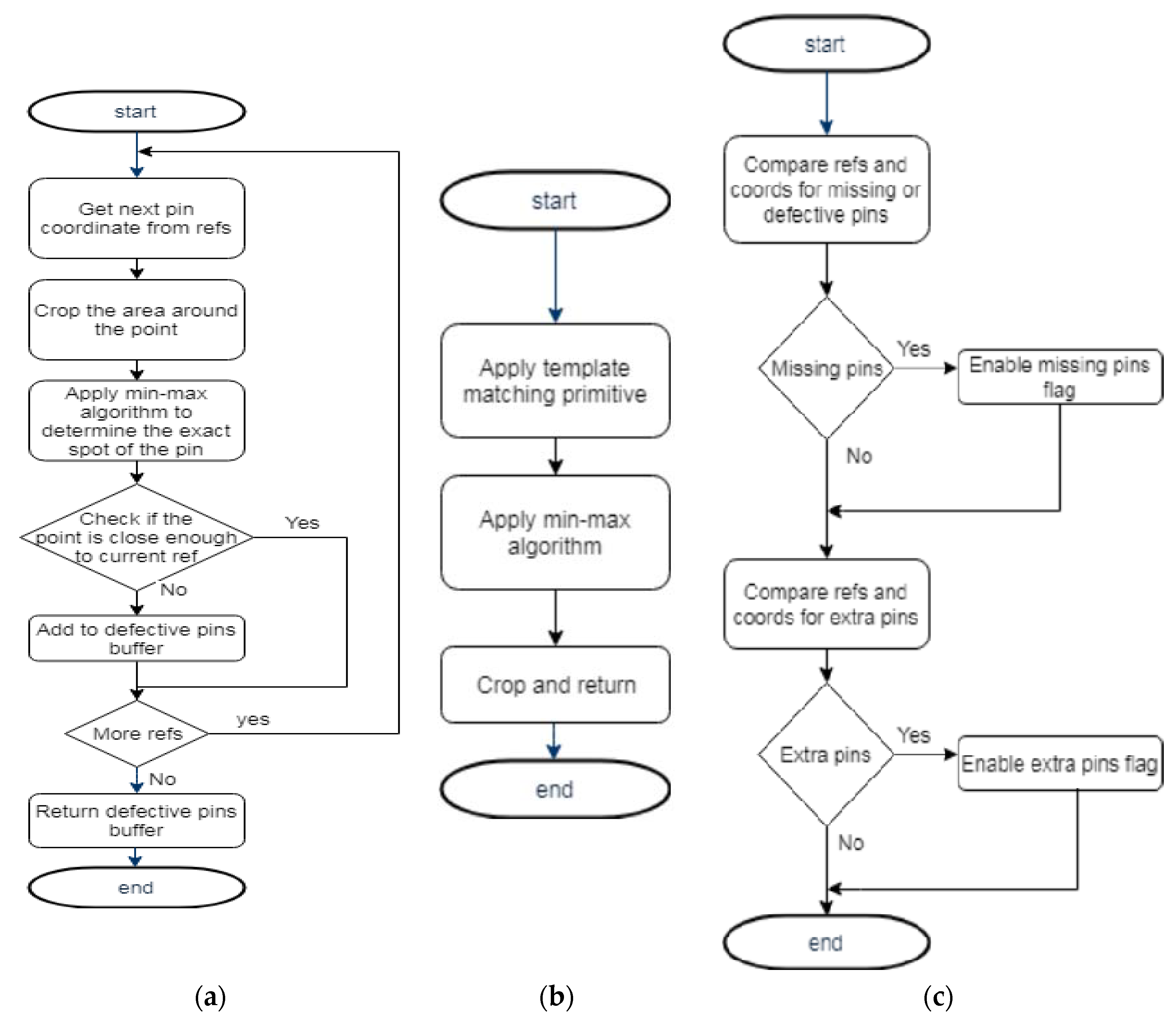

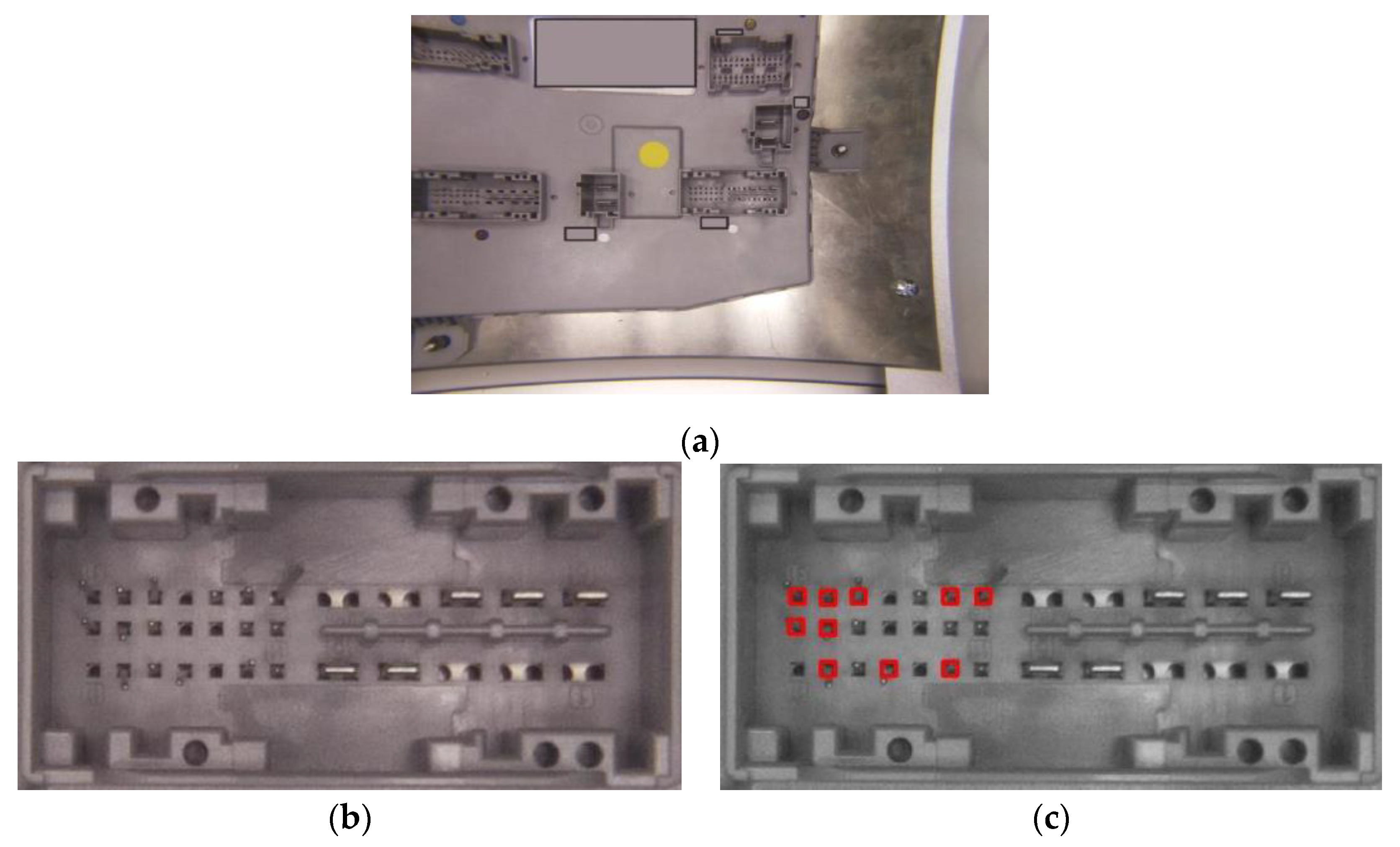

- CAMCapture: Loads the image that the PiCamera library primitives save to a file. Importantly, the camera resolution is set to 2592 × 1944 pixels. Because of hardware constraints (the lens distorts the image), the useful area of the image is much smaller than the full captured picture, as can be observed in Figure 10.

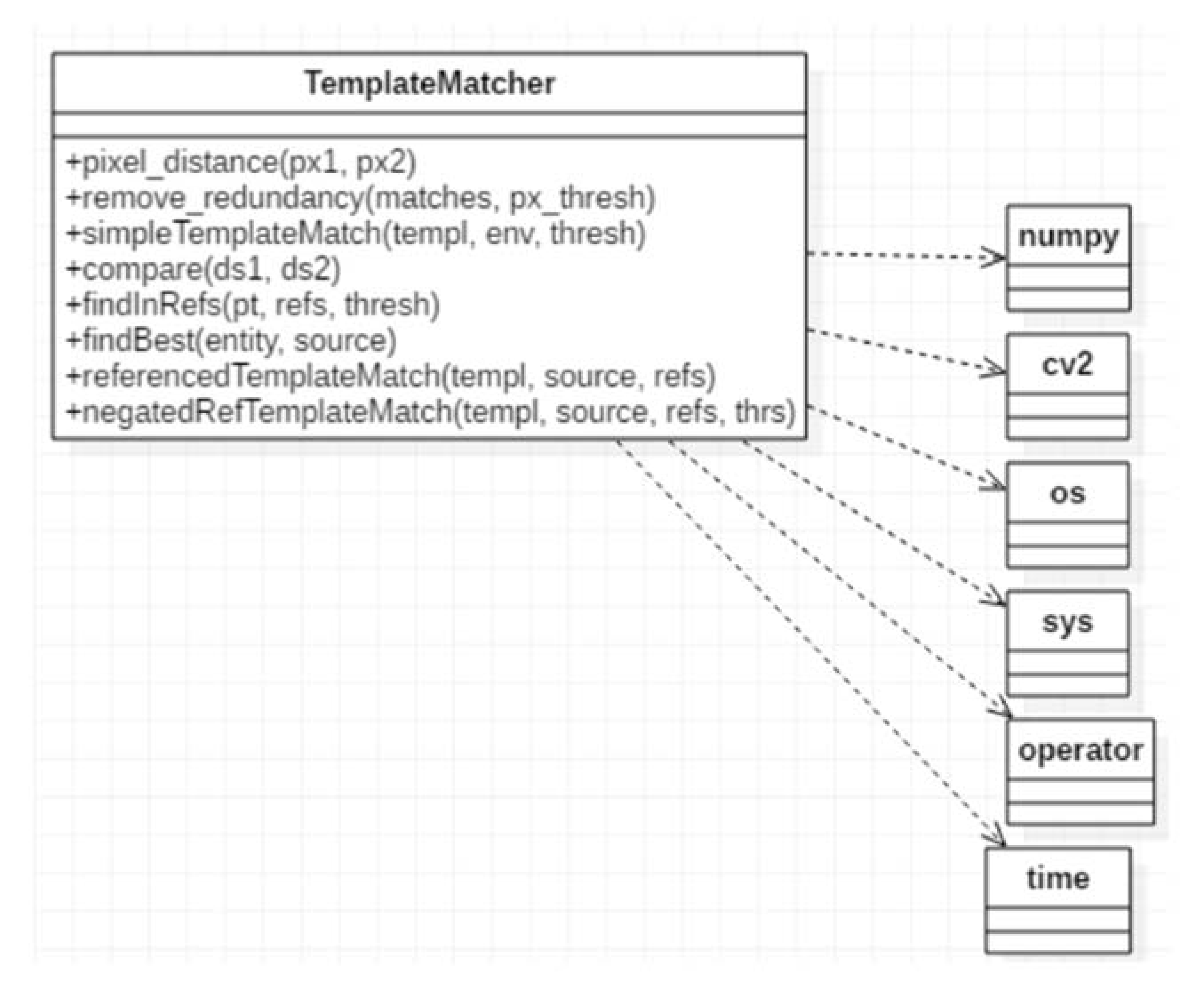

- Template Matcher: The functional core of the application, containing the basic methods for processing the input image. The Template Matcher class diagram is shown in Figure 11; the module uses primitives from the OpenCV library as well as low-level processing techniques that work directly on the color values of the pixels.

- Threads: The module contains all types of threads used in the application. During the runtime processing routine, several resources are checked continuously so that the execution time is not significantly affected. One of these resources is the memory available for storing the reports: the reports location is inspected periodically, its size in bytes is computed, and the result is shown on the user interface as a status bar filled in percentage (0–100%).

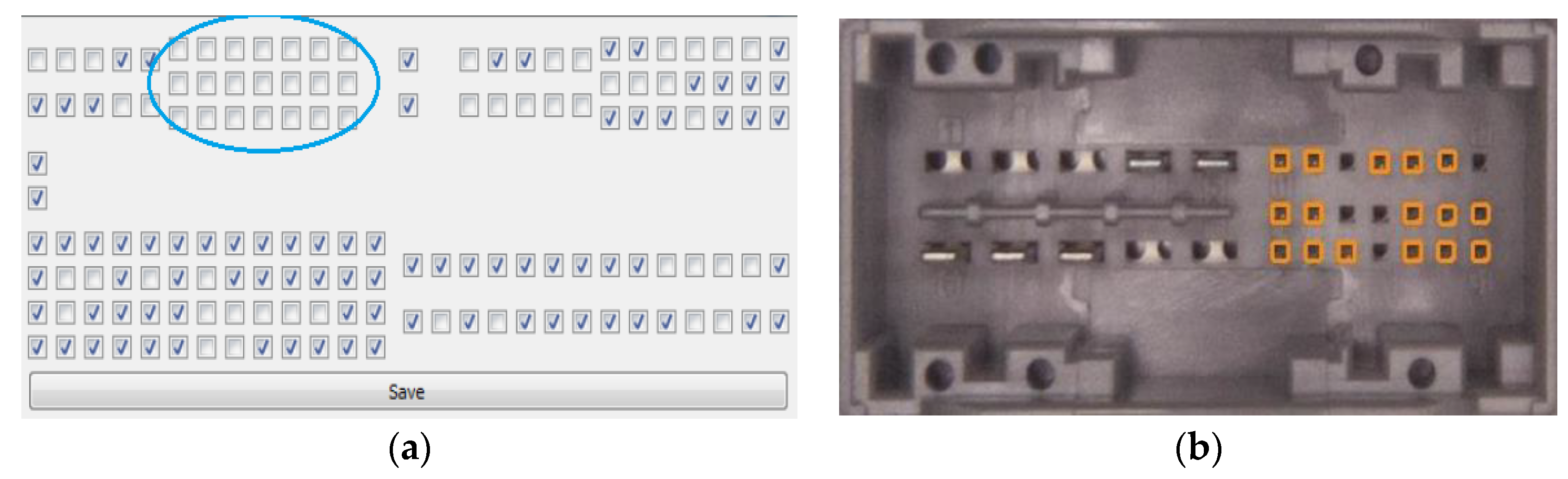
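The core idea behind template matching, as used by the Template Matcher, is to slide a reference template over the image and score every position. The sketch below illustrates this with a sum of squared differences (SSD) score in pure Python so that it runs without OpenCV; it is an illustration of the technique, not the authors' implementation, and `match_template_ssd` is a hypothetical name.

```python
def match_template_ssd(image, template):
    """Return (row, col, score) of the best match of template in image.

    Both arguments are 2D lists of grayscale values (0-255). The score is the
    sum of squared differences (SSD): 0 means a perfect match.
    """
    ih, iw = len(image), len(image[0])
    th, tw = len(template), len(template[0])
    if th > ih or tw > iw:
        raise ValueError("template larger than image")

    best = None
    # Try every top-left position where the template fits entirely.
    for r in range(ih - th + 1):
        for c in range(iw - tw + 1):
            score = sum(
                (image[r + i][c + j] - template[i][j]) ** 2
                for i in range(th)
                for j in range(tw)
            )
            if best is None or score < best[2]:
                best = (r, c, score)
    return best


if __name__ == "__main__":
    img = [[0] * 5 for _ in range(5)]
    for r, c in ((2, 1), (2, 2), (3, 1), (3, 2)):
        img[r][c] = 255  # a bright 2x2 "pin" in a dark area
    print(match_template_ssd(img, [[255, 255], [255, 255]]))  # (2, 1, 0)
```

With OpenCV, `cv2.matchTemplate(image, template, cv2.TM_SQDIFF)` computes the same SSD map far faster, and `cv2.minMaxLoc` returns the best position; note that OpenCV reports locations as (x, y) rather than (row, col).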

- Pattern Learner: The IP-LC-FD application is intended to analyze multiple types of ECU boards, as long as they share the same mechanical structure. During the first research phase on the experimental model, the provided boards had the same mechanical structure, but the pin configuration within that structure determined different functional characteristics, with different corresponding circuits in the background. In industry, it is common practice to use the same mechanical structure for a large set of ECU board types in order to reduce production costs. Therefore, the Pattern Learner module was created to validate various types of boards: it displays all connectors and their pin configuration to the user in a window. Within this interface, the pin configuration can be adjusted (pins added or removed), and a configuration can be initialized, created, and viewed.

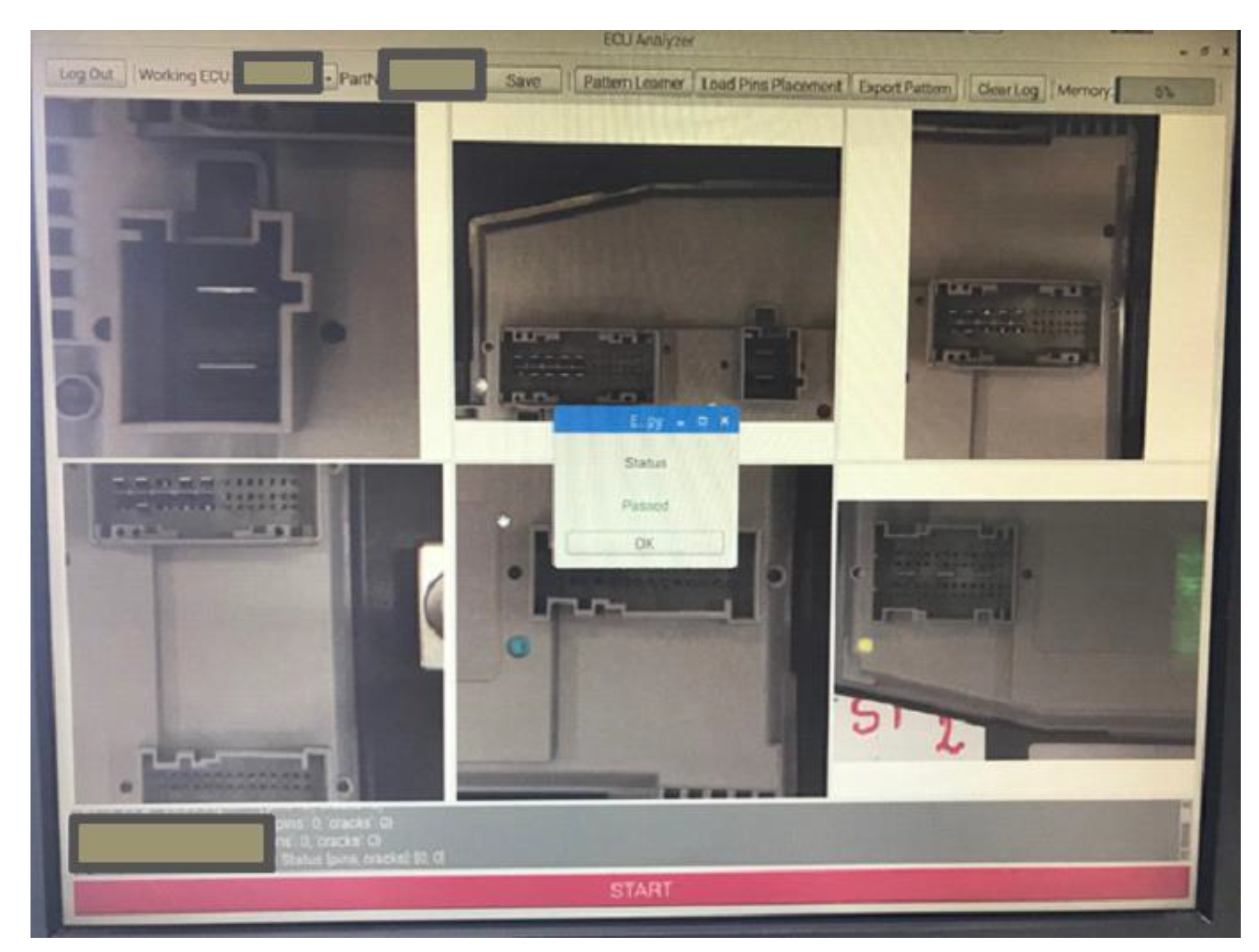
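The add/remove editing of a pin configuration and its storage as a .json layout can be sketched with a small class. This is a minimal sketch under assumptions: the real file structure is the one shown in Figure 13b, whereas the schema here (a board type plus, per connector, the list of populated pin indices) and the `PinLayout` name are hypothetical.

```python
import json


class PinLayout:
    """Hypothetical pin configuration for one board type (schema is assumed)."""

    def __init__(self, board_type, connectors=None):
        self.board_type = board_type
        # connector name -> set of pin indices that must be populated
        self.connectors = {name: set(pins) for name, pins in (connectors or {}).items()}

    def add_pin(self, connector, pin):
        self.connectors.setdefault(connector, set()).add(pin)

    def remove_pin(self, connector, pin):
        # Removing a pin that is not configured is a no-op.
        self.connectors.get(connector, set()).discard(pin)

    def to_json(self):
        # Sets are not JSON-serializable, so pins are stored as sorted lists.
        return json.dumps(
            {
                "board_type": self.board_type,
                "connectors": {name: sorted(pins)
                               for name, pins in sorted(self.connectors.items())},
            },
            indent=2,
        )

    @classmethod
    def from_json(cls, text):
        data = json.loads(text)
        return cls(data["board_type"], data["connectors"])


if __name__ == "__main__":
    layout = PinLayout("ECU-A")
    layout.add_pin("X1", 1)
    layout.add_pin("X1", 4)
    layout.add_pin("X2", 2)
    layout.remove_pin("X1", 4)
    restored = PinLayout.from_json(layout.to_json())
    print(restored.connectors)  # {'X1': {1}, 'X2': {2}}
```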

- Graphical User Interface (GUI): The GUI module contains all elements of the project's top layer, the user interface. These elements (buttons, text boxes, console, windows, combo boxes) allow the user to interact with and configure the entire system.

- Barcode Reader: The software module exists only on the master node and is compatible with many types of barcode readers. When the system is in operator mode, the module issues the signal that starts the application's execution.

- Base64: The module encodes the images into character arrays to ease network transport. If the images were transferred through the network as raw binary data, compatibility issues could occur between operating systems; in addition, some protocols may interpret binary data as special characters. Encoding all binary data as ASCII text avoids these problems.

- References: Creates references for a given type of ECU. The references are used for image processing; they contain data about the elements that must be processed on the board and basic captures of the elements to be identified. Using these references, the processing modules decide whether parts of the board are deteriorated. The file structure of the references for a board is exemplified in Figure 13a. The references are saved in a named folder containing a template as a .png file and a .json file with the pins' layout on the board (see Figure 13b).

- PL Interpreter: The PL (Pattern Learner) Interpreter module receives and translates the Boolean values from the GUI and returns the coordinates of the pins. The module applies two types of filters to the pins and connectors from the interface: all pins marked in the interface are verified and validated (valid position within the hole, not broken, not bent, etc.), and all unmarked pins are checked to confirm that no extra pins are present on the board.

- Reports Generator: The module generates reports after finalizing the processing. All information regarding the analysis is stored within a folder.

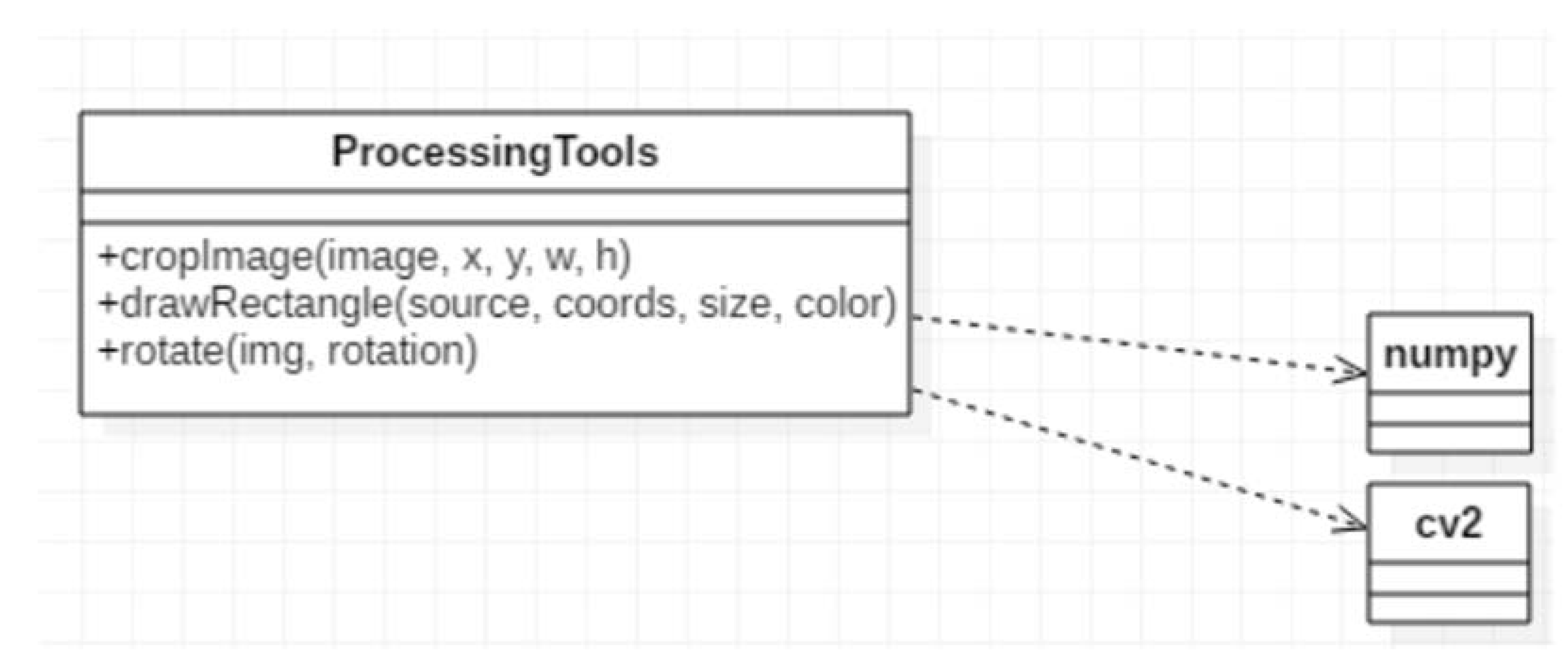
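The translation performed by the PL Interpreter, from the Boolean marks set in the GUI to pin coordinates split into "validate this pin" and "check for an extra pin", can be sketched as follows. This is a minimal sketch assuming that the holes of a connector form a regular grid whose origin and pitch (in pixels) are known from the references; `interpret_pattern`, `origin`, and `pitch` are hypothetical names.

```python
def interpret_pattern(marks, origin, pitch):
    """Translate a GUI checkbox grid into pin coordinates.

    marks:  2D list of booleans (rows x columns) as ticked in the GUI
    origin: (x, y) pixel position of the top-left hole (assumed known)
    pitch:  (dx, dy) spacing between neighboring holes in pixels (assumed)

    Returns (expected, must_be_empty): holes whose pins must be validated, and
    holes that must be checked for extra pins.
    """
    x0, y0 = origin
    dx, dy = pitch
    expected, must_be_empty = [], []
    for row, row_marks in enumerate(marks):
        for col, marked in enumerate(row_marks):
            point = (x0 + col * dx, y0 + row * dy)
            # Marked holes feed the pin validation filter; unmarked ones the
            # extra-pin filter.
            (expected if marked else must_be_empty).append(point)
    return expected, must_be_empty


if __name__ == "__main__":
    print(interpret_pattern([[True, False], [False, True]], (10, 20), (5, 7)))
    # ([(10, 20), (15, 27)], [(15, 20), (10, 27)])
```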

- Processing Tools: The module contains methods designed to help the other processing modules accomplish detection. Its class diagram is presented in Figure 16. These methods affect the main functionalities only indirectly, and keeping them separate matters from a resource point of view; nevertheless, their impact on the overall detection process is significant. For example, rotation procedures are applied constantly, either by right angles to adapt to each camera position or by small angles (within 10°) to compensate for the mechanical degrees of freedom in placing the boards for image processing. If the image is not correctly rotated, the cropping procedure may discard important data. Cropping is essential for detection and also greatly reduces the execution time.

- Image Difference: The module is used to detect cracks and other nonconformities on the boards. It outlines the problematic area with a red rectangle so that the operator can easily spot it.

- RPC Requests: The module was previously described.

- Server: Each slave contains a Server module, which runs on a separate thread and serves all requests on a port. The module is implemented using the RPC protocol and ensures the communication between the master and the slaves. Only a registered set of methods can be called; all other methods or request types are ignored.

- Poller: The module was previously described.

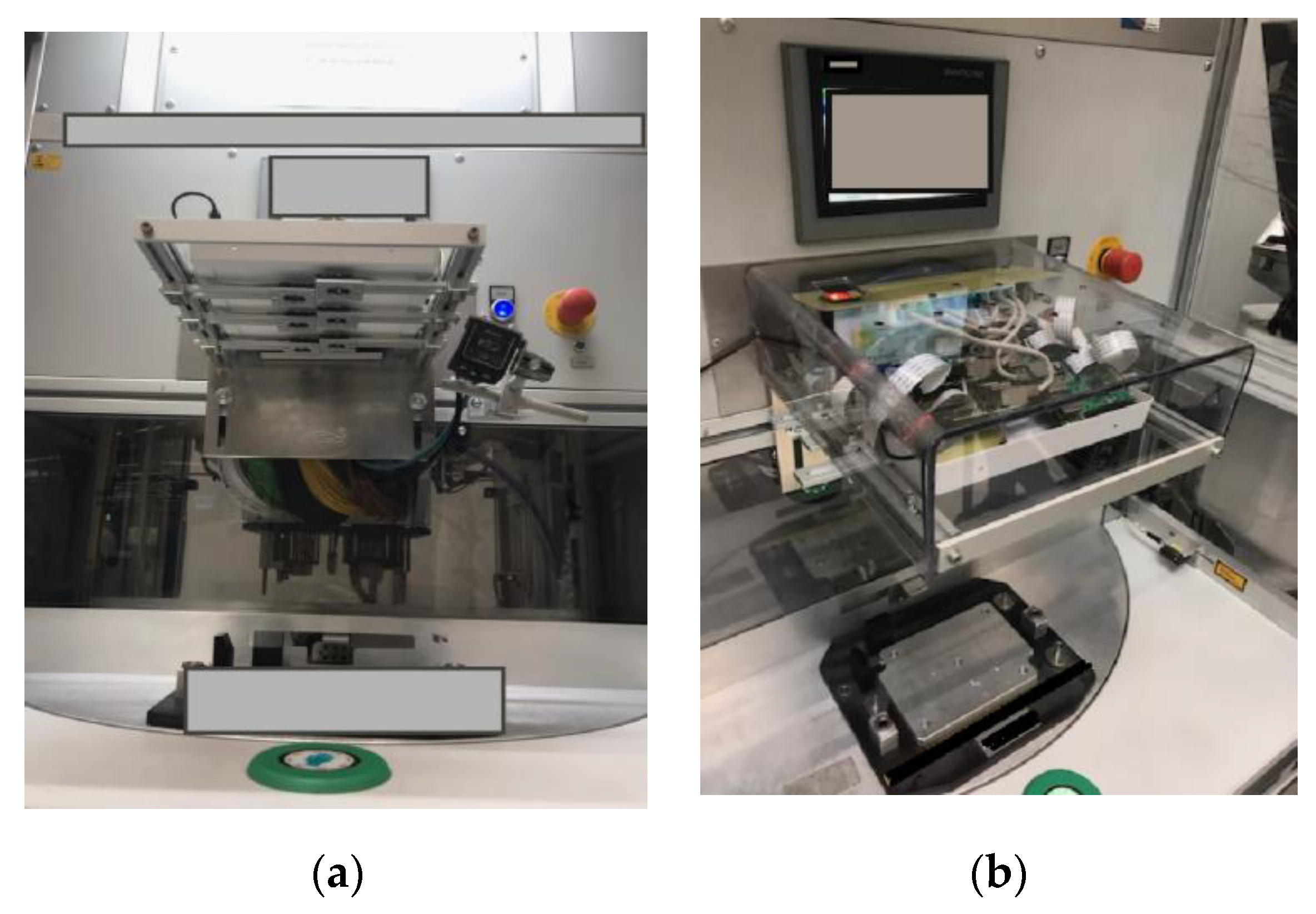
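The Image Difference idea, comparing an aligned capture against a reference and boxing the region that differs, can be sketched in pure Python. This is a minimal sketch, not the authors' code: it assumes both images are already rotated and cropped to the same grid, and the threshold of 40 gray levels is a hypothetical value. A single rectangle is drawn around all differences; separating several distant defects would require grouping them into connected regions first.

```python
def difference_bbox(reference, captured, threshold=40):
    """Compare two aligned grayscale images (2D lists) pixel by pixel.

    Returns the bounding rectangle (x, y, width, height) of all pixels whose
    absolute difference exceeds `threshold`, or None if the images agree.
    """
    xs, ys = [], []
    for y, (ref_row, cap_row) in enumerate(zip(reference, captured)):
        for x, (ref_px, cap_px) in enumerate(zip(ref_row, cap_row)):
            if abs(ref_px - cap_px) > threshold:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return (min(xs), min(ys), max(xs) - min(xs) + 1, max(ys) - min(ys) + 1)


if __name__ == "__main__":
    ref = [[100] * 6 for _ in range(4)]
    cap = [row[:] for row in ref]
    cap[1][2] = 10  # simulated crack pixels
    cap[2][3] = 10
    print(difference_bbox(ref, cap))  # (2, 1, 2, 2)
```

With OpenCV, the same steps map onto `cv2.absdiff`, `cv2.threshold`, `cv2.findNonZero`, and `cv2.boundingRect`, and `cv2.rectangle` with the BGR color `(0, 0, 255)` draws the red outline for the operator.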

2.2. Prototype

- Starting from the experimental model (consisting of 4 compact modules, each containing a Raspberry Pi and a camera), the solution was modified in the prototype to handle the large number of different ECUs on the production line and to meet the test indicators. A prototype solution based on 6 Raspberry Pis and 6 cameras, this time physically separated, was conceived and implemented, improving the inspection quality for connectors affected by perspective issues. With separate cameras, the prototype offers more possibilities to eliminate perspective distortion and also allows higher luminosity on the analyzed ECU surface.

- Data exchange was extended to 1 master and 5 slaves, covering everything from request/response transmissions to data aggregation, concluding procedures, and reporting. The schematic view of the prototype is shown in Figure 20; each Raspberry Pi analyzes a zone of the board captured by the corresponding camera.

- A generic platform was created for n processing modules. Consequently, the prototype software now contains only two branches, one master branch and one slave branch, and the slave software is generic for any Raspberry Pi slave in the scheme. This brings significant maintenance advantages, because any module can be easily replaced and configured.

- The prototype solution was extended to work for the main classes of ECUs on the production line. The extension required a new software concept, because the main classes differ in their hardware and mechanical structure and the software must adapt to all cases.

- Modules were conceived and developed to include layouts from all main classes of boards and all the particular sets of boards inside the main classes.

- The pin search module was optimized through additional cropping to reduce the search area and the processing times.

- New detection modules based on the identification of “islands” were researched and implemented. The identified islands are then either separated or grouped to reach more accurate conclusions.

- A new method was researched and developed to establish a dynamic illumination threshold for each pin. Lighting is crucial, and the prototype aims for accurate detection in an open working environment. The experiments showed that when the structure is not placed in a closed environment, differences in light and shadow occur. Because the pins and the pinholes are small, individual illumination thresholds were necessary.

- The layout saving, storing, and loading was optimized due to the high number of layouts in the production line.

- The detection task requests were optimized, mainly so that the master equipment extracts and sends to each slave the list of connectors it must analyze. This removes the need for fixed slave roles: any slave can be replaced without causing application issues.

- The processing time was significantly reduced by eliminating the need for connector rotation in the slaves' software.

- A new module for learning new layouts was implemented, placed only on the master equipment. This way, no SSH/VNC connection to each slave is necessary.

- A new separation of the offsets (search areas) was researched and implemented, so that each pin now has its own offset. Additionally, a new module was created that determines the filling factor of the offset for each pin.

- The prototype is able to apply all the changes in configuration from the graphical user interface.

- The prototype functions in complete correlation with the traceability software within the company.
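The per-pin processing listed above (an individual search area per pin, a dynamic illumination threshold, "island" identification, and a filling factor) can be combined into one sketch. This is an illustration under explicit assumptions, not the authors' method: the per-pin threshold is computed here with Otsu's method, which is swapped in because the text does not specify the rule; islands are taken to be 4-connected groups of bright pixels; and the filling factor is assumed to be the fraction of search-area pixels above the threshold. All function names are hypothetical.

```python
from collections import deque


def otsu_threshold(pixels):
    """Otsu's method on a flat list of 0-255 values.

    Returns threshold t; pixels > t are treated as bright (pin) pixels.
    A uniform area has no split, so 255 is returned and nothing is bright.
    """
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(value * count for value, count in enumerate(hist))
    weight_bg = sum_bg = 0
    best_t, best_var = 255, -1.0
    for t in range(256):
        weight_bg += hist[t]
        if weight_bg == 0:
            continue
        weight_fg = total - weight_bg
        if weight_fg == 0:
            break
        sum_bg += t * hist[t]
        mean_bg = sum_bg / weight_bg
        mean_fg = (sum_all - sum_bg) / weight_fg
        between = weight_bg * weight_fg * (mean_bg - mean_fg) ** 2
        if between > best_var:  # keep the split with the largest between-class variance
            best_t, best_var = t, between
    return best_t


def label_islands(mask):
    """Group True pixels of a 2D boolean mask into 4-connected islands."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    islands = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            island, queue = [], deque([(r, c)])
            seen[r][c] = True
            while queue:  # breadth-first flood fill
                y, x = queue.popleft()
                island.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            islands.append(island)
    return islands


def analyze_search_area(area):
    """Analyze one pin's search area (2D list of 0-255 values).

    Returns (threshold, islands, filling_factor).
    """
    flat = [p for row in area for p in row]
    threshold = otsu_threshold(flat)  # computed from this pin's own pixels
    mask = [[p > threshold for p in row] for row in area]
    islands = label_islands(mask)
    filling_factor = sum(len(island) for island in islands) / len(flat)
    return threshold, islands, filling_factor


if __name__ == "__main__":
    area = [
        [10, 10, 10, 10, 10],
        [10, 200, 200, 10, 10],
        [10, 200, 10, 10, 220],
        [10, 10, 10, 10, 220],
    ]
    t, islands, fill = analyze_search_area(area)
    print(t, sorted(len(i) for i in islands), fill)  # 10 [2, 3] 0.25
```

Deriving the threshold from each search area separately is what lets a shadowed pin and a brightly lit pin on the same board both be segmented; the island list can then be merged or split, as the text describes, before the final decision.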

3. Results

- Max slot deviation: the radius, in pixels, around the slot origin (itself given as a parameter) that defines the maximum deviation allowed for a pin;

- Min pin light: the minimum brightness, on a 0–255 scale, that the brightest pixel of a pin must reach for the pin to be considered valid rather than a background element.
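The two parameters above can be applied to a candidate pin as follows. This is a minimal sketch under assumptions: a candidate pin is given as a list of `(x, y, brightness)` pixels, its position is taken as the centroid of those pixels, and the returned reason strings are illustrative; `validate_pin` is a hypothetical name.

```python
import math


def validate_pin(pin_pixels, slot_origin, max_slot_deviation, min_pin_light):
    """Check one candidate pin against Max slot deviation and Min pin light.

    pin_pixels:         list of (x, y, brightness) for the candidate's pixels
    slot_origin:        (x, y) origin of the slot, in pixels
    max_slot_deviation: allowed radius around the slot origin, in pixels
    min_pin_light:      minimum brightness (0-255) of the brightest pixel
    Returns (is_valid, reason).
    """
    if not pin_pixels:
        return False, "missing pin"
    # Min pin light: a too-dark candidate is background, not a pin.
    if max(p[2] for p in pin_pixels) < min_pin_light:
        return False, "missing pin"
    # Max slot deviation: distance from the slot origin to the pin centroid.
    cx = sum(p[0] for p in pin_pixels) / len(pin_pixels)
    cy = sum(p[1] for p in pin_pixels) / len(pin_pixels)
    if math.hypot(cx - slot_origin[0], cy - slot_origin[1]) > max_slot_deviation:
        return False, "crooked pin"
    return True, "ok"


if __name__ == "__main__":
    print(validate_pin([(10, 10, 250), (11, 10, 200)], (10, 10), 3, 120))  # (True, 'ok')
    print(validate_pin([(20, 10, 250)], (10, 10), 3, 120))  # (False, 'crooked pin')
```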

4. Discussion and Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Test Procedure | Success Rate |
|---|---|
| Testing the informational flow at the algorithm level (“fairness” concept): (a) all module/function activation signals produce the expected outcome (e.g., image capturing on the master/slaves generates the image file, the EdgeDetection function always performs the corresponding execution, the ImageDifference module always executes the correct code, etc.); (b) correct transitions between states, with no unknown state; (c) the application provides outputs and allows the transition to a new cycle in both the normal and the debug operating modes. | 100% |
| Verifying the communications and the threads: (a) the communication between the master and the slaves; (b) the communication with the traceability application; (c) the correct functioning of the local threads. | 100% |
| Testing the local reporting module. | 100% |
| Testing the ability to learn new types of boards and the management of saved ECUs. | 100% |
| Verifying the data aggregation and integration from all processing equipment, and the way conclusions are drawn. | 100% |
| Testing the referencing procedure (adding references, adjustments, etc.). | 100% |
| Testing the correct detection of connectors and of faults for small and large pins, clips, and cracks. | 98% |
| Testing the detection of missing pins. | 98% |
| Testing the detection of extra pins. | 98% |
| Capability Pass repeatability test: a good ECU is tested 50 times in the industrial environment, and the system returns the same result (Passed) all 50 times. | 100% |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Korodi, A.; Anitei, D.; Boitor, A.; Silea, I. Image-Processing-Based Low-Cost Fault Detection Solution for End-of-Line ECUs in Automotive Manufacturing. Sensors 2020, 20, 3520. https://doi.org/10.3390/s20123520