Perspective and Evolution of Gesture Recognition for Sign Language: A Review

Abstract

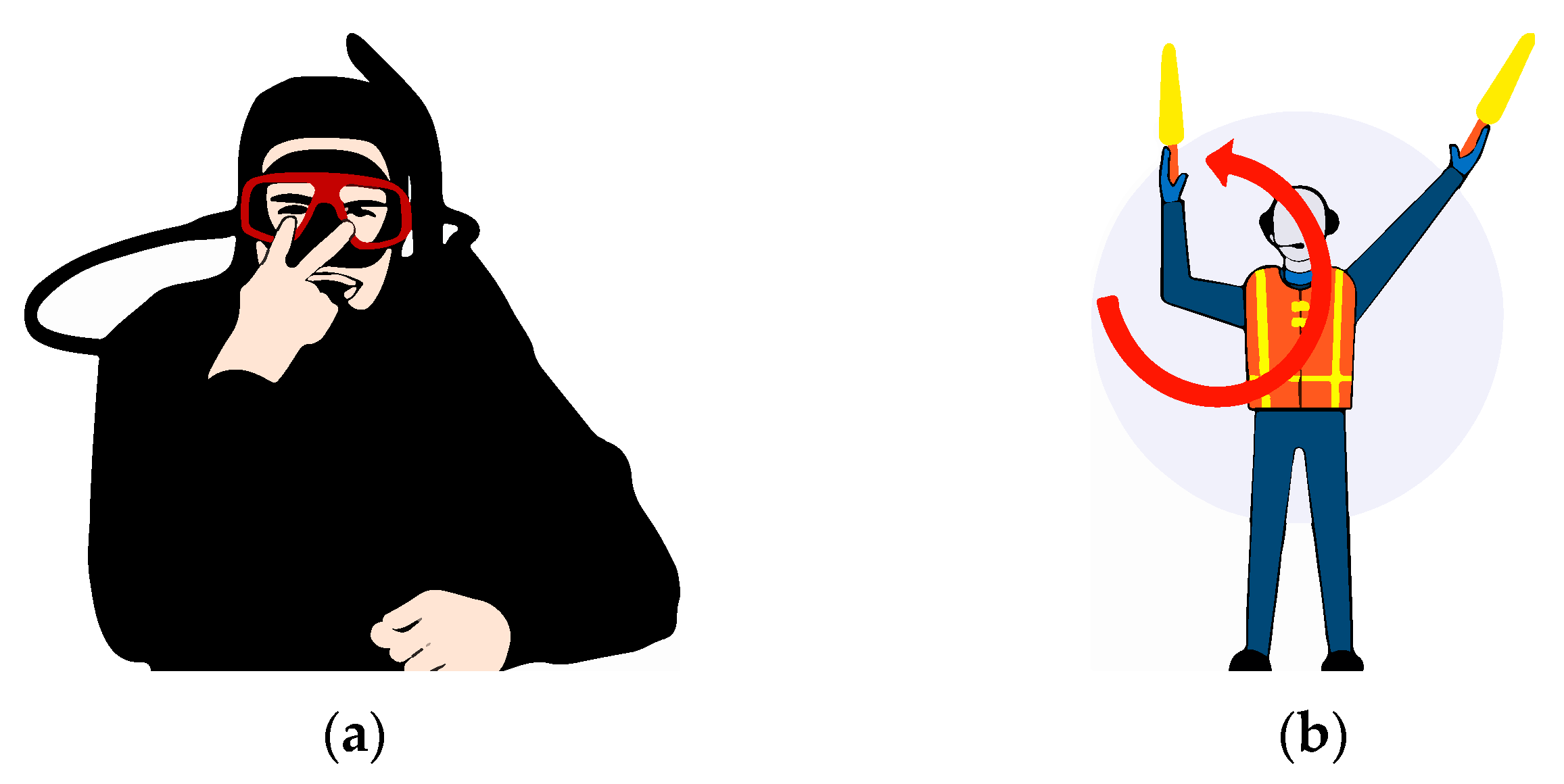

1. Introduction

2. Evolution of Gesture Recognition Devices

2.1. Data Gloves

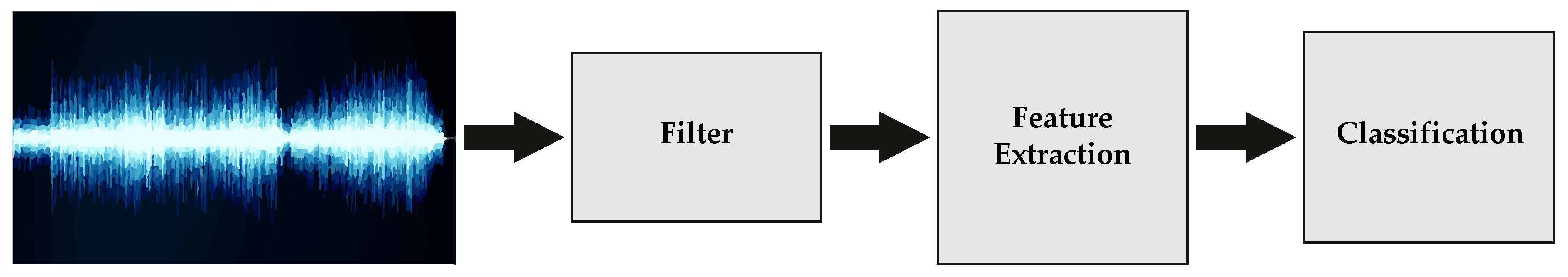

2.2. Electromyography (EMG) Electrodes

2.3. Ultrasound

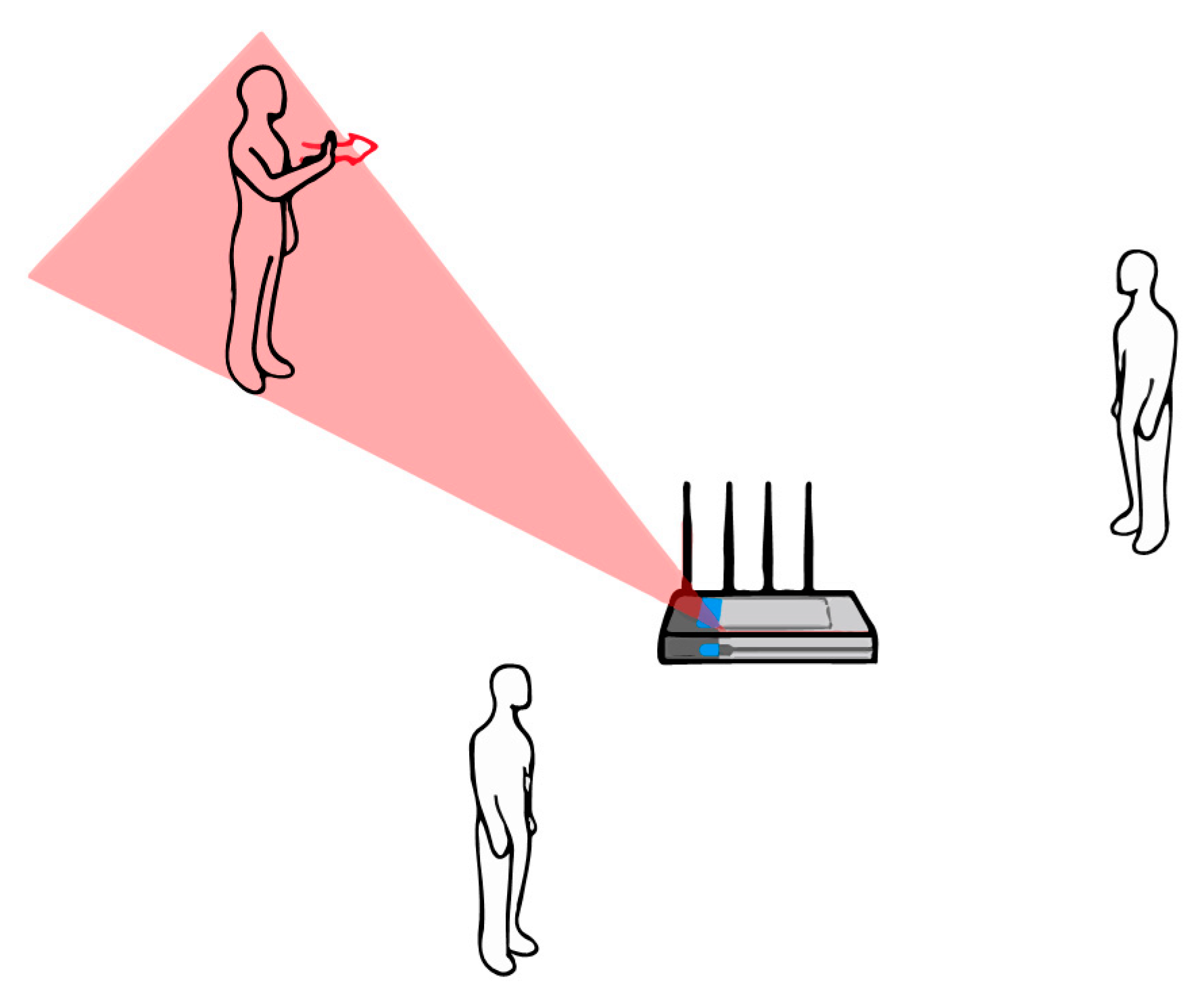

2.4. Wi-Fi

2.5. Radio Frequency Identification (RFID)

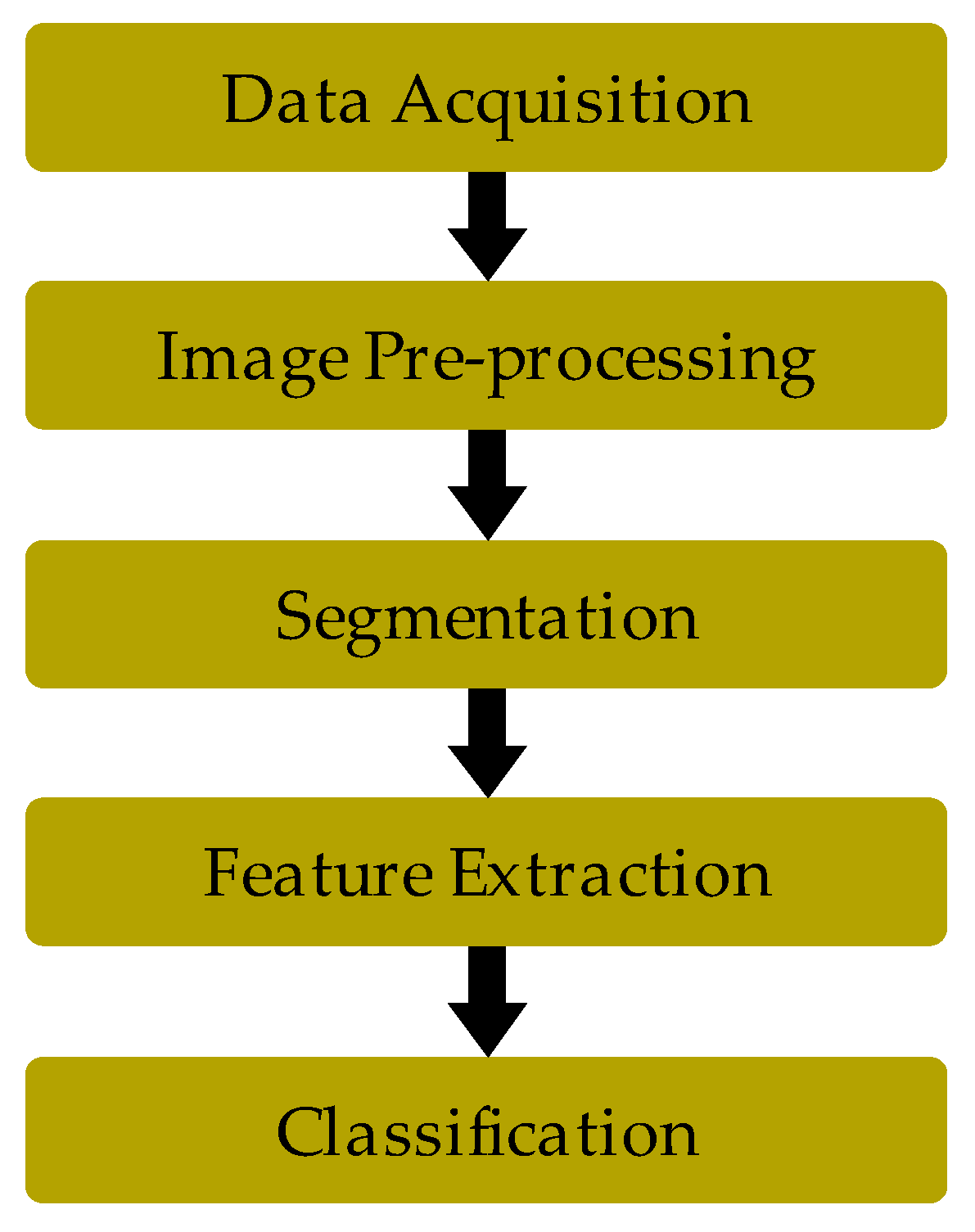

2.6. RGB and RGB-D Cameras

2.6.1. Stereoscopic Vision

2.6.2. Structured Light

2.6.3. Time of Flight (TOF)

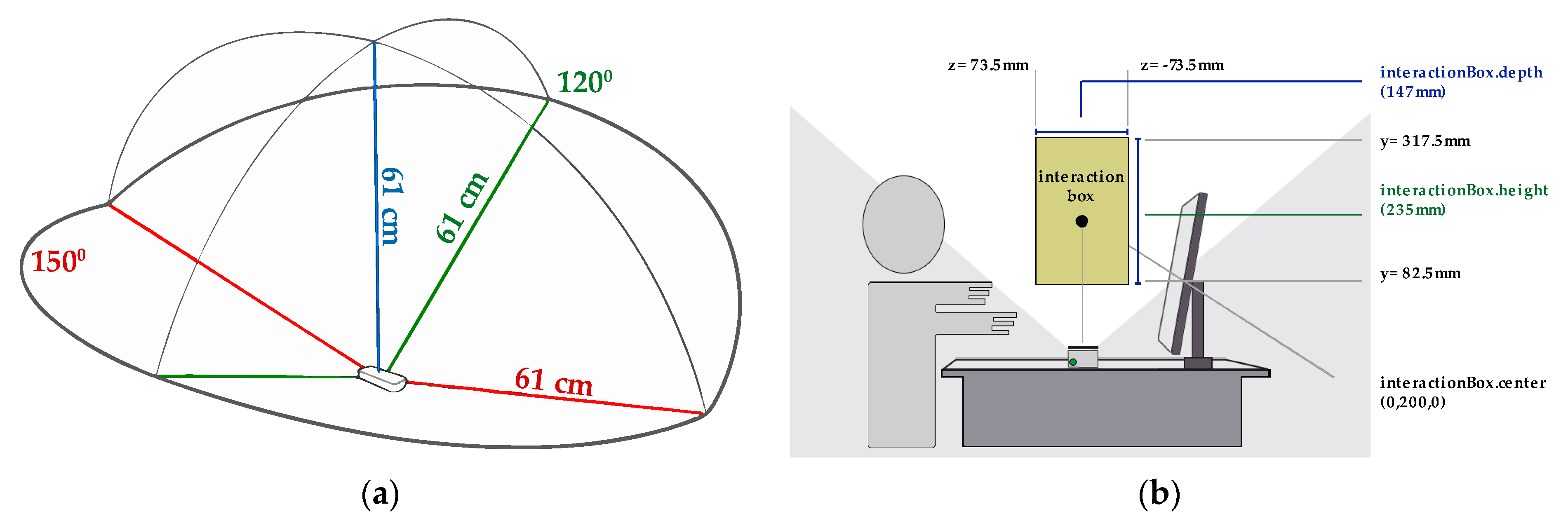

2.7. Leap Motion

2.8. Virtual Reality (VR)

3. Discussion

4. Conclusions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

| Ref. | Device | Authors | Year | Application | Focus | Results |
|---|---|---|---|---|---|---|
| [2] | Sketchpad | Sutherland | 1963 | Touch recognition | First touch interaction device | Opens up a new area of man–machine communication |
| [3] | Videoplace | Krueger et al. | 1969 | Virtual reality | Search for an alternative to traditional human–computer interaction (HCI) | Combines a participant’s live video image with a computer graphic world |
| [9] | DataGlove | Zimmerman | 1983 | Gesture recognition | First glove with optical flex sensors | Signals from these sensors can be processed for applications in kinesiology, physical therapy, computer animation, remote control and man–machine interfaces |
| [10] | PowerGlove | Mattel | 1989 | VR, gesture recognition | Controller for Nintendo games | A low-cost alternative for researchers in virtual reality and in hand posture and gesture recognition |
| [10] | SuperGlove | Nissho Electronics | 1997 | Gesture recognition | Pioneering use of sensors with a special resistive ink | Measures flexion of both the metacarpophalangeal and proximal interphalangeal joints of all four fingers and the thumb |
| [11] | CyberGlove | CyberGlove Systems | 1990 (currently on sale) | VR, robotic control, medical rehabilitation, gesture recognition | Gloves that use magnetic sensors and accelerometers | Programmable gloves with the greatest commercial impact |
| [12] | AcceleGlove | AnthroTronix | 1999 (currently on sale) | VR, robotic control, medical rehabilitation, gesture recognition | Gloves that use magnetic sensors and accelerometers | Programmable gloves with the greatest commercial impact |
| [43,44,45,46,47] | Myo | Thalmic Labs | 2014 (currently on sale) | VR, gesture recognition, home automation | Armband that reads the electrical activity of muscles to wirelessly control other devices with gestures and arm movements | Myoelectric armband with the greatest commercial impact |
| [104,105,117] | Kinect | Microsoft | 2010 (V2.0 currently on sale) | Gaming, gesture recognition, robotic control, medical rehabilitation, education, identification, authentication | Allows users to control and interact with the console without physical contact with a traditional video game controller | More than 10 million sensors sold |
| [106,114,115,116,117,120,121,122,123,124,125,126,127,128,129,130,131] | Leap Motion | Leap Motion Inc. | 2012 (currently on sale) | VR, gesture recognition, robotic control, medical rehabilitation, education, identification, authentication | Low-cost device for interacting with a computer through gestures | Startup whose valuation reached USD 306 million in 2013 and that was later acquired for USD 30 million |

| Ref. | Device Type | Focus | Segmentation | Feature Extraction | Classification | Results and Observations |
|---|---|---|---|---|---|---|
| [4,5,6,7,10] |  | An overview of gesture-based interaction | Not applied | Not applied | Not applied | Advantages and disadvantages of the various techniques that have emerged over time and that are still in development |
| [8] | DataGlove | A survey of glove-based input | Not applied | Not applied | Not applied | Achieving the goal of the “contactless” natural user interface (NUI) requires progress in many areas, including improving the speed and accuracy of tracking devices and reducing manufacturing costs |
| [13] | DataGlove | Data glove for medical use | Not applied | Not applied | Not applied | The use of sensorized surgical gloves in hand-assisted laparoscopic surgery (HALS) provides the surgeon with additional information about tissues and organs |
| [14] | DataGlove | Design of a haptic control glove for a teleoperated robotic manipulator | Not applied | Not applied | Not applied | Efficiency greater than 90% in opening and closing movements |
| [33] | Electromyogram data (EMG) | Cybernetic control of wearable computers | Not applied | Skin conductivity signals | Skin conductance sensor with a matched filter | The startle detection algorithm is robust across several users and allows the wearable to respond automatically to events of potential interest |
| [34,35,36,37] | Electromyogram data (EMG) | An interface based on active EMG electrodes | Not applied | Root mean square (RMS) power calculation and filtering | Not applied | Control of synthesizers, sequencers, drum machines or any other Musical Instrument Digital Interface (MIDI) device [34,35,37]; extended to multimodal interaction [36] |
| [38] | Electromyogram data (EMG) | A biofeedback GUI pointer controlled by electromyograms | Not applied | Not applied | Neural network | Detects the electromyograms of four of the muscles used to move the wrist, with performance similar to that of a standard mouse |
| [39,40] | Electromyogram data (EMG) | Capture of electromyographic signals as input for joysticks and virtual keyboards | Not applied | Not applied | Not applied | Low-cost brain–computer interface for disabled and elderly people |
| [41] | Electromyogram data (EMG) | Interpretation of forearm electromyography and finger gesture classification | 31 ms time segments | Root mean square (RMS); fast Fourier transform (FFT); phase coherence | Support vector machines (SVM) | Precision above 80% for finger gestures and arm postures |
| [42] | Electromyogram data (EMG) | Myoelectric control of prosthetics and therapeutic games using the Myo armband | Not applied | Not applied | Not applied | Helps address the low adoption rate of myoelectric prostheses |
| [43] | Electromyogram data (EMG) | Hand posture and gesture recognition using the Myo armband | Ordered subspace clustering (OSC) | Fast Fourier transform (FFT) | Collaborative representation classification (CRC) | Precision greater than 97% for six hand gestures and various postures |
| [44] | Electromyogram data (EMG) | Guidelines on the use of the Myo armband for physiotherapy analysis by doctors and patients | Not applied | Not applied | Not applied | Shows that the Myo device has the potential to be used to understand arm movements during physiotherapy |
| [45] | Electromyogram data (EMG) | Use of EMG data provided by the Myo armband to identify letters of Brazilian Sign Language (LIBRAS) | 0.25 s samples | Absolute and mean values | Support vector machines (SVM) | Fine finger gestures are very difficult to perceive in real time using EMG data alone |
| [46,47] | Electromyogram data (EMG) | Real-time hand gesture recognition with the Myo armband | Windows of 200 samples | Signal rectification (absolute value) and a digital Butterworth low-pass filter | k-nearest neighbour (k-NN) and dynamic time warping (DTW) algorithms | In [46], the model performs better (86% accuracy) than the Myo system (83%) for five kinds of gestures. In [47], a muscle activity detector is added that speeds up processing and raises recognition accuracy to 89.5% |
| [48,50,52] | Ultrasound | Control strategy for upper-arm prostheses based on ultrasound imaging | Ultrasound image sequences | Image processing algorithm; cross-correlation coefficients between sequences; root mean square (RMS) | k-nearest neighbour (k-NN) algorithm [48]; not applied [50,52] | The sonomyography (SMG) signal has the potential to be an alternative method for prosthetic control |
| [53] | Ultrasound | Gesture detection based on the propagation of low-frequency transdermal ultrasound | Sinusoidal waves at 35, 40, 45 and 50 kHz | Average amplitude, standard deviation, and linear and logarithmic averages of fast Fourier transform (FFT) points | Support vector machines (SVM) | Robust classification of touch gestures made at different locations on the forearm |
| [54] | Ultrasound | Radial muscle activity detection system with ultrasound transducers | 1100 images per gesture | Rectification and application of the Hilbert transform | Neural network (NN) with combined cross-correlation (C-CC) | 72% recognition rate for five flexion/extension gestures |
| [55] | Ultrasound | Comparison of the performance of different mounting positions of a portable ultrasound device | Splitting the video into flexions or extensions from the neutral position | Farnebäck’s algorithm | Optical flow; multilayer perceptron regressor | Classification of flexion and extension for 10 discrete hand gestures with an accuracy greater than 98% |
| [57] | Ultrasound | A human gait classification method based on radar Doppler spectrograms | 20 s samples | Short-time Fourier transform (STFT) | Support vector machines (SVM) | With seven directional filters, classification performance rises above 98% |
| [58] | Ultrasound | Ultrasonic waves to measure the distance to a subject | Not applied | Not applied | Not applied | The distance is converted into lens rotation to obtain the correct focus |
| [59] | Ultrasound | Ultrasonic device to recognize simple hand gestures | Frames of 32 ms | Fast Fourier transform (FFT) and discrete cosine transform (DCT) | Gaussian mixture model (GMM) | 88.42% recognition rate for eight gestures |
| [60] | Ultrasound | Gesture-controlled sound synthesis using an ultrasonic triangulation method | Not applied | Low-pass filter | Not applied | Successful detection and tracking of movement within a 1 m³ space |
| [61] | Ultrasound | Gesture recognition using simple ultrasonic rangefinders on a mobile robot | Not applied | The speed and direction of the hand define a speed-ratio function | Not applied | The sensors are readily available, but the set of recognized gestures is limited |
| [62,65] | Ultrasound | Technique that uses the speaker and microphone already built into mobile devices to detect in-air gestures | Not applied | Velocity, direction, proximity, target size and time variation | Not applied | Robustness above 90% across different devices, users and environments [62] |
| [63,64] | Ultrasound | 2D ultrasonic depth sensor that measures the range and direction of in-air targets [63]; 3D applications [64] | Not applied | Signal-to-noise ratio (SNR) and incident angle | Not applied | Ultrasonic solutions are ideal for simple gesture recognition on smartphones and other power-constrained devices |
| [66] | Ultrasound | Ultrasonic hand gesture recognition for mobile devices | Not applied | Round-trip delay (RTD) and reflected signal strength (RSS) | Support vector machines (SVM) | 96% recognition rate for seven types of gestures |
| [67] | Ultrasound | Micro hand gesture recognition system and methods using ultrasonic active sensing | 256 FFT points | Range-Doppler pulsed-radar signal processing; shift and velocity | Convolutional neural network (CNN) and long short-term memory (LSTM) | 96.32% recognition rate for 11 hand gestures |
| [68] | Wi-Fi | Gesture recognition system for a range of low-power computing devices that uses existing wireless signals | Not applied | Envelope detector to remove the carrier frequency and extract amplitude information | Dynamic time warping (DTW) | 97% classification accuracy on a set of eight gestures |
| [70] | Wi-Fi | Gesture recognition by extracting Doppler shifts from wireless signals | Combination of segments with positive and negative Doppler shifts | Computation of the frequency–time energy distribution of the narrowband signal | Coding of gestures; Doppler effects | 94% accuracy for nine gestures performed by a person in a room; with more people, accuracy can drop to 60% |
| [71] | Wi-Fi | Capturing moving objects and their gestures behind a wall | Gesture decoding | Cumulative distribution functions (CDF) of the signal-to-noise ratio (SNR) | Not applied | 87.5% success in detecting three gestures through concrete walls |
| [73] | Wi-Fi | Wi-Fi-based gesture recognition system identifying different signal change primitives | Detects changes in the raw received signal strength indicator (RSSI) | Discrete wavelet transform (DWT) | Not applied | 96% accuracy using three access points (APs) |
| [75] | Wi-Fi | Wi-Fi gesture recognition on existing devices | Received signal strength indicator (RSSI) and channel state information (CSI) streams | Low-pass filtering; changes in RSSI and CSI | Height and timing information used to perform classification | 91% accuracy while classifying four gestures across six participants |
| [76] | Wi-Fi | Wi-Fi gesture recognition on existing devices | 180 groups of channel state information (CSI) data from each packet | Mean value, standard deviation, median absolute deviation and maximum value of the anomaly patterns | Support vector machines (SVM) | Average recognition accuracy of 92% in line-of-sight scenarios and 88% in non-line-of-sight scenarios |
| [77] | Wi-Fi | Finger gesture recognition for continuous text input on standard Wi-Fi devices | Channel state information (CSI) stream | Discrete wavelet transform (DWT) | Dynamic time warping (DTW) | Average classification accuracy of up to 90.4% in recognizing nine finger gestures for digits in American Sign Language (ASL) |
| [87] | RFID | Device-free gesture recognition system based on phase information output by commercial off-the-shelf (COTS) RFID devices | Modified Varri method | Savitzky–Golay filter | Dynamic time warping (DTW) | Average recognition accuracy of 96.5% and 92.8% in the identical-position and diverse-position scenarios, respectively |
| [96] | Vision | Real-time classification of dance gestures from skeleton animation using Kinect | Video frames | Skeletal tracking algorithm (STA) | Cascaded classifier | 96.9% average accuracy for skeletal motion recordings of approximately four seconds |
| [97] | Vision | A review of vision-based hand gesture recognition algorithms | Not applied | Not applied | Not applied | Methods using RGB and RGB-D cameras are reviewed, with quantitative and qualitative comparisons of algorithms |
| [98] | Vision | Robust gesture recognition with Kinect through a comparison between dynamic time warping (DTW) and hidden Markov models (HMM) | Video frames | Not applied | Dynamic time warping (DTW) and hidden Markov model (HMM) | Both the DTW and HMM approaches give a very good classification rate of around 90% |
| [113] | Leap Motion | Segmentation and recognition of text written in 3D using the Leap Motion interface | Partial differentiation of the signal within a predefined window | Heuristics applied to determine word boundaries | Hidden Markov model (HMM) | The proposed heuristic-based word segmentation algorithm reaches an accuracy as high as 80.3%, and HMM-based word recognition reaches 77.6% |
| [115] | Leap Motion | Arabic sign language recognition using the Leap Motion Controller | Samples of 10 frames | Mean value | Naïve Bayes classifier and multilayer perceptron (MLP) neural network | 98% classification accuracy with the Naïve Bayes classifier and more than 99% with the MLP |
| [116,117,118] | Leap Motion | Recognition of dynamic hand gestures using Leap Motion | Data sample | Palm direction, palm normal, fingertip positions and palm centre position; high-frequency noise filtering | Support vector machines (SVM) and hidden Markov model (HMM) [116]; convolutional neural network (CNN) [117]; hidden conditional neural field (HCNF) [118] | Using 80% of the sample data, a recognition rate above 93.3% is achieved [116]. Despite its good performance, the method cannot provide online gesture recognition in real time [117]. 95.0% recognition for the Handicraft-Gesture dataset and 89.5% for the LeapMotion-Gesture3D dataset [118] |
| [120] | Leap Motion | Hand gesture recognition for post-stroke rehabilitation using Leap Motion | Data sample | 17 features, including Euclidean distances between fingertips, palm pitch, yaw and roll angles, and angles between fingers | Multi-class support vector machines (SVM); k-nearest neighbour (k-NN); neural network | Seven gestures for the home rehabilitation of post-stroke patients are monitored with 97.71% accuracy |
| [122] | Leap Motion | Leap Motion Controller for authentication via hand geometry and gestures | Data sample | Equal error rate (EER), false acceptance rate (FAR) and false rejection rate (FRR) | Waikato Environment for Knowledge Analysis (WEKA) | Shows that the Leap Motion can be used successfully to authenticate users both at login and while they perform continuous activities; authentication accuracy reaches 98% |
| [110] | Leap Motion and Kinect | Hand gesture recognition with Leap Motion and Kinect devices | Data sample | Extracted from the characteristics of each device | Multi-class support vector machines (SVM) | For ten static gestures, 81% accuracy with the Leap Motion, 65% with the Kinect, and 91.3% when both are combined |
| [1] |  | Degree of impact of gestures in a conversation | Not applied | Not applied | Not applied | 55% impact on emotional conversations |
| [15,16,17,18,21,22,23,24,25,26,27,28,29,30,31,32] | Electromyogram data (EMG) | EMG signal analysis techniques | EMG decomposition using nonlinear least mean square (LMS) optimization of higher-order cumulants | Wavelet transform (WT); autoregressive (AR) model; artificial intelligence; linear and stochastic models; nonlinear modelling | Euclidean distance between motor unit action potential (MUAP) waveforms [21]; motor unit number estimation (MUNE); hardware models [26,29] | EMG signal analysis techniques for clinical diagnosis, biomedicine, research, hardware implementation and end-user applications |
| [19] |  | Scientific bases of the body and its movements | Not applied | Not applied | Not applied | Overall organization of the elements of the neuromuscular system, neuroreceptors and instrumentation |
| [20] |  | Concepts of medical instrumentation | Not applied | Not applied | Not applied | An overview of the main medical sensors |
| [49,51] | Electromyogram data (EMG) | Relationships between the variables measured with sonography and EMG | Not applied | Nonlinear regression describing the relationship between each ultrasound and EMG parameter [49]; root mean square (RMS) [51] | Not applied | Sonomyography has great potential as an alternative method to assess muscle function |
| [56] | Wi-Fi | Identification of multiple human subjects outdoors with micro-Doppler signals | Not applied | Doppler filtering to eliminate clutter, torso extraction from the spectrogram, torso filtering to reduce noise, and peak period extraction using a Fourier transform | Left to future work (comparison of classifiers) | Accuracy above 80% for long-range subjects in frontal view; with angle variation, it drops below 40% in the worst case |
| [69,74] |  | System that tracks a user’s 3D movement from the radio signals reflected off their body | Round-trip distance spectrogram from each receive antenna | Kalman filter | Not applied | Its accuracy exceeds that of current RF localization systems, which require the user to carry a transceiver [69]. The second version [74] can localize up to five users simultaneously, even if they are perfectly static. Accuracy of 95% or higher |
| [72] | Wi-Fi | Wi-Fi-based indoor positioning system that takes advantage of channel state information (CSI) | Not applied | Channel state information (CSI) processing | Not applied | Significantly improves localization accuracy compared with the received signal strength indicator (RSSI) approach |
| [78,79,80,81,82,83] | RFID | RFID supply chain applications | Not applied | Not applied | Not applied | Management guidelines to proactively implement RFID applications |
| [86] | RFID | Battery-free RFID-enabled fluid container for recognizing individual instances of liquid consumption | The time series is segmented as a single label reading | Mean, median, mode, standard deviation and range of the received signal strength indicator (RSSI) and phase | Naïve Bayes (NB); support vector machines (SVM); random forest (RF); linear conditional random fields (LCRF) | 87% success in recognizing episodes of alcohol consumption in ten volunteers |
| [88] | Vision | Introductory techniques for 3D computer vision | Not applied | Not applied | Not applied | Set of computational techniques for 3D images |
| [89] | Vision | 3D model generation by structured light | Not applied | Not applied | Not applied | Creation of a 3D scanner model based on structured light |
| [90] | Vision | High-quality upsampling of depth maps captured by a 3D time-of-flight (ToF) camera coupled with a high-resolution RGB camera | Not applied | Not applied | Not applied | The new method outperforms existing approaches to 3D-ToF upsampling |
| [91] |  | Model-based human posture estimation for gesture analysis in an opportunistic fusion smart camera network | Video frames | Expectation maximization (EM) algorithm | Not applied | Human posture estimation is described within an opportunistic fusion framework |
| [92,93,94,95] |  | 2D human features model [94,95]; 3D model-based tracking and recognition of human movement from real images [92,93] | Video frames | 3-DOF model [92]; shape descriptors [93]; star skeleton; skin color processing | Dynamic time warping (DTW) [92]; support vector machines (SVM) [93,94]; neural classifiers [95] | 3D pose recovery and movement classification are achieved [92,93]; results with 2D models [94,95] |
| [105,108] | Leap Motion | Evaluation of the performance of the Leap Motion Controller [105]; analysis performed in accordance with ISO 9283 [108] | Not applied | Not applied | Not applied | In the static scenario, the standard deviation was less than 0.5 mm at all times; however, accuracy drops significantly for samples taken more than 250 mm above the controller [105]. Using an industrial robot with a reference pen, an accuracy of 0.2 mm is achieved [108] |
| [107] | Vision | Stereoscopic vision techniques to determine the three-dimensional structure of the scene | Not applied | Not applied | Not applied | Study on the effectiveness of a series of stereo correspondence methods |
| [109] | Leap Motion | Validation of the Leap Motion Controller using marker-based motion capture technology | Not applied | Not applied | Not applied | The LMC is unable to provide clinically significant data for wrist flexion/extension, and possibly for wrist deviation |
| [111] | Vision | Potential of multimodal and multiuser interaction with virtual holography | Not applied | Not applied | Not applied | Multi-user interactions supporting local and distributed computers and a variety of screens are described |
| [112] |  | Operating virtual panels with hand gestures in immersive VR games | Not applied | Not applied | Not applied | Gesture-based interaction for virtual reality games using the Unity game engine, the Leap Motion sensor, a smartphone and a VR headset |
| [114] | Leap Motion | Feasibility of adapting the LMC, developed for video games, to the neurorehabilitation of elderly patients with subacute stroke | Data sample | Not applied | Not applied | Rehabilitation with the LMC was carried out with a high level of active participation and without adverse effects, and contributed to increased recovery of manual skills |
| [119] | Leap Motion | Robotic arm manipulation using the Leap Motion Controller | Data sample | Mapping algorithm | Not applied | A robotic arm manipulation scheme is proposed to enable the incorporation of robotic systems into the home environment |
| [121] |  | System that remotely controls different appliances in the home or office through natural user interfaces for HCI | Not applied | Not applied | Not applied | A low-cost, real-time environment capable of helping people with disabilities is built |
| [123] | Leap Motion | Implementations of the Leap Motion device in sound synthesis and interactive live performance | Not applied | Not applied | Not applied | Seeks to empower disabled patients through musical expression using motion-tracking technology and portable sensors |
| [124,125,126,127] | Leap Motion and VR glasses | Trends in VR glasses and Leap Motion in education, robotics, etc. | Not applied | Not applied | Not applied | Technology continues to evolve and drive innovation; VR and sensors such as Leap Motion are expected to have a great impact on it |
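Several EMG entries in Appendix A compute simple amplitude features over short windows: RMS in [34,35,36,37] and [41], absolute and mean values over 0.25 s samples in [45], and 200-sample windows in [46,47]. The following is a minimal sketch of one common way to compute the mean absolute value (MAV) and RMS per window on a single channel. It is an illustration only, not the pipeline of any cited work; the function name `windowed_emg_features` and its `window`/`step` parameters are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def windowed_emg_features(
    signal: Sequence[float], window: int, step: int
) -> List[Tuple[float, float]]:
    """Return (MAV, RMS) for each full window of a single EMG channel.

    Hypothetical helper: `window` and `step` are in samples; a trailing
    partial window is discarded.
    """
    if window <= 0 or step <= 0:
        raise ValueError("window and step must be positive")
    features = []
    for start in range(0, len(signal) - window + 1, step):
        segment = signal[start:start + window]
        # Mean absolute value: average of the rectified samples.
        mav = sum(abs(x) for x in segment) / window
        # Root mean square: square root of the mean signal power.
        rms = math.sqrt(sum(x * x for x in segment) / window)
        features.append((mav, rms))
    return features


if __name__ == "__main__":
    # Toy signal, not real EMG data.
    toy = [1.0, -1.0, 1.0, -1.0, 3.0, -3.0, 3.0, -3.0]
    print(windowed_emg_features(toy, window=4, step=4))  # [(1.0, 1.0), (3.0, 3.0)]
```

Assuming, for example, a 200 Hz sampling rate, a 0.25 s window as in [45] would correspond to 50 samples; choosing `step < window` gives overlapping windows, which trades extra computation for a denser feature stream.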
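The preprocessing listed for [46,47] in Appendix A, rectification followed by a digital Butterworth low-pass filter, produces a smooth amplitude envelope of the EMG signal. The sketch below implements a second-order Butterworth low-pass section via the bilinear transform (the widely used "Audio EQ Cookbook" biquad coefficients) in plain Python. The filter order, the 5 Hz cutoff and the 200 Hz sampling rate are assumptions for illustration, since the table does not report them; in practice a library routine such as SciPy's `scipy.signal.butter` would typically be used instead.

```python
import math
from typing import List, Sequence


def butterworth_lowpass_2nd(
    x: Sequence[float], cutoff_hz: float, fs_hz: float
) -> List[float]:
    """Filter x with a 2nd-order Butterworth low-pass biquad (Direct Form I)."""
    if not 0.0 < cutoff_hz < fs_hz / 2.0:
        raise ValueError("cutoff must lie between 0 and the Nyquist frequency")
    w0 = 2.0 * math.pi * cutoff_hz / fs_hz
    q = 1.0 / math.sqrt(2.0)  # Q of a 2nd-order Butterworth response
    alpha = math.sin(w0) / (2.0 * q)
    cos_w0 = math.cos(w0)
    a0 = 1.0 + alpha
    # Coefficients normalised by a0 so the recursion below uses a0 = 1.
    b0 = (1.0 - cos_w0) / 2.0 / a0
    b1 = (1.0 - cos_w0) / a0
    b2 = b0
    a1 = -2.0 * cos_w0 / a0
    a2 = (1.0 - alpha) / a0
    y: List[float] = []
    x1 = x2 = y1 = y2 = 0.0  # previous two inputs and outputs
    for xn in x:
        yn = b0 * xn + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
        x2, x1 = x1, xn
        y2, y1 = y1, yn
        y.append(yn)
    return y


def emg_envelope(
    emg: Sequence[float], cutoff_hz: float = 5.0, fs_hz: float = 200.0
) -> List[float]:
    """Full-wave rectify the EMG, then low-pass filter it (assumed parameters)."""
    rectified = [abs(v) for v in emg]
    return butterworth_lowpass_2nd(rectified, cutoff_hz, fs_hz)


if __name__ == "__main__":
    # Toy alternating signal: its rectified version is constant, so the
    # envelope settles close to 1.0 after the filter's start-up transient.
    toy = [1.0 if n % 2 == 0 else -1.0 for n in range(400)]
    print(round(emg_envelope(toy)[-1], 6))
```

The filter has unity gain at DC and a zero at the Nyquist frequency, so slow amplitude changes pass while sample-to-sample oscillations are removed; a zero-phase (forward-backward) variant would avoid the delay but cannot run causally in real time.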
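Dynamic time warping (DTW) appears as the classifier in several rows of Appendix A ([46,47], [68], [77], [87], [92], [98]). It compares two sequences that may differ in speed by finding the minimum-cost monotonic alignment between them. The following is a minimal sketch, assuming one-dimensional sequences, the absolute difference as the local cost, and a 1-nearest-neighbour rule over labelled templates (the simplest case of the k-NN and DTW combination listed for [46,47]); the labels and template values are hypothetical.

```python
import math
from typing import Dict, List, Optional, Sequence


def dtw_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Classic O(len(a) * len(b)) DTW with absolute-difference local cost."""
    n, m = len(a), len(b)
    # cost[i][j] = cheapest alignment of a[:i] with b[:j]
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])
            cost[i][j] = local + min(
                cost[i - 1][j],      # b[j-1] also matches a[i-2]
                cost[i][j - 1],      # a[i-1] also matches b[j-2]
                cost[i - 1][j - 1],  # advance both sequences
            )
    return cost[n][m]


def classify_1nn(
    query: Sequence[float], templates: Dict[str, List[Sequence[float]]]
) -> Optional[str]:
    """Return the label of the template closest to `query` under DTW."""
    best_label: Optional[str] = None
    best_dist = math.inf
    for label, examples in templates.items():
        for example in examples:
            d = dtw_distance(query, example)
            if d < best_dist:
                best_label, best_dist = label, d
    return best_label


if __name__ == "__main__":
    templates = {
        "swipe": [[0.0, 1.0, 2.0, 3.0]],
        "tap": [[0.0, 3.0, 0.0]],
    }
    # A slower "swipe": the same shape with one value held for longer.
    print(classify_1nn([0.0, 1.0, 1.0, 2.0, 3.0], templates))  # swipe
```

The quadratic cost is acceptable for short gestures; longer streams usually add a warping-window constraint to bound the search.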
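The ultrasonic entries in Appendix A rely on two basic relations of active acoustic sensing: range from the round-trip delay of an echo (distance measurement in [58] and [61], and the RTD feature in [66]) and the Doppler shift produced by a moving hand (velocity features in [62,65] and [67]). With the transmitter and receiver at the same place, the range is d = c·t/2, and for a reflector moving radially at speed v much smaller than the speed of sound c, the shift is approximately Δf ≈ 2·v·f0/c. The sketch below encodes both relations; the 343 m/s speed of sound (air at about 20 °C), the 20 kHz carrier and the hand speed in the example are assumptions.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed: dry air at roughly 20 degrees Celsius


def range_from_round_trip(delay_s: float, c: float = SPEED_OF_SOUND_M_S) -> float:
    """Distance to a reflector from the echo round-trip delay (co-located Tx/Rx)."""
    return c * delay_s / 2.0


def doppler_shift_hz(radial_speed_m_s: float, carrier_hz: float,
                     c: float = SPEED_OF_SOUND_M_S) -> float:
    """Approximate two-way Doppler shift for a reflector moving at |v| << c.

    A positive speed means the hand is moving towards the device.
    """
    return 2.0 * radial_speed_m_s * carrier_hz / c


def radial_speed_from_shift(shift_hz: float, carrier_hz: float,
                            c: float = SPEED_OF_SOUND_M_S) -> float:
    """Invert the approximation above to estimate the hand's radial speed."""
    return shift_hz * c / (2.0 * carrier_hz)


if __name__ == "__main__":
    print(range_from_round_trip(0.002))     # about 0.343 m
    print(doppler_shift_hz(0.5, 20_000.0))  # about 58.3 Hz
```

The small size of the shift (tens of hertz on a carrier of tens of kilohertz) is why such systems need fine frequency resolution, for example long FFT windows, to resolve hand motion.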
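For RFID phase signals, [87] in Appendix A lists a Savitzky–Golay filter for feature extraction. A Savitzky–Golay filter fits a low-order polynomial by least squares within a sliding window and replaces the centre sample with the fitted value; this reduces noise while preserving peak shapes better than a plain moving average. The sketch below uses the standard convolution weights for a 5-point window and a quadratic fit, (-3, 12, 17, 12, -3)/35, and copies the first and last two samples unchanged. The window length, polynomial order and edge handling are assumptions, not parameters reported in [87].

```python
from typing import List, Sequence

# Smoothing weights for a 5-point window with a quadratic (or cubic) fit.
SG5_QUADRATIC = (-3.0, 12.0, 17.0, 12.0, -3.0)
SG5_NORM = 35.0


def savgol_5_quadratic(x: Sequence[float]) -> List[float]:
    """Savitzky-Golay smoothing (window 5, order 2); edge samples are copied."""
    y = list(x)
    for i in range(2, len(x) - 2):
        window = x[i - 2:i + 3]
        y[i] = sum(w * v for w, v in zip(SG5_QUADRATIC, window)) / SG5_NORM
    return y


if __name__ == "__main__":
    # An isolated spike is attenuated and spread over its neighbours.
    print(savgol_5_quadratic([0.0, 0.0, 0.0, 0.0, 35.0, 0.0, 0.0, 0.0, 0.0]))
```

Because the weights reproduce any quadratic exactly, slowly curving phase trends pass through unchanged while isolated spikes are attenuated; SciPy's `scipy.signal.savgol_filter` offers arbitrary window lengths, orders and edge modes.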
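The authentication study [122] in Appendix A reports results in terms of the false acceptance rate (FAR), false rejection rate (FRR) and equal error rate (EER). For a matcher that accepts a claimed identity when its similarity score is at or above a threshold, FAR is the fraction of impostor attempts accepted, FRR is the fraction of genuine attempts rejected, and the EER is the operating point at which the two are equal. The sketch below approximates the EER by scanning the observed scores as candidate thresholds; the score convention (higher means more similar), the function names and the toy scores are assumptions.

```python
from typing import Sequence, Tuple


def far_frr(genuine: Sequence[float], impostor: Sequence[float],
            threshold: float) -> Tuple[float, float]:
    """FAR and FRR when a claim is accepted if score >= threshold."""
    if not genuine or not impostor:
        raise ValueError("need at least one genuine and one impostor score")
    far = sum(s >= threshold for s in impostor) / len(impostor)
    frr = sum(s < threshold for s in genuine) / len(genuine)
    return far, frr


def approximate_eer(genuine: Sequence[float],
                    impostor: Sequence[float]) -> Tuple[float, float]:
    """Return (EER estimate, threshold) where |FAR - FRR| is smallest."""
    best_gap, best_eer, best_t = float("inf"), 1.0, 0.0
    for t in sorted(set(genuine) | set(impostor)):
        far, frr = far_frr(genuine, impostor, t)
        if abs(far - frr) < best_gap:
            best_gap, best_eer, best_t = abs(far - frr), (far + frr) / 2.0, t
    return best_eer, best_t


if __name__ == "__main__":
    genuine_scores = [0.9, 0.8, 0.7, 0.4]
    impostor_scores = [0.1, 0.2, 0.3, 0.75]
    print(approximate_eer(genuine_scores, impostor_scores))  # (0.25, 0.7)
```

With few scores the FAR and FRR curves are step functions and rarely cross exactly, which is why the midpoint at the smallest gap is reported; larger evaluations usually interpolate between neighbouring thresholds instead.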
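Among the 17 Leap Motion features used in [120] (Appendix A) are the Euclidean distances between fingertips. With five tracked fingertips there are C(5, 2) = 10 such pairwise distances, and they do not change when the whole hand is translated or rotated. The sketch computes them from 3D fingertip coordinates; the input format (five (x, y, z) tuples ordered from thumb to little finger) and the finger names are assumptions and do not reflect the Leap Motion SDK's own data structures.

```python
import math
from itertools import combinations
from typing import Dict, Sequence, Tuple

Point3D = Tuple[float, float, float]
FINGERS = ("thumb", "index", "middle", "ring", "little")


def fingertip_distances(tips: Sequence[Point3D]) -> Dict[Tuple[str, str], float]:
    """Pairwise Euclidean distances between the five fingertips."""
    if len(tips) != len(FINGERS):
        raise ValueError("expected one (x, y, z) position per finger")
    named = list(zip(FINGERS, tips))
    return {
        (name_a, name_b): math.dist(p_a, p_b)
        for (name_a, p_a), (name_b, p_b) in combinations(named, 2)
    }


if __name__ == "__main__":
    # Toy coordinates in millimetres, not real sensor output.
    tips = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0), (0.0, 0.0, 12.0),
            (1.0, 1.0, 1.0), (2.0, 2.0, 2.0)]
    features = fingertip_distances(tips)
    print(len(features), features[("thumb", "index")])  # 10 5.0
```

`math.dist` requires Python 3.8 or later; the ten distances would typically be concatenated with angle features before being passed to a classifier such as an SVM.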

References

- Mehrabian, A. Nonverbal Communication; Routledge: London, UK, 1972. [Google Scholar]

- Sutherland, E.I. Sketchpad: A Man-Machine Graphical Communication System; University of Cambridge Computer Laboratory: Cambridge, UK, 2003. [Google Scholar]

- Krueger, W.M.; Gionfriddo, T.; Hinrichsen, K. Videoplace an artificial reality. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, San Francisco, CA, USA, 10–13 April 1985; pp. 35–40. [Google Scholar] [CrossRef]

- Kaushik, M.; Jain, R. Gesture Based Interaction NUI: An Overview. Int. J. Eng. Trends Technol. 2014, 9, 633–636. [Google Scholar] [CrossRef] [Green Version]

- Myers, A.B. A brief history of human-computer interaction technology. Interactions 1998, 5, 44–54. [Google Scholar] [CrossRef] [Green Version]

- Sharma, P.K.; Sharma, S.R. Evolution of Hand Gesture Recognition: A Review. Int. J. Eng. Comput. Sci. 2015, 4, 4. [Google Scholar]

- Premaratne, P.; Nguyen, Q.; Premaratne, M. Human Computer Interaction Using Hand Gestures. In Advanced Intelligent Computing Theories and Applications; Springer: Berlin/Heidelberg, Germany, 2010; pp. 381–386. [Google Scholar] [CrossRef]

- Sturman, J.D.; Zeltzer, D. A survey of glove-based input. IEEE Comput. Graph. Appl. 1994, 14, 30–39. [Google Scholar] [CrossRef]

- Zimmerman, G.T. Optical flex sensor. U.S. Patent 4542291A, 17 September 1985. [Google Scholar]

- La Viola, J.J., Jr. A Survey of Hand Posture and Gesture Recognition Techniques and Technology; Departament of Computer Science, Brown University: Providence, RI, USA, 1999. [Google Scholar]

- CyberGlove III, CyberGlove Systems LLC. Available online: http://www.cyberglovesystems.com/cyberglove-iii (accessed on 17 January 2020).

- Hernandez-Rebollar, J.L.; Kyriakopoulos, N.; Lindeman, R.W. The AcceleGlove: A whole-hand input device for virtual reality. In Proceedings of the ACM SIGGRAPH 2002 Conference Abstracts and Applications, San Antonio, TX, USA, 21–26 July 2002; p. 259. [Google Scholar] [CrossRef]

- Santos, L.; González, J.L.; Turiel, J.P.; Fraile, J.C.; de la Fuente, E. Guante de datos sensorizado para uso en cirugía laparoscópica asistida por la mano (HALS). Presented at the Jornadas de Automática, Bilbao, Spain, 2–4 September 2015. [Google Scholar]

- Moreno, R.; Cely, C.; Pinzón Arenas, J.O. Diseño de un guante háptico de control para manipulador robótico teleoperado. Ingenium 2013, 7, 19. [Google Scholar] [CrossRef]

- Reaz, M.B.I.; Hussain, M.S.; Mohd-Yasin, F. Techniques of EMG signal analysis: Detection, processing, classification and applications. Biol. Proced. Online 2006, 8, 11–35. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Valentinuzzi, M.E. Bioelectrical signal processing in cardiac and neurological applications and electromyography: Physiology, engineering, and noninvasive applications. Biomed. Engl. OnLine 2007, 6, 27. [Google Scholar] [CrossRef] [Green Version]

- Farina, D.; Stegeman, D.F.; Merletti, R. Biophysics of the generation of EMG signals. In Surface Electromyography: Physiology, Engineering and Applications; Wiley Online Library: Hoboken, NJ, USA, 2016; pp. 1–24. [Google Scholar] [CrossRef]

- Merletti, R.; Parker, P. Electromyography: Physiology, Engineering, and Noninvasive Applications; Wiley-IEEE Press: New York, NY, USA, 2004. [Google Scholar]

- Gowitzke, B.A.; Milner, M. El Cuerpo y sus Movimientos. Bases Científicas; Paidotribo: Buenos Aires, Argentina, 1999. [Google Scholar]

- Webster, J.G. Medical Instrumentation: Application and Design; John Wiley & Sons: New York, NY, USA, 2009. [Google Scholar]

- Luca, C.J.D. The Use of Surface Electromyography in Biomechanics. J. Appl. Biomech. 1997, 13, 135–163. [Google Scholar] [CrossRef] [Green Version]

- Hefftner, G.; Zucchini, W.; Jaros, G.G. The electromyogram (EMG) as a control signal for functional neuromuscular stimulation. I. Autoregressive modeling as a means of EMG signature discrimination. IEEE Trans. Biomed. Eng. 1988, 35, 230–237. [Google Scholar] [CrossRef]

- Merletti, R.; Farina, D. Surface Electromyography: Physiology, Engineering, and Applications; John Wiley & Sons: New York, NY, USA, 2016. [Google Scholar]

- Barreto, A.; Scargle, S.; Adjouadi, M. Hands-off human-computer interfaces for individuals with severe motor disabilities. In Proceedings of the HCI International ’99 (the 8th International Conference on Human-Computer Interaction), Munich, Germany, 22–26 August 1999; Volume 2, pp. 970–974. [Google Scholar]

- Coleman, K. Electromyography Based Human-Computer-Interface to Induce Movement in Elderly Persons with Movement Impairments; ACM Press: New York, NY, USA, 2001; p. 4. [Google Scholar]

- Guerreiro, T.; Jorge, J. EMG as a daily wearable interface. Presented at the International Conference on Computer Graphics Theory and Applications, Lisbon, Portugal, 25–28 February 2006; pp. 216–223. [Google Scholar]

- Ahsan, R.; Ibrahimy, M.; Khalifa, O. EMG Signal Classification for Human Computer Interaction A Review. Eur. J. Sci. Res. 2009, 33, 480–501. [Google Scholar]

- Crawford, B.; Miller, K.; Shenoy, P.; Rao, R. Real-Time Classification of Electromyographic Signals for Robotic Control. Presented at the AAAI-Association for the Advancement of Artificial Intelligence, Pittsburgh, PA, USA, 9–13 July 2005; p. 6.

- Kiguchi, K.; Hayashi, Y. An EMG-Based Control for an Upper-Limb Power-Assist Exoskeleton Robot. IEEE Trans. Syst. Man Cybern. Part. B Cybern. 2012, 42, 1064–1071.

- Joshi, C.D.; Lahiri, U.; Thakor, N.V. Classification of Gait Phases from Lower Limb EMG: Application to Exoskeleton Orthosis. In Proceedings of the 2013 IEEE Point-of-Care Healthcare Technologies (PHT), Bangalore, India, 16–18 January 2013; pp. 228–231.

- Manabe, H.; Hiraiwa, A.; Sugimura, T. Unvoiced speech recognition using EMG–mime speech recognition. In Proceedings of the CHI ’03 Extended Abstracts on Human Factors in Computing Systems, Ft. Lauderdale, FL, USA, 5–10 April 2003; pp. 794–795.

- Benedek, J.; Hazlett, R.L. Incorporating Facial EMG Emotion Measures as Feedback in the Software Design Process. Presented at the HCIC Consortium, Granby, CO, USA, 2–6 February 2005; pp. 1–9.

- Healey, J.; Picard, R.W. StartleCam: A cybernetic wearable camera. In Proceedings of the Digest of Papers. Second International Symposium on Wearable Computers (Cat. No.98EX215), Pittsburgh, PA, USA, 19–20 October 1998; pp. 42–49.

- Dubost, G.; Tanaka, A. A Wireless, Network-Based Biosensor Interface for Music. In Proceedings of the 2002 International Computer Music Conference (ICMC), Gothenburg, Sweden, 16–21 September 2002.

- Knapp, R.B.; Lusted, H.S. A Bioelectric Controller for Computer Music Applications. Comput. Music J. 1990, 14, 42–47.

- Tanaka, A.; Knapp, R.B. Multimodal Interaction in Music Using the Electromyogram and Relative Position Sensing. In A NIME Reader; Jensenius, A.R., Lyons, M.J., Eds.; Springer International Publishing: Cham, Switzerland, 2002; Volume 3, pp. 45–58.

- Fistre, J.; Tanaka, A. Real time EMG gesture recognition for consumer electronics device control. Presented at the Sony CSL Paris Open House, Paris, France, October 2002.

- Rosenberg, R. The biofeedback pointer: EMG control of a two dimensional pointer. In Proceedings of the Digest of Papers. Second International Symposium on Wearable Computers (Cat. No.98EX215), Pittsburgh, PA, USA, 19–20 October 1998; pp. 162–163.

- Wheeler, K.R.; Jorgensen, C.C. Gestures as input: Neuroelectric joysticks and keyboards. IEEE Pervasive Comput. 2003, 2, 56–61.

- Dhillon, H.S.; Singla, R.; Rekhi, N.S.; Jha, R. EOG and EMG based virtual keyboard: A brain-computer interface. In Proceedings of the 2009 2nd IEEE International Conference on Computer Science and Information Technology, Beijing, China, 8–11 August 2009; pp. 259–262.

- Saponas, T.S.; Tan, D.S.; Morris, D.; Balakrishnan, R.; Turner, J.; Landay, J.A. Enabling always-available input with muscle-computer interfaces. In Proceedings of the 22nd Annual ACM Symposium on User Interface Software and Technology, Victoria, BC, Canada, 4–7 October 2009; pp. 167–176.

- Tabor, A.; Bateman, S.; Scheme, E. Game-Based Myoelectric Training. In Proceedings of the 2016 Annual Symposium on Computer-Human Interaction in Play Companion Extended Abstracts, Austin, TX, USA, 16–19 October 2016; pp. 299–306.

- Boyali, A.; Hashimoto, N.; Matsumoto, O. Hand posture and gesture recognition using MYO armband and spectral collaborative representation based classification. In Proceedings of the 2015 IEEE 4th Global Conference on Consumer Electronics (GCCE), Osaka, Japan, 27–30 October 2015; pp. 200–201.

- Sathiyanarayanan, M.; Rajan, S. MYO Armband for physiotherapy healthcare: A case study using gesture recognition application. In Proceedings of the 2016 8th International Conference on Communication Systems and Networks (COMSNETS), Bangalore, India, 5–10 January 2016; pp. 1–6.

- Abreu, J.G.; Teixeira, J.M.; Figueiredo, L.S.; Teichrieb, V. Evaluating Sign Language Recognition Using the Myo Armband. In Proceedings of the 2016 XVIII Symposium on Virtual and Augmented Reality (SVR), Gramado, Brazil, 21–24 June 2016; pp. 64–70.

- Benalcázar, M.E.; Jaramillo, A.G.; Zea, J.A.; Páez, A.; Andaluz, V.H. Hand gesture recognition using machine learning and the Myo armband. In Proceedings of the 2017 25th European Signal Processing Conference (EUSIPCO), Kos, Greece, 28 August–2 September 2017; pp. 1040–1044.

- Benalcázar, M.E.; Motoche, C.; Zea, J.A.; Jaramillo, A.G.; Anchundia, C.E.; Zambrano, P.; Pérez, M. Real-time hand gesture recognition using the Myo armband and muscle activity detection. In Proceedings of the 2017 IEEE Second Ecuador Technical Chapters Meeting (ETCM), Salinas, Ecuador, 16–20 October 2017; pp. 1–6.

- Sikdar, S.; Rangwala, H.; Eastlake, E.B.; Hunt, I.A.; Nelson, A.J.; Devanathan, J.; Pancrazio, J.J. Novel Method for Predicting Dexterous Individual Finger Movements by Imaging Muscle Activity Using a Wearable Ultrasonic System. IEEE Trans. Neural Syst. Rehabil. Eng. 2014, 22, 69–76.

- Hodges, P.W.; Pengel, L.H.M.; Herbert, R.D.; Gandevia, S.C. Measurement of muscle contraction with ultrasound imaging. Muscle Nerve 2003, 27, 682–692.

- Zheng, Y.P.; Chan, M.M.F.; Shi, J.; Chen, X.; Huang, Q.H. Sonomyography: Monitoring morphological changes of forearm muscles in actions with the feasibility for the control of powered prosthesis. Med. Eng. Phys. 2006, 28, 405–415.

- Guo, J.-Y.; Zheng, Y.-P.; Huang, Q.-H.; Chen, X.; He, J.-F. Comparison of sonomyography and electromyography of forearm muscles in the guided wrist extension. In Proceedings of the 2008 5th International Summer School and Symposium on Medical Devices and Biosensors, Hong Kong, China, 1–3 June 2008; pp. 235–238.

- Chen, X.; Zheng, Y.P.; Guo, J.Y.; Shi, J. Sonomyography (SMG) Control for Powered Prosthetic Hand: A Study with Normal Subjects. Ultrasound Med. Biol. 2010, 36, 1076–1088.

- Mujibiya, A.; Cao, X.; Tan, D.S.; Morris, D.; Patel, S.N.; Rekimoto, J. The sound of touch: On-body touch and gesture sensing based on transdermal ultrasound propagation. In Proceedings of the 2013 ACM International Conference on Interactive Tabletops and Surfaces, St. Andrews, UK, 6 October 2013; pp. 189–198.

- Hettiarachchi, N.; Ju, Z.; Liu, H. A New Wearable Ultrasound Muscle Activity Sensing System for Dexterous Prosthetic Control. In Proceedings of the 2015 IEEE International Conference on Systems, Man, and Cybernetics, Kowloon, China, 9–12 October 2015; pp. 1415–1420.

- McIntosh, J.; Marzo, A.; Fraser, M.; Phillips, C. EchoFlex: Hand Gesture Recognition using Ultrasound Imaging. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems, Denver, CO, USA, 6–11 May 2017; pp. 1923–1934.

- Tahmoush, D.; Silvious, J. Radar micro-Doppler for long range front-view gait recognition. In Proceedings of the 2009 IEEE 3rd International Conference on Biometrics: Theory, Applications, and Systems, Washington, DC, USA, 28–30 September 2009; pp. 1–6.

- Tivive, F.H.C.; Bouzerdoum, A.; Amin, M.G. A human gait classification method based on radar Doppler spectrograms. EURASIP J. Adv. Signal. Process. 2010, 2010, 1–12.

- Biber, C.; Ellin, S.; Shenk, E.; Stempeck, J. The Polaroid Ultrasonic Ranging System. Presented at the Audio Engineering Society Convention 67, New York, NY, USA, 31 October–3 November 1980.

- Kalgaonkar, K.; Raj, B. One-handed gesture recognition using ultrasonic Doppler sonar. In Proceedings of the 2009 IEEE International Conference on Acoustics, Speech and Signal Processing, Taipei, Taiwan, 19–24 April 2009; pp. 1889–1892.

- Fléty, E. 3D Gesture Acquisition Using Ultrasonic Sensors. In Trends in Gestural Control of Music; Wanderley, M., Battier, M., Eds.; Institut de Recherche et Coordination Acoustique/Musique-Centre Pompidou: Paris, France, 2000; pp. 193–207.

- Kreczmer, B. Gestures recognition by using ultrasonic range-finders. In Proceedings of the 2011 16th International Conference on Methods and Models in Automation and Robotics, Miedzyzdroje, Poland, 22–25 August 2011; pp. 363–368.

- Gupta, S.; Morris, D.; Patel, S.; Tan, D. SoundWave: Using the Doppler effect to sense gestures. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Austin, TX, USA, 5–10 May 2012; pp. 1911–1914.

- Przybyla, R.J.; Shelton, S.E.; Guedes, A.; Krigel, R.; Horsley, D.A.; Boser, B.E. In-air ultrasonic rangefinding and angle estimation using an array of AlN micromachined transducers. In Proceedings of the 2012 Solid-State Sensors, Actuators, and Microsystems Workshop Technical Digest, Hilton Head, SC, USA, 3–7 June 2012; pp. 50–53.

- Przybyla, R.J.; Tang, H.-Y.; Shelton, S.E.; Horsley, D.A.; Boser, B.E. 3D ultrasonic gesture recognition. In Proceedings of the 2014 IEEE International Solid-State Circuits Conference Digest of Technical Papers (ISSCC), San Francisco, CA, USA, 9–13 February 2014; pp. 210–211.

- Booij, W.E.; Welle, K.O. Ultrasound Detectors. U.S. Patent 8792305B2, 29 July 2014.

- Saad, M.; Bleakley, C.J.; Nigram, V.; Kettle, P. Ultrasonic hand gesture recognition for mobile devices. J. Multimodal User Interfaces 2018, 12, 31–39.

- Sang, Y.; Shi, L.; Liu, Y. Micro Hand Gesture Recognition System Using Ultrasonic Active Sensing. IEEE Access 2018, 6, 49339–49347.

- Kellogg, B.; Talla, V.; Gollakota, S. Bringing Gesture Recognition to All Devices. Presented at the 11th USENIX Symposium on Networked Systems Design and Implementation (NSDI 14), Seattle, WA, USA, 2–4 April 2014; pp. 303–316.

- Adib, F.; Kabelac, Z.; Katabi, D.; Miller, R.C. 3D Tracking via Body Radio Reflections. Presented at the 11th USENIX Symposium on Networked Systems Design and Implementation (NSDI 14), Seattle, WA, USA, 2–4 April 2014; pp. 317–329.

- Pu, Q.; Gupta, S.; Gollakota, S.; Patel, S. Whole-home gesture recognition using wireless signals. In Proceedings of the 19th Annual International Conference on Mobile Computing & Networking, Miami, FL, USA, 30 September–4 October 2013; pp. 27–38.

- Adib, F.; Katabi, D. See through walls with Wi-Fi! In Proceedings of the ACM SIGCOMM 2013 Conference on SIGCOMM, Hong Kong, China, 12–16 August 2013; pp. 75–86.

- Wu, K.; Xiao, J.; Yi, Y.; Gao, M.; Ni, L.M. FILA: Fine-grained indoor localization. In Proceedings of the 2012 IEEE INFOCOM, Orlando, FL, USA, 25–30 March 2012; pp. 2210–2218.

- Abdelnasser, H.; Youssef, M.; Harras, K.A. WiGest: A ubiquitous Wi-Fi-based gesture recognition system. In Proceedings of the 2015 IEEE Conference on Computer Communications (INFOCOM), Hong Kong, China, 26 April–1 May 2015; pp. 1472–1480.

- Adib, F.; Kabelac, Z.; Katabi, D. Multi-Person Localization via RF Body Reflections. In Proceedings of the 12th USENIX Symposium on Networked Systems Design and Implementation, Oakland, CA, USA, 4–6 May 2015.

- Nandakumar, R.; Kellogg, B.; Gollakota, S. Wi-Fi Gesture Recognition on Existing Devices. arXiv 2014, arXiv:1411.5394. Available online: https://arxiv.org/abs/1411.5394 (accessed on 23 June 2020).

- He, W.; Wu, K.; Zou, Y.; Ming, Z. WiG: Wi-Fi-Based Gesture Recognition System. In Proceedings of the 2015 24th International Conference on Computer Communication and Networks (ICCCN), Kowloon, Hong Kong, 26 April–1 May 2015; pp. 1–7.

- Li, H.; Yang, W.; Wang, J.; Xu, Y.; Huang, L. WiFinger: Talk to your smart devices with finger-grained gesture. In Proceedings of the 2016 ACM International Joint Conference on Pervasive and Ubiquitous Computing, Heidelberg, Germany, 12–16 September 2016; pp. 250–261.

- Angeles, R. RFID Technologies: Supply-Chain Applications and Implementation Issues. Inf. Syst. Manag. 2006, 51–65.

- Borriello, G.; Brunette, W.; Hall, M.; Hartung, C.; Tangney, C. Reminding About Tagged Objects Using Passive RFIDs. In UbiComp 2004: Ubiquitous Computing; Springer: Berlin/Heidelberg, Germany, 2004; pp. 36–53.

- Want, R.; Fishkin, K.P.; Gujar, A.; Harrison, B.L. Bridging physical and virtual worlds with electronic tags. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Pittsburgh, PA, USA, 15–20 May 1999; pp. 370–377.

- Buettner, M.; Prasad, R.; Philipose, M.; Wetherall, D. Recognizing daily activities with RFID-based sensors. In Proceedings of the 11th International Conference on Ubiquitous Computing, Orlando, FL, USA, 30 September–3 October 2009; pp. 51–60.

- Fishkin, K.P.; Jiang, B.; Philipose, M.; Roy, S. I Sense a Disturbance in the Force: Unobtrusive Detection of Interactions with RFID-tagged Objects. In UbiComp 2004: Ubiquitous Computing; Springer: Berlin/Heidelberg, Germany, 2004; pp. 268–282.

- Yang, L.; Chen, Y.; Li, X.-Y.; Xiao, C.; Li, M.; Liu, Y. Tagoram: Real-time tracking of mobile RFID tags to high precision using COTS devices. In Proceedings of the 20th Annual International Conference on Mobile Computing and Networking, Maui, HI, USA, 7–11 September 2014; pp. 237–248.

- Asadzadeh, P.; Kulik, L.; Tanin, E. Real-time Gesture Recognition Using RFID Technology. J. Pers. Ubiquitous Comput. 2012, 16, 225–234.

- Bouchard, K.; Bouzouane, A.; Bouchard, B. Gesture recognition in smart home using passive RFID technology. In Proceedings of the 7th International Conference on PErvasive Technologies Related to Assistive Environments, Rhodes, Greece, 27–30 May 2014; pp. 1–8.

- Jayatilaka, A.; Ranasinghe, D.C. Real-time fluid intake gesture recognition based on batteryless UHF RFID technology. Pervasive Mob. Comput. 2017, 34, 146–156.

- Zou, Y.; Xiao, J.; Han, J.; Wu, K.; Li, Y.; Ni, L.M. GRfid: A Device-Free RFID-Based Gesture Recognition System. IEEE Trans. Mob. Comput. 2017, 16, 381–393.

- Trucco, E.; Verri, A. Introductory Techniques for 3-D Computer Vision, 1st ed.; Prentice Hall: Upper Saddle River, NJ, USA, 1998; pp. 178–194.

- Manteca, F. Generación de Modelos 3D Mediante luz Estructurada [Generation of 3D Models Using Structured Light]; Universidad de Cantabria: Cantabria, Spain, 2018.

- Park, J.; Kim, H.; Tai, Y.W.; Brown, M.S.; Kweon, I. High quality depth map upsampling for 3D-TOF cameras. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 1623–1630.

- Wu, C.; Aghajan, H. Model-based human posture estimation for gesture analysis in an opportunistic fusion smart camera network. In Proceedings of the 2007 IEEE Conference on Advanced Video and Signal Based Surveillance, London, UK, 5–7 September 2007; pp. 453–458.

- Gavrila, D.M.; Davis, L.S. Towards 3-d model-based tracking and recognition of human movement: A multi-view approach. In Proceedings of the International Workshop on Automatic Face- and Gesture-Recognition, Zurich, Switzerland, 26–28 June 1995; pp. 272–277.

- Cohen, I.; Li, H. Inference of human postures by classification of 3D human body shape. In Proceedings of the 2003 IEEE International SOI Conference, Proceedings (Cat. No.03CH37443), Nice, France, 17 October 2003; pp. 74–81.

- Mo, H.-C.; Leou, J.-J.; Lin, C.-S. Human Behavior Analysis Using Multiple 2D Features and Multicategory Support Vector Machine in MVA. In Proceedings of the MVA2009 IAPR Conference on Machine Vision Applications, Yokohama, Japan, 20–22 May 2009; pp. 46–49.

- Corradini, A.; Böhme, H.-J.; Gross, H.-M. Visual-based posture recognition using hybrid neural networks. Presented at the European Symposium on Artificial Neural Networks, Bruges, Belgium, 21–23 April 1999; pp. 81–86.

- Raptis, M.; Kirovski, D.; Hoppe, H. Real-time classification of dance gestures from skeleton animation. In Proceedings of the 2011 ACM SIGGRAPH/Eurographics Symposium on Computer Animation, Vancouver, BC, Canada, 5–7 August 2011; pp. 147–156.

- Pisharady, P.K.; Saerbeck, M. Recent methods and databases in vision-based hand gesture recognition: A review. Comput. Vis. Image Underst. 2015, 141, 152–165.

- Raheja, J.L.; Minhas, M.; Prashanth, D.; Shah, T.; Chaudhary, A. Robust gesture recognition using Kinect: A comparison between DTW and HMM. Optik 2015, 126, 1098–1104.

- Kinect: Desarrollo de Aplicaciones de Windows [Kinect: Windows Application Development]. Available online: https://developer.microsoft.com/es-es/windows/kinect (accessed on 18 January 2020).

- Digital Worlds that Feel Human | Ultraleap. Available online: https://www.ultraleap.com/ (accessed on 18 January 2020).

- Leap Motion (I): Características técnicas [Leap Motion (I): Technical Specifications]. Available online: https://www.showleap.com/2015/04/20/leap-motion-caracteristicas-tecnicas/ (accessed on 18 January 2020).

- MX25L3206E DATASHEET. Available online: https://docs.rs-online.com/5c85/0900766b814ac6f9.pdf (accessed on 23 June 2020).

- API Overview—Leap Motion JavaScript SDK v2.3 Documentation. Available online: https://developer-archive.leapmotion.com/documentation/v2/javascript/devguide/Leap_Overview.html (accessed on 18 January 2020).

- API Overview—Leap Motion Java SDK v3.2 Beta Documentation. Available online: https://developer-archive.leapmotion.com/documentation/java/devguide/Leap_Overview.html?proglang=java (accessed on 27 January 2020).

- Guna, J.; Jakus, G.; Pogačnik, M.; Tomažič, S.; Sodnik, J. An Analysis of the Precision and Reliability of the Leap Motion Sensor and Its Suitability for Static and Dynamic Tracking. Sensors 2014, 14, 3702–3720.

- Leap Motion (II): Principio de funcionamiento [Leap Motion (II): Operating Principle]. Available online: https://www.showleap.com/2015/05/04/leap-motion-ii-principio-de-funcionamiento/ (accessed on 18 January 2020).

- Montalvo Martínez, M. Técnicas de Visión Estereoscópica Para Determinar la Estructura Tridimensional de la Escena [Stereoscopic Vision Techniques for Determining the Three-Dimensional Structure of the Scene]. 2010. Available online: https://eprints.ucm.es/11350/ (accessed on 18 January 2020).

- Weichert, F.; Bachmann, D.; Rudak, B.; Fisseler, D. Analysis of the Accuracy and Robustness of the Leap Motion Controller. Sensors 2013, 13, 6380–6393.

- Smeragliuolo, A.H.; Hill, N.J.; Disla, L.; Putrino, D. Validation of the Leap Motion Controller using markered motion capture technology. J. Biomech. 2016, 49, 1742–1750.

- Marin, G.; Dominio, F.; Zanuttigh, P. Hand gesture recognition with leap motion and kinect devices. In Proceedings of the 2014 IEEE International Conference on Image Processing (ICIP), Paris, France, 27–30 October 2014; pp. 1565–1569.

- Noor, A.K.; Aras, R. Potential of multimodal and multiuser interaction with virtual holography. Adv. Eng. Softw. 2015, 81, 1–6.

- Zhang, Y.; Meruvia-Pastor, O. Operating Virtual Panels with Hand Gestures in Immersive VR Games. In Augmented Reality, Virtual Reality, and Computer Graphics; De Paolis, L., Bourdot, P., Mongelli, A., Eds.; Springer: Cham, Switzerland; pp. 299–308.

- Agarwal, C.; Dogra, D.P.; Saini, R.; Roy, P.P. Segmentation and recognition of text written in 3D using Leap motion interface. In Proceedings of the 2015 3rd IAPR Asian Conference on Pattern Recognition (ACPR), Kuala Lumpur, Malaysia, 3–6 November 2015; pp. 539–543.

- Iosa, M.; Morone, G.; Fusco, A.; Castagnoli, M.; Fusco, F.R.; Pratesi, L.; Paolucci, S. Leap motion controlled videogame-based therapy for rehabilitation of elderly patients with subacute stroke: A feasibility pilot study. Top. Stroke Rehabil. 2015, 22, 306–316.

- Mohandes, M.; Aliyu, S.; Deriche, M. Arabic sign language recognition using the leap motion controller. In Proceedings of the 2014 IEEE 23rd International Symposium on Industrial Electronics (ISIE), Istanbul, Turkey, 1–4 June 2014; pp. 960–965.

- Chen, Y.; Ding, Z.; Chen, Y.-L.; Wu, X. Rapid recognition of dynamic hand gestures using leap motion. In Proceedings of the 2015 IEEE International Conference on Information and Automation, Lijiang, China, 8–10 August 2015; pp. 1419–1424.

- McCartney, R.; Yuan, J.; Bischof, H.-P. Gesture Recognition with the Leap Motion Controller. In Proceedings of the 19th International Conference on Image Processing, Computer Vision, & Pattern Recognition: IPCV’15, Las Vegas, NV, USA, 27–30 July 2015.

- Lu, W.; Tong, Z.; Chu, J. Dynamic Hand Gesture Recognition with Leap Motion Controller. IEEE Signal. Process. Lett. 2016, 23, 1188–1192.

- Bassily, D.; Georgoulas, C.; Guettler, J.; Linner, T.; Bock, T. Intuitive and Adaptive Robotic Arm Manipulation using the Leap Motion Controller. In Proceedings of the ISR/Robotik 2014, 41st International Symposium on Robotics, München, Germany, 2–3 June 2014; pp. 1–7.

- Li, W.-J.; Hsieh, C.-Y.; Lin, L.-F.; Chu, W.-C. Hand gesture recognition for post-stroke rehabilitation using leap motion. In Proceedings of the 2017 International Conference on Applied System Innovation (ICASI), Sapporo, Japan, 13–17 May 2017; pp. 386–388.

- Gándara, C.V.; Bauza, C.G. IntelliHome: A framework for the development of ambient assisted living applications based in low-cost technology. In Proceedings of the Latin American Conference on Human Computer Interaction, Córdoba, Argentina, 18–21 November 2015; pp. 1–4.

- Chan, A.; Halevi, T.; Memon, N. Leap Motion Controller for Authentication via Hand Geometry and Gestures. Presented at the International Conference on Human Aspects of Information Security, Privacy, and Trust, Los Angeles, CA, USA, 2–7 August 2015; pp. 13–22.

- Hantrakul, L.; Kaczmarek, K. Implementations of the Leap Motion device in sound synthesis and interactive live performance. In Proceedings of the 2014 International Workshop on Movement and Computing, Paris, France, 16–17 June 2014; pp. 142–145.

- Ling, H.; Rui, L. VR glasses and leap motion trends in education. In Proceedings of the 2016 11th International Conference on Computer Science & Education (ICCSE), Nagoya, Japan, 23–25 August 2016; pp. 917–920.

- Cichon, T.; Rossmann, J. Simulation-Based User Interfaces for Digital Twins: Pre-, in-, or Post-Operational Analysis and Exploration of Virtual Testbeds; Institute for Man-Machine Interaction, RWTH Aachen University: Aachen, Germany, 2017; p. 6.

- Vosinakis, S.; Koutsabasis, P. Evaluation of visual feedback techniques for virtual grasping with bare hands using Leap Motion and Oculus Rift. Virtual Real. 2018, 22, 47–62.

- Jetter, H.-C.; Rädle, R.; Feuchtner, T.; Anthes, C.; Friedl, J.; Klokmose, C. In VR, everything is possible!: Sketching and Simulating Spatially-Aware Interactive Spaces in Virtual Reality. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020.

- HP Reverb G2 VR Headset. Available online: https://www8.hp.com/us/en/vr/reverb-g2-vr-headset.html (accessed on 13 June 2020).

- Oculus Rift | Oculus. Available online: https://www.oculus.com/rift/?locale=es_LA#oui-csl-rift-games=mages-tale (accessed on 13 June 2020).

- Virtual Reality | HTC España. Available online: https://www.htc.com/es/virtual-reality/ (accessed on 13 June 2020).

- Mejora tu Experiencia-Valve Corporation [Improve Your Experience, Valve Corporation]. Available online: https://www.valvesoftware.com/es/index (accessed on 13 June 2020).

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Galván-Ruiz, J.; Travieso-González, C.M.; Tejera-Fettmilch, A.; Pinan-Roescher, A.; Esteban-Hernández, L.; Domínguez-Quintana, L. Perspective and Evolution of Gesture Recognition for Sign Language: A Review. Sensors 2020, 20, 3571. https://doi.org/10.3390/s20123571
