Abstract

The performance of camera-based polarization sensors largely depends on the estimated model parameters obtained through calibration. Limited by manufacturing processes, the low extinction ratio and inconsistency of the polarizer can reduce the measurement accuracy of the sensor. To account for the challenges, one extinction ratio coefficient was introduced into the calibration model to unify the light intensity of two orthogonal channels. Since the introduced extinction ratio coefficient is associated with degree of polarization (DOP), a new calibration method considering both azimuth of polarization (AOP) error and DOP error for the bionic camera-based polarization sensor was proposed to improve the accuracy of the calibration model parameter estimation. To evaluate the performance of the proposed camera-based polarization calibration model using the new calibration method, both indoor and outdoor calibration experiments were carried out. It was found that the new calibration method for the proposed calibration model could achieve desirable performance in terms of stability and robustness of the calculated AOP and DOP values.

1. Introduction

Many studies have shown that insects such as desert ants and butterflies are able to determine their heading orientation from skylight polarization patterns [1,2,3,4,5]. Inspired by the polarization navigation strategy of these insects, bioinspired polarization navigation methods have attracted much attention in the field of autonomous navigation. Unlike traditional navigation methods, including the Inertial Navigation System (INS) and Global Navigation Satellite System (GNSS), the bioinspired polarization navigation method does not suffer from accumulation errors and electromagnetic interference [6,7]. It has been widely studied in the fields of robotics, UAVs and other unmanned systems [8,9].

Inspired by the polarization vision mechanism of insects, a variety of polarization sensors have been developed. In general, polarization sensors can be divided into two types: the polarization-sensitive type (POL-type) sensor and the camera-based polarization sensor. The POL-type sensor simulates the signal processing of the polarization opponent neurons in the ommatidia of insects [10,11,12,13]. Although the POL-type sensor is able to generate real-time navigation measurements with less computational complexity, it can be severely affected by cloudy sky, tree occlusion, and other complex outdoor sky conditions [14,15,16]. In contrast, the camera-based polarization sensor can measure the polarization pattern in multiple directions and achieve satisfactory accuracy in partly cloudy sky conditions [17,18]. In the last decades, many camera-based polarization sensors have been developed for different applications. For instance, Mukul et al. presented a polarization navigation method using the Stokes parameters to determine the orientation. The sensor used a metallic wire grid micro-polarizer to acquire position information [19]. Powel et al. developed a fully polarized sensing array to obtain the all-sky atmospheric polarization pattern. A calibration method based on the polarimeter behavior was established. To account for the errors caused by the fabrication of the imaging array nanowires, a corresponding calibration method was proposed. This method has been demonstrated to significantly reduce the root mean square error (RMSE) of the degree of polarization (DOP) with each super-pixel calibrated as a unit [20,21]. Wolfgang et al. presented a lightweight polarization sensor consisting of four synchronized cameras, which could determine the solar elevation angle for UAVs even in the presence of clouds [22,23]. Wang et al. designed a real-time bionic camera-based polarization navigation sensor with two working modes: a single-point measurement mode and a multi-point measurement mode. The azimuth of polarization (AOP) accuracy of the sensor reaches 0.3256° [24,25]. Sun et al. proposed three improved imaging models and analyzed the different degrees of polarization pattern distortion of these imaging models [26]. Zhao et al. proposed a polarization imaging algorithm containing a pattern recognition algorithm and an orientation measurement algorithm to reduce the computational load. The proposed imaging algorithm was verified with simulations and experiments for use in embedded systems with low computational load [27]. Hu et al. designed and tested a bionic camera-based polarization navigation sensor. The standard deviation of the AOP calibrated indoors is 0.0516° [28]. In 2017, they designed a new microarray camera-based polarization compass, which was calibrated using an iterative least squares method. The standard deviation of the calibrated AOP is 0.06° under indoor conditions [29]. Aycock et al. designed an image-based sky AOP sensing system that could be used for high-precision navigation in GPS-challenged environments [30,31].

The aforementioned camera-based polarization sensors can all provide heading information using the AOP and DOP measurements without GNSS. However, their AOP and DOP calculations do not take into account the extinction ratio inconsistency of the polarizer, which can degrade the consistency of the light intensity of the two orthogonal channels and reduce the accuracy and stability of the measured AOP and DOP. In addition, the existing calibration models for camera-based polarization sensors consider only the AOP error when estimating the model parameters, without considering the effect of the DOP error on the accuracy of the calibration model [24,28,29,32,33].

In this study, to account for the extinction ratio inconsistency and enhance the calibration performance of the camera-based polarization sensor, one extinction ratio coefficient is introduced into the polarization calibration model. Then, a calibration method considering both AOP and DOP errors is proposed to improve the accuracy of the calibration model. The main technical features can be summarized as follows:

- (1) The influence of the extinction ratio inconsistency between orthogonal polarizers on the sensor was quantitatively analyzed. Accordingly, a new camera-based polarization sensor model based on the extinction ratio coefficient was established, so that the light intensity of the two orthogonal channels could be unified with the aid of the extinction ratio coefficient. Unlike the existing polarization sensor models [26,28,29], the model with the extinction ratio parameter is treated as a fine structure model. Moreover, the effect of the extinction ratio error on the sensor was carefully analyzed.

- (2) A new calibration method integrating both the AOP and DOP errors is proposed. With the addition of the DOP error, the estimation accuracy of the sensor calibration model parameters was further improved, and the stability and robustness of the AOP and DOP could be improved simultaneously.

The remainder of this study is organized as follows. In Section 2, the bionic camera-based polarization sensor structure and the corresponding AOP and DOP calculation are reviewed. In Section 3, the polarization calibration model with the extinction ratio coefficient and the calibration method based on both AOP and DOP error are described. To evaluate the calibration performance with the proposed calibration method, the results of both indoor and outdoor calibration experiments are analyzed in Section 4. Finally, concluding remarks are presented.

2. Bionic Camera-Based Polarization Sensor

2.1. Polarization Sensor Structure

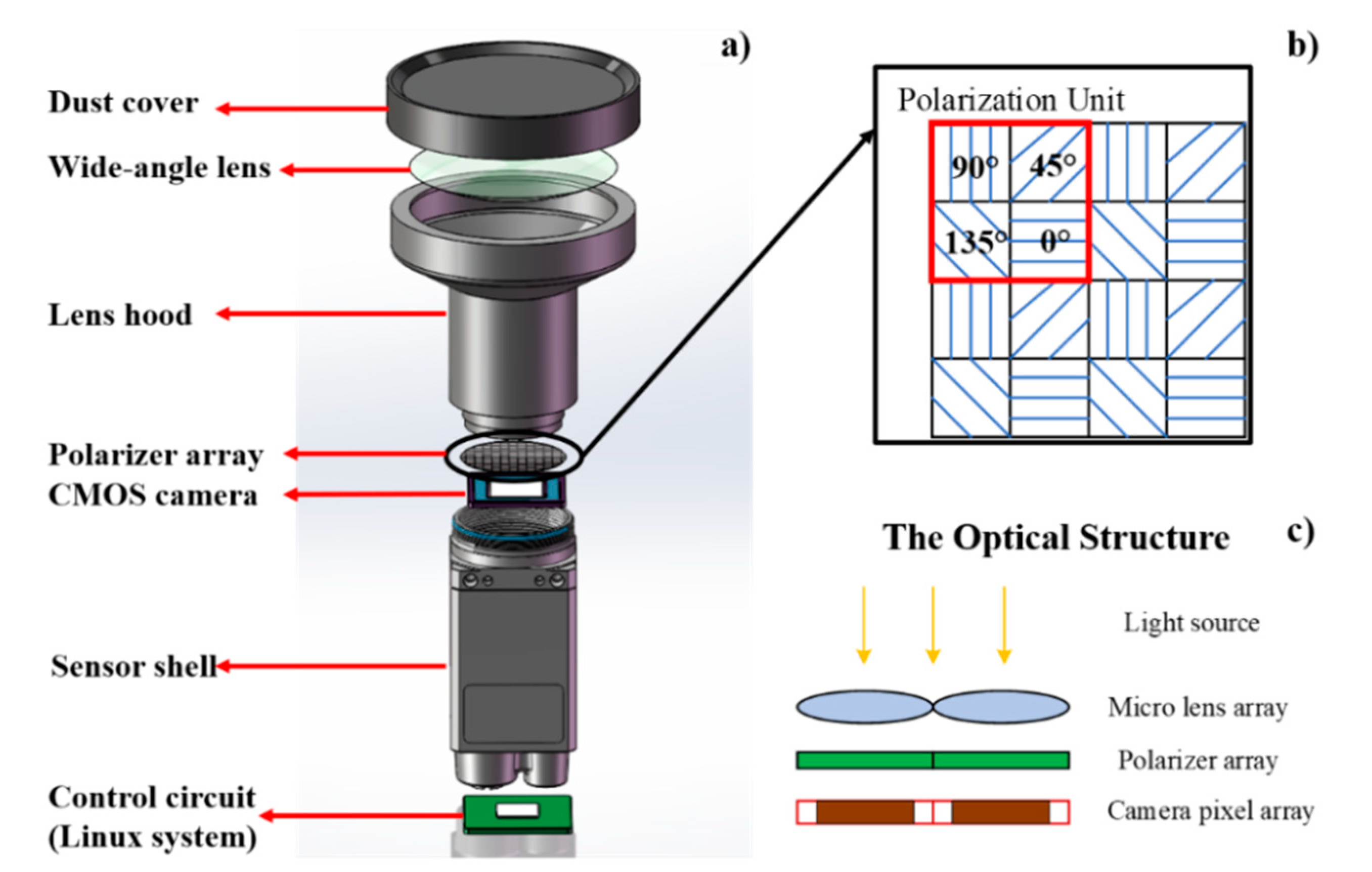

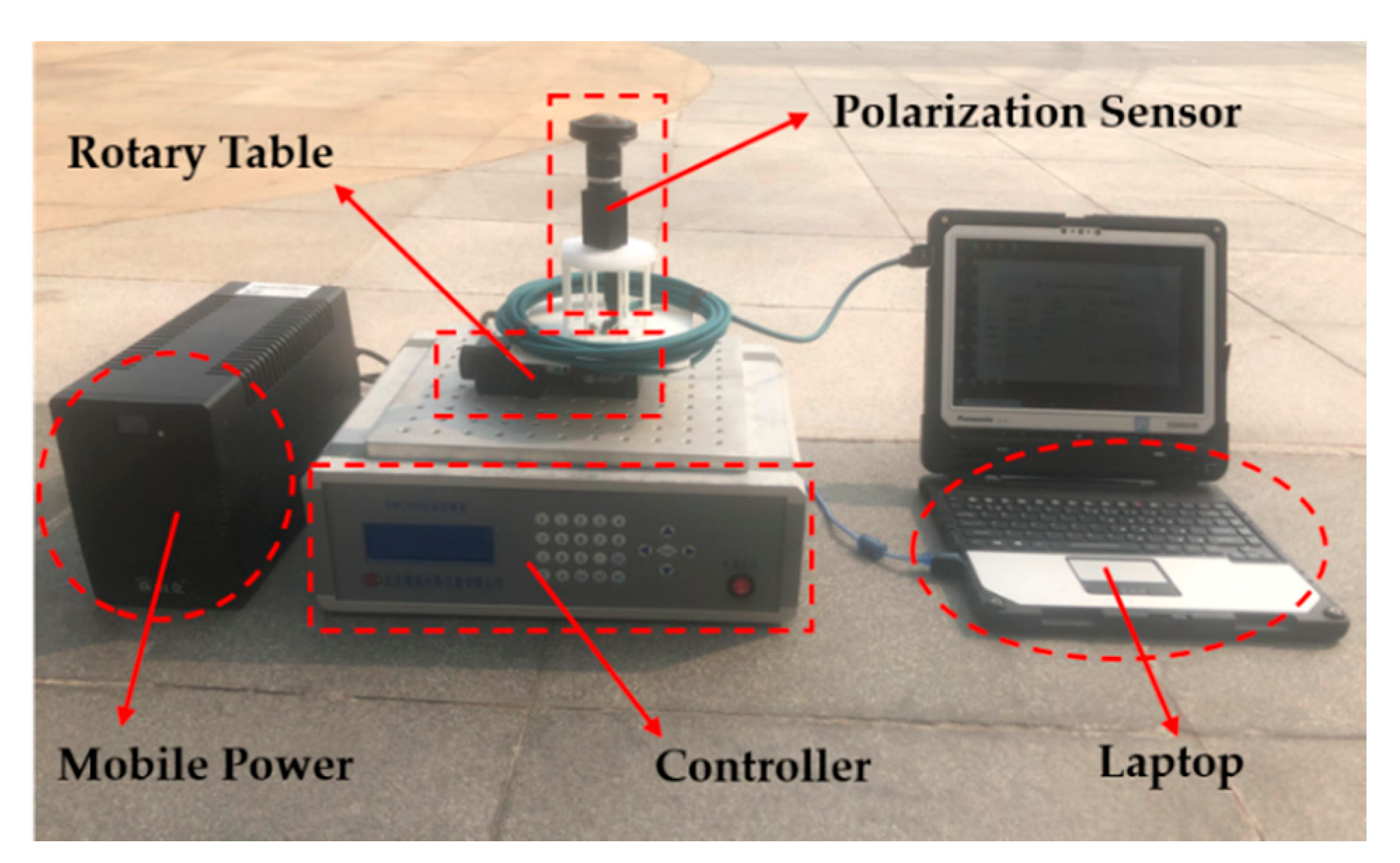

In this study, the camera-based polarization sensor designed by Space Intelligent Autonomous Systems Research Center in Beihang University is used. It consists of one lens layer, one polarization optical information acquisition layer and one photosensitive circuit layer, as shown in Figure 1.

Figure 1.

Bionic polarized-sensor structure diagram. (a) Bionic polarization sensor hardware component; (b) one example of the polarization unit with different installation angle; (c) the optical structure of the sensor.

- (1) The lens layer is used to sense the polarization pattern with a wide field of view. The FE185C057HA-1 wide-angle lens is adopted. Its focal length is 1.8 mm, and its field of view is about 185° × 185°.

- (2) The polarization optical information acquisition layer is used to detect the optical characteristics of polarized skylight. A pixel-level polarization complementary metal oxide semiconductor (CMOS) camera (Sony IMX250MZR) is employed, as shown in Figure 1a. The polarization camera includes a CMOS sensor in which a 2 × 2 matrix of polarizers is placed in front of every 2 × 2 block of photosensors. The array polarizer contains 2048 × 2448 pixel channels to measure the polarized skylight, and the size of each pixel channel is 3.45 μm × 3.45 μm. As shown in Figure 1b, four adjacent pixel channels constitute a polarization unit, and the polarizer installation directions of the corresponding channels are 0°, 45°, 90° and 135°, respectively. The CMOS camera acquires the polarized skylight intensity information.

- (3) The control and processing circuit layer ensures real-time acquisition and processing of the polarized images obtained by the CMOS camera. It consists of a control module, a communication module and a memory module. In the control module, a Linux system processes the polarized skylight intensity images to obtain the polarization information. The sun vector information is then obtained from the perpendicular relationship between the sun vector and the polarization vector, and is stored in the memory module.

2.2. Polarization Calculation

According to Malus's law, the light-intensity response value of the pixel channels in the same polarization unit can be expressed as [34]:

$$I_i = \frac{1}{2} I \left[ 1 + d \cos\left( 2\varphi - 2\alpha_i \right) \right] \tag{1}$$

where $i$ denotes the i-th pixel channel of one polarization unit; $I_i$ is the output light intensity through the polarizer; $I$ is the intensity of the incident light; $d$ is the DOP of the incident light; $\varphi$ is the angle between the polarization direction of the incident light and the reference direction; and $\alpha_i$ is the angle between the polarizer installation direction and the reference direction of each pixel channel in a polarization unit.

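According to the light-intensity response value derived from each pixel channel, the AOP $\varphi$ and the DOP $d$ can be calculated using the Stokes parameters, as follows [29,34]:

$$\varphi = \frac{1}{2} \arctan\left( \frac{S_2}{S_1} \right), \qquad d = \frac{\sqrt{S_1^2 + S_2^2}}{S_0} \tag{2}$$

where $S_0$, $S_1$ and $S_2$ are components of the incident light Stokes vector.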
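The calculation above can be sketched for a single polarization unit as follows. This is a minimal illustration, not the sensor's actual processing code: the function names are hypothetical, the Stokes components are formed with the usual four-channel convention (sum of all channels halved, 0°/90° difference, 45°/135° difference), and `atan2` is used in place of a plain arctangent so that the quadrant is resolved.

```python
import math

def stokes_from_unit(i0, i45, i90, i135):
    """Stokes components from the four channel intensities of one unit."""
    s0 = 0.5 * (i0 + i45 + i90 + i135)  # total intensity
    s1 = i0 - i90                       # 0/90 degree difference
    s2 = i45 - i135                     # 45/135 degree difference
    return s0, s1, s2

def aop_dop(i0, i45, i90, i135):
    """Return (AOP in degrees within (-90, 90], DOP) for one polarization unit."""
    s0, s1, s2 = stokes_from_unit(i0, i45, i90, i135)
    aop = 0.5 * math.degrees(math.atan2(s2, s1))  # atan2 resolves the quadrant
    dop = math.hypot(s1, s2) / s0
    return aop, dop

def malus(intensity, dop, aop_deg, polarizer_deg):
    """Ideal channel response (Malus's law), used here only to make test data."""
    return 0.5 * intensity * (1.0 + dop * math.cos(math.radians(2 * (aop_deg - polarizer_deg))))

# Synthetic unit: incident intensity 200, DOP 0.6, AOP 30 degrees (made-up values).
channels = [malus(200.0, 0.6, 30.0, a) for a in (0, 45, 90, 135)]
aop, dop = aop_dop(*channels)
print(aop, dop)
```

For ideal channels generated from Malus's law, the recovered AOP and DOP match the values used to generate them up to floating-point rounding.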

3. Camera-Based Polarization Sensor Calibration

The camera-based polarization sensor calibration includes the establishment of calibration model and the improvement of calibration method. First, the calibration model of the sensor is improved for extinction ratio and other error sources. Then, the calibration model coefficients are estimated by improving the existing calibration method.

3.1. Sensor Model of Camera-Based Polarization Sensor

As an important parameter of polarization sensor, extinction ratio directly affects the accuracy and stability of the obtained polarization information. In addition, the performance of the sensor is also limited by the structure error of polarizer, the photographic error of camera, the environmental error, etc. To account for these errors, a new calibration model, including the array polarizer model and the CMOS camera photosensitivity model, is established by using different error parameters.

3.1.1. Array Polarizer Model with Extinction Ratio

As a key component of the camera-based polarization sensor, the polarizer is adopted to convert natural light to polarized light for heading determination. However, in the manufacturing process, the extinction ratio of the microarray polarizer is limited by the fine grid design [35,36,37]. The low extinction ratio can affect the accuracy of the polarization sensor. In the sensor used here, the extinction ratio of the array polarizer is about 24 dB (approximately 250:1). Therefore, the measurement model of the polarization sensor needs to be calibrated with high precision according to the extinction ratio [37]. Moreover, a sensor calibration model with the extinction ratio is proposed to adapt to the variation of measurement information caused by extinction ratio attenuation and inconsistency.
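The correspondence between the decibel figure and the linear ratio quoted above can be checked with a short calculation. The sketch assumes the decibel value is defined on intensity, i.e., as ten times the base-10 logarithm of the ratio; the function names are illustrative only.

```python
import math

def db_to_ratio(er_db):
    """Convert an extinction ratio in decibels to a linear intensity ratio."""
    return 10.0 ** (er_db / 10.0)

def ratio_to_db(er_ratio):
    """Convert a linear intensity ratio back to decibels."""
    return 10.0 * math.log10(er_ratio)

print(db_to_ratio(24.0))   # close to the 250:1 quoted in the text
print(ratio_to_db(250.0))  # close to 24 dB
```

Under this definition, 24 dB corresponds to a ratio of roughly 251:1, which agrees with the approximate 250:1 figure.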

The effect of extinction ratio is analyzed using a single pixel channel. According to the literature [34,38,39,40,41], the extinction ratio of polarizer can be expressed as follows:

where $ER$ represents the extinction ratio of the polarization sensor, which is a constant [35,37]; and $I_{\max}$ and $I_{\min}$ are the maximum and minimum values of the sine curve of the light-intensity response, respectively.

According to Equation (1), $I_{\max}$ and $I_{\min}$ can also be expressed as:

According to Equations (3) and (4), the relationship between ER of the polarizer and DOP of the incident light can be obtained:
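A worked version of this step is given here under two assumptions that the text does not confirm: the extinction ratio is taken as the ratio of the maximum to the minimum response, and $I$ and $d$ denote the incident intensity and the DOP in the Malus response $I_i = \tfrac{1}{2} I \left[ 1 + d \cos(2\varphi - 2\alpha_i) \right]$. Since the cosine term ranges over $[-1, 1]$, the extremes and their ratio would then be:

$$
\begin{aligned}
I_{\max} &= \tfrac{1}{2} I \,(1 + d), \qquad I_{\min} = \tfrac{1}{2} I \,(1 - d), \\
ER &= \frac{I_{\max}}{I_{\min}} = \frac{1 + d}{1 - d}
\quad\Longleftrightarrow\quad
d = \frac{ER - 1}{ER + 1}.
\end{aligned}
$$

Under this reading, an extinction ratio of about 250 gives $d \approx 249/251 \approx 0.992$, so a finite extinction ratio bounds the modulation depth that a channel can register slightly below 1.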

In order to ensure that the calculated DOP value is the same for every polarization unit with the same incident light, the extinction ratio coefficient can be introduced:

The polarization sensor calibration model can then be changed to:

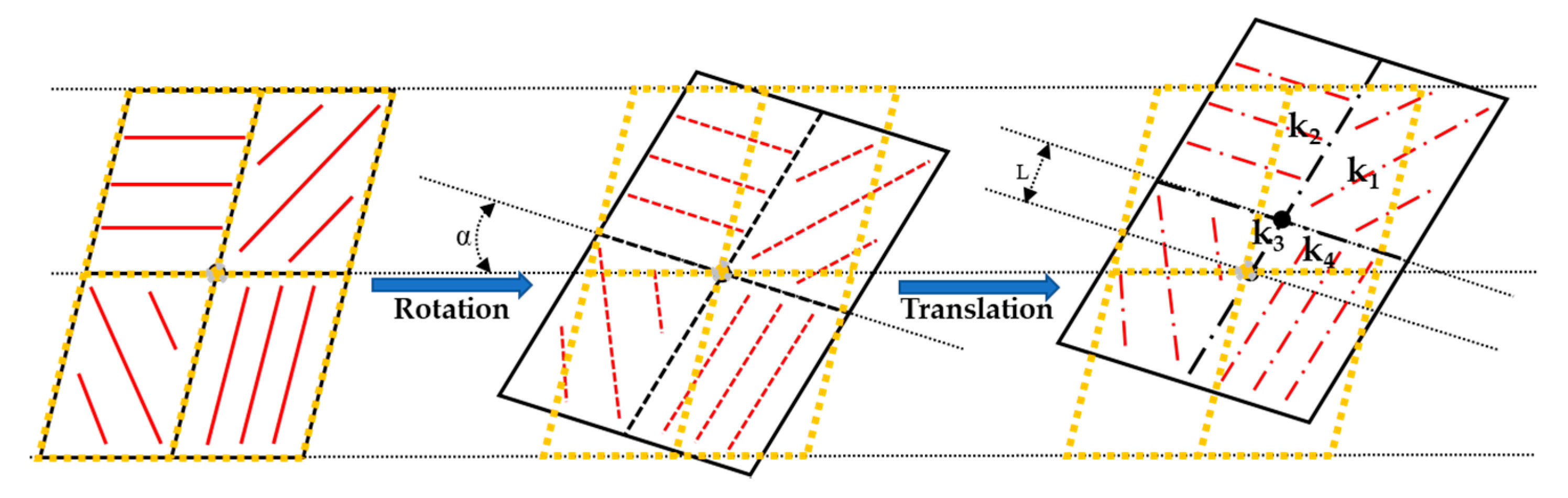

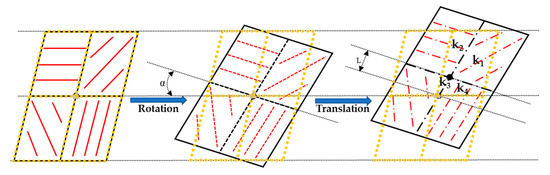

Besides the ER inconsistency of polarizer, the installation misalignment between the array polarizer and the CMOS camera photosensor also affects the accuracy of obtained polarization information [28,29]. It mainly includes the rotation and translation installation misalignments, which could result in optical path information coupling, as shown in Figure 2. The black and yellow 2 × 2 matrices represent the array polarizers and photosensors of CMOS camera, respectively.

Figure 2.

The polarizer installation misalignment of one polarization unit.

Due to the rotation and translation misalignments, the light intensity acquired by every pixel channel of the CMOS camera no longer comes from a single channel, but is the coupled light intensity from the four channels. Suppose that each of the four pixel channels of one polarization unit has its own intensity and a corresponding intensity distribution coefficient. Then, the output light intensity of the polarization response perceived by every pixel channel combines the four channel intensities according to these distribution coefficients.

In this model, the ideal installation angle directions of the four polarizers are set to 0°, 45°, 90° and 135°, respectively; every pixel channel of the polarization unit has an installation angle error; and one parameter is introduced to account for the optical path coupling and ER inconsistency, which is calculated from the optical path coupling coefficient and the extinction ratio coefficient.

Furthermore, the attenuation effects of the different polarization channels on the incident polarized light are considered in the array polarizer model. The model therefore includes an attenuation parameter for the incident light intensity of the i-th pixel channel, the installation angle with error (the ideal installation angle combined with its error), and the Gaussian noise of measurement.

3.1.2. CMOS Camera Photosensitivity Model

A CMOS camera below the array polarizer is used to collect light-intensity information. Due to differences between photodiodes and the thermal effect of the circuit, the photosensitivity of every pixel channel on the CMOS camera is inconsistent, which could decrease the measurement accuracy of the polarization sensor [28,29]. To account for the photosensitivity error, the mapping relationship between the incident light intensity and the CMOS camera response light intensity can be modeled as:

where the model is written for the i-th pixel channel of the CMOS camera and relates the light-intensity response value of that channel to the output light-intensity value after passing through the i-th polarizer by means of a proportionality coefficient and a bias coefficient. This model is referred to as the CMOS camera photosensitivity model in this study.

3.2. Calibration Method

The parameters of the bionic polarization sensor calibration models, including the CMOS camera photosensitivity model parameters and the array polarizer model parameters, are calibrated in two steps. The first step (Step 1) is to calibrate the CMOS camera photosensitivity model, and the second step (Step 2) is to calibrate the array polarizer model. Both AOP and DOP errors are taken into account in the calibration method described below.

3.2.1. The CMOS Camera Photosensitivity Model Calibration

Using the uniform unpolarized light source of the integrating sphere, light-intensity response images under different incident light intensity are collected by the CMOS camera. According to Equation (10), the light-intensity responses of all pixel channels of the CMOS camera can be expressed as:

where the expression relates the CMOS camera light-intensity response matrix to the incident light-intensity matrix through the calibration coefficient vector, for a given number of incident light-intensity levels.

The coefficients of the CMOS camera photosensitivity model can be estimated based on the linear least square estimation algorithm as:
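For a single pixel channel, a linear least squares estimate of this kind reduces to ordinary line fitting. The sketch below assumes that the camera response is modeled as gain × incident intensity + bias (the direction of the mapping is an assumption here), and all function names and numeric values are hypothetical; the intensity levels merely mirror the 20 to 680 lux range used in the indoor experiment of Section 4.2.1.

```python
def fit_gain_bias(incident, response):
    """Least-squares fit of response = gain * incident + bias for one pixel channel."""
    n = len(incident)
    if n < 2 or n != len(response):
        raise ValueError("need at least two paired samples")
    mean_x = sum(incident) / n
    mean_y = sum(response) / n
    sxx = sum((x - mean_x) ** 2 for x in incident)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(incident, response))
    gain = sxy / sxx
    bias = mean_y - gain * mean_x
    return gain, bias

def correct(response_value, gain, bias):
    """Invert the fitted model to recover the incident intensity."""
    return (response_value - bias) / gain

# Hypothetical data: 23 intensity levels from 20 to 680 lux in 30 lux steps.
levels = [20 + 30 * k for k in range(23)]
readings = [0.35 * x + 4.0 for x in levels]  # made-up gain and bias
gain, bias = fit_gain_bias(levels, readings)
print(gain, bias)
```

Applying `correct` with each channel's own gain and bias brings the channels onto a common intensity scale before the polarizer model is calibrated.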

3.2.2. The Array Polarizer Model Calibration

To calibrate the array polarizer model, the bionic polarization sensor is fixed on a high-precision rotary platform, and a polarized light source from the integrating sphere is used. The reference direction is defined as the installation direction of the polarizer 0° channel in the initial state of the turntable. The rotation angle of the rotary platform relative to the reference direction is used as a benchmark to calibrate the array polarizer model. For every polarization unit of polarizers, the polarization response can be written as:

where the light-intensity vector of the polarization response is expressed through the calibration coefficient matrix of the array polarizer model and the vector describing the polarization of the incident light.

Assuming that multiple groups of polarization response images are obtained, the polarization information vector group and intensity vector group of the n-th polarization unit are expressed as:

The initial calibration coefficient matrix of the array polarizer model is estimated based on the linear least squares algorithm:

The initial calibration coefficients of the polarizer model can then be calculated from the components of this matrix as follows:
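One way to picture the initial linear estimate is a per-channel harmonic fit, sketched below. It is not the matrix formulation used in this study: it assumes that one channel's response to the rotation angle has the form a + b·cos(2t) + c·sin(2t), which is the expansion of a Malus-type response, and that the rotation angle equals the polarization angle of the incident light relative to the reference direction. With angles uniformly spaced over whole turns, the least squares solution reduces to the averages used here. All names and test values are hypothetical.

```python
import math

def fit_channel(angles_deg, intensities):
    """Fit I = a + b*cos(2t) + c*sin(2t) by least squares.

    Valid as written only for angles uniformly spaced over whole turns,
    where the three basis functions are mutually orthogonal.
    """
    n = len(angles_deg)
    a = sum(intensities) / n
    b = 2.0 / n * sum(v * math.cos(2 * math.radians(t)) for t, v in zip(angles_deg, intensities))
    c = 2.0 / n * sum(v * math.sin(2 * math.radians(t)) for t, v in zip(angles_deg, intensities))
    return a, b, c

def channel_parameters(a, b, c):
    """Convert (a, b, c) to mean level, modulation depth, and polarizer angle in degrees."""
    depth = math.hypot(b, c) / a
    angle = 0.5 * math.degrees(math.atan2(c, b)) % 180.0
    return a, depth, angle

# Synthetic channel: mean level 100, modulation depth 0.9, polarizer at 46.5 degrees.
angles = [3 * k for k in range(120)]  # 0 to 357 degrees in 3 degree steps
samples = [100.0 * (1 + 0.9 * math.cos(math.radians(2 * (t - 46.5)))) for t in angles]
print(channel_parameters(*fit_channel(angles, samples)))
```

The recovered polarizer angle differs from the nominal 45° by the installation error built into the synthetic data, and the modulation depth below 1 plays the role of a combined attenuation of the channel's polarization response.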

Inspired by the calibration method used for the POL-type polarization sensor considering both AOP and DOP errors [8], a least-squares iterative calibration algorithm also taking into account these two errors is proposed for the bionic camera-based polarization sensor, to account for the extinction ratio inconsistency that is associated with measurement accuracy of DOP. Compared with the existing calibration method based on AOP [6,11,28,29], the innovation of this calibration method is to establish the relationship between extinction ratio and DOP error.

The calibration parameters vector is defined as:

The AOP and DOP errors can be expressed as:

where the errors are formed from the reference values of the AOP and DOP and the corresponding estimated values calculated based on Equation (2).

An objective function in the form of the squared Euclidean norm of the error is established as follows:

To solve this objective function, the secant Levenberg–Marquardt (LM) least square algorithm is used to obtain the optimized estimation [42,43]. The initial value of the calibration parameters can be obtained based on Equation (15).
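The shape of such an objective can be sketched as below. This is an illustration under stated assumptions rather than the objective actually used: the equal default weighting of the two error terms, the use of radians for the AOP error, and the wrapping of the AOP difference are all choices made here, and the function names are hypothetical. In a full calibration, an LM solver would repeatedly recompute the estimated AOP and DOP from the model with trial parameters and minimize this quantity.

```python
import math

def wrap_aop_error(err_deg):
    """Wrap an AOP difference into [-90, 90), since the AOP is 180-degree periodic."""
    return (err_deg + 90.0) % 180.0 - 90.0

def objective(aop_ref, dop_ref, aop_est, dop_est, dop_weight=1.0):
    """Sum of squared AOP errors (radians) and weighted DOP errors."""
    total = 0.0
    for a_r, d_r, a_e, d_e in zip(aop_ref, dop_ref, aop_est, dop_est):
        e_aop = math.radians(wrap_aop_error(a_e - a_r))
        e_dop = dop_weight * (d_e - d_r)
        total += e_aop ** 2 + e_dop ** 2
    return total

# Made-up reference and estimated values for three rotation steps (degrees, unitless DOP).
print(objective([0.0, 3.0, 6.0], [0.9, 0.9, 0.9], [0.1, 2.9, 6.2], [0.88, 0.91, 0.9]))
```

Including the DOP term means that parameters which leave the AOP nearly unchanged but distort the DOP, such as a mismatch in the extinction ratio coefficient, still raise the cost.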

4. Experiment Results and Analysis

In this section, one simulation experiment was carried out to first analyze the influence of the extinction ratio on calibration model. In addition, both indoor and outdoor calibration experiments were investigated to validate the effectiveness of the proposed calibration model and calibration method.

4.1. Simulation Experiment for Extinction Ratio Analysis

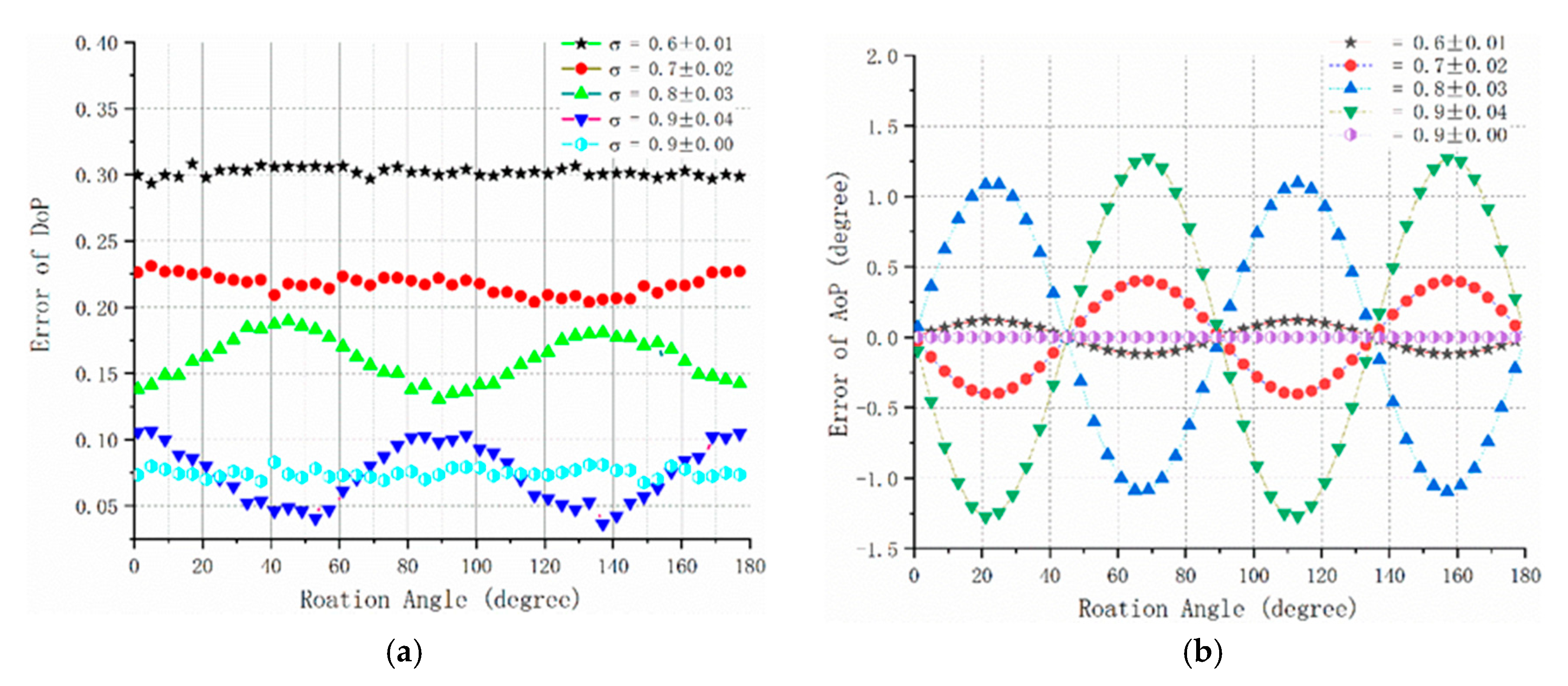

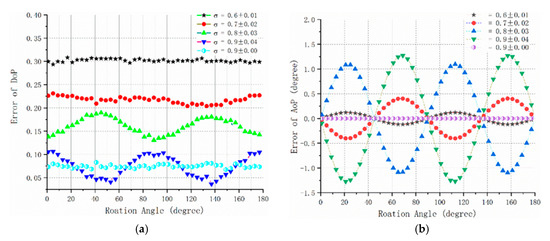

The extinction ratio coefficient used in the simulation experiment was set to 0.6, 0.7, 0.8 and 0.9, respectively. In theory, the greater the extinction ratio coefficient, the better the extinction characteristics of the polarizer (when the extinction ratio coefficient is 1, the extinction ratio of the polarizer takes the ideal value 1/∞). To better illustrate the effect of the ER inconsistency of the pixel channels on the compass precision, random errors were added to the different extinction ratio coefficients. The magnitudes of the added random errors were ±0.01, ±0.02, ±0.03, ±0.04 and ±0.00, respectively. The simulation results are shown in Figure 3.

Figure 3.

Effect of extinction ratio coefficient on the sensor simulation diagram. (a) degree of polarization (DOP) error; (b) azimuth of polarization (AOP) error.

As can be seen from the simulation results, the accuracy of DOP is positively correlated with the extinction ratio of the polarizer. When the extinction ratio coefficient decreases from 0.9 to 0.8, the mean error of DOP increases from 7.5% to 15%. Moreover, the inconsistency of ER causes the polarization error to fluctuate: the standard deviations of both the DOP error and the AOP error are enlarged almost three times when the extinction ratio coefficient error increases by two orders of magnitude. Hence, the extinction ratio, as an important parameter, must be considered in the process of sensor calibration.
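The trend in the mean DOP error can be reproduced with a small simulation. It rests on two assumptions that may not match the simulation setup actually used: the extinction ratio coefficient is taken to multiply the modulation depth of each channel, and the true DOP is set to 0.75. With these choices, a coefficient shared by all four channels gives DOP errors of 0.075 and 0.15 for coefficients of 0.9 and 0.8. The function names are hypothetical.

```python
import math

POLARIZER_DEG = (0.0, 45.0, 90.0, 135.0)

def simulate_unit(dop, aop_deg, coeffs, intensity=1.0):
    """Channel intensities when each channel's modulation is scaled by its coefficient."""
    return [
        0.5 * intensity * (1.0 + k * dop * math.cos(math.radians(2.0 * (aop_deg - a))))
        for k, a in zip(coeffs, POLARIZER_DEG)
    ]

def measured_dop(channels):
    """DOP from the Stokes components of one polarization unit."""
    i0, i45, i90, i135 = channels
    s0 = 0.5 * (i0 + i45 + i90 + i135)
    return math.hypot(i0 - i90, i45 - i135) / s0

def dop_error(coeff, true_dop=0.75, aop_deg=20.0):
    """Absolute DOP error when all four channels share one coefficient."""
    return true_dop - measured_dop(simulate_unit(true_dop, aop_deg, [coeff] * 4))

for coeff in (0.9, 0.8, 0.7, 0.6):
    print(coeff, dop_error(coeff))
```

Passing four different coefficients to `simulate_unit` instead of a shared one makes the recovered values depend on the polarization angle, which is one way to picture the fluctuation attributed to ER inconsistency.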

4.2. Indoor Calibration Experiment

4.2.1. Calibration Results

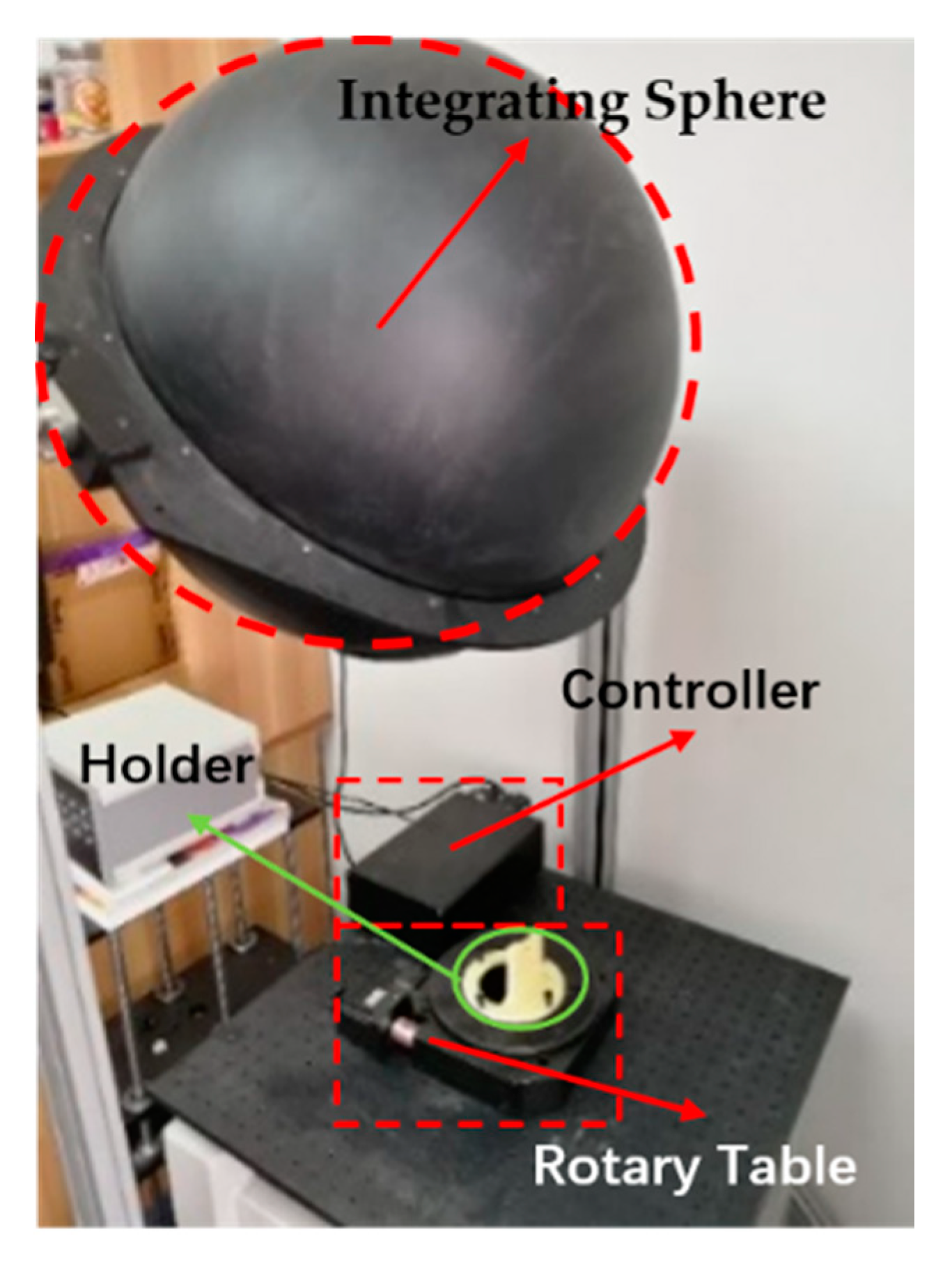

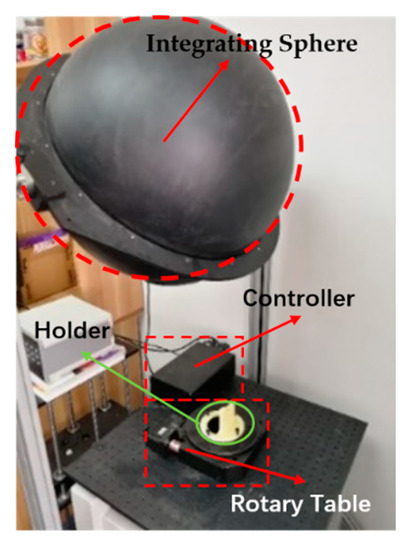

The setup used for the indoor calibration experiment includes a high-precision electric rotary platform, an integrating sphere, an optical platform and a polarizer, as shown in Figure 4. The integrating sphere with a high extinction ratio polarizer provides a high-quality linearly polarized light source for the sensor. The bionic polarization sensor was installed on an electric rotary platform that was fixed on an optical platform. The rotary platform, with a rotating accuracy of 0.01°, was used to change the direction of the polarized light source relative to the bionic polarization sensor.

Figure 4.

Indoor calibration experiment environment.

Step 1: Calibration experiment of CMOS camera photosensitivity model. In order to reduce the interference of stray light, the bionic polarization sensor was placed in a closed environment with only the integrating sphere light source. The photosensitivity model of camera was calibrated with natural light source. The exposure time of the camera was set to a constant value. By changing the light intensity of the output natural light from the integrating sphere, the camera was controlled to collect response images at 30 lux intervals within the range of 20–680 lux. Moreover, a total of 23 sets of experimental data were obtained.

Step 2: Calibration experiment of the array polarizer model. The same experiment environment as in the CMOS camera calibration experiment was used for the calibration experiment of the array polarizer. By rotating the rotary platform at 3° intervals from 0° to 360°, 120 sets of experimental data were collected. The rotation angle of the platform was used as the external direction reference for the array polarizer model calibration of the bionic polarization sensor. By changing the intensity of the output polarized light from the integrating sphere, 120 groups of experimental data were collected under each of the radiation intensities of 700 lux, 500 lux, 350 lux and 140 lux.

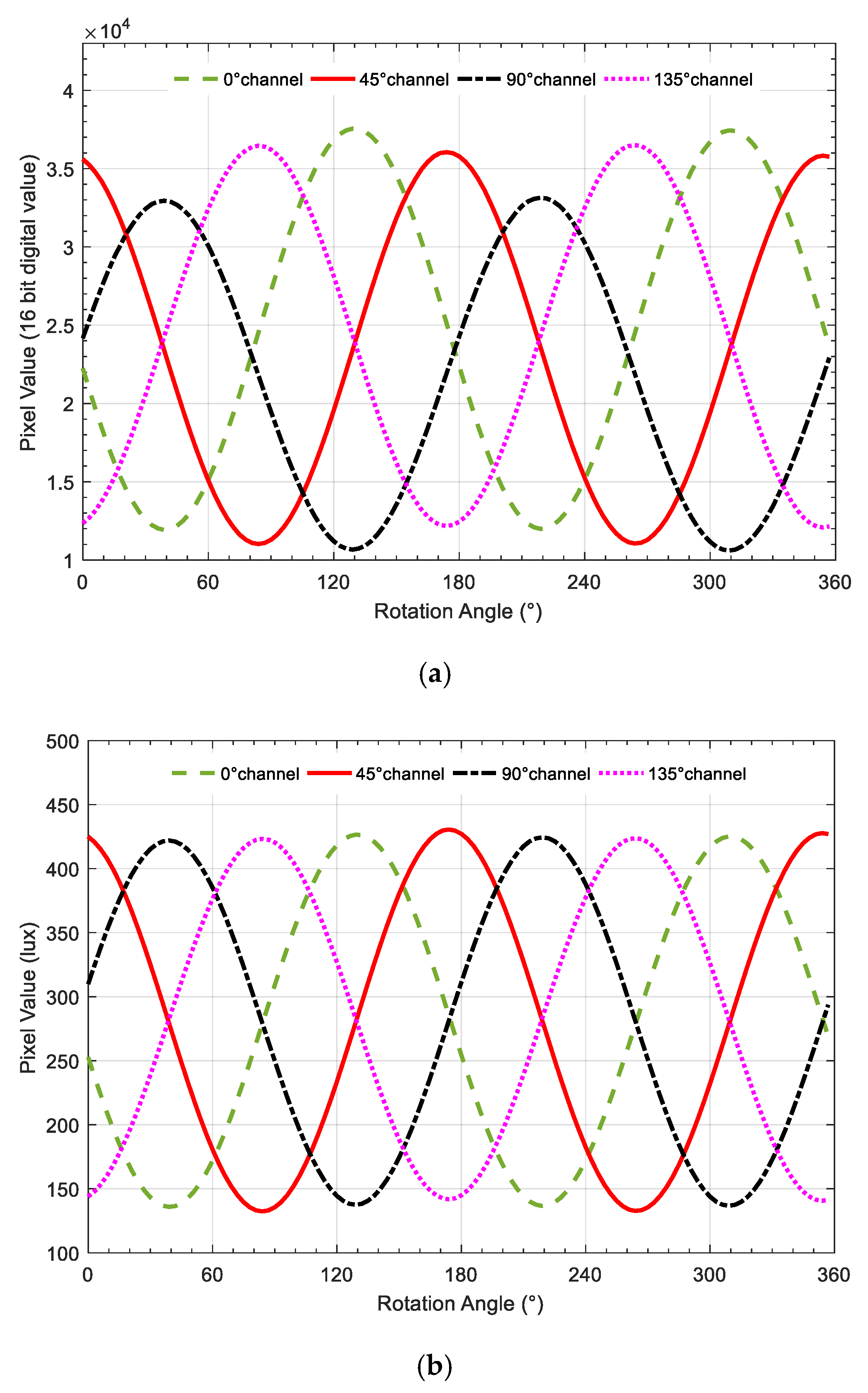

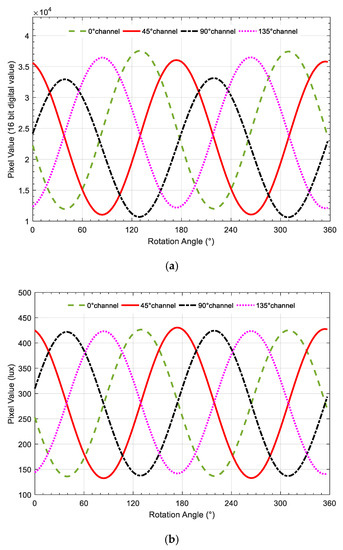

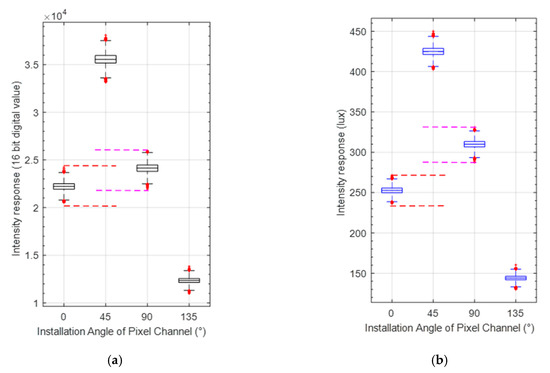

The method described in Equation (12) is used to calibrate the CMOS camera photosensitivity model. The average light-intensity value of the CMOS camera under polarized light varies with the rotation angle of the rotary platform, as shown in Figure 5. Figure 5a,b illustrates the intensity responses before and after calibration, respectively. The amplitude and bias of the pixel channel response curves differ significantly before calibration, and are of almost the same magnitude after calibration of the CMOS camera photosensitivity model.

Figure 5.

Indoor calibration experiment diagram. (a) four-channel light-intensity response curve before calibration; (b) four-channel light-intensity response curve after calibration.

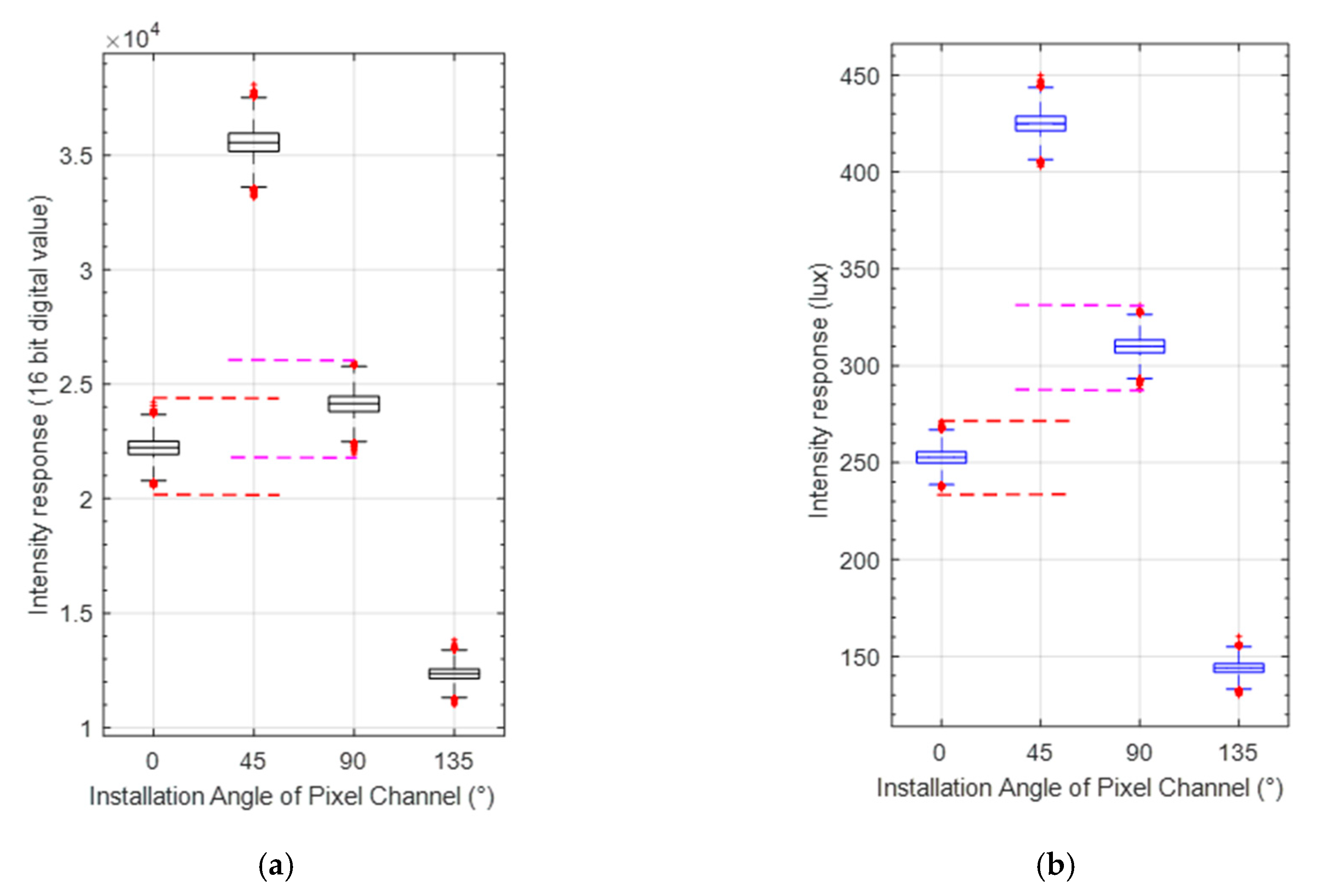

To further demonstrate the contribution of the calibration, Figure 6 compares the distribution of the light-intensity response of every pixel. As can be seen from Figure 6a,b, the response values of two pixel channels with similar light-intensity responses are more clearly distinguished after calibration. Due to the inconsistency of the photosensitivity of the CMOS camera, the measurement values derived from the 0° and 90° pixel channels overlap, as shown in Figure 6a, i.e., the crossed area formed by the red and magenta dotted lines. After the photosensitivity inconsistency calibration, the two pixel channels have completely different intensity responses, as shown in Figure 6b, i.e., the red and magenta dotted areas no longer cross, which facilitates the calibration of the extinction ratio error and installation error of the polarizer.

Figure 6.

Distribution of light-intensity response of every pixel in single image (a) before calibration and (b) after calibration.

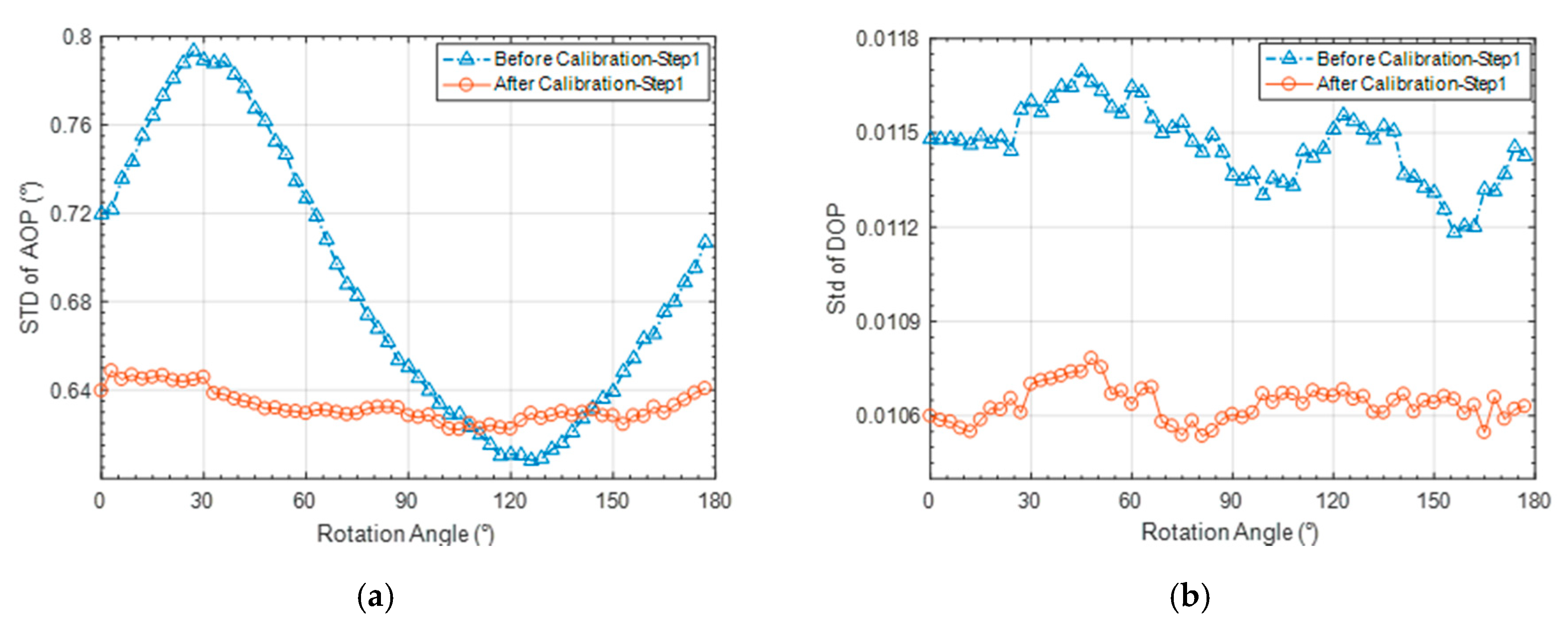

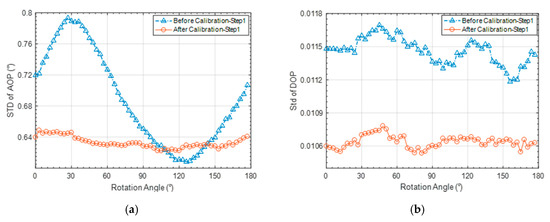

The calibrated coefficients of the CMOS camera photosensitivity model were used to correct the original light-intensity data, and the standard deviations (SD) of the AOP and DOP of every image, taken as the rotary platform rotated, were compared before and after calibration, as shown in Figure 7. The standard deviations of AOP and DOP of every polarization unit are decreased by 8% and 7%, respectively. As can be seen from Figure 7a, the sinusoidal fluctuation of the standard deviation of AOP in each image is greatly reduced after calibration. The standard deviation of AOP calculated within the rotation angle range of 110° to 130° is smaller before calibration than after calibration, which can be attributed to the positive and negative offsets when calculating AOP based on Equation (2).

Figure 7.

(a) AOP and (b) DOP SD comparison before and after calibration experiment of CMOS camera photosensitivity model.

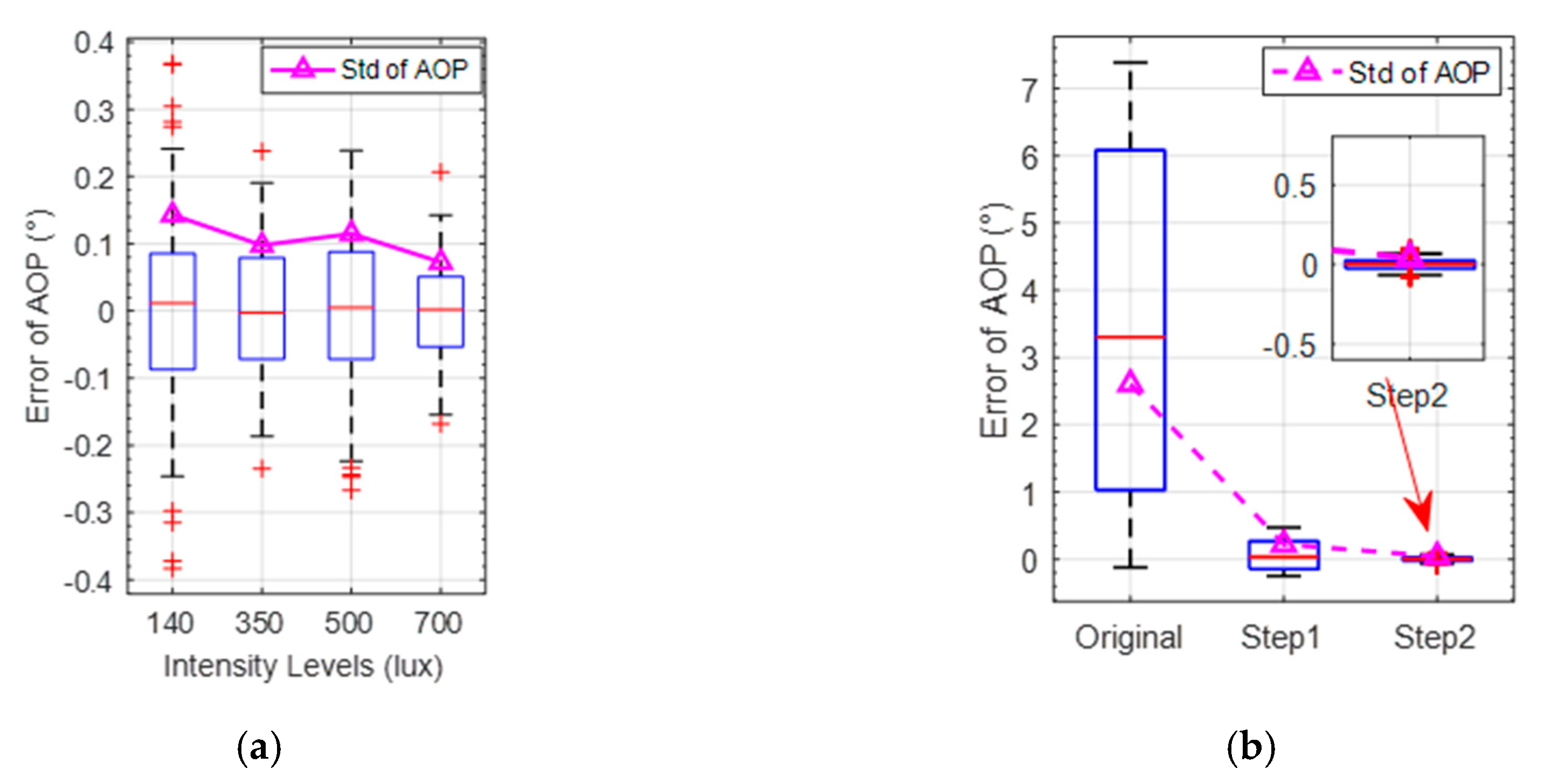

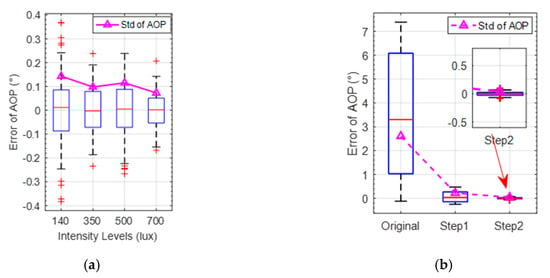

To demonstrate the influence of the incident light intensity on the calibration performance, the experiment was carried out under the incident polarized light with the different intensity levels of 700 lux, 500 lux, 350 lux and 140 lux. The CMOS camera was not overexposed under the maximum incident light intensity. As the exposure time and the rotation of the turntable could cause fluctuations of the photosensitive values derived from the pixel channels of every polarization unit, it is necessary to eliminate the influence of these factors during the calibration. A median filter was first applied to the light-intensity information of every pixel channel. The statistics of the calibrated AOP error values under different light-intensity levels of the same polarization unit are shown in Figure 8a and Table 1. It can be seen that the SD and the maximum absolute error (MAXE) tended to decrease as the intensity level of incident light increased.

Figure 8.

(a) AOP accuracy after calibration under different polarized light-intensity levels; (b) Comparison of SD of AOP error before and after calibration.

Table 1.

Statistics comparison of AOP error under different light-intensity levels.

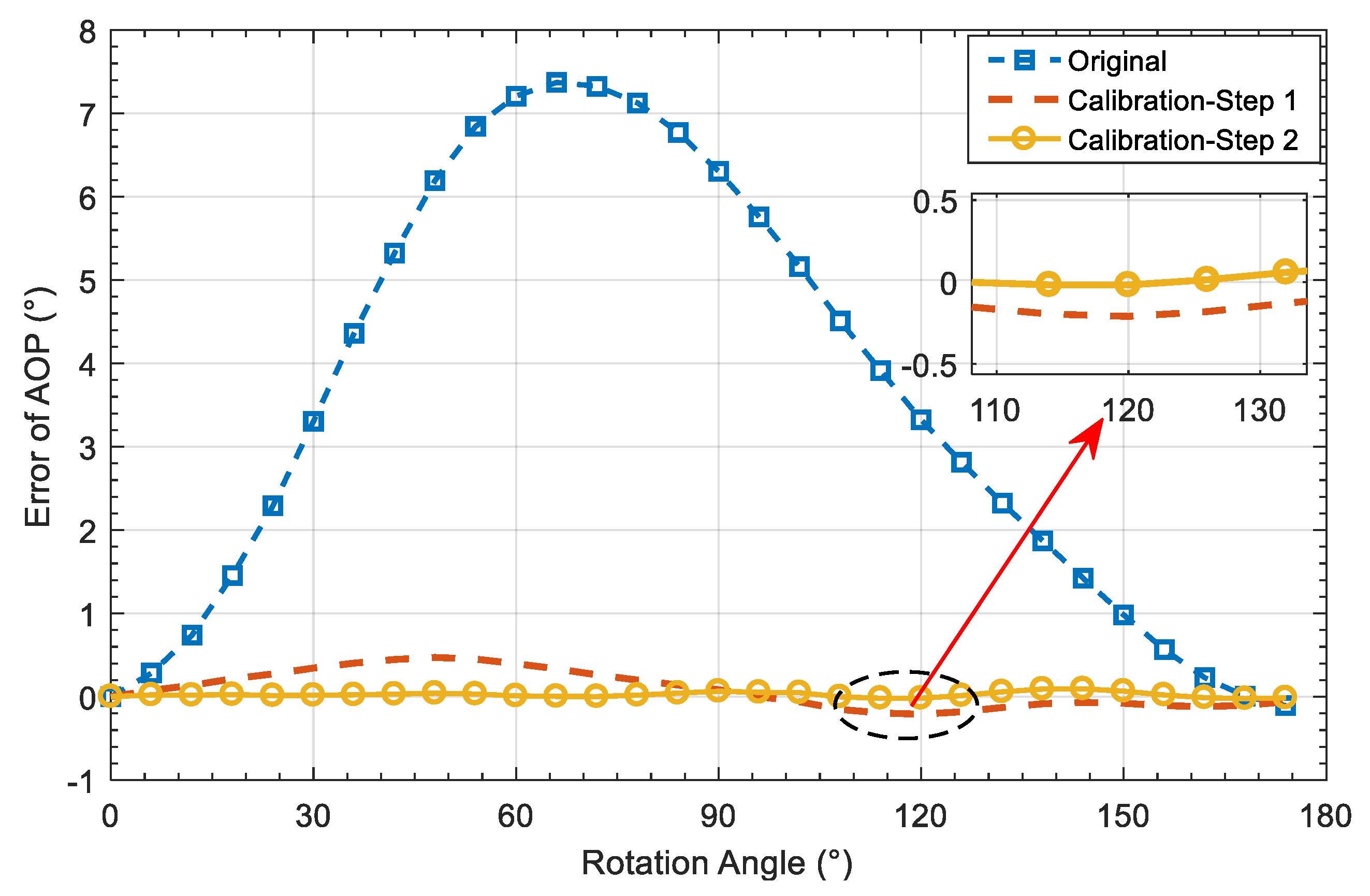

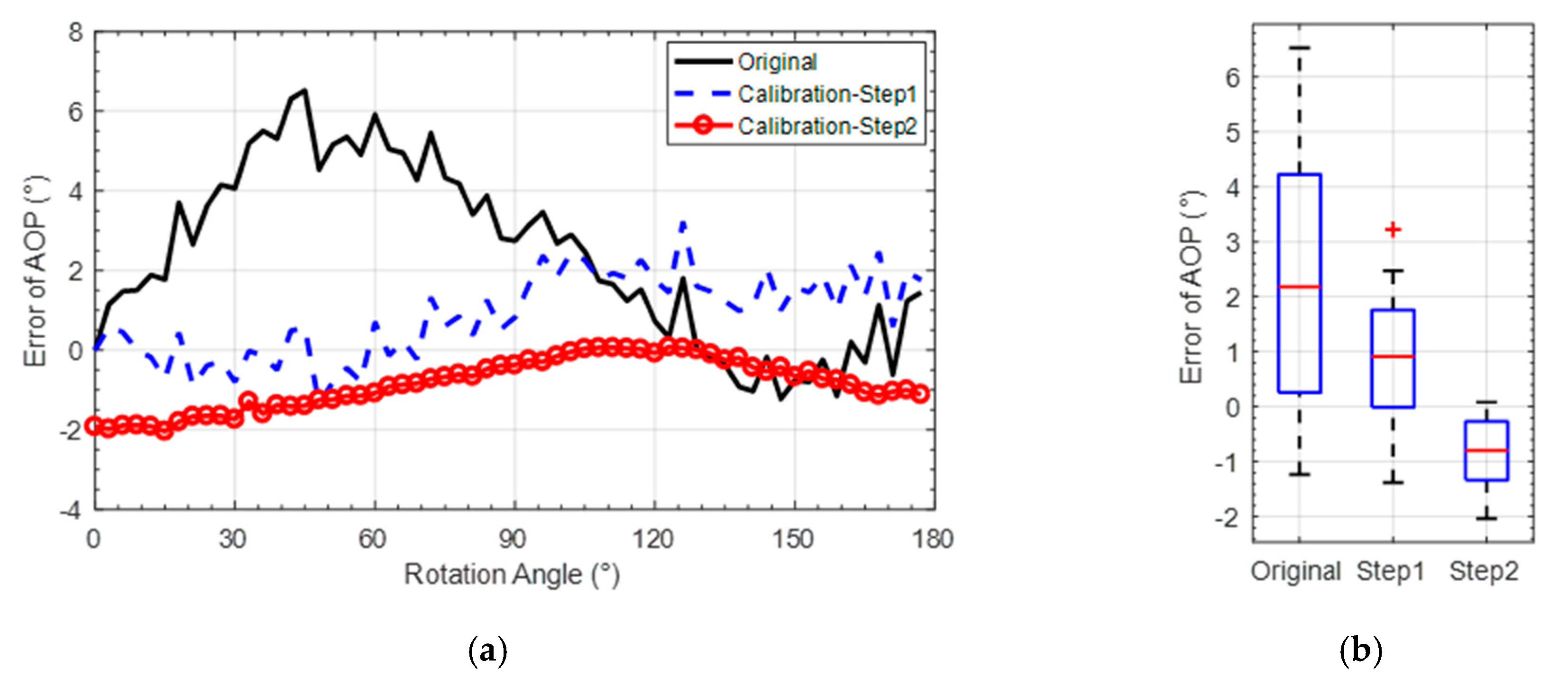

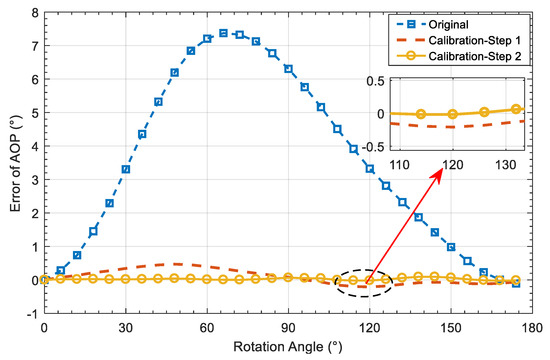

The rotation angle of the platform is used as the orientation reference of the bionic polarization sensor. The mean AOP error calculated over all polarization units was used as the orientation index to evaluate the calibration performance of the sensor, which is shown in Figure 8b and Figure 9. It can be seen that the SD of the original AOP is 2.62°. The SD is 0.22° after calibration of the CMOS camera photosensitivity model, a reduction of 91.6% compared with that before calibration. The SD is further reduced to 0.04° after the calibration of the polarizer model, a reduction of 81.8% compared with the Step 1 calibration. In addition, the mean absolute error (MAE) is reduced to 0.03° after the Step 2 calibration. The detailed comparison of orientation error with and without calibration is shown in Table 2.
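The quoted percentages follow directly from the three SD values; a short check is given below, with an illustrative helper name. The overall figure from raw data to Step 2 is not stated in the text and is derived here from the same numbers.

```python
def percent_reduction(before, after):
    """Relative reduction from `before` to `after`, in percent."""
    return 100.0 * (before - after) / before

step1 = percent_reduction(2.62, 0.22)    # raw data -> Step 1
step2 = percent_reduction(0.22, 0.04)    # Step 1 -> Step 2
overall = percent_reduction(2.62, 0.04)  # raw data -> Step 2
print(round(step1, 1), round(step2, 1), round(overall, 1))
```

The two step-wise values round to 91.6% and 81.8%, and the combined reduction over both steps is about 98.5%.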

Figure 9.

Comparison of AOP error before and after calibration.

Table 2.

Orientation-angle error comparison.

4.2.2. Performance Analysis of Calibration Model and Method

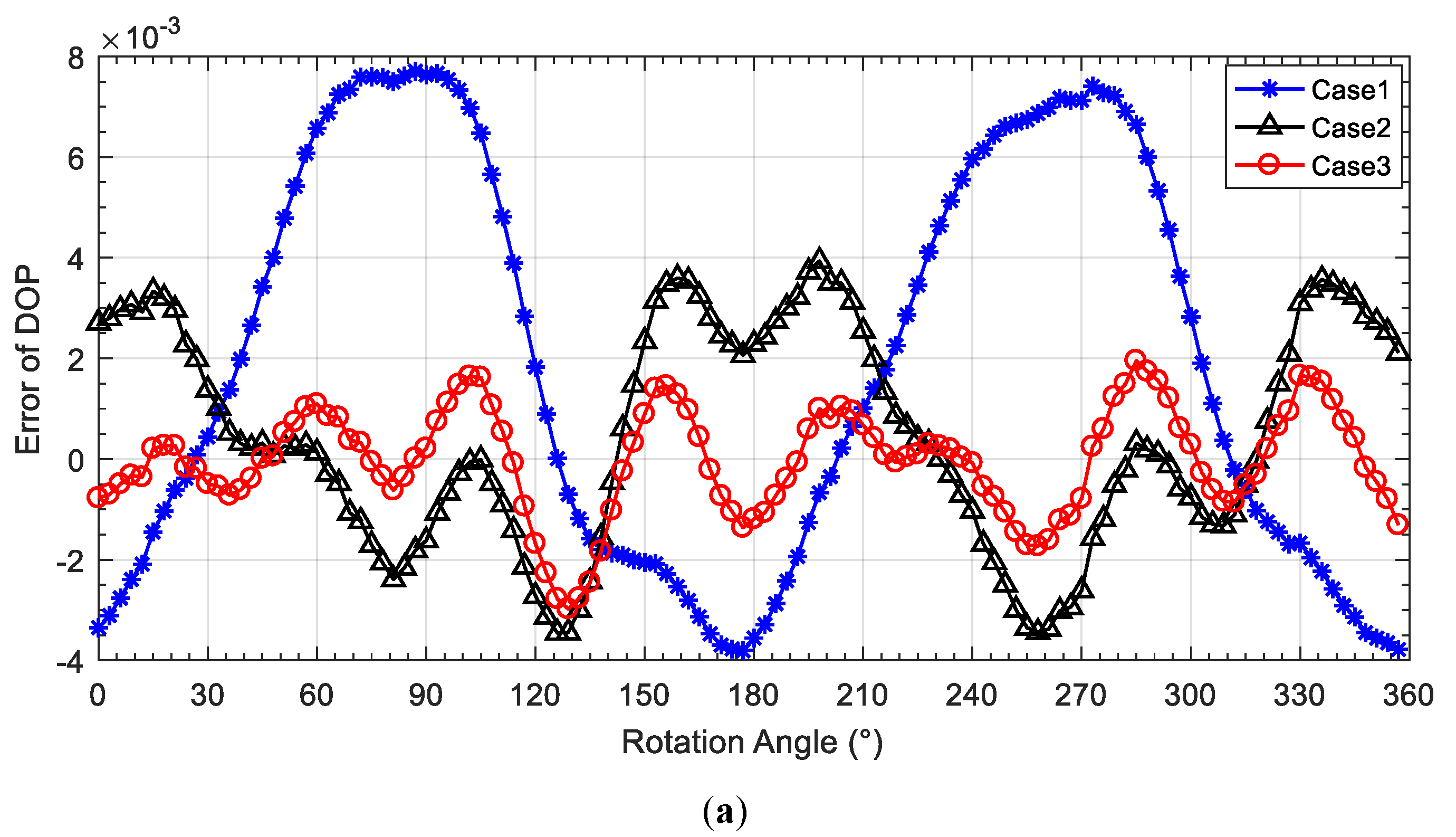

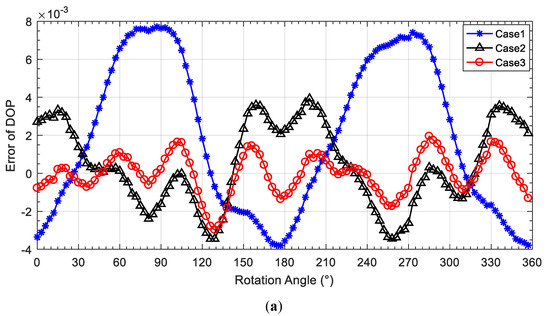

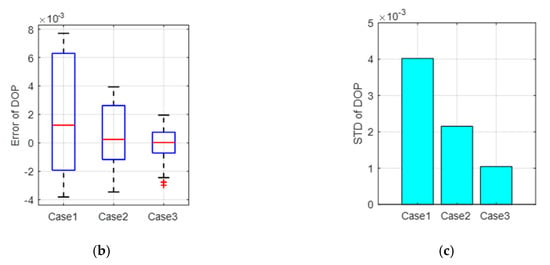

To validate the effectiveness of the proposed calibration model with ER and the calibration method based on both the AOP error and the DOP error, three comparative experiments were designed. They are referred to as Case1, Case2 and Case3 in the following experiments and are described in detail in Table 3.

Table 3.

Description of the three cases.

The calibration error curves of DOP for the three cases are shown in Figure 10a. Figure 10b,c shows the error distribution and SD comparison of the DOP error for the three cases. It can be seen that the SD of the DOP error for Case3 is decreased by 74.1% compared with that for Case1 and by 51.6% compared with that for Case2. The mean absolute error (MAE) of DOP for Case3 is decreased by 77.9% compared with that for Case1 and by 55.4% compared with that for Case2. The detailed experimental results can be found in Table 4. It can be seen that the calibration method considering both AOP and DOP errors for the proposed calibration model with the ER coefficient can effectively improve the DOP calculation of the bionic polarization sensor.

Figure 10.

DOP-error comparison of three cases. (a) DOP-error curve comparison; (b) DOP error distribution; (c) SD of DOP-error comparison.

Table 4.

Statistics comparison of DOP error.

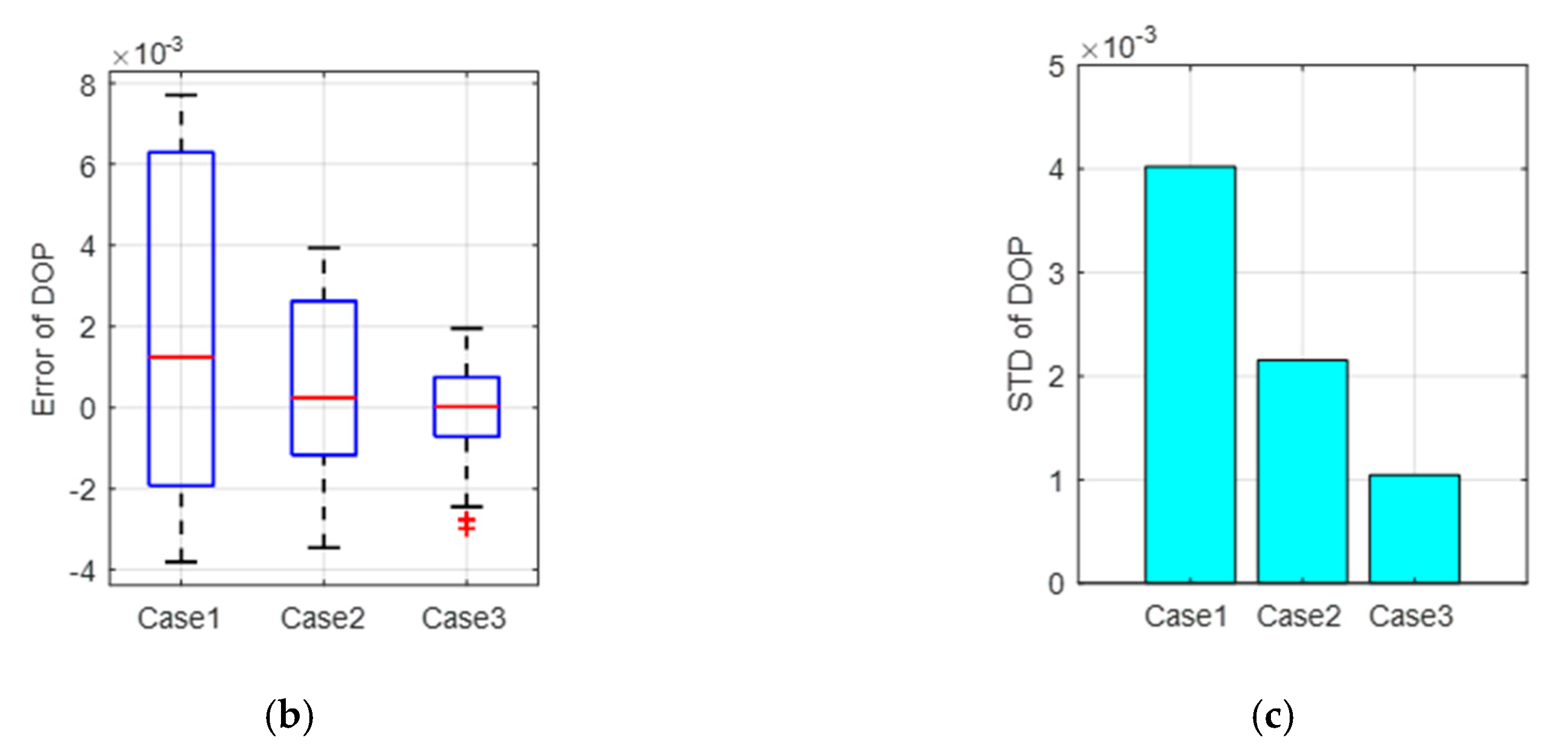

The performance comparison of the AOP calibration for the three cases is shown in Figure 11a. Figure 11b compares the maximum absolute error (MAXE) of AOP obtained in the three cases. Detailed AOP calibration accuracies are shown in Table 5. It can be seen that the maximum absolute error of AOP derived from Case3 is decreased by 8.8% compared with that of Case1 and by 1.9% compared with that of Case2. The MAE and the SD of AOP are close across the three cases. In summary, the proposed calibration model and calibration method can improve the sensor performance, mainly the DOP measurement performance, which is beneficial to DOP-based navigation.

Figure 11.

AOP-error comparison of three cases. (a) AOP-error curve comparison; (b) MAXE of AOP-error comparison.

Table 5.

Statistics comparison of AOP error.

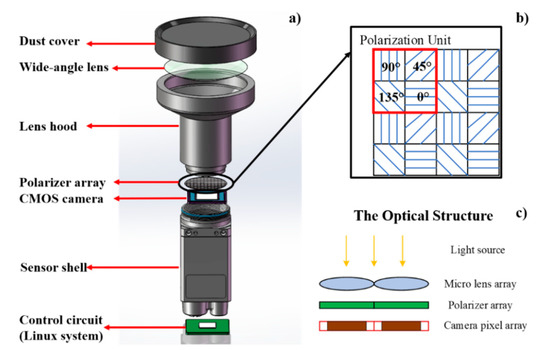

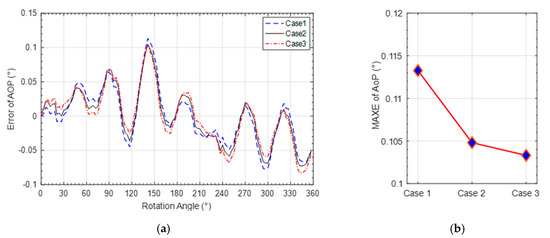

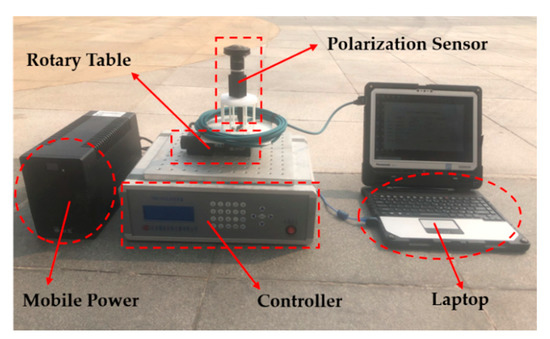

4.3. Outdoor Calibration Experiment

To further validate the effectiveness of the proposed calibration model and calibration method, the bionic camera-based polarization sensor was calibrated and tested outdoors at 16:00 on January 3, 2020 and 8:00 on January 4, 2020. The experiment was conducted on the campus of Beihang University (longitude 116.3398°, latitude 39.9794°, altitude 63.90 m). The same setup was used for the outdoor test as shown in Figure 12. The polarization sensor was fixed on a horizontal electric platform, and a calibration software was used to control the electric platform to rotate from 0° to 360° at 3° intervals. The skylight images were collected and saved with every rotation.

Figure 12.

Setup for outdoor test.

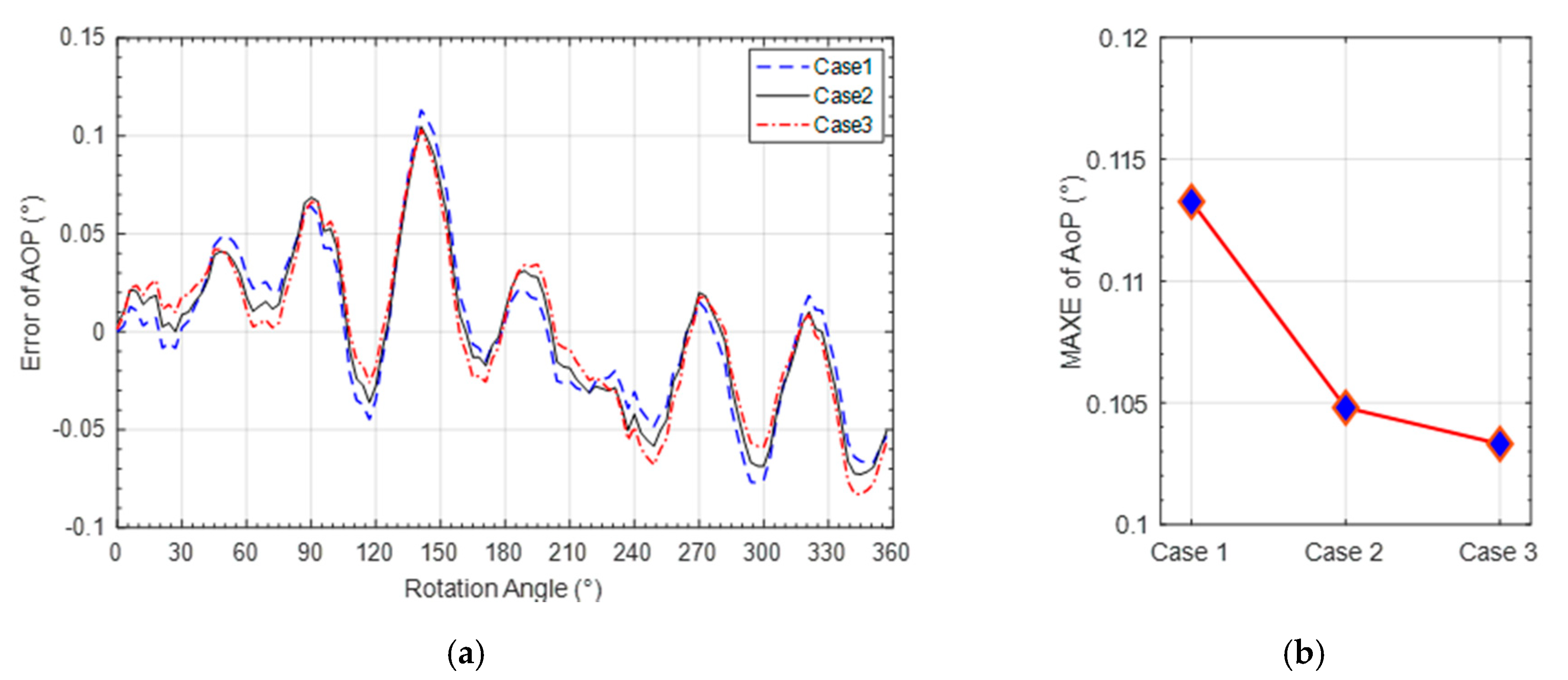

A polarization unit was selected to analyze the accuracy of outdoor calibration navigation. The difference value between the rotation angle of the turntable and the variation of the azimuth of the sun is used as the benchmark of the AOP. The orientation error was defined as the difference between the AOP of the output of the polarization sensor and the benchmark.

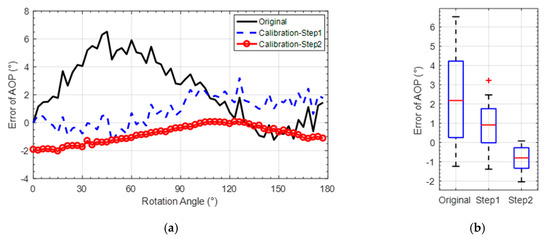

Figure 13a,b compares the AOP error before and after calibration in terms of variation and SD values. The detailed calibrated AOP results are listed in Table 6. Compared with the results obtained before calibration, the MAE of AOP decreased by 51.6% and the SD decreased to 38.8% after the Step 1 calibration. Compared with the Step 1 calibration, the MAE of AOP decreased by 48.5% and the SD decreased to 38.8% after the Step 2 calibration.
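As a rough illustration of how such statistics can be computed from a list of AOP errors, the sketch below uses only the Python standard library. It assumes that MAE means the mean absolute error and that SD is the sample standard deviation; the paper's exact definitions may differ, and the function names are hypothetical.

```python
import statistics


def error_stats(errors_deg):
    """Return MAE, SD and MAXE (in degrees) for a list of AOP errors."""
    abs_err = [abs(e) for e in errors_deg]
    return {
        "MAE": sum(abs_err) / len(abs_err),   # mean absolute error (assumed meaning)
        "SD": statistics.stdev(errors_deg),   # sample standard deviation (assumed)
        "MAXE": max(abs_err),                 # maximum absolute error
    }


def percent_reduction(before, after):
    """'Decreased by X%': relative drop from the earlier value."""
    return 100.0 * (before - after) / before


def remaining_percent(before, after):
    """'Decreased to X%': the later value as a share of the earlier one."""
    return 100.0 * after / before
```

The two helper functions separate the "decreased by" and "decreased to" phrasings, which describe different quantities even though both are reported in percent.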

Figure 13.

AOP-error comparison before and after calibration. (a) AOP-error curve comparison; (b) AOP error distribution.

Table 6.

Statistics comparison of AOP error.

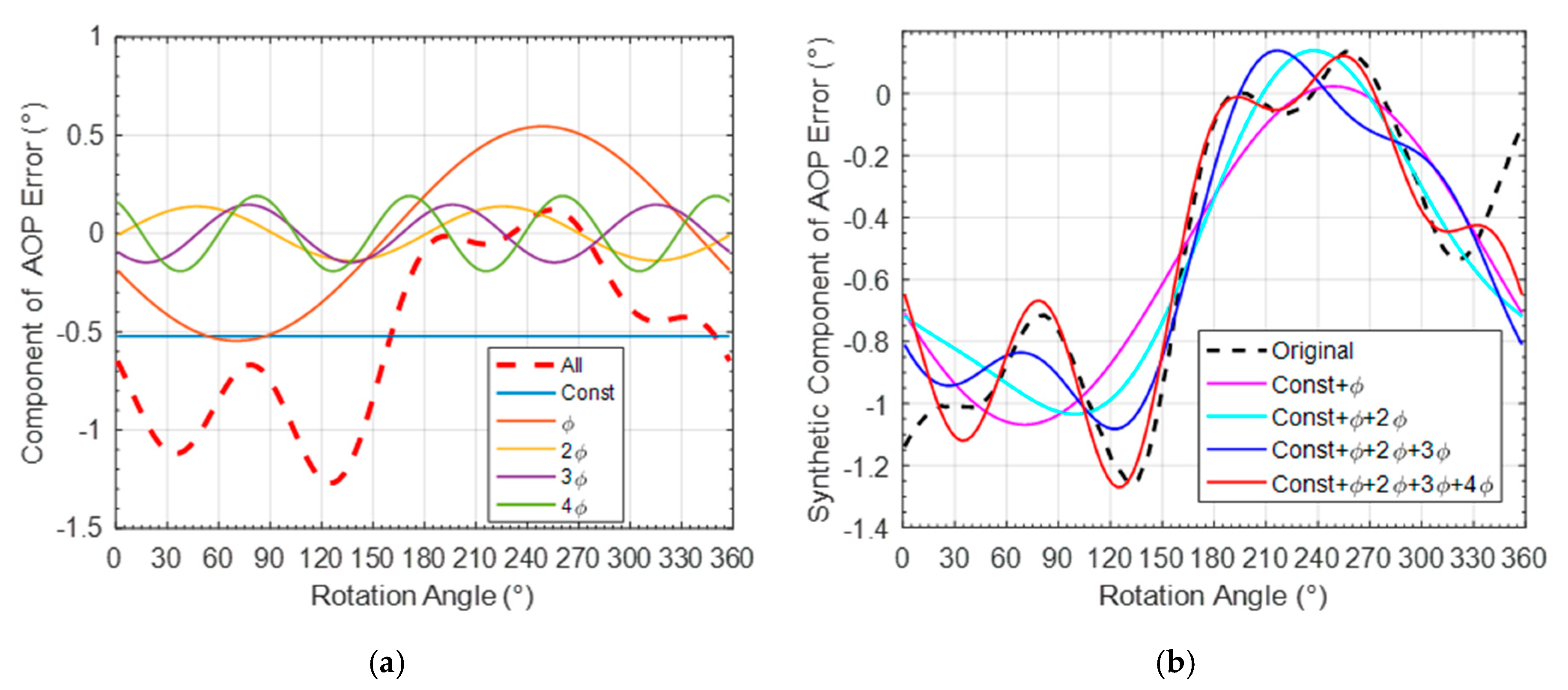

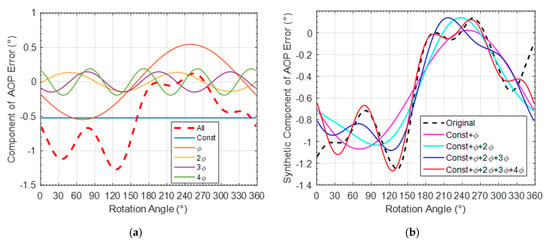

In addition, the calibrated polarization accuracies achieved in the outdoor environment are worse than those achieved in the indoor environment. To analyze the influence of complex outdoor interference on the polarization information acquisition, the frequency spectrum of the calibrated AOP error was analyzed, as shown in Figure 14a, where frequencies are expressed relative to the frequency of platform rotation.

Figure 14.

Spectral analysis of the outdoor AOP error. (a) spectrum component; (b) synthetic component of AOP error.

Figure 14b shows the AOP error synthesized from different numbers of added harmonic components. It can be seen that the AOP error after calibration has a constant error of about 0.5° and that, among the first four harmonic components, one frequency component has the maximum amplitude (Figure 14a). In future work, the influence of platform rotation and outdoor environmental factors on the sensor will be further analyzed to facilitate the practical navigation application of the bionic polarization sensor.
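A harmonic decomposition of this kind can be sketched as follows. The example assumes that the AOP error is sampled at equally spaced platform angles over exactly one revolution (for instance 120 samples at 3° steps) and uses a plain discrete Fourier transform; the paper does not state which spectral method was used, and all names below are hypothetical.

```python
import cmath
import math


def harmonic_components(errors_deg, max_harmonic=4):
    """Constant term and the first harmonics of an error curve over one revolution.

    Returns {k: (amplitude, phase)}; k = 0 holds the constant error.
    Requires max_harmonic < len(errors_deg) / 2.
    """
    n = len(errors_deg)
    comps = {}
    for k in range(max_harmonic + 1):
        # Normalized DFT coefficient at k times the platform rotation frequency.
        coeff = sum(e * cmath.exp(-2j * math.pi * k * i / n)
                    for i, e in enumerate(errors_deg)) / n
        if k == 0:
            comps[0] = (coeff.real, 0.0)
        else:
            comps[k] = (2.0 * abs(coeff), cmath.phase(coeff))
    return comps


def synthesize(comps, n, harmonics):
    """Rebuild the error curve from the constant term plus selected harmonics."""
    curve = []
    for i in range(n):
        value = comps[0][0]
        for k in harmonics:
            amp, phase = comps[k]
            value += amp * math.cos(2.0 * math.pi * k * i / n + phase)
        curve.append(value)
    return curve
```

Calling `synthesize` with `[1]`, `[1, 2]`, `[1, 2, 3]` and so on gives successively richer approximations of the error curve, which is one way to produce a plot of synthetic components with different added harmonics.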

5. Conclusions

In this study, a new calibration method for a camera-based bionic polarization sensor was proposed to account for the inconsistent extinction ratio of the polarizer. According to the characteristics of the sensor, an extinction ratio coefficient was introduced into the calibration model. To achieve high accuracy in the estimated model parameters, an iterative least-squares calibration algorithm considering both AOP and DOP errors was proposed. Both indoor and outdoor calibration experiments were carried out to evaluate the proposed calibration model and calibration method. The results showed that a calculated AOP accuracy of 0.04° (1σ) with a maximum absolute error of 0.10° can be achieved after the indoor calibration, and an AOP accuracy of 0.71° (1σ) after the outdoor calibration. To further validate the efficiency of the proposed calibration method, three different comparative experiments were conducted. They showed that the calibration model with the extinction ratio coefficient can effectively improve the accuracies of the calculated AOP and DOP. The standard deviations of DOP and AOP were reduced by 46.5% and 2.0%, respectively.

Author Contributions

Conceptualization, H.R., J.Y. and X.L.; data curation, H.R.; methodology, H.R. and X.L.; software, H.R.; formal analysis, H.R. and X.L.; investigation, H.R.; validation, L.G. and J.Y.; project administration, L.G. and Y.J.; funding acquisition, L.G.; writing – original draft preparation, H.R.; writing – review and editing, J.Y., L.G. and P.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (Grant 61627810, Grant 61751302 and Grant 61973012) and Hangzhou Science and Technology Major Innovation Projects (Grant 20182014B06).

Acknowledgments

The authors would like to express their sincere gratitude to the Editor-in-Chief, the Associate Editor and the anonymous reviewers whose insightful comments have helped to improve the quality of this study considerably.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Heinze, S. Unraveling the neural basis of insect navigation. Curr. Opin. Insect. Sci. 2017, 24, 58–67.

- Uwe, H.; Stanley, H.; Keram, P.; Michiyo, K.; Basil, J. Central neural coding of sky polarization in insects. Philos. Trans. Biol. Sci. 2011, 366, 680–687.

- Labhart, T. Specialized photoreceptors at the dorsal rim of the honeybee’s compound eye: Polarizational and angular sensitivity. J. Comp. Physiol. 1980, 141, 19–30.

- Rossel, S.; Wehner, R. Polarization vision in bees. Nature 1986, 323, 128–131.

- Collett, M.; Collett, T.; Bisch, S.; Wehner, R. Local and global vectors in desert ant navigation. Nature 1998, 394, 269–272.

- Zhao, K.; Chu, J.; Wang, T.; Zhang, Q. A novel angle algorithm of polarization sensor for navigation. IEEE Trans. Instrum. Meas. 2009, 58, 2791–2796.

- Dupeyroux, J.; Serres, J.; Viollet, S. AntBot: A six-legged walking robot able to home like desert ants in outdoor environments. Sci. Robot. 2019, 4, eaau0307.

- Yang, J.; Du, T.; Niu, B.; Li, C.; Qian, J.; Guo, L. A bionic polarization navigation sensor based on polarizing beam splitter. IEEE Access 2018, 6, 11472–11481.

- Salmah, K.; Diah, S.; Ille, G. Bio-inspired polarized skylight-based navigation sensors: A review. Sensors 2012, 12, 14232–14261.

- Lambrinos, D.; Kobayashi, H.; Pfeifer, R.; Maris, M.; Labhart, T.; Wehner, R. An autonomous agent navigating with a polarized light compass. Adapt. Behav. 1997, 6, 131–161.

- Zhao, H.; Xu, W. A Bionic Polarization navigation sensor and its calibration method. Sensors 2016, 16, 1223.

- Zhi, W.; Chu, J.; Li, J.; Wang, Y. A novel attitude determination system aided by polarization sensor. Sensors 2018, 18, 158.

- Dupeyroux, J.; Viollet, S.; Serres, J. An ant-inspired celestial compass applied to autonomous outdoor robot navigation. Robot. Auton. Syst. 2019, 117, 40–56.

- Barta, A.; Horváth, G. Why is it advantageous for animals to detect celestial polarization in the ultraviolet? Skylight polarization under clouds and canopies is strongest in the UV. J. Theor. Biol. 2004, 226, 429–437.

- Dupeyroux, J.; Diperi, J.; Boyron, M.; Viollet, S.; Serres, J. A bio-inspired celestial compass applied to an ant-inspired robot for autonomous navigation. In Proceedings of the 2017 European Conference on Mobile Robots (ECMR), Paris, France, September 2017; pp. 1–6.

- Pomozi, I.; Horváth, G.; Wehner, R. How the clear-sky angle of polarization pattern continues underneath clouds: Full-sky measurements and implications for animal orientation. J. Exp. Biol. 2001, 204, 2933.

- Ahsan, M.; Cai, Y.; Zhang, W. Information extraction of bionic camera-based polarization navigation patterns under noisy weather conditions. J. Shanghai Jiaotong Univ. (Sci.) 2020, 25, 18–26.

- Zhang, W.; Cao, Y.; Zhang, X.; Liu, Z. Sky light polarization detection with linear polarizer triplet in light field camera inspired by insect vision. Appl. Opt. 2015, 54, 8962.

- Mukul, S.; David, S.; Chris, H.; Albert, T. Biologically inspired autonomous agent navigation using an integrated polarization analyzing CMOS image sensor. Procedia Eng. 2010, 5, 673–676.

- Powell, S.; Gruev, V. Calibration methods for division-of-focal-plane polarimeters. Opt. Express 2013, 21, 21039.

- Powell, S.; Gruev, V. Evaluation of calibration methods for visible-spectrum division-of-focal-plane polarimeters. SPIE Opt. Eng. Appl. 2013, 8873, 887306.

- Wolfgang, S.; Carey, N. A fisheye camera system for polarization detection on UAVs. Computer Vision–ECCV 2012, 7584, 431–440.

- Wolfgang, S. A lightweight single-camera polarization compass with covariance estimation. IEEE ICCV 2017, 1, 5363–5371.

- Zhang, S.; Liang, H.; Zhu, H.; Wang, D.; Yu, B. A camera-based real-time polarization sensor and its application to mobile robot navigation. IEEE ROBIO 2014, 271–276.

- Wang, D.; Liang, H.; Zhu, H.; Zhang, S. A bionic camera-based polarization navigation sensor. Sensors 2014, 14, 13006–13023.

- Sun, S.; Gao, J.; Wang, D.; Yang, T.; Wang, X. Improved models of imaging of skylight polarization through a fisheye lens. Sensors 2019, 19, 4844.

- Lu, H.; Zhao, K.; You, Z.; Huang, K. Real-time polarization imaging algorithm for camera-based polarization navigation sensors. Appl. Opt. 2017, 56, 3199.

- Fan, C.; Hu, X.; Lian, J.; Zhang, L.; He, X. Design and calibration of a novel camera-based bio-inspired polarization navigation sensor. IEEE Sens. J. 2016, 16, 3640–3648.

- Han, G.; Hu, X.; Lian, J.; He, X.; Zhang, L.; Wang, Y.; Dong, F. Design and calibration of a novel bio-inspired pixelated polarized light compass. Sensors 2017, 17, 2623.

- Aycock, T.; Lompado, A.; Wheeler, B. Using atmospheric polarization patterns for azimuth sensing. SPIE Def. Secur. 2014, 9085, 90850B.

- Todd, A.; Art, L.; Troy, W.; David, C. Passive optical sensing of atmospheric polarization for GPS denied operations. Proc. SPIE 2016, 9838, 98380Y.

- Xian, Z.; Hu, X.; Lian, J.; Zhang, L.; Cao, J.; Wang, Y.; Ma, T. A novel angle computation and calibration algorithm of bio-inspired sky-light polarization navigation sensor. Sensors 2014, 14, 17068–17088.

- Wang, Y.; Chu, J.; Zhang, R.; Li, J.; Guo, X.; Lin, M. A bio-inspired polarization sensor with high outdoor accuracy and central-symmetry calibration method with integrating sphere. Sensors 2019, 19, 3448.

- Rogers, A. Polarization optics. Essent. Optoelectron. 1997.

- Gruev, V.; Perkins, R.; York, T. CCD polarization imaging sensor with aluminum nanowire optical filters. Opt. Express 2010, 18, 19087–19094.

- Kiyotaka, S.; Sanshiro, S.; Keisuke, A.; Hitoshi, M.; Toshihiko, N.; Takashi, T.; Kiyomi, K.; Jun, O. Image sensor pixel with on-chip high extinction ratio polarizer based on 65-nm standard CMOS technology. Opt. Express 2013, 21, 11132–11140.

- Hagen, N.; Shu, S.; Otani, Y. Calibration and performance assessment of microgrid polarization cameras. Opt. Eng. 2019, 58, 082408.

- Zhang, H.; Zhang, J.; Yang, B.; Yan, C. Calibration for polarization remote sensing system with focal plane divided by multi-linear array. Acta. Optica. Sinica. 2016, 36, 1128003.

- York, T.; Gruev, V. Optical characterization of a polarization imager. In Proceedings of the International Symposium on Circuits and Systems (ISCAS 2011), Rio de Janeiro, Brazil, 15–19 May 2011; pp. 1576–1579.

- Zavrsnik, M.; Donlagic, D. Fiber optic polarimetric thermometer using low extinction ratio polarizer and low coherence source. In Proceedings of the 16th IEEE Instrumentation and Measurement Technology Conference, Venice, Italy, 24–26 May 1999; Volume 3, pp. 1520–1525.

- Liao, Y. Polarization Optics; Beijing Science Press: Beijing, China, 2003; Volume 55, pp. 49–51.

- Golub, G. Numerical methods for solving linear least squares problems. Numer. Math. 1965, 7, 206–216.

- Yang, J.; Niu, B.; Du, T.; Liu, X.; Wang, S.; Guo, L. Disturbance analysis and performance test of the polarization sensor based on polarizing beam splitter. Sen. Rev. 2019, 39, 341–351.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).