An On-Device Deep Learning Approach to Battery Saving on Industrial Mobile Terminals

Abstract

1. Introduction

2. Related Work

3. Proposed Method

3.1. Motivating Example

3.2. Proposed Solution

- To make the CNN decide whether the image from the scanner shows frost, we train it with labeled examples (i.e., supervised learning): we take pictures of actual frost on the scanner window and use them to train the CNN.

- We incorporate the trained CNN into the final frost-identification logic embedded in the mobile terminal, where it classifies the image data from the scanner in real time.

4. Implementation

4.1. CNN Classifier for Frost Images

4.1.1. Dataset Preparation

4.1.2. CNN Structure Design

4.1.3. Training and Validation

- Batch size = 30

- Epochs = 20

- Dropout = 0.1
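As a rough illustration of what these settings imply, the following Python sketch assumes the 2000-image training set from the dataset table, no data augmentation, and that the final partial mini-batch of each epoch is kept. It counts mini-batches per epoch and shows inverted dropout, the variant in which surviving activations are scaled by 1/(1 − rate) during training (this is how the Keras Dropout layer behaves). The helper names are hypothetical and are not taken from the authors' code.

```python
import random
from typing import List, Tuple

BATCH_SIZE = 30          # hyperparameter listed above
EPOCHS = 20              # hyperparameter listed above
DROPOUT_RATE = 0.1       # hyperparameter listed above
NUM_TRAIN_IMAGES = 2000  # training-set size from the dataset table


def minibatch_slices(n: int, batch_size: int) -> List[Tuple[int, int]]:
    """Return (start, end) index pairs covering n samples; the last batch may be smaller."""
    return [(start, min(start + batch_size, n)) for start in range(0, n, batch_size)]


def inverted_dropout(values: List[float], rate: float, rng: random.Random) -> List[float]:
    """Zero each value with probability `rate` and scale survivors by 1 / (1 - rate),
    so the expected activation is unchanged and no rescaling is needed at inference."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    keep = 1.0 - rate
    return [v / keep if rng.random() >= rate else 0.0 for v in values]


batches = minibatch_slices(NUM_TRAIN_IMAGES, BATCH_SIZE)
steps_per_epoch = len(batches)
total_updates = steps_per_epoch * EPOCHS
print(steps_per_epoch, batches[-1], total_updates)  # 67 (1980, 2000) 1340
```

Under these assumptions each epoch performs 67 weight updates (66 full batches of 30 and one batch of 20), or 1340 updates over the 20 epochs.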

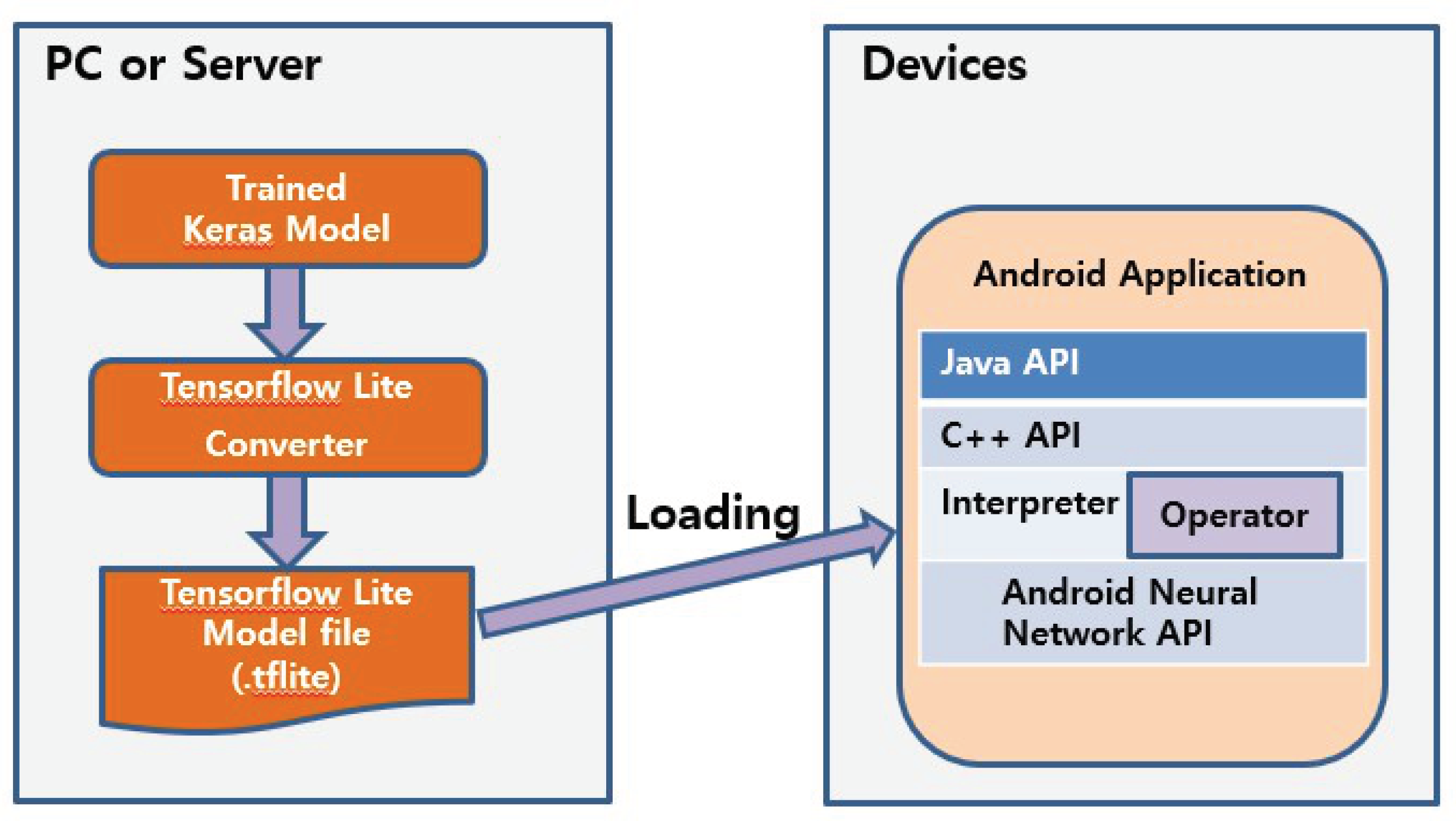

4.2. Transplanting the Trained CNN to an Industrial Mobile Terminal

4.3. Temperature Condition Checking Logic

- Both sensors at the scanner window and at the camera window report below-freezing temperatures;

- At least one of the temperature sensors records a temperature rise of more than a threshold relative to its measurement one second earlier. The threshold is set to 1 °C in our test system.
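The two conditions can be expressed as a small predicate. This is a minimal sketch under stated assumptions: both conditions must hold together, each sensor keeps the reading it took one second earlier, and "below freezing" means strictly below 0 °C. The class and function names are hypothetical.

```python
from dataclasses import dataclass

FREEZING_C = 0.0        # "below freezing" read as strictly below 0 °C (assumption)
RISE_THRESHOLD_C = 1.0  # threshold used in the authors' test system


@dataclass
class SensorReading:
    now_c: float             # current temperature (°C)
    one_second_ago_c: float  # temperature measured one second earlier (°C)


def temperature_condition_met(scanner: SensorReading, camera: SensorReading,
                              threshold_c: float = RISE_THRESHOLD_C) -> bool:
    """True when both windows are below freezing AND at least one sensor rose by
    more than `threshold_c` within the last second (AND-combination is an assumption)."""
    both_below_freezing = scanner.now_c < FREEZING_C and camera.now_c < FREEZING_C
    any_rising = any(s.now_c - s.one_second_ago_c > threshold_c for s in (scanner, camera))
    return both_below_freezing and any_rising


# Scanner window warms by 2.5 °C in one second while both windows stay below 0 °C.
print(temperature_condition_met(SensorReading(-12.0, -14.5), SensorReading(-15.0, -15.2)))  # True
```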

4.4. Image Brightness Checking Logic

4.4.1. Coping with False Negatives

| Algorithm 1. Suppressing false negatives through image brightness examination | |
|---|---|
| 1: if then | ▹ Poor lighting: just say “frost” to avoid false negative |
| 2: decision = “frost” | |
| 3: else | ▹ Favorable condition to run CNN classification |
| 4: decision = CNN_classification | |
| 5: end if | |
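Algorithm 1 translates directly into code once a poor-lighting test is chosen. The condition itself is not reproduced in the algorithm above, so the sketch below substitutes a hypothetical mean-brightness test with a placeholder threshold of 40 on a 0 to 255 grayscale. The CNN is passed in as a callable, and the dummy classifier exists only for the example.

```python
from typing import Callable, Sequence

# Hypothetical stand-in for the poor-lighting condition of Algorithm 1:
# mean grayscale brightness below a placeholder threshold.
POOR_LIGHTING_MEAN_THRESHOLD = 40.0  # placeholder value on a 0-255 scale


def mean_brightness(pixels: Sequence[int]) -> float:
    """Average grayscale intensity (0 = black, 255 = white)."""
    return sum(pixels) / len(pixels)


def decide(pixels: Sequence[int], cnn_classify: Callable[[Sequence[int]], str]) -> str:
    """Algorithm 1: say "frost" under poor lighting, otherwise use the CNN's verdict."""
    if mean_brightness(pixels) < POOR_LIGHTING_MEAN_THRESHOLD:
        return "frost"  # poor lighting: avoid a false negative
    return cnn_classify(pixels)  # favorable lighting: run the CNN classification


def always_no_frost(pixels: Sequence[int]) -> str:
    """Dummy classifier used only for this example."""
    return "no frost"


dark = [10] * (28 * 28)  # 28 x 28 input, as in the CNN structure table
bright = [180] * (28 * 28)
print(decide(dark, always_no_frost), "|", decide(bright, always_no_frost))  # frost | no frost
```

Because the poor-lighting branch returns before the classifier is invoked, a dark frame never reaches the CNN; the design deliberately trades a possible unnecessary heating cycle for the absence of a missed frost event.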

4.4.2. Coping with False Positives

4.5. Integrated Logic

5. Energy-Saving Performance of the Proposed System

6. Discussion

7. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Configuration | Component | Avg. Current | Voltage | Avg. Power Consumption | Battery Life |
|---|---|---|---|---|---|
| Without film heater | System | 200 mA | 3.6 V | 0.72 W | 11.1 h (= 19 Wh / 1.71 W) |
| | 2D scanner | 300 mA | 3.3 V | 0.99 W | |
| With film heater | System | 200 mA | 3.6 V | 0.72 W | 5.5 h (= 19 Wh / 3.46 W) |
| | 2D scanner | 300 mA | 3.3 V | 0.99 W | |
| | Film heater | 700 mA | 5.0 V | 1.75 W (at 50%) | |

| Dataset | No. of Images | Test Device | Description |
|---|---|---|---|
| Training | 2000 | PC | 1000 frost images + 1000 no-frost images |
| Validation | 200 | PC | 100 frost images + 100 no-frost images |
| Test I | 551 | Mobile terminal | Frost images under various lighting conditions |
| Test II | 600 | Mobile terminal | Frost images under various lighting conditions |
| Test III | 405 | Mobile terminal | No-frost images under various lighting conditions |
| Total | 3756 | | |

| Layer | Tensor Size | Weights | Biases | Number of Parameters |
|---|---|---|---|---|
| Input image | 28 × 28 × 1 | 0 | 0 | 0 |
| Convolution-1 | 26 × 26 × 32 | 288 | 32 | 320 |
| Subsampling-1 | 13 × 13 × 32 | 0 | 0 | 0 |
| Convolution-2 | 12 × 12 × 64 | 8,192 | 64 | 8,256 |
| Subsampling-2 | 6 × 6 × 64 | 0 | 0 | 0 |
| Convolution-3 | 4 × 4 × 128 | 73,728 | 128 | 73,856 |
| Subsampling-3 | 2 × 2 × 128 | 0 | 0 | 0 |
| FullyConnected-1 | 624 × 1 | 319,488 | 624 | 320,112 |
| FullyConnected-2 | 64 × 1 | 39,936 | 64 | 40,000 |
| Output | 2 × 1 | 128 | 2 | 130 |
| Total | | 441,760 | 914 | 442,674 |
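The tensor sizes and parameter counts in the table follow from two standard formulas: a k × k convolution from c_in to c_out channels has k·k·c_in·c_out weights and c_out biases, and a dense layer from n_in to n_out units has n_in·n_out weights and n_out biases. The kernel sizes are not listed, so the sketch below infers them from the weight column (3 × 3, 2 × 2, 3 × 3) and assumes unpadded convolutions with stride 1 and 2 × 2 subsampling with stride 2. Under these formulas FullyConnected-2 (624 → 64) has 624 × 64 = 39,936 weights and the network has 442,674 parameters in total. The layer list and function name are illustrative, not the authors' code.

```python
from typing import List, Tuple

# (kind, name, kernel or pool size, output channels or units); 0 marks "not used".
# Kernel sizes are inferred from the weight column (assumption).
LAYERS: List[Tuple[str, str, int, int]] = [
    ("conv", "Convolution-1", 3, 32),
    ("pool", "Subsampling-1", 2, 0),
    ("conv", "Convolution-2", 2, 64),
    ("pool", "Subsampling-2", 2, 0),
    ("conv", "Convolution-3", 3, 128),
    ("pool", "Subsampling-3", 2, 0),
    ("dense", "FullyConnected-1", 0, 624),
    ("dense", "FullyConnected-2", 0, 64),
    ("dense", "Output", 0, 2),
]

Row = Tuple[str, Tuple[int, ...], int, int]  # (name, output shape, weights, biases)


def summarize(h: int, w: int, c: int) -> List[Row]:
    """Propagate the input shape through LAYERS and count weights/biases per layer."""
    rows: List[Row] = []
    flat = 0  # vector length once dense layers start (0 = not flattened yet)
    for kind, name, k, units in LAYERS:
        if kind == "conv":  # unpadded, stride 1
            weights, biases = k * k * c * units, units
            h, w, c = h - k + 1, w - k + 1, units
            shape = (h, w, c)
        elif kind == "pool":  # k x k window, stride k
            weights, biases = 0, 0
            h, w = h // k, w // k
            shape = (h, w, c)
        else:  # dense: flatten the last feature map on first use
            n_in = flat if flat else h * w * c
            weights, biases = n_in * units, units
            flat = units
            shape = (units,)
        rows.append((name, shape, weights, biases))
    return rows


rows = summarize(28, 28, 1)
for name, shape, wts, b in rows:
    print(f"{name:<17} {str(shape):<15} {wts:>8,} {b:>4} {wts + b:>8,}")
print("total parameters:", sum(wts + b for _, _, wts, b in rows))  # 442674
```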

| Cases | |||

|---|---|---|---|

| Good lighting | > | > | 0 |

| – | 3 | ||

| < | 5 | ||

| Poor lighting | < | < | 6 |

| – | < | 15 | |

| < | < | 40 | |

| Total | 69 | ||

| | Mixed Colors, Good Lighting | Mixed Colors, Poor Lighting | Single Color, Good Lighting (White/Gray) | Single Color, Good Lighting (Other Colors) | Single Color, Poor Lighting |
|---|---|---|---|---|---|
| No. of tests | 270 | 30 | 135 | 135 | 30 |
| Correct (“frost”) | 261 | 30 | 135 | 135 | 30 |
| Incorrect (“no frost”) | 9 | 0 | 0 | 0 | 0 |
| Accuracy (%) | 96.7 | 100 | 100 | 100 | 100 |

| | Mixed Colors | Single Color |
|---|---|---|
| No. of tests | 270 | 135 |
| Correct (“no frost”) | 266 | 134 |
| Incorrect (“frost”) | 4 | 1 |
| Accuracy (%) | 98.5 | 99.3 |

| Component | Avg. Current | Voltage | Power Consumption | Battery Life |
|---|---|---|---|---|
| System (Idle) | 190 mA | 3.6 V | 0.68 W | - |
| 2D Scanner | 300 mA | 3.3 V | 0.99 W | |
| Film heater (traditional) | 335 mA | 5 V | 1.68 W | 7.2 h (= 24 Wh / 3.35 W) |
| Film heater (edge AI) | 24 mA | 5 V | 0.12 W | 13.4 h (= 24 Wh / 1.79 W) |
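Each battery-life figure is the battery capacity divided by the total average power of all active components, where each component's power is its average current times its supply voltage. The sketch below recomputes the figures of both power tables. Reading "at 50%" in the first table as a 50% heater duty cycle is an assumption, and the function names are hypothetical. Summing the three rows of each configuration in the last table gives about 3.35 W (traditional) and 1.79 W (edge AI), which are the totals behind the 7.2 h and 13.4 h figures.

```python
from typing import Dict


def power_w(current_ma: float, voltage_v: float, duty: float = 1.0) -> float:
    """Average power (W) from average current (mA), voltage (V) and duty cycle (0-1)."""
    return current_ma / 1000.0 * voltage_v * duty


def battery_life_h(capacity_wh: float, loads_w: Dict[str, float]) -> float:
    """Battery life (h) = capacity (Wh) / total average power of all loads (W)."""
    return capacity_wh / sum(loads_w.values())


# First power table: 19 Wh battery; "at 50%" read as a 50% heater duty cycle (assumption).
t1_no_heater = {"system": power_w(200, 3.6), "scanner_2d": power_w(300, 3.3)}
t1_heater = {**t1_no_heater, "film_heater": power_w(700, 5.0, duty=0.5)}

# Last power table: 24 Wh battery; idle system + 2D scanner + film heater.
base = {"system_idle": power_w(190, 3.6), "scanner_2d": power_w(300, 3.3)}
traditional = {**base, "film_heater": power_w(335, 5.0)}
edge_ai = {**base, "film_heater": power_w(24, 5.0)}

for label, capacity, loads in [("no heater", 19, t1_no_heater), ("heater 50%", 19, t1_heater),
                               ("traditional", 24, traditional), ("edge AI", 24, edge_ai)]:
    print(f"{label:<12} {sum(loads.values()):.2f} W -> {battery_life_h(capacity, loads):.1f} h")
```

Keeping the loads in a dictionary makes it easy to see that the only difference between the last two configurations is the film heater's average current (335 mA versus 24 mA).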

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Choi, I.; Kim, H. An On-Device Deep Learning Approach to Battery Saving on Industrial Mobile Terminals. Sensors 2020, 20, 4044. https://doi.org/10.3390/s20144044
