Sensors and Systems for Physical Rehabilitation and Health Monitoring—A Review

Abstract

1. Introduction

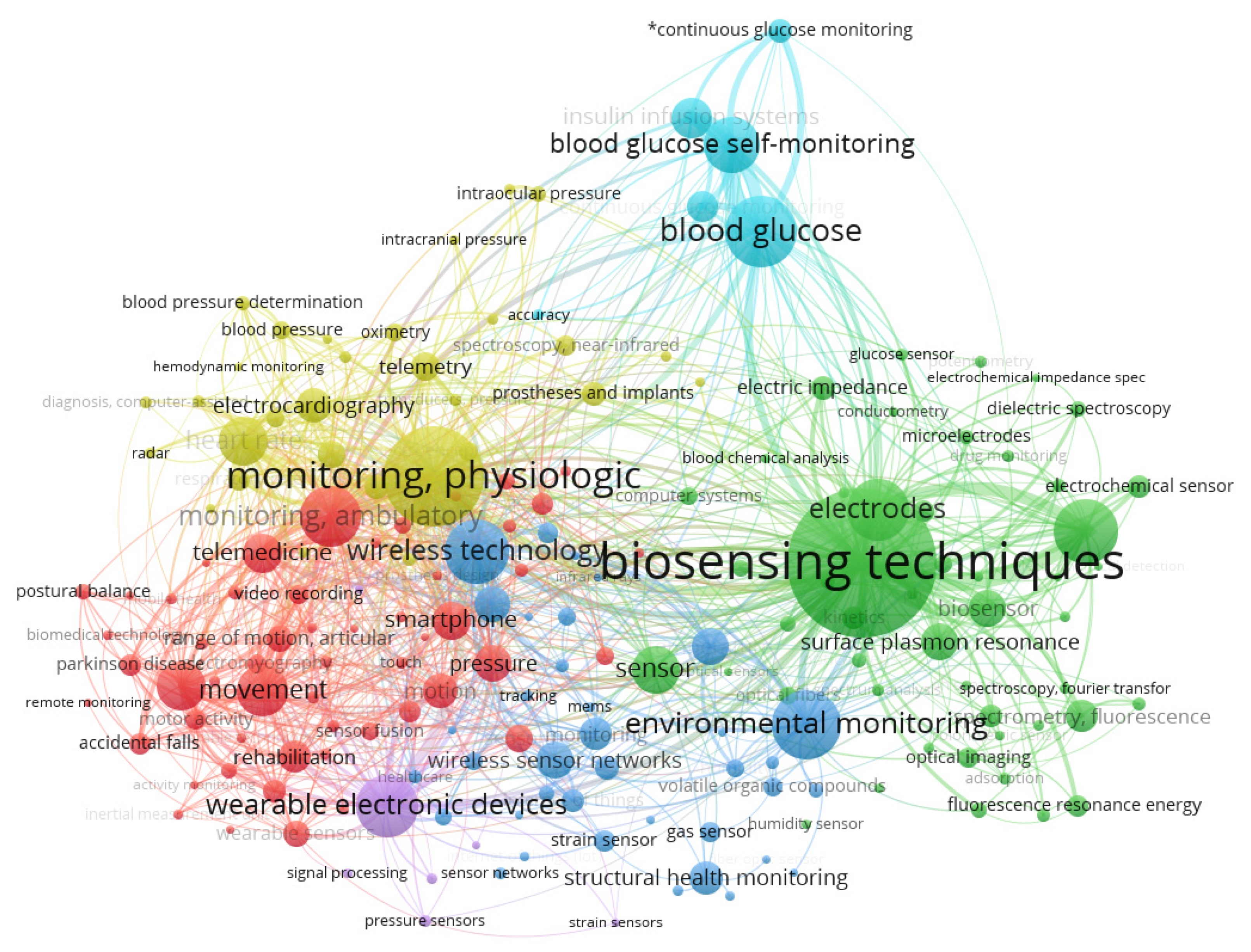

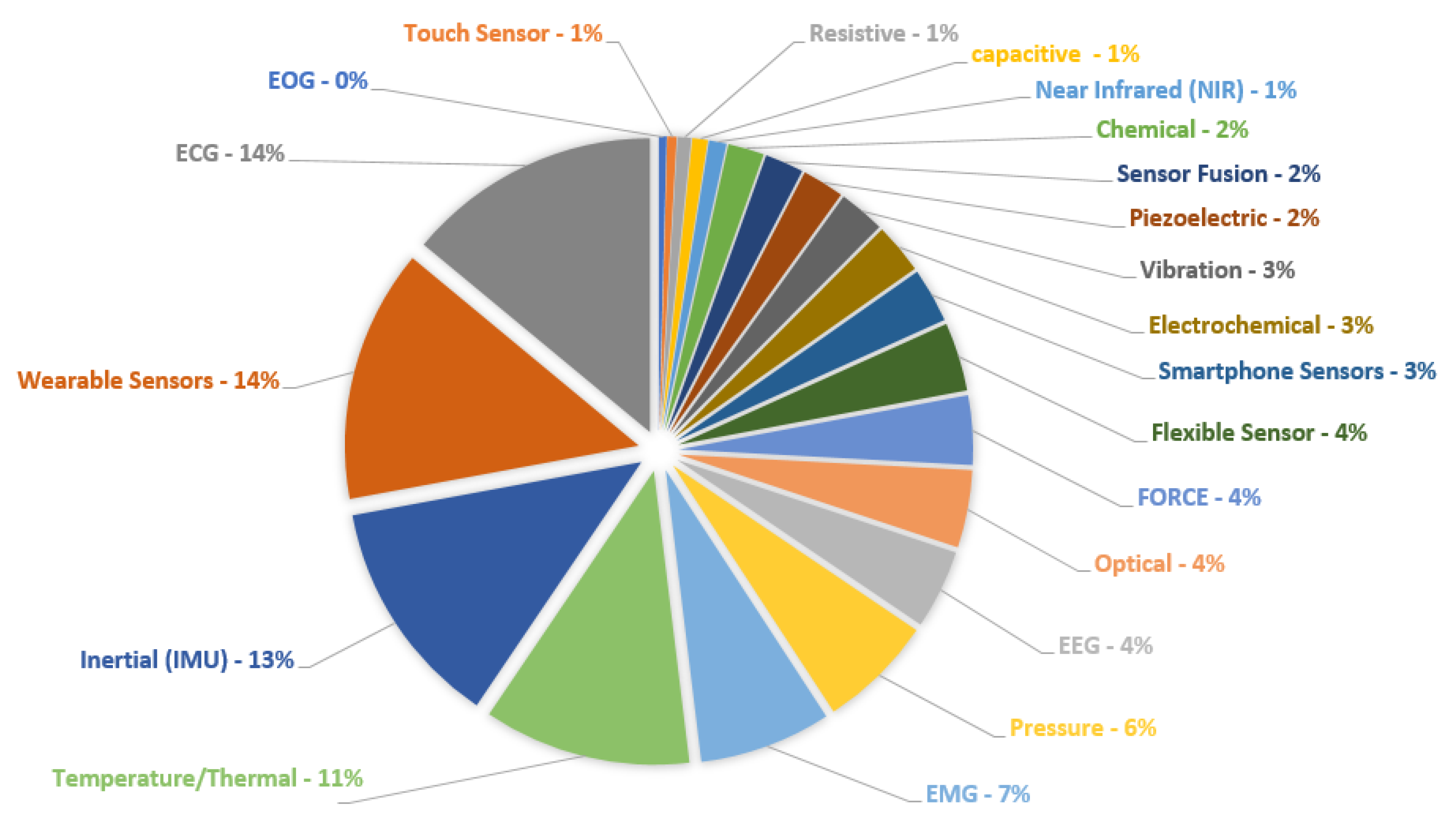

2. Methodology for This Review

3. Sensors and Systems for Rehabilitation and Health Monitoring

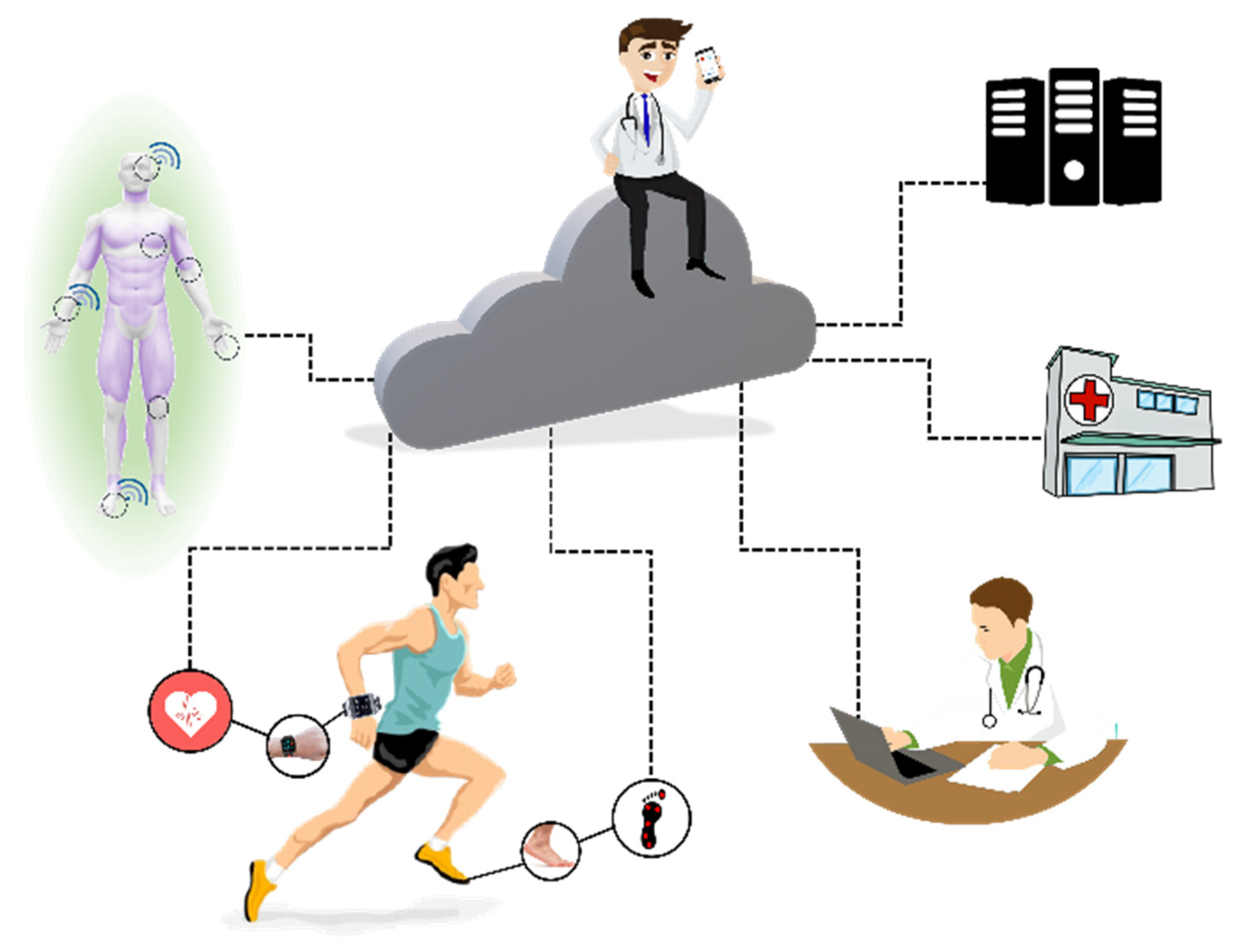

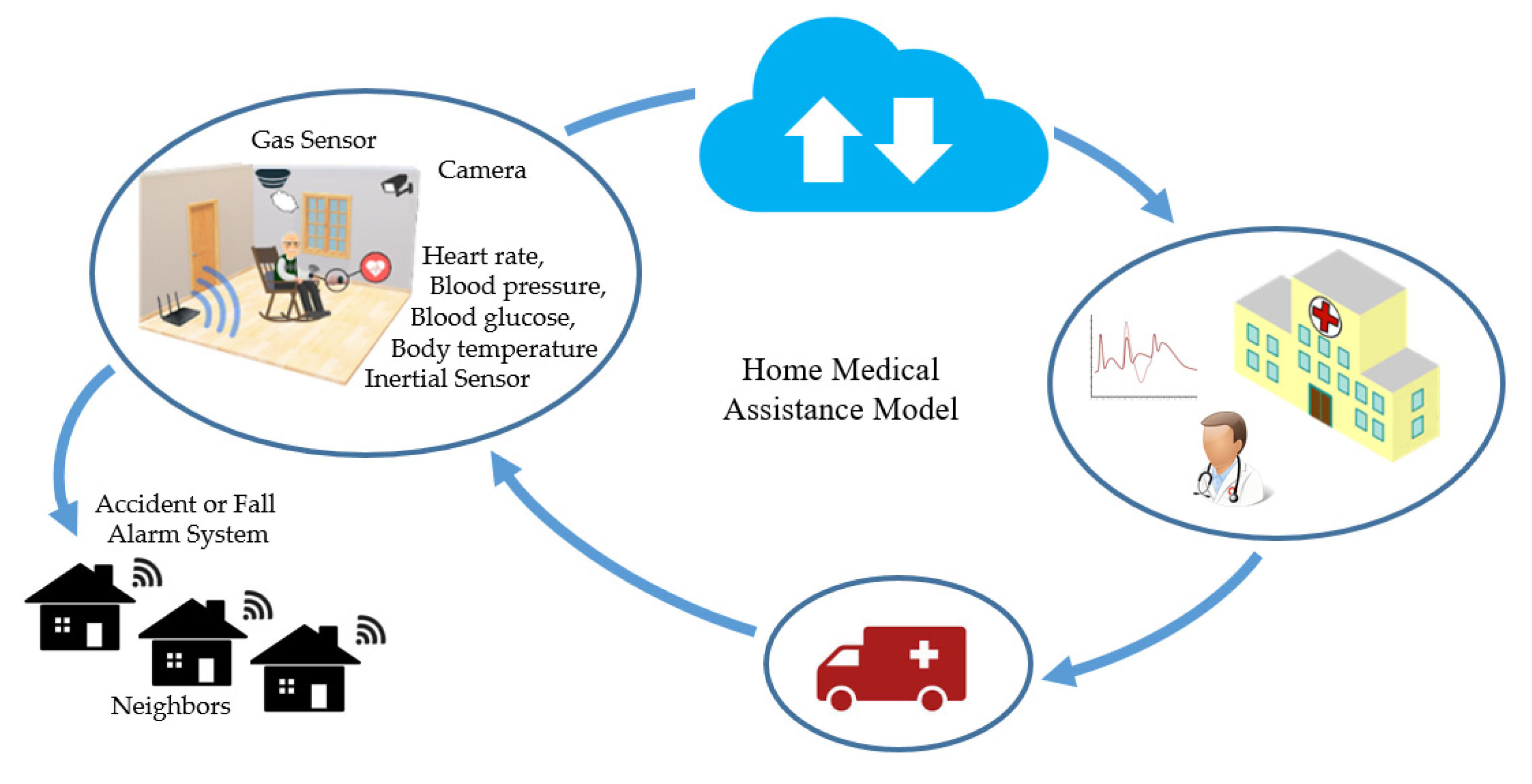

3.1. Sensors in Healthcare, Home Medical Assistance, and Continuous Health Monitoring

3.2. Systems and Sensors in Physical Rehabilitation

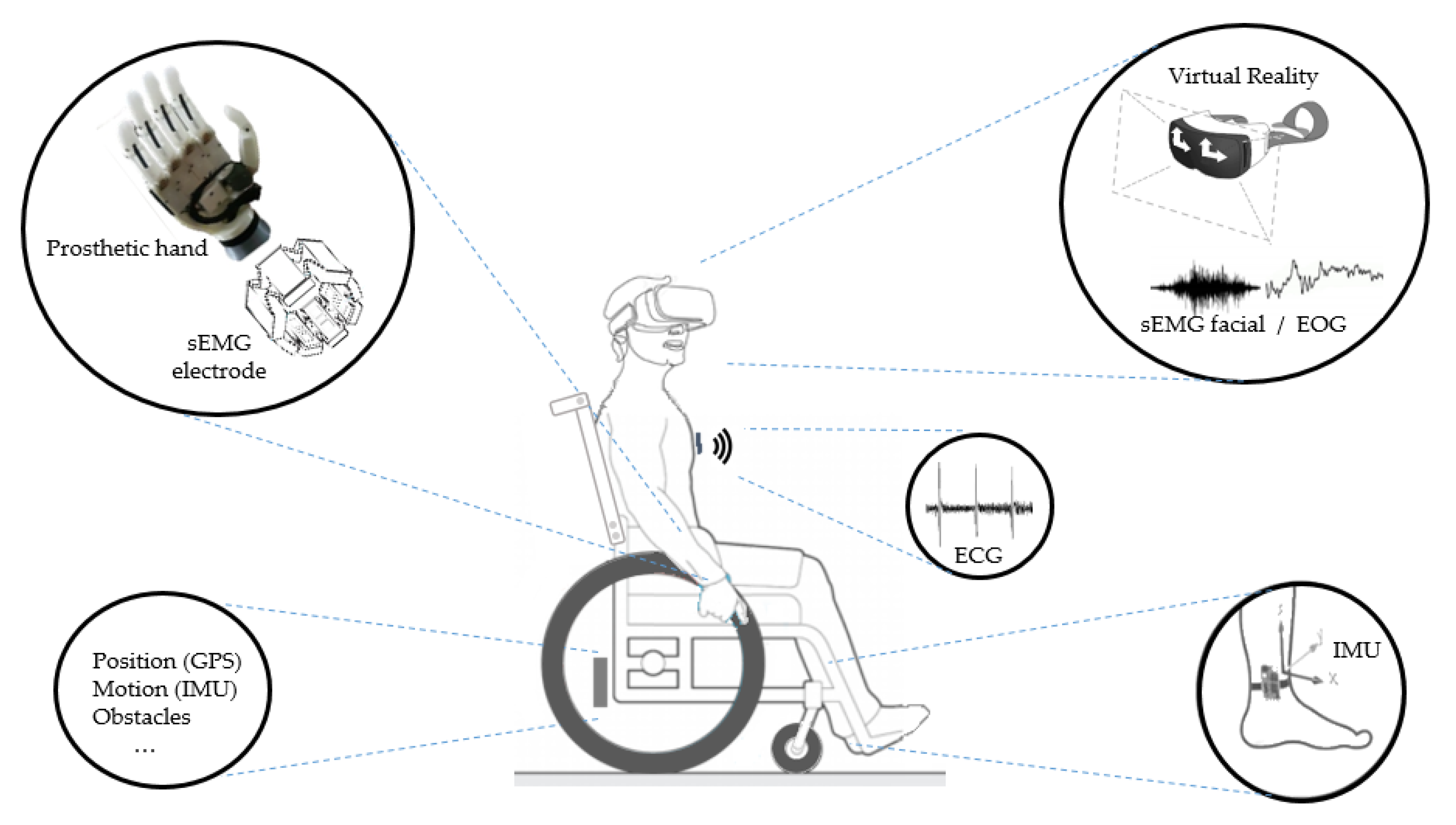

3.3. Assistive Systems

4. Discussion: Limitations and Perspectives

5. Final Considerations

Author Contributions

Funding

Conflicts of Interest

References

- Patel, S.; Park, H.; Bonato, P.; Chan, L.; Rodgers, M. A review of wearable sensors and systems with application in rehabilitation. J. Neuroeng. Rehabil. 2012, 9, 21.

- Tormene, P.; Bartolo, M.; De Nunzio, A.M.; Fecchio, F.; Quaglini, S.; Tassorelli, C.; Sandrini, G. Estimation of human trunk movements by wearable strain sensors and improvement of sensor’s placement on intelligent biomedical clothes. Biomed. Eng. Online 2012, 11, 95.

- Zhang, L.; Zhang, Y.; Tang, S.; Luo, H. Privacy Protection for E-Health Systems by Means of Dynamic Authentication and Three-Factor Key Agreement. IEEE Trans. Ind. Electron. 2018, 65, 2795–2805.

- Seshadri, D.R.; Drummond, C.; Craker, J.; Rowbottom, J.R.; Voos, J.E. Wearable Devices for Sports: New Integrated Technologies Allow Coaches, Physicians, and Trainers to Better Understand the Physical Demands of Athletes in Real time. IEEE Pulse 2017, 8, 38–43.

- Municio, E.; Daneels, G.; De Brouwer, M.; Ongenae, F.; De Turck, F.; Braem, B.; Famaey, J.; Latre, S. Continuous Athlete Monitoring in Challenging Cycling Environments Using IoT Technologies. IEEE Internet Things J. 2019, 6, 10875–10887.

- Rossi, M.; Rizzi, A.; Lorenzelli, L.; Brunelli, D. Remote rehabilitation monitoring with an IoT-enabled embedded system for precise progress tracking. In Proceedings of the 2016 IEEE International Conference on Electronics, Circuits and Systems (ICECS), Monte Carlo, Monaco, 11–14 December 2016; pp. 384–387.

- Capecci, M.; Ceravolo, M.G.; Ferracuti, F.; Iarlori, S.; Monteriu, A.; Romeo, L.; Verdini, F. The KIMORE Dataset: KInematic Assessment of MOvement and Clinical Scores for Remote Monitoring of Physical REhabilitation. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 1436–1448.

- Sannino, G.; De Falco, I.; Pietro, G.D. A Continuous Noninvasive Arterial Pressure (CNAP) Approach for Health 4.0 Systems. IEEE Trans. Ind. Inform. 2019, 15, 498–506.

- García, L.; Tomás, J.; Parra, L.; Lloret, J. An m-health application for cerebral stroke detection and monitoring using cloud services. Int. J. Inf. Manag. 2019, 45, 319–327.

- Thuemmler, C.; Bai, C. E-HEALTH 4.0: How Virtualization and Big Data are Revolutionizing; Springer: New York, NY, USA, 2017; ISBN 978-3-319-47617-9.

- Grigoriadis, N.; Bakirtzis, C.; Politis, C.; Danas, K.; Thuemmler, C. Health 4.0: The case of multiple sclerosis. In Proceedings of the 2016 IEEE 18th International Conference on E-Health Networking, Applications and Services (Healthcom), Munich, Germany, 14–16 September 2016; pp. 1–5.

- Sudana, D.; Emanuel, A.W.R. How Big Data in Health 4.0 Helps Prevent the Spread of Tuberculosis. In Proceedings of the 2019 2nd International Conference on Bioinformatics, Biotechnology and Biomedical Engineering (BioMIC) - Bioinformatics and Biomedical Engineering, Yogyakarta, Indonesia, 12–13 September 2019; pp. 1–6.

- Xu, C.; Chai, D.; He, J.; Zhang, X.; Duan, S. InnoHAR: A Deep Neural Network for Complex Human Activity Recognition. IEEE Access 2019, 7, 9893–9902.

- Evans, J.; Papadopoulos, A.; Silvers, C.T.; Charness, N.; Boot, W.R.; Schlachta-Fairchild, L.; Crump, C.; Martinez, M.; Ent, C.B. Remote Health Monitoring for Older Adults and Those with Heart Failure: Adherence and System Usability. Telemed. E Health 2016, 22, 480–488.

- Banerjee, A.; Chakraborty, C.; Kumar, A.; Biswas, D. Emerging trends in IoT and big data analytics for biomedical and health care technologies. In Handbook of Data Science Approaches for Biomedical Engineering; Elsevier: Amsterdam, The Netherlands, 2020; pp. 121–152. ISBN 978-0-12-818318-2.

- Li, C.; Un, K.-F.; Mak, P.; Chen, Y.; Munoz-Ferreras, J.-M.; Yang, Z.; Gomez-Garcia, R. Overview of Recent Development on Wireless Sensing Circuits and Systems for Healthcare and Biomedical Applications. IEEE J. Emerg. Sel. Top. Circuits Syst. 2018, 8, 165–177.

- Sweileh, W.M.; Al-Jabi, S.W.; AbuTaha, A.S.; Zyoud, S.H.; Anayah, F.M.A.; Sawalha, A.F. Bibliometric analysis of worldwide scientific literature in mobile-health: 2006–2016. BMC Med. Inf. Decis. Mak. 2017, 17, 72.

- van Eck, N.J.; Waltman, L. Software survey: VOSviewer, a computer program for bibliometric mapping. Scientometrics 2010, 84, 523–538.

- mHealth Market Size, Share & Industry Analysis, By Category (By Apps {Disease & Treatment Management, Wellness Management}, By Wearable {Body & Temperature Monitors, Glucose Monitors}) By Services Type (Monitoring Services, Diagnostic Services, Treatment Services) By Service Provider (mHealth App Companies, Hospitals, Health Insurance) and Regional Forecast, 2019–2026. Available online: https://www.fortunebusinessinsights.com/industry-reports/mhealth-market-100266 (accessed on 13 April 2020).

- Buke, A.; Gaoli, F.; Yongcai, W.; Lei, S.; Zhiqi, Y. Healthcare algorithms by wearable inertial sensors: A survey. China Commun. 2015, 12, 1–12.

- Nguyen Gia, T.; Jiang, M.; Sarker, V.K.; Rahmani, A.M.; Westerlund, T.; Liljeberg, P.; Tenhunen, H. Low-cost fog-assisted health-care IoT system with energy-efficient sensor nodes. In Proceedings of the 2017 13th International Wireless Communications and Mobile Computing Conference (IWCMC), Valencia, Spain, 26–30 June 2017; pp. 1765–1770.

- Ganesh, G.R.D.; Jaidurgamohan, K.; Srinu, V.; Kancharla, C.R.; Suresh, S.V.S. Design of a low cost smart chair for telemedicine and IoT based health monitoring: An open source technology to facilitate better healthcare. In Proceedings of the 2016 11th International Conference on Industrial and Information Systems (ICIIS), Roorkee, India, 3–4 December 2016; pp. 89–94.

- Jani, A.B.; Bagree, R.; Roy, A.K. Design of a low-power, low-cost ECG & EMG sensor for wearable biometric and medical application. In Proceedings of the 2017 IEEE SENSORS, Glasgow, Scotland, 29 October–1 November 2017; pp. 1–3.

- Sarkar, S.; Misra, S. From Micro to Nano: The Evolution of Wireless Sensor-Based Health Care. IEEE Pulse 2016, 7, 21–25.

- Vezocnik, M.; Juric, M.B. Average Step Length Estimation Models’ Evaluation Using Inertial Sensors: A Review. IEEE Sens. J. 2019, 19, 396–403.

- Li, H.; Shrestha, A.; Heidari, H.; Kernec, J.L.; Fioranelli, F. A Multisensory Approach for Remote Health Monitoring of Older People. IEEE J. Electromagn. RF Microw. Med. Biol. 2018, 2, 102–108.

- Dudak, P.; Sladek, I.; Dudak, J.; Sevidy, S. Application of inertial sensors for detecting movements of the human body. In Proceedings of the 2016 17th International Conference on Mechatronics - Mechatronika (ME), Prague, Czech Republic, 7–9 December 2016; ISBN 978-1-5090-1303-6.

- Chen, Y.; Shen, C. Performance Analysis of Smartphone-Sensor Behavior for Human Activity Recognition. IEEE Access 2017, 5, 3095–3110.

- Douma, J.A.J.; Verheul, H.M.W.; Buffart, L.M. Feasibility, validity and reliability of objective smartphone measurements of physical activity and fitness in patients with cancer. BMC Cancer 2018, 18, 1052.

- Syed, A.; Agasbal, Z.T.H.; Melligeri, T.; Gudur, B. Flex Sensor Based Robotic Arm Controller Using Micro Controller. J. Softw. Eng. Appl. 2012, 5, 364–366.

- Zhang, B.; Ren, J.; Cheng, Y.; Wang, B.; Wei, Z. Health Data Driven on Continuous Blood Pressure Prediction Based on Gradient Boosting Decision Tree Algorithm. IEEE Access 2019, 7, 32423–32433.

- Huang, C.; Gu, Y.; Chen, J.; Bahrani, A.A.; Abu Jawdeh, E.G.; Bada, H.S.; Saatman, K.; Yu, G.; Chen, L. A Wearable Fiberless Optical Sensor for Continuous Monitoring of Cerebral Blood Flow in Mice. IEEE J. Sel. Top. Quantum Electron. 2019, 25, 1–8.

- Meinel, L.; Findeisen, M.; Hes, M.; Apitzsch, A.; Hirtz, G. Automated real-time surveillance for ambient assisted living using an omnidirectional camera. In Proceedings of the 2014 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 10–13 January 2014; pp. 396–399.

- Zaric, N.; Djurisic, M.P. Low cost intelligent notification and alarming system for ambient assisted living applications. In Proceedings of the 2017 40th International Conference on Telecommunications and Signal Processing (TSP), Barcelona, Spain, 5–7 July 2017; pp. 259–262.

- Olivares, M.; Giroux, S.; De Loor, P.; Thépaut, A.; Pigot, H.; Pinard, S.; Bottari, C.; Le Dorze, G.; Bier, N. An ontology model for a context-aware preventive assistance system: Reducing exposition of individuals with Traumatic Brain Injury to dangerous situations during meal preparation. In Proceedings of the 2nd IET International Conference on Technologies for Active and Assisted Living (TechAAL 2016), London, UK, 24–25 October 2016; pp. 1–8.

- Hudec, M.; Smutny, Z. RUDO: A Home Ambient Intelligence System for Blind People. Sensors 2017, 17, 1926.

- Bicharra Garcia, A.C.; Vivacqua, A.S.; Sanchez-Pi, N.; Marti, L.; Molina, J.M. Crowd-Based Ambient Assisted Living to Monitor the Elderly’s Health Outdoors. IEEE Softw. 2017, 34, 53–57.

- Hussain, A.; Wenbi, R.; da Silva, A.L.; Nadher, M.; Mudhish, M. Health and emergency-care platform for the elderly and disabled people in the Smart City. J. Syst. Softw. 2015, 110, 253–263.

- Tabbakha, N.E.; Tan, W.-H.; Ooi, C.-P. Indoor location and motion tracking system for elderly assisted living home. In Proceedings of the 2017 International Conference on Robotics, Automation and Sciences (ICORAS), Melaka, Malaysia, 27–29 November 2017; pp. 1–4.

- Kantoch, E.; Augustyniak, P. Automatic Behavior Learning for Personalized Assisted Living Systems. In Proceedings of the 2013 12th International Conference on Machine Learning and Applications, Miami, FL, USA, 4–7 December 2013; pp. 428–431.

- Yang, G.; Xie, L.; Mantysalo, M.; Zhou, X.; Pang, Z.; Xu, L.D.; Kao-Walter, S.; Chen, Q.; Zheng, L.-R. A Health-IoT Platform Based on the Integration of Intelligent Packaging, Unobtrusive Bio-Sensor, and Intelligent Medicine Box. IEEE Trans. Ind. Inform. 2014, 10, 2180–2191.

- Chung, H.; Lee, H.; Kim, C.; Hong, S.; Lee, J. Patient-Provider Interaction System for Efficient Home-Based Cardiac Rehabilitation Exercise. IEEE Access 2019, 7, 14611–14622.

- Meng, K.; Chen, J.; Li, X.; Wu, Y.; Fan, W.; Zhou, Z.; He, Q.; Wang, X.; Fan, X.; Zhang, Y.; et al. Flexible Weaving Constructed Self-Powered Pressure Sensor Enabling Continuous Diagnosis of Cardiovascular Disease and Measurement of Cuffless Blood Pressure. Adv. Funct. Mater. 2018, 1806388.

- Al-khafajiy, M.; Baker, T.; Chalmers, C.; Asim, M.; Kolivand, H.; Fahim, M.; Waraich, A. Remote health monitoring of elderly through wearable sensors. Multimed. Tools Appl. 2019, 78, 24681–24706.

- Cajamarca, G.; Rodríguez, I.; Herskovic, V.; Campos, M.; Riofrío, J. StraightenUp+: Monitoring of Posture during Daily Activities for Older Persons Using Wearable Sensors. Sensors 2018, 18, 3409.

- Lee, C.M.; Park, J.; Park, S.; Kim, C.H. Fall-Detection Algorithm Using Plantar Pressure and Acceleration Data. Int. J. Precis. Eng. Manuf. 2020, 21, 725–737.

- Saadeh, W.; Butt, S.A.; Altaf, M.A.B. A Patient-Specific Single Sensor IoT-Based Wearable Fall Prediction and Detection System. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 995–1003.

- Wang, Y.; Cang, S.; Yu, H. A noncontact-sensor surveillance system towards assisting independent living for older people. In Proceedings of the 2017 23rd International Conference on Automation and Computing (ICAC), Huddersfield, UK, 7–8 September 2017; pp. 1–5.

- Salat, D.; Tolosa, T. Levodopa in the Treatment of Parkinson’s Disease: Current Status and New Developments. J. Parkinson’s Dis. 2013, 255–269.

- Chen, B.-H.; Patel, S.; Buckley, T.; Rednic, R.; McClure, D.J.; Shih, L.; Tarsy, D.; Welsh, M.; Bonato, P. A Web-Based System for Home Monitoring of Patients with Parkinson’s Disease Using Wearable Sensors. IEEE Trans. Biomed. Eng. 2011, 58, 831–836.

- Doorduin, J.; van Hees, H.W.H.; van der Hoeven, J.G.; Heunks, L.M.A. Monitoring of the Respiratory Muscles in the Critically Ill. Am. J. Respir. Crit. Care Med. 2013, 187, 20–27.

- Tey, C.-K.; An, J.; Chung, W.-Y. A Novel Remote Rehabilitation System with the Fusion of Noninvasive Wearable Device and Motion Sensing for Pulmonary Patients. Comput. Math. Methods Med. 2017, 2017, 1–8.

- Liu, Z.; Zhang, S.; Jin, Y.M.; Ouyang, H.; Zou, Y.; Wang, X.X.; Xie, L.X.; Li, Z. Flexible piezoelectric nanogenerator in wearable self-powered active sensor for respiration and healthcare monitoring. Semicond. Sci. Technol. 2017, 32, 064004.

- Cesareo, A.; Biffi, E.; Cuesta-Frau, D.; D’Angelo, M.G.; Aliverti, A. A novel acquisition platform for long-term breathing frequency monitoring based on inertial measurement units. Med. Biol. Eng. Comput. 2020, 58, 785–804.

- Siqueira Junior, A.L.D.; Spirandeli, A.F.; Moraes, R.; Zarzoso, V. Respiratory Waveform Estimation from Multiple Accelerometers: An Optimal Sensor Number and Placement Analysis. IEEE J. Biomed. Health Inform. 2018, 1507–1515.

- Dieffenderfer, J.; Goodell, H.; Mills, S.; McKnight, M.; Yao, S.; Lin, F.; Beppler, E.; Bent, B.; Lee, B.; Misra, V.; et al. Low-Power Wearable Systems for Continuous Monitoring of Environment and Health for Chronic Respiratory Disease. IEEE J. Biomed. Health Inform. 2016, 20, 1251–1264.

- Kabumoto, K.; Takatori, F.; Inoue, M. A novel mainstream capnometer system for endoscopy delivering oxygen. In Proceedings of the 2017 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Jeju Island, Korea, 11–15 July 2017; pp. 3433–3436.

- Yang, J.; Chen, B.; Zhou, J.; Lv, Z. A Low-Power and Portable Biomedical Device for Respiratory Monitoring with a Stable Power Source. Sensors 2015, 15, 19618–19632.

- Dan, G.; Zhao, J.; Chen, Z.; Yang, H.; Zhu, Z. A Novel Signal Acquisition System for Wearable Respiratory Monitoring. IEEE Access 2018, 6, 34365–34371.

- Formenti, F.; Farmery, A.D. Intravascular oxygen sensors with novel applications for bedside respiratory monitoring. Anaesthesia 2017, 72, 95–104.

- Bruen, D.; Delaney, C.; Florea, L.; Diamond, D. Glucose Sensing for Diabetes Monitoring: Recent Developments. Sensors 2017, 17, 1866.

- Wang, J. Electrochemical Glucose Biosensors. Chem. Rev. 2008, 108, 814–825.

- Rodrigues Barata, J.J.; Munoz, R.; De Carvalho Silva, R.D.; Rodrigues, J.J.P.C.; De Albuquerque, V.H.C. Internet of Things Based on Electronic and Mobile Health Systems for Blood Glucose Continuous Monitoring and Management. IEEE Access 2019, 7, 175116–175125.

- Lucisano, J.Y.; Routh, T.L.; Lin, J.T.; Gough, D.A. Glucose Monitoring in Individuals with Diabetes Using a Long-Term Implanted Sensor/Telemetry System and Model. IEEE Trans. Biomed. Eng. 2017, 64, 1982–1993.

- Xiao, Z.; Tan, X.; Chen, X.; Chen, S.; Zhang, Z.; Zhang, H.; Wang, J.; Huang, Y.; Zhang, P.; Zheng, L.; et al. An Implantable RFID Sensor Tag toward Continuous Glucose Monitoring. IEEE J. Biomed. Health Inform. 2015, 19, 910–919.

- Hina, A.; Nadeem, H.; Saadeh, W. A Single LED Photoplethysmography-Based Noninvasive Glucose Monitoring Prototype System. In Proceedings of the 2019 IEEE International Symposium on Circuits and Systems (ISCAS), Sapporo, Japan, 26–29 May 2019; pp. 1–5.

- Sreenivas, C.; Laha, S. Compact Continuous Non-Invasive Blood Glucose Monitoring using Bluetooth. In Proceedings of the 2019 IEEE Biomedical Circuits and Systems Conference (BioCAS), Nara, Japan, 17–19 October 2019; pp. 1–4.

- Xie, L.; Chen, P.; Chen, S.; Yu, K.; Sun, H. Low-Cost and Highly Sensitive Wearable Sensor Based on Napkin for Health Monitoring. Sensors 2019, 19, 3427.

- Gao, W.; Emaminejad, S.; Nyein, H.Y.Y.; Challa, S.; Chen, K.; Peck, A.; Fahad, H.M.; Ota, H.; Shiraki, H.; Kiriya, D.; et al. Fully integrated wearable sensor arrays for multiplexed in situ perspiration analysis. Nature 2016, 529, 509–514.

- Bandodkar, A.J.; You, J.-M.; Kim, N.-H.; Gu, Y.; Kumar, R.; Mohan, A.M.V.; Kurniawan, J.; Imani, S.; Nakagawa, T.; Parish, B.; et al. Soft, stretchable, high power density electronic skin-based biofuel cells for scavenging energy from human sweat. Energy Environ. Sci. 2017, 10, 1581–1589.

- Abellán-Llobregat, A.; Jeerapan, I.; Bandodkar, A.; Vidal, L.; Canals, A.; Wang, J.; Morallón, E. A stretchable and screen-printed electrochemical sensor for glucose determination in human perspiration. Biosens. Bioelectron. 2017, 91, 885–891.

- Sempionatto, J.R.; Nakagawa, T.; Pavinatto, A.; Mensah, S.T.; Imani, S.; Mercier, P.; Wang, J. Eyeglasses based wireless electrolyte and metabolite sensor platform. Lab Chip 2017, 17, 1834–1842.

- Parrilla, M.; Guinovart, T.; Ferré, J.; Blondeau, P.; Andrade, F.J. A Wearable Paper-Based Sweat Sensor for Human Perspiration Monitoring. Adv. Healthc. Mater. 2019, 8, e1900342.

- Yang, Y.; Song, Y.; Bo, X.; Min, J.; Pak, O.S.; Zhu, L.; Wang, M.; Tu, J.; Kogan, A.; Zhang, H.; et al. A laser-engraved wearable sensor for sensitive detection of uric acid and tyrosine in sweat. Nat. Biotechnol. 2020, 38, 217–224.

- Patel, S.; Hughes, R.; Hester, T.; Stein, J.; Akay, M.; Dy, J.G.; Bonato, P. A Novel Approach to Monitor Rehabilitation Outcomes in Stroke Survivors Using Wearable Technology. Proc. IEEE 2010, 98, 450–461.

- Ma, H.; Zhong, C.; Chen, B.; Chan, K.-M.; Liao, W.-H. User-Adaptive Assistance of Assistive Knee Braces for Gait Rehabilitation. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 1994–2005.

- Thakur, C.; Ogawa, K.; Tsuji, T.; Kurita, Y. Soft Wearable Augmented Walking Suit with Pneumatic Gel Muscles and Stance Phase Detection System to Assist Gait. IEEE Robot. Autom. Lett. 2018, 3, 4257–4264.

- Pereira, A.; Folgado, D.; Nunes, F.; Almeida, J.; Sousa, I. Using Inertial Sensors to Evaluate Exercise Correctness in Electromyography-based Home Rehabilitation Systems. In Proceedings of the 2019 IEEE International Symposium on Medical Measurements and Applications (MeMeA), Istanbul, Turkey, 26–28 June 2019; pp. 1–6.

- Liu, L.; Chen, X.; Lu, Z.; Cao, S.; Wu, D.; Zhang, X. Development of an EMG-ACC-Based Upper Limb Rehabilitation Training System. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 244–253.

- Mohamed Refai, M.I.; van Beijnum, B.-J.F.; Buurke, J.H.; Veltink, P.H. Gait and Dynamic Balance Sensing Using Wearable Foot Sensors. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 218–227.

- Ngamsuriyaroj, S.; Chira-Adisai, W.; Somnuk, S.; Leksunthorn, C.; Saiphim, K. Walking Gait Measurement and Analysis via Knee Angle Movement and Foot Plantar Pressures. In Proceedings of the 2018 15th International Joint Conference on Computer Science and Software Engineering (JCSSE), Nakhonpathom, Thailand, 11 July 2018; pp. 1–6.

- Eguchi, R.; Takahashi, M. Insole-Based Estimation of Vertical Ground Reaction Force Using One-Step Learning with Probabilistic Regression and Data Augmentation. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 1217–1225.

- Cui, C.; Bian, G.-B.; Hou, Z.-G.; Zhao, J.; Su, G.; Zhou, H.; Peng, L.; Wang, W. Simultaneous Recognition and Assessment of Post-Stroke Hemiparetic Gait by Fusing Kinematic, Kinetic, and Electrophysiological Data. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 856–864.

- Qiu, S.; Wang, Z.; Zhao, H.; Liu, L.; Jiang, Y. Using Body-Worn Sensors for Preliminary Rehabilitation Assessment in Stroke Victims with Gait Impairment. IEEE Access 2018, 6, 31249–31258.

- Lee, S.I.; Adans-Dester, C.P.; Grimaldi, M.; Dowling, A.V.; Horak, P.C.; Black-Schaffer, R.M.; Bonato, P.; Gwin, J.T. Enabling Stroke Rehabilitation in Home and Community Settings: A Wearable Sensor-Based Approach for Upper-Limb Motor Training. IEEE J. Transl. Eng. Health Med. 2018, 6, 1–11.

- Yang, K.; Zhang, X.; Zhang, Q.; Yin, G. Design on Weight Support System of Lower Limb Rehabilitation Robot. In Proceedings of the 2018 15th International Conference on Ubiquitous Robots (UR), Honolulu, HI, USA, 26 June 2018; pp. 309–314.

- Ghassemi, M.; Triandafilou, K.; Barry, A.; Stoykov, M.E.; Roth, E.; Mussa-Ivaldi, F.A.; Kamper, D.G.; Ranganathan, R. Development of an EMG-Controlled Serious Game for Rehabilitation. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 283–292.

- Lin, P.-J.; Chen, H.Y. Design and implement of a rehabilitation system with surface electromyography technology. In Proceedings of the 2018 IEEE International Conference on Applied System Invention (ICASI), Chiba, Japan, 13 April 2018; pp. 513–515.

- Pani, D.; Achilli, A.; Spanu, A.; Bonfiglio, A.; Gazzoni, M.; Botter, A. Validation of Polymer-Based Screen-Printed Textile Electrodes for Surface EMG Detection. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 1370–1377.

- Swee, S.K.; You, L.Z.; Hang, B.W.W.; Kiang, D.K.T. Development of rehabilitation system using virtual reality. In Proceedings of the 2017 International Conference on Robotics, Automation and Sciences (ICORAS), Melaka, Malaysia, 27 November 2017; pp. 1–6.

- Bur, J.W.; McNeill, M.D.J.; Charles, D.K.; Morrow, P.J.; Crosbie, J.H.; McDonough, S.M. Augmented Reality Games for Upper-Limb Stroke Rehabilitation. In Proceedings of the 2010 Second International Conference on Games and Virtual Worlds for Serious Applications, Braga, Portugal, 25 March 2010; pp. 75–78.

- Straudi, S.; Benedetti, M.G.; Venturini, E.; Manca, M.; Foti, C.; Basaglia, N. Does robot-assisted gait training ameliorate gait abnormalities in multiple sclerosis? A pilot randomized-control trial. NeuroRehabilitation 2013, 33, 555–563.

- Husemann, B.; Müller, F.; Krewer, C.; Heller, S.; Koenig, E. Effects of Locomotion Training with Assistance of a Robot-Driven Gait Orthosis in Hemiparetic Patients After Stroke: A Randomized Controlled Pilot Study. Stroke 2007, 38, 349–354.

- Berger, A.; Horst, F.; Müller, S.; Steinberg, F.; Doppelmayr, M. Current State and Future Prospects of EEG and fNIRS in Robot-Assisted Gait Rehabilitation: A Brief Review. Front. Hum. Neurosci. 2019, 13, 172.

- Shakti, D.; Mathew, L.; Kumar, N.; Kataria, C. Effectiveness of robo-assisted lower limb rehabilitation for spastic patients: A systematic review. Biosens. Bioelectron. 2018, 117, 403–415.

- Marchal-Crespo, L.; Riener, R. Robot-assisted gait training. In Rehabilitation Robotics; Elsevier: Amsterdam, The Netherlands, 2018; pp. 227–240. ISBN 978-0-12-811995-2.

- Hobbs, B.; Artemiadis, P. A Review of Robot-Assisted Lower-Limb Stroke Therapy: Unexplored Paths and Future Directions in Gait Rehabilitation. Front. Neurorobot. 2020, 14, 19.

- Garzotto, F.; Messina, N.; Matarazzo, V.; Moskwa, L.; Oliva, G.; Facchini, R. Empowering Interventions for Persons with Neurodevelopmental Disorders through Wearable Virtual Reality and Bio-sensors. In Proceedings of the Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems - CHI’18, Montreal, QC, Canada, 20 April 2018; pp. 1–6.

- Todd, C.J.; Hubner, P.P.; Hubner, P.; Schubert, M.C.; Migliaccio, A.A. StableEyes—A Portable Vestibular Rehabilitation Device. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 1223–1232.

- Tannous, H.; Istrate, D.; Perrochon, A.; Daviet, J.-C.; Benlarbi-Delai, A.; Sarrazin, J.; Ho Ba Tho, M.-C.; Dao, T.T. GAMEREHAB@HOME: A new engineering system using serious game and multi-sensor fusion for functional rehabilitation at home. IEEE Trans. Games 2020.

- Fanella, G.; De Salvo, G.; Frasca, V.; Calamani, M.; Onesti, E.; Ceccanti, M.; Cucchiella, C.; Barone, T.; Pozzilli, V.; Fiorini, I.; et al. Amyotrophic Lateral Sclerosis (ALS): Telemedicine system for home care and patient monitoring. Clin. Neurophysiol. 2019, 130, e9–e10.

- Graybill, P.; Kiani, M. Eyelid Drive System: An Assistive Technology Employing Inductive Sensing of Eyelid Movement. IEEE Trans. Biomed. Circuits Syst. 2018.

- Guy, V.; Soriani, M.-H.; Bruno, M.; Papadopoulo, T.; Desnuelle, C.; Clerc, M. Brain computer interface with the P300 speller: Usability for disabled people with amyotrophic lateral sclerosis. Ann. Phys. Rehabil. Med. 2018, 61, 5–11.

- Jakob, I.; Kollreider, A.; Germanotta, M.; Benetti, F.; Cruciani, A.; Padua, L.; Aprile, I. Robotic and Sensor Technology for Upper Limb Rehabilitation. PMR 2018, 10, S189–S197.

- Yap, H.K.; Ang, B.W.K.; Lim, J.H.; Goh, J.C.H.; Yeow, C.-H. A fabric-regulated soft robotic glove with user intent detection using EMG and RFID for hand assistive application. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16 May 2016; pp. 3537–3542.

- Ozioko, O.; Hersh, M.; Dahiya, R. Inductance-Based Flexible Pressure Sensor for Assistive Gloves. In Proceedings of the 2018 IEEE SENSORS, New Delhi, India, 28 October 2018; pp. 1–4.

- Pan, S.; Lv, H.; Duan, H.; Pang, G.; Yi, K.; Yang, G. A Sensor Glove for the Interaction with a Nursing-Care Assistive Robot. In Proceedings of the 2019 IEEE International Conference on Industrial Cyber Physical Systems (ICPS), Taipei, Taiwan, 6 May 2019; pp. 405–410.

- Han, J.; Shao, L.; Xu, D.; Shotton, J. Enhanced Computer Vision with Microsoft Kinect Sensor: A Review. IEEE Trans. Cybern. 2013, 43, 1318–1334.

- Zhang, H.; Chang, B.-C.; Rue, Y.-J.; Agrawal, S.K. Using the Motion of the Head-Neck as a Joystick for Orientation Control. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 236–243.

- Ju, J.; Shin, Y.; Kim, E. Vision based interface system for hands free control of an intelligent wheelchair. J. Neuroeng. Rehabil. 2009, 6, 33.

- Kim, E. Wheelchair Navigation System for Disabled and Elderly People. Sensors 2016, 16, 1806.

- Lamti, H.A.; Ben Khelifa, M.M.; Gorce, P.; Alimi, A.M. The use of brain and thought in service of handicap assistance: Wheelchair navigation. In Proceedings of the 2013 International Conference on Individual and Collective Behaviors in Robotics (ICBR), Sousse, Tunisia, 15 December 2013; pp. 80–85.

- Saravanakumar, D.; Reddy, R. A high performance asynchronous EOG speller system. Biomed. Signal Process. Control 2020, 59, 101898.

- Shao, L. Facial Movements Recognition Using Multichannel EMG Signals. In Proceedings of the 2019 IEEE Fourth International Conference on Data Science in Cyberspace (DSC), Hangzhou, China, 23 June 2019; pp. 561–566.

- Perdiz, J.; Pires, G.; Nunes, U.J. Emotional state detection based on EMG and EOG biosignals: A short survey. In Proceedings of the 2017 IEEE 5th Portuguese Meeting on Bioengineering (ENBENG), Coimbra, Portugal, 16 February 2017; pp. 1–4.

- Yu, Y.; Liu, Y.; Yin, E.; Jiang, J.; Zhou, Z.; Hu, D. An Asynchronous Hybrid Spelling Approach Based on EEG–EOG Signals for Chinese Character Input. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 1292–1302.

- Lau, B.T. Portable real time emotion detection system for the disabled. Expert Syst. Appl. 2010, 37, 6561–6566.

- Liu, R.; Salisbury, J.P.; Vahabzadeh, A.; Sahin, N.T. Feasibility of an Autism-Focused Augmented Reality Smartglasses System for Social Communication and Behavioral Coaching. Front. Pediatrics 2017, 5.

- Wang, F.; Mao, M.; Duan, L.; Huang, Y.; Li, Z.; Zhu, C. Intersession Instability in fNIRS-Based Emotion Recognition. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 1324–1333.

- Cio, Y.-S.L.-K.; Raison, M.; Leblond Menard, C.; Achiche, S. Proof of Concept of an Assistive Robotic Arm Control Using Artificial Stereovision and Eye-Tracking. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 2344–2352.

- Aymaz, S.; Cavdar, T. Ultrasonic Assistive Headset for visually impaired people. In Proceedings of the 2016 39th International Conference on Telecommunications and Signal Processing (TSP), Vienna, Austria, 27 June 2016; pp. 388–391.

- Jani, A.B.; Kotak, N.A.; Roy, A.K. Sensor Based Hand Gesture Recognition System for English Alphabets Used in Sign Language of Deaf-Mute People. In Proceedings of the 2018 IEEE SENSORS, New Delhi, India, 28 October 2018; pp. 1–4.

- Lee, B.G.; Lee, S.M. Smart Wearable Hand Device for Sign Language Interpretation System with Sensors Fusion. IEEE Sens. J. 2018, 18, 1224–1232.

- Galka, J.; Masior, M.; Zaborski, M.; Barczewska, K. Inertial Motion Sensing Glove for Sign Language Gesture Acquisition and Recognition. IEEE Sens. J. 2016, 16, 6310–6316.

- Mendes, J.J.A., Jr.; Freitas, M.L.B.; Stevan, S.L.; Pichorim, S.F. Recognition of Libras Static Alphabet with Myo™ and Multi-Layer Perceptron. In XXVI Brazilian Congress on Biomedical Engineering; Costa-Felix, R., Machado, J.C., Alvarenga, A.V., Eds.; Springer: Singapore, 2019; Volume 70/2, pp. 413–419. ISBN 9789811325168.

- Gupta, R. A Quantitative Performance Assessment of surface EMG and Accelerometer in Sign Language Recognition. In Proceedings of the 2019 9th Annual Information Technology, Electromechanical Engineering and Microelectronics Conference (IEMECON), Jaipur, India, 13 March 2019; pp. 242–246.

- Wu, J.; Sun, L.; Jafari, R. A Wearable System for Recognizing American Sign Language in Real-Time Using IMU and Surface EMG Sensors. IEEE J. Biomed. Health Inform. 2016, 20, 1281–1290.

- Roland, T.; Wimberger, K.; Amsuess, S.; Russold, M.F.; Baumgartner, W. An Insulated Flexible Sensor for Stable Electromyography Detection: Application to Prosthesis Control. Sensors 2019, 19, 961.

Sensors in Healthcare, Home Medical Assistance, and Continuous Health Monitoring

| Main Application | Sensors | References |
|---|---|---|
| Fall detection and posture monitoring for elderly people and patients with Parkinson’s disease | Inertial/plantar-pressure measurement unit | [45,46,47,50] |
| Assisted living for elderly people, patients with chronic disabilities, and impaired people | Wearable sensors: ECG/EEG/GPS/inertial/temperature/blood pressure | [37,38,40,42] |
| Assisted living for elderly people, patients with chronic disabilities, and impaired people | Ambient sensors: infrared/humidity/gas/light/temperature/camera/movement | [33,34,48] |
| Respiratory monitoring | Pressure transducer/infrared/piezoresistive pressure/pyroelectric/inertial/PPG | [56,58,59] |
| Blood monitoring | PPG/infrared/pressure/camera | [13,31,43] |
| Glucose monitoring | Chemical/glucose/PPG | [61,62,63,64,65,66,67] |
| Sweat monitoring | Metabolites/electrolytes/skin temperature/electrochemical/stick-on flexible sensor/eyeglasses | [69,70,71,72,73,74] |

Systems and Sensors in Physical Rehabilitation

| Main Application | Sensors | References |
|---|---|---|
| Gait analysis and foot pressure evaluation for patients with multiple sclerosis, Parkinson’s disease, or a history of stroke | Pressure/inertial/FRS force sensor/camera/EMG | [80,81,83,92] |
| Evaluation of rehabilitation exercises; assessing and increasing movement for patients with palsy, Parkinson’s disease, multiple sclerosis, stroke, or brain injury | Inertial/EMG/Kinect/Leap Motion sensor | [78,79,89] |
| Rehabilitation exercises analysis | Inertial/Kinect | [99,100] |
| Use of VR in rehabilitation | Kinect/Leap Motion sensor/force | [90,91,92,93] |

Assistive Systems

| Main Application | Sensors | References |
|---|---|---|
| Movement coding to control keyboards and displays for patients with ALS and people with upper- and lower-limb palsy | EEG/EOG/facial EMG/inertial | [103,113,114] |
| Control and implementation of tasks and human-machine interfaces such as wheelchairs, smart shoes, and robots | Inertial/flex sensor/camera/ultrasonic/EOG/EEG/Kinect/force/torque/FRS/infrared | [107,108,109,110,111,112,120,128] |
| Emotion recognition for patients with palsy or autism spectrum disorder | Camera/movement/sound/infrared | [115,117,118,119] |
| Gesture recognition to aid communication between deaf and hearing people | Flex sensor/inertial/EMG | [122,123,124,125,126,127] |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Nascimento, L.M.S.d.; Bonfati, L.V.; Freitas, M.L.B.; Mendes Junior, J.J.A.; Siqueira, H.V.; Stevan, S.L., Jr. Sensors and Systems for Physical Rehabilitation and Health Monitoring—A Review. Sensors 2020, 20, 4063. https://doi.org/10.3390/s20154063

Nascimento LMSd, Bonfati LV, Freitas MLB, Mendes Junior JJA, Siqueira HV, Stevan SL Jr. Sensors and Systems for Physical Rehabilitation and Health Monitoring—A Review. Sensors. 2020; 20(15):4063. https://doi.org/10.3390/s20154063

Chicago/Turabian Style: Nascimento, Lucas Medeiros Souza do, Lucas Vacilotto Bonfati, Melissa La Banca Freitas, José Jair Alves Mendes Junior, Hugo Valadares Siqueira, and Sergio Luiz Stevan, Jr. 2020. "Sensors and Systems for Physical Rehabilitation and Health Monitoring—A Review" Sensors 20, no. 15: 4063. https://doi.org/10.3390/s20154063

APA Style: Nascimento, L. M. S. d., Bonfati, L. V., Freitas, M. L. B., Mendes Junior, J. J. A., Siqueira, H. V., & Stevan, S. L., Jr. (2020). Sensors and Systems for Physical Rehabilitation and Health Monitoring—A Review. Sensors, 20(15), 4063. https://doi.org/10.3390/s20154063