Real-Time Object Tracking via Adaptive Correlation Filters

Abstract

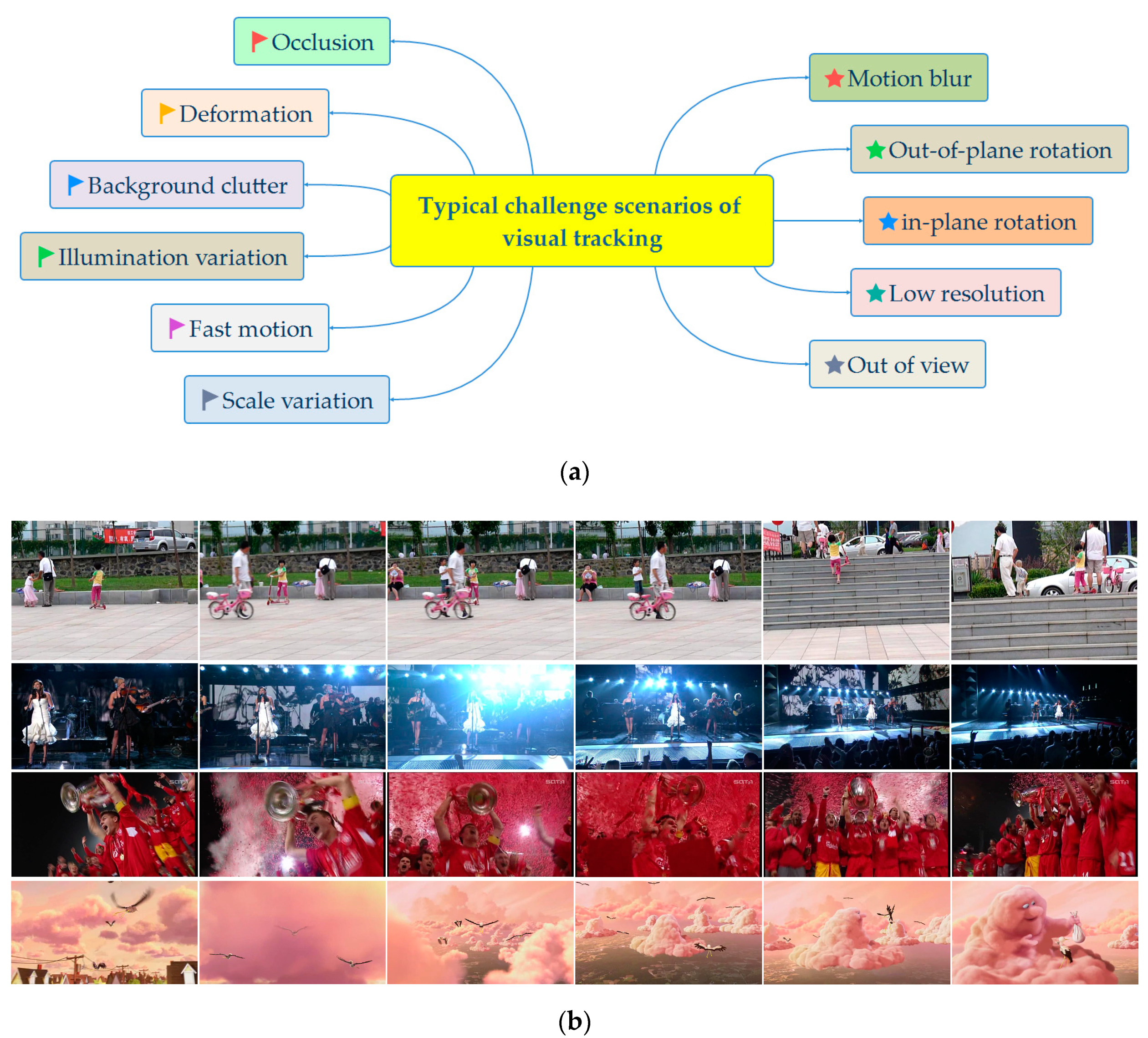

1. Introduction

- (1) To overcome the limitation of relying on a single filter template, a dual-template method is proposed to improve the robustness of the tracker;

- (2) To handle the various appearance variations in complicated, challenging scenarios, a discriminative appearance model, a multi-peak target re-detection technique, and a scale adaptive scheme are integrated into the tracker;

- (3) A high-confidence template updating technique is used to prevent the filter model from drifting or even becoming corrupted.

2. Related Work

2.1. The Early Object Tracking Algorithms

2.2. The CNN-Based Object Tracking Algorithms

2.3. The Correlation Filter-Based Object Tracking Algorithms

3. Kernel Correlation Filter Tracker

3.1. Ridge Regression and Utilization of Circulant Matrix
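
As a minimal sketch of the idea named in this heading, assuming the standard KCF formulation: when the training set consists of all cyclic shifts of one base sample x, the data matrix is circulant, so ridge regression can be solved with element-wise operations in the Fourier domain instead of an O(n³) matrix inverse. The 1-D NumPy code below is illustrative only; the function name `train_linear_cf`, the regularization value λ = 0.01, and the Gaussian label width are assumptions. Because the position of the complex conjugate depends on the shift direction and the DFT sign convention, the sketch checks itself against an explicit solve.

```python
import numpy as np

def train_linear_cf(x, y, lam):
    """Ridge regression over every cyclic shift of a 1-D base sample x.

    The data matrix has rows X[i] = np.roll(x, i) with regression target y[i].
    Because X is circulant, (X^T X + lam*I) w = X^T y becomes an element-wise
    equation in the Fourier domain (NumPy's DFT sign convention).
    """
    x_hat = np.fft.fft(x)
    y_hat = np.fft.fft(y)
    # O(n log n) element-wise solve instead of an O(n^3) matrix inversion.
    w_hat = x_hat * y_hat / (np.conj(x_hat) * x_hat + lam)
    return np.real(np.fft.ifft(w_hat))

# Compare against the explicit closed form w = (X^T X + lam*I)^{-1} X^T y.
rng = np.random.default_rng(0)
n, lam = 16, 1e-2
x = rng.standard_normal(n)
dist = np.minimum(np.arange(n), n - np.arange(n))  # cyclic distance from shift 0
y = np.exp(-0.5 * (dist / 2.0) ** 2)               # Gaussian label peaked at shift 0
X = np.stack([np.roll(x, i) for i in range(n)])
w_direct = np.linalg.solve(X.T @ X + lam * np.eye(n), X.T @ y)
w_fft = train_linear_cf(x, y, lam)
print(np.allclose(w_fft, w_direct))  # True
```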

3.2. Kernel Trick
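
A minimal sketch of the kernel trick for this setting, assuming a Gaussian kernel κ(a, b) = exp(−‖a − b‖²/σ²): the kernel matrix over all cyclic shifts of x is itself circulant, so the dual coefficients of kernel ridge regression follow from α̂ = ŷ / (k̂^{xx} + λ), and the kernel correlation k^{xx} needs only one FFT-based cross-correlation. `gaussian_correlation`, `train_kernel_cf`, σ = 4, and λ = 0.01 are illustrative choices, not values from the paper; the code compares the Fourier-domain result with a direct solve on the explicit kernel matrix.

```python
import numpy as np

def gaussian_correlation(a, b, sigma):
    """Return g with g[m] = exp(-||roll(a, m) - b||^2 / sigma^2) for every cyclic shift m.

    All n inner products <roll(a, m), b> come from one FFT-based cross-correlation.
    """
    cross = np.real(np.fft.ifft(np.conj(np.fft.fft(a)) * np.fft.fft(b)))
    sq_dist = np.maximum(a @ a + b @ b - 2.0 * cross, 0.0)  # clip tiny negative round-off
    return np.exp(-sq_dist / sigma**2)

def train_kernel_cf(x, y, sigma, lam):
    """Dual coefficients of kernel ridge regression over all cyclic shifts of x."""
    k_xx = gaussian_correlation(x, x, sigma)
    return np.fft.fft(y) / (np.fft.fft(k_xx) + lam)  # kept in the Fourier domain

# Compare with alpha = (K + lam*I)^{-1} y using the explicit n-by-n kernel matrix.
rng = np.random.default_rng(1)
n, sigma, lam = 16, 4.0, 1e-2
x = rng.standard_normal(n)
dist = np.minimum(np.arange(n), n - np.arange(n))
y = np.exp(-0.5 * (dist / 2.0) ** 2)
shifts = [np.roll(x, i) for i in range(n)]
K = np.array([[np.exp(-np.sum((si - sj) ** 2) / sigma**2) for sj in shifts] for si in shifts])
alpha_direct = np.linalg.solve(K + lam * np.eye(n), y)
alpha_fft = np.real(np.fft.ifft(train_kernel_cf(x, y, sigma, lam)))
print(np.allclose(alpha_fft, alpha_direct))  # True
```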

3.3. Fast Target Detection and Model Update
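
A minimal 1-D sketch of detection and model update, assuming the Gaussian-kernel KCF formulation: the trained filter is evaluated on every cyclic shift of a new patch z with one FFT product, the peak of the response gives the displacement, and the template and dual coefficients are then blended by linear interpolation. The helper names, the learning rate η = 0.02, and the sign convention used to turn the peak index into a shift are assumptions made for this illustration.

```python
import numpy as np

def gaussian_correlation(a, b, sigma):
    """g[m] = exp(-||roll(a, m) - b||^2 / sigma^2) for every cyclic shift m, via one FFT."""
    cross = np.real(np.fft.ifft(np.conj(np.fft.fft(a)) * np.fft.fft(b)))
    return np.exp(-np.maximum(a @ a + b @ b - 2.0 * cross, 0.0) / sigma**2)

def train(x, y, sigma, lam):
    """Fourier-domain dual coefficients: alpha_hat = y_hat / (k_hat^{xx} + lam)."""
    return np.fft.fft(y) / (np.fft.fft(gaussian_correlation(x, x, sigma)) + lam)

def detect(alpha_hat, x_model, z, sigma):
    """Score every cyclic shift of patch z with a single FFT product.

    response[m] scores the candidate np.roll(z, m). If z is the template moved
    right by s samples, np.roll(z, -s) realigns it, so the peak lies at -s mod n.
    """
    k_zx = gaussian_correlation(z, x_model, sigma)
    response = np.real(np.fft.ifft(np.fft.fft(k_zx) * alpha_hat))
    n = len(z)
    shift = (-int(np.argmax(response))) % n
    if shift > n // 2:  # express the displacement as a signed offset
        shift -= n
    return shift, response

def update(old, new, eta):
    """Linear-interpolation model update with learning rate eta."""
    return (1.0 - eta) * old + eta * new

rng = np.random.default_rng(2)
n, sigma, lam, eta = 32, 6.0, 1e-4, 0.02
x = rng.standard_normal(n)
dist = np.minimum(np.arange(n), n - np.arange(n))
y = np.exp(-0.5 * (dist / 2.0) ** 2)
alpha_hat = train(x, y, sigma, lam)

z = np.roll(x, 3)  # the target has moved 3 samples to the right
shift, response = detect(alpha_hat, x, z, sigma)
print(shift)  # 3

# Re-centre on the new position (a stand-in for re-cropping) and update both models.
new_patch = np.roll(z, -shift)
x_model = update(x, new_patch, eta)
alpha_hat = update(alpha_hat, train(new_patch, y, sigma, lam), eta)
```

Keeping α̂ in the Fourier domain means each frame costs only a few FFTs, which is the source of the speed usually attributed to correlation-filter trackers.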

4. The Proposed Tracker

4.1. The Framework of the Proposed Approach

4.2. Specific Solution

4.2.1. A Dual-Template Strategy

4.2.2. A Discriminative Appearance Model

4.2.3. A Multi-Peaks Target Re-Detection Technique
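
A minimal sketch of multi-peak candidate extraction, under the assumption (ours, not necessarily the authors' rule) that every local maximum of the response map above a fixed fraction of the global maximum is treated as a possible target location, to be re-scored before the final position is chosen. `find_peaks_2d`, `ratio`, and `max_peaks` are hypothetical names and parameters.

```python
import numpy as np

def find_peaks_2d(response, ratio=0.5, max_peaks=5):
    """Return up to max_peaks (row, col, value) local maxima, strongest first.

    A cell counts as a local maximum if it is >= all 8 neighbours; borders wrap
    around, matching the cyclic response of a correlation filter. Only peaks at
    or above ratio * global maximum are kept.
    """
    neighbours = [np.roll(response, (dr, dc), axis=(0, 1))
                  for dr in (-1, 0, 1) for dc in (-1, 0, 1) if (dr, dc) != (0, 0)]
    is_local_max = np.all([response >= nb for nb in neighbours], axis=0)
    rows, cols = np.nonzero(is_local_max & (response >= ratio * response.max()))
    values = response[rows, cols]
    order = np.argsort(values)[::-1][:max_peaks]
    return [(int(rows[k]), int(cols[k]), float(values[k])) for k in order]

# Toy response map: the true target at (10, 10) plus a weaker distractor at (25, 5).
rr, cc = np.mgrid[0:32, 0:32]

def bump(r0, c0, amp, s=2.0):
    return amp * np.exp(-((rr - r0) ** 2 + (cc - c0) ** 2) / (2 * s ** 2))

response = bump(10, 10, 1.0) + bump(25, 5, 0.7)
peaks = find_peaks_2d(response, ratio=0.5)
print([(r, c) for r, c, _ in peaks])  # [(10, 10), (25, 5)]
```

Returning several candidates rather than only the arg-max lets a tracker reconsider a location when a distractor briefly produces the strongest response; how the candidates are re-scored is left open in this sketch.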

4.2.4. A Scale Adaptive Scheme
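
One common scale-adaptive strategy, used for instance by SAMF (one of the compared trackers), searches a small pool of scale factors around the previous target size and keeps the factor with the strongest response. The 1-D sketch below only illustrates that idea and is not the paper's scheme: it uses cosine similarity as a stand-in for the filter's peak response, and `profile`, `best_scale`, and the scale pool are hypothetical.

```python
import numpy as np

def profile(u):
    """Toy 1-D target appearance (hypothetical): a windowed oscillation."""
    return np.exp(-u**2 / 32.0) * np.cos(u)

def best_scale(frame_u, frame_vals, template, template_u, scale_pool):
    """Resample the search window at each candidate scale and keep the best match.

    For scale s the window spans s times the template extent; np.interp resamples
    it back to the template's sample count so the two can be compared directly.
    """
    scores = []
    for s in scale_pool:
        patch = np.interp(s * template_u, frame_u, frame_vals)
        scores.append(patch @ template / (np.linalg.norm(patch) * np.linalg.norm(template)))
    return scale_pool[int(np.argmax(scores))], scores

template_u = np.linspace(-16, 16, 65)
template = profile(template_u)
frame_u = np.arange(-40, 40, 0.05)
frame_vals = profile(frame_u / 1.1)  # the target now appears 10% larger
pool = [0.9, 0.95, 1.0, 1.05, 1.1, 1.15]
s, scores = best_scale(frame_u, frame_vals, template, template_u, pool)
print(s)  # 1.1
```

In a tracker, the selected factor would multiply the current target size before the next frame; a finer pool trades extra evaluations for smoother size estimates.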

4.2.5. A High-Confidence Template Updating Technique
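
One widely used high-confidence criterion is the average peak-to-correlation energy (APCE) introduced with the large-margin correlation tracker LMCF (Wang et al., CVPR 2017): APCE = |F_max − F_min|² / mean((F_{w,h} − F_min)²), and the model is updated only when both the peak F_max and the APCE exceed fixed fractions of their historical means. Whether this paper uses exactly this rule is an assumption; the ratios 0.7 and 0.45, the class name `ConfidenceGate`, and the choice to record only accepted frames in the history are placeholders for illustration.

```python
import numpy as np

def apce(response):
    """Average peak-to-correlation energy of a response map."""
    f_max, f_min = response.max(), response.min()
    return (f_max - f_min) ** 2 / np.mean((response - f_min) ** 2)

class ConfidenceGate:
    """Allow a model update only when the peak and the APCE both exceed a fraction
    of their running means (placeholder ratios beta_peak and beta_apce)."""

    def __init__(self, beta_peak=0.7, beta_apce=0.45):
        self.beta_peak, self.beta_apce = beta_peak, beta_apce
        self.peaks, self.apces = [], []

    def should_update(self, response):
        peak, score = float(response.max()), float(apce(response))
        if not self.peaks:  # first frame: always accept
            ok = True
        else:
            ok = (peak >= self.beta_peak * np.mean(self.peaks)
                  and score >= self.beta_apce * np.mean(self.apces))
        if ok:  # in this sketch the history records confident frames only
            self.peaks.append(peak)
            self.apces.append(score)
        return ok

rr, cc = np.mgrid[0:32, 0:32]
sharp = np.exp(-((rr - 16) ** 2 + (cc - 16) ** 2) / 8.0)  # single clean peak
noisy = 0.4 * sharp + 0.3 * np.exp(-((rr - 5) ** 2 + (cc - 25) ** 2) / 8.0) + 0.05
gate = ConfidenceGate()
decisions = [gate.should_update(m) for m in (sharp, sharp, noisy)]
print(decisions)  # [True, True, False]
```

A weak or multi-modal response, as in occlusion, lowers both indicators, so skipping the update in such frames keeps the template from absorbing background.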

5. Experimental Results and Analysis

5.1. Experiment Setup

5.2. Compared Trackers

5.3. Experimental Results on the OTB2013 and OTB2015 Benchmark Databases

5.3.1. The OTB2013 and OTB2015 Benchmark Databases

5.3.2. Overall Performance Evaluation

5.3.3. Attribute-Based Evaluation

5.3.4. Qualitative Comparison

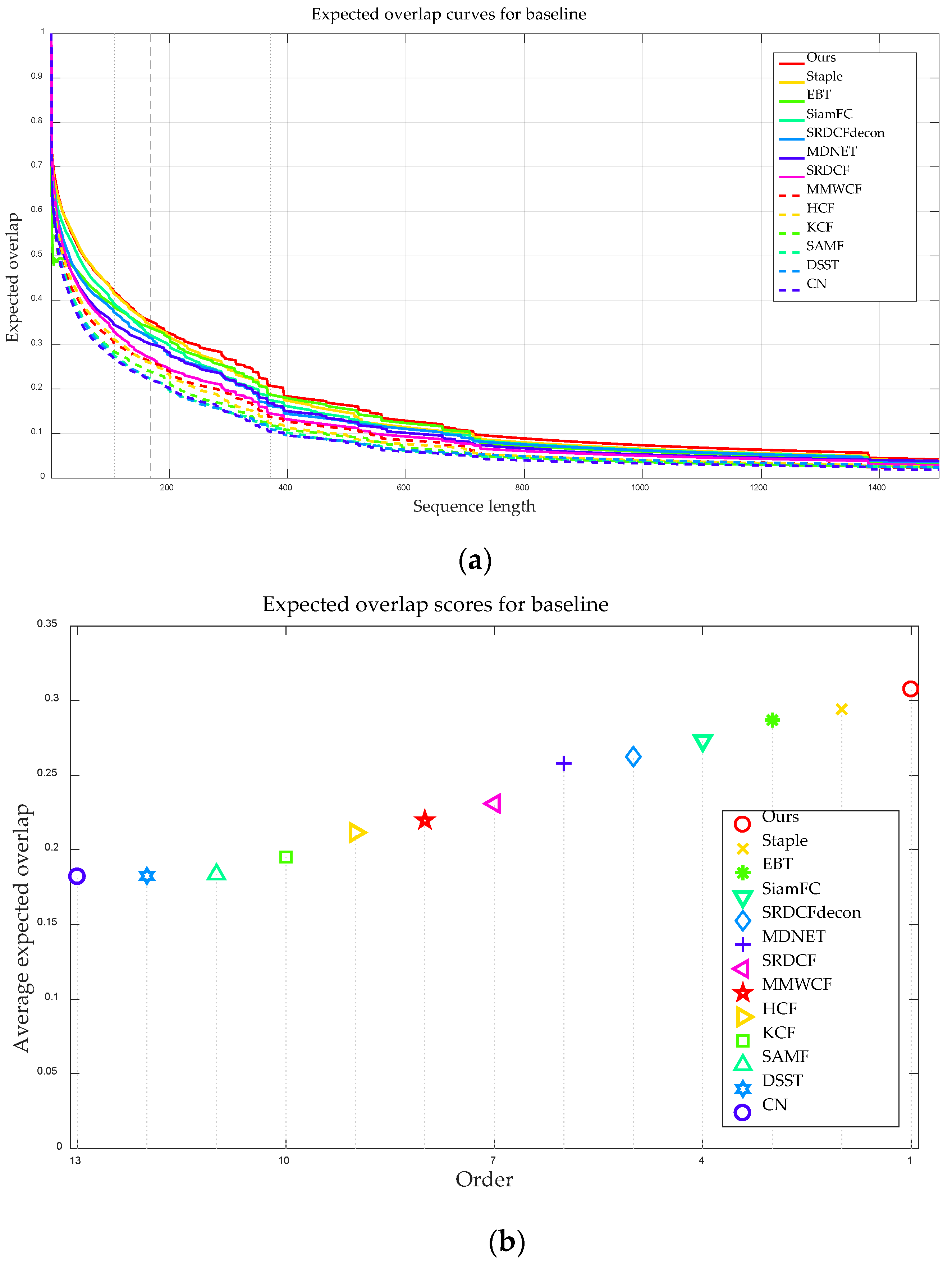

5.4. Experimental Results on the VOT2016 Benchmark Dataset

5.4.1. The VOT2016 Benchmark Database

5.4.2. Quantitative and Qualitative Comparison

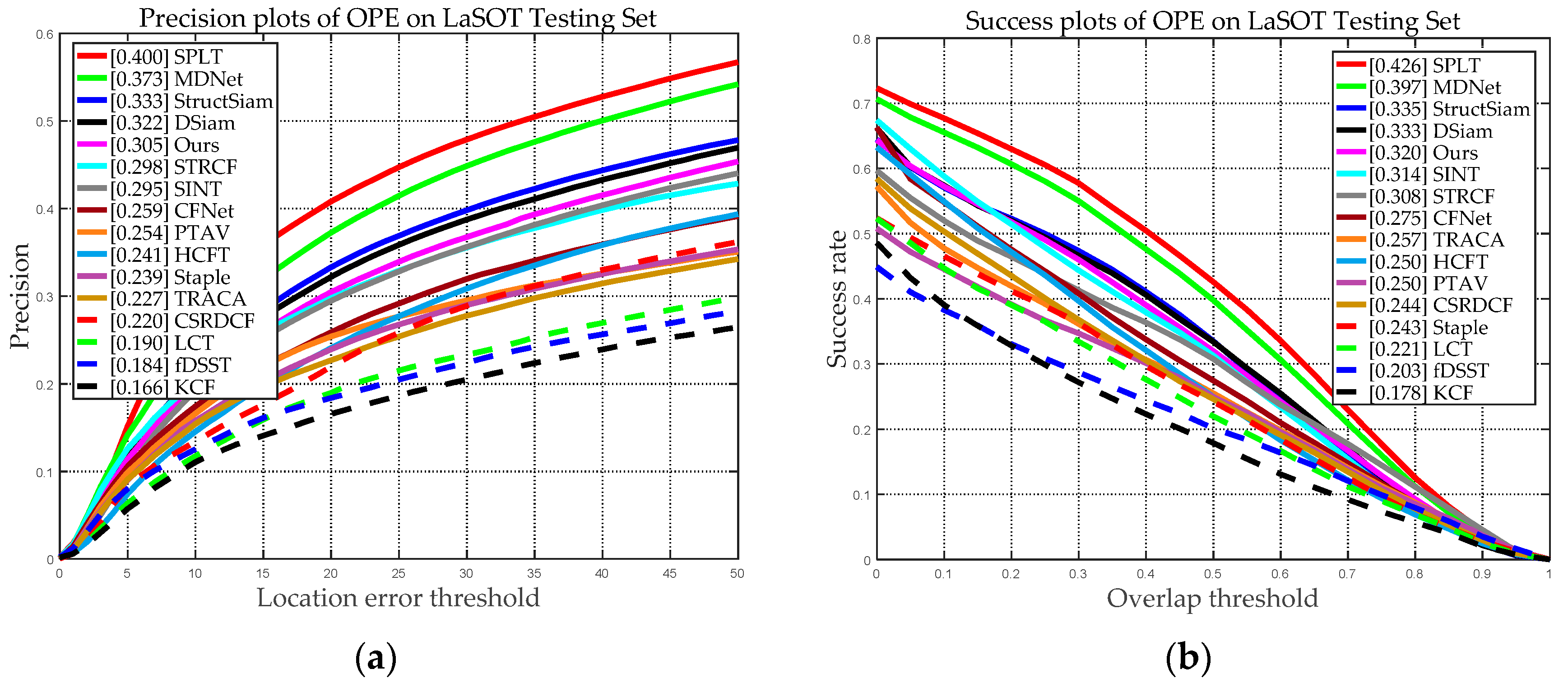

5.5. Experimental Results on the LaSOT Benchmark Dataset

6. Conclusions and Discussion

Author Contributions

Funding

Conflicts of Interest


| | Ours | ECO | CCOT | HCF | fDSST | SRDCFdecon | Staple | AO-CF | SAMF |
|---|---|---|---|---|---|---|---|---|---|
| Avg. FPS | 21.536 | 1.271 | 0.524 | 0.740 | 71.079 | 2.203 | 83.484 | 52.107 | 18.469 |

| | Ours | ECO | CCOT | HCF | fDSST | SRDCFdecon | Staple | AO-CF | SAMF |
|---|---|---|---|---|---|---|---|---|---|
| Avg. FPS | 20.103 | 1.245 | 0.407 | 0.720 | 66.353 | 2.195 | 78.356 | 48.936 | 16.120 |

| | Ours | SiamFC | EBT | MDNET | HCF | SRDCFdecon | Staple | SRDCF |
|---|---|---|---|---|---|---|---|---|
| EAO | 0.308 | 0.277 | 0.290 | 0.258 | 0.220 | 0.262 | 0.294 | 0.231 |

| | Ours | SiamFC | EBT | MDNET | HCF | SRDCFdecon | Staple | SRDCF |
|---|---|---|---|---|---|---|---|---|
| Camera motion | 0.560 | 0.563 | 0.491 | 0.547 | 0.438 | 0.530 | 0.551 | 0.551 |
| Illumination change | 0.718 | 0.672 | 0.407 | 0.639 | 0.462 | 0.714 | 0.709 | 0.680 |
| Motion change | 0.528 | 0.530 | 0.439 | 0.508 | 0.423 | 0.466 | 0.507 | 0.486 |
| Occlusion | 0.499 | 0.448 | 0.375 | 0.491 | 0.433 | 0.434 | 0.433 | 0.408 |
| Size change | 0.528 | 0.514 | 0.356 | 0.511 | 0.354 | 0.490 | 0.511 | 0.478 |
| Empty | 0.597 | 0.586 | 0.518 | 0.563 | 0.502 | 0.524 | 0.584 | 0.580 |
| Mean accuracy | 0.572 | 0.552 | 0.431 | 0.543 | 0.435 | 0.526 | 0.549 | 0.530 |
| Weighted mean accuracy | 0.563 | 0.549 | 0.453 | 0.537 | 0.437 | 0.509 | 0.540 | 0.523 |
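
As a quick consistency check on the per-attribute accuracy table above (the numbers are copied from it), the "Mean accuracy" row matches the unweighted average of the six attribute rows to within rounding at the third decimal. The "Weighted mean accuracy" row cannot be recomputed this way because its weights are not listed here.

```python
import numpy as np

trackers = ["Ours", "SiamFC", "EBT", "MDNET", "HCF", "SRDCFdecon", "Staple", "SRDCF"]
attribute_acc = np.array([
    [0.560, 0.563, 0.491, 0.547, 0.438, 0.530, 0.551, 0.551],  # Camera motion
    [0.718, 0.672, 0.407, 0.639, 0.462, 0.714, 0.709, 0.680],  # Illumination change
    [0.528, 0.530, 0.439, 0.508, 0.423, 0.466, 0.507, 0.486],  # Motion change
    [0.499, 0.448, 0.375, 0.491, 0.433, 0.434, 0.433, 0.408],  # Occlusion
    [0.528, 0.514, 0.356, 0.511, 0.354, 0.490, 0.511, 0.478],  # Size change
    [0.597, 0.586, 0.518, 0.563, 0.502, 0.524, 0.584, 0.580],  # Empty
])
reported_mean = np.array([0.572, 0.552, 0.431, 0.543, 0.435, 0.526, 0.549, 0.530])

recomputed = attribute_acc.mean(axis=0)
# Every recomputed mean lies within half a unit of the third decimal of the reported value.
print(np.all(np.abs(recomputed - reported_mean) <= 0.0005 + 1e-9))  # True
print(trackers[int(np.argmax(recomputed))])  # Ours
```

The same arrays also show that the proposed tracker has the highest mean accuracy overall, while SiamFC is slightly ahead on the camera motion and motion change attributes.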

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Du, C.; Lan, M.; Gao, M.; Dong, Z.; Yu, H.; He, Z. Real-Time Object Tracking via Adaptive Correlation Filters. Sensors 2020, 20, 4124. https://doi.org/10.3390/s20154124


Chicago/Turabian style: Du, Chenjie, Mengyang Lan, Mingyu Gao, Zhekang Dong, Haibin Yu, and Zhiwei He. 2020. "Real-Time Object Tracking via Adaptive Correlation Filters" Sensors 20, no. 15: 4124. https://doi.org/10.3390/s20154124