A Coarse-to-Fine Framework for Multiple Pedestrian Crossing Detection

Abstract

1. Introduction

2. Related Work

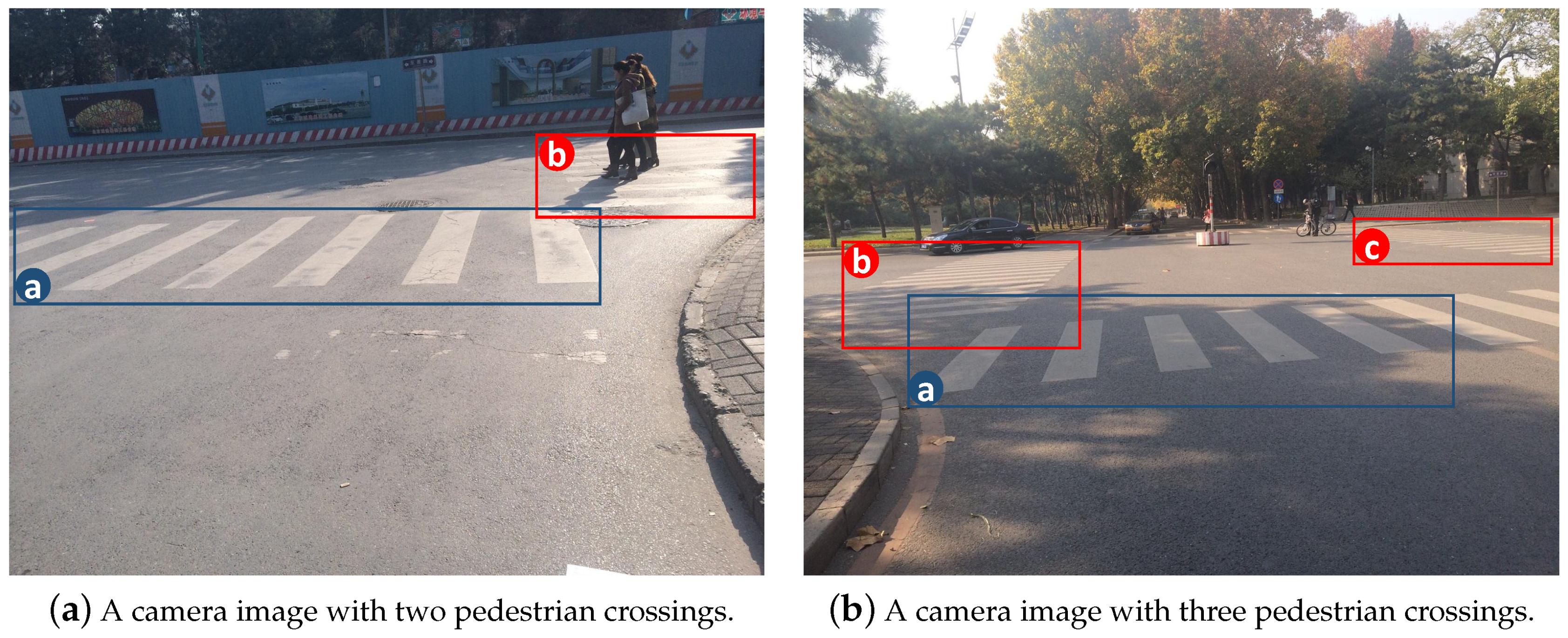

3. Proposed Approach

3.1. Coarse Stage

3.1.1. Searching for Vanishing Points

3.1.2. Line Extraction

3.2. Fine Stage

3.2.1. Cross-Ratio Constraint

3.2.2. Intensity Information Constraint

3.2.3. Aspect Ratio Constraint

4. Experimentation and Evaluation

4.1. Experimental Data

- D1: Contains a total of 331 frames, of which 82 contain no pedestrian crossings, 101 contain one pedestrian crossing, 128 contain two pedestrian crossings, and 20 contain three pedestrian crossings. The total number of pedestrian crossings is 417;

- D2: Contains a total of 468 frames, of which 97 contain no pedestrian crossings, 140 contain one pedestrian crossing, 200 contain two pedestrian crossings, and 31 contain three pedestrian crossings. The total number of pedestrian crossings is 633; and

- D3: Contains a total of 580 frames, of which 140 contain no pedestrian crossings, 200 contain one pedestrian crossing, 200 contain two pedestrian crossings, and 40 contain three pedestrian crossings. The total number of pedestrian crossings is 720.
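The totals in the D1 to D3 descriptions can be checked against the per-type frame counts:

- The frame total is the sum of the counts.
- The crossing total weights each count by the number of crossings per frame.

The following is a minimal Python sketch of that check. The `DATASETS` dictionary and the `summarize` helper are illustrative names introduced here, not part of the paper.

```python
# Frame counts per dataset, keyed by the number of pedestrian crossings
# visible in a frame (transcribed from the D1-D3 descriptions).
DATASETS = {
    "D1": {0: 82, 1: 101, 2: 128, 3: 20},
    "D2": {0: 97, 1: 140, 2: 200, 3: 31},
    "D3": {0: 140, 1: 200, 2: 200, 3: 40},
}


def summarize(breakdown: dict[int, int]) -> tuple[int, int]:
    """Return (total frames, total crossings) for a {crossings_per_frame: frames} map."""
    frames = sum(breakdown.values())
    # Each frame of type k contributes k crossings.
    crossings = sum(k * n for k, n in breakdown.items())
    return frames, crossings


if __name__ == "__main__":
    for name, breakdown in DATASETS.items():
        frames, crossings = summarize(breakdown)
        print(f"{name}: {frames} frames, {crossings} crossings")
```

Running the sketch reproduces the stated totals for all three datasets: 331/417, 468/633 and 580/720.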

4.2. Training

4.2.1. Vanishing Point Detection

4.2.2. Concurrent Lines

4.3. Comparison at Different Steps

4.4. Validation

4.5. Comprehensive Assessment

4.5.1. Multiple Pedestrian Crossing Detection

4.5.2. Comparison with Prior Methods

5. Discussion and Future Work

Author Contributions

Funding

Conflicts of Interest

References

1. Ye, J.; Li, Y.; Luo, H.; Wang, J.; Chen, W.; Zhang, Q. Hybrid Urban Canyon Pedestrian Navigation Scheme Combined PDR, GNSS and Beacon Based on Smartphone. Remote Sens. 2019, 11, 2174.

2. Wang, Q.; Ye, L.; Luo, H.; Men, A.; Zhao, F.; Ou, C. Pedestrian walking distance estimation based on smartphone mode recognition. Remote Sens. 2019, 11, 1140.

3. Zhao, Q.; Zhang, B.; Lyu, S.; Zhang, H.; Sun, D.; Li, G.; Feng, W. A CNN-SIFT hybrid pedestrian navigation method based on first-person vision. Remote Sens. 2018, 10, 1229.

4. Liu, X.; Zhang, Y.; Li, Q. Automatic pedestrian crossing detection and impairment analysis based on mobile mapping system. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 4, 251.

5. Se, S. Zebra-crossing detection for the partially sighted. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Hilton Head Island, SC, USA, 15 June 2000; Volume 2, pp. 211–217.

6. Meem, M.I.; Dhar, P.K.; Khaliluzzaman, M.; Shimamura, T. Zebra-Crossing Detection and Recognition Based on Flood Fill Operation and Uniform Local Binary Pattern. In Proceedings of the 2019 IEEE International Conference on Electrical, Computer and Communication Engineering (ECCE), Cox’s Bazar, Bangladesh, 7–9 February 2019; pp. 1–6.

7. Boudet, L.; Midenet, S. Pedestrian crossing detection based on evidential fusion of video-sensors. Transp. Res. Part C Emerg. Technol. 2009, 17, 484–497.

8. Liu, W.; Zhang, Z.; Li, S.; Tao, D. Road detection by using a generalized Hough transform. Remote Sens. 2017, 9, 590.

9. Collins, R.T.; Weiss, R.S. Vanishing point calculation as a statistical inference on the unit sphere. In Proceedings of the Third International Conference on Computer Vision, Osaka, Japan, 4–7 December 1990; pp. 400–403.

10. Franke, U.; Heinrich, S. Fast obstacle detection for urban traffic situations. IEEE Trans. Intell. Transp. Syst. 2002, 3, 173–181.

11. Hile, H.; Vedantham, R.; Cuellar, G.; Liu, A.; Gelfand, N.; Grzeszczuk, R.; Borriello, G. Landmark-based pedestrian navigation from collections of geotagged photos. In Proceedings of the 7th International Conference on Mobile and Ubiquitous Multimedia, Umeå, Sweden, 3–5 December 2008; pp. 145–152.

12. Uddin, M.S.; Shioyama, T. Bipolarity and projective invariant-based zebra-crossing detection for the visually impaired. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition-Workshops, Long Beach, CA, USA, 16–60 June 2005; p. 22.

13. Choi, J.; Lee, J.; Kim, D.; Soprani, G.; Cerri, P.; Broggi, A.; Yi, K. Environment-detection-and-mapping algorithm for autonomous driving in rural or off-road environment. IEEE Trans. Intell. Transp. Syst. 2012, 13, 974–982.

14. Coughlan, J.; Shen, H. A fast algorithm for finding crosswalks using figure-ground segmentation. In Proceedings of the 2nd Workshop on Applications of Computer Vision, in Conjunction with ECCV, Graz, Austria, 12 May 2006; Volume 5.

15. Fang, C.Y.; Chen, S.W.; Fuh, C.S. Automatic change detection of driving environments in a vision-based driver assistance system. IEEE Trans. Neural Netw. 2003, 14, 646–657.

16. McCall, J.C.; Trivedi, M.M. Video-based lane estimation and tracking for driver assistance: Survey, system, and evaluation. IEEE Trans. Intell. Transp. Syst. 2006, 7, 20–37.

17. Salmane, H.; Khoudour, L.; Ruichek, Y. A Video-Analysis-Based Railway–Road Safety System for Detecting Hazard Situations at Level Crossings. IEEE Trans. Intell. Transp. Syst. 2015, 16, 596–609.

18. Dow, C.R.; Ngo, H.H.; Lee, L.H.; Lai, P.Y.; Wang, K.C.; Bui, V.T. A crosswalk pedestrian recognition system by using deep learning and zebra-crossing recognition techniques. Softw. Pract. Exp. 2020, 50, 630–644.

19. Kim, J. Efficient Vanishing Point Detection for Driving Assistance Based on Visual Saliency Map and Image Segmentation from a Vehicle Black-Box Camera. Symmetry 2019, 11, 1492.

20. Chang, H.; Tsai, F. Vanishing point extraction and refinement for robust camera calibration. Sensors 2018, 18, 63.

21. Wu, Z.; Fu, W.; Xue, R.; Wang, W. A novel line space voting method for vanishing-point detection of general road images. Sensors 2016, 16, 948.

22. Yang, W.; Fang, B.; Tang, Y.Y. Fast and accurate vanishing point detection and its application in inverse perspective mapping of structured road. IEEE Trans. Syst. Man Cybern. Syst. 2016, 48, 755–766.

23. Li, Y.; Ding, W.; Zhang, X.; Ju, Z. Road detection algorithm for autonomous navigation systems based on dark channel prior and vanishing point in complex road scenes. Robot. Auton. Syst. 2016, 85, 1–11.

24. Quan, L.; Mohr, R. Determining perspective structures using hierarchical Hough transform. Pattern Recognit. Lett. 1989, 9, 279–286.

25. Ding, W.; Li, Y.; Liu, H. Efficient vanishing point detection method in unstructured road environments based on dark channel prior. IET Comput. Vis. 2016, 10, 852–860.

26. Förstner, W. Optimal vanishing point detection and rotation estimation of single images from a legoland scene. In Proceedings of the International Archives of Photogrammetry, Remote Sensing and Spatial Geoinformation Sciences, Paris, France, 1–3 September 2010; pp. 157–162.

27. Barnard, S.T. Interpreting perspective images. Artif. Intell. 1983, 21, 435–462.

28. Lutton, E.; Maitre, H.; Lopez-Krahe, J. Contribution to the determination of vanishing points using Hough transform. IEEE Trans. Pattern Anal. Mach. Intell. 1994, 16, 430–438.

29. Hough, P.V. Method and Means for Recognizing Complex Patterns. US Patent 3,069,654, 18 December 1962.

30. Fernandes, L.A.; Oliveira, M.M. Real-time line detection through an improved Hough transform voting scheme. Pattern Recognit. 2008, 41, 299–314.

31. Utcke, S. Grouping based on projective geometry constraints and uncertainty. In Proceedings of the IEEE 1998 Sixth International Conference on Computer Vision, Bombay, India, 7 January 1998; pp. 739–746.

32. Luo, J.; Gray, R.T.; Lee, H.C. Towards physics-based segmentation of photographic color images. In Proceedings of the IEEE International Conference on Image Processing, Santa Barbara, CA, USA, 26–29 October 1997; Volume 3, pp. 58–61.

33. Serrano, N.; Savakis, A.E.; Luo, J. Improved scene classification using efficient low-level features and semantic cues. Pattern Recognit. 2004, 37, 1773–1784.

| Scheme | Ground truth | Detected as crossing | Detected as no crossing | Recall rate (%) | Precision rate (%) |
|---|---|---|---|---|---|
| Coarse stage | Crossing | 405 | 12 (false negative) | 97.12 | 78.79 |
| | No crossing | 109 (false positive) | - | | |
| Cross-ratio | Crossing | 394 | 28 (false negative) | 94.48 | 82.95 |
| | No crossing | 81 (false positive) | - | | |
| Intensity cue | Crossing | 383 | 33 (false negative) | 92.07 | 86.46 |
| | No crossing | 60 (false positive) | - | | |
| Aspect-ratio | Crossing | 381 | 35 (false negative) | 91.59 | 90.07 |
| | No crossing | 42 (false positive) | - | | |
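The recall and precision columns follow the usual definitions:

- recall = TP / (TP + FN)
- precision = TP / (TP + FP)

For the coarse stage, for example, 405 / (405 + 12) gives the listed 97.12% recall and 405 / (405 + 109) gives the listed 78.79% precision. So the 12 counts missed crossings and the 109 counts spurious detections.

The following is a small Python sketch of that computation. The `recall_precision` helper is an illustrative name, not from the paper, and results are rounded to two decimals to match the table.

```python
def recall_precision(tp: int, fn: int, fp: int) -> tuple[float, float]:
    """Return (recall, precision) in percent, rounded to two decimals.

    recall    = TP / (TP + FN): share of true crossings that were detected
    precision = TP / (TP + FP): share of detections that are true crossings
    """
    recall = 100.0 * tp / (tp + fn)
    precision = 100.0 * tp / (tp + fp)
    return round(recall, 2), round(precision, 2)


if __name__ == "__main__":
    # Coarse stage: 405 detected crossings, 12 missed, 109 spurious.
    print(recall_precision(405, 12, 109))
    # Aspect-ratio stage: 381 detected crossings, 35 missed, 42 spurious.
    print(recall_precision(381, 35, 42))
```

Reading the rows this way shows the trade-off across the fine-stage constraints. Spurious detections fall from 109 to 42, while missed crossings rise only from 12 to 35.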

| Type (# frames) | Ground truth | Detected as crossing | Detected as no crossing | Recall rate (%) | Precision rate (%) |
|---|---|---|---|---|---|
| 0 (140) | Crossing | - | - | - | - |
| | No crossing | 11 (false positive) | - | | |
| 1 (200) | Crossing | 194 | 6 (false negative) | 97 | 95.10 |
| | No crossing | 10 (false positive) | - | | |
| 2 (200) | Crossing | 376 | 24 (false negative) | 94 | 91.04 |
| | No crossing | 37 (false positive) | - | | |
| 3 (40) | Crossing | 112 | 8 (false negative) | 93.33 | 90.32 |
| | No crossing | 12 (false positive) | - | | |
| Total (580) | Crossing | 682 | 38 (false negative) | 94.72 | 90.69 |
| | No crossing | 70 (false positive) | - | | |
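In the per-type table, the entries are read as follows:

- The 6, 24 and 8 entries are the missed crossings for types 1 to 3. For example, 194 + 6 = 200 crossings in the 200 single-crossing frames.
- The 11, 10, 37 and 12 entries are spurious detections. The type-0 frames contain no crossings, so they can only add false positives.

Under that reading, the Total row is reproduced by pooling the raw counts over all types before computing the rates. The following Python sketch assumes this pooling. `PER_TYPE` and `pooled_rates` are illustrative names.

```python
# (TP, FN, FP) per frame type, transcribed from the D3 results table.
# Type = number of pedestrian crossings per frame.
PER_TYPE: dict[int, tuple[int, int, int]] = {
    0: (0, 0, 11),     # no crossings present: only false positives possible
    1: (194, 6, 10),
    2: (376, 24, 37),
    3: (112, 8, 12),
}


def pooled_rates(counts: dict[int, tuple[int, int, int]]):
    """Sum TP, FN and FP over all types, then compute recall and precision (%)."""
    tp = sum(c[0] for c in counts.values())
    fn = sum(c[1] for c in counts.values())
    fp = sum(c[2] for c in counts.values())
    recall = round(100.0 * tp / (tp + fn), 2)
    precision = round(100.0 * tp / (tp + fp), 2)
    return (tp, fn, fp), recall, precision


if __name__ == "__main__":
    print(pooled_rates(PER_TYPE))
```

The pooled counts are 682, 38 and 70, which give 94.72% recall and 90.69% precision. Averaging the three per-type recall values instead (97, 94 and 93.33) would give about 94.78%. That does not match the Total row, so pooling appears to be the aggregation used.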

| Scheme | Ground truth | Detected as crossing | Detected as no crossing | Recall rate (%) | Precision rate (%) |
|---|---|---|---|---|---|
| [5] | Crossing | 158 | 42 (false negative) | 79.0 | 70.22 |
| | No crossing | 67 (false positive) | - | | |
| [12] | Crossing | 163 | 37 (false negative) | 81.5 | 76.17 |
| | No crossing | 51 (false positive) | - | | |
| [14] | Crossing | 187 | 13 (false negative) | 93.5 | 87.38 |
| | No crossing | 27 (false positive) | - | | |
| [4] | Crossing | 189 | 11 (false negative) | 94.5 | 90.87 |
| | No crossing | 19 (false positive) | - | | |
| [6] | Crossing | 191 | 9 (false negative) | 95.5 | 91.39 |
| | No crossing | 18 (false positive) | - | | |
| Our method | Crossing | 194 | 6 (false negative) | 97 | 95.1 |
| | No crossing | 10 (false positive) | - | | |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Fan, Y.; Sun, Z.; Zhao, G. A Coarse-to-Fine Framework for Multiple Pedestrian Crossing Detection. Sensors 2020, 20, 4144. https://doi.org/10.3390/s20154144
