Decentralized Mesh-Based Model Predictive Control for Swarms of UAVs

Abstract

1. Introduction

- In Section 2, the problem is precisely stated and the general scheme of the proposed strategy is illustrated;

- Section 3 focuses on the swarm topology based on the Delaunay triangulation;

- Section 4 describes the potential-based guidance algorithm that drives the swarm into a regular-meshed flight formation;

- Section 5 focuses on the MPC-based trajectory tracking algorithm with collision avoidance;

- Finally, in Section 6, numerical simulation results are shown for several operational scenarios.

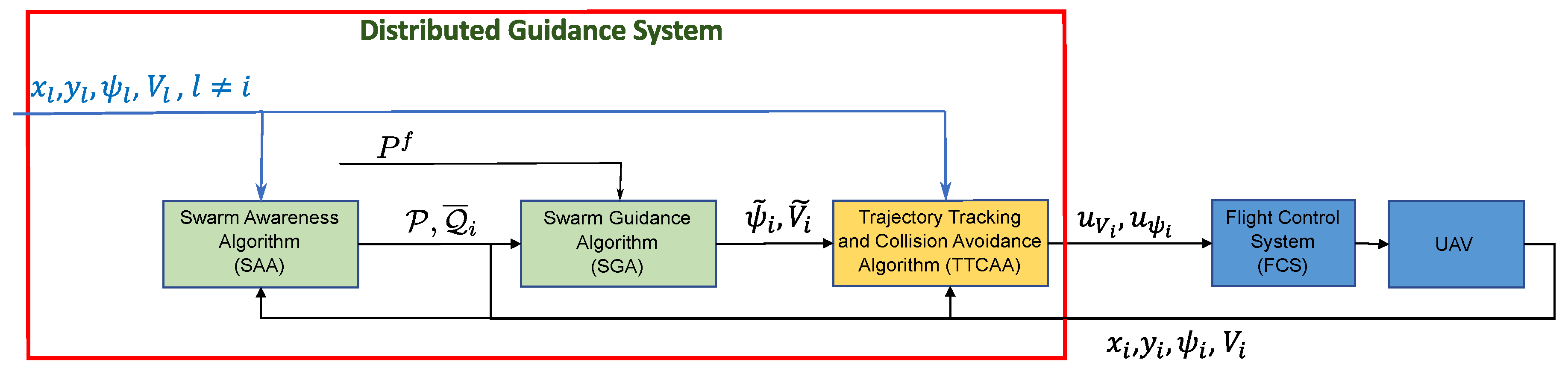

2. Problem Statement and General Architecture

- The so-called swarm awareness algorithm (SAA) computes the connection topology of the swarm using an extended Delaunay triangulation technique that takes into account the presence of obstacles.

- The swarm guidance algorithm (SGA) uses a potential field technique to compute the desired heading angle and speed, so as to maintain the mutual distances between aircraft and reach the target position.

- The trajectory tracking and collision avoidance algorithm (TTCAA) is based on a model predictive controller and provides the reference signals (acceleration along the flight trajectory and turn rate) to the flight control system (FCS), taking into account environmental constraints (obstacles, no-fly zones, other aircraft) and possible constraints on aircraft states and inputs (e.g., minimum/maximum speed, maximum turn rate).
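The connection topology computed by the SAA can be illustrated with a plain Delaunay triangulation. The following is a minimal brute-force sketch in pure Python, assuming planar aircraft positions with no four points on a common circle. It ignores obstacles, so it is not the extended triangulation used by the SAA, and the function names `in_circumcircle` and `delaunay_edges` are hypothetical.

```python
from itertools import combinations


def orientation(a, b, c):
    """Twice the signed area of triangle abc (positive if counter-clockwise)."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])


def in_circumcircle(a, b, c, p):
    """Return True if p lies strictly inside the circle through a, b and c."""
    ax, ay = a[0] - p[0], a[1] - p[1]
    bx, by = b[0] - p[0], b[1] - p[1]
    cx, cy = c[0] - p[0], c[1] - p[1]
    det = ((ax * ax + ay * ay) * (bx * cy - cx * by)
           - (bx * bx + by * by) * (ax * cy - cx * ay)
           + (cx * cx + cy * cy) * (ax * by - bx * ay))
    # The determinant sign depends on the triangle orientation, so correct for it.
    return det * orientation(a, b, c) > 0


def delaunay_edges(points):
    """Brute-force Delaunay edges: keep triangles whose circumcircle is empty."""
    edges = set()
    indices = range(len(points))
    for i, j, k in combinations(indices, 3):
        a, b, c = points[i], points[j], points[k]
        if orientation(a, b, c) == 0:
            continue  # collinear triple, no circumcircle
        others = (m for m in indices if m not in (i, j, k))
        if any(in_circumcircle(a, b, c, points[m]) for m in others):
            continue
        edges.update({(i, j), (i, k), (j, k)})
    return sorted(edges)


# Four aircraft on a flattened diamond: the short diagonal (2, 3) is kept,
# the long diagonal (0, 1) is not.
positions = [(0, 0), (4, 0), (2, 1), (2, -1)]
links = delaunay_edges(positions)
```

Each returned pair is a communication link between two aircraft indices. The brute-force test costs O(n^4) and is only meant for small examples; an incremental algorithm would be needed for larger swarms.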

3. Swarm Awareness Algorithm

4. The Swarm Guidance Algorithm

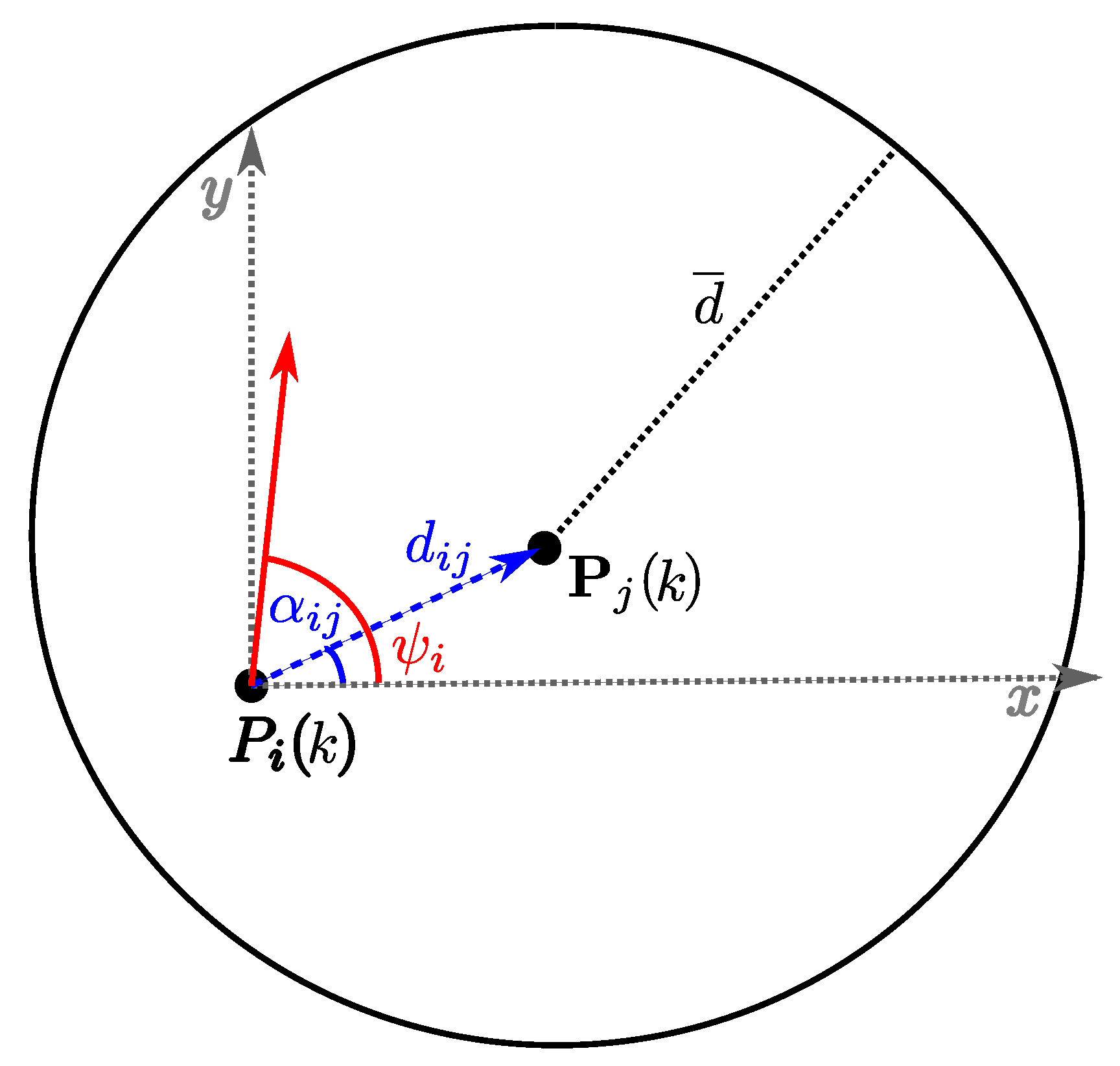

- an operator that wraps the angle into a prescribed interval,

- the angle between the vector and the horizontal axis, and

- the heading of the i-th aircraft.
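The quantities listed above (a wrapping operator, the angle of a vector with respect to the horizontal axis, and the aircraft heading) can be combined as in the sketch below. The wrapping interval is assumed here to be [-pi, pi), and the names `wrap_angle` and `heading_error` are hypothetical; this is an illustration of the idea, not the guidance law itself.

```python
import math


def wrap_angle(angle):
    """Wrap an angle in radians into the interval [-pi, pi) (assumed interval)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi


def heading_error(position, target, heading):
    """Signed angle from the current heading to the position-to-target vector."""
    # Angle between the vector (target - position) and the horizontal axis.
    bearing = math.atan2(target[1] - position[1], target[0] - position[0])
    return wrap_angle(bearing - heading)


# Aircraft at the origin flying along +x, target straight "north":
error = heading_error((0.0, 0.0), (0.0, 10.0), 0.0)
```

Wrapping the difference, rather than each angle separately, keeps the error continuous when the bearing crosses the branch cut of `atan2`.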

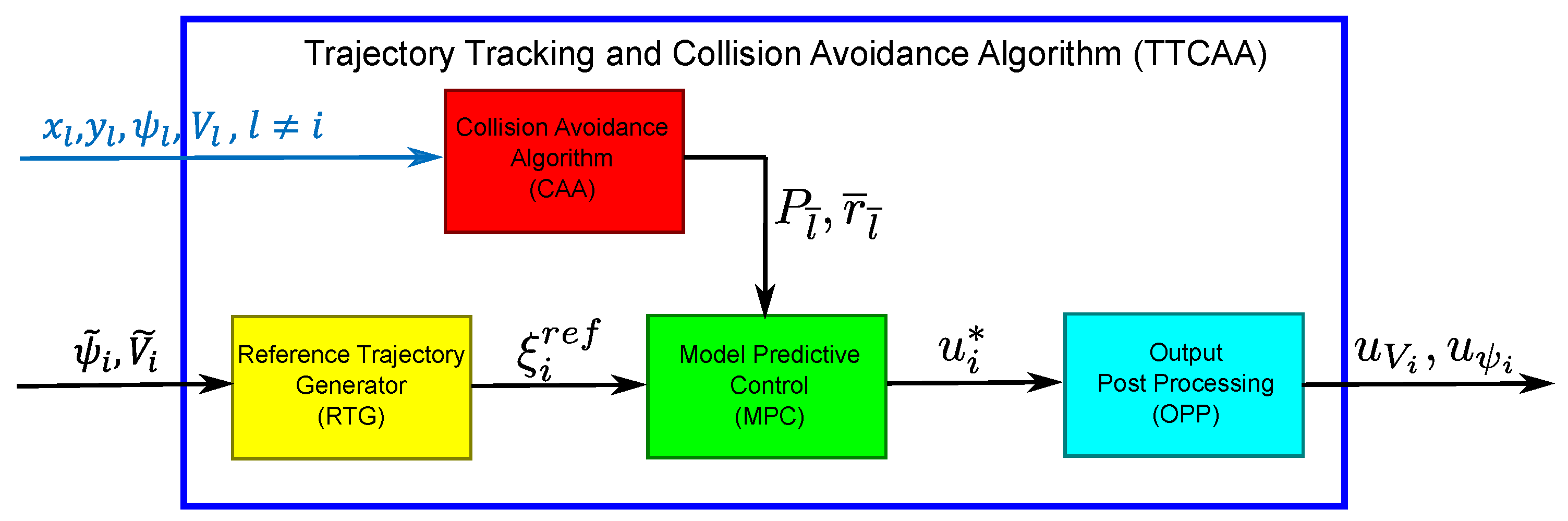

5. Trajectory Tracking and Collision Avoidance Algorithm

- Reference Trajectory Generation (RTG): on the basis of the inputs from the SGA, it computes the reference trajectory for the MPC;

- Collision Avoidance Algorithm (CAA): it adds constraints to the MPC problem whenever a potential collision with other vehicles or obstacles is detected;

- Model Predictive Control (MPC): it computes the acceleration vector needed to follow the reference trajectory and avoid any potential collision;

- MPC Output Post Processing (OPP): it converts the acceleration into control signals for the FCS, namely the acceleration along the flight trajectory and the turn rate.
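The last block in the list (OPP) can be sketched for a planar point-mass model. The code below assumes that the heading coincides with the velocity direction, so the tangential acceleration is the projection of the acceleration vector onto the heading and the turn rate is the normal acceleration divided by the speed. The function name `acceleration_to_fcs` and this kinematic model are assumptions made for illustration.

```python
import math


def acceleration_to_fcs(accel, heading, speed):
    """Convert an inertial acceleration (ax, ay) into (tangential accel, turn rate).

    Assumes a planar point mass whose velocity points along `heading` (rad).
    """
    if speed <= 0.0:
        raise ValueError("speed must be positive to define a turn rate")
    ax, ay = accel
    # Component along the flight path.
    a_tangential = ax * math.cos(heading) + ay * math.sin(heading)
    # Component normal to the flight path (positive to the left).
    a_normal = -ax * math.sin(heading) + ay * math.cos(heading)
    turn_rate = a_normal / speed
    return a_tangential, turn_rate


# Flying along +x at 5 m/s with a purely lateral acceleration of 5 m/s^2:
a_t, omega = acceleration_to_fcs((0.0, 5.0), 0.0, 5.0)
```

With these inputs the tangential acceleration is zero and the turn rate is 1 rad/s, that is, a coordinated left turn at constant speed.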

- the cost function to be minimized;

- the predicted state at each time step of the prediction horizon, computed using (12), which represents the dynamics of the aircraft together with the initial condition, which is measured or estimated;

- the control signal at each time instant;

- Equation (11) defines static constraints involving states and inputs.
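The ingredients listed above (a cost function, a prediction model started from the measured state, and a control sequence) can be sketched as a single cost evaluation. The example assumes a double-integrator model with state (x, y, vx, vy) and input (ax, ay), a quadratic cost with diagonal weights, and a control sequence shorter than the prediction horizon whose last move is held. None of these choices is taken from the equations referenced in the list, and the names `step` and `rollout_cost` are hypothetical.

```python
def step(state, u, dt):
    """One step of an assumed double-integrator model."""
    x, y, vx, vy = state
    ax, ay = u
    return (x + vx * dt + 0.5 * ax * dt * dt,
            y + vy * dt + 0.5 * ay * dt * dt,
            vx + ax * dt,
            vy + ay * dt)


def rollout_cost(x0, inputs, reference, dt, q_diag, r_diag, horizon):
    """Quadratic tracking cost of a control sequence over the prediction horizon.

    `inputs` holds the free control moves (control horizon); beyond its length
    the last move is held. `reference[k]` is the desired state after step k + 1.
    """
    state, cost = x0, 0.0
    for k in range(horizon):
        u = inputs[min(k, len(inputs) - 1)]
        if k < len(inputs):
            # Control effort is charged only on the free moves.
            cost += sum(r * ui * ui for r, ui in zip(r_diag, u))
        state = step(state, u, dt)
        cost += sum(q * (s - s_ref) ** 2
                    for q, s, s_ref in zip(q_diag, state, reference[k]))
    return cost


# Straight flight at 5 m/s tracked perfectly with zero input gives zero cost.
dt = 0.1
ref = [(0.5 * (k + 1), 0.0, 5.0, 0.0) for k in range(10)]
cost = rollout_cost((0.0, 0.0, 5.0, 0.0), [(0.0, 0.0)] * 5, ref, dt,
                    q_diag=(10, 10, 10, 10), r_diag=(1, 1), horizon=10)
```

An optimizer would minimize `rollout_cost` over `inputs` subject to the constraints; the horizon lengths and weights used here mirror the values in the simulation parameter table.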

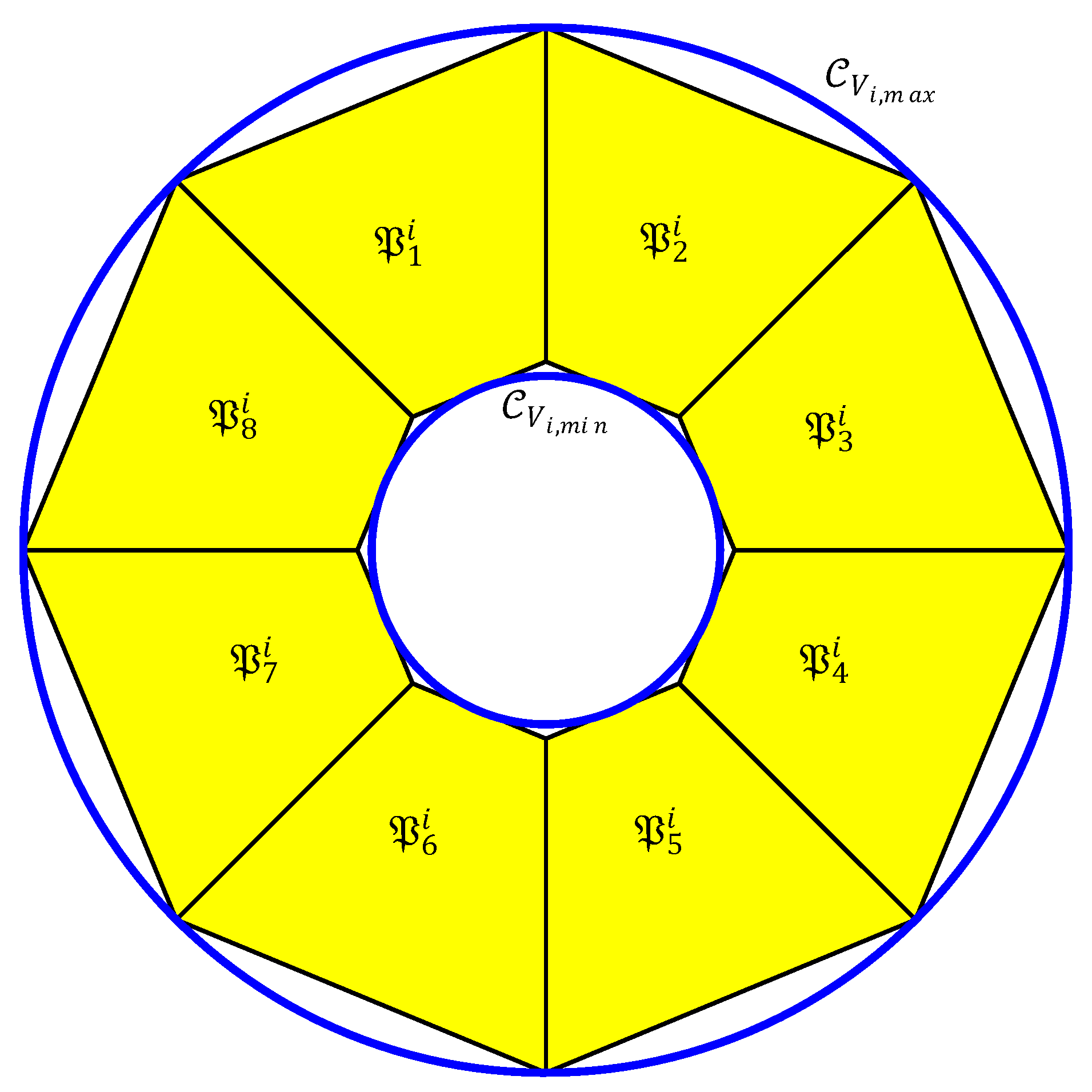

5.1. Acceleration and Speed Limits

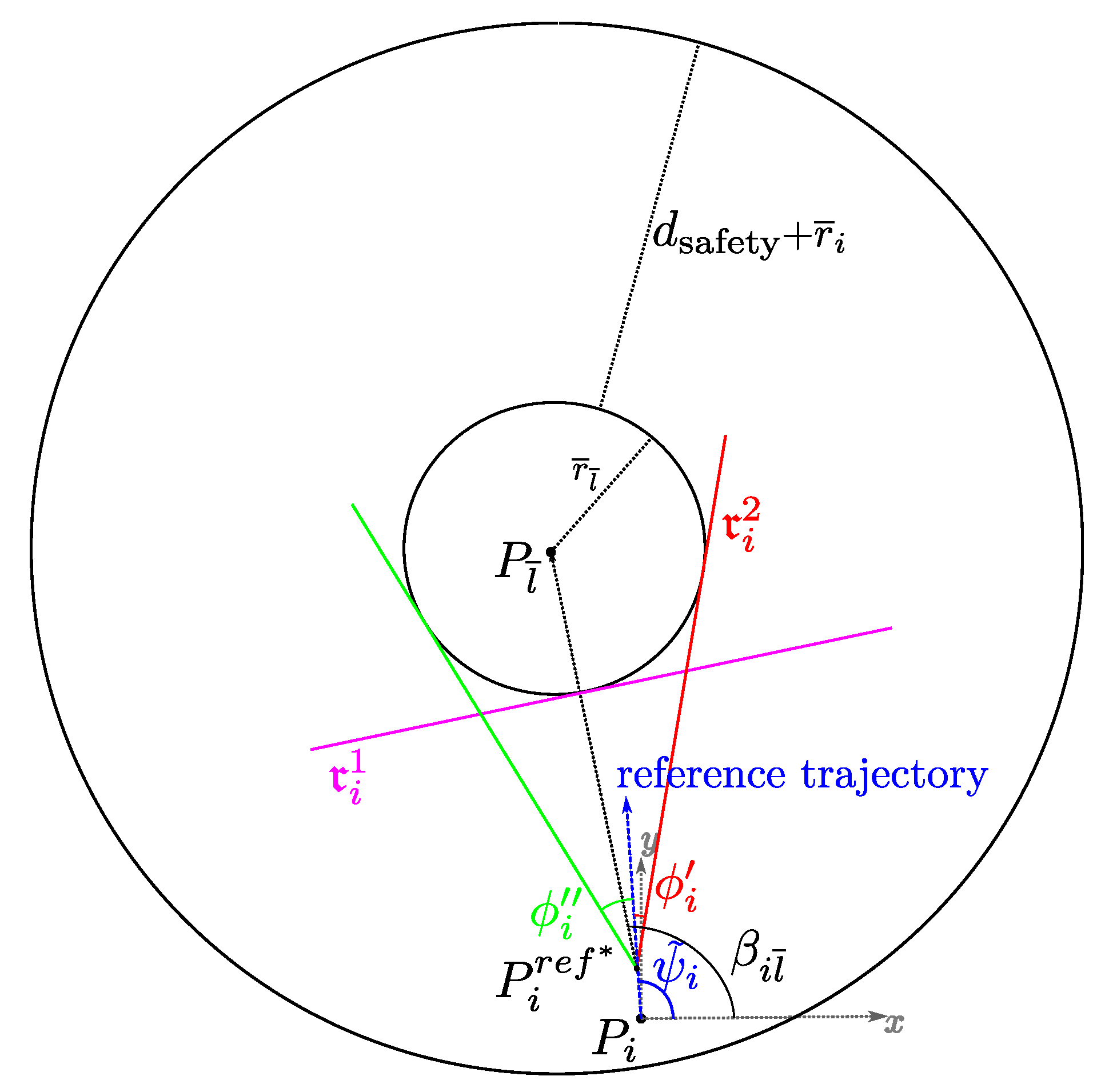

5.2. Collision Avoidance Algorithm and Constraints

6. Numerical Results

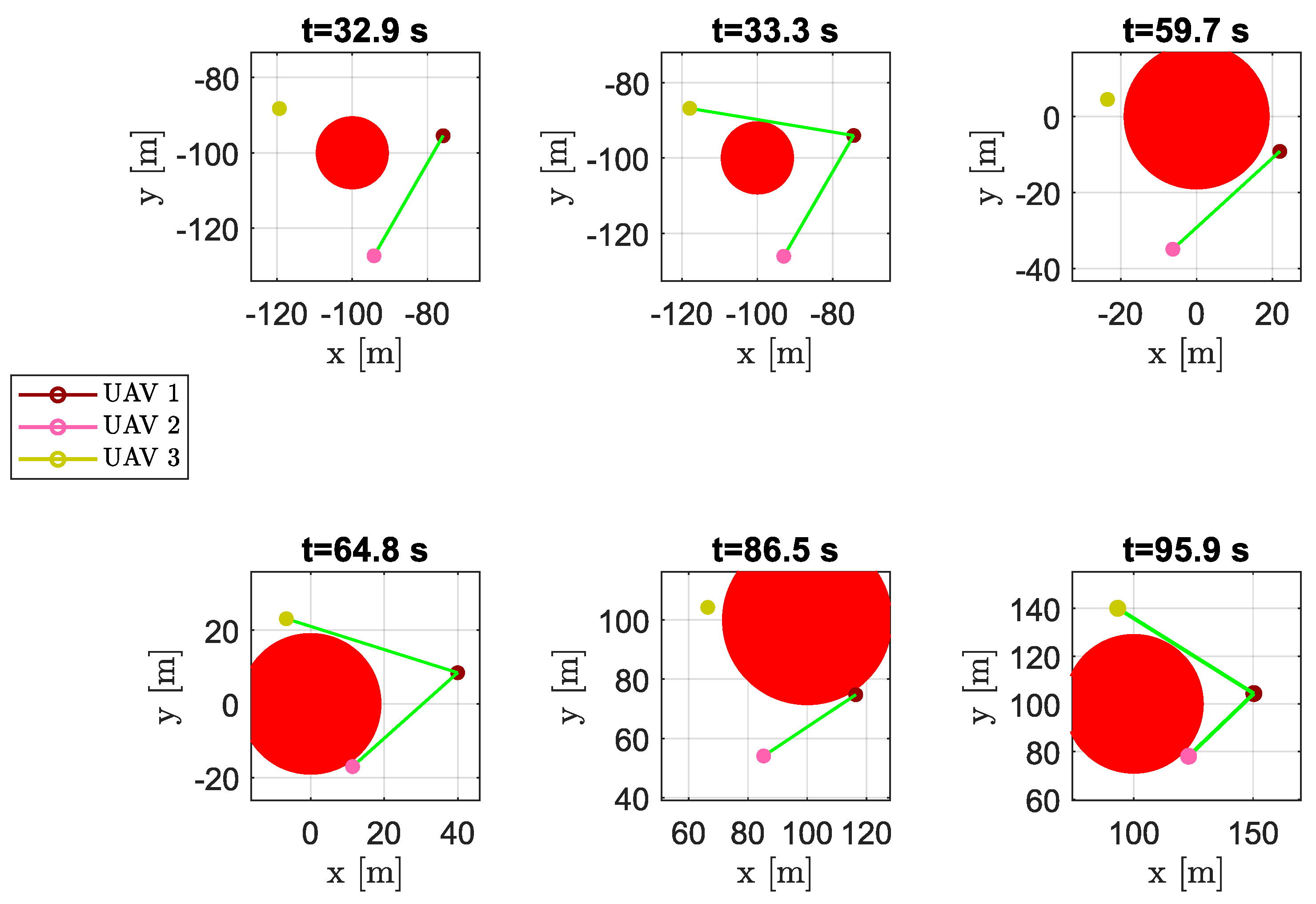

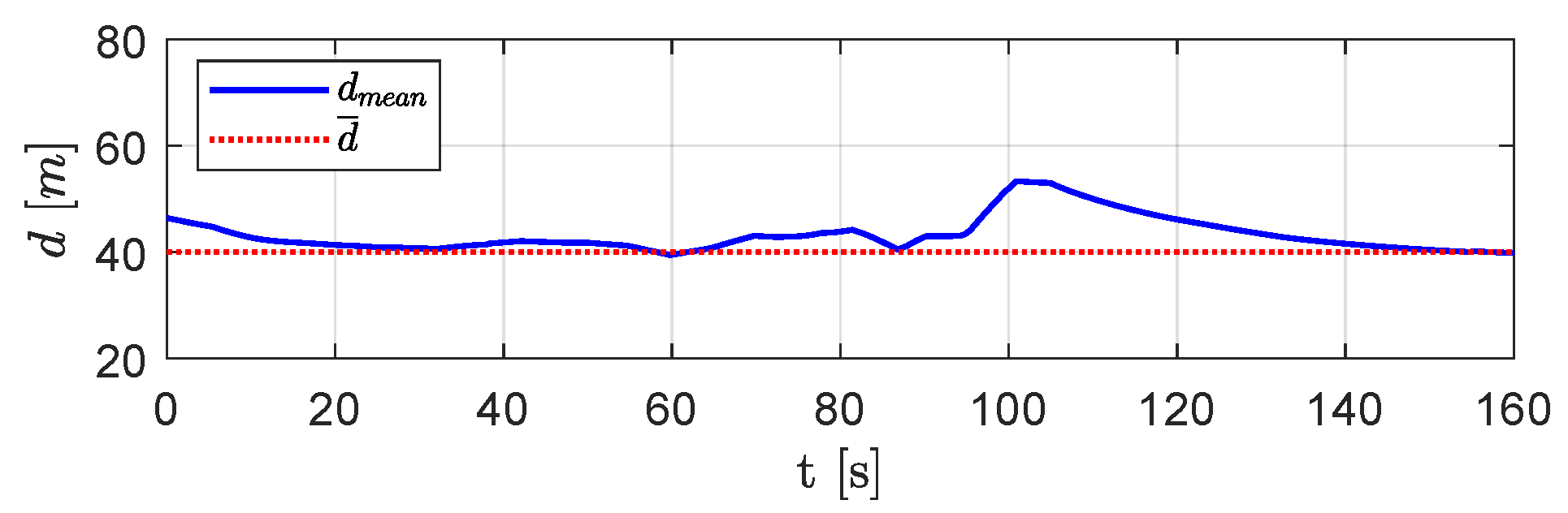

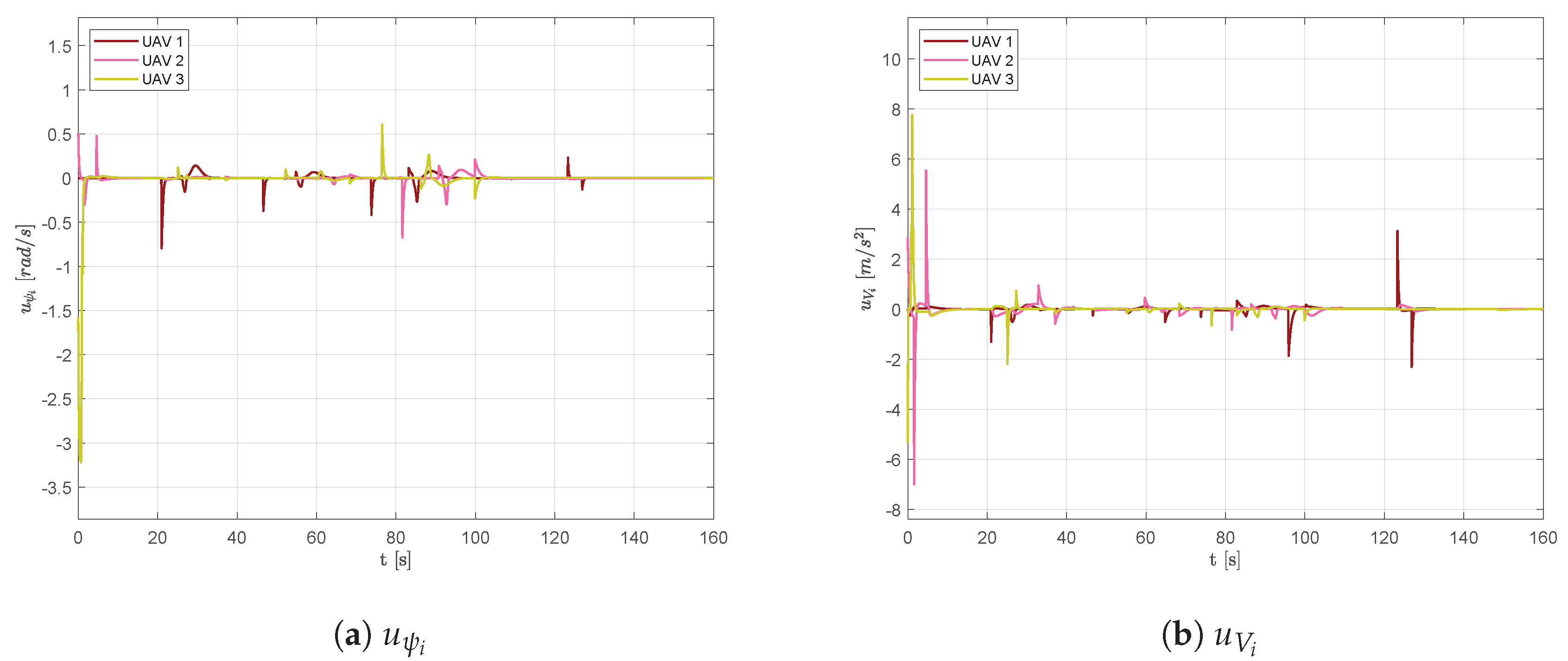

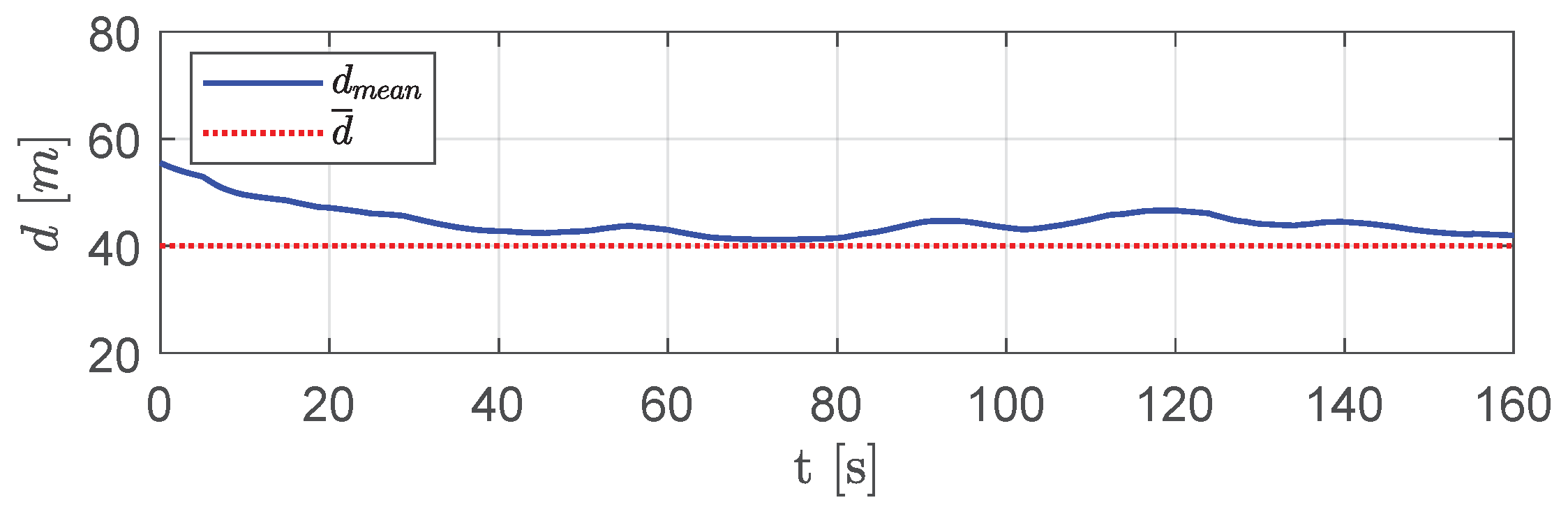

6.1. Scenario #1—Three Aircraft

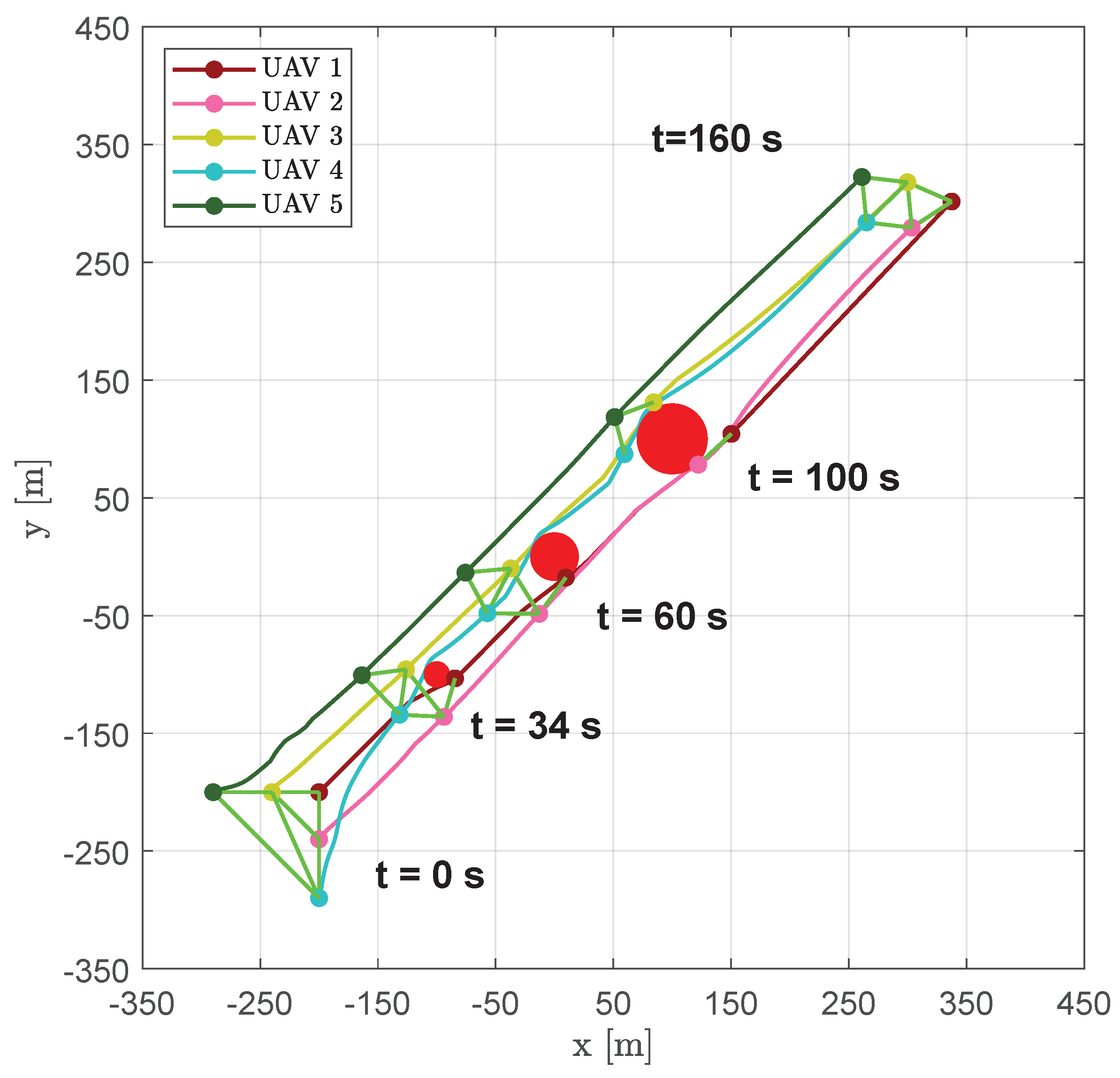

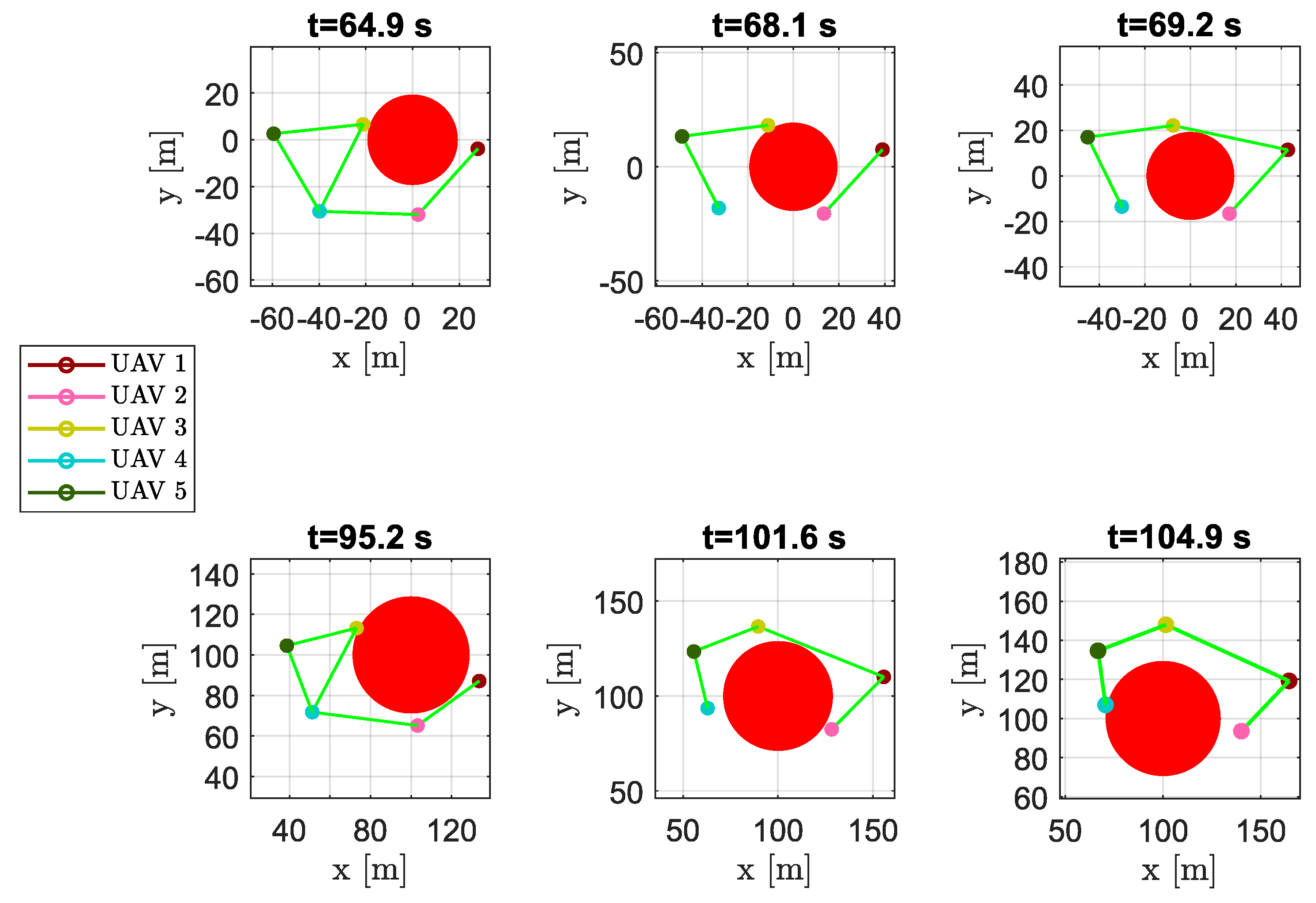

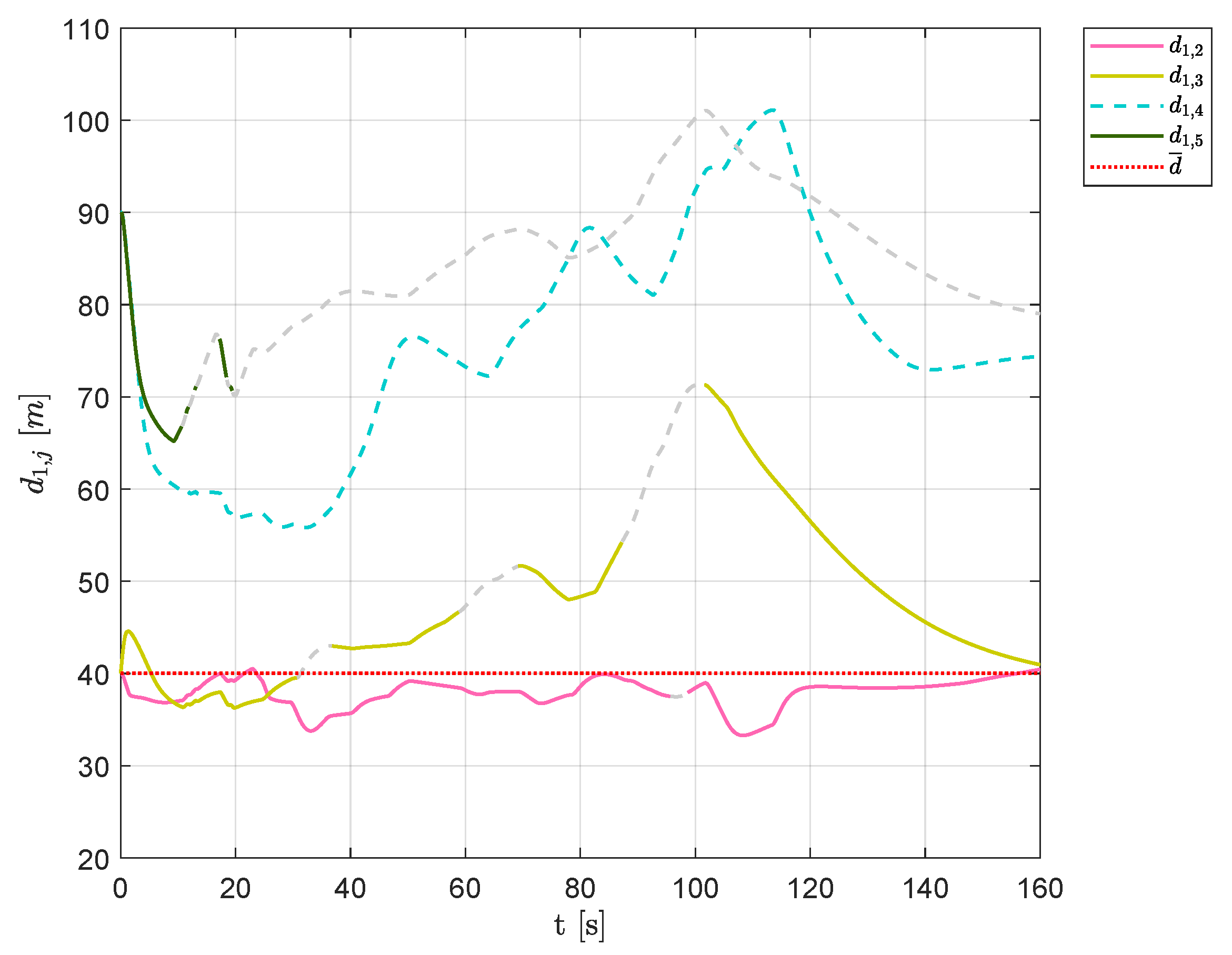

6.2. Scenario #1—Five Aircraft

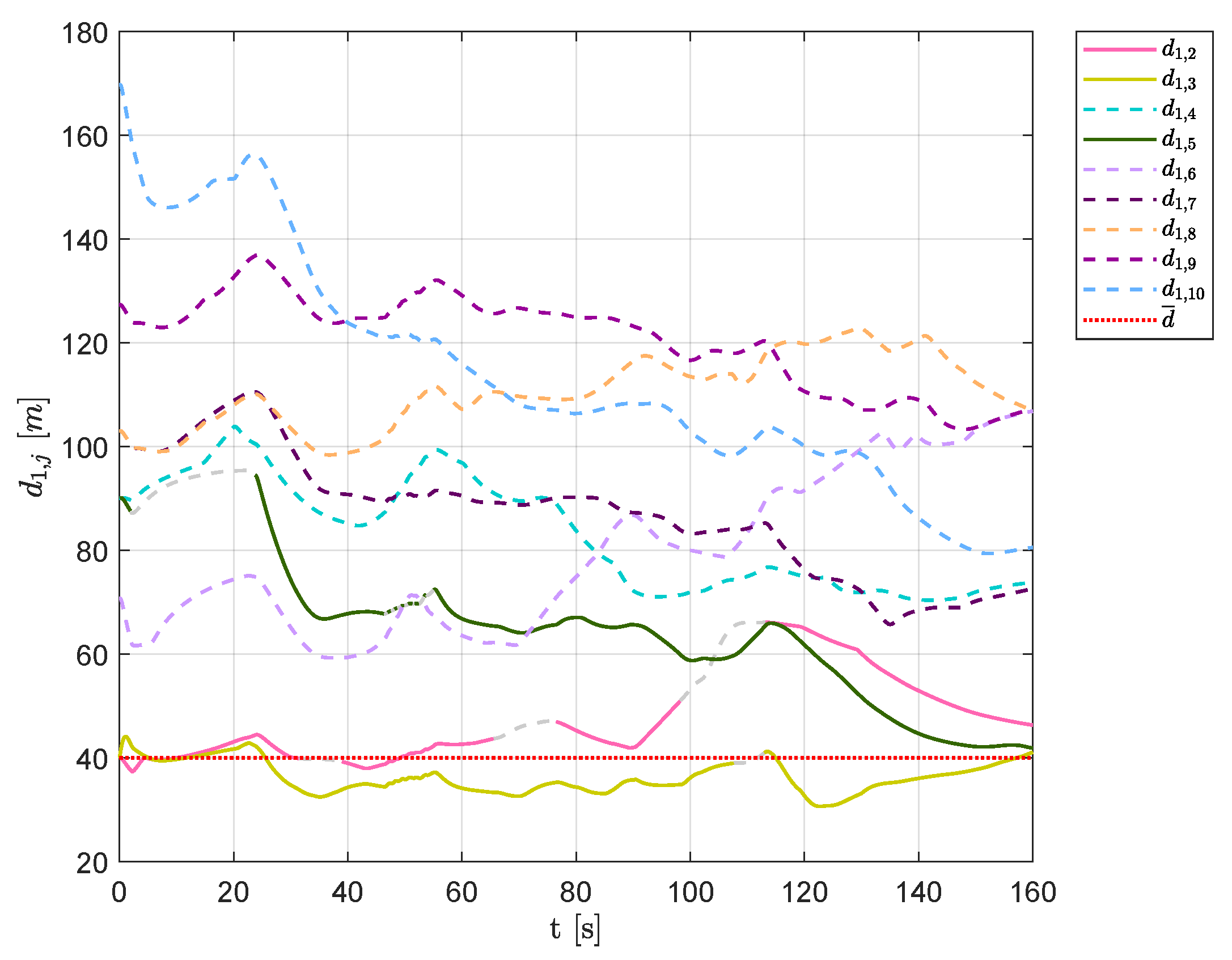

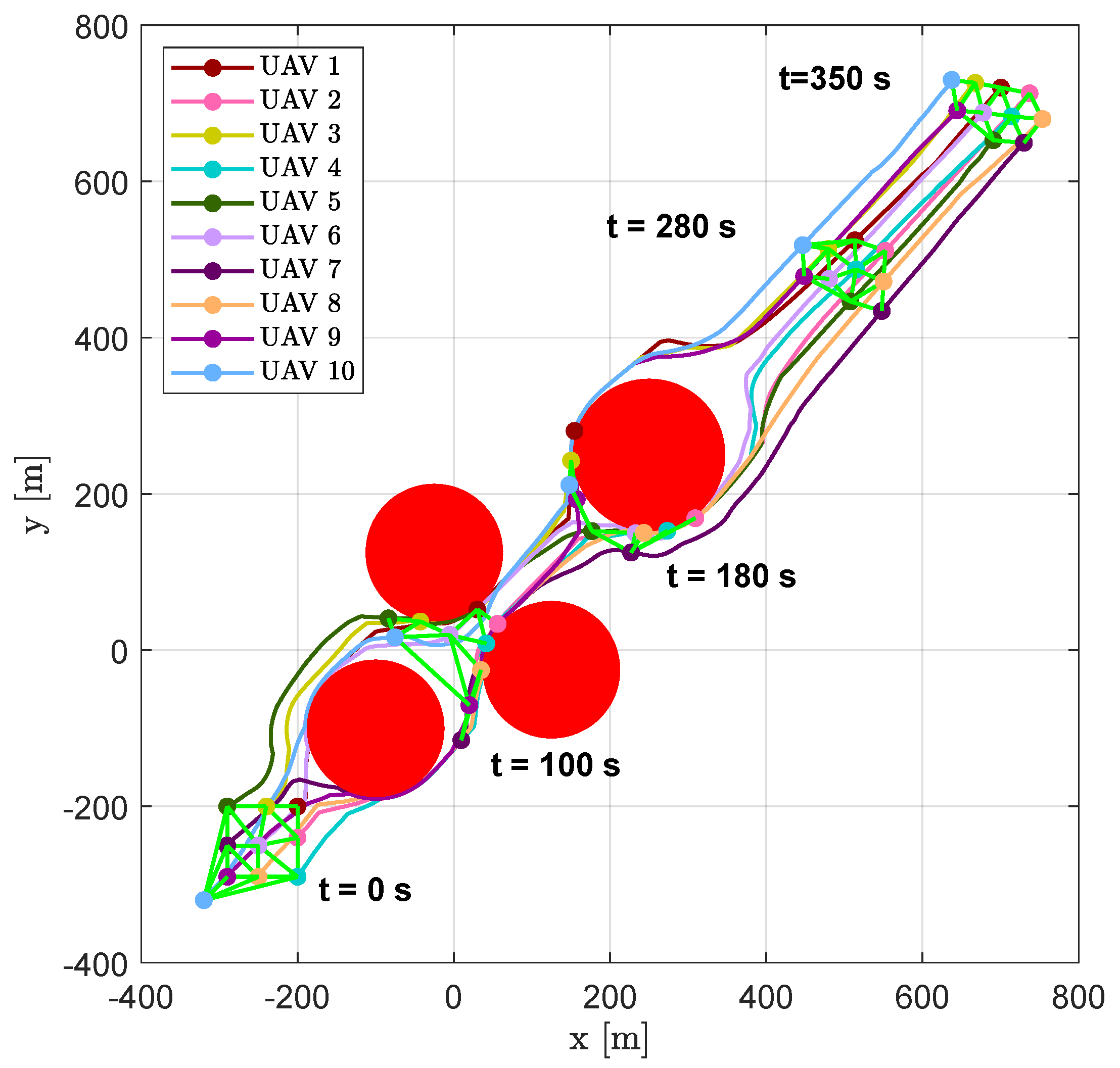

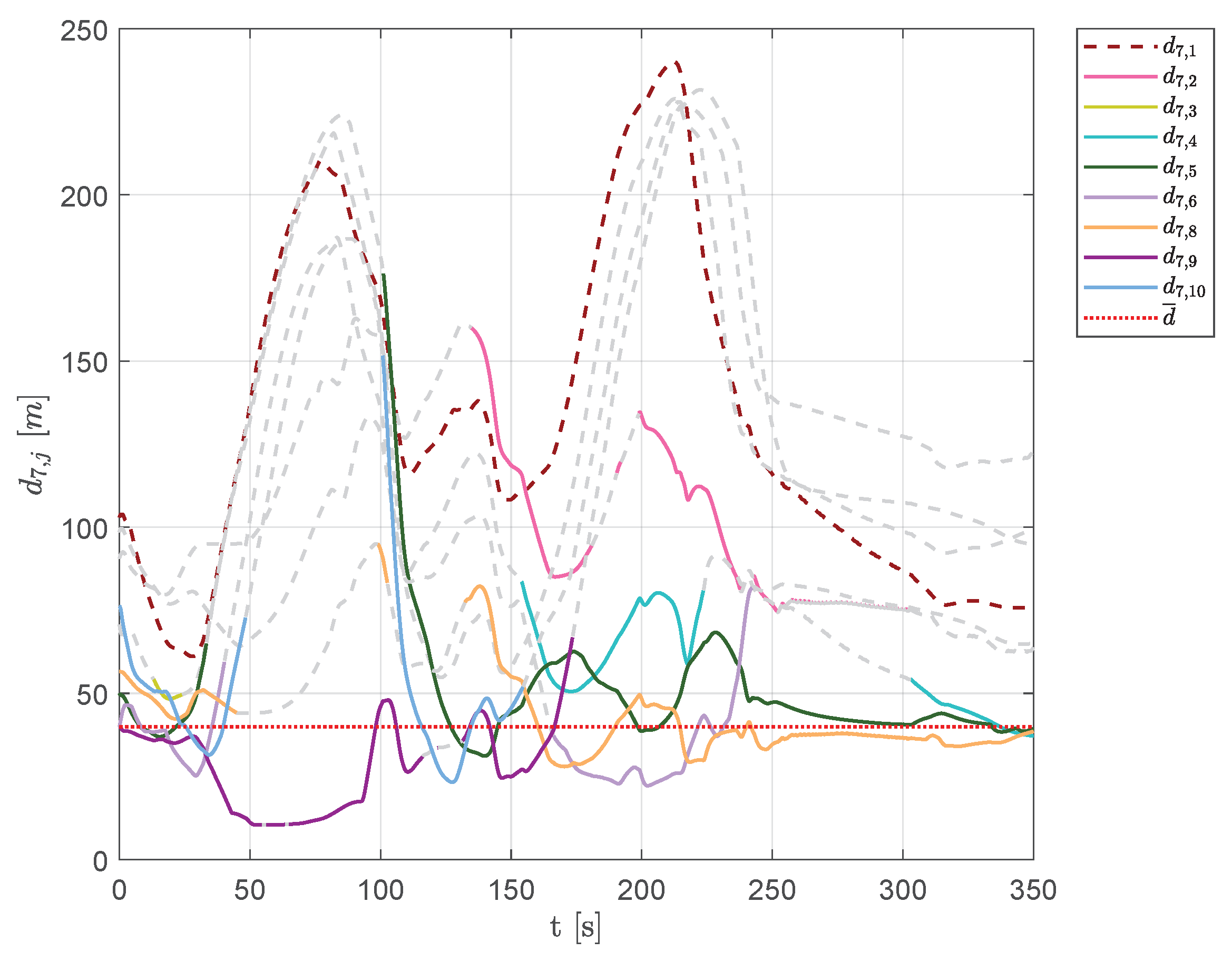

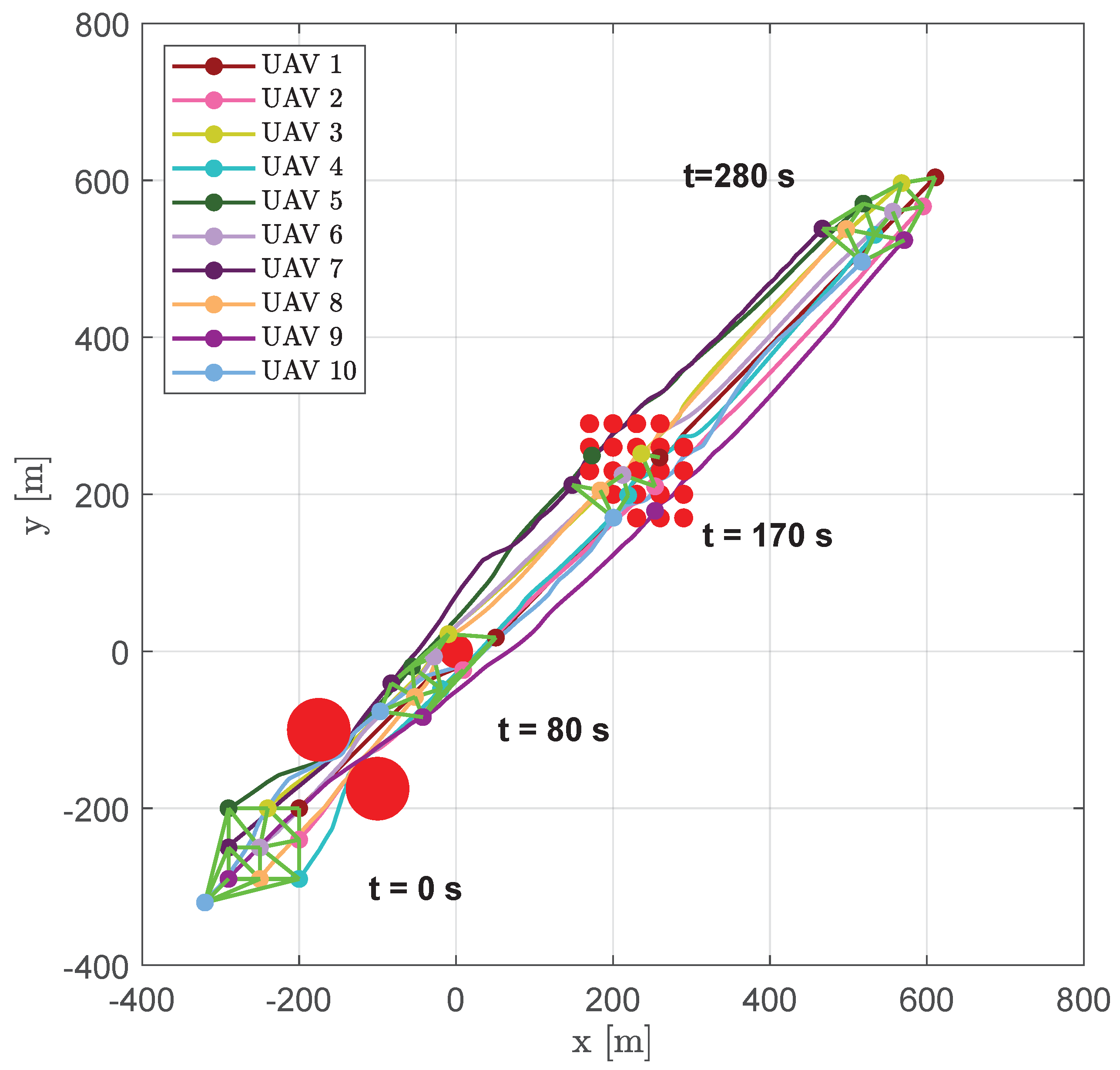

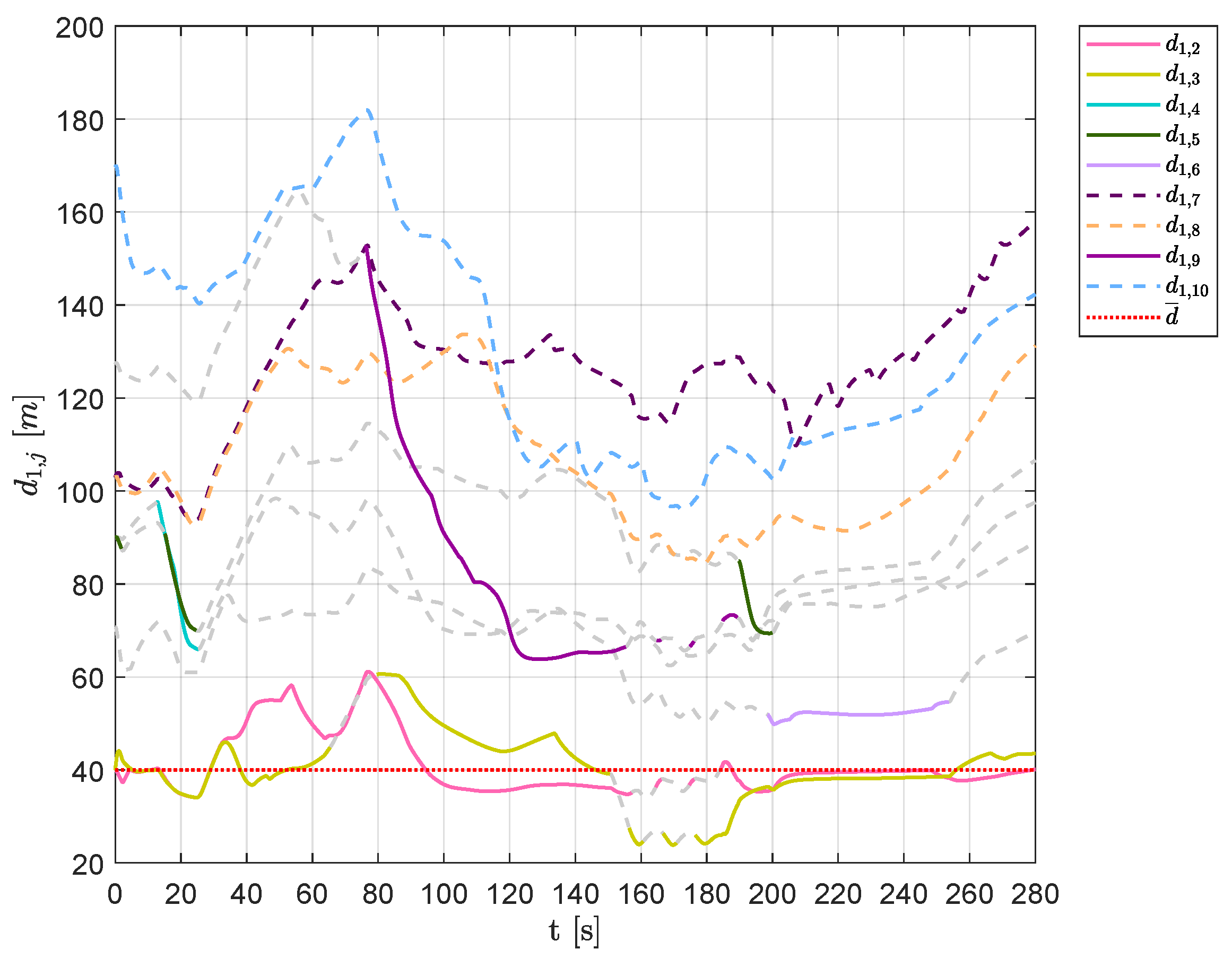

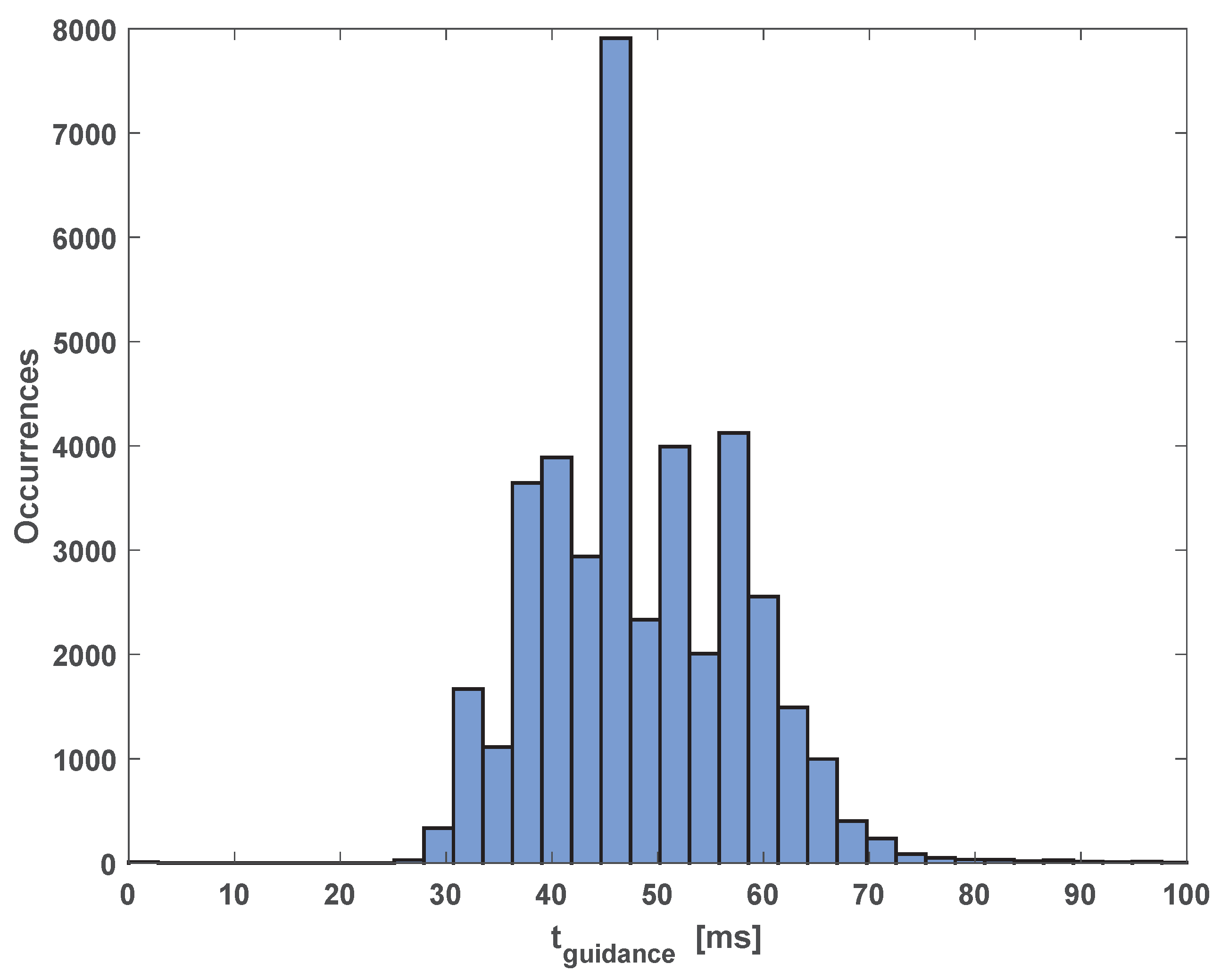

6.3. Scenario #1—10 Aircraft

6.4. Scenario #2—10 Aircraft

6.5. Scenario #3—10 Aircraft

7. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Description | Value |
|---|---|
| Cruise speed [m/s] | 5 |
| Minimum speed [m/s] | 3 |
| Maximum speed [m/s] | 10 |
| Minimum normal acceleration [m/s²] | −5 |
| Maximum normal acceleration [m/s²] | 5 |
| Minimum tangential acceleration [m/s²] | −10 |
| Maximum tangential acceleration [m/s²] | 10 |
| Safety distance [m] | 15 |
| Aircraft size [m] | 5 |
| Desired distance [m] | 40 |
| Weight matrix for tracking error | diag([10 10 10 10]) |
| Weight matrix for control effort | diag([1 1]) |
| Number of steps of the prediction horizon | 10 |
| Number of steps of the control horizon | 5 |
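As an illustration of how the limits in the table could be enforced, the sketch below clips a commanded acceleration pair and checks the safety distance between two aircraft. The saturation logic, the dictionary `PARAMS`, and the function names are assumptions made for this example; in the paper these limits enter the MPC problem as constraints rather than as a post-hoc clamp.

```python
import math

# Values copied from the parameter table (SI units).
PARAMS = {
    "v_min": 3.0, "v_max": 10.0,
    "a_n_max": 5.0, "a_t_max": 10.0,
    "safety_distance": 15.0,
}


def clamp(value, lower, upper):
    """Limit value to the closed interval [lower, upper]."""
    return max(lower, min(upper, value))


def saturate_command(speed, a_t, a_n, dt, p=PARAMS):
    """Clip accelerations, then limit a_t so the next speed stays in bounds."""
    a_n = clamp(a_n, -p["a_n_max"], p["a_n_max"])
    a_t = clamp(a_t, -p["a_t_max"], p["a_t_max"])
    a_t = clamp(a_t, (p["v_min"] - speed) / dt, (p["v_max"] - speed) / dt)
    return a_t, a_n


def violates_safety(pos_i, pos_j, p=PARAMS):
    """True if two aircraft are closer than the safety distance."""
    return math.hypot(pos_i[0] - pos_j[0], pos_i[1] - pos_j[1]) < p["safety_distance"]


# At 9 m/s, only 1 m/s^2 of tangential acceleration fits within one 1 s step.
command = saturate_command(9.0, 10.0, 7.0, 1.0)
```

Here `command` evaluates to `(1.0, 5.0)`: the normal acceleration is clipped to its maximum, and the tangential acceleration is reduced so that the speed does not exceed 10 m/s.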

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bassolillo, S.R.; D’Amato, E.; Notaro, I.; Blasi, L.; Mattei, M. Decentralized Mesh-Based Model Predictive Control for Swarms of UAVs. Sensors 2020, 20, 4324. https://doi.org/10.3390/s20154324
